The ack-koordinator component provides the memory quality of service (QoS) feature for containers. You can use this feature to optimize the performance of memory-sensitive applications while ensuring fair memory scheduling among containers. This topic describes how to enable the memory QoS feature for containers.

Prerequisites

- A Container Service for Kubernetes (ACK) Pro cluster is created. Only ACK Pro clusters support the memory QoS feature. For more information, see Create an ACK Pro cluster.

- ack-koordinator is installed. For more information, see ack-koordinator (FKA ack-slo-manager).

Background information

The memory available to a containerized application is constrained by the following limits:

- Memory limit of the container: If the amount of memory that a container uses, including the page cache, approaches the memory limit of the container, the OS kernel triggers memory reclaim. As a result, the application in the container may fail to request or release memory as normal.

- Memory limit of the node: If the memory limit of a container is greater than its memory request, the container can overcommit memory. In this case, the node may run short of available memory, which causes the OS kernel to reclaim memory from containers and degrades the performance of your application. In extreme cases, the node cannot run as normal.

To improve the performance of applications and the stability of nodes, ACK provides the memory QoS feature for containers. To use this feature, you must use Alibaba Cloud Linux 2 as the node OS and install ack-koordinator. After you enable the memory QoS feature for a container, ack-koordinator automatically configures the memory control group (memcg) based on the configuration of the container. This helps you optimize the performance of memory-sensitive applications while ensuring fair memory scheduling on the node.

Limits

The following table describes the versions of the system components that are required to enable the memory QoS feature for containers.

| Component | Required version |
|---|---|
| Kubernetes | 1.18 or later |
| ack-koordinator (FKA ack-slo-manager) | 0.8.0 or later |
| Helm | 3.0 or later |
| Operating system | Alibaba Cloud Linux 2. For more information about the supported versions, see the following topics about kernel interfaces: Memcg backend asynchronous reclaim, Memcg QoS feature of the cgroup v1 interface, and Memcg global minimum watermark rating. |
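To check a cluster against these minimums before you enable the feature, you can compare version strings programmatically. The following Python sketch is illustrative only: MINIMUM_VERSIONS mirrors the rows of the table, parse_version and unmet_requirements are hypothetical helpers, and the parser assumes strings such as v1.20.11-aliyun.1 or 3.0 (a numeric core optionally followed by a -suffix or +metadata). The operating system requirement is not checked.

```python
# Minimum versions taken from the requirements table (the OS row is not included).
MINIMUM_VERSIONS = {
    "kubernetes": (1, 18, 0),
    "ack-koordinator": (0, 8, 0),
    "helm": (3, 0, 0),
}


def parse_version(version: str) -> tuple:
    """Turn "v1.20.11-aliyun.1", "v3.9.0+g7ceeda6" or "0.8" into (major, minor, patch)."""
    core = version.lstrip("v").split("-", 1)[0].split("+", 1)[0]
    parts = [int(p) for p in core.split(".")[:3]]
    parts += [0] * (3 - len(parts))  # pad "3.0" to (3, 0, 0)
    return tuple(parts)


def unmet_requirements(installed: dict) -> list:
    """Return the names of components whose installed version is below the minimum."""
    return [
        name
        for name, minimum in MINIMUM_VERSIONS.items()
        if parse_version(installed[name]) < minimum
    ]


if __name__ == "__main__":
    print(unmet_requirements({
        "kubernetes": "v1.16.9-aliyun.1",
        "ack-koordinator": "0.8.0",
        "helm": "v3.9.0",
    }))  # ['kubernetes']
```

Tuples compare element by element, so padding every version to three parts keeps "3.0" equal to the 3.0.0 minimum.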

Introduction

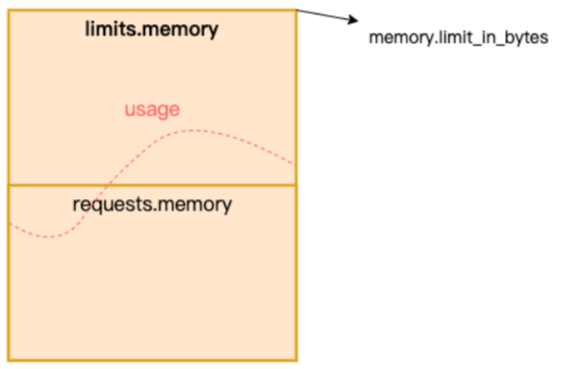

The amount of computing resources that an application in a Kubernetes cluster can use is limited by the resource requests and resource limits of its containers. In the preceding figure, the memory request of the pod is used only to schedule the pod to a node with sufficient memory resources, and the memory limit of the pod caps the amount of memory that the pod can use. memory.limit_in_bytes indicates the upper limit of memory that the pod can use.

If the amount of memory that a pod uses, including the page cache, approaches the memory limit of the pod, memcg-level direct memory reclaim is triggered for the pod and the processes in the pod are blocked. If the pod applies for memory faster than memory can be reclaimed, the pod is terminated with an OOMKilled error and the memory that it used is released.

To reduce the risk of OOMKilled errors, you can increase the memory limit of the pod. However, if the sum of the memory limits of all pods on a node exceeds the physical memory of the node, the node is overcommitted. If a pod on an overcommitted node applies for a large amount of memory, the node may run short of available memory. In this case, other pods that apply for memory may encounter OOMKilled errors, and memory may be reclaimed from them. Because swap is disabled in Kubernetes by default, reclaiming memory from these pods degrades the performance of their applications. In these scenarios, the behavior of one pod can affect the memory of other pods, even if those pods use less memory than they request, which puts application performance at risk.
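The gap between request-based scheduling and limit-based overcommitment can be illustrated with simple arithmetic. The following Python sketch is a simplification: the helper names are hypothetical, only whole numbers with binary suffixes (Ki, Mi, Gi, Ti) are parsed, and the real kube-scheduler compares requests with the node's allocatable memory rather than its physical memory. It shows how pods can pass a request-based check while their limits overcommit the node.

```python
from typing import Iterable

# Binary suffixes used in Kubernetes memory quantities. Decimal suffixes
# ("G", "M", ...) and fractional values are deliberately not handled.
_BINARY_SUFFIXES = {"Ki": 2**10, "Mi": 2**20, "Gi": 2**30, "Ti": 2**40}


def to_bytes(quantity: str) -> int:
    """Convert a quantity such as "6Gi" or "512Mi" to bytes."""
    for suffix, factor in _BINARY_SUFFIXES.items():
        if quantity.endswith(suffix):
            return int(quantity[: -len(suffix)]) * factor
    return int(quantity)  # a plain byte count


def fits_by_requests(requests: Iterable[str], node_memory: str) -> bool:
    """Scheduling view: only memory requests are compared with node memory."""
    return sum(to_bytes(q) for q in requests) <= to_bytes(node_memory)


def is_overcommitted(limits: Iterable[str], node_memory: str) -> bool:
    """Overcommitment: the sum of memory limits exceeds node memory."""
    return sum(to_bytes(q) for q in limits) > to_bytes(node_memory)


if __name__ == "__main__":
    # A Redis pod (request 2Gi, limit 6Gi) plus a hypothetical pod
    # (request 4Gi, limit 30Gi) on a node with 32Gi of memory.
    print(fits_by_requests(["2Gi", "4Gi"], "32Gi"))   # True
    print(is_overcommitted(["6Gi", "30Gi"], "32Gi"))  # True
```

Both checks pass at the same time, which is exactly the situation in which one pod's memory growth can trigger reclaim in its neighbors.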

ack-koordinator works together with Alibaba Cloud Linux 2 to enable the memory QoS feature for pods. ack-koordinator automatically configures the memcg based on the container configuration, and allows you to enable the memcg QoS feature, the memcg backend asynchronous reclaim feature, and the global minimum watermark rating feature for containers. This optimizes the performance of memory-sensitive applications while ensuring fair memory scheduling among containers. For more information, see Memcg QoS feature of the cgroup v1 interface, Memcg backend asynchronous reclaim, and Memcg global minimum watermark rating.

Memory QoS provides the following optimizations to improve the memory utilization of pods:

- When the memory used by a pod approaches the memory limit of the pod, the memcg asynchronously reclaims a specific amount of memory in the background. This reduces how often direct memory reclaim is triggered and therefore minimizes its adverse impact on application performance.

- Memory reclaim is performed in a fairer manner among pods. When the available memory on a node becomes insufficient, memory reclaim is first performed on pods that use more memory than their memory requests. This ensures sufficient memory on the node when a pod applies for a large amount of memory.

- If the BestEffort pods on a node use more memory than their memory requests, the system prioritizes the memory requirements of Guaranteed pods and Burstable pods over the memory requirements of BestEffort pods.
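As a mental model of the reclaim priorities listed above, the following Python sketch orders pods from first to last reclaimed. It is a hypothetical simplification, not how the kernel works: Alibaba Cloud Linux enforces these priorities through memcg settings such as memory.min, memory.low, and the watermark parameters, not by sorting pods. Ordering over-request pods by the size of their excess is also an assumption.

```python
from dataclasses import dataclass

GIB = 1024 ** 3


@dataclass
class PodMemory:
    name: str
    qos_class: str  # "Guaranteed", "Burstable", or "BestEffort"
    request_bytes: int
    usage_bytes: int

    @property
    def excess_bytes(self) -> int:
        """Memory used beyond the request; 0 if usage is within the request."""
        return max(0, self.usage_bytes - self.request_bytes)


def reclaim_order(pods):
    """Return pods sorted from first to last reclaimed under the assumed model.

    1. BestEffort pods before Guaranteed and Burstable pods.
    2. Within each group, pods that exceed their requests before pods that do not.
    3. Among pods that exceed their requests, the largest excess first.
    """
    return sorted(
        pods,
        key=lambda p: (
            p.qos_class != "BestEffort",  # False sorts before True
            p.excess_bytes == 0,          # over-request pods first
            -p.excess_bytes,              # larger excess first
        ),
    )


if __name__ == "__main__":
    pods = [
        PodMemory("web", "Guaranteed", 4 * GIB, 3 * GIB),
        PodMemory("redis", "Burstable", 2 * GIB, 5 * GIB),
        PodMemory("batch", "BestEffort", 0, 1 * GIB),
    ]
    print([p.name for p in reclaim_order(pods)])  # ['batch', 'redis', 'web']
```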

For more information about how to enable the memory QoS feature of the kernel of Alibaba Cloud Linux 2, see Overview.

Procedure

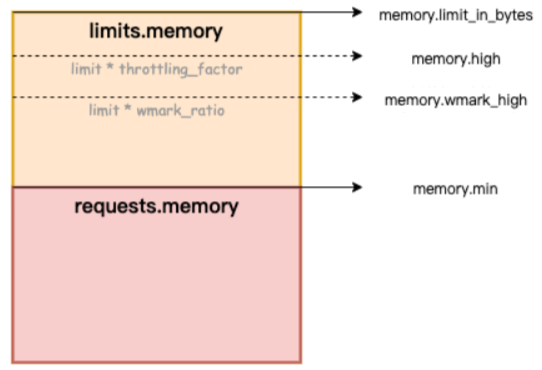

When you enable memory QoS for the containers in a pod, the memcg is automatically configured based on the specified ratios and pod parameters. You can enable memory QoS in the following ways:

- Pod level: Add the following annotation to a pod to enable memory QoS for the containers in the pod:

```yaml
annotations:
  # To enable memory QoS for the containers in a pod, set the policy to auto.
  koordinator.sh/memoryQOS: '{"policy": "auto"}'
  # To disable memory QoS for the containers in a pod, set the policy to none.
  #koordinator.sh/memoryQOS: '{"policy": "none"}'
```

- Cluster level: Use a ConfigMap to enable memory QoS for all the containers in a cluster.

- Namespace level: Use a ConfigMap to enable memory QoS for pods in specified namespaces. If you want to enable or disable memory QoS for pods of the LS and BE QoS classes in specific namespaces, specify the namespaces in the ConfigMap.

- Optional. Configure advanced parameters. The following table describes the advanced parameters that you can use to configure fine-grained memory QoS at the pod level and the cluster level. If you have further requirements, submit a ticket.

| Parameter | Type | Valid value | Description |
|---|---|---|---|
| enable | Boolean | true, false | true: enables memory QoS for all the containers in a cluster and applies the recommended memcg settings for the QoS class of the containers. false: disables memory QoS for all the containers in a cluster and restores the original memcg settings for the QoS class of the containers. |
| policy | String | auto, default, none | auto: enables memory QoS for the containers in the pod and uses the recommended settings, which take precedence over the settings in the ack-slo-pod-config ConfigMap. default: the pod inherits the settings in the ack-slo-pod-config ConfigMap. none: disables memory QoS for the pod and restores the relevant memcg settings to the original settings, which take precedence over the settings in the ack-slo-pod-config ConfigMap. |
| minLimitPercent | Int | 0 to 100 | Unit: %. Default value: 0, which disables this parameter. Specifies the unreclaimable proportion of the memory request of a pod: memory.min = Memory request × minLimitPercent / 100. This parameter is suitable for applications that are sensitive to the page cache, because you can use it to keep files cached and optimize read and write performance. For example, if a container has a memory request of 100 MiB and minLimitPercent=100, memory.min is 104857600. For more information, see the Alibaba Cloud Linux 2 topic Memcg QoS feature of the cgroup v1 interface. |
| lowLimitPercent | Int | 0 to 100 | Unit: %. Default value: 0, which disables this parameter. Specifies the relatively unreclaimable proportion of the memory request of a pod: memory.low = Memory request × lowLimitPercent / 100. For example, if a container has a memory request of 100 MiB and lowLimitPercent=100, memory.low is 104857600. For more information, see the Alibaba Cloud Linux 2 topic Memcg QoS feature of the cgroup v1 interface. |
| throttlingPercent | Int | 0 to 100 | Unit: %. Default value: 0, which disables this parameter. Specifies the memory throttling threshold as a ratio of the memory usage of a container to its memory limit: memory.high = Memory limit × throttlingPercent / 100. If the memory usage of a container exceeds this threshold, memory used by the container is reclaimed. This parameter is suitable for container memory overcommitment scenarios, where you can use it to prevent cgroups from triggering OOM. For example, if a container has a memory limit of 100 MiB and throttlingPercent=80, memory.high is 83886080 (80 MiB). For more information, see the Alibaba Cloud Linux 2 topic Memcg QoS feature of the cgroup v1 interface. |
| wmarkRatio | Int | 0 to 100 | Unit: %. Default value: 95. A value of 0 disables this parameter. Specifies the asynchronous memory reclaim threshold as a ratio of memory usage to the memory limit, or to memory.high if throttlingPercent is enabled. If throttlingPercent is disabled: memory.wmark_high = Memory limit × wmarkRatio / 100. If throttlingPercent is enabled: memory.wmark_high = memory.high × wmarkRatio / 100. If memory usage exceeds the reclaim threshold, asynchronous memory reclaim is triggered in the background. For example, if a container has a memory limit of 100 MiB, wmarkRatio=95, and throttlingPercent=80, memory.high is 83886080 (80 MiB), memory.wmark_ratio is 95, and memory.wmark_high is 79691776 (76 MiB). For more information, see the Alibaba Cloud Linux 2 topic Memcg backend asynchronous reclaim. |
| wmarkMinAdj | Int | -25 to 50 | Unit: %. Default value: -25 for the LS QoS class and 50 for the BE QoS class. A value of 0 disables this parameter. Specifies the adjustment to the global minimum watermark for a container. A negative value lowers the global minimum watermark and therefore delays memory reclaim for the container. A positive value raises the global minimum watermark and therefore triggers memory reclaim for the container earlier. For example, for a pod of the LS QoS class, the default setting is memory.wmark_min_adj=-25, which lowers the minimum watermark by 25% for the containers in the pod. For more information, see the Alibaba Cloud Linux 2 topic Memcg global minimum watermark rating. |
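The formulas in the parameter table can be checked with a short calculation. The following Python sketch reproduces the table's examples; memcg_values is a hypothetical helper, not part of ack-koordinator, and the integer (floor) rounding is an assumption because the table does not state how values are rounded.

```python
MIB = 1024 * 1024  # bytes per MiB


def memcg_values(request: int, limit: int, min_limit_percent: int = 0,
                 low_limit_percent: int = 0, throttling_percent: int = 0,
                 wmark_ratio: int = 95) -> dict:
    """Compute memcg settings (in bytes) from the memory QoS parameters.

    A parameter set to 0 is treated as disabled and produces no entry.
    Floor division is an assumed rounding rule.
    """
    for name, value in (("minLimitPercent", min_limit_percent),
                        ("lowLimitPercent", low_limit_percent),
                        ("throttlingPercent", throttling_percent),
                        ("wmarkRatio", wmark_ratio)):
        if not 0 <= value <= 100:
            raise ValueError(f"{name} must be between 0 and 100, got {value}")

    values = {}
    if min_limit_percent:
        # memory.min = memory request x minLimitPercent / 100
        values["memory.min"] = request * min_limit_percent // 100
    if low_limit_percent:
        # memory.low = memory request x lowLimitPercent / 100
        values["memory.low"] = request * low_limit_percent // 100
    if throttling_percent:
        # memory.high = memory limit x throttlingPercent / 100
        values["memory.high"] = limit * throttling_percent // 100
    if wmark_ratio:
        # memory.wmark_high is based on memory.high when throttling is
        # enabled, and on the memory limit otherwise.
        base = values.get("memory.high", limit)
        values["memory.wmark_high"] = base * wmark_ratio // 100
    return values


if __name__ == "__main__":
    # Example from the table: limit = 100 MiB, throttlingPercent = 80, wmarkRatio = 95.
    print(memcg_values(request=100 * MIB, limit=100 * MIB,
                       throttling_percent=80, wmark_ratio=95))
    # {'memory.high': 83886080, 'memory.wmark_high': 79691776}
```

Because wmarkRatio is applied to memory.high when throttling is enabled, the background reclaim threshold always sits below the throttling threshold.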

Example

In this example, the following conditions are met:

- An ACK Pro cluster of Kubernetes 1.20 is created.

- The cluster contains 2 nodes, each of which has 8 vCPUs and 32 GB of memory. One node is used to perform stress tests. The other node runs the workload and serves as the tested machine.

Perform the following steps:

- Create a file named redis-demo.yaml with the following YAML template. The redis-demo Service selects pods that have the name: redis-demo label, so the pod must carry this label.

```yaml
---
apiVersion: v1
kind: ConfigMap
metadata:
  name: redis-demo-config
data:
  redis-config: |
    appendonly yes
    appendfsync no
---
apiVersion: v1
kind: Pod
metadata:
  name: redis-demo
  labels:
    name: redis-demo               # Matches the selector of the redis-demo Service.
    koordinator.sh/qosClass: 'LS'  # Set the QoS class of the Redis pod to LS.
  annotations:
    koordinator.sh/memoryQOS: '{"policy": "auto"}'  # Add this annotation to enable memory QoS.
spec:
  containers:
  - name: redis
    image: redis:5.0.4
    command:
    - redis-server
    - "/redis-master/redis.conf"
    env:
    - name: MASTER
      value: "true"
    ports:
    - containerPort: 6379
    resources:
      limits:
        cpu: "2"
        memory: "6Gi"
      requests:
        cpu: "2"
        memory: "2Gi"
    volumeMounts:
    - mountPath: /redis-master-data
      name: data
    - mountPath: /redis-master
      name: config
  volumes:
  - name: data
    emptyDir: {}
  - name: config
    configMap:
      name: redis-demo-config
      items:
      - key: redis-config
        path: redis.conf
  nodeName: # Set nodeName to the name of the tested node.
---
apiVersion: v1
kind: Service
metadata:
  name: redis-demo
spec:
  ports:
  - name: redis-port
    port: 6379
    protocol: TCP
    targetPort: 6379
  selector:
    name: redis-demo
  type: ClusterIP
```

- Run the following command to deploy Redis Server as the test application. You can access the redis-demo Service from within the cluster.

```shell
kubectl apply -f redis-demo.yaml
```

- Simulate memory overcommitment. Use the Stress tool to increase the load on memory and trigger memory reclaim, so that the sum of the memory limits of all pods on the node exceeds the physical memory of the node.

- Run the following command to query the global minimum watermark of the node:

Note: In memory overcommitment scenarios, if the global minimum watermark of the node is set to a low value, OOM killers may be triggered for all pods on the node even before memory reclaim is performed. Therefore, we recommend that you set the global minimum watermark to a high value. In this example, the global minimum watermark is set to 4,000,000 KB for the tested node that has 32 GiB of memory.

```shell
cat /proc/sys/vm/min_free_kbytes
```

Expected output:

```
4000000
```

- Use the following YAML template to deploy the memtier-benchmark tool to send requests to the tested node:

```yaml
apiVersion: v1
kind: Pod
metadata:
  labels:
    name: memtier-demo
  name: memtier-demo
spec:
  containers:
  - command:
    - memtier_benchmark
    - '-s'
    - 'redis-demo'
    - '--data-size'
    - '200000'
    - "--ratio"
    - "1:4"
    image: 'redislabs/memtier_benchmark:1.3.0'
    name: memtier
  restartPolicy: Never
  nodeName: # Set nodeName to the name of the node that is used to send requests.
```

- Run the following command to query the test results from memtier-benchmark:

```shell
kubectl logs -f memtier-demo
```

- Use the following YAML template to disable memory QoS for the Redis pod and the Stress pod. Then, perform the stress tests again and compare the results.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: redis-demo
  labels:
    name: redis-demo
    koordinator.sh/qosClass: 'LS'
  annotations:
    koordinator.sh/memoryQOS: '{"policy": "none"}'  # Disable memory QoS.
spec:
  ...
---
apiVersion: v1
kind: Pod
metadata:
  name: stress-demo
  labels:
    koordinator.sh/qosClass: 'BE'
  annotations:
    koordinator.sh/memoryQOS: '{"policy": "none"}'  # Disable memory QoS.
```
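The Note in the preceding steps recommends a high global minimum watermark, and the wmarkMinAdj parameter shifts that watermark per container. The following Python sketch estimates the adjusted watermark for the 4,000,000 KB setting used in this example. It assumes that the adjusted value is min_free_kbytes × (100 + wmarkMinAdj) / 100 with integer rounding, which is one reading of the "decreased by 25%" description in the parameter table rather than a formula confirmed by the Alibaba Cloud Linux documentation; the function name is hypothetical.

```python
# Default wmarkMinAdj values per QoS class, from the parameter table.
DEFAULT_WMARK_MIN_ADJ = {"LS": -25, "BE": 50}


def adjusted_min_watermark_kb(min_free_kbytes: int, wmark_min_adj: int) -> int:
    """Estimate the per-container minimum watermark in KB.

    Assumption: a wmarkMinAdj of -25 lowers the global watermark by 25%,
    a value of 50 raises it by 50%, and 0 leaves it unchanged.
    """
    if not -25 <= wmark_min_adj <= 50:
        raise ValueError(f"wmarkMinAdj must be between -25 and 50, got {wmark_min_adj}")
    return min_free_kbytes * (100 + wmark_min_adj) // 100


if __name__ == "__main__":
    min_free_kbytes = 4_000_000  # value of /proc/sys/vm/min_free_kbytes in this example
    for qos_class, adj in DEFAULT_WMARK_MIN_ADJ.items():
        print(qos_class, adjusted_min_watermark_kb(min_free_kbytes, adj))
    # LS 3000000
    # BE 6000000
```

Under this reading, BE containers hit their raised watermark first and are reclaimed earlier, while the LS Redis container can keep allocating until free memory falls to a lower level.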

Analyze the results

The following table describes the stress test results when memory QoS is enabled and disabled.

- Disabled: The memory QoS policy of the pod is set to none.

- Enabled: The memory QoS policy of the pod is set to auto, and the recommended memory QoS settings are used.

| Metric | Disabled | Enabled |
|---|---|---|
| Latency-avg | 51.32 ms | 47.25 ms |
| Throughput-avg | 149.0 MB/s | 161.9 MB/s |

The table shows that the latency of the Redis pod is reduced by 7.9% and the throughput of the Redis pod is increased by 8.7% after memory QoS is enabled. This indicates that the memory QoS feature can optimize the performance of applications in memory overcommitment scenarios.
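The percentages in the preceding sentence follow directly from the table. The short Python check below recomputes them; percent_change is a hypothetical helper, and a negative result means a reduction.

```python
def percent_change(before: float, after: float) -> float:
    """Relative change from before to after, in percent."""
    return (after - before) / before * 100


if __name__ == "__main__":
    latency = percent_change(51.32, 47.25)     # average latency in ms
    throughput = percent_change(149.0, 161.9)  # average throughput in MB/s
    print(f"latency {latency:.1f}%, throughput {throughput:+.1f}%")
    # latency -7.9%, throughput +8.7%
```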

FAQ

Is the memory QoS feature that is enabled based on the earlier version of the ack-slo-manager protocol supported after I upgrade from ack-slo-manager to ack-koordinator?

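Yes. The earlier ack-slo-manager protocol uses alibabacloud.com/qosClass and alibabacloud.com/memoryQOS, and all ack-koordinator versions listed in the following table support it. Version 0.8.0 and later also support the koordinator.sh protocol, such as koordinator.sh/qosClass and koordinator.sh/memoryQOS.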

| ack-koordinator version | alibabacloud.com protocol | koordinator.sh protocol |
|---|---|---|
| ≥ 0.3.0 and < 0.8.0 | Supported | Not supported |
| ≥ 0.8.0 | Supported | Supported |
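If you manage clusters that run different ack-koordinator versions, the table can be encoded as a lookup. The following Python sketch is a hypothetical helper that assumes plain version strings such as 0.8.0 or v1.1.0; versions earlier than 0.3.0 are not covered by the table and return an empty set.

```python
def parse_version(version: str) -> tuple:
    """Turn "0.8.0", "v1.1.0" or "0.8" into a (major, minor, patch) tuple."""
    core = version.lstrip("v").split("-", 1)[0]
    parts = [int(p) for p in core.split(".")[:3]]
    parts += [0] * (3 - len(parts))  # pad "0.8" to (0, 8, 0)
    return tuple(parts)


def supported_protocols(version: str) -> frozenset:
    """Return the annotation/label protocols supported by an ack-koordinator version."""
    v = parse_version(version)
    if v >= (0, 8, 0):
        return frozenset({"alibabacloud.com", "koordinator.sh"})
    if v >= (0, 3, 0):
        return frozenset({"alibabacloud.com"})
    # Versions earlier than 0.3.0 are not covered by the table.
    return frozenset()


if __name__ == "__main__":
    for version in ("0.5.2", "0.8.0"):
        print(version, sorted(supported_protocols(version)))
    # 0.5.2 ['alibabacloud.com']
    # 0.8.0 ['alibabacloud.com', 'koordinator.sh']
```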