GPU monitoring 2.0 is a GPU monitoring system built on NVIDIA Data Center GPU Manager (DCGM). It enables comprehensive monitoring of GPU-accelerated nodes in your cluster. This topic describes how to use GPU monitoring 2.0 to monitor GPU resources in a Container Service for Kubernetes (ACK) cluster.

Prerequisites

An ACK dedicated cluster, ACK Basic cluster, ACK Pro cluster, or ACK Edge cluster is created. In this example, an ACK Pro cluster is used.

Components required by GPU monitoring 2.0 are installed in the cluster. For more information, see Enable GPU monitoring for a cluster.

Background information

GPU monitoring 2.0 enables comprehensive monitoring of GPU-accelerated nodes and provides a cluster dashboard and a node dashboard.

The cluster dashboard shows monitoring data of clusters and nodes, such as GPU utilization, GPU memory utilization, and XID errors.

The node dashboard shows monitoring data of nodes and pods, such as GPU utilization and GPU memory utilization.

Precautions

GPU metrics are collected at an interval of 15 seconds. Therefore, the monitoring data displayed on Grafana dashboards does not represent real-time information about GPU resources. Even if a dashboard shows that a node has no idle GPU memory, the node may still be able to host new pods that request GPU memory. For example, an existing pod on the node may finish running and release GPU memory before the next metric collection, and the scheduler may then schedule pending pods to the node before the monitoring data on the dashboard is updated.

The GPU utilization data displayed on the dashboards contains only the GPU resources that are specified in the resources.limits parameters of pods. For more information, see Resource Management for Pods and Containers.

The following operations may lead to data discrepancy on the dashboards:

Directly run GPU-accelerated applications on a node.

Run the docker run command to directly launch a container that runs a GPU-accelerated application.

Directly add the NVIDIA_VISIBLE_DEVICES=all or NVIDIA_VISIBLE_DEVICES=<GPU ID> environment variable to the env parameter of a pod to apply for GPU resources, and run programs that use GPU resources.

Specify privileged: true in the securityContext parameter of a pod and run programs that use GPU resources.

Do not specify the NVIDIA_VISIBLE_DEVICES environment variable in the configuration of a pod that is deployed by using a container image configured with the NVIDIA_VISIBLE_DEVICES=all environment variable, and run programs that use GPU resources.
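Two of the pod-level cases listed above can be spotted by inspecting a pod spec. The following is a minimal Python sketch, assuming the pod spec has already been loaded into a dictionary (for example, from the JSON form of the pod). The function name find_untracked_gpu_access is hypothetical and not part of ACK, and the set of resource names is limited to the ones that appear in this topic.

```python
# Hypothetical helper (not part of ACK): flags container settings that can give
# a container GPU access without a GPU resource in resources.limits.

# Extended resource names used in this topic.
GPU_RESOURCES = {
    "nvidia.com/gpu",
    "aliyun.com/gpu-mem",
    "aliyun.com/gpu-core.percentage",
}


def find_untracked_gpu_access(pod_spec: dict) -> list[str]:
    """Return one warning per risky setting found in the pod spec."""
    warnings = []
    for container in pod_spec.get("containers", []):
        name = container.get("name", "<unnamed>")
        limits = container.get("resources", {}).get("limits", {})
        declares_gpu = any(key in GPU_RESOURCES for key in limits)
        if declares_gpu:
            continue  # GPU use is declared, so the dashboards can account for it

        env_names = {item.get("name") for item in container.get("env", [])}
        if "NVIDIA_VISIBLE_DEVICES" in env_names:
            warnings.append(f"{name}: NVIDIA_VISIBLE_DEVICES is set in env")
        if container.get("securityContext", {}).get("privileged"):
            warnings.append(f"{name}: privileged: true is set")
    return warnings


# Example: a container that sets the environment variable directly.
example_spec = {
    "containers": [
        {
            "name": "train",
            "env": [{"name": "NVIDIA_VISIBLE_DEVICES", "value": "all"}],
        }
    ]
}
for warning in find_untracked_gpu_access(example_spec):
    print(warning)
```

This check sees only what is written in the pod spec. It cannot detect processes started directly on a node, containers launched with docker run, or an NVIDIA_VISIBLE_DEVICES value that is baked into the container image.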

The memory allocated from a GPU may not be completely used. For example, a node has a GPU that provides 16 GiB of memory in total. If the system allocates 5 GiB of the GPU memory to a pod whose startup command is sleep 1000, the pod does not use GPU memory within 1,000 seconds after it enters the Running state. In this case, 5 GiB of the GPU memory is allocated but 0 GiB is used.
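The difference between allocated and used memory in this example can be expressed as two percentages of the total GPU memory. The following Python sketch is illustrative only; the function name memory_percentages is an assumption and does not correspond to a dashboard query.

```python
def memory_percentages(total_gib: float, allocated_gib: float, used_gib: float) -> tuple[float, float]:
    """Return (allocated %, used %) relative to the total GPU memory."""
    if total_gib <= 0:
        raise ValueError("total_gib must be positive")
    return 100 * allocated_gib / total_gib, 100 * used_gib / total_gib


# Values from the example: a 16 GiB GPU, 5 GiB allocated to a pod that only sleeps.
allocated_pct, used_pct = memory_percentages(total_gib=16, allocated_gib=5, used_gib=0)
print(f"allocated: {allocated_pct:.2f}%, used: {used_pct:.2f}%")
# allocated: 31.25%, used: 0.00%
```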

Step 1: Create node pools

GPU monitoring dashboards show the GPU resources that are requested by pods, including individual GPUs, GPU memory, and GPU computing power. In this example, three GPU-accelerated node pools are created. Each node pool contains one node. For more information about how to create a node pool, see Procedure.

The following table describes the three node pools.

Node pool | Node label | How the pod applies for GPU resources | Example GPU request | Description |

exclusive | - | Apply for individual GPUs. | nvidia.com/gpu: 1 (the pod applies for one GPU) | - |

share-mem | ack.node.gpu.schedule=cgpu | Apply for GPU memory. | aliyun.com/gpu-mem: 5 (the pod applies for 5 GiB of GPU memory) | You must install the cGPU component in the cluster. For more information, see Install and use ack-ai-installer and the GPU inspection tool. You need to install the cGPU component in the cluster only once. |

share-mem-core | ack.node.gpu.schedule=core_mem | Apply for GPU memory and computing power. | aliyun.com/gpu-mem: 5 and aliyun.com/gpu-core.percentage: 30 (the pod applies for 5 GiB of GPU memory and 30% of the computing power of a GPU) | Requires the cGPU component (see share-mem). |

Go to the Node Pools page in the ACK console. If the Status column shows Active for the three node pools, the node pools are created.

Step 2: Deploy GPU-accelerated applications

After the node pools are created, you can run GPU applications in the node pools to check whether GPU metrics are collected as expected. In this example, a Job is created in each node pool to run a TensorFlow benchmark. At least 9 GiB of GPU memory is required to run a TensorFlow benchmark, so the Jobs that request GPU memory in this example request 10 GiB. For more information about TensorFlow benchmarks, see TensorFlow benchmarks.

The following table describes the three Jobs.

Job | Node pool | GPU resource request |

tensorflow-benchmark-exclusive | exclusive | nvidia.com/gpu: 1 (the Job applies for one GPU) |

tensorflow-benchmark-share-mem | share-mem | aliyun.com/gpu-mem: 10 (the Job applies for 10 GiB of GPU memory) |

tensorflow-benchmark-share-mem-core | share-mem-core | aliyun.com/gpu-mem: 10 and aliyun.com/gpu-core.percentage: 30 (the Job applies for 10 GiB of GPU memory and 30% of the computing power of a GPU) |

Create files that are used to deploy Jobs.

Create a file named tensorflow-benchmark-exclusive.yaml and copy the following content to the file:

    apiVersion: batch/v1
    kind: Job
    metadata:
      name: tensorflow-benchmark-exclusive
    spec:
      parallelism: 1
      template:
        metadata:
          labels:
            app: tensorflow-benchmark-exclusive
        spec:
          containers:
          - name: tensorflow-benchmark
            image: registry.cn-beijing.aliyuncs.com/ai-samples/gpushare-sample:benchmark-tensorflow-2.2.3
            command:
            - bash
            - run.sh
            - --num_batches=5000000
            - --batch_size=8
            resources:
              limits:
                nvidia.com/gpu: 1 # Apply for one GPU.
            workingDir: /root
          restartPolicy: Never

Create a file named tensorflow-benchmark-share-mem.yaml and copy the following content to the file:

    apiVersion: batch/v1
    kind: Job
    metadata:
      name: tensorflow-benchmark-share-mem
    spec:
      parallelism: 1
      template:
        metadata:
          labels:
            app: tensorflow-benchmark-share-mem
        spec:
          containers:
          - name: tensorflow-benchmark
            image: registry.cn-beijing.aliyuncs.com/ai-samples/gpushare-sample:benchmark-tensorflow-2.2.3
            command:
            - bash
            - run.sh
            - --num_batches=5000000
            - --batch_size=8
            resources:
              limits:
                aliyun.com/gpu-mem: 10 # Apply for 10 GiB of GPU memory.
            workingDir: /root
          restartPolicy: Never

Create a file named tensorflow-benchmark-share-mem-core.yaml and copy the following content to the file:

    apiVersion: batch/v1
    kind: Job
    metadata:
      name: tensorflow-benchmark-share-mem-core
    spec:
      parallelism: 1
      template:
        metadata:
          labels:
            app: tensorflow-benchmark-share-mem-core
        spec:
          containers:
          - name: tensorflow-benchmark
            image: registry.cn-beijing.aliyuncs.com/ai-samples/gpushare-sample:benchmark-tensorflow-2.2.3
            command:
            - bash
            - run.sh
            - --num_batches=5000000
            - --batch_size=8
            resources:
              limits:
                aliyun.com/gpu-mem: 10 # Apply for 10 GiB of GPU memory.
                aliyun.com/gpu-core.percentage: 30 # Apply for 30% of the computing power of a GPU.
            workingDir: /root
          restartPolicy: Never
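The three manifests differ only in the Job name and in the resources.limits block. As an optional alternative to maintaining three files by hand, the following Python sketch builds the same structures from one template. The function name benchmark_job and the JOB_LIMITS mapping are illustrative assumptions; the field values are copied from the files above.

```python
import json

IMAGE = "registry.cn-beijing.aliyuncs.com/ai-samples/gpushare-sample:benchmark-tensorflow-2.2.3"


def benchmark_job(name: str, limits: dict) -> dict:
    """Build a Job manifest with the same fields as the YAML files in this step."""
    return {
        "apiVersion": "batch/v1",
        "kind": "Job",
        "metadata": {"name": name},
        "spec": {
            "parallelism": 1,
            "template": {
                "metadata": {"labels": {"app": name}},
                "spec": {
                    "containers": [
                        {
                            "name": "tensorflow-benchmark",
                            "image": IMAGE,
                            "command": ["bash", "run.sh", "--num_batches=5000000", "--batch_size=8"],
                            "resources": {"limits": limits},
                            "workingDir": "/root",
                        }
                    ],
                    "restartPolicy": "Never",
                },
            },
        },
    }


# Only the name and the GPU limits change between the three Jobs.
JOB_LIMITS = {
    "tensorflow-benchmark-exclusive": {"nvidia.com/gpu": 1},
    "tensorflow-benchmark-share-mem": {"aliyun.com/gpu-mem": 10},
    "tensorflow-benchmark-share-mem-core": {
        "aliyun.com/gpu-mem": 10,
        "aliyun.com/gpu-core.percentage": 30,
    },
}

manifests = {name: benchmark_job(name, limits) for name, limits in JOB_LIMITS.items()}

# Print one manifest as JSON; kubectl apply -f also accepts JSON files.
print(json.dumps(manifests["tensorflow-benchmark-share-mem-core"], indent=2))
```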

Deploy Jobs.

Run the following command to deploy the tensorflow-benchmark-exclusive Job:

    kubectl apply -f tensorflow-benchmark-exclusive.yaml

Run the following command to deploy the tensorflow-benchmark-share-mem Job:

    kubectl apply -f tensorflow-benchmark-share-mem.yaml

Run the following command to deploy the tensorflow-benchmark-share-mem-core Job:

    kubectl apply -f tensorflow-benchmark-share-mem-core.yaml

Run the following command to check the status of the pods that are provisioned for the Jobs:

    kubectl get po

Expected output:

    NAME                                        READY   STATUS    RESTARTS   AGE
    tensorflow-benchmark-exclusive-7dff2        1/1     Running   0          3m13s
    tensorflow-benchmark-share-mem-core-k24gz   1/1     Running   0          4m22s
    tensorflow-benchmark-share-mem-shmpj        1/1     Running   0          3m46s

The output shows that three pods are provisioned and are in the Running state. This indicates that the Jobs are deployed.
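If you want to script this check, the tabular output can be parsed by column name. The following Python sketch works on the sample output shown above, pasted in as a string; the function name pod_statuses is an assumption, and the sketch does not call kubectl itself.

```python
SAMPLE_OUTPUT = """\
NAME                                        READY   STATUS    RESTARTS   AGE
tensorflow-benchmark-exclusive-7dff2        1/1     Running   0          3m13s
tensorflow-benchmark-share-mem-core-k24gz   1/1     Running   0          4m22s
tensorflow-benchmark-share-mem-shmpj        1/1     Running   0          3m46s
"""


def pod_statuses(kubectl_output: str) -> dict[str, str]:
    """Map pod name to STATUS from whitespace-separated tabular output."""
    lines = kubectl_output.strip().splitlines()
    if not lines:
        return {}
    header = lines[0].split()
    name_col = header.index("NAME")
    status_col = header.index("STATUS")
    statuses = {}
    for line in lines[1:]:
        fields = line.split()
        statuses[fields[name_col]] = fields[status_col]
    return statuses


statuses = pod_statuses(SAMPLE_OUTPUT)
not_running = [name for name, status in statuses.items() if status != "Running"]
print("all running" if not not_running else f"not running: {not_running}")
# all running
```

Splitting on whitespace is sufficient here because the NAME and STATUS columns come before any column whose values can contain spaces.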

Step 3: View dashboards provided by GPU monitoring 2.0

View the cluster dashboard

Log on to the ACK console. In the left-side navigation pane, click Clusters.

On the Clusters page, click the name of the cluster that you want to manage. In the left-side navigation pane, go to the Prometheus Monitoring page.

On the Prometheus Monitoring page, click the GPU Monitoring tab. Then, click the GPUs - Cluster Dimension tab.

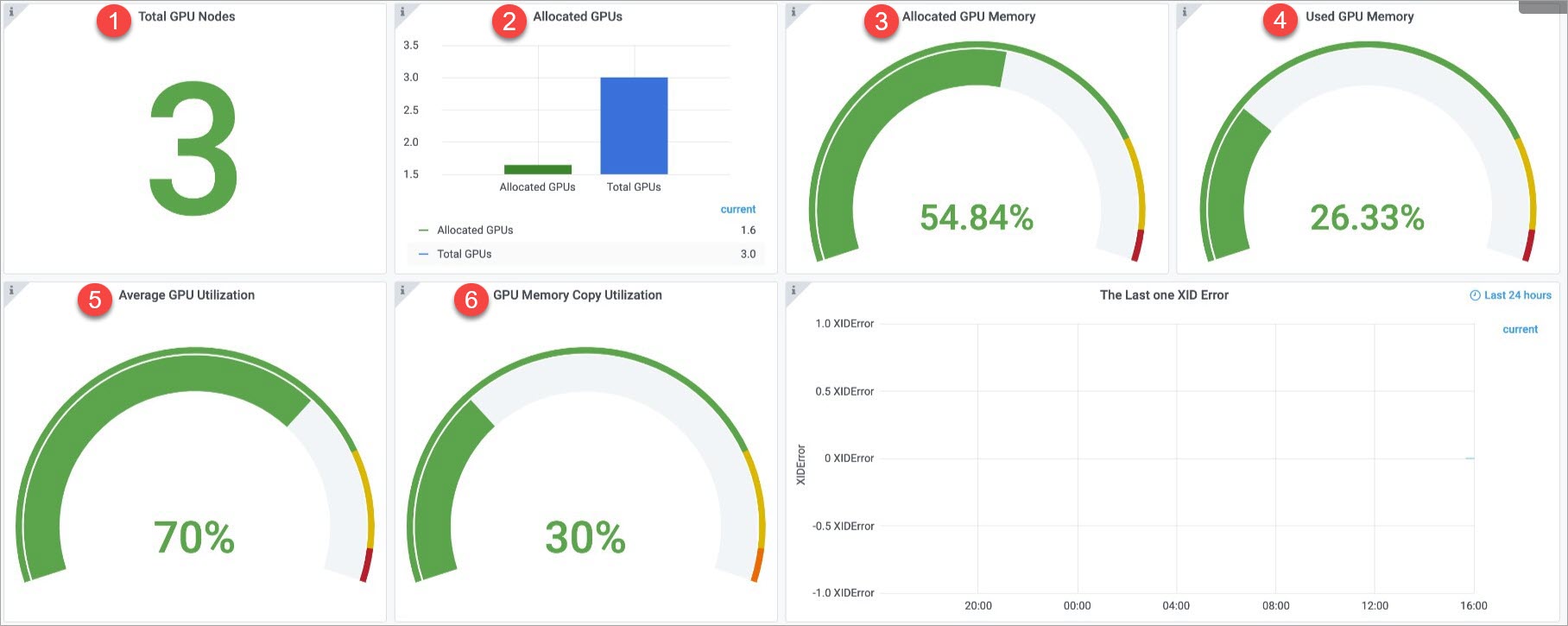

The following figure shows the cluster dashboard. For more information, see Cluster dashboard.

No. | Panel | Description |

① | Total GPU Nodes | The cluster has three GPU-accelerated nodes. |

② | Allocated GPUs | The cluster has three GPUs in total. 1.6 GPUs are allocated. Note: If pods apply for individual GPUs, the allocation ratio of each allocated GPU is 1. If GPU sharing is enabled, the allocation ratio of a GPU is equal to the ratio of the allocated GPU memory to the total GPU memory. |

③ | Allocated GPU Memory | 54.84% of the GPU memory is allocated. |

④ | Used GPU Memory | 26.33% of the GPU memory is used. |

⑤ | Average GPU Utilization | The average GPU utilization is 70%. |

⑥ | GPU Memory Copy Utilization | The average utilization of GPU memory copies is 30%. |

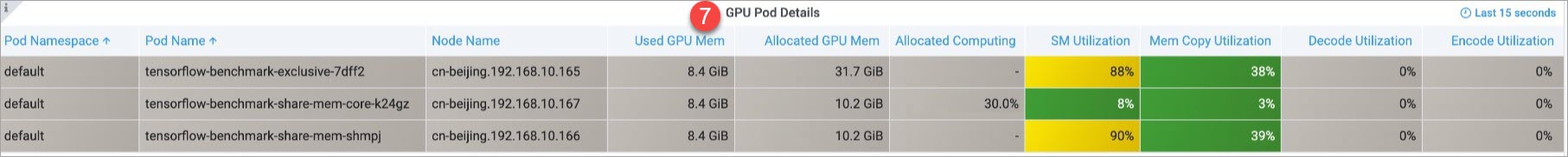

⑦ | GPU Pod Details | Information about the pods that request GPU resources in the cluster. The information includes the pod names, the namespaces of the pods, the nodes on which the pods run, and the amount of GPU memory that is used by each pod. Note: The Allocated GPU Memory column shows the amount of GPU memory that is allocated to each pod. If GPU sharing is enabled, only integers are supported when nodes report the total amount of memory provided by each GPU to the API server. The reported amount is rounded down to the nearest integer. For example, if a GPU provides 31.7 GiB of memory in total, the node reports 31 GiB to the API server. In this case, if a pod applies for 10 GiB, the actual amount of memory allocated to the pod is calculated based on the following formula: Actual amount of allocated GPU memory = Total amount of GPU memory × (GPU memory request / Amount of GPU memory reported to the API server). In this example, the result is 10.2 GiB (31.7 GiB × 10 GiB / 31 GiB = 10.2 GiB). The Allocated Computing Power column shows the GPU computing power that is allocated to each pod. If a hyphen (-) is displayed in the column, no computing power is allocated to the pod. The figure shows that 30% of the GPU computing power is allocated to the tensorflow-benchmark-share-mem-core-k24gz pod. |

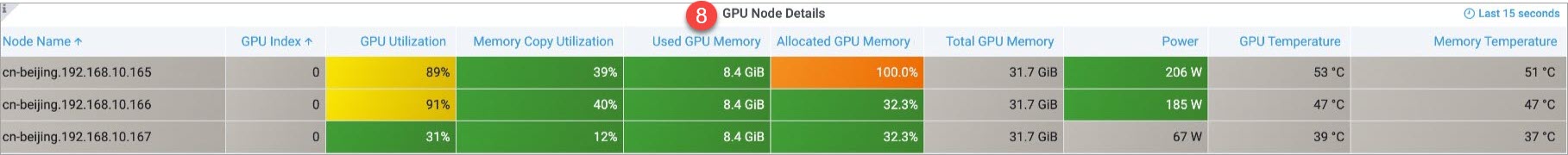

⑧ | GPU Node Details | Information about the GPU-accelerated nodes in the cluster. The information includes the node names, the GPU indexes, the GPU utilization, and the utilization of GPU memory copies. |

View the node dashboard

On the Prometheus Monitoring page, click the GPU Monitoring tab. Then, click the GPUs - Nodes tab and select the node that you want to view from the GPUNode drop-down list.

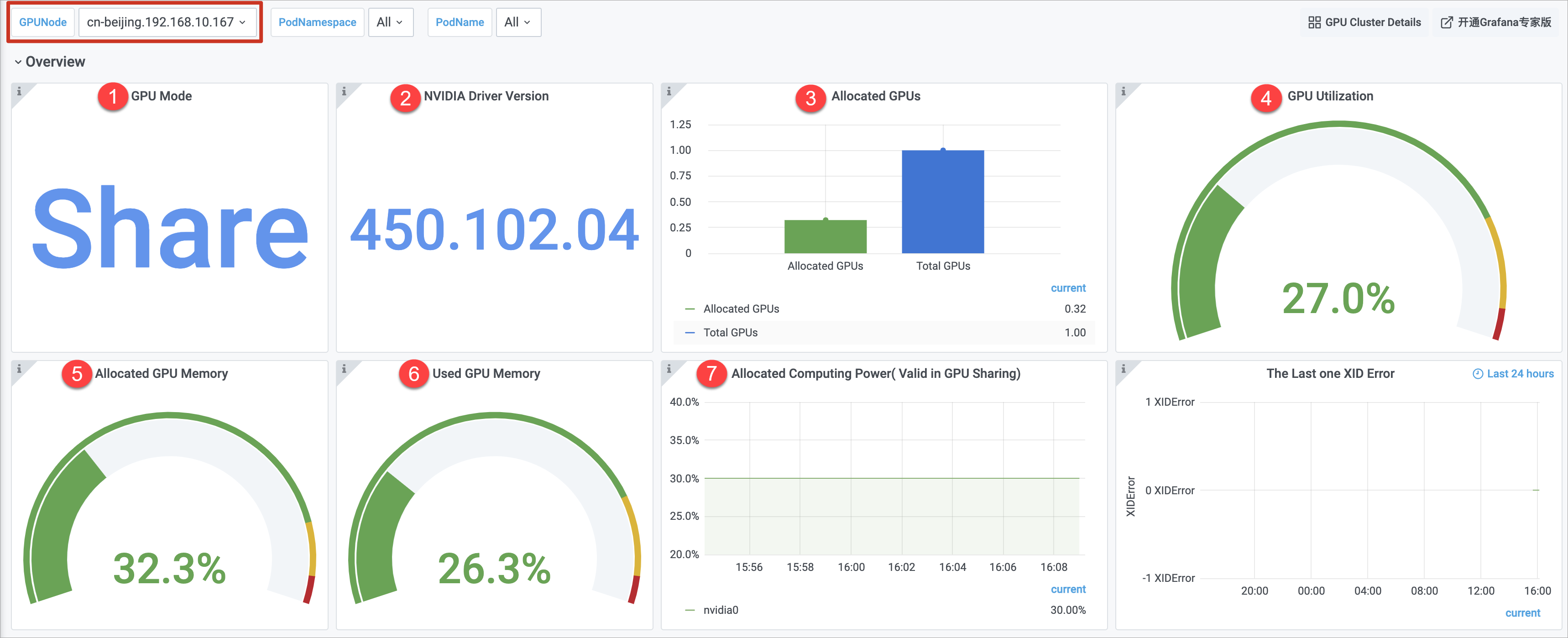

In this example, the cn-beijing.192.168.10.167 node is selected from the GPUNode drop-down list. For more information about the node dashboard, see Node dashboard.

Panel group | No. | Panel | Description |

Overview | ① | GPU Mode | The shared GPU mode is used. This indicates that pods can apply for GPU memory and computing power. |

Overview | ② | NVIDIA Driver Version | The GPU driver version is 450.102.04. |

Overview | ③ | Allocated GPUs | The node has one GPU in total and 0.32 GPUs are allocated. |

Overview | ④ | GPU Utilization | The average GPU utilization is 27%. |

Overview | ⑤ | Allocated GPU Memory | 32.3% of the total GPU memory is allocated. |

Overview | ⑥ | Used GPU Memory | 26.3% of the total GPU memory is used. |

Overview | ⑦ | Allocated Computing Power | 30% of the computing power of GPU 0 is allocated. Note: The Allocated Computing Power chart shows data only if computing power allocation is enabled for the node. In this example, only the Allocated Computing Power chart on the dashboard of the cn-beijing.192.168.10.167 node shows data. |

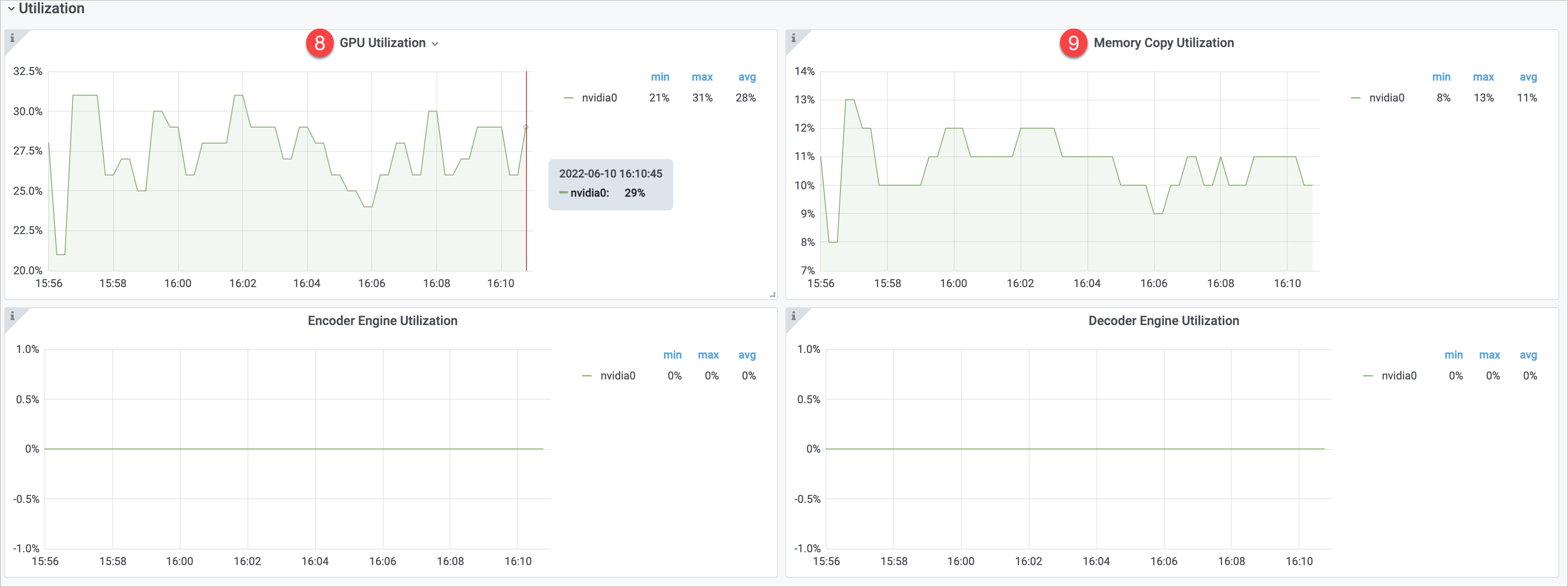

Utilization | ⑧ | GPU Utilization | The minimum, maximum, and average utilization of GPU 0 are 21%, 31%, and 28%, respectively. |

Utilization | ⑨ | Memory Copy Utilization | The minimum, maximum, and average utilization of the memory copies of GPU 0 are 8%, 13%, and 11%, respectively. |

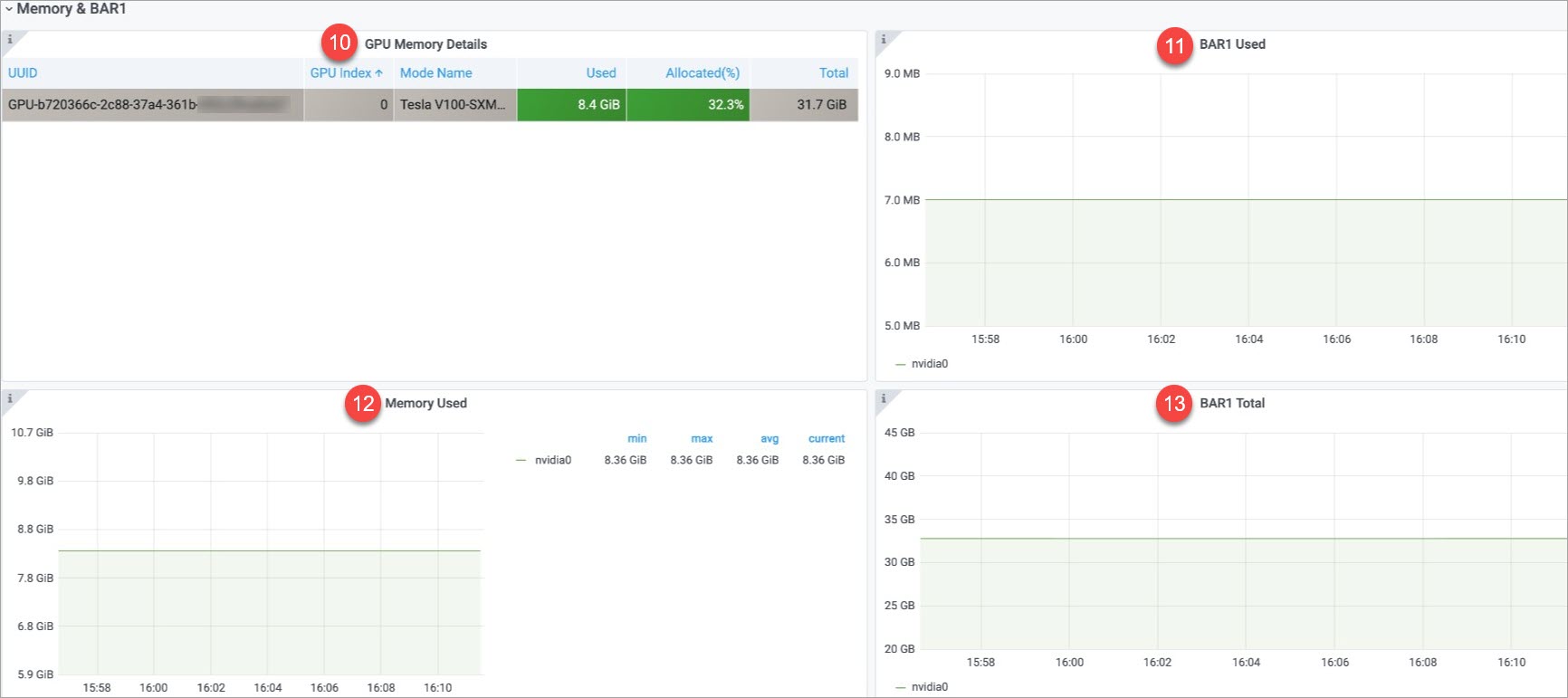

Memory&BAR1 | ⑩ | GPU Memory Details | GPU memory information, including the UUID of the GPU, the GPU index, and the GPU model. |

Memory&BAR1 | ⑪ | BAR1 Used | 7 MB of BAR1 memory is used. |

Memory&BAR1 | ⑫ | Memory Used | 8.36 GB of the GPU memory is used. |

Memory&BAR1 | ⑬ | BAR1 Total | The total amount of BAR1 memory is 33 GB. |

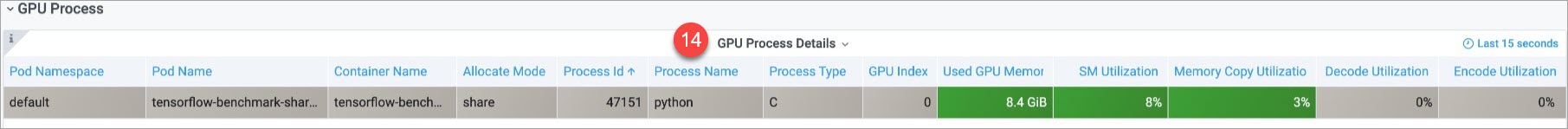

GPU Process | ⑭ | GPU Process Details | Information about GPU processes on the node. The information includes the name and namespace of the pod of each GPU process. |

You can click Profiling, Temperature & Energy, Clock, Retired Pages, and Violation to view more metrics.