When a service faces heavy traffic, overload, resource exhaustion, or malicious attacks, you can configure local throttling in the traffic management center to keep traffic within the desired thresholds and ensure continuous availability and stable performance. Local throttling is implemented by the Envoy proxy, which uses the token bucket algorithm to limit the number of requests sent to a service. The algorithm periodically adds tokens to a token bucket, and the Envoy proxy removes one token from the bucket each time it processes a request. When the bucket is empty, the proxy rejects new requests until tokens are added again, which prevents overload.
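The token bucket behavior described above can be sketched in a few lines of Python. This is only an illustrative model, not Envoy's implementation: the names `TokenBucket`, `quota`, `fill_interval`, and `simulate` are hypothetical, and the sketch assumes the bucket is refilled to its full quota once per interval.

```python
import time
from typing import Callable, List


class TokenBucket:
    """Illustrative token bucket (hypothetical model, not Envoy's code)."""

    def __init__(self, quota: int, fill_interval: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.quota = quota                  # assumed: bucket size == tokens per refill
        self.fill_interval = fill_interval  # seconds between refills
        self.clock = clock
        self.tokens = quota
        self.last_fill = clock()

    def _refill(self) -> None:
        elapsed = self.clock() - self.last_fill
        if elapsed >= self.fill_interval:
            # At least one full interval has passed: top the bucket up.
            intervals = int(elapsed // self.fill_interval)
            self.tokens = self.quota
            self.last_fill += intervals * self.fill_interval

    def allow(self) -> bool:
        """Consume one token if available; otherwise reject the request."""
        self._refill()
        if self.tokens > 0:
            self.tokens -= 1
            return True
        return False


def simulate(request_times: List[float], quota: int = 10,
             fill_interval: float = 60.0) -> List[int]:
    """Return an HTTP-like status (200 or 429) for each request timestamp."""
    now = [0.0]
    bucket = TokenBucket(quota, fill_interval, clock=lambda: now[0])
    statuses = []
    for t in request_times:
        now[0] = t
        statuses.append(200 if bucket.allow() else 429)
    return statuses
```

With a quota of 10 and a 60-second interval, `simulate([0.0] * 11)` accepts the first 10 requests and rejects the 11th, and a request that arrives 60 seconds after the start is accepted again.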

Prerequisites

A Service Mesh (ASM) instance is created and meets the following requirements:

If the ASM instance is of Enterprise Edition or Ultimate Edition, the version of the ASM instance must be 1.14.3 or later. If the version of the ASM instance is earlier than 1.14.3, update the ASM instance. For more information, see Update an ASM instance.

If the ASM instance is of Standard Edition, the version of the ASM instance must be 1.9 or later. In addition, you can use only the native rate limiting feature of Istio to implement local throttling for the ASM instance. The reference document varies with the Istio version. For more information about how to configure local throttling for the latest Istio version, see Enabling Rate Limits using Envoy.

Automatic sidecar proxy injection is enabled for the default namespace in the Container Service for Kubernetes (ACK) cluster. For more information, see the "Enable automatic sidecar proxy injection" section of the Manage global namespaces topic.

The sample services, HTTPBin and sleep, are deployed. The sleep service can access the HTTPBin service. For more information, see Deploy the HTTPBin application.

Scenario 1: Configure a throttling rule for requests destined for a specific port of a service

Configure a throttling rule on port 8000 of the HTTPBin service. After the throttling rule is configured, all requests destined for port 8000 of the HTTPBin service are subject to throttling.

Create a local throttling rule.

Log on to the ASM console. In the left-side navigation pane, choose .

On the Mesh Management page, click the name of the ASM instance. In the left-side navigation pane, choose . On the page that appears, click Create.

On the Create page, configure the following parameters based on your business requirements and then click OK.

Section

Parameter

Description

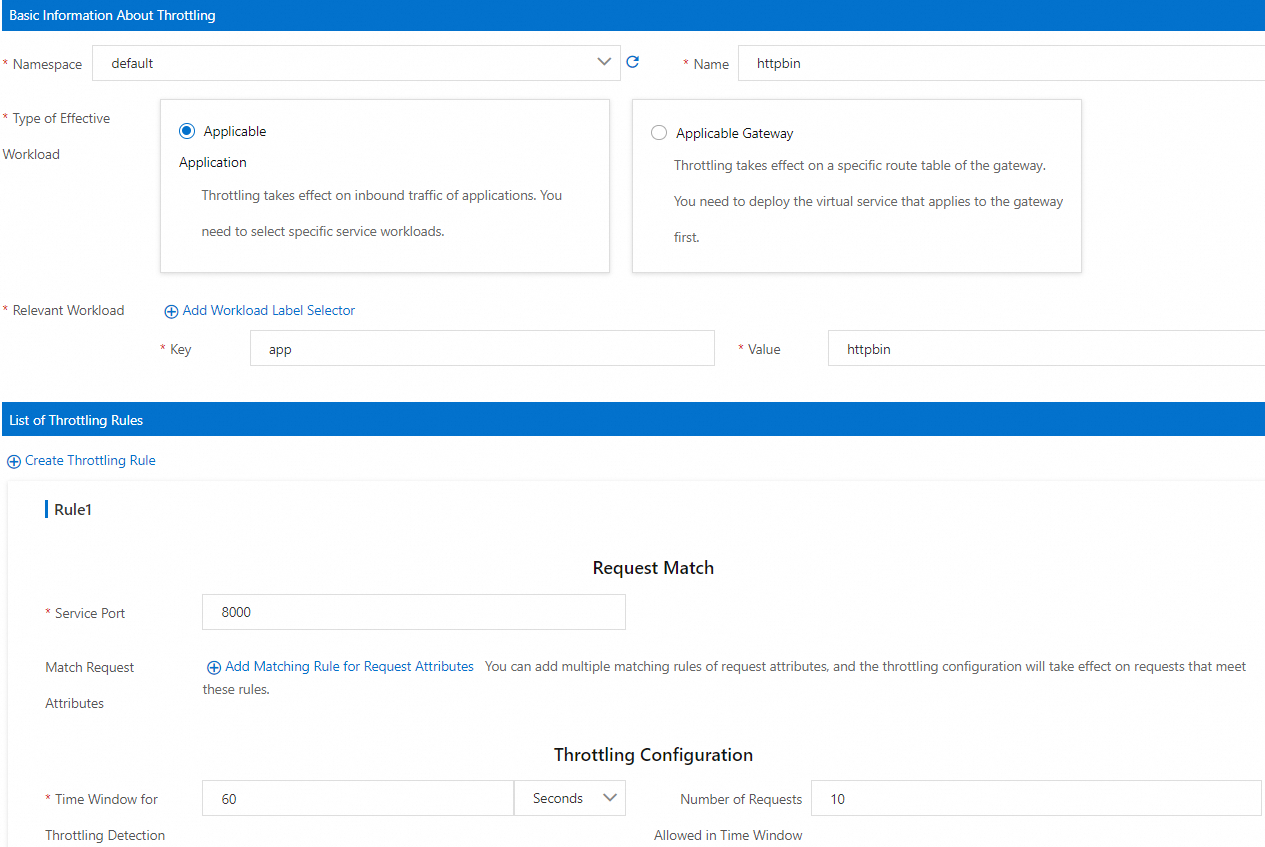

Basic Information About Throttling

Namespace

The namespace in which the workload for which the local throttling rule takes effect resides. For this example, select default.

Name

The name of the local throttling rule. For this example, enter httpbin.

Type of Effective Workload

The type of the workload for which throttling takes effect. You can select Applicable Application or Applicable Gateway. For this example, select Applicable Application.

Relevant Workload

You must enter key-value pairs to select a specific workload. For this example, set Key to app and Value to httpbin.

List of Throttling Rules

Service Port

The HTTP port declared in the Kubernetes Service of the HTTPBin service. For this example, enter the HTTP port 8000 of the HTTPBin service.

Throttling Configuration

Specifies the length of the time window for local throttling detection and the number of requests allowed in the time window. If the number of requests sent within the time window exceeds the upper limit, throttling is triggered for the requests. The following configurations are used in this example:

Set Time Window for Throttling Detection to 60 seconds.

Set Number of Requests Allowed in Time Window to 10.

The preceding configurations indicate that requests destined for workloads of this service cannot exceed 10 within 60 seconds.

The following YAML code shows the configurations of the local throttling rule specified in the preceding figure:

Verify the local throttling rule.

Run the following command to open a shell in the sleep pod:

    kubectl exec -it deploy/sleep -- sh

Run the following command to send 10 requests:

    for i in $(seq 1 10); do curl -v http://httpbin:8000/headers; done

Run the following command to send the 11th request:

    curl -v http://httpbin:8000/headers

Expected output:

    * Trying 172.16.245.130:8000...
    * Connected to httpbin (172.16.245.130) port 8000
    > GET /headers HTTP/1.1
    > Host: httpbin:8000
    > User-Agent: curl/8.5.0
    > Accept: */*
    >
    < HTTP/1.1 429 Too Many Requests
    < x-local-rate-limit: true
    < content-length: 18
    < content-type: text/plain
    < date: Tue, 26 Dec 2023 08:02:58 GMT
    < server: envoy
    < x-envoy-upstream-service-time: 2

The output indicates that the HTTP 429 status code is returned. The request is throttled.
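If a Python 3 interpreter is available in a client pod (an assumption; the sample sleep image may not include one), the same check can be scripted by counting status codes instead of reading verbose curl output. The following is a minimal sketch that uses only the standard library. The helper names `fetch_status` and `count_statuses` are hypothetical.

```python
import urllib.error
import urllib.request
from collections import Counter
from typing import Callable


def fetch_status(url: str) -> int:
    """Send a GET request and return the status code, including 4xx/5xx codes."""
    try:
        with urllib.request.urlopen(url, timeout=5) as resp:
            return resp.status
    except urllib.error.HTTPError as err:
        # urllib raises HTTPError for responses such as 429; keep the code.
        return err.code


def count_statuses(url: str, total: int,
                   fetch: Callable[[str], int] = fetch_status) -> Counter:
    """Send `total` sequential requests and count the returned status codes."""
    return Counter(fetch(url) for _ in range(total))
```

For example, `count_statuses("http://httpbin:8000/headers", 11)` would be expected to report ten 200 responses and one 429 response if the rule from this scenario is in effect and the token bucket is full when the test starts.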

Scenario 2: Configure a throttling rule for requests destined for a specified path on a specific port of a service

Configure a throttling rule on port 8000 of the HTTPBin service, and specify that throttling takes effect only on requests whose path starts with /headers. After the throttling rule is configured, requests destined for the /headers path on port 8000 of the HTTPBin service are subject to throttling. Requests destined for other paths are not affected.

Create a local throttling rule.

Log on to the ASM console. In the left-side navigation pane, choose .

On the Mesh Management page, click the name of the ASM instance. In the left-side navigation pane, choose . On the page that appears, click Create.

On the Create page, configure the following parameters based on your business requirements and then click OK.

Section

Parameter

Description

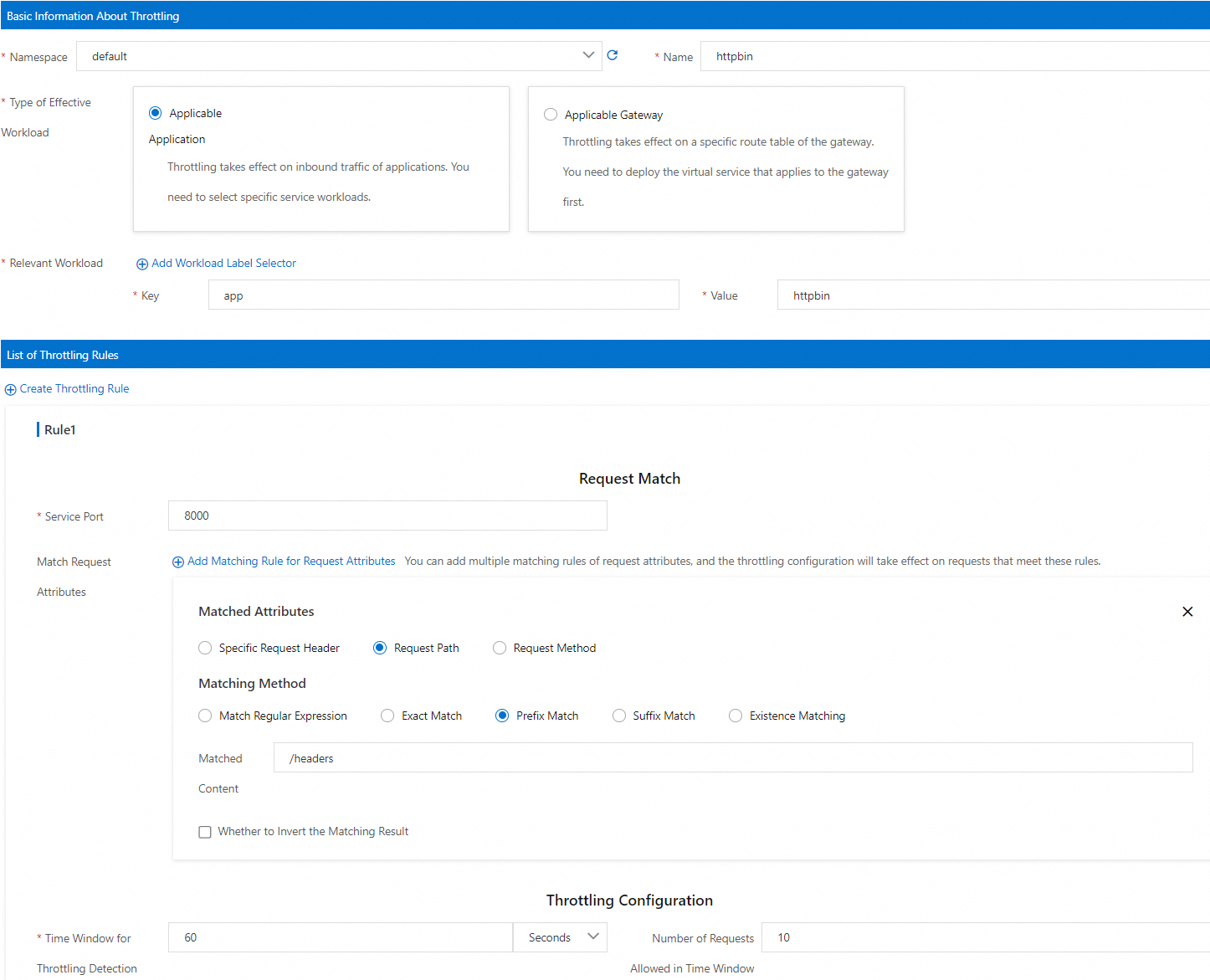

Basic Information About Throttling

Namespace

The namespace in which the workload for which the local throttling rule takes effect resides. For this example, select default.

Name

The name of the local throttling rule. For this example, enter httpbin.

Type of Effective Workload

The type of the workload for which throttling takes effect. You can select Applicable Application or Applicable Gateway. For this example, select Applicable Application.

Relevant Workload

You must enter key-value pairs to select a specific workload. For this example, set Key to app and Value to httpbin.

List of Throttling Rules

Service Port

The HTTP port declared in the Kubernetes Service of the HTTPBin service. For this example, enter the HTTP port 8000 of the HTTPBin service.

Match Request Attributes

The request matching rules. The configured throttling is triggered when requests meet the request matching rules. The following configurations are used in this example:

Select Request Path for Matched Attributes.

Select Prefix Match for Matching Method.

Set Matched Content to

/headers.

Throttling Configuration

Specifies the length of the time window for local throttling detection and the number of requests allowed in the time window. If the number of requests sent within the time window exceeds the upper limit, throttling is triggered for the requests. The following configurations are used in this example:

Set Time Window for Throttling Detection to 60 seconds.

Set Number of Requests Allowed in Time Window to 10.

The preceding configurations indicate that requests destined for workloads of this service cannot exceed 10 within 60 seconds.

Verify the local throttling rule.

Run the following command to open a shell in the sleep pod:

    kubectl exec -it deploy/sleep -- sh

Run the following command to send 10 requests:

    for i in $(seq 1 10); do curl -v http://httpbin:8000/headers; done

Run the following command to send the 11th request:

    curl -v http://httpbin:8000/headers

Expected output:

    * Trying 172.16.245.130:8000...
    * Connected to httpbin (172.16.245.130) port 8000
    > GET /headers HTTP/1.1
    > Host: httpbin:8000
    > User-Agent: curl/8.5.0
    > Accept: */*
    >
    < HTTP/1.1 429 Too Many Requests
    < x-local-rate-limit: true
    < content-length: 18
    < content-type: text/plain
    < date: Tue, 26 Dec 2023 08:02:58 GMT
    < server: envoy
    < x-envoy-upstream-service-time: 2

The output indicates that the HTTP 429 status code is returned. The request is throttled.

Run the following command to send a request to the /get path of the HTTPBin service:

    curl -v http://httpbin:8000/get

Expected output:

    * Trying 192.168.243.21:8000...
    * Connected to httpbin (192.168.243.21) port 8000 (#0)
    > GET /get HTTP/1.1
    > Host: httpbin:8000
    > User-Agent: curl/8.1.2
    > Accept: */*
    >
    < HTTP/1.1 200 OK
    < server: envoy
    < date: Thu, 11 Jan 2024 03:46:11 GMT
    < content-type: application/json
    < content-length: 431
    < access-control-allow-origin: *
    < access-control-allow-credentials: true
    < x-envoy-upstream-service-time: 1
    <
    {
      "args": {},
      "headers": {
        "Accept": "*/*",
        "Host": "httpbin:8000",
        "User-Agent": "curl/8.1.2",
        "X-Envoy-Attempt-Count": "1",
        "X-Forwarded-Client-Cert": "By=spiffe://cluster.local/ns/default/sa/httpbin;Hash=be10819991ba1a354a89e68b3bed1553c12a4fba8b65fbe0f16299d552680b29;Subject=\"\";URI=spiffe://cluster.local/ns/default/sa/sleep"
      },
      "origin": "127.0.0.6",
      "url": "http://httpbin:8000/get"
    }

The output indicates that the HTTP 200 status code is returned. Requests destined for other paths of the HTTPBin service are not controlled by the throttling rule.
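The difference between the two results comes from the prefix match: only requests whose path starts with /headers consume the request budget. The following Python sketch models that decision for a single time window. It is a simplified illustration, not the proxy's implementation, and the names `PrefixRule` and `handle` are hypothetical.

```python
class PrefixRule:
    """Hypothetical model of one throttling rule: a path prefix plus a budget."""

    def __init__(self, prefix: str, quota: int) -> None:
        self.prefix = prefix
        self.remaining = quota  # budget for the current time window only

    def matches(self, path: str) -> bool:
        # Prefix Match: any path that starts with the configured content.
        return path.startswith(self.prefix)


def handle(path: str, rule: PrefixRule) -> int:
    """Return 429 if the path matches the rule and the budget is spent, else 200."""
    if not rule.matches(path):
        return 200  # requests that do not match bypass throttling
    if rule.remaining > 0:
        rule.remaining -= 1
        return 200
    return 429
```

With `rule = PrefixRule("/headers", 10)`, the 11th call to `handle("/headers", rule)` returns 429, while `handle("/get", rule)` still returns 200. Because this is a plain prefix comparison, a path such as /headers/extra is also counted against the same budget in this model.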

Related operations

View metrics related to local throttling

The local throttling feature generates the metrics listed in the following table.

| Metric | Description |
| --- | --- |
| envoy_http_local_rate_limiter_http_local_rate_limit_enabled | Total number of requests that were checked against a throttling rule |
| envoy_http_local_rate_limiter_http_local_rate_limit_ok | Total number of requests for which a token was available in the token bucket |
| envoy_http_local_rate_limiter_http_local_rate_limit_rate_limited | Total number of requests for which no token was available (throttling is not necessarily enforced) |
| envoy_http_local_rate_limiter_http_local_rate_limit_enforced | Total number of requests to which throttling was applied (for example, the HTTP 429 status code is returned) |

You can configure the proxyStatsMatcher parameter of a sidecar proxy to enable the sidecar proxy to report metrics. Then, you can use Prometheus to collect and view metrics related to throttling.

Configure the proxyStatsMatcher parameter to enable a sidecar proxy to report throttling-related metrics.

After you select proxyStatsMatcher, select Regular Expression Match and set this parameter to .*http_local_rate_limit.*. Alternatively, click Add Local Throttling Metrics. For more information, see the "proxyStatsMatcher" section in Configure sidecar proxies.

Redeploy the HTTPBin service. For more information, see the "(Optional) Redeploy workloads" section in Configure sidecar proxies.

Configure local throttling and perform request tests by referring to Scenario 1 or Scenario 2.

Run the following command to view the local throttling metrics of the HTTPBin service:

    kubectl exec -it deploy/httpbin -c istio-proxy -- curl localhost:15020/stats/prometheus | grep http_local_rate_limit

Expected output:

    envoy_http_local_rate_limiter_http_local_rate_limit_enabled{} 37
    envoy_http_local_rate_limiter_http_local_rate_limit_enforced{} 17
    envoy_http_local_rate_limiter_http_local_rate_limit_ok{} 20
    envoy_http_local_rate_limiter_http_local_rate_limit_rate_limited{} 17
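To read these counters programmatically, the text output can be parsed with a few lines of Python. The sketch below is illustrative: `parse_counters` is a hypothetical helper, the regular expression handles only simple `name{labels} value` lines, and `SAMPLE` is the expected output shown above.

```python
import re
from typing import Dict

SAMPLE = """\
envoy_http_local_rate_limiter_http_local_rate_limit_enabled{} 37
envoy_http_local_rate_limiter_http_local_rate_limit_enforced{} 17
envoy_http_local_rate_limiter_http_local_rate_limit_ok{} 20
envoy_http_local_rate_limiter_http_local_rate_limit_rate_limited{} 17
"""

# Simplified line pattern: metric name, optional {labels}, numeric value.
LINE = re.compile(
    r"^(?P<name>[a-zA-Z_:][a-zA-Z0-9_:]*)(?:\{[^}]*\})?\s+(?P<value>\S+)$"
)


def parse_counters(text: str) -> Dict[str, float]:
    """Map the short counter name (enabled, ok, ...) to its summed value."""
    counters: Dict[str, float] = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#"):
            continue  # skip blank lines and HELP/TYPE comments
        match = LINE.match(line)
        if match is None:
            continue
        # Keep only the part after the last "http_local_rate_limit_" prefix.
        short = match.group("name").rsplit("http_local_rate_limit_", 1)[-1]
        counters[short] = counters.get(short, 0.0) + float(match.group("value"))
    return counters
```

For the sample output, `parse_counters(SAMPLE)` yields 37 for `enabled`, 20 for `ok`, and 17 for both `rate_limited` and `enforced`. In this sample, the `ok` and `rate_limited` counts add up to the `enabled` count.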

Configure metric collection and alerts for local throttling

After you configure local throttling metrics, you can configure a Prometheus instance to collect the metrics. You can also configure alert rules based on key metrics. This way, alerts are generated when local throttling occurs. The following section describes how to configure metric collection and alerts for local throttling. In this example, Managed Service for Prometheus is used.

In Managed Service for Prometheus, connect the ACK cluster on the data plane to the Alibaba Cloud ASM component or upgrade the Alibaba Cloud ASM component to the latest version. This ensures that the local throttling metrics can be collected by Managed Service for Prometheus. For more information about how to integrate components into ARMS, see Component management. (If you have configured a self-managed Prometheus instance to collect metrics of an ASM instance by referring to Monitor ASM instances by using a self-managed Prometheus instance, you do not need to perform this step.)

Create an alert rule for local throttling. For more information, see Use a custom PromQL statement to create an alert rule. The following example demonstrates how to specify key parameters for configuring an alert rule. For more information about how to configure other parameters, see the preceding documentation.

| Parameter | Example | Description |
| --- | --- | --- |
| Custom PromQL Statements | (sum by(namespace, pod_name) (increase(envoy_http_local_rate_limiter_http_local_rate_limit_enforced[1m]))) > 0 | In this example, the increase function queries the number of requests that were throttled in the last 1 minute. The result is grouped by the namespace and name of the pod that triggers throttling. An alert is reported when the number of requests throttled within 1 minute is greater than 0. |
| Alert Message | Local throttling occurs! Namespace: {{$labels.namespace}}, Pod that triggers throttling: {{$labels.pod_name}}. Number of requests that are throttled in the last 1 minute: {{ $value }} | The alert message sent to the user. The message in this example shows the namespace of the pod that triggers throttling, the name of the pod, and the number of requests that were throttled in the last 1 minute. |
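The logic of the PromQL statement can be approximated in Python to show what the alert condition evaluates. This is a simplified sketch: the function names are hypothetical, and unlike the real Prometheus `increase` function it does not extrapolate to the window boundaries. It only sums the growth between consecutive samples and treats a drop as a counter reset.

```python
from collections import defaultdict
from typing import Dict, List, Tuple


def increase(samples: List[float]) -> float:
    """Approximate counter growth across chronological samples in one window."""
    total = 0.0
    for prev, cur in zip(samples, samples[1:]):
        # A lower value means the counter was reset (for example, pod restart).
        total += (cur - prev) if cur >= prev else cur
    return total


def firing_alerts(series: List[Tuple[Dict[str, str], List[float]]],
                  threshold: float = 0.0) -> Dict[Tuple[str, str], float]:
    """Group by (namespace, pod_name), sum the increases, keep values > threshold."""
    grouped: Dict[Tuple[str, str], float] = defaultdict(float)
    for labels, samples in series:
        key = (labels.get("namespace", ""), labels.get("pod_name", ""))
        grouped[key] += increase(samples)
    return {key: value for key, value in grouped.items() if value > threshold}
```

For example, a pod whose enforced counter goes from 3 to 8 within the window produces an alert entry with the value 5, while a pod whose counter stays at 4 produces none.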

FAQ

Why does the local throttling configured for a service not take effect?

The service does not use the HTTP protocol for communications, or ASM fails to identify the HTTP protocol

The local throttling feature is available only for the HTTP protocol. Before you configure local throttling rules for a service, ensure that the service uses HTTP or an HTTP-based application-layer protocol for communications. These application-layer protocols include gRPC and Dubbo3.

If a service uses the HTTP protocol for communications, you need to specify the protocol of the service in a standard manner. This helps ensure that ASM can correctly identify the application-layer protocol of the service. For more information, see How do I specify the protocol of a service in a standard manner?

You have used CRDs to modify the inbound traffic configurations of the service in sidecar mode

By default, ASM automatically configures an inbound traffic listener for sidecar proxies based on service declaration. Local throttling and global throttling rules are enabled by this default configuration.

To expose a cluster application that listens to the localhost to other pods in the cluster, you may have used Custom Resource Definitions (CRDs) to modify the default configurations of the service in sidecar mode. For more information, see How can I expose a cluster application that listens to the localhost to other pods in the cluster?

In this case, local throttling does not take effect if the throttling rule specifies the port declared in the Kubernetes Service. You need to change the service port in the throttling rule to the inbound port specified in the sidecar CRD.

For example, a local throttling rule is configured for the port 8000 of an HTTPBin service. This rule does not take effect if the following sidecar CRD is configured for the HTTPBin service:

    apiVersion: networking.istio.io/v1beta1
    kind: Sidecar
    metadata:
      name: localhost-access
      namespace: default
    spec:
      ingress:
        - defaultEndpoint: '127.0.0.1:80'
          port:
            name: http
            number: 80
            protocol: HTTP
      workloadSelector:
        labels:
          app: httpbin

You must enter 80 as the service port when creating a local throttling rule to make sure that the rule takes effect.
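The port rule described in this FAQ can be summarized as a small check. The Python sketch below is only a model of the behavior stated above, not ASM code, and the function names are hypothetical. It assumes that when a Sidecar resource defines ingress ports, only those ports are valid targets for a throttling rule. Otherwise, the ports declared in the Kubernetes Service are used.

```python
from typing import Iterable, Optional, Set


def effective_inbound_ports(service_ports: Iterable[int],
                            sidecar_ingress_ports: Optional[Iterable[int]] = None
                            ) -> Set[int]:
    """Ports that a throttling rule can target under the behavior described above."""
    ingress = set(sidecar_ingress_ports or [])
    # A Sidecar ingress definition replaces the default inbound listeners.
    return ingress if ingress else set(service_ports)


def rule_takes_effect(rule_port: int,
                      service_ports: Iterable[int],
                      sidecar_ingress_ports: Optional[Iterable[int]] = None) -> bool:
    """Check whether a rule configured on `rule_port` would match an inbound port."""
    return rule_port in effective_inbound_ports(service_ports, sidecar_ingress_ports)
```

For the example above, `rule_takes_effect(8000, [8000], [80])` returns False and `rule_takes_effect(80, [8000], [80])` returns True.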

References

If the version of your ASM instance is 1.19.0 or later, you can use the limit_overrides field in the local throttling YAML file to match requests by using query parameters. For more information, see Description of ASMLocalRateLimiter fields.

You can use ASMGlobalRateLimiter to configure global throttling for ingress gateways and inbound traffic directed to services. For more information, see Use ASMGlobalRateLimiter to configure global throttling for inbound traffic directed to a service into which a sidecar proxy is injected.

You can configure local throttling or global throttling for an ingress gateway in the ASM console. For more information, see Configure local throttling on an ingress gateway and Configure global throttling on an ingress gateway.

You can use the warm-up feature to progressively increase the number of requests within a specified period of time to avoid issues such as request timeout and data loss. For more information, see Use the warm-up feature.

You can configure the connectionPool field to implement circuit breaking. Circuit breaking can be used to protect your system from further damage in the event of a system failure or overload. For more information, see Configure the connectionPool field to implement circuit breaking.