You can create jobs to develop tasks in a project. This topic describes job-related operations.

Prerequisites

A project is created. For more information, see Manage projects.

Create a job

- Go to the Data Platform tab.

  - Log on to the Alibaba Cloud EMR console by using your Alibaba Cloud account.

  - In the top navigation bar, select the region where your cluster resides and select a resource group based on your business requirements.

  - Click the Data Platform tab.

  - In the Projects section of the page that appears, find the project that you want to manage and click Edit Job in the Actions column.

- Create a job.

Configure a job

For more information about how to develop and configure each type of job, see Jobs. This section describes how to configure the parameters of a job on the Basic Settings, Advanced Settings, Shared Libraries, and Alert Settings tabs in the Job Settings panel.

Add annotations

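You can add annotations to the job content to specify job parameters. Add an annotation in the following format: !!! @<Annotation name>: <Annotation content>. Each annotation starts with three exclamation points (!!!). Add one annotation per line.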

| Annotation name | Description | Example |
|---|---|---|
| rem | Adds a comment. | |
| env | Adds an environment variable. | |
| var | Adds a custom variable. | |
| resource | Adds a resource file. | |
| sharedlibs | Adds dependency libraries. This annotation is valid only in Streaming SQL jobs. Separate multiple dependency libraries with commas (,). | |
| scheduler.queue | Specifies the queue to which the job is submitted. | |
| scheduler.vmem | Specifies the memory required to run the job. Unit: MiB. | |
| scheduler.vcores | Specifies the number of vCores required to run the job. | |
| scheduler.priority | Specifies the priority of the job. Valid values: 1 to 100. | |
| scheduler.user | Specifies the user who submits the job. | |

- Invalid annotations are automatically skipped. For example, an unknown annotation or an annotation whose content is in an invalid format will be skipped.

- Job parameters specified in annotations take precedence over job parameters specified in the Job Settings panel. If a parameter is specified both in an annotation and in the Job Settings panel, the parameter setting specified in the annotation takes effect.
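The annotation rules above can be sketched in a few lines of Python. This is only an illustration of the documented behavior, not the parser that EMR uses: the function names, the panel_settings dictionary, and the assumption that an annotation starts at the beginning of a line are hypothetical, and the numeric checks on scheduler.vmem and scheduler.vcores are an assumed reading of "invalid format".

```python
import re

# Annotation names listed in the table above.
KNOWN_ANNOTATIONS = {
    "rem", "env", "var", "resource", "sharedlibs",
    "scheduler.queue", "scheduler.vmem", "scheduler.vcores",
    "scheduler.priority", "scheduler.user",
}

# One annotation per line: "!!! @<Annotation name>: <Annotation content>".
# Assumption: the three exclamation points start the line.
ANNOTATION_RE = re.compile(r"^!!!\s*@([\w.]+):\s*(.*)$")


def parse_annotations(job_content):
    """Return (name, content) pairs for valid annotations; skip invalid ones."""
    valid = []
    for line in job_content.splitlines():
        match = ANNOTATION_RE.match(line)
        if match is None:
            continue  # ordinary job content, not an annotation
        name, content = match.group(1), match.group(2).strip()
        if name not in KNOWN_ANNOTATIONS:
            continue  # unknown annotation: skipped
        if name in ("scheduler.vmem", "scheduler.vcores", "scheduler.priority"):
            if not content.isdecimal():
                continue  # assumed rule: these values must be whole numbers
            if name == "scheduler.priority" and not 1 <= int(content) <= 100:
                continue  # documented range: 1 to 100
        valid.append((name, content))
    return valid


def effective_settings(job_content, panel_settings):
    """Merge scheduler.* annotations over the Job Settings panel values."""
    merged = dict(panel_settings)
    for name, content in parse_annotations(job_content):
        if name.startswith("scheduler."):
            merged[name] = content  # the annotation takes precedence
    return merged


job = "\n".join([
    "!!! @rem: nightly aggregation",
    "!!! @scheduler.queue: default",
    "!!! @scheduler.priority: 500",   # out of range, skipped
    "!!! @unknown: ignored",          # unknown name, skipped
    "echo hello",
])
panel = {"scheduler.queue": "batch", "scheduler.vmem": "1024"}
print(effective_settings(job, panel))
# {'scheduler.queue': 'default', 'scheduler.vmem': '1024'}
```

In this sketch the queue from the annotation replaces the panel value, the panel memory setting is kept because no annotation overrides it, and the two invalid annotations have no effect.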

Run a job

- Run the job that you created.

- On the job page, click Run in the upper-right corner to run the job.

- In the Run Job dialog box, select a resource group and the cluster that you created.

- Click OK.

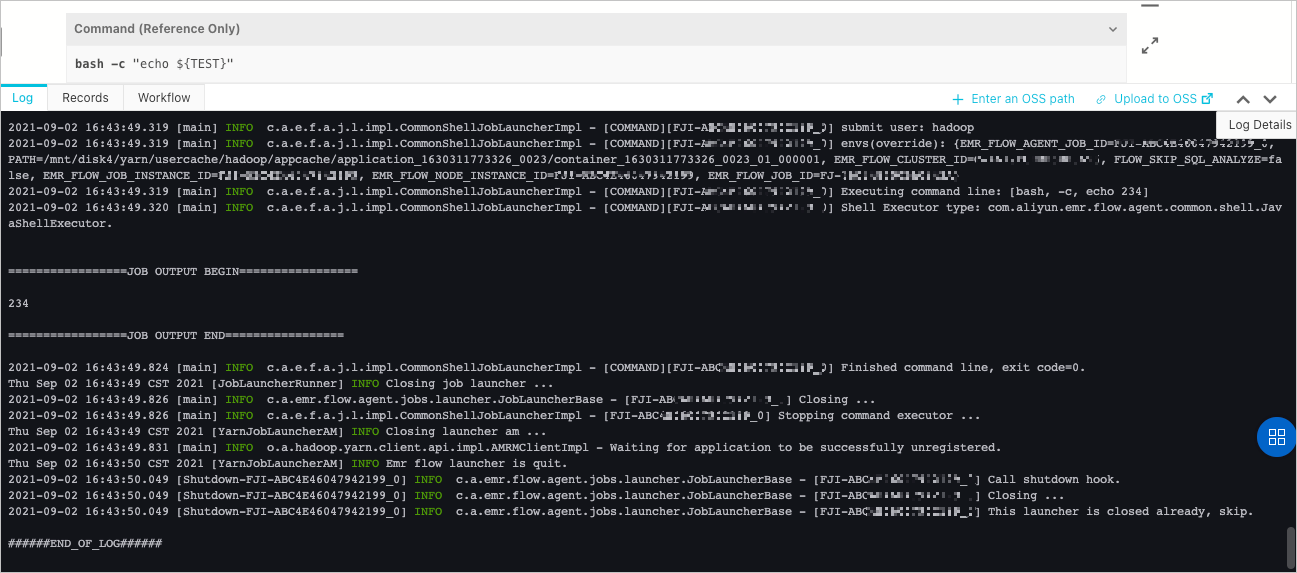

- View running details.

Operations that you can perform on jobs

| Operation | Description |
|---|---|
| Clone Job | Clones the configurations of a job to generate a new job in the same folder. |
| Rename Job | Renames a job. |
| Delete Job | Deletes a job. You can delete a job only if the job is not associated with a workflow or the associated workflow is not running or being scheduled. |

Job submission modes

The spark-submit process, which is the launcher in a data development module, is used to submit Spark jobs. In most cases, this process occupies more than 600 MiB of memory. The Memory (MB) parameter in the Job Settings panel specifies the size of the memory allocated to the launcher.

| Job submission mode | Description |
|---|---|
| Header/Gateway Node | In this mode, the spark-submit process runs on the master node and is not monitored by YARN. The spark-submit process requests a large amount of memory. A large number of jobs consume many resources of the master node, which undermines cluster stability. |
| Worker Node | In this mode, the spark-submit process runs on a core node, occupies a YARN container, and is monitored by YARN. This mode reduces the resource usage on the master node. |

Memory consumed by a job instance = Memory consumed by the launcher + Memory consumed by the job that corresponds to the job instance

Memory consumed by a job = Memory consumed by the spark-submit logical module (not the process) + Memory consumed by the driver + Memory consumed by the executor

| Launch mode of Spark application | Job submission mode | Process in which spark-submit and driver run | Process description |
|---|---|---|---|
| yarn-client mode | Submit a job in LOCAL mode. | The driver runs in the same process as spark-submit. | The process used to submit a job runs on the master node and is not monitored by YARN. |
| yarn-client mode | Submit a job in YARN mode. | The driver runs in the same process as spark-submit. | The process used to submit a job runs on a core node, occupies a YARN container, and is monitored by YARN. |
| yarn-cluster mode | | The driver runs in a different process from spark-submit. | The driver occupies a YARN container. |
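The two memory formulas in this section can be worked through with a short calculation. The following sketch only restates that arithmetic: the function names and all of the MiB figures are hypothetical values chosen for illustration, not measurements or defaults from EMR.

```python
def job_memory_mib(submit_module_mib, driver_mib, executor_mib):
    """Memory consumed by a job: spark-submit logical module + driver + executor."""
    return submit_module_mib + driver_mib + executor_mib


def job_instance_memory_mib(launcher_mib, job_mib):
    """Memory consumed by a job instance: launcher + the job itself."""
    return launcher_mib + job_mib


# Hypothetical sizes in MiB, for illustration only.
launcher = 640        # launcher allocation, the Memory (MB) parameter
submit_module = 512   # spark-submit logical module
driver = 2048         # Spark driver
executor = 4096       # executor memory

job = job_memory_mib(submit_module, driver, executor)
instance = job_instance_memory_mib(launcher, job)
print(job, instance)  # 6656 7296
```

With these example numbers, the launcher adds 640 MiB on top of the 6656 MiB that the job consumes.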