This topic provides an in-depth analysis of the causes and solutions for the Too many parts error.

Introduction

Basic concepts

In ApsaraDB for ClickHouse tables, data is stored in data parts, and each data part is sorted by using the primary key. By default, the primary key indicates the columns specified in the ORDER BY clause.

Each data part has its own primary key index to efficiently scan and locate data within the data part.

When data is inserted into a table, a data part is created, and each data part is sorted in lexicographical order based on the primary key.

For example, if the primary key columns are CounterID and Date, data in the data part is first sorted by CounterID and then by Date within each CounterID value.

ApsaraDB for ClickHouse merges data parts to store data efficiently, and this process is similar to the concept of Log-structured Merge (LSM) trees. When data parts are merged, the primary key indexes are also merged.

Data merge mechanism

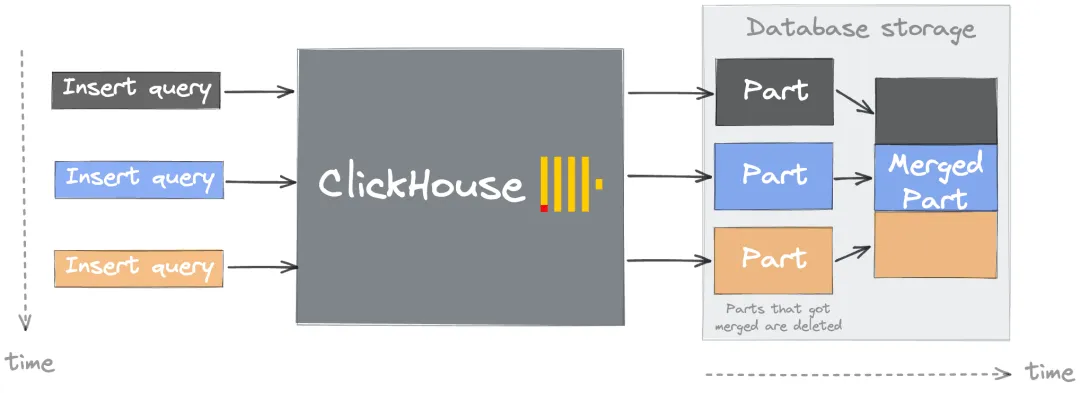

The preceding flowchart shows the data merge mechanism of ApsaraDB for ClickHouse.

Merge triggers: As data is inserted, the number of data parts gradually increases. ApsaraDB for ClickHouse automatically merges small data parts into larger data parts by using the background process Merge Tree. This reduces the number of data parts that need to be scanned during queries.

Merge process:

Ordered merge: During merges, the system reads data from multiple small data parts and writes it in the order specified by the primary key into a new data part, ensuring that the merged data part remains ordered.

No additional sorting: Since each data part is already sorted by a primary key, the merge process uses linear scanning and row-by-row writing. No additional sorting is required.

Compression and optimization: Merged data may have better compression rates, especially when similar values are grouped together. This improves storage and query efficiency.

Merge strategy:

Merges are triggered when the size, number, and age of data parts meet specific conditions. For more information about the parameters controlling merge triggers, see mergetree related parameters.

The system merges the older data parts first to reduce resource contention caused by frequent merges of newly inserted data.

Consequences of excessive data parts

Decreased query performance: More indexes and files need to be scanned, increasing I/O overhead and causing slower queries.

Startup delay: A large number of data parts can extend metadata loading time, resulting in slower startup.

Increased merges: The system needs to frequently merge data parts to maintain efficient queries. Although merges are executed in parallel, improper configuration can lead to further performance degradation. For example, you set the

parts_to_throw_insertormax_parts_in_totalparameter to a value that exceeds the limit.Risk of improper configuration: To merge excessive data parts, you must configure the parameters such as parts_to_throw_insert and max_parts_in_total. However, improper configuration of the parameters can lead to reduced query efficiency.

ClickHouse Keeper pressure: Excessive data parts increase the metadata management burden, exacerbating the load on Keeper.

Cause analysis and solutions

The following section provides cause analysis and solutions for the Too many parts error.

Cause 1: Inappropriate partition key

A common cause is using a high-cardinality column as a partition key when you create a table.

The following items describe the features of partition keys:

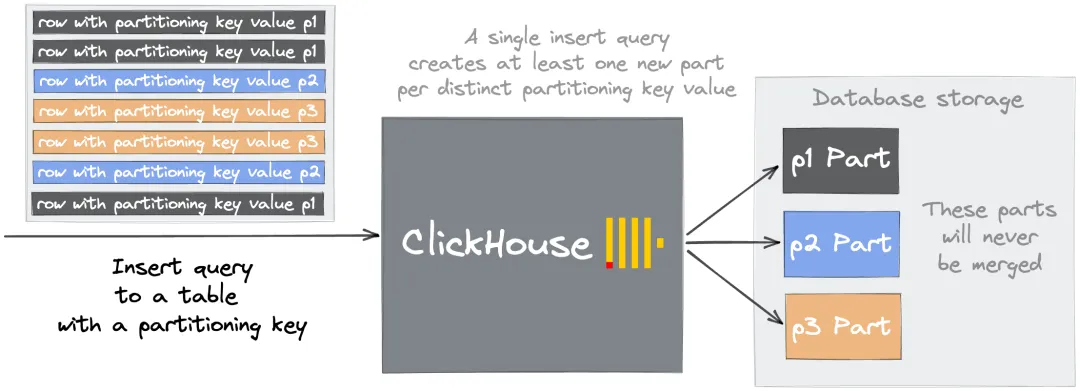

When creating a table, you can specify a column as a partition key to segment data.

For each value of the partition key, a new directory is created.

Partition keys are a data management technique that enables logical partition of data within a table.

For example, after partitioning data by day, you can execute statements such as DROP PARTITION to quickly delete data subsets.

Why does using high-cardinality columns as partition keys lead to the Too many parts error?

Partition keys are used to logically partition data but can be misused if not configured properly. This occurs because each unique partition key value generates a separate partition, and the system create separate data parts for each partition. In this case, the system does not merge data parts across partitions.

For example, if you use a high-cardinality column date_time_ms (DATETIME type) as the partition key, data parts are distributed across thousands of partitions and will never be merged until the number of data parts in a partition exceeds the specified limits. This triggers the "Too many inactive parts (N). Parts cleaning are processing significantly slower than inserts" error in subsequent inserts.

Solution

To solve the preceding issue, select a partition key with cardinality less than 1,000.

Cause 2: Many small batch inserts

The Too many parts error can also be caused by many small batch inserts. When data is inserted in small batches, each insertion generates a new data part.

Solutions

To keep the number of data parts within a manageable range, you can use the following solutions.

Synchronous batch insert: Accumulate data on the client side into a large batch and insert that batch in a single operation. We recommend that each batch contains at least 1,000 rows.

Asynchronous batch insert:

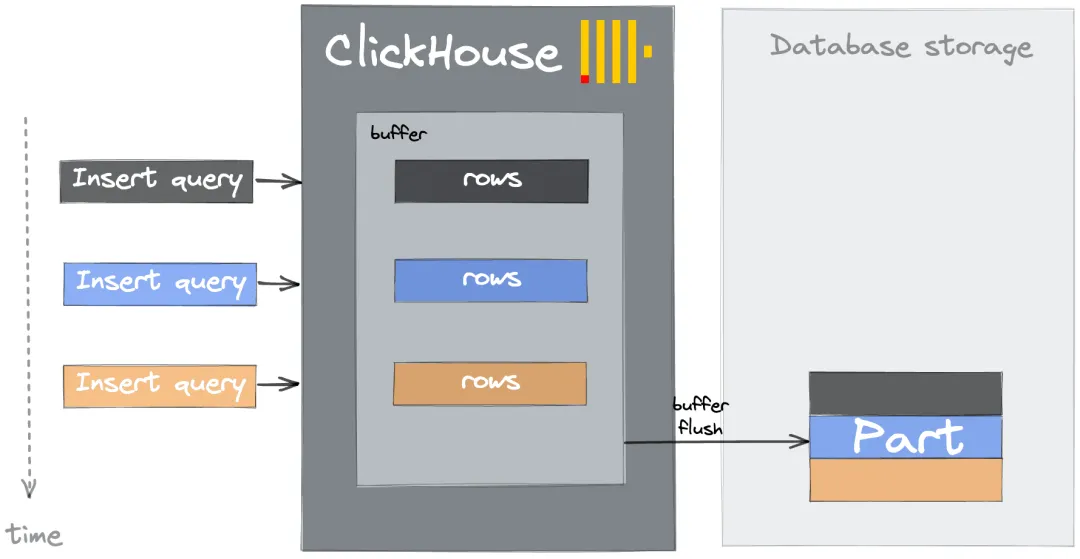

Local cache: If the client cannot cache data, you can perform asynchronous insertion in ApsaraDB for ClickHouse. In this case, ApsaraDB for ClickHouse caches the inserted data locally and then inserts it into the underlying table.

Buffer table cache: Buffer tables can store data temporarily in memory buffers and then periodically write it to the destination table in batches. This can also reduce frequent writes and excessive data parts caused by small batch inserts. However, it also has advantages and disadvantages.

Advantages: Buffer table caches allow data queries in the buffer and buffer table caches are compatible with the buffers of materialized view target tables.

Disadvantages: Buffer table caches have limited fault tolerance, as data remains in the memory until it is flushed to the destination table.

The following diagram shows how buffer tables process data.

Cause 3: Excessive materialized views

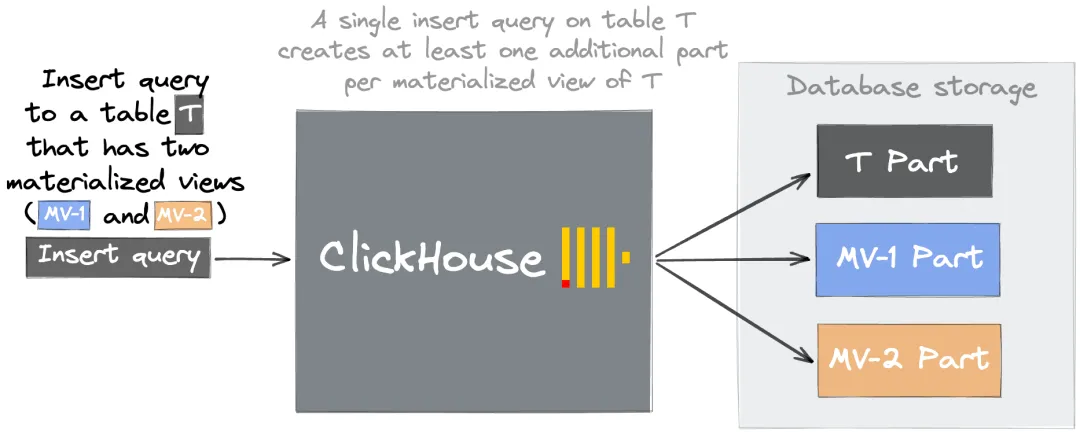

Excessive materialized views can result in an accumulation of data parts.

When data is written to the source table specified in the SELECT clause of a materialized view, the data is processed by the query in the SELECT clause, and then the processed data is inserted into the materialized view. If the source table receives frequent small batch inserts, such as multiple inserts per second, each insert triggers a new data part in the destination table, leading to excessive accumulation of data parts. The following diagram shows the process:

Table T is associated with materialized views MV-1 and MV-2. When one row of data is inserted into Table T, Table T produces one data part, and each materialized view produces one data part.

In addition to excessive materialized views, improperly configured ORDER BY or PARTITION BY settings can prevent data parts from being merged. This further leads to too many data parts. For more information, see Create Materialized View.

Solutions

Optimize materialized view design:

Configure reasonable partitions and primary keys: Reasonable

ORDER BYorPARTITION BYsettings.Avoid high-cardinality partition keys: High-cardinality partition keys prevent data part merging by creating isolated partitions. Example: DATETIME type columns.

Reduce redundant views:

Merge similar views: If multiple views have similar aggregation logic, they can be integrated into one view with unified output results.

Evaluate necessity: Delete infrequently used materialized views, or replace pre-computation with lightweight queries.

Cause 4: Mutation issues

Mutations can also cause the accumulation of data parts.