PolarDB Serverless builds on the technology described in the paper "PolarDB Serverless: A Cloud Native Database for Disaggregated Data Centers". This topic uses Sysbench to test the scalability of PolarDB Serverless. You can follow the same procedure to run your own tests and quickly see how Serverless scales.

Test configuration

The ECS instance and the PolarDB for PostgreSQL cluster are in the same region and the same virtual private cloud (VPC).

The PolarDB for PostgreSQL cluster has the following configurations:

Number of clusters: 1

Billing method: Serverless

Database engine:

Edition: Standard Edition

Storage type and capacity: ESSD AutoPL (Provisioned IOPS: 0), 100 GB

Note: When you create the Serverless cluster, keep the default settings for the maximum and minimum number of read-only nodes and for the maximum and minimum specifications of a single node.

ECS instance configurations:

Number of instances: 1

Instance type: ecs.g7.4xlarge (16 vCPUs, 64 GiB of memory)

Image: Alibaba Cloud Linux 3.2104 LTS 64-bit

System disk: standard SSD, performance PL0, 100 GB

Test tool

Sysbench is an open-source, cross-platform benchmarking tool that is widely used to evaluate the performance of databases such as PostgreSQL. It supports multi-threaded tests and offers flexible configuration options.

Preparation

Set up environment

Purchase an ECS instance and a PolarDB for PostgreSQL Serverless cluster that match the test configuration.

Install Sysbench

Log on to the ECS instance as the root user and install Sysbench.

`sudo yum -y install sysbench`

This example uses Alibaba Cloud Linux. If you use another operating system, adjust the installation command according to the Sysbench documentation.
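Sysbench connects to PostgreSQL through its `pgsql` driver, which has to be compiled into the installed build. The following is a minimal sketch that checks for it, assuming that `sysbench --help` lists the compiled-in database drivers (Sysbench 1.0 prints a "Compiled-in database drivers" section). `check_pgsql_driver` is a hypothetical helper name.

```shell
#!/bin/sh
# Hypothetical helper: checks whether the installed Sysbench build
# includes the PostgreSQL (pgsql) driver used by --db-driver=pgsql.
# Assumption: `sysbench --help` lists compiled-in drivers, as Sysbench 1.0 does.
check_pgsql_driver() {
    if sysbench --help 2>/dev/null | grep -q 'pgsql'; then
        echo "pgsql driver available"
    else
        echo "pgsql driver not found; install a Sysbench build with PostgreSQL support" >&2
        return 1
    fi
}

# Example: check_pgsql_driver || exit 1
```

If the check fails, the `--db-driver=pgsql` option used in the commands below will not work with that build.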

Configure cluster information

In the PolarDB console, create a database account and a database, and then find the cluster endpoint.

Because the ECS instance and the PolarDB for PostgreSQL cluster are in the same VPC, add the private IP address of the ECS instance to a new IP whitelist group, or add the security group of the ECS instance to the cluster whitelist.

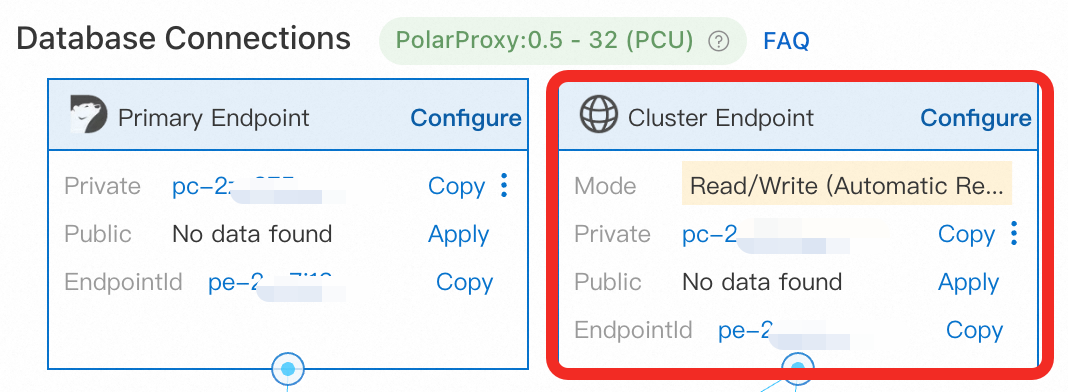

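Use the Cluster Endpoint, not the Primary Endpoint. The cluster endpoint supports read/write splitting, so read requests can be routed to read-only nodes during the multi-node scale-out test.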
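Before running Sysbench, it can help to confirm that the ECS instance can reach the cluster endpoint over the network. The sketch below assumes that `pg_isready` from the PostgreSQL client tools is installed on the ECS instance; `check_endpoint`, `PG_HOST`, and `PG_PORT` are hypothetical names used for illustration.

```shell
#!/bin/sh
# Hypothetical helper: checks that the cluster endpoint responds to
# connection attempts from this ECS instance. Assumes pg_isready
# (PostgreSQL client tools) is installed. PG_HOST and PG_PORT are
# placeholder variable names.
check_endpoint() {
    # -t 5: wait at most 5 seconds for a response
    if pg_isready -h "$PG_HOST" -p "${PG_PORT:-5432}" -t 5 >/dev/null; then
        echo "endpoint reachable: $PG_HOST:${PG_PORT:-5432}"
    else
        echo "cannot reach $PG_HOST:${PG_PORT:-5432}; check the IP whitelist and endpoint" >&2
        return 1
    fi
}

# Example (replace the placeholder with your cluster endpoint):
# PG_HOST='<cluster endpoint>'; PG_PORT=5432
# check_endpoint
```

`pg_isready` does not need a database account, so this check can run before the account and database are in use.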

Single-node scale-up test

Use Sysbench to verify that the specifications of the primary node scale automatically with the load.

Data preparation

Use Sysbench to create 32 tables with 100,000 rows each in the PolarDB for PostgreSQL cluster. The Serverless cluster may scale up automatically while the data is being loaded. After the data is prepared, wait 3 to 5 minutes until the primary node scales back down to 1 PCU. Run the following command to prepare the data, adjusting the parameters as needed:

`sysbench /usr/share/sysbench/oltp_read_write.lua --pgsql-host=<host> --pgsql-port=<port> --pgsql-user=<user> --pgsql-password=<password> --pgsql-db=<database> --tables=32 --table-size=100000 --report-interval=1 --range_selects=1 --db-ps-mode=disable --rand-type=uniform --threads=32 --db-driver=pgsql --time=12000 prepare`

Serverless cluster parameters:

`<host>`: the cluster endpoint.

`<port>`: the port of the cluster endpoint. The default is 5432.

`<user>`: the database account.

`<password>`: the password of the database account.

`<database>`: the database name.

Other parameters:

`--db-ps-mode=disable`: disables prepared statements so that all transaction SQL statements are executed as raw SQL statements.

`--threads`: the number of threads. The thread count determines the load on the cluster and therefore its performance. This example uses 32 threads for data preparation, which takes an estimated 5 minutes.
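The commands in this topic differ only in the thread count and the action (`prepare` or `run`). The following sketch wraps the shared options in one function so that runs stay comparable. `run_sysbench`, `DRY_RUN`, and the `PG_*` variables are hypothetical names; the Sysbench options themselves are the ones used in this topic.

```shell
#!/bin/sh
# Hypothetical helper that assembles the oltp_read_write.lua command used in
# this topic, so that only the thread count and the action change between runs.
# Connection settings come from placeholder variables:
#   PG_HOST, PG_PORT (default 5432), PG_USER, PG_PASSWORD, PG_DB
# Usage: run_sysbench <threads> <prepare|run|cleanup>
# Set DRY_RUN=1 to print the command instead of executing it.
run_sysbench() {
    sb_threads=$1
    sb_action=$2
    # Rebuild the positional parameters as the full argument list.
    set -- sysbench /usr/share/sysbench/oltp_read_write.lua \
        --pgsql-host="$PG_HOST" --pgsql-port="${PG_PORT:-5432}" \
        --pgsql-user="$PG_USER" --pgsql-password="$PG_PASSWORD" \
        --pgsql-db="$PG_DB" \
        --tables=32 --table-size=100000 \
        --report-interval=1 --range_selects=1 \
        --db-ps-mode=disable --rand-type=uniform \
        --threads="$sb_threads" --db-driver=pgsql --time=12000 \
        "$sb_action"
    if [ "${DRY_RUN:-0}" = 1 ]; then
        printf '%s\n' "$*"
    else
        "$@"
    fi
}
```

For example, `run_sysbench 32 prepare` prepares the data, and `run_sysbench 16 run`, `run_sysbench 128 run`, and `run_sysbench 160 run` correspond to the three stress tests in this topic. Note that the dry-run output includes the password.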

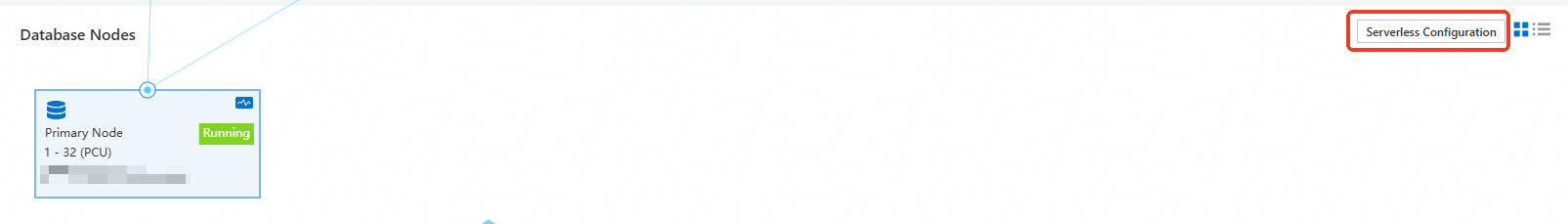

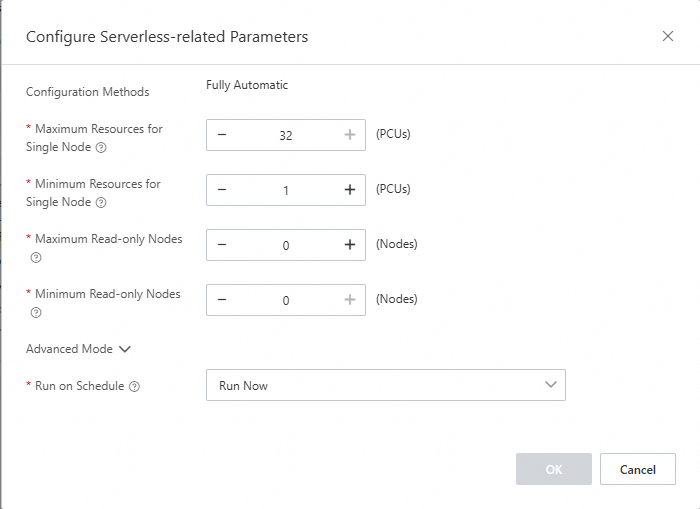

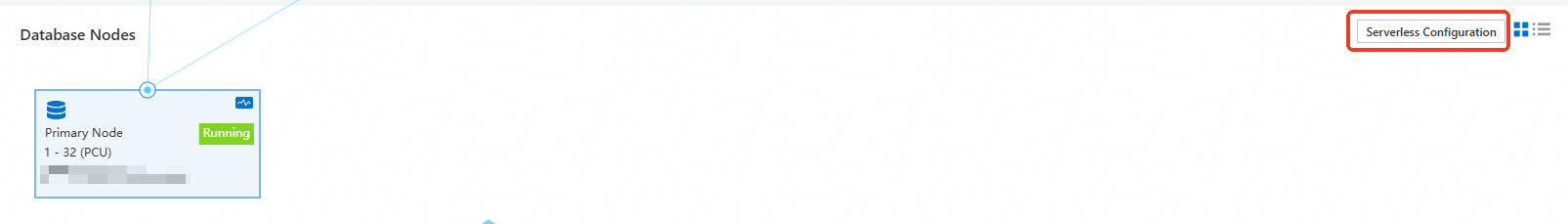

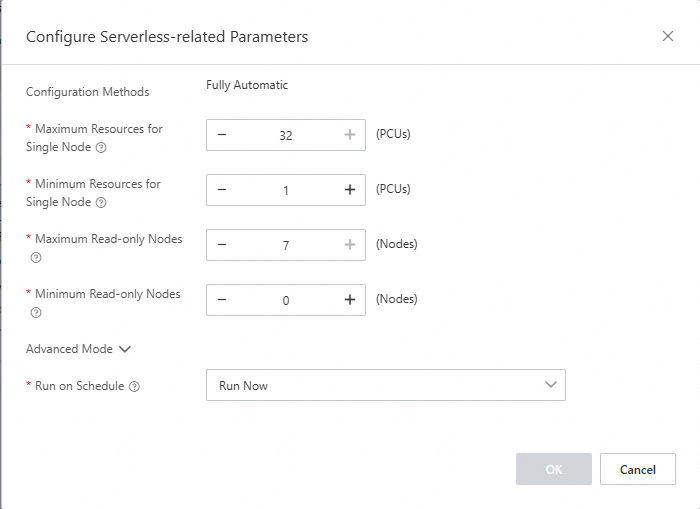

Adjust Serverless configuration

For the single-node scale-up test, make sure that no read-only nodes are added during the stress test. In the PolarDB console, go to the Database Nodes section of the cluster details page and adjust the Serverless configuration: set Maximum Resources for Single Node to 32, Minimum Resources for Single Node to 1, and both Maximum Read-only Nodes and Minimum Read-only Nodes to 0.

Initial verification: low load (16 threads)

Start with a simple 16-thread test to get an initial sense of how Serverless scales.

Run the following command. Set the

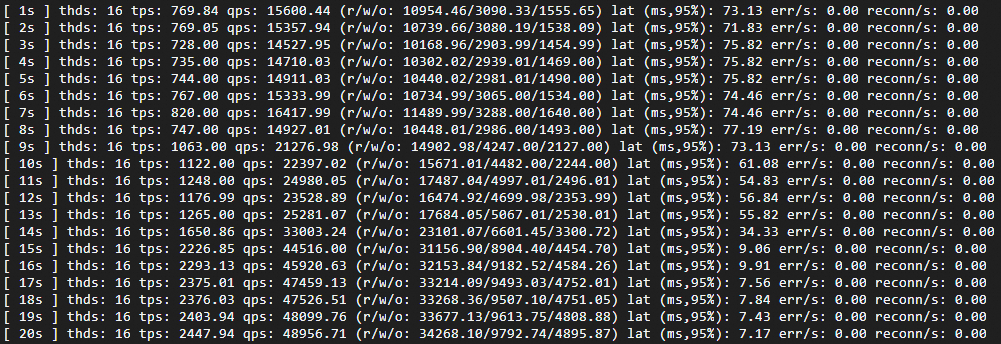

--threadsparameter to 16, and adjust other parameters as needed.sysbench /usr/share/sysbench/oltp_read_write.lua --pgsql-host=<host> --pgsql-port=<port> --pgsql-user=<user> --pgsql-password=<password> --pgsql-db=<database> --tables=32 --table-size=100000 --report-interval=1 --range_selects=1 --db-ps-mode=disable --rand-type=uniform --threads=16 --db-driver=pgsql --time=12000 runThe stress testing results are as follows. According to the Sysbench test results, at a concurrency of 16 threads, the throughput (TPS) increased over time while the latency (LAT) decreased, both eventually reaching stable values. This indicates that after triggering the Serverless scaling, system performance improved significantly.

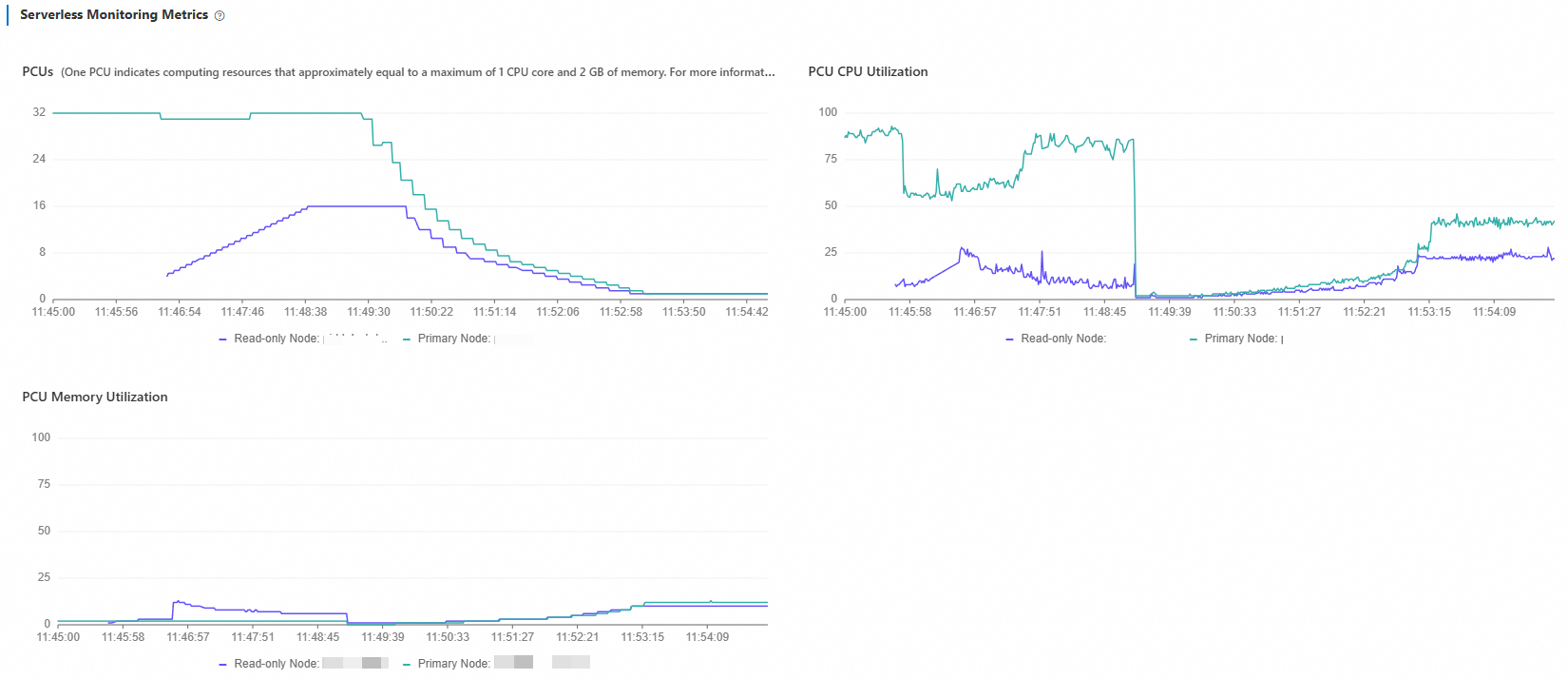
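To examine this trend more closely, you can save the Sysbench output (for example, by piping the run command through `tee sysbench-16.log`) and convert the per-second reports into CSV for plotting. The following is a minimal sketch; it assumes the interim report format that Sysbench 1.0 prints with `--report-interval`, and `sysbench_report_to_csv` is a hypothetical helper name.

```shell
#!/bin/sh
# Hypothetical helper: converts Sysbench interim reports (printed every
# --report-interval seconds) into CSV with columns elapsed_s,tps,qps,lat_ms.
# Assumes the Sysbench 1.0 report line format, for example:
#   [ 5s ] thds: 16 tps: ... qps: ... (r/w/o: ...) lat (ms,95%): ... err/s: ...
# Lines that are not interim reports (banner, final summary) are skipped.
sysbench_report_to_csv() {
    awk '
        BEGIN { print "elapsed_s,tps,qps,lat_ms" }
        $1 == "[" && $3 == "]" {
            t = $2
            sub(/s$/, "", t)            # "5s" -> "5"
            tps = ""; qps = ""; lat = ""
            for (i = 4; i < NF; i++) {
                if ($i == "tps:") tps = $(i + 1)
                else if ($i == "qps:") qps = $(i + 1)
                else if ($i ~ /^\(ms,/) lat = $(i + 1)   # "(ms,95%):" precedes the value
            }
            print t "," tps "," qps "," lat
        }
    '
}

# Example: sysbench_report_to_csv < sysbench-16.log > sysbench-16.csv
```

Plotting the `tps` and `lat_ms` columns against `elapsed_s` makes the rise in throughput and the drop in latency during scale-up easy to line up with the PCU changes in the console.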

Return to the PolarDB console and check Serverless Monitoring Metrics on the Performance Monitoring page. Select Last 5 Minutes from the drop-down list at the top center of the page to view the monitoring information:

The PCU count scaled up from 1 to 4 and then remained stable. CPU utilization decreased gradually as resources were added. The memory utilization curve dips at each scale-up: when the PCU count increases, the available memory grows, so memory utilization drops instantly. The database then puts the additional memory to use to improve performance, for example by expanding its buffer pool, so memory utilization rises gradually until it stabilizes.

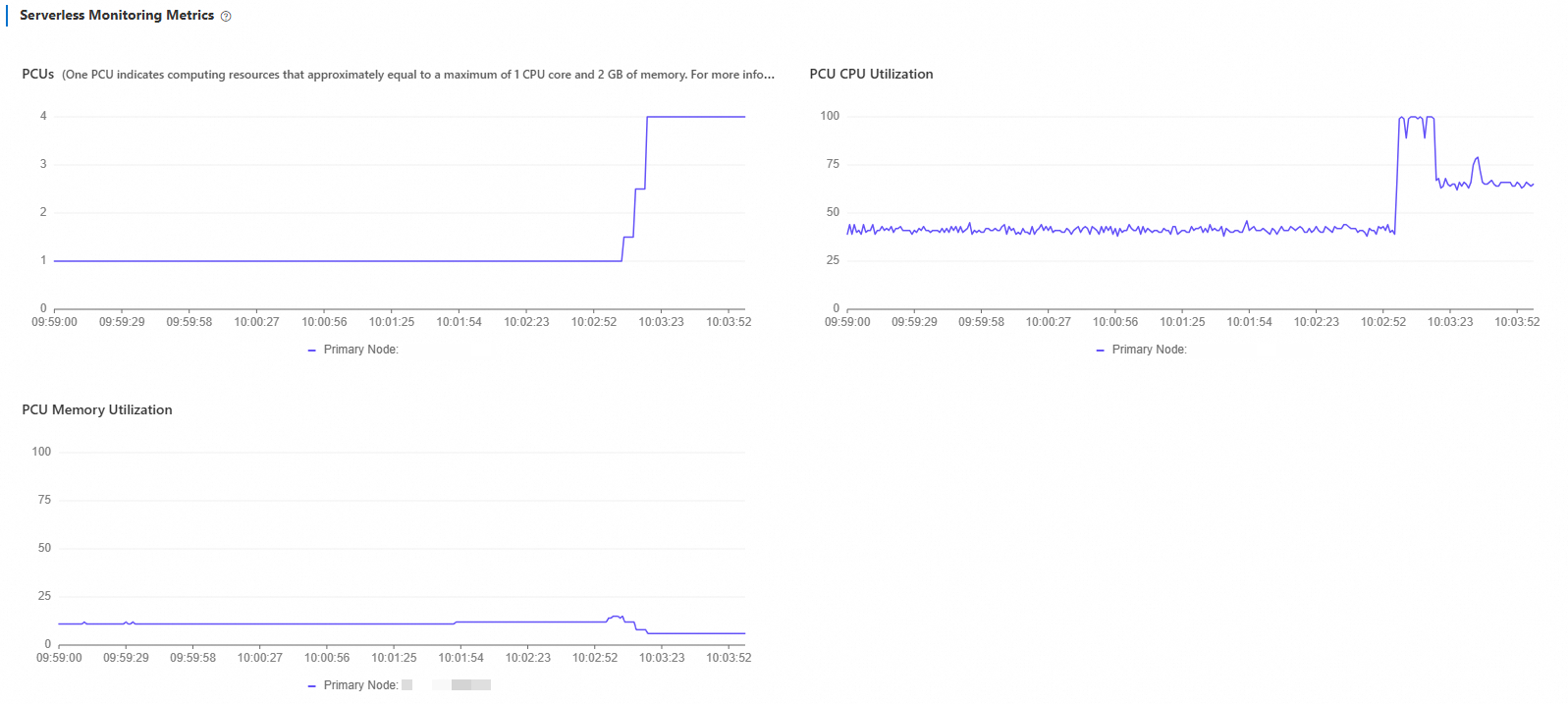

Stop the test and wait for 5 minutes. Then, adjust the time range to the last 10 minutes to view the monitoring information:

After the load stopped, the PCU count gradually decreased from 4 to 1. Because the mixed read/write test issues `UPDATE` requests, PolarDB continues to run `vacuum` operations after the load stops, and these consume a small amount of CPU.

In-depth verification: high load (128 threads)

Next, set the number of threads (`--threads`) to 128 to measure how long the PolarDB Serverless cluster takes to scale up to the maximum specification of 32 PCU. Stop the test after it has run for a while.

Run the following command. Set the

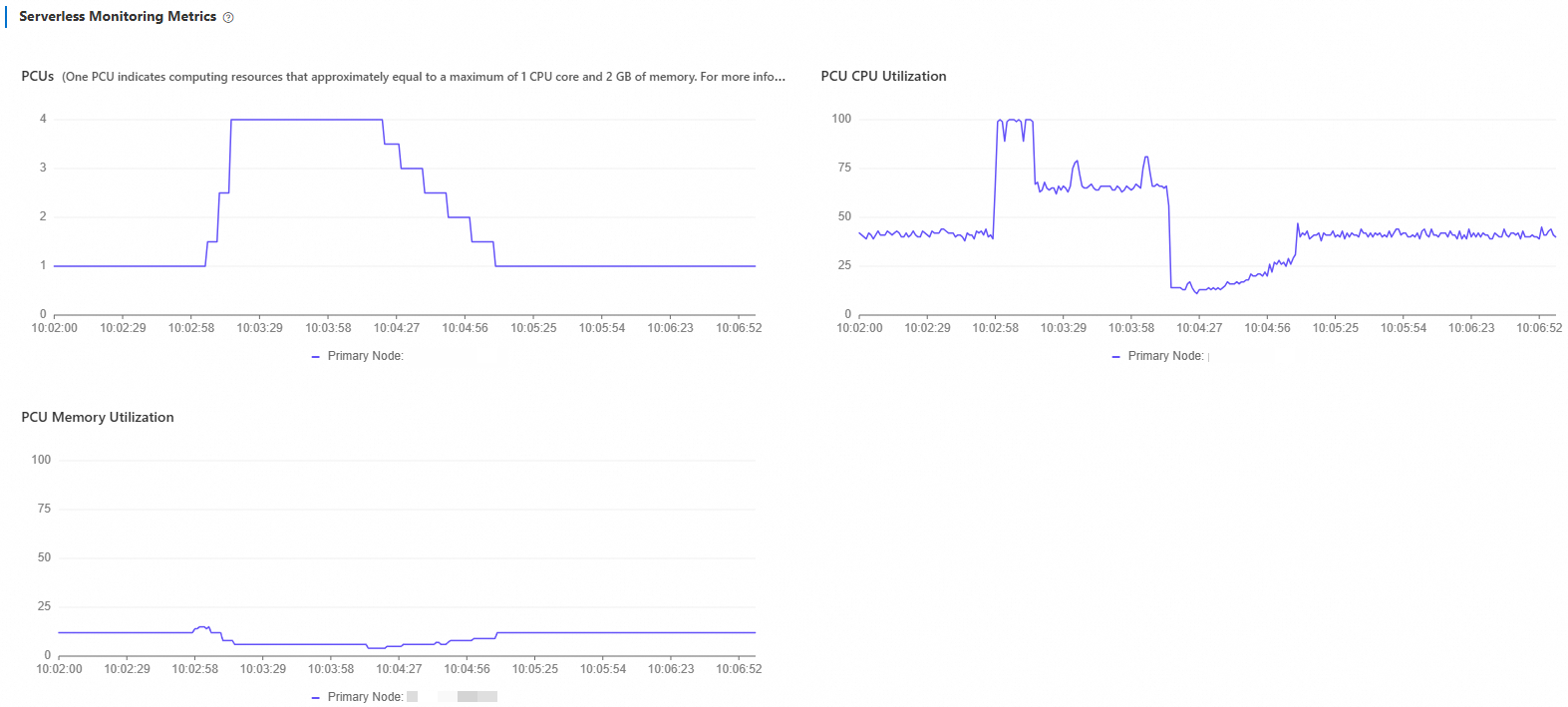

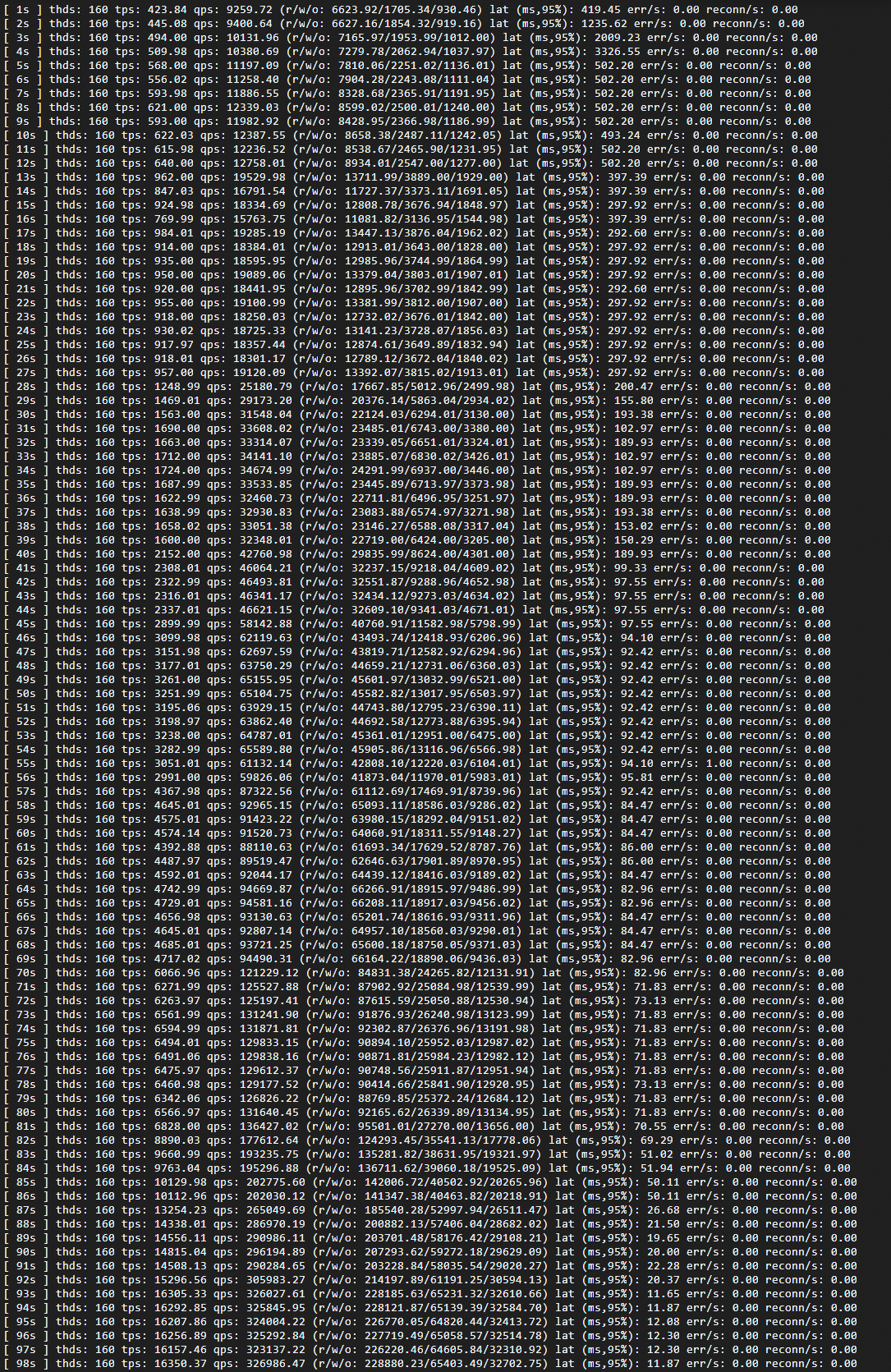

--threadsparameter to 128, and adjust other parameters as needed. For more information, see parameter descriptions.sysbench /usr/share/sysbench/oltp_read_write.lua --pgsql-host=<host> --pgsql-port=<port> --pgsql-user=<user> --pgsql-password=<password> --pgsql-db=<database> --tables=32 --table-size=100000 --report-interval=1 --range_selects=1 --db-ps-mode=disable --rand-type=uniform --threads=128 --db-driver=pgsql --time=12000 runTest results: Based on the execution results, you can clearly see the changes in throughput (TPS) and latency (LAT).

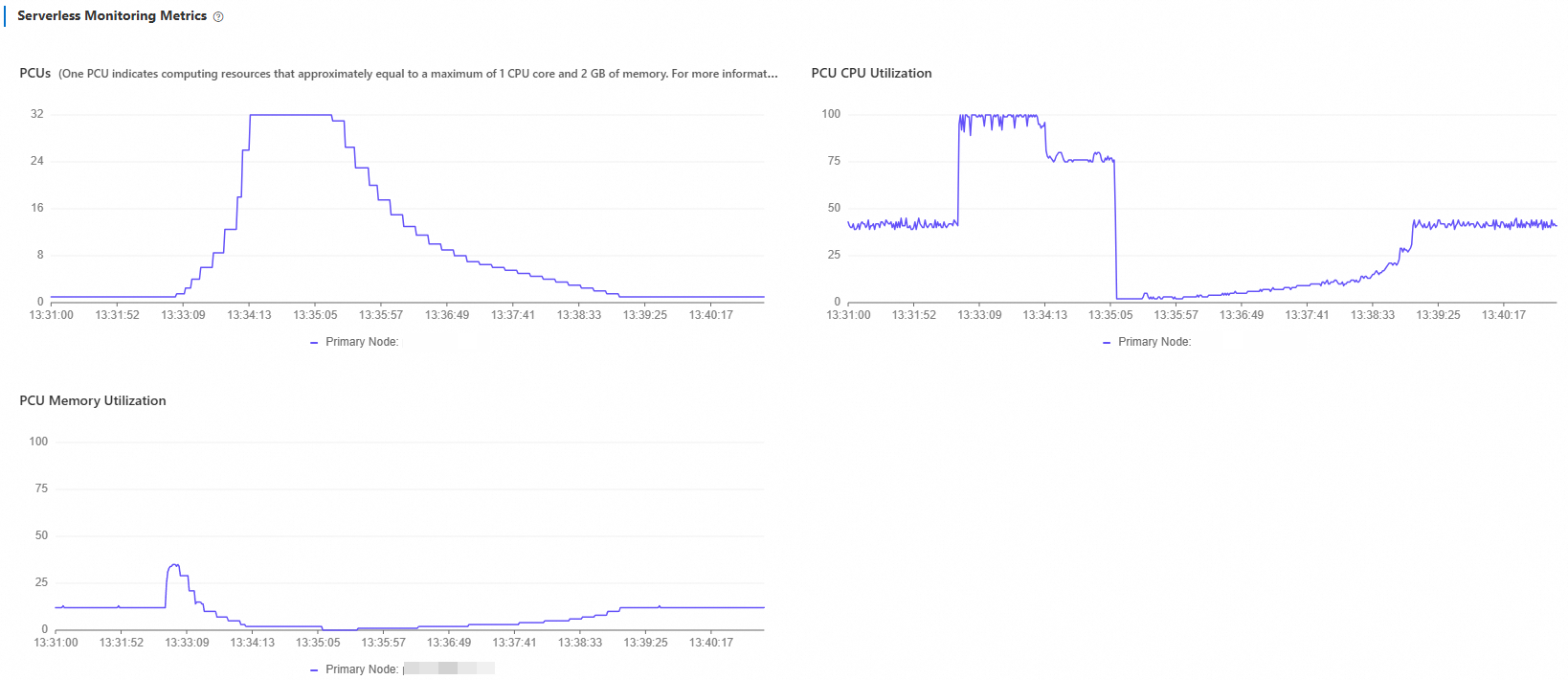

In the PolarDB console, view Serverless Monitoring Metrics on the Performance Monitoring page and set the time range to cover the test period. The monitoring information is as follows:

Scaling up from 1 PCU to 32 PCU took approximately 87 seconds. Scaling down was considerably more gradual, taking approximately 231 seconds.
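These durations are read from the monitoring chart. If you record PCU readings yourself as `seconds,pcu` lines (for example, by noting the time and value of each data point), a small script can compute the same kind of duration. The sketch below is hypothetical: the input format and the `scale_duration` name are assumptions, and it measures from the first sample that differs from the starting PCU to the first later sample that equals the target PCU.

```shell
#!/bin/sh
# Hypothetical helper: reads "seconds,pcu" samples (one per line, no header,
# in time order) and prints how many seconds elapsed between the first sample
# that differs from the starting PCU and the first sample equal to the
# target PCU. Works for scale-up (e.g. 1 -> 32) and scale-down (e.g. 32 -> 1).
# Prints nothing if the target is never reached.
# Usage: scale_duration <target_pcu> < samples.csv
scale_duration() {
    awk -F, -v target="$1" '
        NR == 1 { base = $2 + 0; next }          # starting PCU
        start == "" && $2 + 0 != base { start = $1 }
        start != "" && $2 + 0 == target + 0 { print $1 - start; exit }
    '
}
```

Run it with a target of 32 on the samples from the start of the test to measure the scale-up, and with a target of 1 on the samples after the test stops to measure the scale-down. The result is only as precise as the sampling interval.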

Multi-node scale-out test

Use Sysbench to verify that the number of read-only nodes in the cluster scales automatically with the load.

Adjust Serverless configuration

For the multi-node scale-out test, read-only nodes must be allowed to scale automatically. In the PolarDB console, go to the Database Nodes section of the cluster details page and adjust the Serverless configuration: set Maximum Resources for Single Node to 32, Minimum Resources for Single Node to 1, Maximum Read-only Nodes to 7, and Minimum Read-only Nodes to 0.
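With these settings, a theoretical upper bound on the cluster's total compute follows from the two limits, assuming that the primary node and all seven read-only nodes could each reach the single-node maximum at the same time (this test does not necessarily reach it):

```latex
$$
(1\ \text{primary} + 7\ \text{read-only}) \times 32\ \text{PCU} = 8 \times 32\ \text{PCU} = 256\ \text{PCU}
$$
```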

Verification

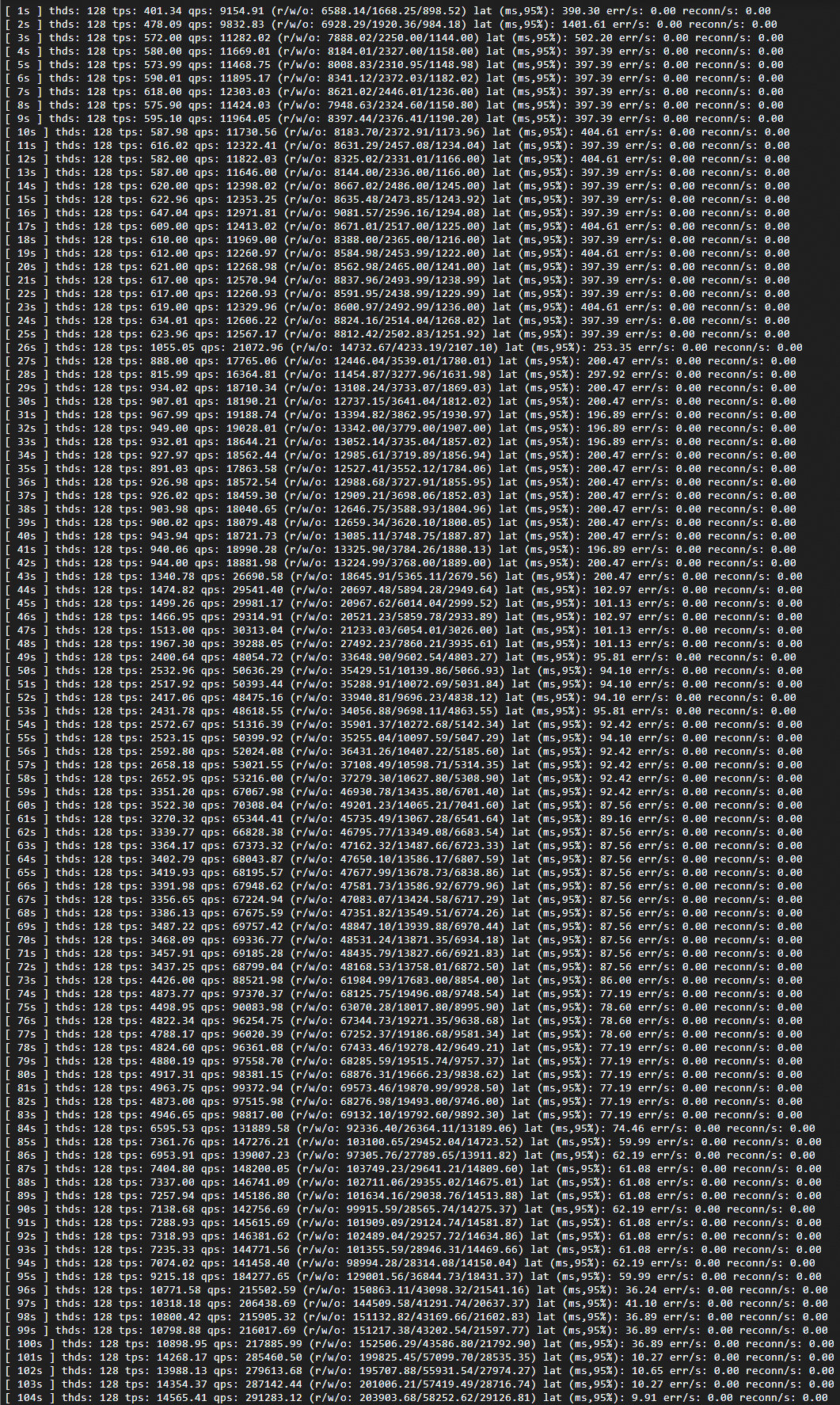

Run a 160-thread test to observe the horizontal scalability of Serverless.

Run the following command. Set the

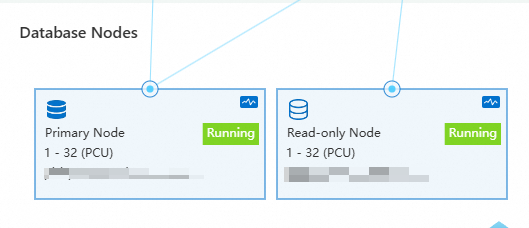

--threadsparameter to 160, and adjust other parameters as needed. For more information, see parameter descriptions.sysbench /usr/share/sysbench/oltp_read_write.lua --pgsql-host=<host> --pgsql-port=<port> --pgsql-user=<user> --pgsql-password=<password> --pgsql-db=<database> --tables=32 --table-size=100000 --report-interval=1 --range_selects=1 --db-ps-mode=disable --rand-type=uniform --threads=160 --db-driver=pgsql --time=12000 runA while after the second test began, several read-only nodes were added.

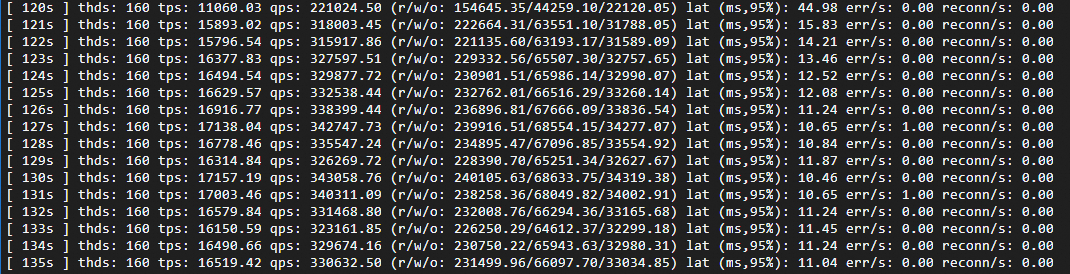

After the cluster stabilized, Sysbench returned the following output. Compared with the single-node result under the same load, QPS increased from 320,000 to 340,000, which exceeds the maximum throughput reached in the single-node test.

Horizontal scaling compared with the single-node baseline:

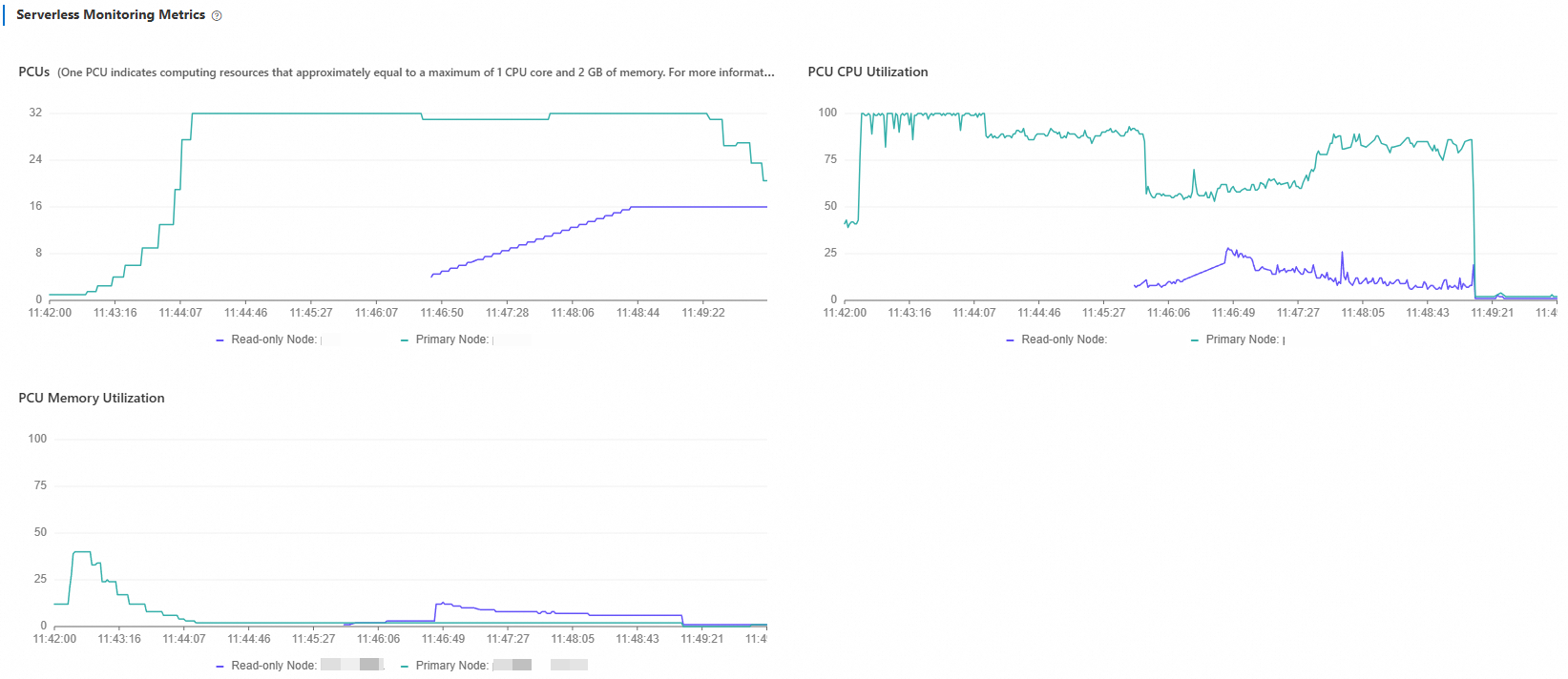
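Using the rounded QPS values reported above, the relative gain from horizontal scaling works out as follows:

```latex
$$
\frac{340\,000 - 320\,000}{320\,000} = \frac{20\,000}{320\,000} = 0.0625 = 6.25\%
$$
```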

In the PolarDB console, view Serverless Monitoring Metrics on the Performance Monitoring page and adjust the time range to view the monitoring information:

The Serverless monitoring data shows the load curves of the new read-only nodes. As read-only nodes are added, the load on the existing nodes gradually decreases until the load is roughly balanced across nodes.

After the test has run for a while, stop it and set the time range to cover the test period. The monitoring information is as follows:

The node specifications scale down first: the compute nodes gradually return to 1 PCU within approximately 2 to 3 minutes. When the load stops, CPU utilization on the read-only nodes drops immediately, whereas the primary node still has to run `vacuum` operations, so its CPU consumption continues briefly before it finally drops to 1 PCU.

Read-only nodes were released one by one 15 to 20 minutes after the test stopped. To avoid frequent oscillation in the number of read-only nodes, Serverless does not immediately reclaim idle read-only nodes.