Synchronize data to Function Compute

1. Create a function in Function Compute

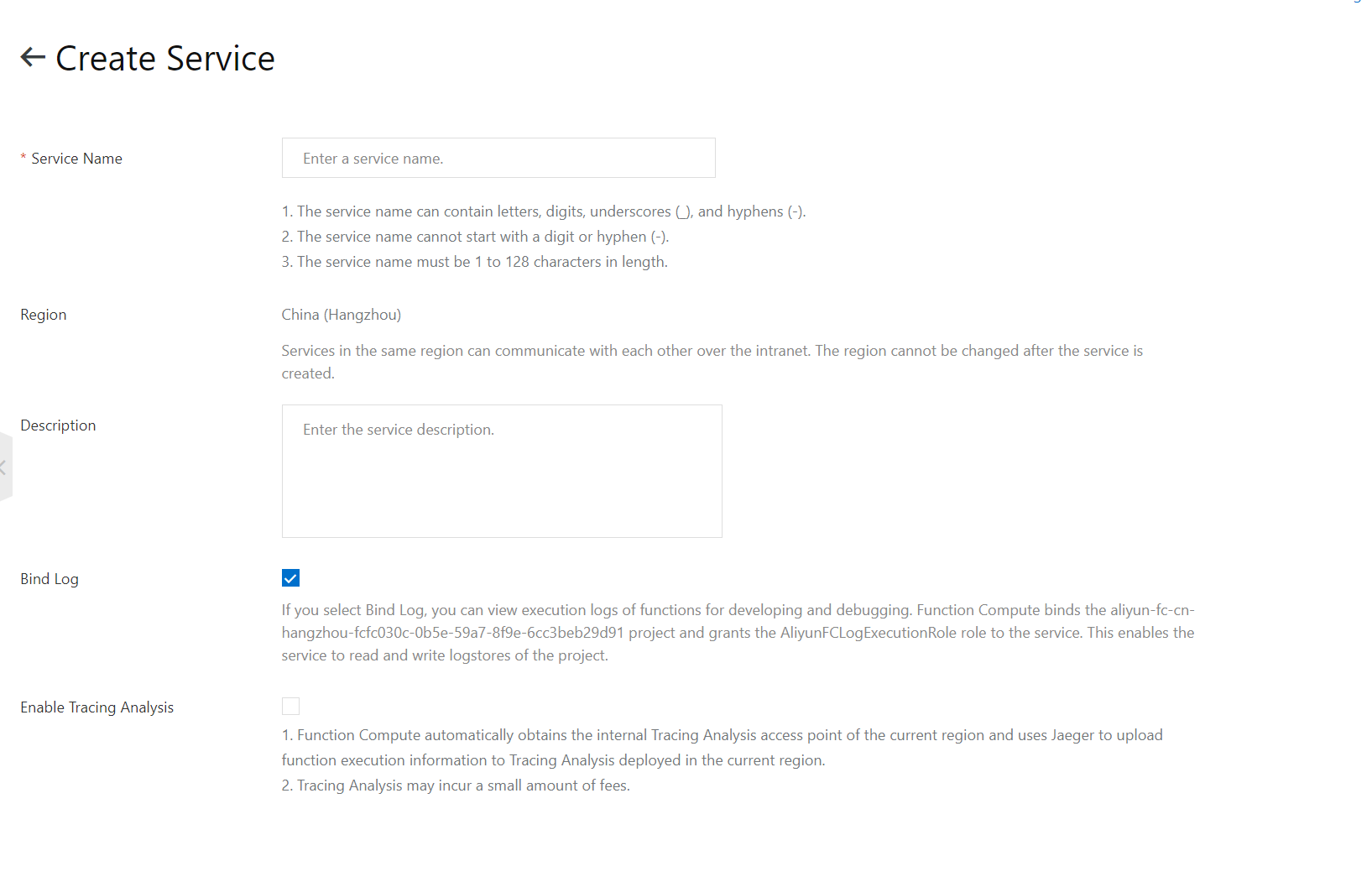

1.1 Create a service

Create a service in the Function Compute console. If a service already exists, skip this step.

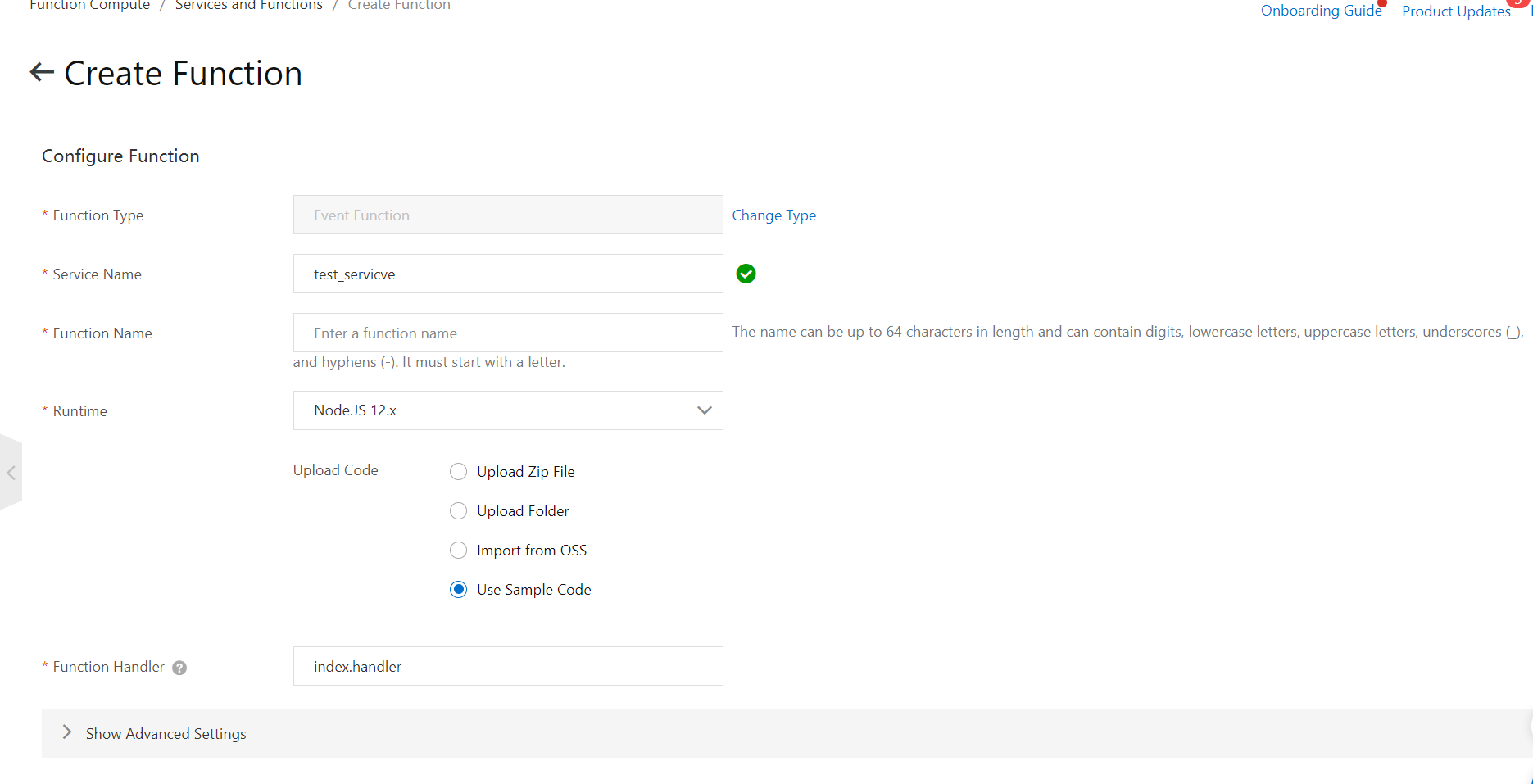

1.2 Create a function

On the Services and Functions page, click the service that you created, and then click Create Function. Upload your code or use the sample code for the function. For more information, see Overview. Use the default values for the trigger parameters, and then click Create.

2. Create the service-linked role for DataHub

If you use a temporary access credential from Security Token Service (STS), the service-linked role for DataHub is automatically created. Then, DataHub uses the service-linked role to synchronize data to Function Compute.

3. Create a DataHub topic

For more information, see Create a topic.

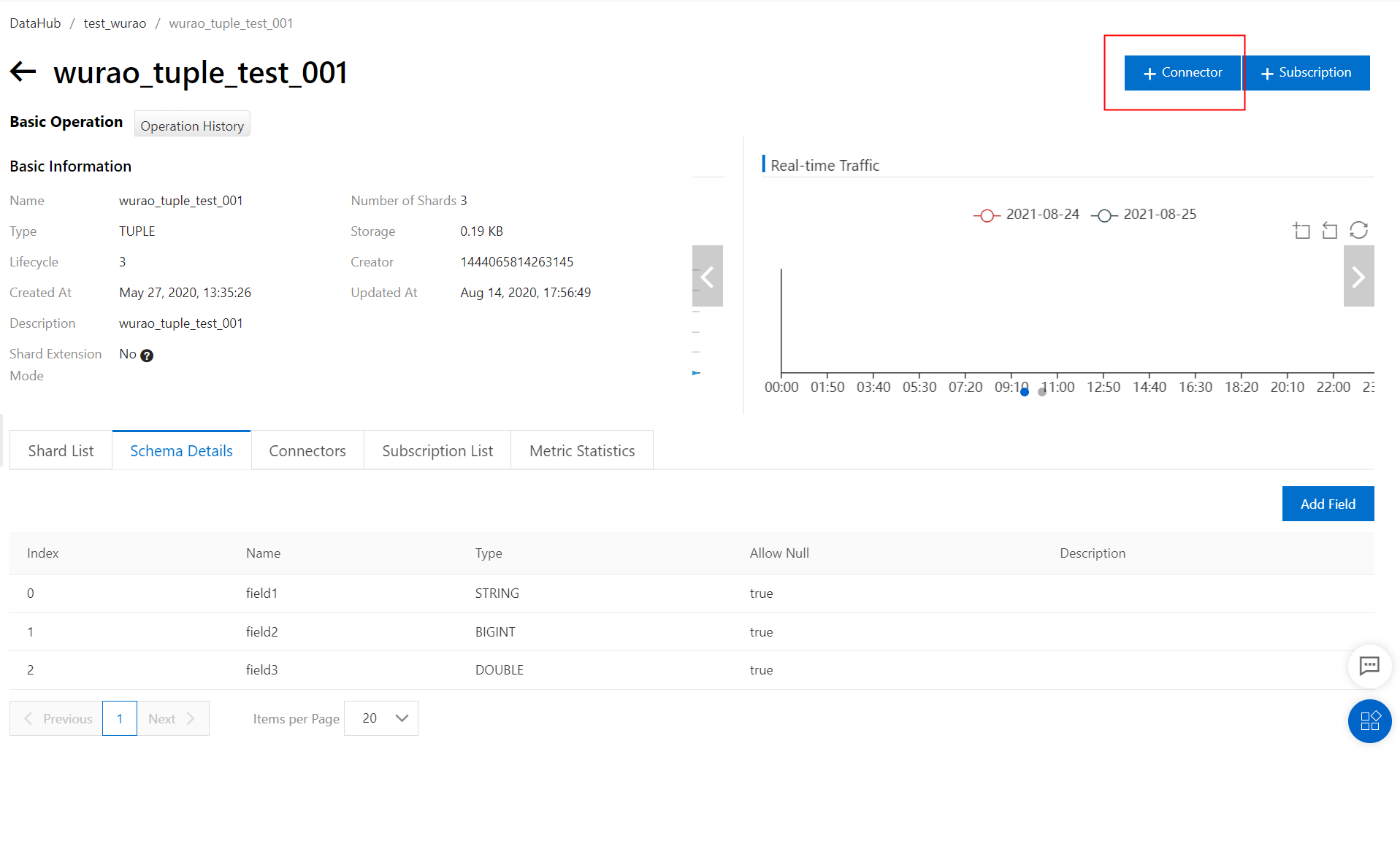

4. Create a DataConnector

4.1 Go to the details page of a topic

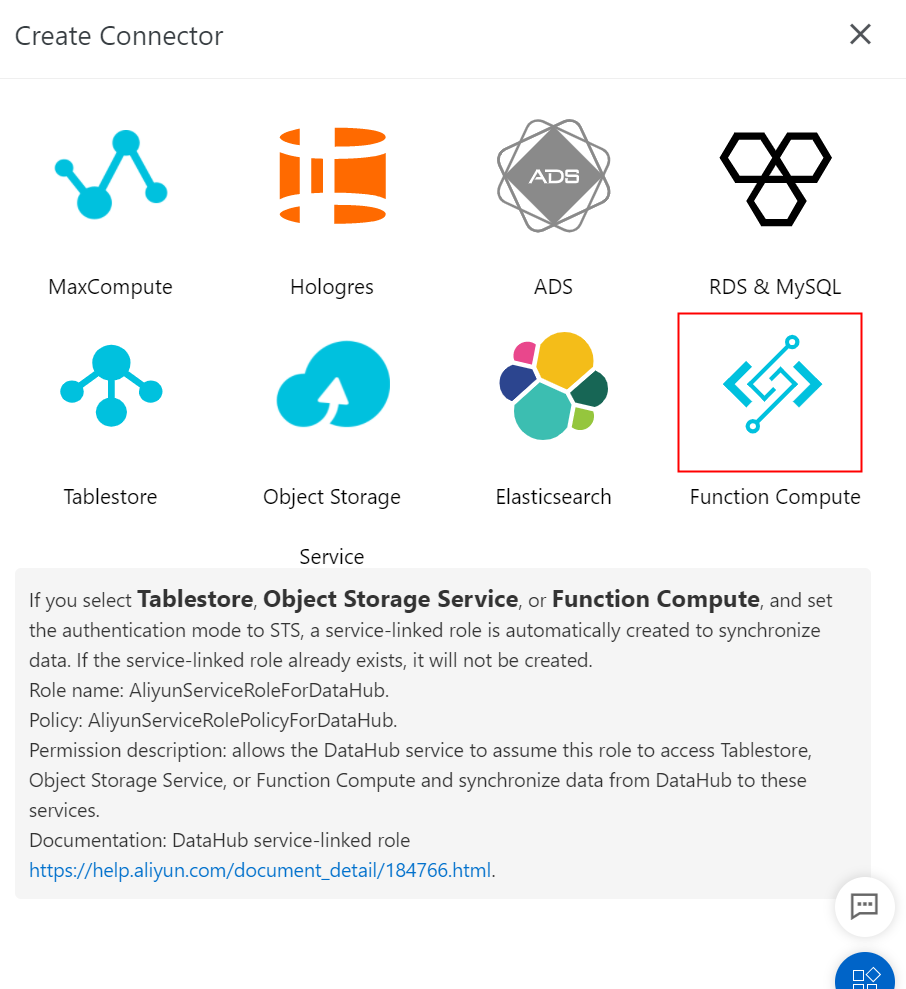

4.2 Click Function Compute

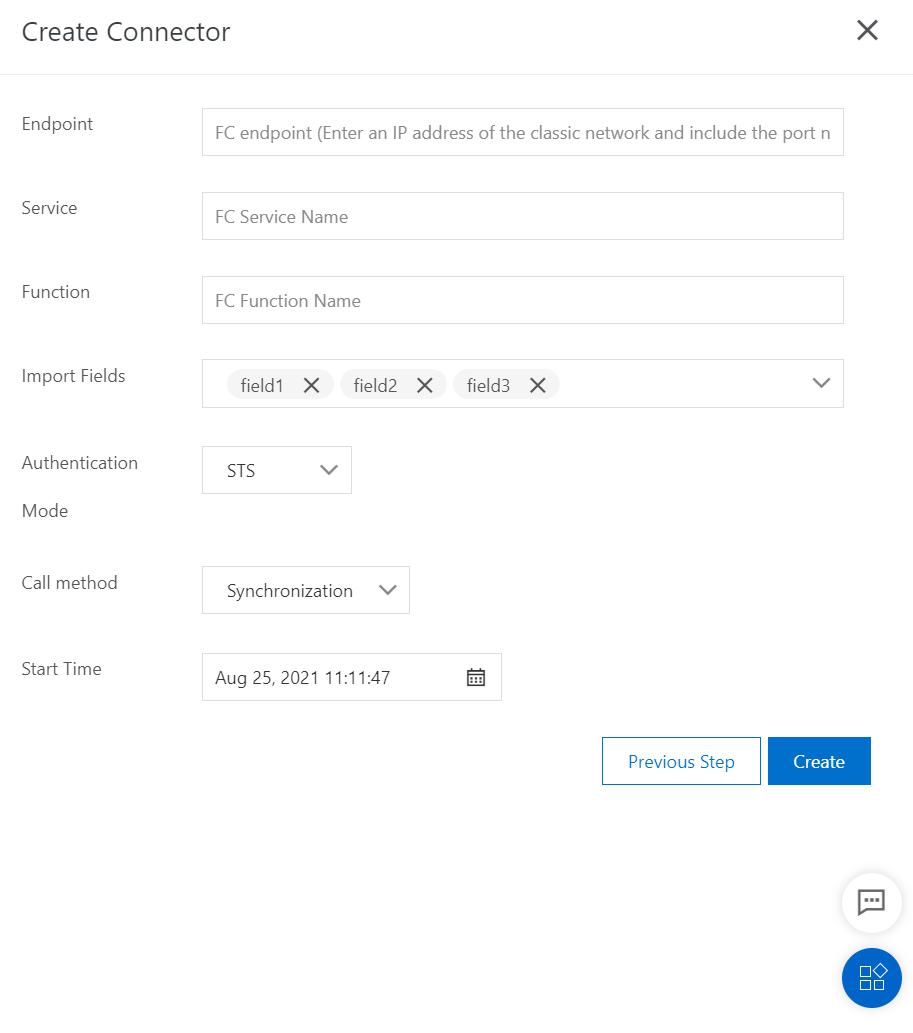

4.3 Set the parameters as required

Endpoint: the endpoint of Function Compute. You must enter an internal endpoint in the following format: https://<Alibaba Cloud account ID>.fc.<Region ID>.aliyuncs.com. Sample internal endpoint that is used to access Function Compute in the China (Shanghai) region: https://12423423992.fc.cn-shanghai-internal.aliyuncs.com. For more information, see Endpoints.

Service: the name of the service to which the destination function belongs.

Function: the name of the destination function.

Start Time: the point in time from which topic data is synchronized to Function Compute.
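The endpoint format can be sketched as a small helper. This is a minimal illustration, not part of the DataHub or Function Compute SDKs: the function name `build_internal_endpoint` is hypothetical, and the `-internal` suffix on the region segment is inferred from the China (Shanghai) sample above.

```python
def build_internal_endpoint(account_id: str, region_id: str) -> str:
    """Return an internal Function Compute endpoint for the DataConnector.

    Assumption: the region segment carries an "-internal" suffix, as in the
    China (Shanghai) sample endpoint.
    """
    return f"https://{account_id}.fc.{region_id}-internal.aliyuncs.com"


def looks_internal(endpoint: str) -> bool:
    """Rough check that an endpoint follows the internal pattern."""
    return endpoint.startswith("https://") and "-internal.aliyuncs.com" in endpoint


endpoint = build_internal_endpoint("12423423992", "cn-shanghai")
print(endpoint)
```

A check such as `looks_internal` can catch a public endpoint before it is entered in the console, but it only tests the string pattern, not whether the endpoint is reachable.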

5. Data structure of events

Data that is sent to Function Compute uses the following structure:

    {
      "eventSource": "acs:datahub",
      "eventName": "acs:datahub:putRecord",
      "eventSourceARN": "/projects/test_project_name/topics/test_topic_name",
      "region": "cn-hangzhou",
      "records": [
        {
          "eventId": "0:12345",
          "systemTime": 1463000123000,
          "data": "[\"col1's value\",\"col2's value\"]"
        },
        {
          "eventId": "0:12346",
          "systemTime": 1463000156000,
          "data": "[\"col1's value\",\"col2's value\"]"
        }
      ]
    }

Parameters:

eventSource: the source of the event. Set the value to acs:datahub.

eventName: the name of the event. If the data is from DataHub, set the value to acs:datahub:putRecord.

eventSourceARN: the ID of the event source, which contains a project name and a topic name. Example: /projects/test_project_name/topics/test_topic_name.

region: the region of DataHub to which the event belongs. Example: cn-hangzhou.

records: the records in the event.

eventId: the ID of the record. The value is in the shardId:SequenceNumber format.

systemTime: the time when the event is written to DataHub, in milliseconds.

data: the data of the event. If the data is in a topic of the TUPLE type, the parameter value is a list. Each element in the list is a string that corresponds to a field value in the topic. If the data is in a topic of the BLOB type, the parameter value is a string.
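A destination function could unpack this event roughly as follows. This is a sketch under stated assumptions rather than reference code: it assumes the Python runtime of Function Compute with a `handler(event, context)` entry point, treats systemTime as a UNIX epoch value, and assumes that TUPLE data arrives as a JSON-encoded list, as in the sample event. `parse_record` and its `topic_type` argument are hypothetical names.

```python
import json
from datetime import datetime, timezone


def parse_record(record: dict, topic_type: str = "TUPLE") -> dict:
    """Split one DataHub record into its documented parts."""
    # eventId is in the shardId:SequenceNumber format.
    shard_id, sequence = record["eventId"].split(":", 1)
    # systemTime is in milliseconds; reading it as a UNIX epoch is an assumption.
    written_at = datetime.fromtimestamp(record["systemTime"] / 1000, tz=timezone.utc)
    data = record["data"]
    # Assumption: TUPLE data may arrive as a JSON-encoded list (as in the sample).
    # BLOB data is left as the string that DataHub delivered.
    if topic_type == "TUPLE" and isinstance(data, str):
        data = json.loads(data)
    return {
        "shard_id": shard_id,
        "sequence": sequence,
        "written_at": written_at,
        "data": data,
    }


def handler(event, context):
    """Entry point: `event` is the raw JSON payload sent by DataHub."""
    payload = json.loads(event)
    parsed = [parse_record(r) for r in payload.get("records", [])]
    for item in parsed:
        print(item["shard_id"], item["sequence"], item["data"])
    # Any exception raised above is reported to DataHub as a failed invocation.
    return json.dumps({"processed": len(parsed)})
```

For a BLOB topic, `parse_record(record, topic_type="BLOB")` leaves the data string untouched.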

6. Usage notes

The endpoint that is used to access Function Compute must be an internal endpoint. A service and a function must be created in Function Compute.

DataHub supports only function calls in synchronous mode. This ensures that data is processed in order.

If an error occurs when the destination function runs, DataHub retries the synchronization after 1 second. If synchronization fails 512 times, the synchronization is suspended.

You can go to the DataHub console to view the information about the DataConnector, such as the DataConnector status, synchronization offset, and detailed error messages.
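Because DataHub retries after a failed function run, a function may plausibly receive records that it already handled in an earlier, partially failed attempt. The sketch below shows one way to skip such records by tracking eventId values. It rests on assumptions: that a retry resends the same records, and that eventId is unique within a topic. The names `process_once` and `_seen_event_ids` are hypothetical, and the in-memory set stands in for durable storage that a real function would need.

```python
# Hypothetical in-memory store of handled eventIds. Function instances do not
# share memory, so a real function would need durable storage instead.
_seen_event_ids = set()


def process_once(records, apply):
    """Call `apply` on each record whose eventId has not been handled yet.

    Returns the number of records handled in this call. If `apply` raises,
    the exception propagates so that the invocation fails and can be retried;
    records handled before the failure are skipped on the next attempt.
    """
    handled = 0
    for record in records:
        event_id = record["eventId"]
        if event_id in _seen_event_ids:
            continue  # handled in an earlier attempt
        apply(record)
        _seen_event_ids.add(event_id)
        handled += 1
    return handled
```

Processing records in list order preserves the ordering that synchronous invocation is meant to provide.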