By Jeff Cleverley, Alibaba Cloud Tech Share Author

In previous tutorials I took you through the steps to set up a new server instance, install a performant LEMP server stack, and then get your WordPress sites up and running, including transactional emails.

Whilst this is a great start, what will happen if your website suddenly starts to receive lots of concurrent visitors? While the stack we have installed is performant, how can we improve it, to ensure that no matter how popular our site becomes our server won't choke?

The answer to our problem is caching. Caching allows us to store data in RAM so that any future request for that data can be served faster, without needing to query the database or process any PHP.

We will be implementing two forms of caching: object caching and page caching.

The combination of these solutions should ensure that our WordPress site continues to perform and serve pages even during periods of extremely heavy traffic. To test and demonstrate the efficacy of the caching solutions, we will also use two other tools: loader.io, an online load testing tool that will simulate a heavy load on the site, and New Relic, a server monitoring tool that will allow us to see CPU load (alongside other metrics).

In this first tutorial, we will configure our server monitoring tool and run some load testing on the server prior to enabling any caching. This will give us some benchmarks for later comparison.

Following that, we will install a Redis server as an object cache for DB queries, and configure WordPress to use it.

In the second of these caching tutorials we will configure NGINX FastCGI caching to work with WordPress for static page caching, and then finally we will re-run the same load testing on the server and compare its performance using the New Relic Server monitoring tool. This will allow us to gauge the improvements gained from the caching solutions we have implemented.

New Relic is an amazing tool with a web-based dashboard that makes server monitoring easy. It is a paid service, but they offer a free 30-day trial, which is more than sufficient for our purposes. Head over to their website and sign up for the free trial.

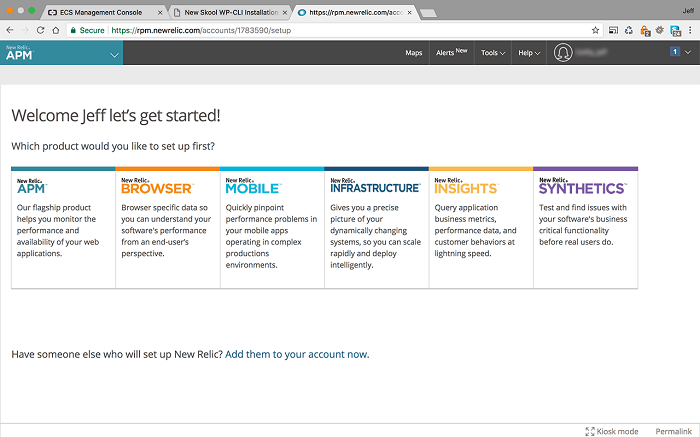

Once you have logged in you will see the following dashboard:

<New Relic Dashboard >

We are interested in the New Relic Infrastructure tool, which is their nomenclature for server monitoring. Click on that panel and you will be taken to the screen with installation instructions.

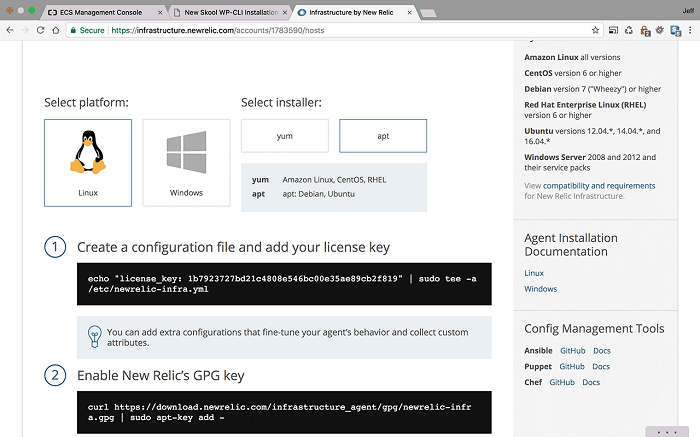

Since our instance is running Ubuntu 16.04, you will need to select 'Linux' and the 'Apt' installer method.

Follow the instructions that are clearly labelled to install the New Relic Infrastructure agent:

<Follow the New Relic agent installation instructions>

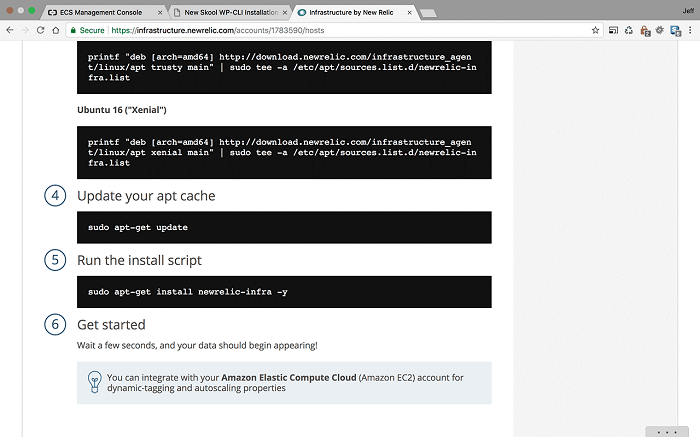

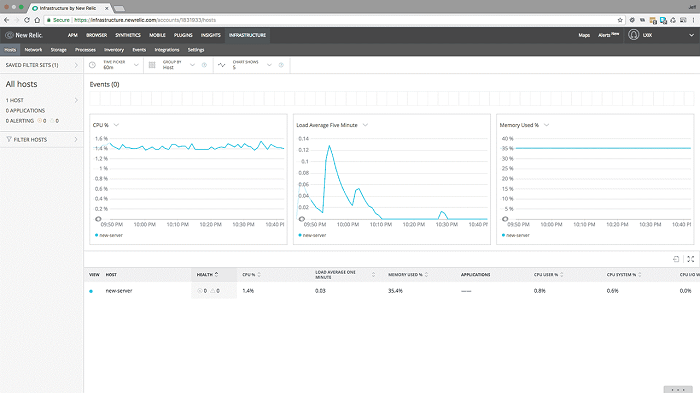

Your terminal should be displaying something similar to the following:

<Installing New Relic – Terminal output>

Within a few minutes, your server will be connected and you will be able to monitor your server from their web dashboard:

<Your server in the New Relic Infrastructure dashboard>

New Relic is set up and you can now monitor your server from their web interface.

Next we will use the loader.io online tool to run load tests on the site before we set up any caching. This will give us some benchmarks so we can see how the site performs in its uncached set up and then compare its performance once we have enabled caching.

Go to loader.io and sign up for a free account if you don't already have one:

<The Loader.io website>

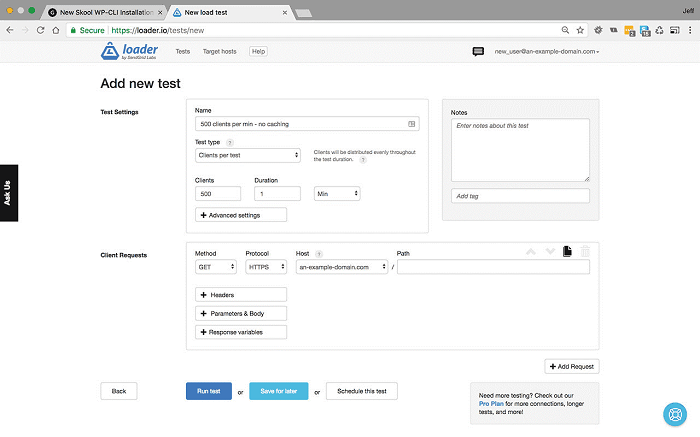

For my initial benchmarks, I began by simulating a load of 500 users per minute, before increasing to 1000 users per minute, then 5000 users per minute, and finally 7500 users per minute.

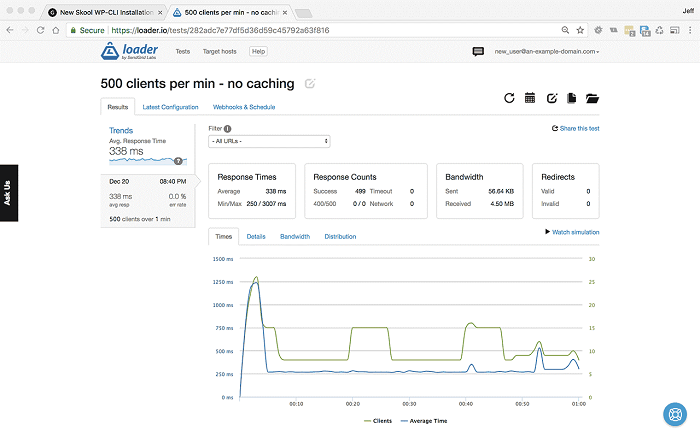

500 users per minute with no caching

<500 clients per minute for 1 minute – Settings & Results>

As you can see, with 500 users per minute our site handles the load with ease. There are no errors and we average a 338ms response time.

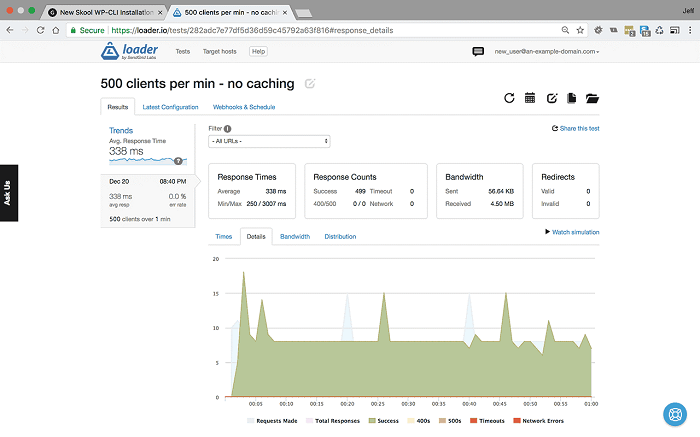

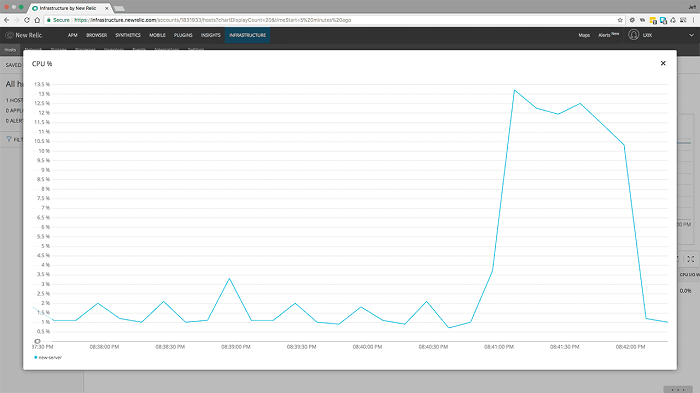

<500 users per minute for 1 minute – CPU monitoring in New Relic>

But when we look at the CPU load on our New Relic dashboard, we can see that CPU usage rose from 2-3% to about 13% during the load testing.

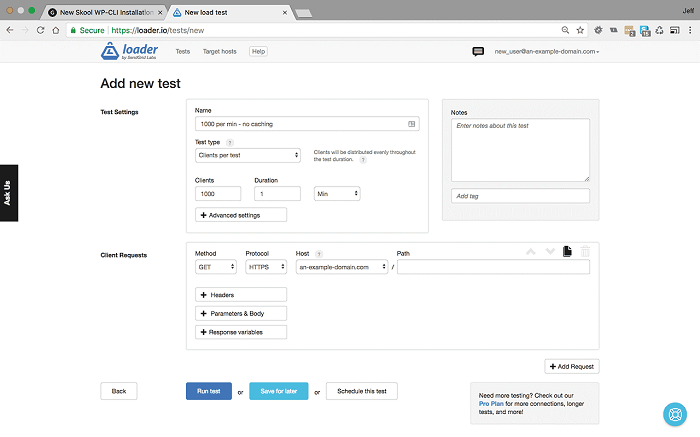

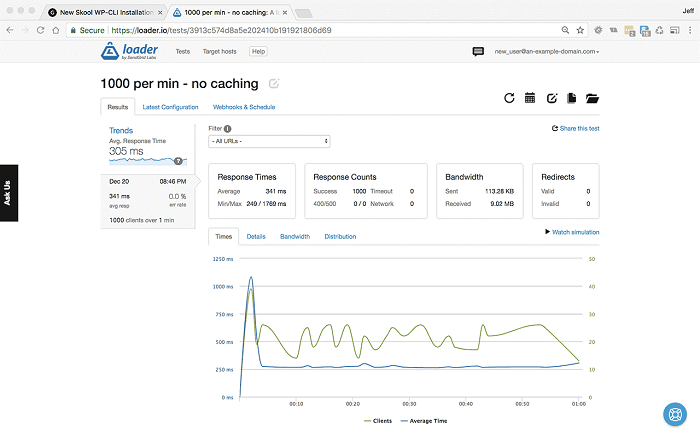

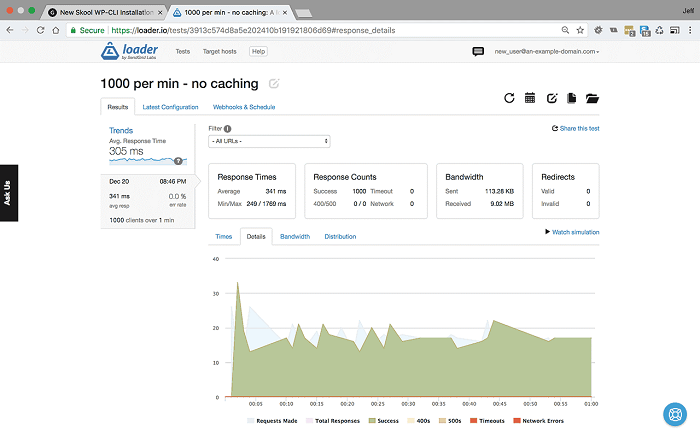

1000 users per minute with no caching

<1000 users per minute for 1 minute – Settings and Results>

Now we have doubled the load, we are still not showing any errors or timeouts, and our response time has increased, but only marginally, to 341ms.

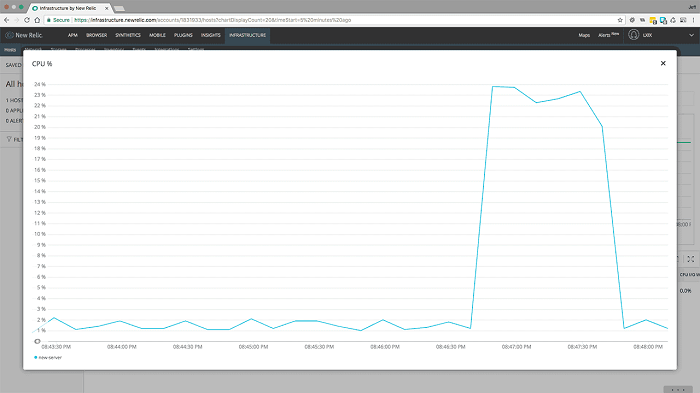

<1000 users per minute for 1 minute – CPU monitoring in New Relic >

However, when we look at the CPU load we can see that doubling the traffic has nearly doubled the CPU load: the load testing resulted in a spike to 24% CPU usage.

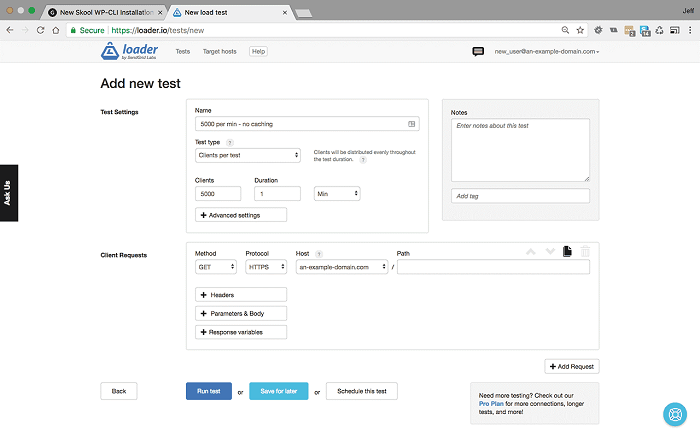

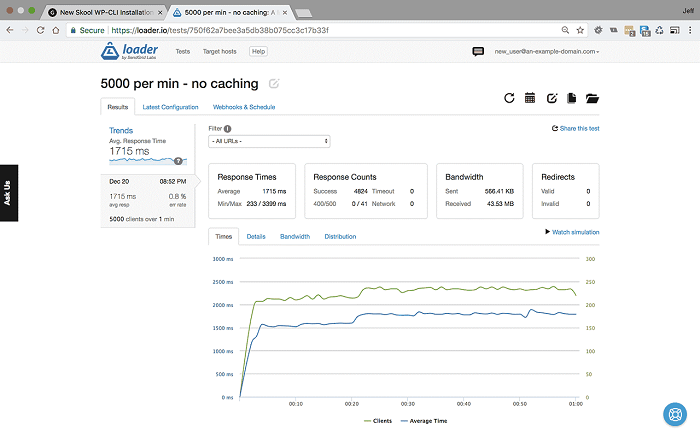

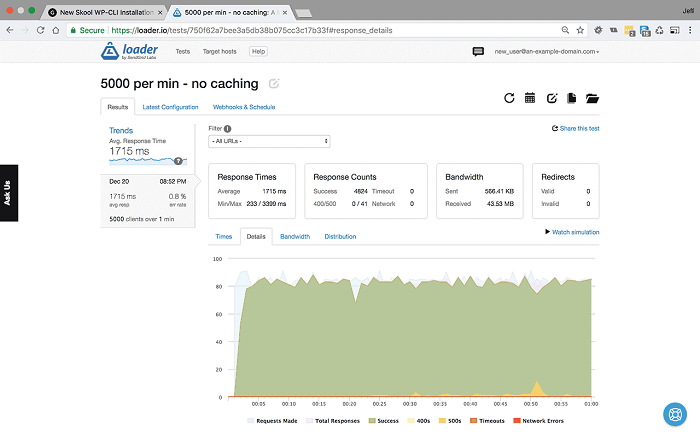

5000 users per minute with no caching

<5000 users per minute for 1 minute – Settings & Results>

After this drastic increase in traffic, our response time has increased roughly fivefold to 1715ms and, crucially, we are now showing some errors, with a 0.8% error rate. When we check the details, we can see that some users will be getting an HTTP 500 error message.

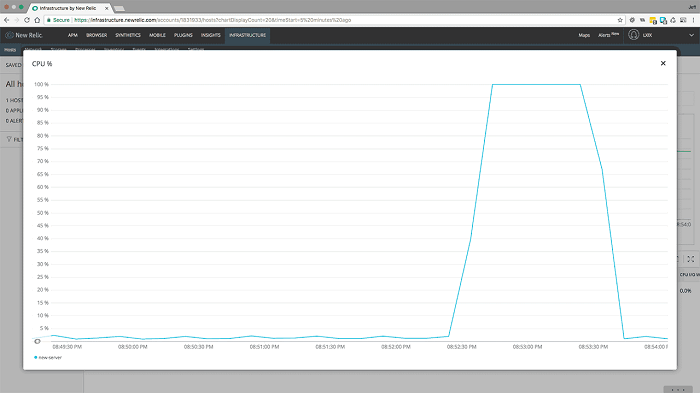

Still, considering this is a $5USD a month server, that is really quite impressive. Let's look at the CPU load:

<5000 users per minute for 1 minute – CPU monitoring in New Relic>

Remember those HTTP 500 errors? Here we can see what's going on: the CPU hit 100% load during this test. This is obviously too much for our server, and in production we should have scaled up well before anything like this happened.

Let's push it a little further anyway, just to see what happens…

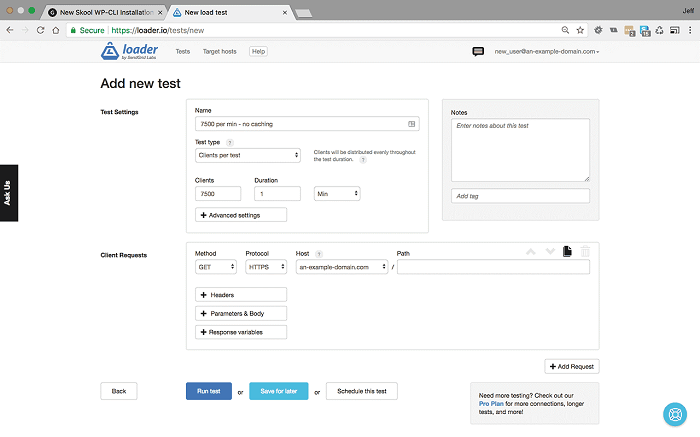

7500 users per minute with no caching

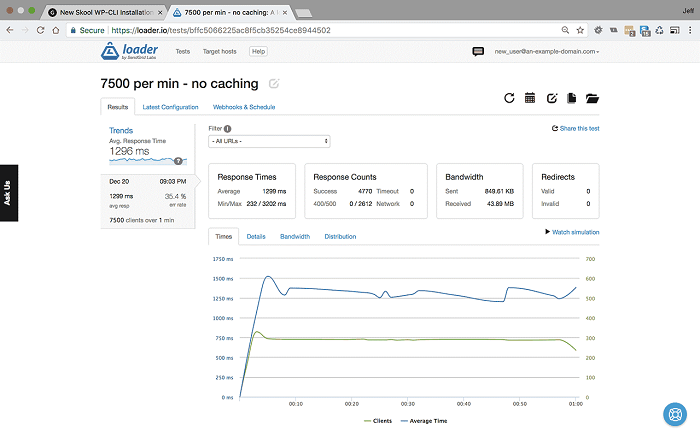

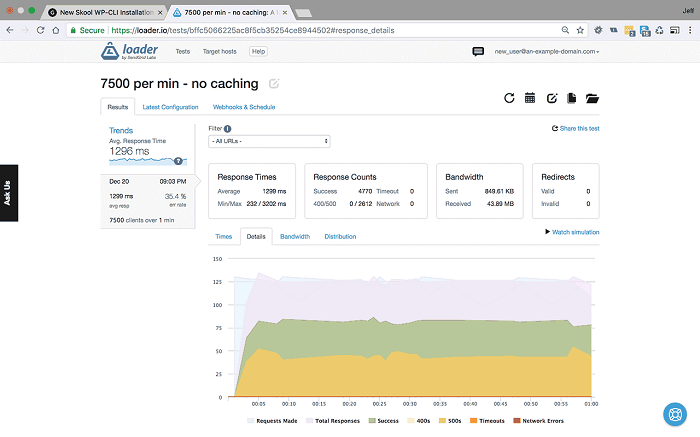

<7500 users per minute for 1 minute – Settings & Results>

Unsurprisingly, our error rate has gone through the roof: the server is returning over 35% of calls with an HTTP 500 error response. Our average response time has actually gone down to about 1300ms, but that is only because so many responses are empty.

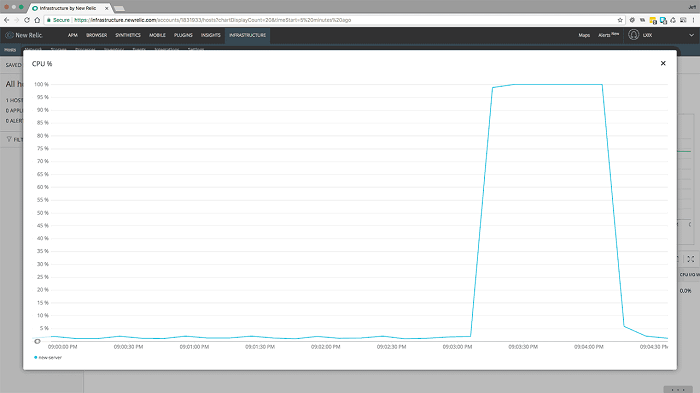

<7500 users per minute cpu>

No surprises here. As before, our CPU load maxed out at 100% during the load testing.
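To put the four uncached runs side by side, the figures quoted above can be run through a little arithmetic. The snippet below is only a sketch: the function name `summarize_benchmarks` is made up for illustration, and the 7500 users per minute row uses the approximate values from the text ("about 1300ms", "over 35%").

```shell
# Columns: users per minute, average response time (ms), error rate (%).
# Prints each run with its response time relative to the first (500 users) run.
summarize_benchmarks() {
    awk 'NR == 1 { base = $2 }
         { printf "%5d users/min: %4d ms (%.1fx baseline), %4.1f%% errors\n", $1, $2, $2 / base, $3 }'
}

summarize_benchmarks <<'EOF'
500 338 0
1000 341 0
5000 1715 0.8
7500 1300 35
EOF
```

The 5000 users per minute run comes out at roughly five times the baseline response time, which is the point where the CPU saturates.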

Now that we have our initial benchmarks, let's begin to implement our caching solutions.

The first type of caching we will enable is object caching, which stores computationally expensive data, such as the results of database queries, in memory.

Redis is itself a data structure server that can be used as a NoSQL database, or it can be paired with a relational database like MySQL to act as an object cache and speed things up. This is exactly what we will be doing.

The way this works is simple. The first time a WordPress page is loaded, a database query is performed on the server. Redis caches the result of this query in memory, so that when another user loads the page there is no need to query the database again: Redis provides the previously cached query results from memory. This drastically increases page load speeds and also reduces the impact on server database resources.
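The pattern described here is often called cache-aside. The following toy sketch illustrates it in plain shell, with a temporary directory standing in for Redis and a made-up `slow_query` function standing in for MySQL. None of these names come from WordPress or Redis; they are purely illustrative.

```shell
CACHE_DIR=$(mktemp -d)   # stand-in for Redis memory
DB_QUERIES=0             # counts how often the "database" is actually hit

slow_query() {
    # Hypothetical stand-in for an expensive MySQL query.
    printf 'rows for %s' "$1"
}

get_cached() {
    # Sets RESULT to the value for key $1, querying only on a cache miss.
    if [ -f "$CACHE_DIR/$1" ]; then
        RESULT=$(cat "$CACHE_DIR/$1")            # cache hit: no query needed
    else
        DB_QUERIES=$((DB_QUERIES + 1))           # cache miss: run the query
        RESULT=$(slow_query "$1")
        printf '%s' "$RESULT" > "$CACHE_DIR/$1"  # store it for the next visitor
    fi
}

get_cached "homepage_posts"   # first visitor: miss, the query runs
get_cached "homepage_posts"   # second visitor: hit, served from the cache
echo "database queries run: $DB_QUERIES"
rm -rf "$CACHE_DIR"
```

Two page loads result in a single database query; every further load of the same data would be served from the cache until the entry is evicted or flushed.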

There are alternatives for object caching, including Memcached. However, Redis does everything Memcached can and has a much larger feature set. To find out more about the differences, you can read this Stack Overflow page.

First, we need to install Redis. However, the Redis package in the Ubuntu repository is a relatively outdated version (3.x). It lacks several security patches and predates version 4.0, which introduced the LFU (Least Frequently Used) eviction policy as an alternative to the older LRU (Least Recently Used) policy for cache key eviction.

If you followed along through the series, you will already have installed the following package during the configuration of your SSL. It allows you to add external repositories to the apt package manager. If so, skip ahead; otherwise you will need to issue the following command:

sudo apt-get install software-properties-common

Once that package is installed, we can add a third party repository that contains the latest version of Redis:

sudo add-apt-repository ppa:chris-lea/redis-server

Now we are ready to install the latest version of Redis and its associated PHP module. Remember to update your 'apt' package index first:

sudo apt-get update

sudo apt-get install redis-server php-redis

Notice that we can install two different programs with a single command, which can be a real time saver.

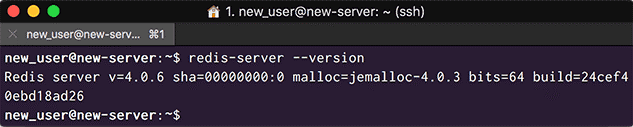

Verify that you have version 4 of Redis installed with the following command:

redis-server --version

Your terminal screen should show you the version and look like the following:

<Make sure you are running Redis server version 4+>

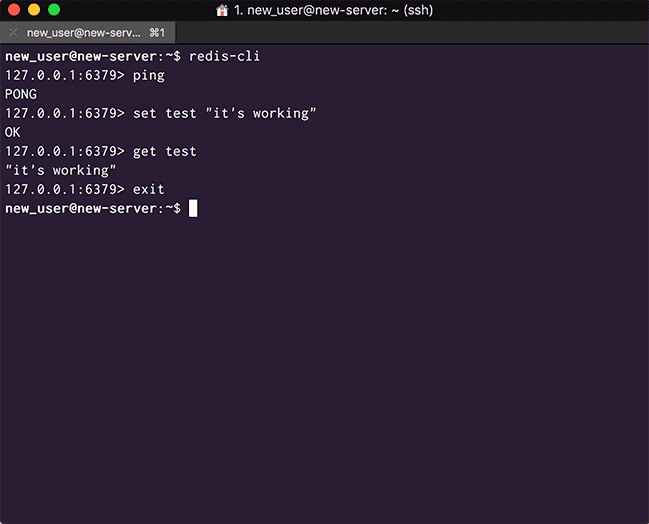
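If you would rather have a script make this check, the version line can be parsed. This is a minimal sketch that assumes the output begins with `Redis server v=<major>.<minor>.<patch>`, as in the screenshot above; the helper names `redis_major` and `supports_lfu` are hypothetical.

```shell
redis_major() {
    # Prints the major version from a line like "Redis server v=4.0.9 sha=...".
    printf '%s\n' "$1" | sed -n 's/^Redis server v=\([0-9][0-9]*\)\..*/\1/p'
}

supports_lfu() {
    # Succeeds when the major version is 4 or higher (LFU arrived in 4.0).
    major=$(redis_major "$1")
    [ -n "$major" ] && [ "$major" -ge 4 ]
}

# Only runs when redis-server is actually installed on this machine.
if command -v redis-server >/dev/null 2>&1; then
    if supports_lfu "$(redis-server --version)"; then
        echo "OK: this Redis version supports LFU eviction"
    else
        echo "Redis is older than 4.0: the allkeys-lfu policy is not available"
    fi
fi
```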

We can verify Redis is up and running by running its command line interface, 'redis-cli'.

redis-cli

Your terminal prompt should change to '127.0.0.1:6379>' (6379 is the default Redis port). You can test the Redis server using a 'ping' command; Redis should return 'PONG':

127.0.0.1:6379> ping

PONG

You can also set a test value and then get it back to further verify everything.

First, set a test key with a value that you wish to be returned upon getting it, for example:

127.0.0.1:6379> set test "It's working"

Redis should confirm the setting with an OK response. Now you can proceed to get the test value with the following command:

127.0.0.1:6379> get test

Redis will now return the string you set earlier. When you are done, enter 'exit' to exit from the 'redis-cli' prompt.

Your terminal should now look like the following:

<Verify Redis is working >

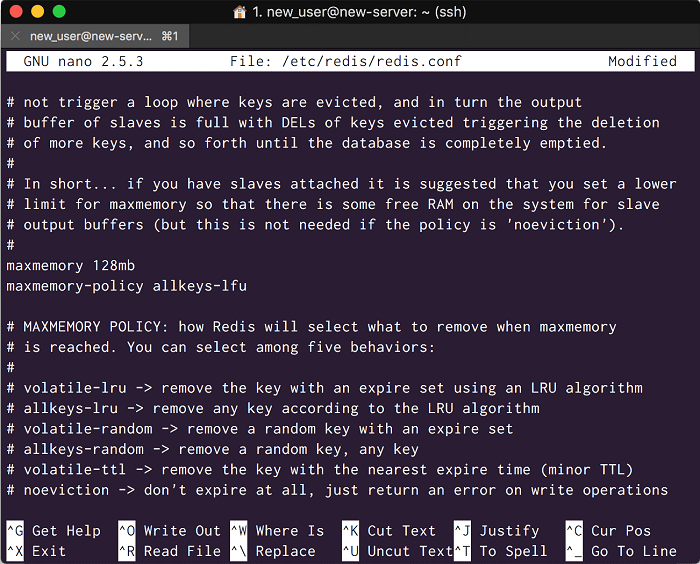
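The same ping, set and get round trip can also be run non-interactively, which is handy in a provisioning script. The sketch below passes each command to 'redis-cli' as arguments. The function name `redis_smoke_test` and the key `smoke:test` are made up for illustration, and the optional first argument exists only so that a different client command can be substituted.

```shell
redis_smoke_test() {
    # $1 = client command to use (defaults to redis-cli)
    cli=${1:-redis-cli}
    [ "$($cli ping)" = "PONG" ] || return 1
    $cli set smoke:test "It's working" >/dev/null || return 1
    [ "$($cli get smoke:test)" = "It's working" ] || return 1
    $cli del smoke:test >/dev/null   # clean up the temporary key
}

# Only runs when redis-cli is installed on this machine.
if command -v redis-cli >/dev/null 2>&1; then
    if redis_smoke_test; then
        echo "Redis round trip OK"
    else
        echo "Redis round trip FAILED"
    fi
fi
```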

Now we need to configure our Redis cache policy. We will set the maximum memory available to it for caching, and also the eviction policy that decides which old data is replaced with new data once the cache memory is full.

Open the Redis configuration file with your favorite text editor:

sudo nano /etc/redis/redis.conf

Now you need to uncomment the line '# maxmemory' and set your desired value, underneath this we will set the eviction policy, like so:

maxmemory 128mb

maxmemory-policy allkeys-lfu

Your conf file should look like this:

<Redis conf file with Memory and Eviction policy configured>

Remember to save the configuration file changes and restart both Redis and PHP-FPM:

sudo service redis-server restart

sudo service php7.0-fpm restart

If you have updated your PHP installation to PHP 7.1 or 7.2, remember to adjust the service name accordingly (for example, 'php7.1-fpm').

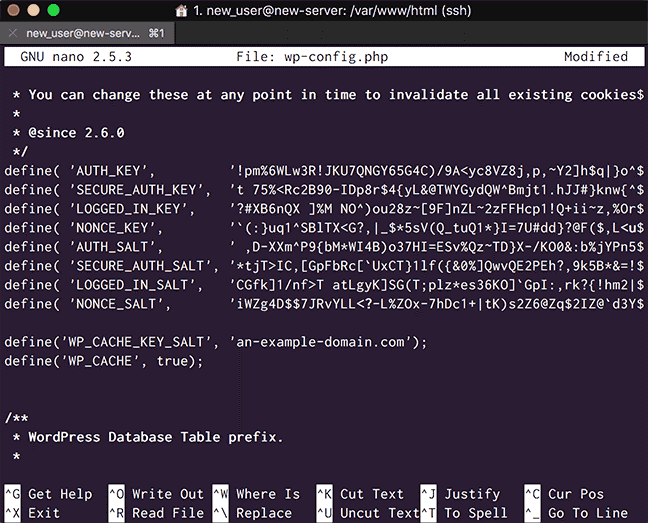
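Once both services have restarted, you may want to confirm that Redis picked up the new settings. As a rough sketch: Redis interprets the 'mb' suffix as 1024*1024 bytes and reports 'maxmemory' in bytes, so the value to look for can be worked out first and then compared with what 'config get' returns.

```shell
# 128mb in the conf file is reported back by Redis as a byte count.
expected_bytes=$((128 * 1024 * 1024))
echo "expected maxmemory: $expected_bytes bytes"

# Only runs when redis-cli is installed; each command prints the setting name and its value.
if command -v redis-cli >/dev/null 2>&1; then
    redis-cli config get maxmemory
    redis-cli config get maxmemory-policy
fi
```

If the reported values still show the defaults, the most likely causes are that the lines were left commented out or that Redis was not restarted.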

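Next we need to edit our WordPress 'wp-config.php' file to add a cache key salt with the name of your site, and define 'WP_CACHE' as true, so that the WordPress Redis Object Cache plugin we will install later can create a persistent cache. The salt prefixes every cache key, which keeps sites that share one Redis server from overwriting each other's entries.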

Open your 'wp-config.php' file for editing:

sudo nano /var/www/html/wp-config.php

Immediately after the WordPress unique Keys and Salts section, add the following, using your own site's URL:

define( 'WP_CACHE_KEY_SALT', 'an-example-domain.com' );

define( 'WP_CACHE', true );

Your config file should now look like this:

<redis wp-config.php>

Save and exit the file.

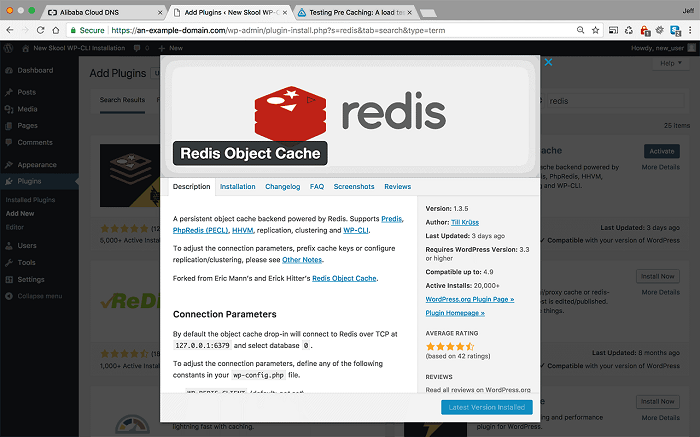
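A quick way to double-check the edit is to search the file for both constants. The helper below is a hypothetical sketch, not part of WordPress: it assumes the constants are written with single quotes, as in the lines above, and that the file lives at the path used in this tutorial.

```shell
has_define() {
    # $1 = path to wp-config.php, $2 = constant name to look for
    grep -Eq "^[[:space:]]*define\([[:space:]]*'$2'[[:space:]]*," "$1"
}

config=/var/www/html/wp-config.php   # path used earlier in this tutorial
# Skipped silently when the file is missing or not readable by the current user.
if [ -r "$config" ]; then
    for name in WP_CACHE_KEY_SALT WP_CACHE; do
        if has_define "$config" "$name"; then
            echo "$name is defined"
        else
            echo "$name is missing"
        fi
    done
fi
```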

We need to install a Redis object cache plugin in order for WordPress to make use of Redis as an object cache. There are several in the WordPress plugin repository, but we will use the Redis Object Cache plugin by Till Krüss.

<Redis Object Cache Plugin>

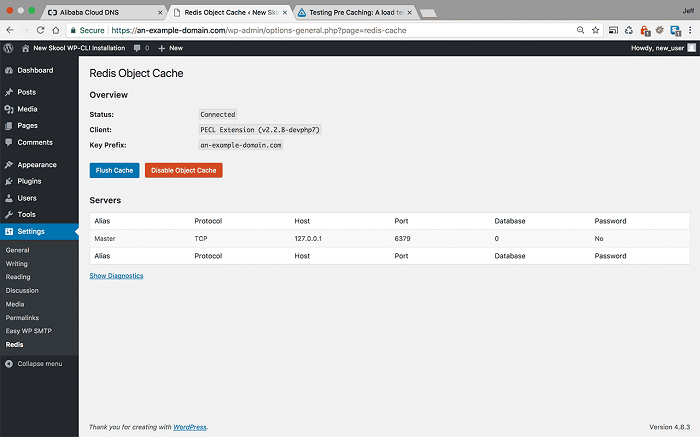

Once the plugin is installed, go to the plugin's settings and enable the object cache. If everything has been configured correctly, you should see a settings screen like this:

<Redis Object Cache Plugin Settings - configured correctly>

If you want to flush the Redis cache, you can do it from this screen.
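To see whether the object cache is actually being used, one option is to look at the hit and miss counters that Redis exposes through 'info stats'. The sketch below computes a hit ratio from the `keyspace_hits` and `keyspace_misses` fields; the function name `hit_ratio` is made up for illustration.

```shell
hit_ratio() {
    # Reads "info stats" output on stdin and prints hits / (hits + misses) as a percentage.
    tr -d '\r' | awk -F: '
        $1 == "keyspace_hits"   { hits = $2 }
        $1 == "keyspace_misses" { misses = $2 }
        END {
            total = hits + misses
            if (total == 0) { print "n/a"; exit }
            printf "%.1f%%\n", 100 * hits / total
        }'
}

# Only runs when redis-cli is installed on this machine.
if command -v redis-cli >/dev/null 2>&1; then
    redis-cli info stats | hit_ratio
fi
```

After browsing a few pages of the site, the ratio should climb as repeated queries are served from memory. Note that these counters cover the whole Redis instance, not just WordPress.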

Now that we have our object cache set up, it's time to move on to static caching with NGINX FastCGI caching. Stay tuned for Part 2 of the WordPress Caching Solutions tutorial!
