Mutually exclusive access to shared resources has always been a problem that many business systems need to solve. In distributed systems, a general-purpose distributed lock solution is often used. This article discusses the implementation principle, technical selection, and specific practices of distributed locking on Alibaba Cloud Storage.

Figure 1: A distributed lock

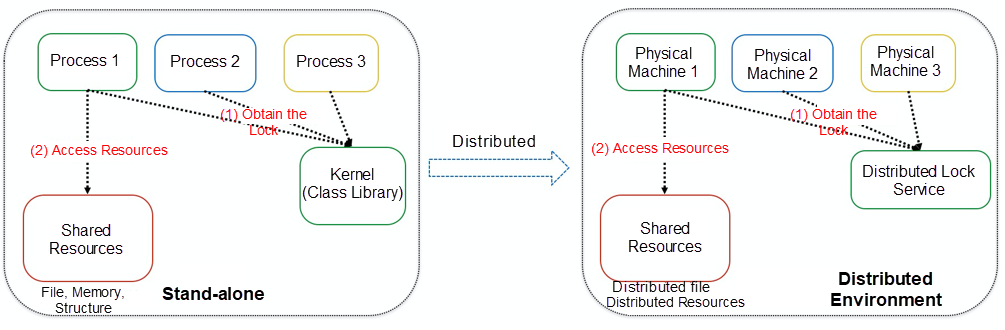

In a standalone environment, when a shared resource cannot itself provide mutual exclusion, the system needs a third party to provide it, so that multiple threads or processes reading and writing the resource at the same time do not corrupt data. In most cases, the kernel or a class library provides this capability. As the following figure shows, a process obtains an exclusive lock from the kernel or class library and can then access the shared resource exclusively. When standalone environments evolve into distributed environments, we need a distributed service that provides the same function and through which different machines obtain locks. The machine that obtains a lock can access the shared resource exclusively. Such services are collectively called distributed lock services, and the locks are called distributed locks.

Figure 2: From Standalone to Distributed Locks

We can abstract the concept of distributed locks. A distributed lock must be a resource that provides concurrency control and displays its exclusive ownership state:

Lock = Resource + Concurrency Control + Ownership Display

Take a common standalone lock as an example:

Spinlock = BOOL + CAS (Optimistic Lock)

Mutex = BOOL + CAS + notification (Pessimistic Lock)

Both Spinlock and Mutex use a BOOL as the resource. The atomic CAS instruction is: when the current value is 0, set the value to 1. The caller holds the lock if the CAS succeeds and does not hold it if the CAS fails. By contrast, AtomicInteger provides a resource (Integer) and CAS but no Ownership Display. Because ownership is never explicitly indicated, AtomicInteger is not regarded as a lock. Of course, the Ownership Display can be considered a matter of how the service is packaged.
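The formula above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: Python exposes no user-level CAS instruction, so the hypothetical `AtomicBool` class emulates one with a private mutex, and the names `SpinLock`, `try_acquire`, and `owner` are invented for this example.

```python
import threading


class AtomicBool:
    """Stand-in for a hardware CAS on a 0/1 flag.

    Assumption of this sketch: a private mutex emulates the atomicity
    that a real CAS instruction would provide.
    """

    def __init__(self):
        self._value = 0
        self._guard = threading.Lock()

    def compare_and_swap(self, expected, new):
        # Atomically: if the value equals `expected`, set it to `new`.
        with self._guard:
            if self._value == expected:
                self._value = new
                return True
            return False


class SpinLock:
    """Spinlock = BOOL + CAS, plus an explicit ownership display."""

    def __init__(self):
        self._flag = AtomicBool()  # the resource
        self.owner = None          # the ownership display

    def try_acquire(self, who):
        # Concurrency control: only one caller can flip 0 -> 1.
        if self._flag.compare_and_swap(0, 1):
            self.owner = who
            return True
        return False

    def acquire(self, who):
        # Optimistic: keep retrying the CAS until it succeeds.
        while not self.try_acquire(who):
            pass

    def release(self, who):
        if self.owner != who:
            raise RuntimeError("only the owner may release the lock")
        self.owner = None
        self._flag.compare_and_swap(1, 0)
```

Without the `owner` field, the class would be the moral equivalent of AtomicInteger: a resource with CAS, but nothing that tells a caller who holds it.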

In a standalone environment, the kernel has a "God's eye" and can know if a process is alive. When a process fails, the kernel can release the lock resources held by the process. However, this becomes a challenge in a distributed environment. We need to provide locks with a new feature to cope with various machine failures or crashes: availability.

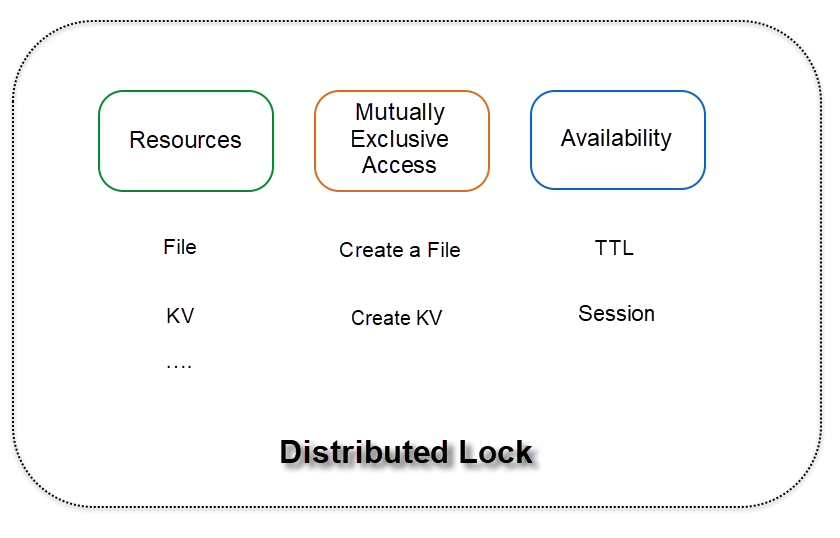

As shown in the following figure, any service that provides the three features can provide the capabilities of distributed locks. The resource can be a file or a key-value (KV) pair. It is operated on atomically, for example through file or KV creation. A successful creation result indicates ownership, and a TTL or session ensures the availability of the lock.

Figure 3: Features and Implementation of Distributed Locks
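As a rough illustration of these features, the following sketch models a KV-based lock: the key is the resource, create-if-absent is the atomic operation, a successful creation indicates ownership, and a TTL lets the lock become available again if the holder disappears. `TtlKvStore` and its methods are hypothetical names, the store is in-memory and single-threaded, and a real service would have to make `create` atomic across clients.

```python
import time


class TtlKvStore:
    """Hypothetical in-memory KV store with create-if-absent and TTL."""

    def __init__(self, clock=time.monotonic):
        self._clock = clock
        self._data = {}  # key -> (value, expires_at)

    def create(self, key, value, ttl):
        """Create `key` only if it is absent or expired.

        Returns True when the caller now owns the key (the lock).
        """
        now = self._clock()
        entry = self._data.get(key)
        if entry is not None and entry[1] > now:
            return False  # a live entry exists: someone else holds the lock
        self._data[key] = (value, now + ttl)
        return True

    def get(self, key):
        """Return the current owner, or None if absent or expired."""
        entry = self._data.get(key)
        if entry is None or entry[1] <= self._clock():
            return None
        return entry[0]
```

A client that calls `store.create("lock/a", "client-1", ttl=5.0)` and gets `True` holds the lock for at most five seconds unless it renews it. A second client calling `create` on the same key within that window gets `False`.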

Distributed locks are divided into the following types based on the security of lock resources:

A distributed system based on asynchronous replication faces the risk of data loss (lock loss) and is not secure enough. Such a system often provides fine-grained lock services through the TTL mechanism. It suits services that are time-sensitive, set a short validity period, run short-term tasks, and suffer relatively limited business impact if a lock is lost.

A distributed system based on the Paxos protocol keeps multiple copies of data consistent through the consensus protocol and has high data security. It often uses the lease (session) mechanism to provide coarse-grained lock services. This kind of system has certain usage requirements and suits services that are sensitive to security, need to hold locks for a long time, and cannot afford the loss of locks.

Alibaba Cloud Storage has accumulated a lot of experience in improving the correctness of distributed locks, the availability of locks, and the efficiency of lock switching.

Mutual exclusion is the basic requirement of a distributed lock: a lock must never be held by more than one user at the same time. How, then, do distributed locks in storage scenarios avoid this situation?

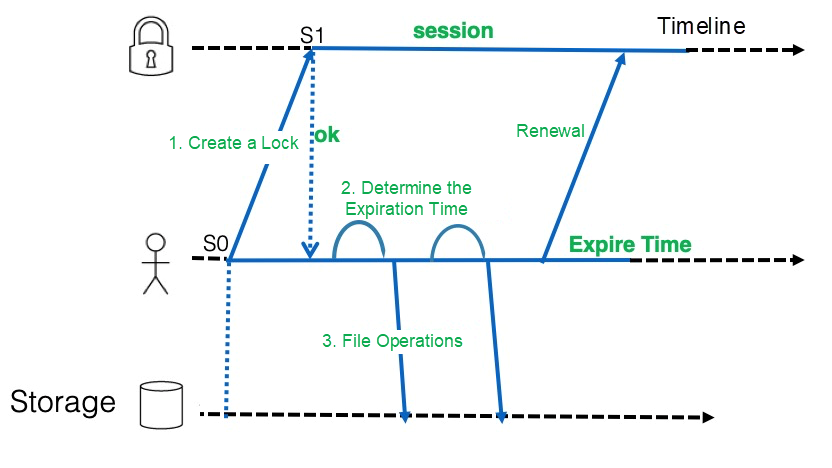

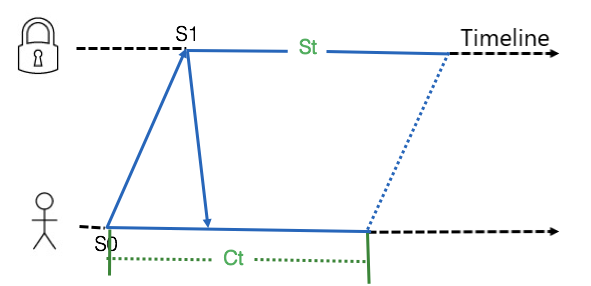

On the server, each lock is bound to a unique session. The client keeps the session valid by sending heartbeats regularly, which in turn keeps its ownership of the lock. When the heartbeat cannot be maintained, the session and the associated lock node are released, and the lock node can be preempted again. The key point is how to keep the client and the server synchronized so that the client can perceive an expired session. As the following figure shows, the validity period of the session is maintained on both the client and the server. The client starts timing (S0) when it sends the heartbeat, while the server starts timing (S1) when it receives the request. Because S0 is earlier than S1, the session expires on the client before it expires on the server. After the user creates the lock, the core worker thread can check whether enough of the validity period remains before it performs a core operation. In addition, we measure the validity period with the system (monotonic) clock instead of wall time. The system clock is more accurate and does not jump forward or backward. It may deviate by milliseconds over an interval of seconds, and even in the worst case, when NTP adjusts the time, only the clock rate is changed.

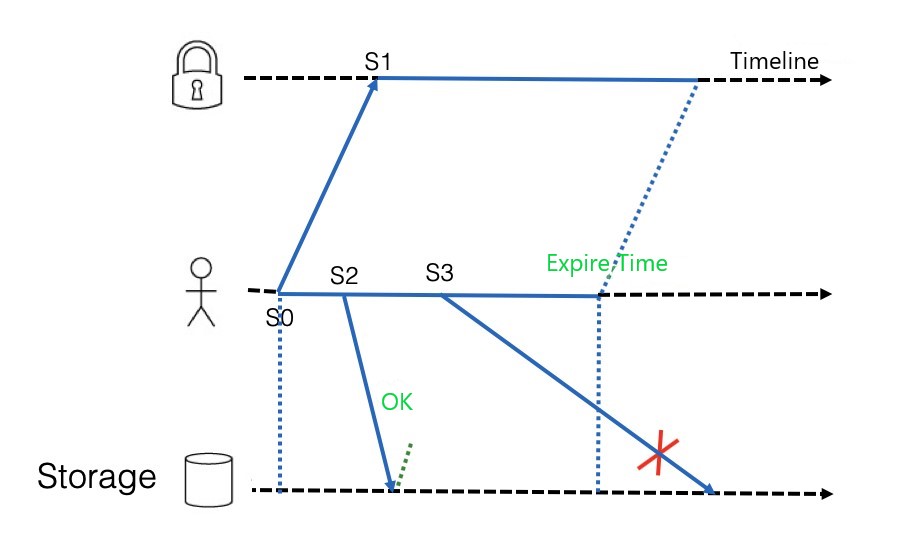

Figure 4: Scenario of Alibaba Cloud Storage
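The client side of the session timing described above might look like the following sketch. It assumes the two clocks tick at roughly the same rate. `ClientSession`, `heartbeat`, and `safe_to_run` are hypothetical names, and `send` stands for whatever call delivers the heartbeat and returns `True` once the server acknowledges it.

```python
import time


class ClientSession:
    """Client-side view of a session's validity period (hypothetical)."""

    def __init__(self, ttl, clock=time.monotonic):
        self._ttl = ttl
        self._clock = clock  # monotonic: unaffected by wall-time jumps
        self._expires_at = None

    def heartbeat(self, send):
        # S0: start timing before the request leaves the client.
        sent_at = self._clock()
        if send():
            # The server starts its own timer at S1 >= S0, so this
            # deadline should not be later than the server's.
            self._expires_at = sent_at + self._ttl

    def remaining(self):
        """Seconds of validity left, as seen by the client."""
        if self._expires_at is None:
            return 0.0
        return max(0.0, self._expires_at - self._clock())

    def safe_to_run(self, estimated_duration):
        # Worker threads call this before a core operation.
        return self.remaining() > estimated_duration
```

Measuring from the send time rather than from the acknowledgement is the conservative choice: the client may give up the lock slightly early, but it should not believe it holds a lock that the server has already released.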

Have we achieved perfect distributed lock exclusion? No. There is still a situation in which the mutual exclusion that a business builds on the distributed lock service may be compromised. As shown in Figure 5, the client tries to preempt a lock at S0 and successfully obtains it from the back end at S1, which opens a validity period window for the distributed lock. Within the validity period, the client performs an access operation on the storage at S2. This operation completes quickly. At S3, the client determines that the lock is still within its validity period and accesses the storage again. This time, however, the operation takes a long time and runs past the expiration time of the distributed lock, by which point another client may already have obtained the lock. As a result, two clients may operate on the same batch of data at the same time. The probability is small, but the situation can occur.

Figure 5: Cross-Border Scenario

For this scenario, the specific solution is to ensure that the lock has a sufficient validity period window whenever the client operates on data. Of course, if the service provides a rollback mechanism, the solution is more comprehensive. Storage products that use distributed locks also adopt this solution.

A better solution is for the storage system to introduce an I/O Fence capability. Here, we must mention the discussion between Martin Kleppmann and antirez, the author of Redis. Redis introduced Redlock to prevent the loss of locks caused by asynchronous replication. This scheme introduces a quorum mechanism: the client needs to obtain the lock from a quorum of nodes. This ensures availability and correctness to the greatest extent, but it still has two problems:

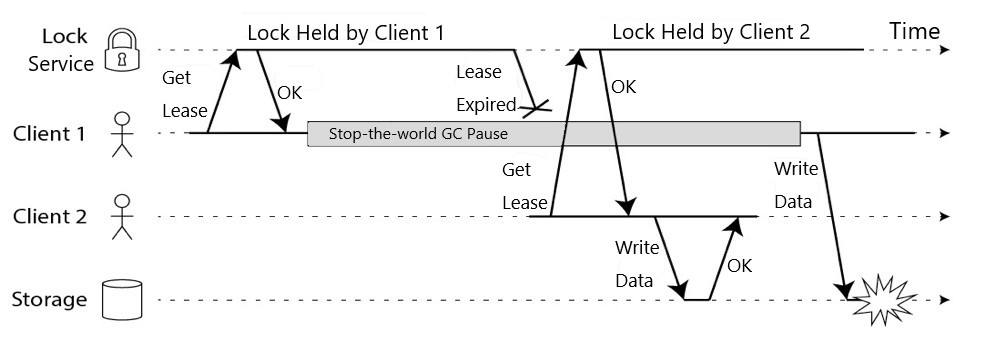

The problem of unreliable wall time can be solved by using monotonic time instead of wall time (Redis still relies on wall time). However, in a heterogeneous system, a single system on its own cannot ensure the correctness of data. As shown in Figure 6, client 1 obtains the lock, and garbage collection (GC) occurs while it is operating on data. By the time GC completes, client 1 has lost ownership of the lock, which results in data inconsistency.

Figure 6: Heterogeneous Systems Cannot Ensure Exact Data Consistency

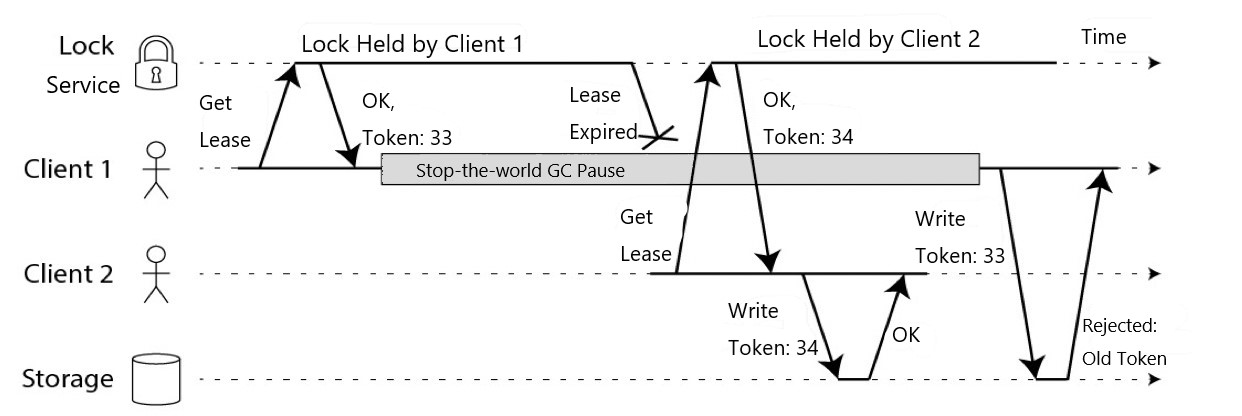

Therefore, two cooperating systems are needed to complete mutually exclusive access. The I/O Fence capability is introduced into the storage system, as shown in Figure 7. The global lock service provides globally auto-incrementing tokens. After client 1 obtains the lock, the lock service returns token 33, and client 1 carries the token with its requests to the storage system. Then, GC occurs on client 1. After client 2 obtains the lock, the lock service returns token 34, and client 2 carries that token to the storage system. The storage system rejects requests that carry a smaller token. When client 1 recovers from the long full GC and writes data again, its request with token 33 is rejected because the token recorded by the storage layer has already been updated. This protects the data.

Figure 7: Introducing the I/O Fence Capability
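The token check can be sketched as follows. This is a simplified model of the idea, not the storage system's actual interface: `LockService`, `FencedStorage`, and their methods are hypothetical, and the lock service here only hands out increasing tokens without modeling expiration.

```python
class LockService:
    """Hands out globally auto-incrementing tokens (hypothetical)."""

    def __init__(self, first_token=1):
        self._next_token = first_token

    def acquire(self):
        token = self._next_token
        self._next_token += 1
        return token


class FencedStorage:
    """Storage that rejects writes carrying a stale token."""

    def __init__(self):
        self._highest_token = 0
        self._data = {}

    def write(self, token, key, value):
        """Apply the write and return True, or return False if fenced off."""
        if token < self._highest_token:
            return False  # a newer lock holder has already written
        self._highest_token = token
        self._data[key] = value
        return True

    def read(self, key):
        return self._data.get(key)


locks = LockService(first_token=33)
storage = FencedStorage()

token_1 = locks.acquire()          # client 1 gets token 33, then stalls in GC
token_2 = locks.acquire()          # client 2 gets token 34 after lock expiry
storage.write(token_2, "k", "from client 2")
accepted = storage.write(token_1, "k", "from client 1")  # late write, rejected
```

The check lives in the storage layer on purpose: client 1 cannot know that it paused, so only the system receiving the write can reliably reject it.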

This is in line with the design idea of the Apsara Distributed File System, which is an Alibaba Cloud distributed storage platform. The Apsara Distributed File System introduces the InlineFile type, which works together with the SealFile operation to provide write protection similar to I/O Fence. First, the SealFile operation closes the files on the cs to prevent the previous owner from continuing to write data. Second, InlineFile prevents the previous owner from opening the new file. These two functions also provide token support in the storage system.

Distributed locks in storage scenarios keep locks robust through continuous heartbeats, so users do not have to pay much attention to availability. However, an abnormal user process may keep occupying a lock. A session blacklist mechanism is provided to make sure that such locks are released safely and are eventually rescheduled.

To release the lock held by a process that has hung, the user can query the session information and add the session to the blacklist. After that, the heartbeat can no longer be maintained, so the session expires and the lock node is released safely. Here, we do not force delete the lock but choose to disable the heartbeat for the following reasons:

When the lock held by a process needs to be rescheduled, the lock owner can delete the lock node. However, if the lock owner encounters an exception, such as a process restart or machine failure, a new process can preempt the lock only after the original session expires. By default, a distributed lock has a session lifecycle of dozens of seconds. When the lock-holding process exits unexpectedly without releasing the lock, it may therefore take a long time before the lock node can be preempted again.

Figure 8: The Client and Server Separately Maintain the Expiration Time

To improve the switching precision, we must shorten the session lifecycle, which requires faster heartbeats and imposes more access pressure on the backend server. We optimized the structure so that the session lifecycle can be compressed further.

We also provide CAS-based lock release for specific business scenarios. For example, a unique ID of the lock-holding process is stored in the lock node. If the daemon process finds that the lock-holding process has failed, it releases the lock through CAS so other processes can preempt the lock immediately. Locks that are no longer in use are forcibly released and put up for preemption again. This method avoids the waiting time otherwise required to preempt the lock after the process is upgraded or restarts unexpectedly.
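A CAS-style release keyed on the owner ID could look like the sketch below. It assumes that the lock node stores the owner's unique ID and that the release is conditional on that ID. `LockNodes` and `release_if_owner` are hypothetical names, and the in-memory dictionary stands in for the lock service.

```python
class LockNodes:
    """Hypothetical lock table: lock name -> unique ID of the owner."""

    def __init__(self):
        self._owners = {}

    def try_acquire(self, name, owner_id):
        if name in self._owners:
            return False
        self._owners[name] = owner_id
        return True

    def owner_of(self, name):
        return self._owners.get(name)

    def release_if_owner(self, name, expected_owner_id):
        """Compare-and-delete: release only if the stored ID still matches.

        A real service would have to perform this check and the delete
        as one atomic step.
        """
        if self._owners.get(name) != expected_owner_id:
            return False  # the lock has changed hands: do nothing
        del self._owners[name]
        return True
```

The comparison is what makes the forced release safe. If a daemon observes that process `proc-1` has died and calls `release_if_owner("job", "proc-1")`, the call frees the lock only while `proc-1` is still recorded as the owner. A delayed or repeated call cannot remove a lock that another process has since acquired.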

Alibaba Cloud Storage provides a comprehensive distributed lock solution that has been proven through medium- to long-term use in many Alibaba Cloud products. It is stable and highly reliable, and it provides SDKs for multiple programming languages as well as RESTful integration solutions.

Distributed locks provide mutually exclusive access to shared resources in a distributed environment. Distributed locks are used to improve the efficiency of services or implement the absolute mutual exclusion of accesses. When accessing the distributed lock service, you need to consider issues, such as access cost, service reliability, switching accuracy, and correctness of distributed locks, to use distributed locks correctly and reasonably.
