By He Jun, Alibaba Cloud Technical Expert

This article looks at the background of relational database development and the particular characteristics of the cloud computing era. We share our view of how database computing power has evolved in a spiral and how that ties into the development path of Alibaba Cloud's RDS products. We also describe the design concepts behind PolarDB, Alibaba Cloud's proprietary next-generation cloud-managed relational database, along with some key related technical points.

The concept of relational databases may seem a little "antiquated" in this day and age, since its history begins with the IT of half a century ago. In practice, though, the technology has remained at the core of modern society and has driven much of the development of commercial technology. Three core technical fields (CPUs, operating systems, and databases) are the cornerstones of information processing, computing power, and artificial intelligence.

From the publication of E.F. Codd's paper "A Relational Model of Data for Large Shared Data Banks" in 1970, to the arrival in the early '80s of DB2, a commercial relational database supporting SQL, to Oracle's start and the birth of SQL Server in the '90s, the successes of relational databases span the decades.

Today, with the development of the World Wide Web and the broad application of big data, more and more new types of databases are cropping up. However, relational databases continue to dominate the space. One of the primary reasons for the prevalence of relational databases is their adoption of SQL standards. This advanced, non-procedural programming interface language perfectly combines computer science and human-comprehensible data management methods, and remains difficult to surpass even today.

SQL (Structured Query Language) was invented by Boyce and Chamberlin in 1974 to act as a bridge between relational algebra and relational calculus. In essence, it is a language that uses keywords and grammar resembling natural language to define operations on data: storage, query, and management.

This abstract programming interface decouples the specific data problem from the details of data storage and query implementation, allowing large swaths of commercial business logic and information management computing models to be applied at scale. This has freed up productivity and significantly driven forward the development of commercial relational database systems.
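The decoupling described above can be illustrated with a minimal sketch using Python's built-in `sqlite3` module. The table, column, and index names are made up for illustration. The point is only that the query states what result is wanted, while the engine remains free to change how it finds the rows.

```python
import sqlite3

# In-memory database; the schema below is hypothetical and exists only for this example.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (id INTEGER PRIMARY KEY, customer TEXT, amount REAL)")
conn.executemany(
    "INSERT INTO orders (customer, amount) VALUES (?, ?)",
    [("alice", 30.0), ("bob", 12.5), ("alice", 7.5)],
)

QUERY = "SELECT customer, SUM(amount) FROM orders GROUP BY customer ORDER BY customer"

# The query describes the desired result (total per customer), not the access path.
totals = conn.execute(QUERY).fetchall()

# Adding an index may change how the engine locates rows,
# but neither the query text nor its result changes.
conn.execute("CREATE INDEX idx_orders_customer ON orders (customer)")
totals_with_index = conn.execute(QUERY).fetchall()
```

Here `totals` and `totals_with_index` are both `[("alice", 37.5), ("bob", 12.5)]`: the storage layout changed underneath, and the application code did not have to.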

Looking at the continued development and growth of SQL, it's not hard to see why it has already become the top choice in the world of relational databases. Even today, this programming language still has yet to be replaced by an alternative.

In 1976, Jim Gray published a paper called "Granularity of Locks and Degrees of Consistency in a Shared Database" in which he formally defined the concept of database transactions and the data consistency mechanisms for transactions in relational databases. OLTP (online transaction processing) is a classic application of relational databases. It covers transaction processing for basic, everyday operations such as transactions in a bank.

Transaction processing must follow ACID, four properties that ensure data correctness. ACID stands for Atomicity, Consistency, Isolation, and Durability. Performance indicators used to measure the processing power of OLTP include response time and throughput.
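As a small illustration of atomicity, the "A" in ACID, here is a sketch of a bank transfer using Python's built-in `sqlite3` module. The `accounts` table, the `CHECK` constraint, and the `transfer` helper are assumptions made for this example, not part of any product described here.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
# Hypothetical schema: the CHECK constraint forbids negative balances.
conn.execute(
    "CREATE TABLE accounts (name TEXT PRIMARY KEY, balance INTEGER CHECK (balance >= 0))"
)
conn.executemany("INSERT INTO accounts VALUES (?, ?)", [("alice", 100), ("bob", 50)])
conn.commit()


def transfer(conn, src, dst, amount):
    """Move `amount` from src to dst as one transaction; return True if it committed."""
    try:
        with conn:  # commits on success, rolls back if an exception escapes the block
            conn.execute(
                "UPDATE accounts SET balance = balance + ? WHERE name = ?", (amount, dst)
            )
            conn.execute(
                "UPDATE accounts SET balance = balance - ? WHERE name = ?", (amount, src)
            )
        return True
    except sqlite3.IntegrityError:
        # The debit violated the CHECK constraint, so the credit was rolled back too.
        return False


def balances(conn):
    """Return the current balances as a {name: balance} dictionary."""
    return dict(conn.execute("SELECT name, balance FROM accounts"))
```

Calling `transfer(conn, "alice", "bob", 30)` commits both updates. Calling `transfer(conn, "alice", "bob", 500)` fails on the debit and leaves both balances untouched, even though the credit to `bob` had already been executed inside the transaction.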

In our brief overview of the history, position, and developmental phases of relational databases, we came across the names Oracle, SQL Server, and DB2, all of which are relational databases that still rank among the top databases worldwide. Informix and Sybase, though once household names in the tech world, have already faded from public awareness.

However, beginning in the 1990s, a renewed wave of information sharing and the spirit of free and open software became a popular trend, bringing with it names like Linux, MySQL, PostgreSQL, and other open source software. The appearance of this trend and the strength with which it has grown have released a veritable tsunami of growth in society as these freely shared technical advances encourage massive growth in global Internet technology companies.

This progress belongs to the whole of society, but the credit belongs to pioneering open-source developers such as Richard Stallman, Linus Torvalds, and Michael Widenius. Of course, more and more Chinese companies have become active participants in the open source community over the past few years, freely contributing their own technological advancements to the rest of the open source world.

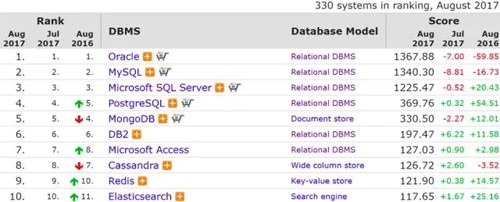

Looking at the latest statistics on the DB-engines website, we can see that open source databases such as MySQL and PostgreSQL, when added together, are used more than even the top commercial database, Oracle, making them the most popular relational databases in the world.

In reaction to the popularity of green computing and the shared economy, we need not just cloud servers, cloud data, networks, hardware chips, and other integrations of hardware and software, but also to continue putting the needs of users at the center of technology. With service that is focused on the user, technology will spread across public consciousness and further drive forward the development of computing efficiency and intelligence.

We are currently in a phase of vigorous development of the so-called "Cloud 2.0". This phase has seen the rise of a number of issues relating to the management of relational databases. This is precisely why AWS, Amazon's cloud computing unit, announced Aurora on November 12, 2014, at the AWS re:Invent 2014 conference. Aurora is a new-generation cloud-hosted relational database. The release of this new generation of databases heralds a new phase not only in the age of cloud computing but in the evolution of core technologies given to us by the IT era.

At the SIGMOD conference in 2017, Amazon published a paper entitled "Amazon Aurora: Design Considerations for High Throughput Cloud Native Relational Databases", which further explained how this relational database, designed for the cloud environment and described as Cloud-Native, came about.

Even with the power of existing relational databases, we have yet to solve the issue of integrating traditional relational databases with public cloud environments.

"Cloud computing 1.0" utilizes low costs, quick deployments, flexibility, and scalability to realize the convertibility of IT computing to the cloud. Once low cost technical coverage became the norm, and with the rise in user business on the cloud, we began to see the rise of new user pain points to tackle. For example, figuring out how to sustainably enjoy low cost computing power comparable to or even better than traditional IT computing on the cloud became a matter of urgent importance.

In other words, cloud computing will not reach its peak unless it keeps evolving while maintaining a favorable cost-to-performance ratio. Only when cloud computing exceeds the computing power of traditional IT architectures, while retaining its flexibility and scalability, will it truly dominate the space. It's simply a matter of time.

Next-generation relational databases are one of the major milestones marking this process. Following the same line of thinking, the future will likely bring more and more advanced cloud services, like intelligent cloud operating systems, to integrate with the new chips and networks being designed in the cloud era.

In the IT era, traditional computing power (like relational databases used to process structured data) is used in multi-user use cases where hardware devices are isolated from each other. The age of cloud computing, on the other hand, is marked by an environment of rented, multi-user, self-service applications, where computing load scenarios have become more complicated than they were in the past. In this environment of ever-changing computing loads, solving the conflicts between technical products from the IT age and their application in the cloud computing age has become an intrinsic impetus behind the evolution of the cloud technology itself.

For example, in a public cloud environment, as user numbers, user services, and user data grow, we are constantly plagued by issues with backup, performance, migration, upgrades, read-only instances, disk capacity, and Binlog latency. Most of these problems stem from I/O bottlenecks (storage and networks), which must be solved through new technologies and new product architectures. Furthermore, from the perspective of product forms, Alibaba Cloud's RDS is currently at a significant advantage, which will be covered in the next section.

From an architectural perspective of relational databases, it's best to have a common technical architecture that is capable of meeting different user scenarios.

The following content will go into detail on the specialties of the different product forms of Alibaba Cloud RDS. By the end, we should have a much clearer understanding of how PolarDB developed by absorbing and adapting the advantages of other products.

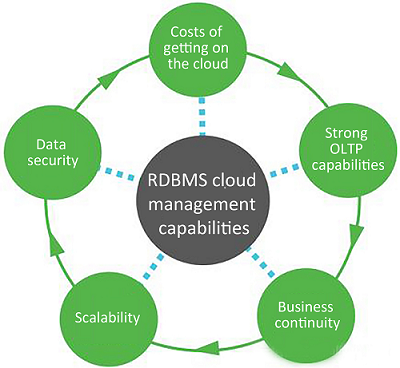

As a cloud-hosted relational database, PolarDB is more focused on how to meet the needs of user businesses through services on the cloud. PolarDB aims to continuously evolve through technological innovation and provide even better database computing capability. User requirements include: the cost of migrating services to the cloud, OLTP performance, business continuity, online business scaling, and data security.

Aside from the low cost advantages of cloud computing, the technology also features naturally high flexibility and scalability. To aid in user business scaling and crash recovery, architectures that feature separation of computing and storage have become a hallmark choice in cloud resource environments. This will be further explained in the following section on the evolution of the RDS product architecture.

As was described above, the evolutionary direction of Alibaba Cloud PolarDB is the same as that of Amazon Aurora, but the paths they have taken have their differences. These differences are brought about primarily by the differences in how the two products implement cloud database services. Alibaba RDS MySQL includes the following versions. These different product forms are designed to tackle different user scenarios, so they naturally feature different special characteristics and can be used to complement each other.

MySQL basic version

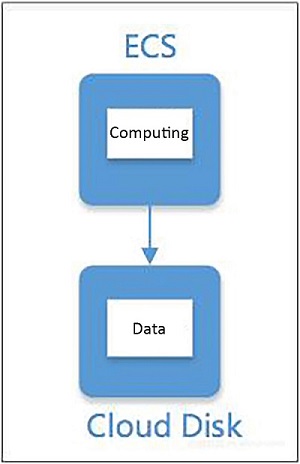

The basic version of MySQL separates computing and storage nodes to take advantage of the reliability of cloud drives and their multiple data copies. It also takes advantage of virtualization on ECS cloud servers to improve deployment standardization and the efficiency of version and O&M management, and it satisfies the needs of casual users who aren't too concerned with having a high availability server.

At the same time, this type of architecture offers natural advantages in database migration, storage capacity scaling, scaling up compute nodes, and disaster recovery for computing nodes. At the root of each of these advantages is the separation of computing and storage nodes. Later we will go over how PolarDB utilizes the concept of separating computing and storage nodes.

MySQL high availability version

MySQL high availability version is targeted at enterprise level users and provides them with a high availability database with a 99.95% SLA guarantee. It uses the Active-Standby high availability framework in which the Master and Standby nodes use MySQL Binlog to execute data Replication. When the Master node suffers a crash, the Standby node takes over managing the service.

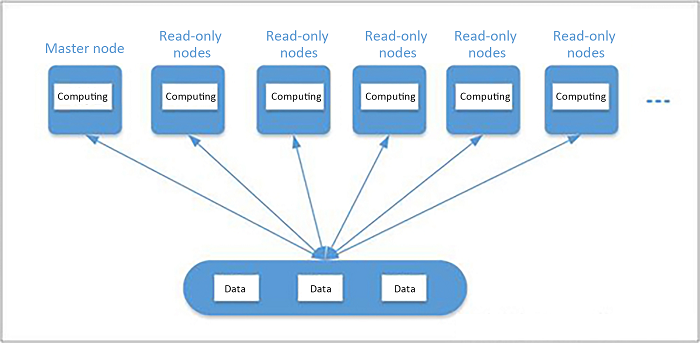

At the same time, it supports multiple read-only nodes, with read/write splitting and load balancing across them. Using the Shared-Nothing architecture, computing and data exist on the same node, ensuring performance to the furthest extent possible while providing reliability through multiple data replicas.

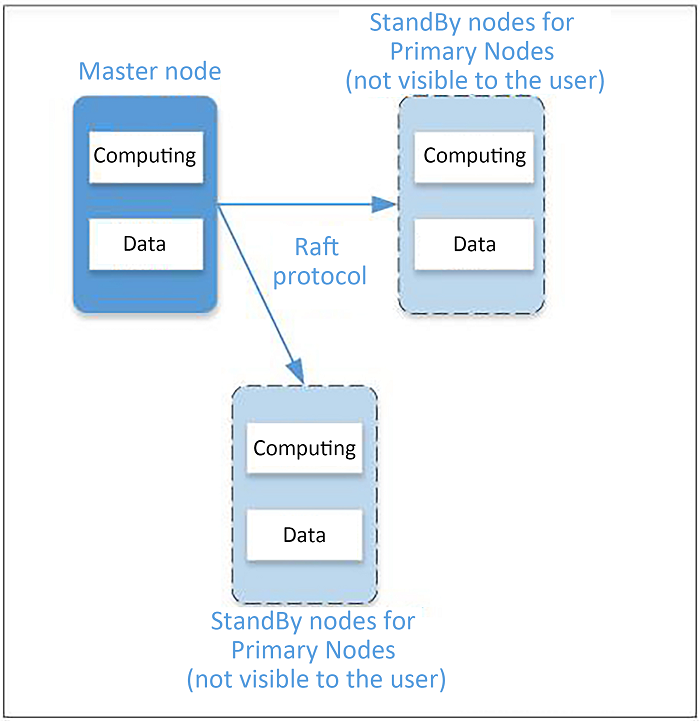
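The read/write splitting described above can be sketched as a small router. This is a simplified illustration, not the RDS proxy's actual logic: the class name, node names, and keyword-based classification are all assumptions, and a real proxy would also need to handle cases such as `SELECT ... FOR UPDATE`.

```python
import itertools


class ReadWriteRouter:
    """Route writes to the master and spread reads across read-only nodes (round-robin)."""

    READ_KEYWORDS = ("SELECT", "SHOW")  # deliberately naive classification

    def __init__(self, master, read_only_nodes):
        self.master = master
        self._read_only = itertools.cycle(read_only_nodes) if read_only_nodes else None

    def route(self, sql, in_transaction=False):
        """Return the name of the node that should execute this statement."""
        words = sql.split()
        first_word = words[0].upper() if words else ""
        is_read = first_word in self.READ_KEYWORDS
        # Reads inside a transaction stay on the master so they see the transaction's own writes.
        if is_read and not in_transaction and self._read_only is not None:
            return next(self._read_only)
        return self.master
```

With `router = ReadWriteRouter("master", ["ro-1", "ro-2"])`, successive plain `SELECT` statements alternate between `ro-1` and `ro-2`, while an `UPDATE`, or a `SELECT` issued with `in_transaction=True`, goes to `master`.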

MySQL financial version

It can be said that the MySQL financial version is targeted at high end users such as those in the financial industry. It features high availability, high reliability, and uses the distributed Raft protocol to ensure strong data consistency. It also features excellent crash recovery times to help satisfy business scenarios requiring excellent disaster tolerance.

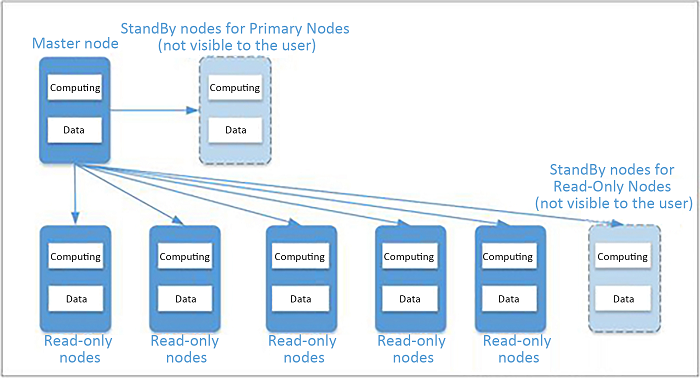

The PolarDB architecture separates computing and storage, and is capable of supporting multiple read-only nodes. The Master node and its read-only nodes use an Active-Active failover method which ensures the computing nodes are used to their full potential. And because it uses shared storage to share a single copy of data across nodes, user costs are lowered significantly. In this next section we will go into more detail on the key characteristics of PolarDB.

The design concept behind PolarDB features a few major revolutionary ideas. One is a newly designed file system tailored to specific WAL I/O such as the redo log. Another is using a high-speed network and a high-performance protocol to put database files and redo log files on shared devices, which avoids repeating the same operations along a single I/O path and is a smarter approach than Binlog-based methods.

Furthermore, in the design of DB Server, we use concepts that are completely MySQL compatible and embrace the open source ecosystem to preserve the characteristics of traditional relational databases, from the SQL compiler to the performance optimizer and execution plans. We also specially designed block storage devices to target and improve the redo log I/O path.

We know that distributed databases have always been a hot topic in the database field, but they are extremely difficult to pull off effectively. Whether they follow the CAP theorem or the concept of BASE, common distributed relational databases typically have difficulty finding a balance between technology and commercial viability. There isn't a commercial relational database in existence that can perfectly and cheaply meet all of the following requirements: compatibility with SQL and popular databases, 100% support for OLTP ACID transactions, 99.99% high availability, high-performance low-latency concurrent processing, flexible scaling, backup and disaster tolerance, and low-cost migration.

A common design philosophy shared between PolarDB and Amazon Aurora is to abandon the OLTP multi-path concurrent write support commonly found in distributed databases in favor of a single write, multiple read architecture design. They simplify the difficult to understand theoretical model of distributed systems and are both capable of satisfying the vast majority of OLTP application scenarios and performance requirements.

In summary, 100% MySQL compatibility combined with a dedicated proprietary file system and shared storage block devices and the multiple high tech applications described below make the next gen relational database PolarDB a sure winner in the cloud era.

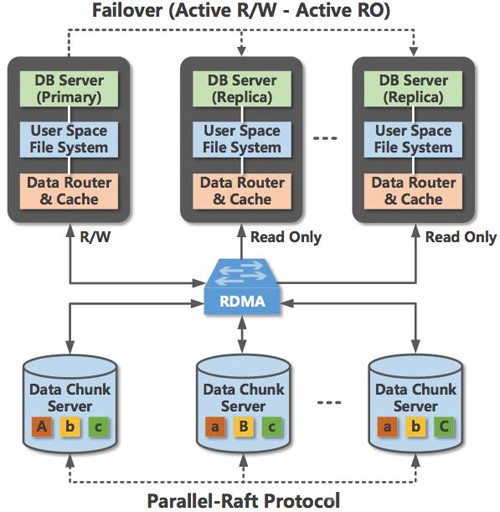

Having described the product forms of Alibaba Cloud RDS, let's move forward to look at the overall PolarDB product architecture. The figure outlines the main modules of the PolarDB product, including the database server, file system, and shared block storage.

PolarDB product architecture

As we can see in the figure, PolarDB features a distributed cluster architecture design. It combines multiple advanced technologies to significantly increase the OLTP processing performance of the database. PolarDB uses separated storage and computing design concepts to satisfy public cloud users' requirements for flexible scaling. The computing nodes and storage nodes for the database are connected with a high-speed network and use the RDMA protocol for data transmission, so that I/O performance is no longer the bottleneck.

The database nodes are designed to be completely compatible with MySQL. Master and read-only nodes use Active-Active Failover methods to provide high availability DB service. DB data files and the redo log are sent from the User-space file system, routed by block device data management, to remote Chunk Servers over a high-speed network running the RDMA protocol.

At the same time, DB Servers need only to synchronize Redo log metadata information between each other. Chunk Server data reliability is maintained with multiple copies, with consistency guaranteed by the Parallel-Raft protocol.

Now that we've gone over the PolarDB product architecture, let's examine the key technologies used by PolarDB in the aspects of distributed framework, database high availability, network protocol, storage block devices, file systems, and virtualization.

Shared disk architecture

The essence of a distributed system is the ability to separate and combine it freely. Sometimes data needs to be split apart to facilitate concurrent performance, and sometimes it has to be brought back together for the sake of data consistency. Furthermore it might need to synchronously wait because of a distributed lock.

PolarDB utilizes a Shared Disk architecture, primarily because of the computing and storage separation described above. Logically, DB data is placed on data chunk storage servers that all DB servers can share access to. Internally, on the storage servers, the actual data is cut into chunks so that I/O requests from multiple servers can be handled concurrently.
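To make the chunking idea concrete, here is a minimal sketch of how a byte range in a shared volume could be mapped onto chunks held by different storage servers. The chunk size, the server names, and the round-robin placement are all assumptions chosen for illustration. The source does not describe PolarDB's actual chunk size or placement policy.

```python
from dataclasses import dataclass

CHUNK_SIZE = 4 * 1024 * 1024  # hypothetical chunk size (4 MiB), for illustration only
CHUNK_SERVERS = ["chunk-server-0", "chunk-server-1", "chunk-server-2"]  # hypothetical names


@dataclass(frozen=True)
class ChunkLocation:
    chunk_index: int
    server: str
    offset_in_chunk: int


def locate(volume_offset):
    """Map a byte offset in the shared volume to the chunk that holds it."""
    if volume_offset < 0:
        raise ValueError("offset must be non-negative")
    chunk_index, offset_in_chunk = divmod(volume_offset, CHUNK_SIZE)
    # Round-robin placement is an assumption; a real system would consult placement metadata.
    server = CHUNK_SERVERS[chunk_index % len(CHUNK_SERVERS)]
    return ChunkLocation(chunk_index, server, offset_in_chunk)


def split_request(volume_offset, length):
    """Split one I/O request into per-chunk pieces that can be served concurrently."""
    pieces = []
    position, remaining = volume_offset, length
    while remaining > 0:
        location = locate(position)
        piece_length = min(remaining, CHUNK_SIZE - location.offset_in_chunk)
        pieces.append((location, piece_length))
        position += piece_length
        remaining -= piece_length
    return pieces
```

A request that straddles a chunk boundary, such as `split_request(CHUNK_SIZE - 10, 30)`, is split into a 10-byte piece on one server and a 20-byte piece on the next, and the two pieces can then be issued in parallel.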

Physical replication

We know that MySQL Binlog records row-level (tuple) data changes. The InnoDB engine layer, which must support ACID transactions, maintains its own redo log, which is based on changes to physical pages.

This means that MySQL transaction processing, by default, needs to call fsync() twice to persist its logs. This has a direct impact on system response times and throughput during transaction processing. Even though MySQL uses the Group Commit mechanism to increase throughput under high concurrency, it's still unable to completely eliminate the associated I/O bottleneck.
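The trade-off behind Group Commit can be shown with a small sketch that counts flushes to disk. It does not model MySQL's internals or its two logs; it only illustrates, under that simplification, how batching several transactions behind one `fsync()` reduces the number of expensive flushes. The class and function names are hypothetical.

```python
import os
import tempfile


class CountingLog:
    """Append-only log file that counts how many times it is flushed to disk."""

    def __init__(self, path):
        self._file = open(path, "ab")
        self.fsync_calls = 0

    def append(self, record):
        self._file.write(record + b"\n")

    def sync(self):
        self._file.flush()
        os.fsync(self._file.fileno())  # force the data to stable storage
        self.fsync_calls += 1

    def close(self):
        self._file.close()


def commit_individually(log, transactions):
    """Durably commit each transaction on its own: one fsync per transaction."""
    for txn in transactions:
        log.append(txn)
        log.sync()


def commit_in_groups(log, transactions, group_size):
    """Batch several transactions behind a single fsync (the idea behind group commit)."""
    for start in range(0, len(transactions), group_size):
        for txn in transactions[start:start + group_size]:
            log.append(txn)
        log.sync()


def run_demo():
    """Return (fsync count without grouping, fsync count with groups of 10)."""
    transactions = [f"txn-{i}".encode() for i in range(100)]
    with tempfile.TemporaryDirectory() as tmp:
        single = CountingLog(os.path.join(tmp, "single.log"))
        grouped = CountingLog(os.path.join(tmp, "grouped.log"))
        commit_individually(single, transactions)
        commit_in_groups(grouped, transactions, group_size=10)
        single.close()
        grouped.close()
        return single.fsync_calls, grouped.fsync_calls
```

`run_demo()` returns `(100, 10)`. Grouping cuts the number of flushes by the group size, but every group still waits for at least one flush, which is why the bottleneck is reduced rather than eliminated.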

Furthermore, because a single database instance has limited computing capability and network bandwidth, a classic solution is to create multiple read-only instances to share the read load and effectively scale out. PolarDB puts database files and Redolog on shared storage devices to easily solve the issue of data Replication between read-only and Master nodes.

Because of data sharing, adding a read-only node no longer requires making a full copy of the data. Since single copies of the data and Redo log are shared amongst all nodes, all we have to do is synchronize the metadata, support basic MVCC, and ensure that the read data is consistent. This means that when a Master node crashes and the system executes failover, the disaster tolerance time, that is the time that it takes to switch to a read-only node, is reduced to within 30 seconds.

This represents a hearty improvement to system availability. Data latency between the Master and read-only nodes can also be decreased to a matter of milliseconds.

From a concurrency perspective, if we use Binlog replication, our only option is to replicate in parallel at the table level. Physical replication, on the other hand, operates at the data page level. The finer the granularity, the more efficiently the system can apply changes concurrently.

The final point is that by using the redo log for replication, we can turn off Binlog to reduce the impact on performance, unless we need Binlog for logical disaster tolerance backups or data migrations.
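The benefit of page-level granularity can be sketched as follows: redo records are partitioned by page, each page's records are applied in log order, and different pages are replayed in parallel. The record format here is a simplified assumption in which a single value stands in for a physical page change. It is not InnoDB's or PolarDB's actual redo format.

```python
from collections import defaultdict
from concurrent.futures import ThreadPoolExecutor

# Hypothetical redo records: (lsn, page_id, new_content).
REDO_LOG = [
    (1, "page-A", "a1"),
    (2, "page-B", "b1"),
    (3, "page-A", "a2"),
    (4, "page-C", "c1"),
    (5, "page-B", "b2"),
]


def group_by_page(records):
    """Partition the log by page, preserving LSN order within each page."""
    per_page = defaultdict(list)
    for lsn, page_id, content in sorted(records):
        per_page[page_id].append((lsn, content))
    return per_page


def replay_page(page_records):
    """Apply one page's records in order; return (last applied LSN, final content)."""
    last_lsn, content = 0, None
    for lsn, new_content in page_records:
        last_lsn, content = lsn, new_content
    return last_lsn, content


def parallel_replay(records, workers=4):
    """Replay different pages concurrently; order only matters within a page."""
    per_page = group_by_page(records)
    with ThreadPoolExecutor(max_workers=workers) as pool:
        futures = {page: pool.submit(replay_page, recs) for page, recs in per_page.items()}
        return {page: future.result() for page, future in futures.items()}
```

`parallel_replay(REDO_LOG)` yields `{"page-A": (3, "a2"), "page-B": (5, "b2"), "page-C": (4, "c1")}`. With table-level parallelism, all five records would have been serialized if the three pages belonged to the same table.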

In summary, on the I/O path, usually the lower the layer the easier it is to decouple the business logic and state on the upper layer to reduce the complexity of the system. This kind of WAL Redo log large file read and write I/O method is extremely applicable to concurrency mechanisms in distributed file systems, providing benefits to concurrent read/write performance on PolarDB.

RDMA protocol under high speed networks

RDMA is a technical method that's been used for years in the field of HPC, and has recently seen increased use in cloud computing, proving a theory of mine. In the cloud computing 2.0 era, we are on the verge of redefining the public understanding of the cloud. The cloud is even capable of exceeding traditional IT technology, making industrial implementation more and more rigorous.

RDMA requires a network device that supports high speed network connections (like a switch, NIC, etc.). It passes through a specific programming interface to communicate with the NIC driver, and then it usually applies Zero-Copy technology to implement high performance low latency transmission from the NIC to the remote application memory, instead of copying data from kernel to application which causes CPU interruptions. This significantly reduces performance jitters and increases the overall system processing capability.

Snapshot physical backup

Snapshots are a popular backup method based on block storage devices. In essence, a snapshot uses a Copy-On-Write mechanism that records metadata changes on the block device: data is copied at write time, and the contents of the write operation are sent to the block device used by the new replica. This allows us to later restore the database to the point in time of any stored snapshot.

Snapshot is a classic deferred-processing mechanism based on points in time and the write load. When we create a snapshot, the data itself isn't copied at that moment. Instead, the work of backing up the data is spread across the time window after the snapshot is created, as writes actually arrive. This allows us to quickly create and restore data backups. PolarDB provides mechanisms based on snapshots and the redo log to restore user data to a point in time, which is more efficient than a traditional restore that combines a full data copy with Binlog incremental data.
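The copy-on-write idea can be sketched with a toy block device. This is one common variant, in which the old content of a block is preserved the first time that block is overwritten after a snapshot. The source does not say which variant PolarDB's storage layer uses, and the class below is purely illustrative.

```python
class BlockDevice:
    """Toy block device with copy-on-write snapshots (an illustrative model only)."""

    def __init__(self, num_blocks):
        self.blocks = [b""] * num_blocks
        # snapshot name -> {block index: content of that block when the snapshot was taken}
        self.snapshots = {}

    def create_snapshot(self, name):
        # Creating a snapshot copies no data; it only starts tracking overwritten blocks.
        self.snapshots[name] = {}

    def write(self, index, data):
        # On the first overwrite of a block after a snapshot, preserve the old content.
        for saved in self.snapshots.values():
            if index not in saved:
                saved[index] = self.blocks[index]
        self.blocks[index] = data

    def snapshot_view(self, name):
        """Reconstruct the full device image as it was when the snapshot was taken."""
        saved = self.snapshots[name]
        return [saved.get(index, current) for index, current in enumerate(self.blocks)]
```

Creating a snapshot is immediate because nothing is copied up front. The cost is paid later, one block at a time, only for blocks that are actually overwritten, which matches the deferred behavior described above.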

Parallel-Raft algorithm

Speaking of transactional consistency in distributed databases, it's natural to think of the 2PC (Two-Phase Commit) and 3PC (Three-Phase Commit) protocols. And talking about data status consensus, we can't avoid mentioning the Paxos protocol invented by Leslie Lamport. Paxos has become one of the most popular consensus algorithms after being implemented by Google and a number of other Internet companies in their distributed systems. However, both the theory behind Paxos and its implementation are quite complicated, making it difficult to apply quickly in engineering practice.

One of the problems solved by Paxos is ensuring that machines in a cluster that start from the same initial state reach the same state by applying the same sequence of commands, forming consistent, convergent state machines. Another is that cluster members need a protocol, based on exchanging messages, that always remains valid, so that when the data state changes on one machine, the entire cluster (including other machines) becomes aware of the change through the protocol and acknowledges it.

Based on these two points, the protocol has basically solved the issue of machines with different roles in a distributed cluster framework reaching consensus. With another step of progress, we can further design a framework for the vast majority of distributed systems.

It can be said that Paxos is essentially a P2P design, but with added abstraction that makes it even harder to understand. Raft, on the other hand, is responsible for choosing a Leader, and then going through the Leader to update the rest of the roles and reach a status consensus. Overall this makes the system easier to understand. The implementation process of the protocol itself is quite similar to that of Paxos.

Parallel-Raft is a consensus algorithm based on Raft that makes a number of optimizations targeting the I/O model of the PolarDB Chunk Server. Raft assumes a continuous log: if log#n hasn't been committed, later log entries can't be committed either. Parallel-Raft, as implemented by PolarDB, allows parallel commits, breaking Raft's assumption that the log is continuous. This increases concurrency, while additional restrictions ensure consensus.
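To give a feel for what such an additional restriction might look like, here is a sketch that compares a strict in-order rule with a relaxed rule. It rests on an assumption that is not stated in this article: that each log entry carries a summary of the blocks written by the entries before it, so a node can tell whether an entry beyond a hole in the log conflicts with what is missing. All names below are hypothetical, and this is not PolarDB's actual algorithm.

```python
from dataclasses import dataclass, field


@dataclass
class LogEntry:
    index: int
    blocks: frozenset  # block numbers this entry writes
    # Hypothetical summary of earlier entries: {log index: blocks that entry writes}.
    preceding: dict = field(default_factory=dict)


def appliable_in_order(received, applied_up_to):
    """Strict rule: only the next contiguous entries may be applied."""
    result = []
    next_index = applied_up_to + 1
    while next_index in received:
        result.append(next_index)
        next_index += 1
    return result


def appliable_out_of_order(received, applied_up_to):
    """Relaxed rule: skip over a hole when no pending earlier entry touches the same blocks."""
    result = []
    waiting = {}  # index -> blocks of received entries that must still wait
    last = max(received, default=applied_up_to)
    for index in range(applied_up_to + 1, last + 1):
        entry = received.get(index)
        if entry is None:
            continue  # a hole in the log
        conflict = False
        for earlier in range(applied_up_to + 1, index):
            if earlier in received:
                # Entries already cleared for applying no longer block later ones.
                footprint = waiting.get(earlier, frozenset())
            else:
                # Missing entry: rely on the summary; None means unknown, so wait.
                footprint = entry.preceding.get(earlier)
            if footprint is None or not entry.blocks.isdisjoint(footprint):
                conflict = True
                break
        if conflict:
            waiting[index] = entry.blocks
        else:
            result.append(index)
    return result
```

Suppose entry 1 is applied, entry 2 (writing block 20) is missing, and entries 3, 4, and 5 (writing blocks 10, 20, and 10) have arrived. The strict rule can apply nothing. The relaxed rule can apply entries 3 and 5, and holds back only entry 4, which overlaps with the missing entry.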

Docker

The earliest appearance of container virtualization technology was in the Linux kernel, where it was used to migrate processes between operating systems, or while a process was running, by decoupling the process from the operating system. This allowed it to save, copy, or restore contexts and states while a process was running. It was LXC (Linux Containers), however, that led to the birth of Docker, which has since become a smash hit.

In theory, container virtualization is lighter than other virtualization technologies like KVM. If a user doesn't need to be aware of all of the functions in the operating system, they can use container virtualization to achieve a better ratio of computing power to efficiency.

Actually, LXC combined with Cgroup virtualization technology and a method of resource separation has been in use for years, particularly for checkpoint and restart recovery in MPI super tasks. PolarDB uses a Docker environment to run the DB computing nodes. It utilizes a light virtualization method to achieve resource and performance isolation, saving no small amount of system resources in the process.

User-Space file system

Speaking of file systems, it's impossible not to mention the POSIX semantics defined by IEEE (POSIX.1 has already been adopted by ISO), just as you can't help but bring up SQL standards in a conversation about databases. The biggest challenge in implementing most distributed file systems is supporting highly concurrent file reads and writes on the foundation of POSIX standards.

However, POSIX compatibility requires us to sacrifice a certain amount of performance if we are to support the standard in its entirety. It also makes implementation of the entire system much more complicated. In the end, it's a matter of the classic problem of choosing between a common solution and a proprietary design, finding the balance between ease of use and performance.

Distributed file systems are among the most enduring technologies in the IT industry. Beginning in the HPC era, through to the cloud computing era, the internet era, and the data era, more and more new solutions have been put forward. Actually, it would be more accurate to say that these solutions are targeted at and customized for different application I/O scenarios. To put it simply though, it just means that POSIX standards are no longer being supported.

At this point there's almost no choice but to retreat from the POSIX protocol, though to be fair, if a solution only serves a certain I/O scenario and doesn't support POSIX, it's not a major problem. This is similar to the development from SQL to NoSQL. In order to support a POSIX file system, we need to implement reading and writing that is compatible with standard operating system call interfaces, so that users don't have to change programs designed to work with POSIX interfaces. That in turn means using the Linux VFS layer as the hook that attaches the actual file system to the kernel. This is also one of the reasons behind the huge increase in difficulty of file system engineering.

For distributed file systems, kernel modules must exchange data with a User-Space Daemon, which implements data sharding and sends data to other machines. The User-Space file system provides users with a special API and has no need to be fully compatible with POSIX, nor does it need a 1:1 mapping onto operating system kernel calls. All we need to do is implement metadata management and data read/write access in user space. This greatly reduces complexity and lends itself better to communication between processes on a distributed file system.

Looking at the above introduction, it's apparent that PolarDB is a collection of hot technologies across different fields like computing virtualization, high speed networking, storage block devices, distributed file systems, and physical database replication. It is exactly this melding of technologies and innovations that makes the performance of PolarDB absolutely take off.

Source: 51CTO
