By Xunfei and Yemo, from Alibaba Cloud Storage Team

On June 29, 2022, Alibaba Cloud released the first major update to iLogtail since the project was open-sourced: the full-featured iLogtail Community Edition, with all of its C++ core code open-sourced. For the first time, this version is aligned with the Enterprise Edition in terms of core capabilities, so developers can build an iLogtail cloud-native observability data collector with the same performance as the Enterprise Edition. The release adds many important features, such as log file collection, container file collection, lock-free event processing, multi-tenant isolation, and a new pipeline-based configuration method, which comprehensively improve the usability and performance of the Community Edition. Developers are welcome to join us in building it.

Observability is the ability to measure the internal state of a system by examining its outputs. The term originated in control theory decades ago and was first proposed by the Hungarian-born engineer Rudolf Kalman. In distributed IT systems, observability typically relies on various types of telemetry data (logs, metrics, and traces) collected from infrastructure, platforms, and applications to understand their operating status and processes. This data is usually collected by an agent that runs alongside the observed objects. In modern cloud-native and microservice architectures, these objects are more distributed, more numerous, and change faster than before, so collection agents face the following challenges:

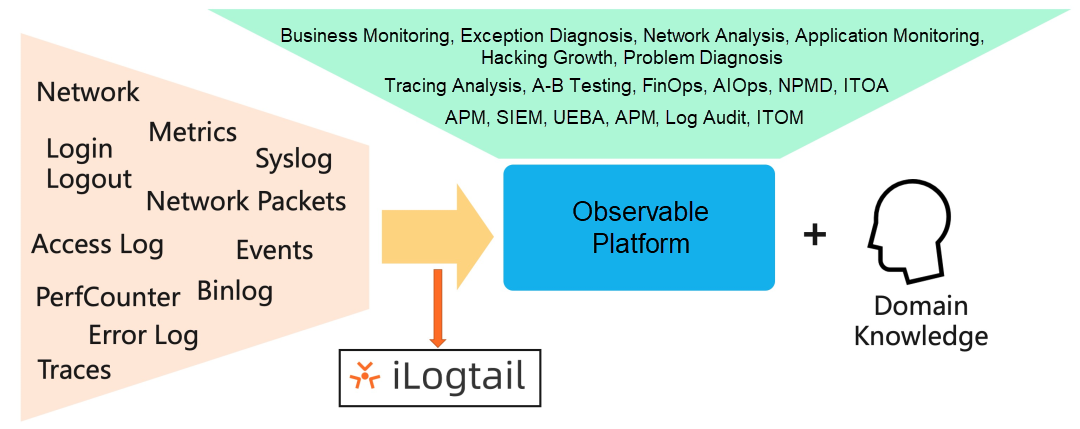

We chose to develop iLogtail because no existing open-source agent fully solved these challenges. iLogtail is positioned as a collector of observability data: it helps developers build a unified data collection layer for their business and helps observability platforms build upper-layer application scenarios.

iLogtail is an observability data collection agent developed by the Alibaba Cloud Log Service (SLS) team. It offers many production-grade features, such as a lightweight footprint, high performance, and automated configuration, and it can be deployed in multiple environments (physical machines, virtual machines, and Kubernetes) to collect telemetry data. iLogtail serves tens of thousands of Alibaba Cloud customers for observability data collection from hosts and containers. It is also the default collection tool for logs, metrics, traces, and other observability data in Alibaba Group's core product lines, such as Taobao, Tmall, Alipay, Cainiao, and Amap. iLogtail has been installed on tens of millions of servers and collects tens of petabytes of observability data per day. It is widely used in scenarios such as online monitoring, problem analysis and troubleshooting, operation analysis, and security analysis, and its performance and stability have been proven in practice.

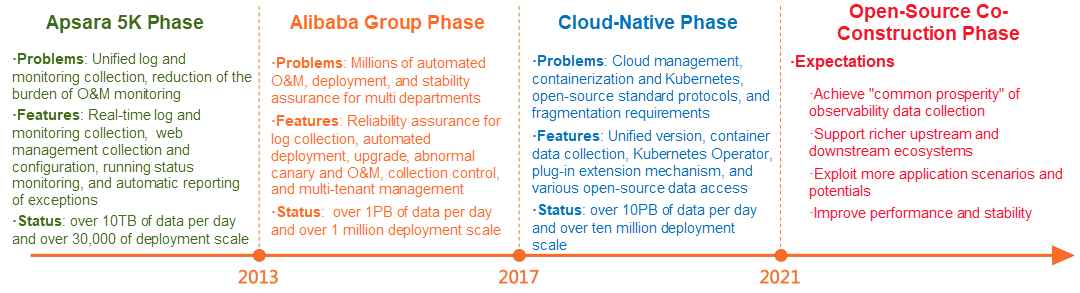

iLogtail originated from Alibaba Cloud's Shennong project. It was created in 2013 and has been evolving ever since. In the early days, driven by the O&M and observability requirements of Alibaba Cloud and its early customers, iLogtail mainly addressed O&M and monitoring challenges ranging from single machines and small clusters to large-scale deployments. At that time, iLogtail already had basic file discovery and rotation handling, which enabled real-time collection of logs and monitoring data with millisecond-level latency. Single-core processing speed was about 10 MB/s. A web frontend supported automatic distribution of centrally managed configurations. iLogtail supported a deployment scale of more than 30,000, thousands of collection configurations, and efficient collection of 10 TB of data per day.

In 2015, Alibaba began migrating the services of Alibaba Group and Ant Financial to the cloud. Facing super-large data volumes from nearly 1,000 teams, millions of terminals, and the Double 11 and Double 12 shopping festivals, iLogtail needed major improvements in features, performance, stability, and multi-tenant support. By around 2017, iLogtail could parse logs in multiple formats, such as regex, delimiter, and JSON, supported multiple log encodings, and offered advanced processing capabilities such as data filtering and desensitization. Single-core processing speed in simple mode increased to 100 MB/s, and parsing speed for regex, delimiter, and JSON reached 20 MB/s or more. For collection reliability, a polling mode for file discovery was added, the order of the rotation queue was guaranteed, protection against data loss during log cleanup was provided, and the checkpoint mechanism was enhanced. For process reliability, automatic recovery from exceptions, automatic crash reporting, and a daemon process were added. Through end-to-end multi-tenant isolation, multi-level high/low watermark queues, configuration-level and process-level flow control, and temporary degradation mechanisms, iLogtail supported a deployment scale of over 1 million, thousands of tenants, and over 100,000 collection configurations, stably collecting several petabytes of data per day.

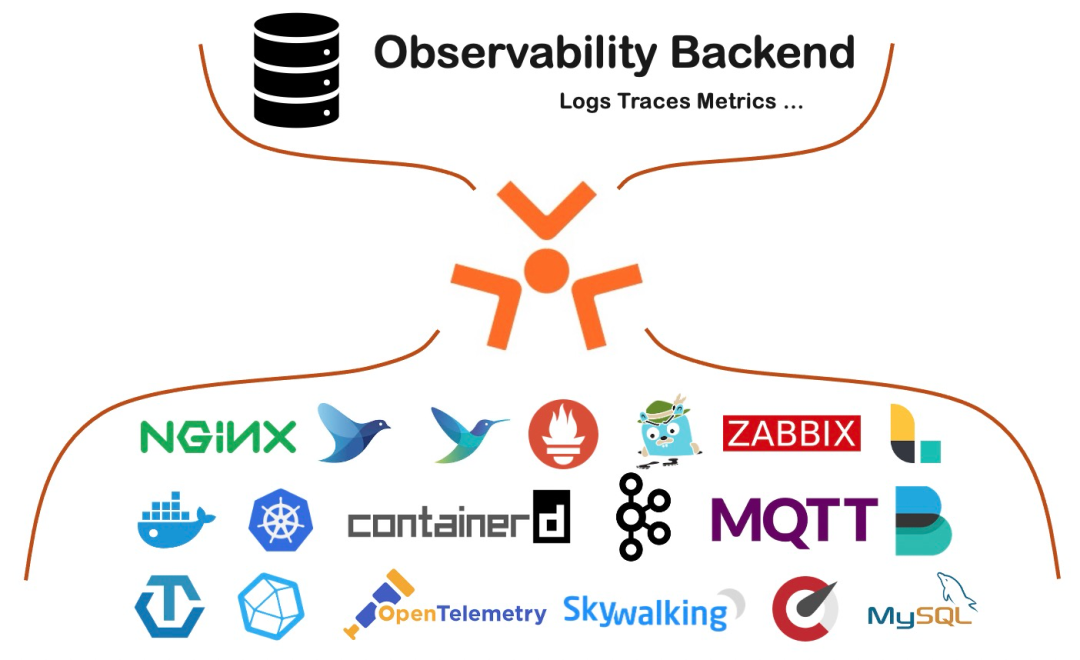

As Alibaba migrated its core business to the cloud and commercialized SLS (the Alibaba Cloud service that iLogtail belongs to), iLogtail began to embrace cloud-native across the board. Facing diverse cloud environments, a rapidly developing open-source ecosystem, and a large number of industry customers, iLogtail shifted its development focus to adapting to cloud-native environments, staying compatible with open-source protocols, and handling fragmented requirements. In 2018, iLogtail added support for Docker container collection; in 2019, containerd container collection; in 2020, a full upgrade of metric collection; and in 2021, support for traces. With comprehensive support for containerization, Kubernetes Operator-based management, and an extensible plug-in system, iLogtail now supports a deployment scale of over ten million, tens of thousands of internal and external customers, and millions of collection configurations, stably collecting tens of petabytes of data per day.

In November 2021, the Golang plug-in code of iLogtail was open-sourced, iLogtail's first step toward open source. Since then, it has attracted the attention of hundreds of developers, many of whom have contributed processor and flusher plug-ins. Today, the C++ core code is officially open-source as well, so developers can build a complete cloud-native observability data collection solution based on this version.

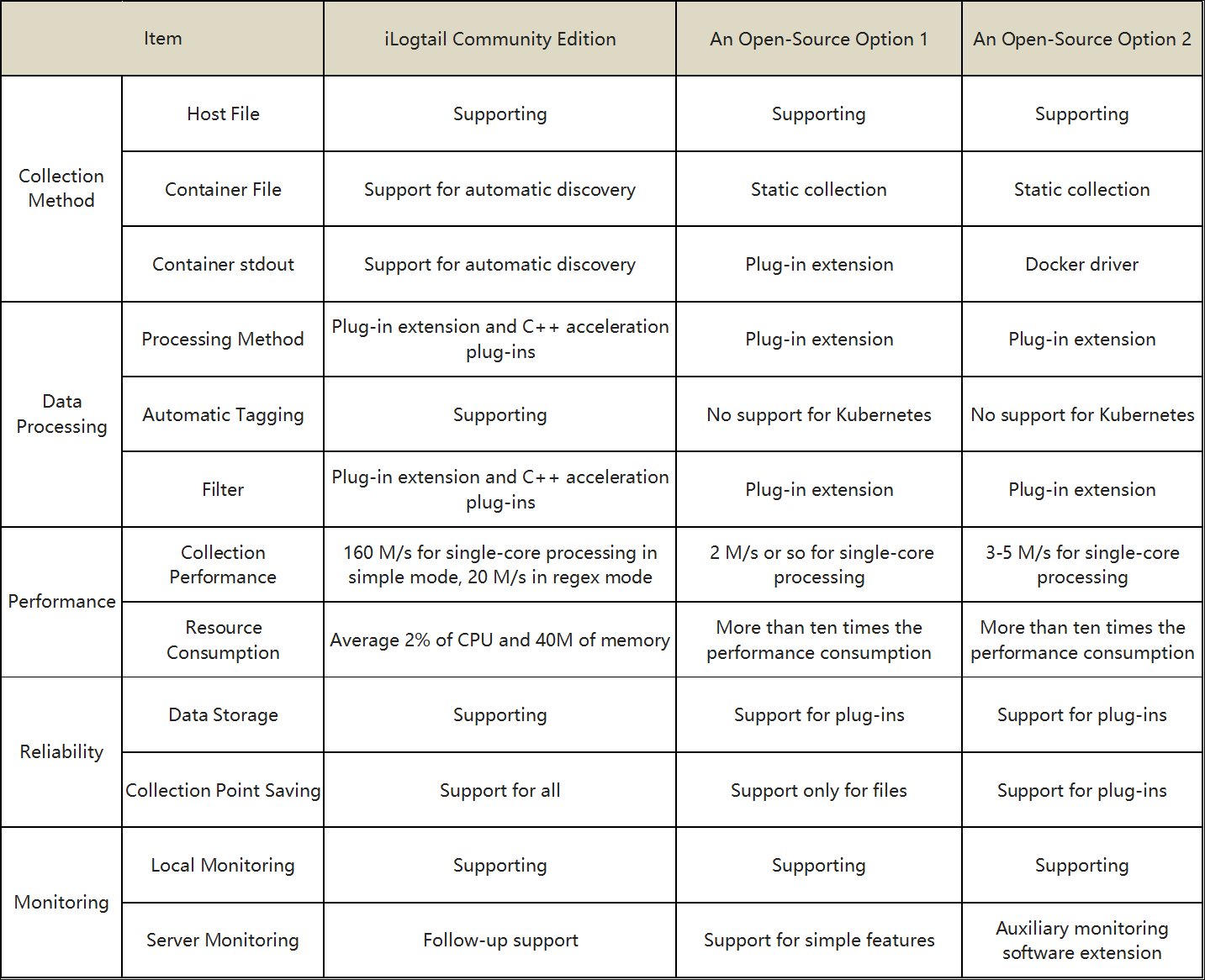

There are many open-source collectors for observability data, such as Logstash, Fluentd, and Filebeat. They offer a wide range of features, but thanks to its unique design, iLogtail has advantages in key areas such as performance, stability, and control capabilities.

In this release, the open-source iLogtail C++ kernel is fully aligned with the features of the Enterprise Edition, and a simple pipeline-based collection configuration format is added to improve the usability of the Community Edition.

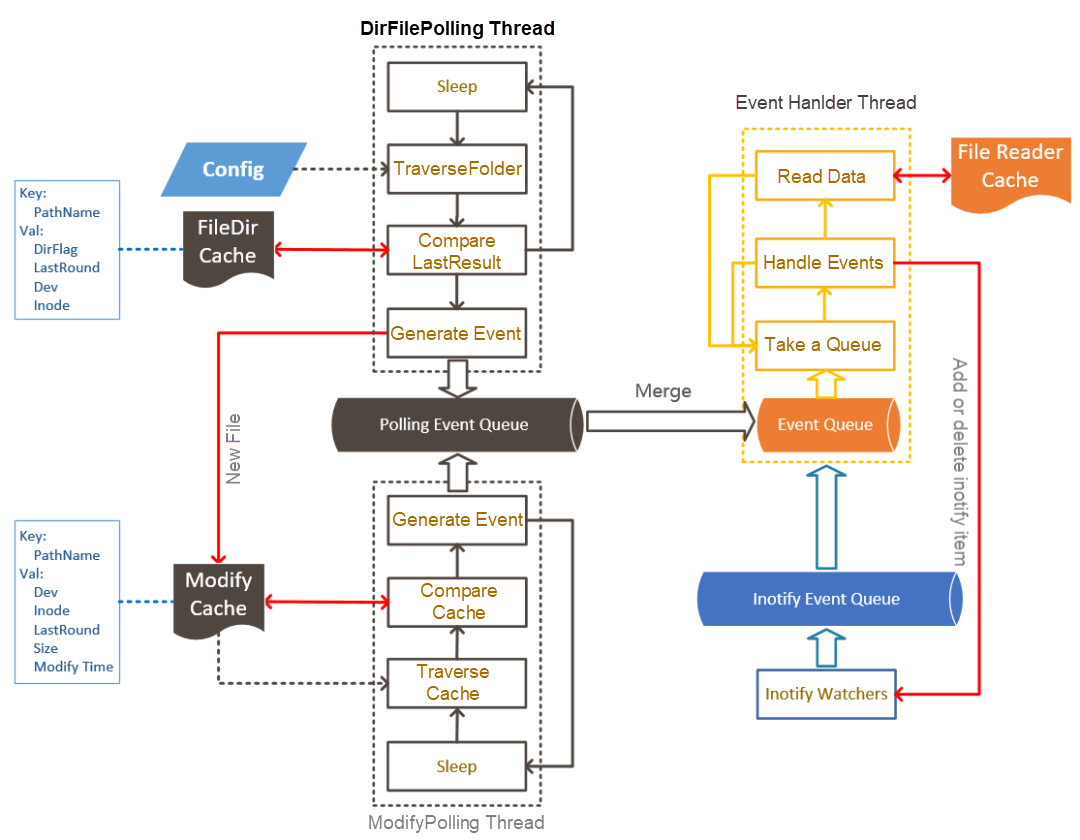

The C++ core contains a fully functional file discovery mechanism. It supports dynamic monitoring of log files matched by wildcards across multi-level directories and handles log rotation, including log counts and rotation settings. On Linux, iLogtail uses inotify as its main file monitoring method, which discovers new data with millisecond-level latency. It also uses polling as a data discovery method to adapt to different operating systems and to support various special collection scenarios. By combining event-driven and polling modes, iLogtail achieves file discovery that is both fast and robust.
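The hybrid discovery idea can be illustrated with a small Python sketch. It is not iLogtail's C++ code: the class and method names are hypothetical, the inotify event source itself is left out (its callback is represented by `on_event`), and polling is a plain glob scan over a fixed number of directory levels. Both paths funnel into one `_observe` method, so a file reported by both is handled only once, and a path whose inode changes is treated as a rotated log.

```python
import fnmatch
import glob
import os


class HybridFileDiscovery:
    """Hypothetical sketch: merge event-driven and polling-based file discovery."""

    def __init__(self, base_dir, file_pattern, max_depth=2):
        self.base_dir = base_dir
        self.file_pattern = file_pattern  # wildcard, e.g. "*.log"
        self.max_depth = max_depth        # how many directory levels to scan
        self.known = {}                   # path -> inode last seen at that path

    def _observe(self, path):
        """Shared by both paths. Returns "new", "rotated", or None."""
        name_ok = fnmatch.fnmatch(os.path.basename(path), self.file_pattern)
        if not name_ok or not os.path.isfile(path):
            return None
        inode = os.stat(path).st_ino
        previous = self.known.get(path)
        self.known[path] = inode
        if previous is None:
            return "new"
        if previous != inode:
            return "rotated"  # same name, different file: the log was rotated
        return None           # already known: no duplicate report

    def on_event(self, path):
        """Fast path: would be called by an event source such as inotify."""
        return self._observe(path)

    def poll(self):
        """Slow path: scan base_dir and up to max_depth subdirectory levels."""
        found = []
        for depth in range(self.max_depth + 1):
            parts = [self.base_dir] + ["*"] * depth + [self.file_pattern]
            for path in sorted(glob.glob(os.path.join(*parts))):
                status = self._observe(path)
                if status:
                    found.append((path, status))
        return found
```

Polling here acts as a safety net: anything the event path already reported is silently skipped, while files the event source missed (or a rename-and-recreate rotation) are still picked up on the next scan.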

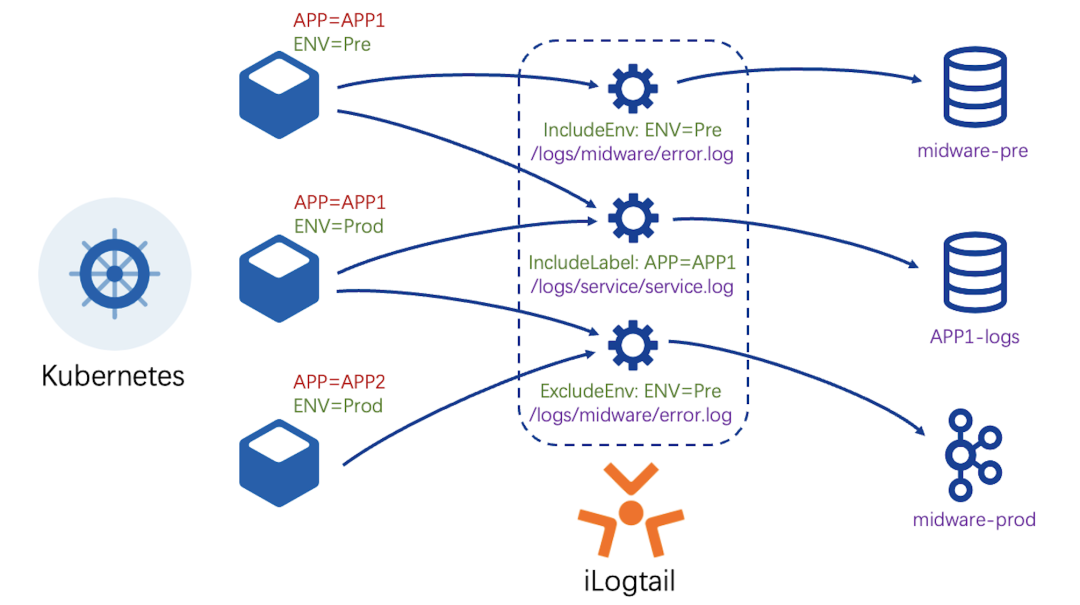

The iLogtail C++ kernel works with the plug-in system to support container data collection in all scenarios. iLogtail uses plug-ins to discover the containers on a node and to maintain the mapping between containers and log collection paths, and it relies on the efficient file collection of the C++ kernel to deliver an excellent container data collection experience. iLogtail can filter containers by container labels, environment variables, Kubernetes labels, pod names, and namespaces, which makes it easy to configure collection sources. It supports multiple deployment methods, such as DaemonSet, Sidecar, and CRD, to fit different scenarios. Because iLogtail obtains container information from a global container list and the Kubernetes CRI protocol, it needs fewer permissions and component dependencies and collects data more efficiently than other open-source agents.
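To make the filtering rules concrete, here is a minimal Python sketch of container selection. The `Container` and `ContainerFilter` types and their field names are hypothetical and do not correspond to iLogtail's configuration keys; the sketch assumes container metadata has already been fetched (for example, through the CRI) and requires every configured rule to pass.

```python
import re
from dataclasses import dataclass, field


@dataclass
class Container:
    """Hypothetical container metadata, as a runtime/CRI lookup might return it."""
    name: str
    labels: dict = field(default_factory=dict)      # container labels
    env: dict = field(default_factory=dict)         # environment variables
    k8s_labels: dict = field(default_factory=dict)  # pod labels
    pod_name: str = ""
    namespace: str = ""


@dataclass
class ContainerFilter:
    """Hypothetical filter: every non-empty rule must pass for a container to match."""
    include_labels: dict = field(default_factory=dict)  # key -> required value
    exclude_labels: dict = field(default_factory=dict)  # key -> value that excludes
    include_env: dict = field(default_factory=dict)
    include_k8s_labels: dict = field(default_factory=dict)
    pod_name_regex: str = ""
    namespace_regex: str = ""

    def matches(self, c: Container) -> bool:
        # Exclusion wins over everything else.
        if any(c.labels.get(k) == v for k, v in self.exclude_labels.items()):
            return False
        if any(c.labels.get(k) != v for k, v in self.include_labels.items()):
            return False
        if any(c.env.get(k) != v for k, v in self.include_env.items()):
            return False
        if any(c.k8s_labels.get(k) != v for k, v in self.include_k8s_labels.items()):
            return False
        # Regex rules must match the whole pod name / namespace.
        if self.pod_name_regex and not re.fullmatch(self.pod_name_regex, c.pod_name):
            return False
        if self.namespace_regex and not re.fullmatch(self.namespace_regex, c.namespace):
            return False
        return True
```

An empty filter matches every container, so a configuration only narrows the set as rules are added.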

One secret behind iLogtail's high throughput is its lock-free event processing model. Unlike other open-source agents that allocate an independent thread or goroutine to read data for each configuration, iLogtail uses a single thread to read data for all configurations. Because the bottleneck in data reading is disk I/O rather than computation, a single thread is enough to handle event processing and data reading for every configuration. With only one thread, event processing and data reading run without locks and the data structures stay lightweight, so iLogtail performs better than a multi-threaded design.
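The following Python sketch illustrates the single-reader idea under simplifying assumptions: discovery events for all configurations land in one queue, and one loop drains it and reads new bytes from each file. The names are hypothetical. Because only this loop touches the offset table and the event queue, they are plain, unsynchronized structures; in a real agent, events would come from a watcher such as inotify rather than explicit `push_event` calls.

```python
from collections import deque


class SingleReaderLoop:
    """Hypothetical sketch: one thread handles events and reads for ALL configs.

    Only this loop ever touches `pending` and `offsets`, so they need no locks.
    """

    def __init__(self):
        self.pending = deque()  # (config_name, path) events awaiting a read
        self.offsets = {}       # path -> bytes already consumed (a simple checkpoint)

    def push_event(self, config_name, path):
        self.pending.append((config_name, path))

    def run_once(self, max_events=100):
        """Process up to max_events queued events; return [(config, line), ...]."""
        out = []
        for _ in range(min(max_events, len(self.pending))):
            config_name, path = self.pending.popleft()
            offset = self.offsets.get(path, 0)
            with open(path, "rb") as f:
                f.seek(offset)
                data = f.read()
            # Consume only complete lines; a trailing partial line is re-read later.
            end = data.rfind(b"\n") + 1
            self.offsets[path] = offset + end
            for line in data[:end].splitlines():
                out.append((config_name, line.decode("utf-8", "replace")))
        return out
```

Only complete lines are consumed, so a partially written line stays in the file until its newline arrives and is picked up by a later event.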

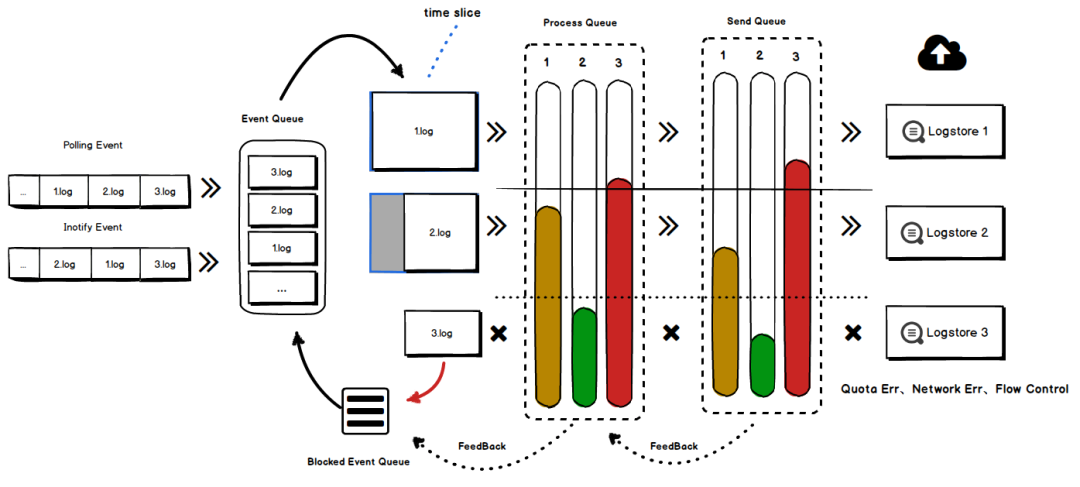

It is common for a production server to have hundreds of collection configurations, each of which may differ in priority, log generation speed, processing method, and upload destination. iLogtail therefore has to isolate these custom configurations from one another and ensure that an abnormal configuration does not degrade the collection QoS of the others. iLogtail combines several key techniques, including time slice-based collection scheduling, multi-level high/low watermark feedback queues, non-blocking event processing, throttling and shutdown policies, and dynamic configuration updates, to achieve a multi-tenant isolation scheme that provides isolation, fairness, reliability, controllability, and cost-effectiveness. Years of Double 11 peak traffic have shown that this solution offers better stability and cost-effectiveness than other open-source agents.
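Two of the techniques named above, time slice-based scheduling and high/low watermark feedback, can be modeled in a few lines. This Python sketch is a toy model with hypothetical names, not iLogtail's scheduler: each round, every configuration may read at most one slice of data, and a configuration whose send queue reaches the high watermark is paused until the sender drains it to the low watermark.

```python
from dataclasses import dataclass


@dataclass
class ConfigState:
    """Hypothetical per-configuration state for the scheduler sketch."""
    name: str
    backlog: int         # bytes waiting to be read from this config's files
    queue_len: int = 0   # batches waiting in this config's send queue
    paused: bool = False


class FairScheduler:
    """Toy model: per-round time slices plus high/low watermark feedback."""

    def __init__(self, configs, slice_bytes, high_watermark, low_watermark):
        self.configs = list(configs)
        self.slice_bytes = slice_bytes
        self.high = high_watermark
        self.low = low_watermark

    def run_round(self):
        """Give each config at most one slice; return {name: bytes read}."""
        read = {}
        for cfg in self.configs:
            # Resume only after the queue has drained to the low watermark.
            if cfg.paused and cfg.queue_len <= self.low:
                cfg.paused = False
            if cfg.paused or cfg.backlog == 0:
                read[cfg.name] = 0
                continue
            n = min(self.slice_bytes, cfg.backlog)  # a noisy config cannot take more
            cfg.backlog -= n
            cfg.queue_len += 1                      # one batch enqueued for sending
            if cfg.queue_len >= self.high:
                cfg.paused = True
            read[cfg.name] = n
        return read

    def on_sent(self, cfg, batches=1):
        """Feedback from the sender: batches have left the send queue."""
        cfg.queue_len = max(0, cfg.queue_len - batches)
```

The gap between the two watermarks provides hysteresis, so a configuration near the limit does not flip between paused and running on every round, and a slow destination for one configuration only pauses that configuration.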

Simple, intuitive configuration files are crucial to an agent's usability. In the early days, iLogtail relied almost entirely on graphical configuration, and its default JSON configuration files were verbose and hard to understand. This upgrade adopts YAML, which is more readable, needs less string escaping, and supports multi-line text and comments. Based on the latest iLogtail data pipeline architecture, the configuration file is divided into four parts: inputs, processors, aggregators, and flushers. Configuration now focuses on features rather than implementation details, and configuration item names follow a unified convention, which makes iLogtail easier to configure. This is an example of the simplest configuration:

```yaml
enable: true
inputs:
  - Type: file_log
    LogPath: /log
    FilePattern: simple.log
flushers:
  - Type: flusher_stdout
```

In November 2021, we open-sourced the Golang plug-in code, the most versatile and extensible part of iLogtail, and received a lot of attention and suggestions from developers: the project has gained over 600 stars, more than 60 issues, and over 120 pull requests. The C++ kernel is the main reason iLogtail outperforms other open-source collectors in performance and resource usage. We hope the open-source C++ kernel will help more enterprises improve resource efficiency, enrich the iLogtail ecosystem, and attract more developers to the community.

We believe open source is the best way for iLogtail to deliver its greatest value in the cloud-native era. As foundational software in the observability field, iLogtail still has many scenarios to explore across industries. We hope to build iLogtail together with the open-source community, keep optimizing it, and make it a world-class observability data collector.