By Yang Yongzhou, nicknamed Chang Ling.

In this three-part article series, we've been taking a deep dive into the inner-workings of Alibaba's big logistics affiliate, Cainiao, and take a look at the "hows" and "whys" behind its logistics data platform.

In this third part of this three-part series, we're going to continue our discussion about the ark architecture and Cainiao's elastic scheduling system, covering a variety of topics. To be more specific, we will be covering the topics of the policies involved with the architecture as well as the selection between queries-per-second and response time. Then, we will also be taking a look at the topics of threshold comparison and multi-service voting, downstream analysis, and several other miscellaneous topics that are helpful in understanding the overall system architecture.

Want to read on? Have you read the previous articles in this series? Why Cainiao's Special and How Its Elastic Scheduling System Works and The Architecture behind Cainiao's Elastic Scheduling System.

To continue our discussion on the ark architecture that is behind the elastic scheduling system of Cainiao, in this article we're first going to take a look at some of the policies involved in this architecture.

The elastic scheduling system of Ark supports the quick horizontal extension of decision-making policies. Currently, multiple decision-making policies are included, and some policies are being tested or verified. The following introduces several of the earliest core policies that went online.

Resource security policies focus on the usage of system resources. Based on the past O&M experience and the business dynamics of Cainiao, our resource security policies focus on three system parameters, which are CPU, LOAD1, and Process Running Queue.

When the average value of one or more of these parameters on all servers in an application cluster in a recent time window violates the upper threshold to eliminate the impact of glitches and uneven traffic, a scale-out request is initiated. In this case, custom configuration is supported. If multiple violations exist, the decision-making result of the current policy is the one with the largest number of containers to be scaled out. The method of calculating the quantity will be described later in this article.

The above graphs show resource usage at the time when a resource security policy obtains the scale-out result.

Resource optimization policies also focus on the usage of system resources, but they are intended to reclaim resources when the system is idle. These policies also focus on the preceding three system parameters. When all the three parameters are below the lower threshold, a scale-in request is initiated. Note that due to the existence of the second layer of decision-making, when the other decision-making layers require scale-out, scale-in requests generated by resource optimization policies are suppressed.

The above graphs show the resource usage at the time when a resource optimization policy obtains the scale-in result.

The current elastic decision-making model is posterior in nature. That is, a scale-out request is initiated only when a threshold violation occurs. For some services, such as regular computing tasks, traffic may surge on a regular basis.

Therefore, automatic policy configuration is based on the historical traffic changes of an application cluster. When the traffic changes are determined to be periodic changes and are too big and when the posteriori elasticity cannot keep up with the changes in time, a time policy configuration is generated for this application cluster. That is, the number of containers in this application cluster is maintained above a specified number during a specified period of time every day.

Due to the existence of the second layer of decision-making, when other policies determine that the number of containers required in this period of time is greater than the specified number of containers, the larger number prevails. In such a period of time, decision-making results for scale-out are continuously generated based on time policies, and therefore decision-making results for scale-in are continuously suppressed.

During the continuous generation of decision-making results for scale-out, if the current number of containers is equal to the target number of containers, a scale-out decision is generated. In this case, the number of containers to be scaled out is 0.

Service security policies are the most complex among all policies. Currently, services include the Consumer in the message queue, Remote Procedure Call (RPC) service, and Hyper Text Transfer Protocol (HTTP) service. At least half of the scale-out tasks each day are initiated by service security policies.

Next, there's the important question of the selection between queries-per-second (or QPS) and response time (RT). Given the relatively complexity of this topic, we've decided to make this its own section. Many elastic scheduling systems consider QPS as the most important factor.

However, after preliminary thought and discussion, we decided to use RT instead of QPS due to the following reasons: QPS is a service variable. Its change is subject to your actual usage. You can determine whether the QPS is reasonable for a service at the current moment. However, for the system, if it can bear the load and the service response rate and service RT are within a reasonable range, any QPS value is reasonable. In other words, the current total QPS can be used as the basis for judging the service health level, but cannot be used as the basis for judging the system health level in the narrow sense. In this case, note that the maximum QPS that a single server can bear is different from the current QPS. Service RT and the service response rate are the most fundamental characteristics of a service's performance.

The current number of containers is obtained based on the current QPS and the maximum QPS that a single server can bear. However, this method is not valid unless resources are completely isolated and the resources used by each query are almost equal. For the applications of Cainiao, the following applies:

A data query service and a service that involves the operations of a specific service provided by a team may emerge in the same application cluster. In such case, the total QPS becomes meaningless, and it is impossible to obtain the relationship between a single request and resources for different services.

During comprehensive stress testing, containers on the same physical machine often compete with one another for homogeneous resources. This results in a difference between the actual online run-time environment and the environment of the single-server performance stress testing. As a result, the referential significance of data obtained from the single-server performance stress testing is doubtful.

However, single-server performance stress testing cannot keep up with every change promptly, and therefore timeliness cannot be guaranteed.

Automatic threshold configuration for RT of massive services was introduced in a preceding example and will not be described further in this section.

However, it needs to be noted that such threshold setting provides mathematically reasonable thresholds, which need to be modified with other methods. Therefore, we introduce another assumption that an RT threshold violation caused by insufficient resources can affect all the services in an application cluster. This is because the resources used by services in this application cluster are not isolated but uses the same system environment. Still, being affected does not mean all services violate the threshold. The resource types on which different services depend are different, and the services also vary in their tolerance for resource shortages.

In view of this, we use a multi-service voting mode to determine RT. The threshold of each service is compared separately. A scale-out decision is made only when services with RT threshold violations occupy a certain proportion of total services, the number of containers determined by service security policies to be scaled out is decided by the service that has the highest degree of threshold violation, which is in percentage terms.

According to the actual operating situation, incorrect and excessive scale-out occurs a lot when the principle that containers are scaled out when the RT of a service violates the threshold is used. After the implementation of the multi-service voting mode, incorrect and excessive scale-out has been almost eliminated. Actually, this mode is often used when whether scale-out is incorrect or excessive is manually determined. In this case, when the RT of multiple services simultaneously becomes high, scale-out is considered as reasonable. Additionally, the more services an application cluster provides, the better this mode performs.

Scale-out is not conducted for all RT threshold violations. If the RT rises due to the middleware or downstream services on which a service depends, scale-out cannot solve the problem and may even exacerbate it. An example of this is if the database thread pool becomes full. Therefore, during computing, when a service security policy finds that the RT of a service violates its own threshold, the service security policy will first check whether the RT of the middleware and the RT of downstream services on which this service depends violate their respective SLAs. This service participates in the voting on scale-out only when it violates its own threshold but the corresponding middleware and downstream services do not violate their thresholds. When an offline computing task calculates the SLA of a service based on historical data, it also calculates the thresholds of the middleware and downstream services on which this service depends. Among the historical data, data on the link structure derives from the offline data of EagleEye.

An elastic scheduling system needs to ensure that scaling is timely enough, while also having enough tolerance for glitches. Otherwise, incorrect scale-out or scale-in may occur. Glitches are common in the actual environment. Problems with the environment, such as uneven traffic and jitters, can result in glitches, and potential anomalies and bugs in the monitoring and statistical system may also cause glitches.

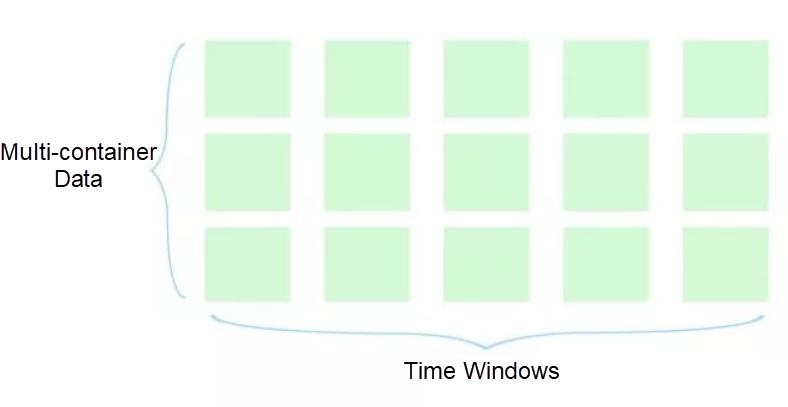

To prevent glitches, the elastic scheduling system of Ark takes time window data as the computing source when performing any calculation, and the data of each period of time covers all containers in an application cluster.

For each required metric, the maximum and minimum values in a time window are removed during computing, and the average value is obtained to offset the influence caused by glitches. Besides, the size of a time window greatly affects the elimination of glitches and the timeliness of the elimination. Currently, 5-minute and 10-minute time windows are available for the elastic scheduling system of Ark. For policies that may result in scale-out, the smaller time window is selected, whereas for policies that may result in scale-in, the larger time window is selected. We want scale-out to be more aggressive than scale-in.

Determining the number of containers to be scaled out or in is the second step after a scaling decision is made. Let's first take a look at scale-in. The speed of scale-in is faster than that of scale-out, and scale-in does not affect stability. Therefore, the elastic scheduling system of Ark scales in containers steadily and promptly. When a scale-in decision is made, the fixed number of containers to be scaled in is 10% of the current number of containers in an application cluster.

Determining the number of containers to be scaled out is much more complicated than determining the number of containers to be scaled in. Take quantitative decision-making, the most common threshold comparison policy, as an example. First, we define a "maximum" principle that the number of containers to be scaled out every time cannot be higher than 50% of the current number of containers in an application cluster. Within this range, the higher the degree of threshold violation is, the more containers are scaled out. Here, we introduce the sigmoid function. Through certain conversion, the computing result of this function is limited between 0 and 0.5. The degree of threshold violation is considered as an input parameter and put into the sigmoid function, and then the percentage of containers to be scaled out is obtained. During the process, some parameters are adjusted.

Sigmoid is a curve that changes between 0 and 1, and is often used in computing for data normalization. In the equation that f (x) is sigmoid (x) minus 0.5, when x is greater than 0, the range of sigmoid is between 0 and 0.5.

For data like CPU usage, the range of sigmoid is at most 100. However, there is no upper limit for data like service RT. Obviously, using the same formula can result in the CPU-triggered number of containers to be scaled out being smaller than that triggered by service RT. In such cases, we only need to set a goal. For example, when the CPU usage reaches 80% (that is, the degree of threshold violation is calculated by dividing 80% minus a threshold by a threshold), we want the percentage of containers to be scaled out to be close to 50% of the current number of containers in an application cluster. Based on this goal, the inverse operation of f (x) produces coefficient a. This coefficient is put into the computation of the number of containers to be scaled out for reaching the target CPU usage, and then the expected result is obtained.

The capacity of a link is considered to be elastic when all the application clusters along this link have been connected to the elastic scheduling system.

Ark provides a complete suite of solutions for resource management by combining its container scheme and elastic scheduling. The container scheme allows all application owners to request capacity during the specified promotional periods involving stress testing and big promotions. When application clusters connected to the elastic scheduling system of Ark enter the specified promotional periods, the scaling mode of this system will be immediately switched to the manual verification mode. Moreover, the number of containers in each application cluster will be scaled out to the number of containers that you requested through the container scheme. When the promotional periods end, the number of containers in an application cluster not connected to the elastic scheduling system will be directly scaled in to its original number of containers, whereas an application cluster connected to the elastic scheduling system will slowly reclaim resources through an elastic scheduling policy.

During the promotional periods, although the elastic scheduling system does not automatically scale out or scale in containers, the decision-making for scaling is still underway. The elastic scheduling system continuously sends suggestions on scale-out or scale-in to the administrator or your decision-making team for big promotions. In this way, it promptly sends alerts and provides a suggested number of containers to be scaled out when the traffic exceeds expectation and resources are insufficient. Moreover, it helps the administrator to reclaim some resources promptly and to make scale-in decisions.

Using elastic scheduling to push forward the evolution of services has been our permanent goal. For us, only this can enable a truly closed loop of services. This impetus is based on data analysis. Currently, the elastic scheduling system of Ark produces the following types of data:

This reflects the degree of correlation between service RT and the usage of system resources like CPUs. A higher correlation indicates that service usage is more sensitive to resources and that elastic scheduling brings more significant benefits.

This reflects service RT threshold violations caused by middleware RT threshold violations. The increase in service RT directly affects service availability. Therefore, when this metric is too high, we recommend that you check and optimize the usage of middleware.

This reflects service RT threshold violations caused by RT threshold violations of downstream services. When this metric is too high, we recommend that the current application promote the update of downstream services in stability or promote the elasticity of downstream services.

A scale-out task is not considered successful until the corresponding application instance is started and can provide services normally for users. The less time it takes to start an application, the more quickly protection is provided when traffic peaks occur.

We have found that the service RT of many applications connected to the elastic scheduling system is within the normal range and that their configured rate limits are unexpectedly triggered when the usage of system resources is extremely low. In this case, we recommend that applications conduct reasonable service analysis and testing to adjust their rate limits.

2,593 posts | 789 followers

FollowAlibaba Clouder - January 2, 2020

Alibaba Clouder - January 2, 2020

Alibaba Clouder - May 13, 2021

Alibaba Clouder - December 13, 2019

Alibaba Clouder - January 22, 2020

Data Geek - November 11, 2024

2,593 posts | 789 followers

Follow ApsaraDB for MyBase

ApsaraDB for MyBase

ApsaraDB Dedicated Cluster provided by Alibaba Cloud is a dedicated service for managing databases on the cloud.

Learn More Security Center

Security Center

A unified security management system that identifies, analyzes, and notifies you of security threats in real time

Learn More Server Load Balancer

Server Load Balancer

Respond to sudden traffic spikes and minimize response time with Server Load Balancer

Learn More Architecture and Structure Design

Architecture and Structure Design

Customized infrastructure to ensure high availability, scalability and high-performance

Learn MoreMore Posts by Alibaba Clouder