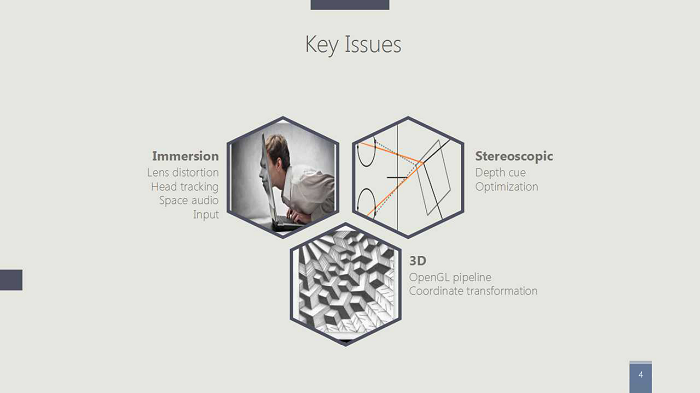

The wonderful new world of Virtual Reality is exciting; there is no denying that. However, for a VR newbie looking to explore the technical side of the technology, lifting the hood off the VR engine can be an instant headache. VR developers need not only a strong knowledge of computer graphics but also familiarity with technologies such as gyroscopes and filters. With so much complexity, it may be difficult for a newcomer to know where to start. This article breaks down the three main components of VR: immersion, stereoscopy and 3D.

The ultimate mission of VR is to let users interact and have experiences in a virtual environment just as they would in the real one. To achieve this, it is first imperative to block the external world's inputs to the user. The best way to do this is to cover the user's eyes with a device such as a box, so that the external surroundings are no longer visible and the user stays completely within the virtual image environment.

However, this approach has its own drawbacks. Human eyes cannot focus on an object that is too close, so lenses are required to see the screen clearly. Lenses bring their own challenges, such as the magnifier effect and chromatic dispersion, but they allow the eyes to focus on objects that are very close. Immersion means that users feel the same in the virtual environment as in the real one: when they move their head, they should be unable to tell the two environments apart. Hence, it is important to track head movements accurately.

The second major issue is stereoscopy, and spatial cues matter beyond vision. For example, if a user hears a loud sound in their left ear, they will turn to see what happened. In the same way, the virtual environment should be able to imitate various input sources to reproduce the interactions of the real environment.

Stereoscopy and 3D are two different things. 3D relies on monocular vision, the common approach in traditional games, whereas stereoscopy adds a sense of depth, as you can experience in a 3D cinema or in wiggle 3D images. But before we get into that, let's take a step back and dive into the concept of 'immersion'.

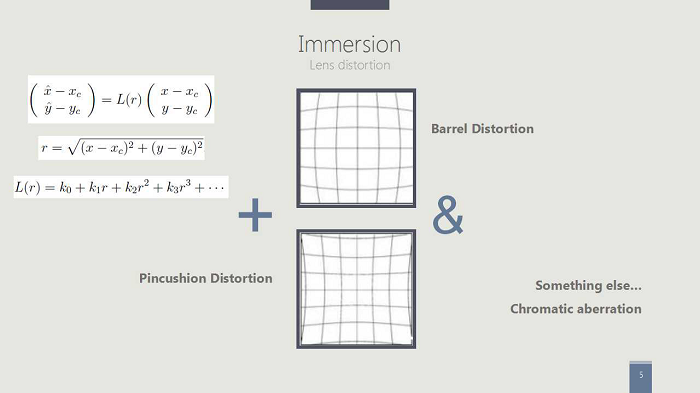

Through the lenses of VR glasses, straight lines appear bent inward toward the center (pincushion distortion). The rendered image must therefore first undergo a counter-distortion, a barrel distortion that bends the lines the opposite way. When the pre-distorted image is seen through the lenses, the two distortions cancel out and the lines appear straight again.
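One common way to model such a counter-distortion is a radial polynomial: a point at distance r from the lens center is moved to r·(1 + k1·r² + k2·r⁴). With negative coefficients, points far from the center are pulled inward more than points near it, which is a barrel distortion. The sketch below assumes that model; the coefficients `K1` and `K2` are hypothetical placeholders, since real values depend on the specific lens and must be calibrated.

```python
# Hypothetical coefficients; real values must be calibrated for a specific lens.
K1 = -0.22
K2 = -0.02


def barrel_distort(x: float, y: float, k1: float = K1, k2: float = K2) -> tuple[float, float]:
    """Move a point (lens-centered, normalized coordinates) radially.

    With negative k1/k2, points far from the center are pulled inward more
    strongly than points near it, producing a barrel-shaped image that the
    lens's pincushion distortion is meant to cancel out.
    """
    r2 = x * x + y * y
    scale = 1.0 + k1 * r2 + k2 * r2 * r2
    return x * scale, y * scale


# A straight vertical line at x = 0.8 bends: its ends move further inward than its middle.
for y in (-0.8, 0.0, 0.8):
    print(y, barrel_distort(0.8, y))
```

The center of the image is left untouched, and the correction grows with distance from the center, which mirrors how the lens distortion itself grows toward the edges.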

Apart from this, lenses also introduce chromatic dispersion, because they refract different colors by slightly different amounts. Dispersion correction is often skipped, since it costs performance and the dispersion is not very noticeable in general VR usage.
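One way to correct dispersion is to apply the same radial distortion to each color channel with a slightly different scale, so that red, green and blue line up again after passing through the lens. The sketch below assumes that approach; the per-channel scale factors and the coefficient `k1` are hypothetical. It also shows where the performance cost comes from: each output pixel now needs three texture lookups instead of one.

```python
# Hypothetical per-channel scales; real values come from lens calibration.
CHANNEL_SCALE = {"red": 0.996, "green": 1.0, "blue": 1.014}


def channel_sample_coords(x: float, y: float, k1: float = -0.22) -> dict[str, tuple[float, float]]:
    """Return the distorted coordinates for each color channel of one pixel.

    The radial distortion is shared; each channel is then scaled slightly
    differently to compensate for the lens bending each wavelength differently.
    """
    r2 = x * x + y * y
    base = 1.0 + k1 * r2
    return {name: (x * base * s, y * base * s) for name, s in CHANNEL_SCALE.items()}


# Three coordinates (and so three texture lookups) per pixel instead of one.
print(channel_sample_coords(0.5, 0.5))
```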

Moving forward, let us discuss head tracking. Most smartphones contain a built-in IMU (inertial measurement unit) that senses motion along three axes, so under certain circumstances they can estimate the user's current head orientation. One major problem is that the sensor data can be very noisy because of hardware differences and varying operating frequencies, so a curve plotted from the data looks jagged. These serrations must be smoothed out. Smoothing is the most crucial problem in head tracking, so filters are essential; frequently used ones include the Kalman filter and the complementary filter.
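To show what smoothing does to a jagged signal, here is a minimal exponential moving average (a first-order low-pass filter). This is deliberately simpler than the Kalman or complementary filters named above and is only an illustration; the smoothing factor `alpha` is an assumed value.

```python
def low_pass(samples: list[float], alpha: float = 0.2) -> list[float]:
    """Exponential moving average: each output moves a fraction alpha toward the new sample."""
    if not samples:
        return []
    smoothed = [samples[0]]
    for value in samples[1:]:
        smoothed.append(smoothed[-1] + alpha * (value - smoothed[-1]))
    return smoothed


# A jagged signal: a true value of 1.0 with +/-0.5 of alternating noise.
noisy = [1.0 + (0.5 if i % 2 == 0 else -0.5) for i in range(50)]
smooth = low_pass(noisy)
print(max(noisy) - min(noisy), max(smooth[10:]) - min(smooth[10:]))
```

A smaller `alpha` smooths more but reacts more slowly to real head movement, which shows up as latency; that trade-off is one reason VR systems use more elaborate filters.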

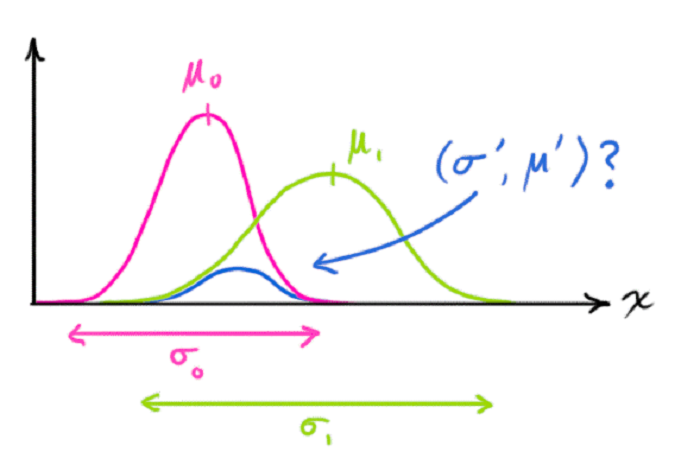

Mobile phones have three important sensors: a geomagnetic sensor, an acceleration sensor (accelerometer) and a gyroscope. In principle, a phone can deduce its rotational orientation from the geomagnetic and acceleration sensors alone, while the gyroscope can measure the current rotation independently. However, the two results, each of which can be viewed as a Gaussian distribution, are not equally credible. By combining the two Gaussian distributions, we can calculate a comparatively reliable value; this is an application of filters in VR head tracking.
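Combining (multiplying) two Gaussian estimates of the same angle gives another Gaussian whose mean is a variance-weighted average and whose variance is smaller than either input; this is the core update step of a one-dimensional Kalman filter. The sketch below assumes each estimate is a single angle with a known variance; the numbers are illustrative, not real sensor specifications.

```python
def fuse_gaussians(mean_a: float, var_a: float, mean_b: float, var_b: float) -> tuple[float, float]:
    """Combine two independent Gaussian estimates of the same quantity.

    The less noisy estimate (smaller variance) gets more weight, and the
    fused variance is always smaller than either input variance.
    """
    mean = (mean_a * var_b + mean_b * var_a) / (var_a + var_b)
    var = (var_a * var_b) / (var_a + var_b)
    return mean, var


# Illustrative values: gyroscope estimate 30 deg (variance 1),
# accelerometer/geomagnetic estimate 34 deg (variance 4).
print(fuse_gaussians(30.0, 1.0, 34.0, 4.0))
```

The fused angle lands closer to the more trustworthy gyroscope estimate, and its variance is lower than that of either sensor alone.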

Take the complementary filter as an example. The angular velocity measured by the gyroscope is reliable only for a short period, because the orientation has to be obtained by integration: the angular velocity is repeatedly multiplied by the time step and accumulated. If every measurement has a minor deviation, the accumulated deviation keeps growing as time goes by. Accelerometer readings, on the other hand, are reliable over the long term but not over the short term. Thus, the gyroscope, reliable only in the short term, is used for fine-grained measurement, while the accelerometer corrects the general long-term trend.
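A minimal single-axis sketch of this idea: integrate the gyroscope rate for the short-term estimate, and blend in a small fraction of the accelerometer-derived angle to cancel long-term drift. The blend factor `alpha`, the 100 Hz sample rate and the simulated gyroscope bias are assumptions chosen for illustration.

```python
def complementary_filter(gyro_rates: list[float], accel_angles: list[float],
                         dt: float, alpha: float = 0.98,
                         initial_angle: float = 0.0) -> list[float]:
    """Estimate an angle (degrees) from gyro rates (deg/s) and accelerometer angles (deg).

    alpha close to 1 trusts the integrated gyroscope in the short term;
    the remaining (1 - alpha) pulls the estimate toward the accelerometer,
    which corrects drift over the long term.
    """
    angle = initial_angle
    history = []
    for rate, accel_angle in zip(gyro_rates, accel_angles):
        angle = alpha * (angle + rate * dt) + (1.0 - alpha) * accel_angle
        history.append(angle)
    return history


# Simulated head held still at 10 degrees for 10 s at 100 Hz.
# The gyroscope has a constant 0.5 deg/s bias; the accelerometer is unbiased.
dt = 0.01
n = 1000
gyro = [0.5] * n
accel = [10.0] * n
gyro_only = 10.0 + sum(rate * dt for rate in gyro)  # pure integration drifts to 15 deg
fused = complementary_filter(gyro, accel, dt, initial_angle=10.0)
print(round(gyro_only, 2), round(fused[-1], 2))
```

Pure integration drifts by 5 degrees over the 10 seconds, while the filtered estimate settles within a fraction of a degree of the true angle.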

To understand stereoscopy, one needs to understand how people perceive depth. Depth perception from monocular vision is something the brain has learned to infer, so binocular vision is necessary for true depth perception. Depth cues include perspective projection, the level of image detail, occlusion, lighting and shadows, and relative motion rates. Nevertheless, the most important cue is binocular disparity.

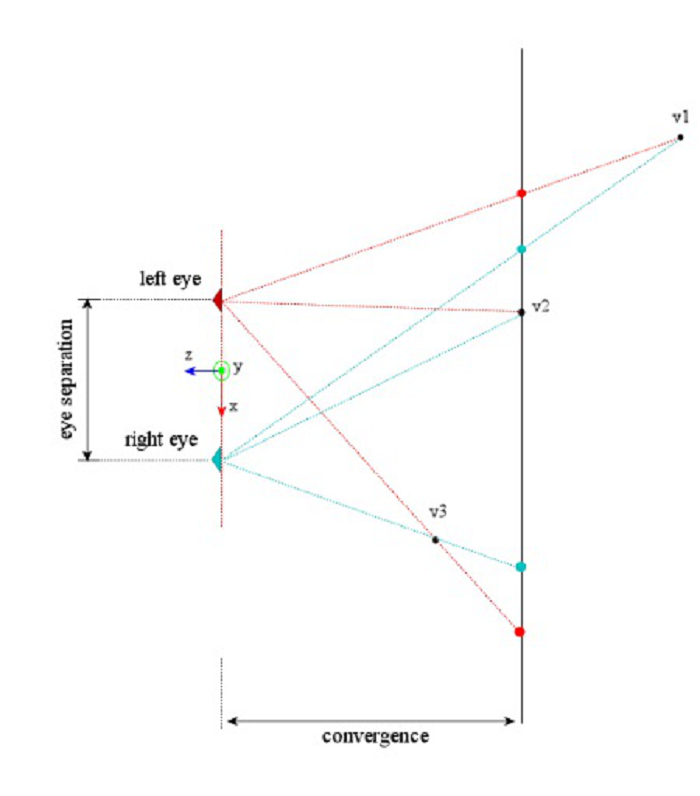

The optical parallax is calculated as follows.

As shown in the figure above, the lines of sight of the left and right eyes are parallel to each other and perpendicular to the projection plane, which is the vertical plane in front of the eyes. Binocular disparity can be positive, zero or negative. When Point V1 is projected toward the two eyes, it produces two projected points on the projection plane, one for each eye. For V1, each eye's projected point lies on the same side as that eye (the left eye's point on the left, the right eye's point on the right), which is positive parallax. V2 lies on the projection plane itself, so its two projected points coincide and the parallax is zero. If a point lies in front of the projection plane (out of the screen), the projections cross over and the parallax becomes negative. Therefore, an object with positive parallax appears to be behind the screen, an object with negative parallax appears to be in front of the screen, and an object with zero parallax appears to be on the screen.
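Using similar triangles, each eye's projection of a point onto the projection plane can be computed directly, which makes the sign convention concrete. The sketch assumes the eyes sit at x = ±separation/2 looking along +z, the projection plane is at distance `convergence`, and parallax is defined as the right-eye x minus the left-eye x; all names and the 1 m plane distance are illustrative.

```python
def project_to_plane(eye_x: float, point_x: float, point_z: float, convergence: float) -> float:
    """Intersect the ray from the eye (eye_x, 0) to the point (point_x, point_z) with the plane z = convergence."""
    return eye_x + (point_x - eye_x) * convergence / point_z


def parallax(point_x: float, point_z: float, separation: float, convergence: float) -> float:
    """Right-eye projection minus left-eye projection: >0 behind the plane, 0 on it, <0 in front."""
    left = project_to_plane(-separation / 2, point_x, point_z, convergence)
    right = project_to_plane(separation / 2, point_x, point_z, convergence)
    return right - left


SEPARATION = 0.065  # interpupillary distance in meters
CONVERGENCE = 1.0   # assumed distance of the projection plane in meters

for depth in (0.5, 1.0, 2.0):  # in front of, on, and behind the plane
    print(depth, parallax(0.0, depth, SEPARATION, CONVERGENCE))
```

A point behind the plane gets positive parallax, a point on the plane gets zero, and a point in front of it gets negative parallax, matching the V1/V2 cases described above.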

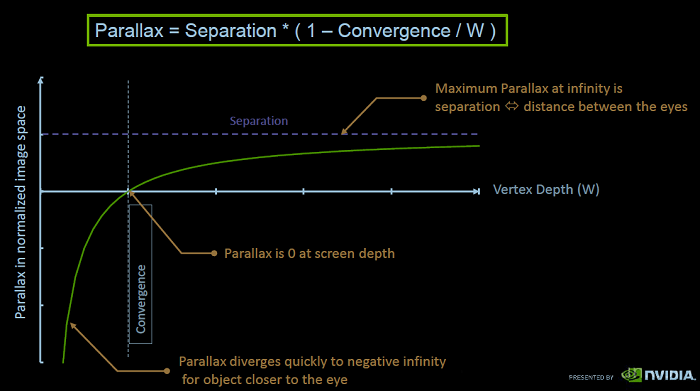

The curve shown in the image above covers the positive, zero and negative parallax cases discussed. Parallax increases with vertex depth, but ever more slowly: as the distance approaches infinity, the rate of change approaches zero. As a result, people perceive depth less distinctly for faraway objects, while depth perception becomes relatively obvious as an object comes closer. In addition, NVIDIA does not recommend using negative parallax. Although projecting objects out of the screen may look attractive, the eyes may have trouble focusing on such close objects, and the resulting strain can cause eye muscle tension and vertigo. Some documents also suggest that negative parallax should never bring an object closer than half of the convergence distance.

In the parallax formula, Separation is the interpupillary distance, typically about 65 mm; Convergence is the distance from the eyes to the convergence (projection) plane; and W is the depth of the vertex. The parallax of each vertex is calculated from its depth and applied to its left-eye and right-eye projections.
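Written out, a commonly cited form of this formula (it appears, for example, in NVIDIA's stereoscopic 3D guidance) is shown below. It also follows from similar triangles with the eyes at ±Separation/2 and the projection plane at distance Convergence. Treat it as a reconstruction, since the article's own formula is not given in the text:

$$
\text{Parallax} = \text{Separation} \times \left(1 - \frac{\text{Convergence}}{W}\right)
$$

Reading it off: at W = Convergence the parallax is 0 (the vertex lies on the projection plane); for W > Convergence it is positive and approaches Separation as W grows toward infinity, which is the flattening curve described above; for W < Convergence it is negative and reaches -Separation at W = Convergence / 2.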

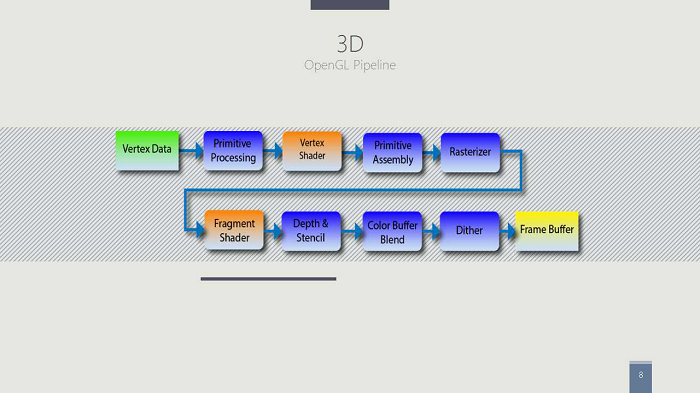

In the OpenGL pipeline, vertex data first enters the Primitive Processing stage. The Vertex Shader then transforms each vertex, and the transformed vertices are assembled into graphic primitives such as triangles, line segments or points. Once primitive assembly is complete, the areas covered by the primitives are rasterized into fragments, and the Fragment Shader colors every fragment. Next, each fragment undergoes a depth test: if it lies behind what has already been drawn, it is of no value and is discarded immediately, skipping all the remaining steps. A retained fragment moves on toward the Frame Buffer. At this point its transparency is checked to determine whether it directly replaces the color already in the Frame Buffer or goes through Color Buffer Blend, after which it passes through the Dither step and is finally written to the Frame Buffer.
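To make the per-fragment steps concrete, here is a toy model of what happens to the fragments that land on a single pixel: a depth test that keeps a fragment only if it is closer than the stored depth (like OpenGL's default `GL_LESS` comparison), followed by standard alpha blending (the effect of `glBlendFunc(GL_SRC_ALPHA, GL_ONE_MINUS_SRC_ALPHA)`). This is a simplified sketch, not how a driver implements it; dithering is omitted, and the `Fragment` type is hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Fragment:
    depth: float                       # 0.0 = near plane, 1.0 = far plane
    color: tuple[float, float, float]  # RGB in [0, 1]
    alpha: float                       # 1.0 = fully opaque


def shade_pixel(fragments: list[Fragment],
                clear_color: tuple[float, float, float] = (0.0, 0.0, 0.0),
                clear_depth: float = 1.0):
    """Run the fragments for one pixel through a depth test and alpha blending, in submission order."""
    color = clear_color
    depth = clear_depth
    for frag in fragments:
        # Depth test (GL_LESS): a fragment at or behind the stored depth is discarded.
        if frag.depth >= depth:
            continue
        depth = frag.depth  # depth writes enabled
        if frag.alpha >= 1.0:
            # Opaque: replace the stored color directly.
            color = frag.color
        else:
            # Color buffer blend: src * alpha + dst * (1 - alpha).
            color = tuple(s * frag.alpha + d * (1.0 - frag.alpha)
                          for s, d in zip(frag.color, color))
    return color, depth


red_far = Fragment(0.8, (1.0, 0.0, 0.0), 1.0)
blue_near_translucent = Fragment(0.3, (0.0, 0.0, 1.0), 0.5)
green_hidden = Fragment(0.9, (0.0, 1.0, 0.0), 1.0)  # behind red, so discarded
print(shade_pixel([red_far, blue_near_translucent, green_hidden]))
```

The hidden green fragment never reaches the blending step, which is why an early depth test saves work.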

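In fact, lens distortion correction can be done in either the Vertex Shader or the Fragment Shader. Doing it in the Vertex Shader performs better, since the correction is then computed for each vertex of a distortion mesh, and such a mesh has far fewer vertices than the screen has pixels.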

The above illustration shows the object transformation process. An object is transformed from model space to world space, then to eye space, and further to normalized device coordinates (NDC). In fact, VR head tracking is essentially the process of transforming objects from world space into eye space: the game's camera view has become the user's eyes.
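A minimal sketch of this chain for a single point, assuming OpenGL conventions (column vectors, camera looking down -z, a gluPerspective-style projection matrix) and modeling head tracking as yaw only. In VR the view matrix is the inverse of the tracked head pose, so turning the head left makes an object that stays fixed in the world move right on screen. The function names are illustrative; a real engine would use a math library and the full three-axis orientation from sensor fusion.

```python
import math

Matrix = list[list[float]]


def mat_mul(a: Matrix, b: Matrix) -> Matrix:
    """4x4 matrix product a @ b."""
    return [[sum(a[i][k] * b[k][j] for k in range(4)) for j in range(4)] for i in range(4)]


def mat_vec(m: Matrix, v: list[float]) -> list[float]:
    """Apply a 4x4 matrix to a homogeneous column vector."""
    return [sum(m[i][k] * v[k] for k in range(4)) for i in range(4)]


def translation(tx: float, ty: float, tz: float) -> Matrix:
    return [[1.0, 0.0, 0.0, tx],
            [0.0, 1.0, 0.0, ty],
            [0.0, 0.0, 1.0, tz],
            [0.0, 0.0, 0.0, 1.0]]


def rotation_y(angle: float) -> Matrix:
    """Rotation about the vertical (y) axis; positive angles turn -z toward -x (to the left)."""
    c, s = math.cos(angle), math.sin(angle)
    return [[c, 0.0, s, 0.0],
            [0.0, 1.0, 0.0, 0.0],
            [-s, 0.0, c, 0.0],
            [0.0, 0.0, 0.0, 1.0]]


def perspective(fov_y: float, aspect: float, near: float, far: float) -> Matrix:
    """Projection matrix with the same layout as gluPerspective (camera looks down -z)."""
    f = 1.0 / math.tan(fov_y / 2.0)
    return [[f / aspect, 0.0, 0.0, 0.0],
            [0.0, f, 0.0, 0.0],
            [0.0, 0.0, (far + near) / (near - far), 2.0 * far * near / (near - far)],
            [0.0, 0.0, -1.0, 0.0]]


def to_ndc(local_point: list[float], model: Matrix, head_yaw: float, proj: Matrix) -> list[float]:
    """Model space -> world space -> eye space -> clip space -> NDC for one point."""
    # The view matrix is the inverse of the head pose; for a yaw-only pose,
    # that inverse is simply the opposite rotation.
    view = rotation_y(-head_yaw)
    mvp = mat_mul(proj, mat_mul(view, model))
    clip = mat_vec(mvp, local_point + [1.0])
    w = clip[3]
    return [clip[0] / w, clip[1] / w, clip[2] / w]  # perspective divide


proj = perspective(math.pi / 2, 1.0, 0.1, 100.0)
cube = translation(0.0, 0.0, -2.0)  # model matrix: an object 2 units in front of the user
print(to_ndc([0.0, 0.0, 0.0], cube, 0.0, proj))  # looking straight ahead
print(to_ndc([0.0, 0.0, 0.0], cube, 0.2, proj))  # head turned 0.2 rad to the left
```

Only the view matrix changes with the head pose; the model and projection matrices stay the same, which is what "the camera has become the user's eyes" means in practice.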

Are 3D and stereoscopy really different? Yes. 3D involves only monocular rendering, while stereoscopy requires binocular rendering to form a stereoscopic effect. 3D by itself does not provide depth perception. For example, if you stare at a rotating cube for a long time, you may be unable to tell whether it is rotating clockwise or anticlockwise. However, if we render the cube twice with a parallax and view it through the VR box, the confusion disappears.

Under the hood of VR lie three complex and fascinating technologies that work together to bring Virtual Reality to the world. Hopefully, VR newbies now have a better launch pad for taking their VR knowledge to the next level. Leave questions and comments below.

More Posts by Alibaba Clouder
