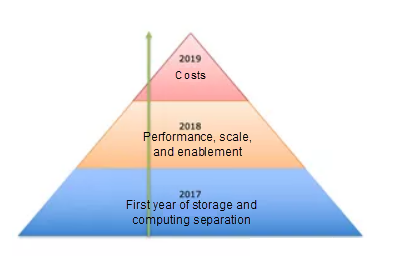

Back in 2017, Dr. Wang Jian initiated a lively discussion about whether "IDC as a computer" was possible. Achieving this requires separating storage from computing resources so that the scheduler can schedule each of them independently and freely. Of all workloads, databases are the hardest to run with storage and computing separated, because they impose extremely demanding requirements on I/O latency and stability. Nevertheless, storage and computing separation is becoming an industry trend, and it has already been implemented in Google Spanner and Aurora.

In 2017, we successfully implemented the separation of storage and computing. Based on the Apsara Distributed File System and AliDFS (a Ceph branch), databases running with storage and computing separated carried 10% of the transaction volume in the Zhangbei unit. 2017 was the first year of database storage and computing separation, and it laid a solid foundation for large-scale separation in 2018.

Building on the breakthroughs of 2017, in 2018 we pursued extreme performance and moved from experiments to large-scale deployment. Making storage and computing separation more efficient, adaptive, universal, and simple presented developers with even greater challenges.

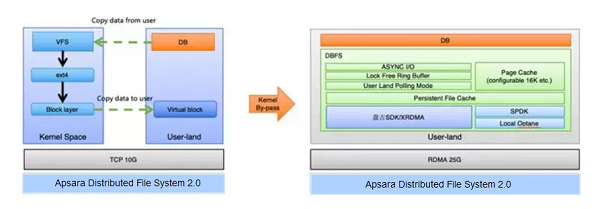

To achieve optimal I/O performance and throughput for databases with separated storage and computing resources, we developed DADI DBFS, a user-space cluster file system. By consolidating our technologies in DADI DBFS, we enabled full-unit, large-scale storage and computing separation for database transactions of Alibaba Cloud. As a storage middleground product, which technical innovations does DBFS offer?

"Zero" Replication

A user-space implementation that bypasses the kernel gives the I/O path "zero" replication, that is, zero data copies. This eliminates copy operations both inside and outside the kernel, greatly improving throughput and performance.

In the past, the kernel-based path required two data copies: the business process copied data from user space into the kernel, and the kernel data was then copied to the user-space network process for forwarding. These copies hurt overall throughput and latency.

With the user-space path, I/O requests are sent by using a polling model. To limit CPU usage in polling mode, adaptive sleep is applied so that core resources are not wasted during idle periods.
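The article does not describe how the adaptive sleep is tuned, so the following is only a minimal sketch of one common approach: spin for a while, then back off with exponentially growing sleeps until work shows up again. The names `poll_state`, `next_sleep_ns`, and `poll_loop`, and all three constants, are assumptions made for illustration and are not part of DBFS.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>

/* Hypothetical tuning constants; the article gives no real values. */
#define SPIN_LIMIT   1000u     /* empty polls tolerated before sleeping */
#define MIN_SLEEP_NS 1000u     /* first sleep: 1 microsecond */
#define MAX_SLEEP_NS 1000000u  /* sleep cap: 1 millisecond */

typedef struct {
    uint32_t idle_polls;  /* consecutive polls that found no work */
    uint32_t sleep_ns;    /* current sleep interval, 0 while spinning */
} poll_state;

/* Update the state after one poll. Returns how long to sleep in
 * nanoseconds, or 0 to keep busy-polling. */
uint32_t next_sleep_ns(poll_state *st, bool found_work)
{
    assert(st != NULL);
    if (found_work) {
        /* Traffic is back: return to pure polling for lowest latency. */
        st->idle_polls = 0;
        st->sleep_ns = 0;
        return 0;
    }
    if (st->idle_polls < SPIN_LIMIT) {
        st->idle_polls++;
        return 0;
    }
    if (st->sleep_ns == 0) {
        st->sleep_ns = MIN_SLEEP_NS;
    } else if (st->sleep_ns < MAX_SLEEP_NS) {
        uint32_t doubled = st->sleep_ns * 2;
        st->sleep_ns = doubled > MAX_SLEEP_NS ? MAX_SLEEP_NS : doubled;
    }
    return st->sleep_ns;
}

/* Loop skeleton. poll_fn stands in for checking a completion queue and
 * sleep_fn for a real sleep primitive; both are supplied by the caller. */
void poll_loop(bool (*poll_fn)(void *ctx), void *ctx,
               void (*sleep_fn)(uint32_t ns), int iterations)
{
    poll_state st = {0, 0};
    for (int i = 0; i < iterations; i++) {
        uint32_t ns = next_sleep_ns(&st, poll_fn(ctx));
        if (ns > 0)
            sleep_fn(ns);
    }
}
```

The trade-off in a scheme like this is that a larger spin limit keeps latency low under bursty load, while a larger sleep cap saves more CPU when the path is idle.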

RDMA

DBFS exchanges data directly with Apsara Distributed File System over RDMA, providing higher throughput and a latency similar to that of a local SSD. This 2018 development made extremely low cross-network I/O latency possible, laying a firm foundation for large-scale storage and computing separation. The Group's RDMA cluster introduced for this year's major promotion campaign is the largest in the industry.

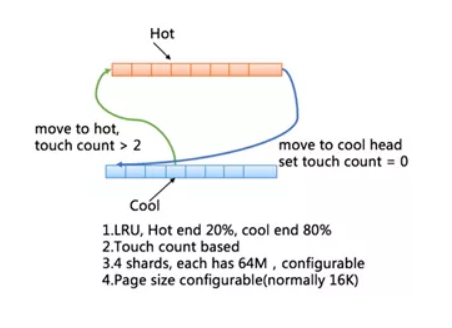

To provide buffered I/O, page caching was implemented separately by using a touch-count-based LRU algorithm. The touch count was introduced to better fit the I/O characteristics of databases. Large table scans are common in databases, and a scan pulls in data pages that are rarely used afterward. In a plain LRU, these pages would push out frequently used pages and compromise cache efficiency. To address this issue, pages are moved between the hot and cold ends based on their touch count.
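To make the idea concrete, here is a small sketch of a touch-count LRU. It is not the DBFS implementation: the capacity, the threshold, the midpoint insertion rule, and all names (`tc_cache`, `tc_access`, and so on) are assumptions chosen for illustration. New pages enter at the middle of the list, a hit only increments the touch count, and a page reaching the cold end is promoted to the hot end instead of being evicted if it was touched often enough.

```c
#include <assert.h>
#include <stddef.h>
#include <string.h>

#define CACHE_PAGES   4  /* hypothetical, tiny capacity for illustration */
#define HOT_THRESHOLD 2  /* touches needed to earn promotion to the hot end */

typedef struct {
    long page_no;
    unsigned touches;
} slot;

typedef struct {
    slot slots[CACHE_PAGES];  /* index 0 = hot end, index used-1 = cold end */
    size_t used;
} tc_cache;

void tc_init(tc_cache *c) { c->used = 0; }

static int find(const tc_cache *c, long page_no)
{
    for (size_t i = 0; i < c->used; i++)
        if (c->slots[i].page_no == page_no)
            return (int)i;
    return -1;
}

static void insert_at(tc_cache *c, size_t pos, slot s)
{
    assert(c->used < CACHE_PAGES && pos <= c->used);
    memmove(&c->slots[pos + 1], &c->slots[pos],
            (c->used - pos) * sizeof(slot));
    c->slots[pos] = s;
    c->used++;
}

static slot remove_at(tc_cache *c, size_t pos)
{
    slot s = c->slots[pos];
    memmove(&c->slots[pos], &c->slots[pos + 1],
            (c->used - pos - 1) * sizeof(slot));
    c->used--;
    return s;
}

/* Take pages from the cold end until one is cold enough to evict.
 * Frequently touched pages move to the hot end with their count reset,
 * so the loop always terminates. */
static long evict(tc_cache *c)
{
    for (;;) {
        slot tail = remove_at(c, c->used - 1);
        if (tail.touches < HOT_THRESHOLD)
            return tail.page_no;
        tail.touches = 0;
        insert_at(c, 0, tail);
    }
}

int tc_contains(const tc_cache *c, long page_no)
{
    return find(c, page_no) >= 0;
}

/* Access a page. Returns 1 on a cache hit and 0 on a miss. */
int tc_access(tc_cache *c, long page_no)
{
    int i = find(c, page_no);
    if (i >= 0) {
        c->slots[i].touches++;  /* a hit only counts, it does not move */
        return 1;
    }
    if (c->used == CACHE_PAGES)
        evict(c);
    slot s = {page_no, 1};
    insert_at(c, c->used / 2, s);  /* new pages start at the midpoint */
    return 0;
}
```

With this sketch, two pages that were each read twice survive a scan of four one-off pages, whereas a plain LRU of the same size would have evicted both.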

In addition, the page size is configurable for page caching. By combining this with the page size of a database, the page caching efficiency can be even higher. In general, DBFS page caching has the following capabilities:

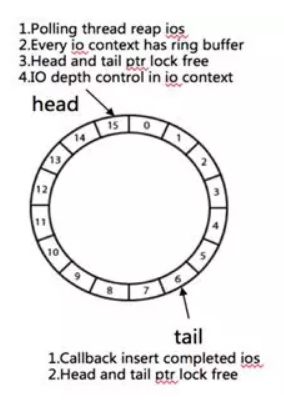

Most database products use asynchronous I/O to improve I/O throughput. Similarly, DBFS implements asynchronous I/O to adapt to the I/O features of upper-layer databases. Asynchronous I/O has the following features:

DBFS implements atomic writing to ensure that partial writes do not occur when a database page is written. InnoDB running on DBFS can therefore safely disable the doublewrite buffer, which saves bandwidth for the entire database under storage and computing separation.

In addition, like PostgreSQL, DBFS uses buffered I/O, which prevents the page missing problem that PostgreSQL is prone to during dirty page flushing.

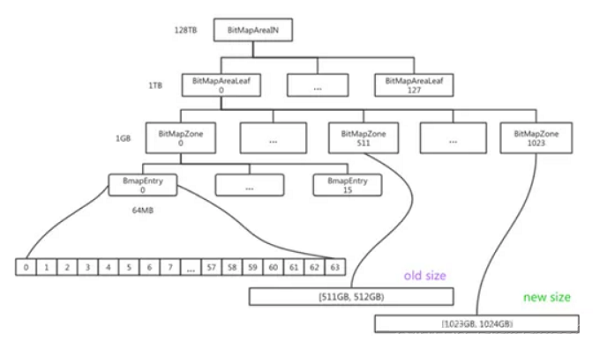

To prevent data migration due to resizing, DBFS is combined with the underlying Apsara Distributed File System for online volume resizing. DBFS uses its own bitmap allocator to manage underlying storage space. The bitmap allocator is optimized to achieve lock-free resizing at the file system layer, making it possible for the upper-layer business to efficiently resize at any time without loss. This makes DBFS superior to the traditional ext4 file system.

Online resizing also avoids wasted storage space. Because the volume can be resized while it is being written, there is no need to reserve a fixed proportion of headroom, for example, 20%.
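As a rough illustration of why a bitmap allocator lends itself to online growth, consider the sketch below. It is single-threaded and does not attempt the lock-free scheme mentioned above. The structure and function names (`bitmap_alloc`, `bm_grow`, and so on) are hypothetical. The point is only that growing the volume appends free bits and leaves every existing allocation where it is, so no data has to migrate.

```c
#include <assert.h>
#include <stdint.h>
#include <stdlib.h>
#include <string.h>

typedef struct {
    uint8_t *bits;   /* one bit per block, 1 = allocated */
    size_t nblocks;  /* current volume size in blocks */
} bitmap_alloc;

int bm_init(bitmap_alloc *a, size_t nblocks)
{
    assert(a != NULL && nblocks > 0);
    a->bits = calloc((nblocks + 7) / 8, 1);
    a->nblocks = a->bits ? nblocks : 0;
    return a->bits ? 0 : -1;
}

void bm_destroy(bitmap_alloc *a)
{
    free(a->bits);
    a->bits = NULL;
    a->nblocks = 0;
}

/* Allocate the first free block. Returns its index, or -1 if full. */
long bm_alloc(bitmap_alloc *a)
{
    for (size_t i = 0; i < a->nblocks; i++) {
        uint8_t mask = (uint8_t)(1u << (i % 8));
        if (!(a->bits[i / 8] & mask)) {
            a->bits[i / 8] |= mask;
            return (long)i;
        }
    }
    return -1;
}

void bm_free(bitmap_alloc *a, size_t i)
{
    assert(i < a->nblocks);
    a->bits[i / 8] &= (uint8_t)~(1u << (i % 8));
}

/* Grow the volume. Existing bits are preserved and the new blocks
 * start out free, so nothing already allocated has to move. */
int bm_grow(bitmap_alloc *a, size_t new_nblocks)
{
    assert(new_nblocks >= a->nblocks);
    size_t old_bytes = (a->nblocks + 7) / 8;
    size_t new_bytes = (new_nblocks + 7) / 8;
    uint8_t *p = realloc(a->bits, new_bytes);
    if (p == NULL)
        return -1;
    memset(p + old_bytes, 0, new_bytes - old_bytes);
    a->bits = p;
    a->nblocks = new_nblocks;
    return 0;
}
```

In this sketch a full volume of 4 blocks can be grown to 20, after which allocation simply continues at block 4.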

The following figure shows the bitmap change during resizing.

The extensive use of RDMA poses a risk to the Group's databases. DBFS and the Apsara Distributed File System together support switching between TCP and RDMA, and switching drills are run throughout the link. In this way, RDMA risks are kept under control to ensure stability.
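The article does not say how the switch is wired up. One plausible shape, sketched here purely as an assumption, is a small transport table behind an atomic pointer: I/O goes through whichever transport is active, a failed RDMA send falls back to TCP, and a drill can flip the pointer explicitly. Every name below (`transport`, `io_send`, `switch_transport`, the stub send functions, and the `rdma_healthy` flag) is hypothetical.

```c
#include <assert.h>
#include <stdatomic.h>
#include <stddef.h>
#include <string.h>

typedef struct {
    const char *name;
    int (*send)(const void *buf, size_t len);  /* returns 0 on success */
} transport;

/* Stubs standing in for the real RDMA and TCP send paths. */
static int rdma_healthy = 1;  /* flipped by a fault drill in this sketch */

static int rdma_send(const void *buf, size_t len)
{
    (void)buf;
    (void)len;
    return rdma_healthy ? 0 : -1;
}

static int tcp_send(const void *buf, size_t len)
{
    (void)buf;
    (void)len;
    return 0;
}

static const transport RDMA = {"rdma", rdma_send};
static const transport TCP = {"tcp", tcp_send};

/* The transport currently used for new requests. */
static const transport *_Atomic active = &RDMA;

const char *active_transport_name(void)
{
    return atomic_load(&active)->name;
}

/* Explicit switch, as would be used in a switching drill. */
void switch_transport(int use_rdma)
{
    atomic_store(&active, use_rdma ? &RDMA : &TCP);
}

/* Send through the active transport. If RDMA fails, switch to TCP
 * and retry once so the request still completes. */
int io_send(const void *buf, size_t len)
{
    const transport *t = atomic_load(&active);
    assert(t != NULL);
    if (t->send(buf, len) == 0)
        return 0;
    if (t == &RDMA) {
        atomic_store(&active, &TCP);
        return TCP.send(buf, len);
    }
    return -1;
}
```

Keeping the choice behind a single pointer means in-flight code paths do not need to know which network is in use, which is what makes repeated drills cheap to run.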

In addition, the DBFS, Apsara Distributed File System, and network teams performed many capacity, water-level, and stress tests as well as fault drills to prepare for the release of the largest RDMA cluster in the industry.

After these technical breakthroughs and extensive troubleshooting, DBFS ultimately withstood the full-link traffic of the Double 11 sales campaign. This success verified the feasibility of the overall technical trend towards storage and computing separation.

In addition to the preceding features, as a file system, DBFS also provides many other features to ensure its universality, ease of use, stability, and security for businesses.

Building all of these technical innovations and capabilities into DBFS as product features enables more businesses to access different underlying storage media from user space, and more databases to implement storage and computing separation.

POSIX Compatibility

DBFS is currently compatible with most common POSIX file interfaces, which makes it straightforward to connect upper-layer database services. Page caching, asynchronous I/O, and atomic writing are also available to provide rich I/O capabilities for these services. In addition, DBFS is compatible with glibc interfaces to support the processing of file streams. Support for these interfaces greatly reduces the complexity of database access, making DBFS easy to use and allowing it to support more database services.

POSIX interfaces are not described here because they are well known. Instead, the following glibc interfaces are listed for reference:

// glibc interface

FILE *fopen(constchar*path,constchar*mode);

FILE *fdopen(int fildes,constchar*mode);

size_t fread(void*ptr, size_t size, size_t nmemb, FILE *stream);

size_t fwrite(constvoid*ptr, size_t size, size_t nmemb, FILE *stream);

intfflush(FILE *stream);

intfclose(FILE *stream);

intfileno(FILE *stream);

intfeof(FILE *stream);

intferror(FILE *stream);

voidclearerr(FILE *stream);

intfseeko(FILE *stream, off_t offset,int whence);

intfseek(FILE *stream,long offset,int whence);

off_t ftello(FILE *stream);

longftell(FILE *stream);

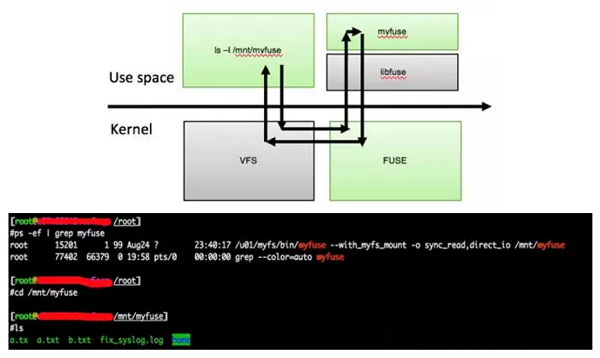

voidrewind(FILE *stream);Implementation of FUSE

For compatibility with the Linux ecosystem, FUSE is implemented and linked to VFS. By introducing FUSE, DBFS can be accessed without any complex code modifications, greatly facilitating the application of the product. This also dramatically simplifies traditional O&M.
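As an illustration of what "without any complex code modifications" means in practice, ordinary stdio code such as the sketch below should work unchanged against a path on a FUSE-mounted DBFS volume. The function `page_roundtrip` and the 16 KB page size are assumptions for the example, and the path is whatever the caller passes in. Nothing here is DBFS-specific, which is exactly the point.

```c
#include <assert.h>
#include <stdio.h>
#include <string.h>

#define DB_PAGE_SIZE 16384  /* 16 KB database page, assumed for the example */

/* Write one page at the given page number, then read it back, using
 * only standard stdio calls. Returns 0 on success and -1 on failure. */
int page_roundtrip(const char *path, long page_no,
                   const unsigned char *in, unsigned char *out)
{
    assert(path != NULL && in != NULL && out != NULL && page_no >= 0);

    FILE *f = fopen(path, "w+b");
    if (f == NULL)
        return -1;

    long offset = page_no * DB_PAGE_SIZE;
    int ok = fseek(f, offset, SEEK_SET) == 0
          && fwrite(in, DB_PAGE_SIZE, 1, f) == 1
          && fflush(f) == 0
          && fseek(f, offset, SEEK_SET) == 0
          && fread(out, DB_PAGE_SIZE, 1, f) == 1;

    if (fclose(f) != 0)
        ok = 0;
    return ok ? 0 : -1;
}
```

The same function runs against a local file, so it can be tested off the cluster and then pointed at a mounted volume.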

Service Capabilities

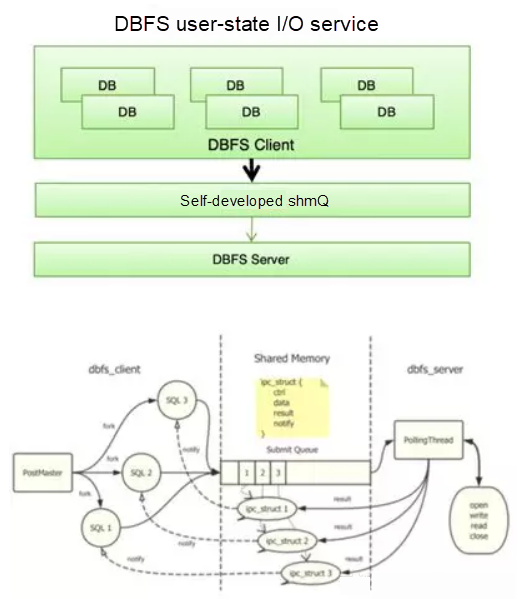

DBFS provides the self-developed shmQ component, which is based on shared-memory IPC. As a result, DBFS supports both the process-based architecture of PostgreSQL and the thread-based architecture of MySQL. This makes DBFS more universal and secure and lays a solid foundation for future online upgrades.

shmQ is implemented with a lock-free mechanism and provides excellent performance and throughput. According to the latest test results, the access latency can be kept within several microseconds when the database page size is 16 KB. shmQ also supports the service provision capability and multi-process architecture and offers the expected performance and stability.
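The internals of shmQ are not published in this article. As a hedged illustration of what a lock-free queue of this kind can look like, the sketch below implements a single-producer, single-consumer ring with C11 atomics. The names `spsc_ring`, `ring_push`, `ring_pop`, and the `io_request` payload are assumptions. In a real shared-memory setup, the ring structure would live in a mapping shared by both processes rather than on the stack.

```c
#include <assert.h>
#include <stdatomic.h>
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>

#define RING_SLOTS 8  /* hypothetical size, must be a power of two */

_Static_assert((RING_SLOTS & (RING_SLOTS - 1)) == 0,
               "RING_SLOTS must be a power of two");

typedef struct {
    uint64_t page_no;  /* hypothetical request payload */
    uint32_t length;
} io_request;

typedef struct {
    _Atomic size_t head;  /* next slot to read, advanced by the consumer */
    _Atomic size_t tail;  /* next slot to write, advanced by the producer */
    io_request slots[RING_SLOTS];
} spsc_ring;

void ring_init(spsc_ring *r)
{
    atomic_init(&r->head, 0);
    atomic_init(&r->tail, 0);
}

/* Producer side. Returns false if the ring is full. */
bool ring_push(spsc_ring *r, io_request req)
{
    size_t tail = atomic_load_explicit(&r->tail, memory_order_relaxed);
    size_t head = atomic_load_explicit(&r->head, memory_order_acquire);
    if (tail - head == RING_SLOTS)
        return false;
    r->slots[tail % RING_SLOTS] = req;
    /* Release: the slot contents become visible before the new tail. */
    atomic_store_explicit(&r->tail, tail + 1, memory_order_release);
    return true;
}

/* Consumer side. Returns false if the ring is empty. */
bool ring_pop(spsc_ring *r, io_request *out)
{
    assert(out != NULL);
    size_t head = atomic_load_explicit(&r->head, memory_order_relaxed);
    size_t tail = atomic_load_explicit(&r->tail, memory_order_acquire);
    if (head == tail)
        return false;
    *out = r->slots[head % RING_SLOTS];
    /* Release: the slot is free for reuse only after it has been copied. */
    atomic_store_explicit(&r->head, head + 1, memory_order_release);
    return true;
}
```

Because each index is written by exactly one side, neither push nor pop ever takes a lock or retries, which is one way to reach the microsecond-level latencies described above.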

Cluster File System

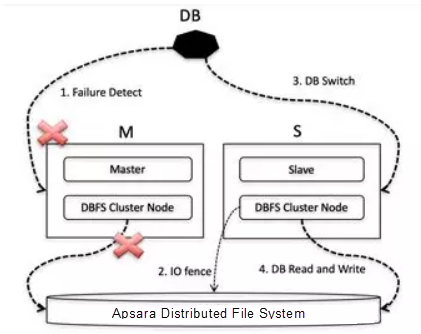

DBFS also supports clustering as another major feature. It enables the linear expansion of computing resources for a database in shared-disk mode, reducing storage costs for the business. In addition, the shared-disk mode gives the database high elasticity and greatly improves the SLA through efficient active-standby switchover. The cluster file system provides single-write-and-multi-read and multi-write capabilities, laying a solid foundation for the shared-disk and shared-nothing architectures of databases. In contrast to the traditional OCFS, DBFS is implemented in user space, providing better performance and controllability. OCFS relies heavily on Linux VFS. For example, it does not provide page caching itself.

When working in single-write-and-multi-read mode, DBFS provides multiple optional roles (M and S). One M node and multiple S nodes can share data and access Apsara Distributed File System data simultaneously. The upper-layer database restricts the M and S nodes so that data in the M node can be read and written while that in the S node can only be read. When the M node fails, its data is taken over by the S node. M-S failover procedure:

When data is written from multiple nodes, DBFS performs global metalock control, blockgroup allocation optimization, and other operations across all nodes. Multi-node writing also involves a disk-based quorum algorithm, which is not described here due to its complexity.

With the emergence of new storage media, databases need to use them to improve performance or lower costs, and they also need control over the underlying storage media.

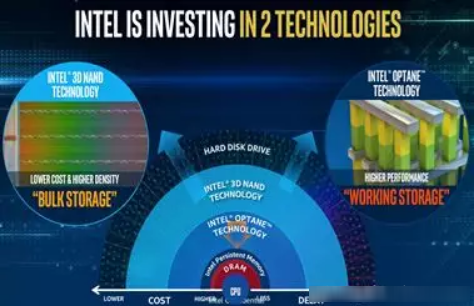

From Intel's planning of storage media, AEP, Optane, and SSD products are provided for increased performance and capacity, while QLC is provided for extremely large capacities. From the perspective of comprehensive performance and costs, we regard Optane as an ideal cache product and have used it to implement persistent file caching for DBFS headers.

Persistent File Caching

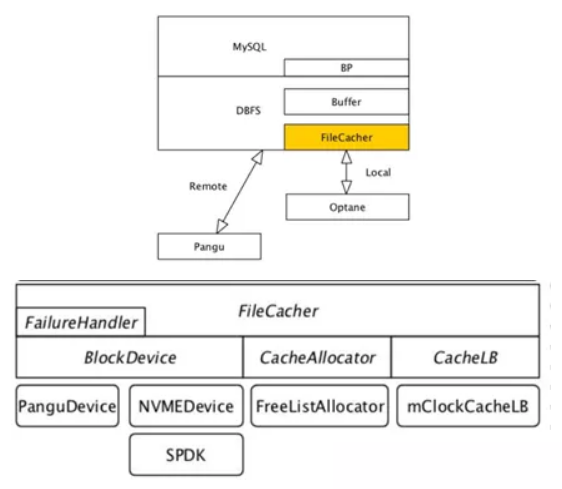

DBFS provides an Optane-based local persistent caching function to further improve the read and write performance of databases with separated storage and computing resources. File caching incorporates the following features to implement production availability:

These features lay a solid foundation for achieving high online stability. Because Optane I/O relies on SPDK, a pure user-space technology, DBFS implements it by using the vhost function of Fusion Engine. The page size of file caching can be configured based on the block size of the upper-layer database to achieve the optimal effect.

The following figure shows the file caching architecture.

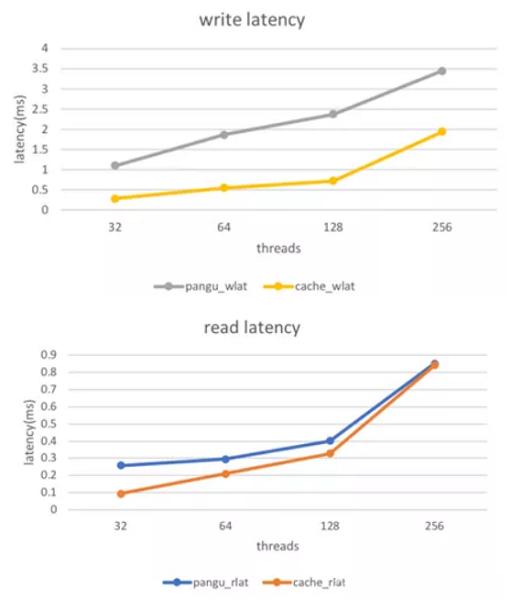

The following figure shows the resulting read and write performance gains:

In the figure, the lines marked with "cache" result from file caching. From the perspective of overall performance, as the hit rate increases, the read latency decreases. In addition, many performance metrics are monitored against file caching.

Open-Channel SSDs

The X-Engine team works with the DBFS and Fusion Engine teams to further build a controllable storage system based on object SSDs. Together they have explored and implemented ways to reduce SSD wear, improve SSD throughput, and reduce the interference between reads and writes, making excellent progress. Currently, with X-Engine's tiered storage policies, we have completed the read and write paths and are moving towards the in-depth development of intelligent storage.

In 2018, DBFS supported X-DB at scale during Double 11 in storage and computing separation mode. It also enabled ADS to implement the single-write-and-multi-read function and supported the Tair solution.

In addition to supporting businesses, DBFS supports both the process architecture of PostgreSQL and the thread architecture of MySQL. It also interfaces with VFS and is compatible with the Linux ecosystem, making it a genuine storage middleground product: a user-space cluster file system. In the future, DBFS will integrate with more software and hardware and enable tiered storage, NVMe-oF, and other technologies to add even more value for databases.
