rinna Co., Ltd. (Head office: Shibuya-ku, Tokyo; Representative Director: Zhan Cliff Chen; hereinafter "rinna") announces the development and release of the Nekomata series, Japanese language models continually pre-trained from Alibaba Cloud's Qwen-7B and Qwen-14B. The models are released under the Tongyi Qianwen LICENSE AGREEMENT.

Advances in AI technology have made previously difficult processes feasible. Large Language Models (LLMs), foundation models designed for text processing, have revolutionized human-computer interaction through natural language. Researchers and developers worldwide have collaborated, sharing and publishing their results, to build high-performance LLMs that can be operated as services.

rinna has contributed to Japanese-language AI development by training foundation models for processing Japanese text, audio, and images, such as GPT, BERT, HuBERT, CLIP, and Stable Diffusion. Since April 2021, the models rinna has released have been downloaded over 4.5 million times and are widely used by researchers and developers. In October 2023, the company developed and released the Youri series, trained from Meta's Llama 2; although Llama 2 was designed primarily for English, Youri achieved excellent performance in Japanese. However, because Llama 2 focuses on English and has a vocabulary of only 32,000 tokens, its vocabulary covers written Japanese poorly, which gives the Youri series low inference efficiency on Japanese text. Since Llama 2, LLMs with larger vocabularies that target languages other than English have been released. Among them, Alibaba Cloud's Qwen series, with a vocabulary of about 152,000 tokens, achieves high inference efficiency in Japanese.

To address this, rinna continually pre-trained Qwen-7B and Qwen-14B on Japanese training data to create the new Nekomata series, which combines high-quality Japanese text generation with fast inference. The company also developed instruction-following models, obtained by further training the general language models to respond interactively to user instructions, for a total of four released models. With these releases, rinna aims to take Japanese AI research and development to a higher level.

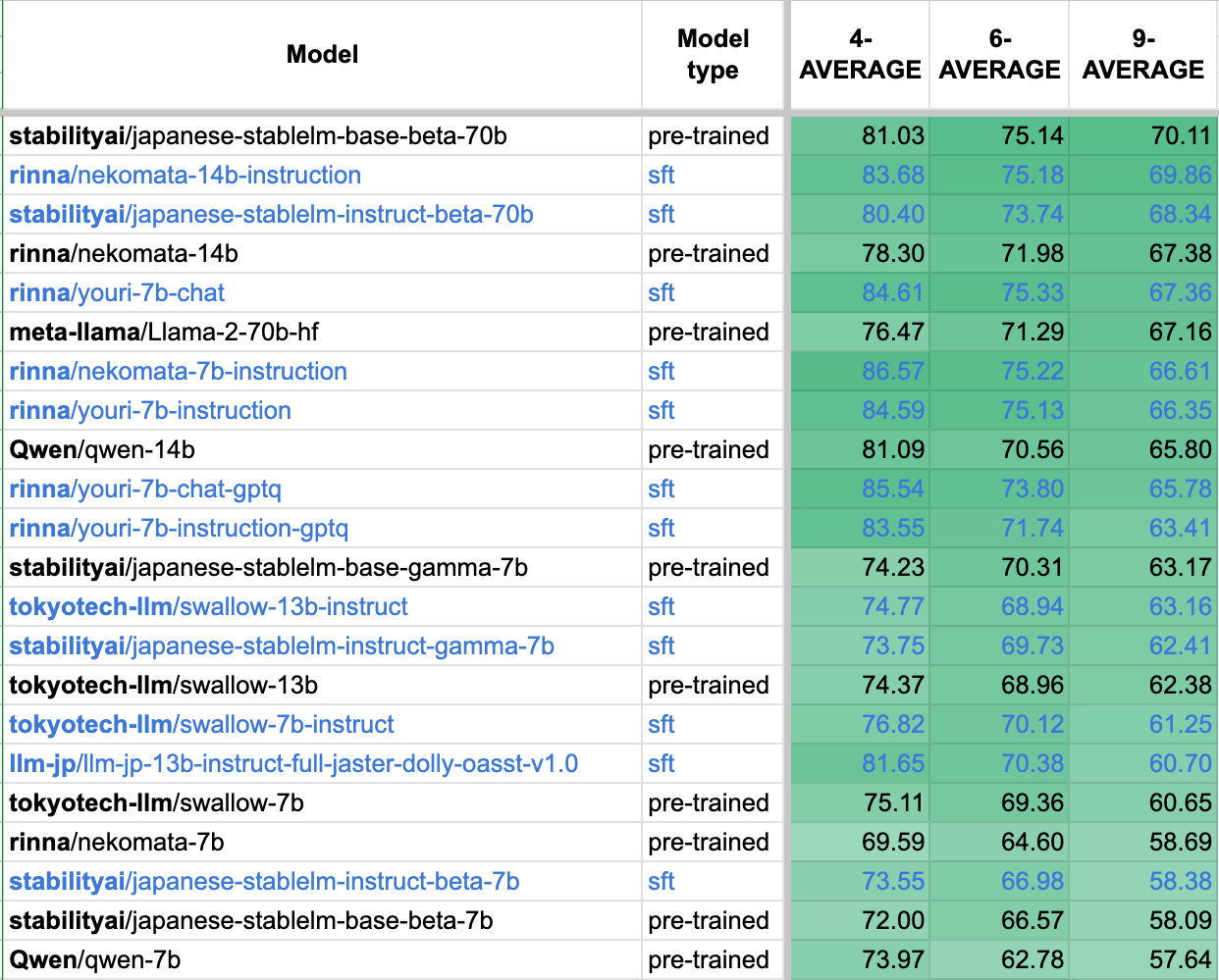

Nekomata 7B and 14B were obtained by continually pre-training Qwen-7B (7 billion parameters) and Qwen-14B (14 billion parameters) on 30 billion and 66 billion tokens of Japanese and English training data, respectively. The models inherit Qwen's strong capabilities and perform well on Japanese-language tasks. On Stability-AI/lm-evaluation-harness, a benchmark for evaluating Japanese language models, Nekomata 7B and 14B achieved average scores of 58.69 and 67.38, respectively, across 9 tasks (Figure 1). In addition, Japanese text requires 0.40 tokens per byte with Llama 2/Youri but only 0.24 tokens per byte with Qwen/Nekomata; because fewer tokens are needed to represent the same text, inference is more efficient. The model name comes from the nekomata, a cat monster from Japanese folklore.
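To make the tokens-per-byte comparison concrete, here is a small arithmetic sketch. It assumes the reported averages (0.40 and 0.24 tokens per byte) apply uniformly and that "byte" means a UTF-8 byte; it does not call any real tokenizer, and the helper names `tokens_for_text` and `relative_token_count` are hypothetical. Under these assumptions Nekomata needs about 60% as many tokens as Youri for the same Japanese text. Since an autoregressive model generates one token per decoding step, that means roughly 40% fewer steps to produce the same output.

```python
# Reported average tokens per byte of Japanese text (figures from the article).
TOKENS_PER_BYTE = {
    "Llama2/Youri": 0.40,
    "Qwen/Nekomata": 0.24,
}


def tokens_for_text(num_bytes: int, tokens_per_byte: float) -> float:
    """Estimate the token count for a text of num_bytes bytes (assumes a uniform rate)."""
    return num_bytes * tokens_per_byte


def relative_token_count(baseline: str, candidate: str) -> float:
    """Ratio of tokens the candidate tokenizer needs relative to the baseline."""
    return TOKENS_PER_BYTE[candidate] / TOKENS_PER_BYTE[baseline]


if __name__ == "__main__":
    # Most Japanese characters take 3 bytes each in UTF-8.
    text = "猫又は日本の伝承に登場する妖怪です。"
    n_bytes = len(text.encode("utf-8"))
    for name, rate in TOKENS_PER_BYTE.items():
        print(f"{name}: ~{tokens_for_text(n_bytes, rate):.1f} tokens for {n_bytes} bytes")
    ratio = relative_token_count("Llama2/Youri", "Qwen/Nekomata")
    print(f"Nekomata needs ~{ratio:.0%} as many tokens as Youri")
```

Real token counts vary with the content of the text, so the ratio is only an average-case estimate.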

Figure 1: Scores on Stability-AI/lm-evaluation-harness, a Japanese language model benchmark

The Nekomata Instruction models are further trained to respond interactively to user instructions. Nekomata 14B Instruction achieved a benchmark score of 69.86, on par with some 70B-parameter models.

The Nekomata series inherits the Tongyi Qianwen LICENSE AGREEMENT and can be used for commercial purposes, subject to its terms of use. For details, please refer to the official license information.

The arrival of ChatGPT has made text generation technology easily accessible. However, to deploy and operate LLMs for specific tasks, it is crucial to develop a model that fits the intended purpose, for example through task specialization, cost reduction, or stronger security. rinna has extensive experience in researching, developing, and operating LLMs and has accumulated a wealth of knowledge in this field. Drawing on this technical expertise, the company offers "Tamashiru Custom," an LLM solution customized for businesses with specific needs. With the Nekomata series, we can now provide custom LLMs that better meet our customers' specific requirements. Moving forward, rinna will continue its research and development to advance the social implementation of AI, sharing its research findings and incorporating them into its products.

rinna is an AI company whose vision is a world where humans and AI co-create. Its strengths are research and development of generative AI models for text, audio, images, video, and more, as well as AI-driven data analysis. Using a variety of AI technologies born from our research, we work with partner companies to develop and provide solutions to a wide range of business challenges. We also aim to create a diverse world where people and AI live together, through a variety of familiar AI characters built on the technology of our flagship AI, rinna.

Company names, trade names, and the like mentioned in this text may be trademarks or registered trademarks of their respective owners.

This is a translated article from rinna; all rights are reserved by the original author.
