By Digoal

Apart from social networking sites and e-commerce websites, people tend to visit popular audio, video, image, and text content websites the most. For web developers and publishers, content management is very important, and data pivoting is an important tool for content management. Video websites are now capable of playback on various devices, such as mobile phones, computers, TV boxes, TVs, and projectors. This means that organizations need to keep track of data including device attributes, member attributes, channel attributes, and so on.

1. Generate device/user profiles

IDs, multi-dimensional tags, multi-valued column tags (for example, movies with certain directors/actors that have been watched by users within the last 7 days/one month).

Generally, there will be tens of thousands of values for multivalued columns (for example, tens of thousands of actors/movies). A device/person generally has dozens or even hundreds of attribute columns. There may be dozens of VALUEs in a single multivalued column for a device.

2. Profile pivoting

2.1. Query for the number of targets (the number of selected devices/members) based on any combination of tag conditions

2.2. Select a group according to any combination of tag conditions, and count the proportion taken by each category of certain columns of the group (count, group by, quantile, multidimensional pivoting)

The concurrency requirement is low.

3. Select target devices and members

Pagination query for IDs that satisfy any combination of tag conditions (to select devices or members that satisfy the conditions)

The concurrency requirement is low.

4. Point query (key value query) requirements (check whether user meets the selection rules according to any combination of tag conditions and user IDs)

The concurrency requirement for point query is high, which may involve tens of thousands of requests per second.

For audio and video websites, there are typically less than a million pieces of content (however, after introducing short video clips or user-generated media, there may be billions of pieces of content).

There can't be more than 10 billion users and devices (based on the world's population). In addition, devices will age, and there won't be more than a billion active devices.

Depending on people's capacity for abstract thinking, the number of tag columns may be in the hundreds. Multivalued columns (such as favorite actors, movies, and directors) may make up a larger proportion, maybe 50%.

The VALUE range of multivalued columns (such as actors, movies, and directors) is expected to be in the millions. (Favorite variety star tag of user A: Wang Han, Zhang Yu, Liu Wei)

There may be dozens of multivalued column tags, among which "recently watched movies" is generally useful. Aside from porn identifiers, I'd guess no one watches movies all day long.

1. Storage and pivot of multivalued columns

1.1. Storage of multivalued columns

PostgreSQL supports array type multivalued tag columns. Since they belong to a data type, they have built-in common array operators and functions, such as CONTAINS, INTERSECT, SYM_DIFFERENCE, OVERLAP, APPEND, UNNEST, and type conversion. They are really convenient to use.

https://www.postgresql.org/docs/9.6/static/functions-array.html

1.2. Multi-valued column pivoting:

For example, the "user's favorite actor" is a multivalued column—determining the favorite TOP 10 actors of users in Zhejiang Province.

For a conditional TOP N query that requires actual computing, use unnest(array), for example

select unnest(c2) as c2, count(*) from -- Uses unnest to break an array into individual elements for statistics

tbl

group by 1

order by 2 desc

limit 10; -- Determines TOP 10 elements of the c2 multivalued column under certain conditions

2. Time and spatial (geographical) dimension analysis

Members and devices have geographic attributes. We can add them into GIS pivoting. PostgreSQL supports geometry types, indexes, operators, aggregate functions, window functions, clustering functions, and so on. It is extremely powerful in terms of GIS processing.

3. Computing workload

Pivoting requires powerful computing capability. PostgreSQL 9.6 began to support multi-core parallelism. Currently, a 100,000 RMB machine generally supports a 10 GB/s standalone throughput, which reaches the level of HTAP.

If you want better analytic computing capabilities, you can use Alibaba Cloud HybridDB for PostgreSQL, which is a multi-machine parallel (OLAP) version of PostgreSQL. It supports column-store, row-store, compression, multi-machine parallelism and other features.

4. Data import

Usually, video systems do not require highly real-time user profiles. New user profiles are generated on a daily basis. You can simply write daily generated profiles into Alibaba Cloud RDS PostgreSQL or HybridDB for PostgreSQL to provide pivot information.

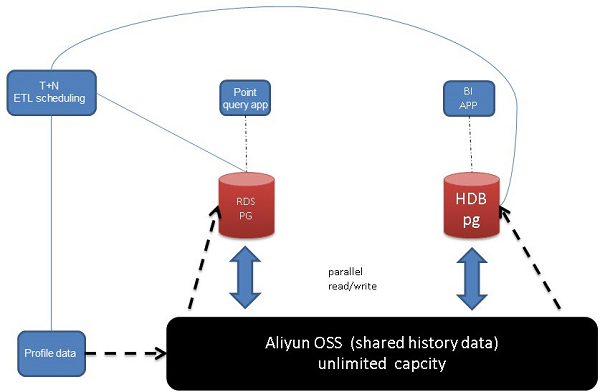

There are two solutions that meet the requirements of both pivoting and highly concurrent point queries.

Import the profile data into RDS PG and the HDB PG through OSS using the ETL scheduling system.

Leave point query requirements to RDS PG 9.4.

Leave pivoting requirements to HDB PG.

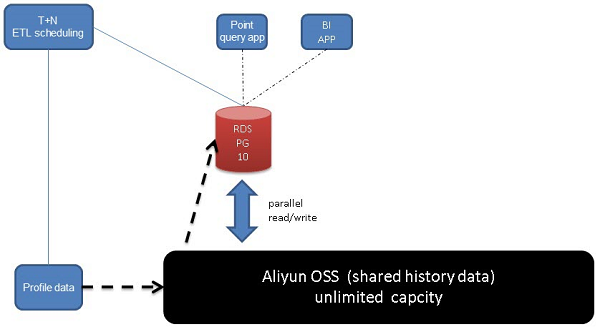

RDS PG 10 supports multi-core parallelism. A 64-core machine processes about 10 GB of data per second (the hard disk bandwidth is not fixed, and is usually about 1.6 GB/s for a single NVME card). It is easy to estimate the time of pivoting.

If your business can deal with a longer pivoting time, you could achieve your business goals by using RDS PG 10.

In addition, RDS PG 10 supports multi-index BITMAP SCAN combined scans, without scanning the entire table. It is very efficient.

Create a function to generate the multivalued columns we need.

create or replace function gen_rand_int(

int, -- Number of categories: movies, actors, genres (such as comedy and horror), year, category (such as movie and TV series)...

int, -- The number of popular categories. Must be less than $1

int -- Number of tags

) returns int[] as

$$

select array(

select (ceil(random()*$1))::int+$2 from generate_series(1,$3/2) -- reasonably common

union all

select (ceil(random()*$2))::int from generate_series(1,$3/2) -- reasonably popular

) ;

$$

language sql strict;

postgres=# select gen_rand_int(10000,100,30);

gen_rand_int

-------------------------------------------------------------------------------------------------------------------------

{5946,6877,7287,9091,1761,4870,2100,573,8388,2079,1400,9744,703,4834,6843,18,13,32,97,53,68,43,72,32,62,85,47,15,79,70}

(1 row)

postgres=# select gen_rand_int(10000,100,30);

gen_rand_int

--------------------------------------------------------------------------------------------------------------------------

{4558,3818,3836,1744,2808,5496,6513,4808,5969,4801,6786,7268,4444,7388,2002,11,91,32,43,88,85,11,30,56,43,92,40,90,7,19}

(1 row)

postgres=# select gen_rand_int(10000,100,30) from generate_series(1,10);

gen_rand_int

---------------------------------------------------------------------------------------------------------------------------

{5950,6793,6047,7559,4635,1463,8022,1337,9059,8822,1641,6099,116,6626,5311,77,89,62,30,72,48,56,29,72,72,61,29,64,60,38}

{9932,1943,579,5878,9401,5113,7241,7322,6349,6200,6043,7889,2199,6059,4415,74,37,32,35,66,3,83,22,31,54,93,91,83,56,51}

{2109,5528,7033,2587,1306,6333,7600,8446,3555,3848,4546,9498,1636,6644,5456,59,39,90,90,74,56,93,56,77,24,10,70,15,92,26}

{6594,1261,8079,3526,3748,9284,9759,1247,7630,3213,4995,2075,2610,6531,8619,79,23,24,69,13,97,24,5,53,1,28,62,70,42,54}

{9646,769,6562,7625,4195,210,6808,3853,1356,4337,6966,6251,6312,9476,2681,48,73,49,72,41,62,68,65,66,21,65,94,82,35,36}

{3558,3132,4296,10019,657,8391,128,7364,2143,1384,1601,9008,7534,7813,8383,1,25,56,49,96,97,10,64,61,76,84,26,70,65,61}

{543,10050,9165,4739,9969,9721,3029,9997,6985,5071,1280,8486,3979,8714,6198,22,87,86,77,36,81,73,45,45,34,21,28,59,90,93}

{2024,9511,9292,1089,4149,9160,710,7078,9056,7595,2048,236,5980,5927,8850,20,80,74,6,57,9,87,30,54,31,64,75,58,22,64}

{5106,4223,5900,4297,5211,9949,3357,5821,6926,2313,3315,8874,2449,9195,4701,11,11,26,85,16,83,94,2,13,48,33,76,22,90,98}

{8637,4072,3953,4436,8268,9064,4285,1525,4784,1110,3737,7999,9884,6086,7093,44,71,81,70,56,97,53,50,99,65,97,31,40,18,21}

(10 rows)

1. Alibaba Cloud RDS PostgreSQL

-- 70 fields

-- 40 INT fields, 10000, 1000, 100 value range.

-- 30 array fields, generated using the above random array generation function (100,000 tags in total, the values are 150 for Popular tags and 20 for Follow tags)

-- PostgreSQL 10

do language plpgsql

$$

declare

sql text := '';

begin

for i in 1..10 loop

sql := sql||'c'||i||' int default random()*10000,';

end loop;

for i in 11..20 loop

sql := sql||'c'||i||' int default random()*1000,';

end loop;

for i in 21..40 loop

sql := sql||'c'||i||' int default random()*100,';

end loop;

for i in 41..70 loop

sql := sql||'c'||i||' int[] default gen_rand_int(100000,150,20),';

end loop;

sql := rtrim(sql, ',');

sql := 'create table test ('||sql||') with (autovacuum_enabled=off, toast.autovacuum_enabled=off, parallel_workers=32)';

execute sql;

end;

$$

;

/*

-- Create partition tables

-- PostgreSQL 10

do language plpgsql

$$

declare

begin

for i in 1..64 loop

execute 'create unlogged table test'||i||'(like test including all) inherits(test) with (autovacuum_enabled=off, toast.autovacuum_enabled=off, parallel_workers=32)';

end loop;

end;

$$

;

*/

2. Alibaba Cloud HybridDB for PostgreSQL

-- Greenplum

create or replace function cb() returns void as

$$

declare

sql text := '';

begin

for i in 1..10 loop

sql := sql||'c'||i||' int default random()*10000,';

end loop;

for i in 11..20 loop

sql := sql||'c'||i||' int default random()*1000,';

end loop;

for i in 21..40 loop

sql := sql||'c'||i||' int default random()*100,';

end loop;

for i in 41..70 loop

sql := sql||'c'||i||' int[] default gen_rand_int(100000,150,20),';

end loop;

sql := rtrim(sql, ',');

sql := 'create table test ('||sql||') with (APPENDONLY=true, ORIENTATION=column, COMPRESSTYPE=zlib, CHECKSUM=false, COMPRESSLEVEL=5) distributed randomly';

execute sql;

end;

$$

language plpgsql strict;

select cb();

1. Write test values

insert into test values (1);

postgres=# select * from test;

-[ RECORD 1 ]-------------------------------------------------------------------------------------------

c1 | 1

c2 | 4880

c3 | 6058

c4 | 1080

c5 | 9862

c6 | 7605

c7 | 9139

c8 | 2541

c9 | 5599

c10 | 9818

c11 | 658

c12 | 882

c13 | 249

c14 | 514

c15 | 504

c16 | 390

c17 | 97

c18 | 422

c19 | 906

c20 | 374

c21 | 25

c22 | 4

c23 | 74

c24 | 87

c25 | 52

c26 | 56

c27 | 83

c28 | 47

c29 | 36

c30 | 18

c31 | 40

c32 | 29

c33 | 67

c34 | 1

c35 | 40

c36 | 66

c37 | 77

c38 | 31

c39 | 91

c40 | 33

c41 | {29495,57121,21227,54417,8477,71587,93375,18150,13788,84006,84,58,133,45,38,62,128,12,133,32}

c42 | {26442,28622,50446,93545,29479,90221,59274,6384,21385,50413,59,76,11,91,8,24,48,148,51,68}

c43 | {82075,89069,83949,70379,18540,9073,11113,3676,17058,99304,38,65,42,113,55,86,98,144,95,130}

c44 | {46129,2464,37175,53362,62667,42021,68922,94306,40090,2482,60,33,137,35,139,15,49,5,20,74}

c45 | {2359,38160,92410,29984,13302,29003,86937,78513,24719,50124,98,106,79,3,36,23,66,139,14,126}

c46 | {95012,48541,5658,86261,71773,97751,95901,3926,806,9065,80,5,71,68,50,91,111,30,58,148}

c47 | {69608,3397,69214,21894,5231,92972,36696,48935,85503,45654,49,121,141,57,100,99,54,94,104,55}

c48 | {71140,22280,39205,18064,67376,71903,78140,41324,91387,16578,60,92,30,14,124,38,3,29,111,131}

c49 | {64638,6116,67292,58532,44051,33617,24049,79587,95692,93341,24,100,23,83,127,124,40,94,36,27}

c50 | {79012,63559,78516,98686,72313,60953,23440,73888,79936,96978,91,67,5,42,4,71,92,40,40,86}

c51 | {19894,41908,23496,35213,96590,7941,17758,23024,70375,41477,61,74,8,29,72,116,120,107,76,90}

c52 | {67889,11450,3921,70683,39257,6576,17377,530,33128,43508,86,80,128,121,132,123,133,9,7,88}

c53 | {46869,45123,7791,51604,64032,55412,28502,43744,26323,79136,5,141,136,11,97,45,20,123,45,70}

c54 | {25178,87116,99137,10293,67656,86921,91847,55986,92314,96275,22,59,62,34,136,8,116,29,73,6}

c55 | {97823,51814,97527,88109,58677,61970,17501,71964,43640,47272,28,103,52,26,118,3,6,106,87,145}

c56 | {66630,71970,35032,7726,94002,25368,12705,71295,44055,61277,112,63,20,108,45,107,51,71,65,116}

c57 | {94158,61360,45962,28245,78426,24621,29838,82264,94976,87266,118,92,89,20,104,80,58,123,36,124}

c58 | {42712,98691,23844,55502,70678,53379,26818,4484,265,69948,123,142,47,42,34,14,78,78,138,71}

c59 | {39169,69661,8193,98104,82656,77075,50890,20869,58510,74821,5,2,110,40,85,66,120,125,73,120}

c60 | {52889,29852,74145,83896,57293,96361,93179,9204,48264,84576,84,131,81,96,128,55,62,54,86,149}

c61 | {10646,60146,190,83857,86676,56463,27596,66435,39404,75669,70,138,8,31,114,94,25,104,108,97}

c62 | {53342,27357,51760,7277,91190,36845,43718,31948,72670,878,47,125,92,47,101,71,131,142,21,40}

c63 | {69836,59842,18662,75056,79995,94400,37418,96359,63166,8834,92,25,54,19,36,41,74,101,89,33}

c64 | {67779,89791,4675,28502,20745,71397,75751,8011,65565,89284,52,53,74,80,16,44,71,71,38,16}

c65 | {56107,85714,26515,91997,98009,49849,18926,46998,16751,77652,103,127,101,110,19,132,67,133,144,15}

c66 | {77465,30127,44793,26290,83019,54798,54960,30160,2072,79839,61,87,98,100,75,95,25,103,15,50}

c67 | {46151,78465,17210,13293,51346,29791,1029,95475,17972,96626,8,143,40,75,32,14,7,115,59,10}

c68 | {55925,79647,64106,21177,46179,13831,84287,62410,82330,94244,143,43,109,19,62,36,63,64,29,90}

c69 | {38828,23945,54898,65279,73454,76174,74600,77610,52260,13930,126,12,140,72,44,59,92,20,3,66}

c70 | {7291,96804,71685,79699,8954,13008,3303,50744,55210,22232,16,141,69,98,89,29,62,50,145,140}

2. Method for dynamically writing data into the corresponding partitions

PostgreSQL 10

create or replace function ff(

int, -- Partition number

int -- Writes the number of records

) returns void as

$$

declare

begin

execute 'insert into test'||$1||' select random()*100 from generate_series(1,'||$2||')';

end;

$$

language plpgsql strict;

3. Method of directly writing data to a single table

PostgreSQL 10

vi test.sql

insert into test select random()*100 from generate_series(1,100);

nohup pgbench -M prepared -n -r -P 5 -f ./test.sql -c 64 -j 64 -t 50000 >/tmp/log 2>&1 &

About 62,000 rows/s, performance depends on the size of each single row.

According to my testing, 320 million pieces of data takes up 977 GB space. If the size of each single row is smaller, the performance will be better.

transaction type: ./test.sql

scaling factor: 1

query mode: prepared

number of clients: 64

number of threads: 64

number of transactions per client: 50000

number of transactions actually processed: 3200000/3200000

latency average = 102.605 ms

latency stddev = 29.016 ms

tps = 622.235371 (including connections establishing)

tps = 622.236656 (excluding connections establishing)

script statistics:

- statement latencies in milliseconds:

102.611 insert into test select random()*100 from generate_series(1,100);

postgres=# \dt+ test

List of relations

Schema | Name | Type | Owner | Size | Description

--------+------+-------+----------+--------+-------------

public | test | table | postgres | 977 GB |

(1 row)

4. Write into Alibaba Cloud HybridDB for PostgreSQL

-- Greenplum

vi test.sql

\timing

insert into test select random()*100 from generate_series(1,320000000);

nohup psql -f ./test.sql >/tmp/log_gpdb 2>&1 &

GPDB column-store, 458 GB after compression.

postgres=# select pg_size_pretty(pg_total_relation_size('test'));

pg_size_pretty

----------------

458 GB

(1 row)

5. You can choose to export data from PG to HDB. For example

date

psql -c "copy test to stdout"|psql -U dege.zzz -p 15432 -d postgres -c "copy test from stdin"

date

6. Most of the time, we import data into HDB or RDS PG from OSS in the production environment.

Refer to: https://www.alibabacloud.com/help/doc-detail/44461.htm

1. The number of qualifying devices and members

1.1 HybridDB for PostgreSQL (column-store) direct computing

Equivalent query:

postgres=# select count(*) from test where c2=1;

count

-------

32233

(1 row)

Time: 581.304 ms

Array (multi-value type) INTERSECT (including ANY) query:

postgres=# select count(*) from test where c41 && array [1,2,1000];

count

----------

40227896

(1 row)

Time: 10227.078 ms

Equivalent AND array (multi-value type) INTERSECT (including ANY) query:

postgres=# select count(*) from test where c40=1 and c41 && array [1,2,1000];

count

--------

401802

(1 row)

Time: 6011.031 ms

Equivalent OR array (multi-value type) INTERSECT (including ANY) query:

postgres=# select count(*) from test where c40=1 or c41 && array [1,2,1000];

count

----------

43025528

(1 row)

Time: 9945.750 ms

Array (multi-value type) CONTAINS (including ALL) query:

postgres=# select count(*) from test where c41 @> array [1,2,1000];

count

-------

123

(1 row)

Time: 10934.176 ms

1.2 Value estimation using statistical information

Improper use of count and offset as well as the optimization of pagination

1.3 Value estimation using HLL

2. Pivoting of qualified data multivalued columns and common columns (TOP N)

2.1 Pivoting of multi-valued columns:

postgres=# select unnest(c41) c41, count(*) from test where c2=1 group by 1 order by 2 desc limit 100;

c41 | count

-----+-------

72 | 2276

132 | 2255

65 | 2250

130 | 2244

84 | 2241

......

41 | 2137

31 | 2137

137 | 2135

(100 rows)

Time: 8058.408 ms

2.2 Pivoting of scalar columns:

postgres=# select c40, count(*) from test where c2=1 group by 1 order by 2 desc limit 100;

c40 | count

-----+-------

40 | 363

5 | 358

2 | 356

93 | 355

67 | 353

18 | 351

99 | 350

......

86 | 288

71 | 287

84 | 277

0 | 160

(100 rows)

Time: 1328.181 ms

3. Select qualifying device IDs and user IDs

Use the cursor to select the target group, and each page is silky smooth.

postgres=# begin;

BEGIN

Time: 0.764 ms

postgres=# declare cur1 cursor for select c1 from test where c2=1;

DECLARE CURSOR

Time: 215.695 ms

postgres=# fetch 10 from cur1;

c1

----

44

50

86

48

27

71

10

83

24

96

(10 rows)

Time: 0.288 ms

postgres=# fetch 10 from cur1;

c1

----

39

16

24

90

25

1

41

33

76

0

(10 rows)

Time: 0.087 ms

Select a group of 30,000 people:

postgres=# explain analyze select c1 from test where c2=1 ;

QUERY PLAN

-----------------------------------------------------------------------------------------

Gather Motion 48:1 (slice1; segments: 48) (cost=0.00..35186640.00 rows=31953 width=4)

Rows out: 32233 rows at destination with 877 ms to end, start offset by 1.299 ms.

-> Append-only Columnar Scan on test (cost=0.00..35186640.00 rows=666 width=4)

Filter: c2 = 1

Rows out: 0 rows (seg0) with 10 ms to end, start offset by 62 ms.

Slice statistics:

(slice0) Executor memory: 347K bytes.

(slice1) Executor memory: 478K bytes avg x 48 workers, 494K bytes max (seg2).

Statement statistics:

Memory used: 128000K bytes

Settings: optimizer=off

Optimizer status: legacy query optimizer

Total runtime: 878.970 ms

(13 rows)

Time: 880.017 ms

Select a group of 58 million people:

postgres=# explain analyze select c1 from test where c41 && array[1,2,100];

QUERY PLAN

-------------------------------------------------------------------------------------------

Gather Motion 48:1 (slice1; segments: 48) (cost=0.00..35186640.00 rows=1600000 width=4)

Rows out: 58538502 rows at destination with 23842 ms to end, start offset by 1.282 ms.

-> Append-only Columnar Scan on test (cost=0.00..35186640.00 rows=33334 width=4)

Filter: c41 && '{1,2,100}'::integer[]

Rows out: 0 rows (seg0) with 7.488 ms to end, start offset by 35 ms.

Slice statistics:

(slice0) Executor memory: 347K bytes.

(slice1) Executor memory: 494K bytes avg x 48 workers, 494K bytes max (seg0).

Statement statistics:

Memory used: 128000K bytes

Settings: optimizer=off

Optimizer status: legacy query optimizer

Total runtime: 23843.827 ms

(13 rows)

Time: 23845.061 ms

Select a group of 60,000 people:

postgres=# explain analyze select c1 from test where c41 @> array[1,2,100];

QUERY PLAN

------------------------------------------------------------------------------------------

Gather Motion 48:1 (slice1; segments: 48) (cost=0.00..35186640.00 rows=320000 width=4)

Rows out: 63866 rows at destination with 8920 ms to end, start offset by 1.375 ms.

-> Append-only Columnar Scan on test (cost=0.00..35186640.00 rows=6667 width=4)

Filter: c41 @> '{1,2,100}'::integer[]

Rows out: 0 rows (seg0) with 28 ms to end, start offset by 13 ms.

Slice statistics:

(slice0) Executor memory: 347K bytes.

(slice1) Executor memory: 490K bytes avg x 48 workers, 494K bytes max (seg0).

Statement statistics:

Memory used: 128000K bytes

Settings: optimizer=off

Optimizer status: legacy query optimizer

Total runtime: 8921.803 ms

(13 rows)

Time: 8922.994 ms

4. Point query according to device ID and user ID

(Create an ID index. Here we use BLOCK NUM for testing, the actual effect is the same)

postgres=# select c1 from test where ctid='(1,1)';

c1

----

49

(1 row)

Time: 0.408 ms

Stress test preparation

postgres=# show block_size;

block_size

------------

32768

(1 row)

postgres=# analyze test;

ANALYZE

postgres=# select relpages from pg_class where relname='test';

relpages

----------

32000002

(1 row)

postgres=# select c1 from test where ctid='(1,10)';

c1

----

28

(1 row)

postgres=# select c1 from test where ctid='(1,11)';

c1

----

(0 rows)

Stress test

vi test.sql

\set x random(1,32000002)

\set y random(1,10)

select * from test where ctid=('('||:x||','||:y||')')::tid;

Single response 1.1 milliseconds

transaction type: ./test.sql

scaling factor: 1

query mode: extended

number of clients: 64

number of threads: 64

duration: 120 s

number of transactions actually processed: 6762499

latency average = 1.136 ms

latency stddev = 6.042 ms

tps = 56349.372585 (including connections establishing)

tps = 56353.497075 (excluding connections establishing)

script statistics:

- statement latencies in milliseconds:

0.001 \set x random(1,32000002)

0.000 \set y random(1,10)

1.135 select * from test where ctid=('('||:x||','||:y||')')::tid;

The data size is 977 GB, which exceeds the memory size. If the entire network data is hot data, the disk read rate reaches 11 GB/s.

1 1 98 0 0 0| 90M 33M| 0 0 | 0 0 |9775 18k

36 21 8 34 0 1| 11G 0 | 239B 446B| 0 0 | 426k 369k

37 21 8 33 0 1| 11G 4096B| 120B 334B| 0 0 | 430k 374k

37 22 7 32 0 2| 11G 660k| 239B 727B| 0 0 | 433k 383k

26 44 5 23 0 1|8313M 0 | 120B 753B| 0 0 | 307k 260k

35 28 7 29 0 1| 11G 172k| 477B 1183B| 0 0 | 390k 328k

36 17 9 37 0 2| 11G 0 | 344B 2385B| 0 0 | 441k 381k

33 26 8 32 0 1| 10G 0 |1449B 1093B| 0 0 | 396k 333k

31 34 7 26 0 1|9585M 0 | 120B 588B| 0 0 | 347k 303k

HybridDB for PostgreSQL specification: 48C SSD.

RDS PostgreSQL specification: 60 cores.

1. The number of qualifying devices and members

1.1 Equivalent query:

0.5 seconds

1.2 Array (multi-value type) INTERSECT (including ANY) query:

10 seconds

1.3 Equivalent AND array (multi-value type) INTERSECT (including ANY) query:

6 seconds

1.4 Equivalent OR array (multi-value type) INTERSECT (including ANY) query:

10 seconds

1.5 Array (multi-value type) CONTAINS (including ALL) query:

10 seconds

2. Pivoting of qualified data multivalued columns and common columns (TOP N)

2.1 Pivoting of multi-valued columns:

8 seconds

2.2 Pivoting of scalar columns:

1.3 seconds

3. Select qualifying device IDs and user IDs

Using cursors, each page has the same efficiency

3.1 Scalar conditions, select a group of 30,000 people:

Total time consumption 0.9 seconds

3.2 Multi-value conditions, select a group of 58.5 million people:

Total time consumption 24 seconds

3.3 Multi-value conditions, select a group of 60,000 people:

Total time consumption 9 seconds

4. Point query according to device ID and user ID

1.1 ms

Alibaba Cloud ApsaraDB RDS for PostgreSQL and HybridDB for PostgreSQL can efficiently meet the multi-dimensional (time, space (GIS), multivalued columns, single-valued columns) data pivoting, group selection, and point query requirements.

PostgreSQL App Massive FEED LOG Real-time Quality Statistics

digoal - January 18, 2021

digoal - June 26, 2019

Alibaba Clouder - July 7, 2020

Alibaba Clouder - March 22, 2019

digoal - December 14, 2018

Alibaba Cloud Community - December 27, 2022

ApsaraDB RDS for PostgreSQL

ApsaraDB RDS for PostgreSQL

An on-demand database hosting service for PostgreSQL with automated monitoring, backup and disaster recovery capabilities

Learn More AnalyticDB for PostgreSQL

AnalyticDB for PostgreSQL

An online MPP warehousing service based on the Greenplum Database open source program

Learn More PolarDB for PostgreSQL

PolarDB for PostgreSQL

Alibaba Cloud PolarDB for PostgreSQL is an in-house relational database service 100% compatible with PostgreSQL and highly compatible with the Oracle syntax.

Learn More ApsaraDB RDS for MySQL

ApsaraDB RDS for MySQL

An on-demand database hosting service for MySQL with automated monitoring, backup and disaster recovery capabilities

Learn MoreMore Posts by digoal