By Digoal

Value estimation is a common tool in statistics. Because of the large data size, it takes a huge amount of resources to obtain accurate values. However, statistical analysis does not require completely accurate data. Therefore, value estimation is often a compromise and is widely used in statistical analysis scenarios.

PostgreSQL is a powerful database that provides a number of methods for estimated value statistics. In PostgreSQL, we can use the HLL plug-in to determine the estimated UV and incremental UV (using the COUNT DISTINCT function). You can also use a count-min sketch top-n extension https://github.com/remzicanaksoy/cms_topn

We can determine the TOP VALUEs (including the TOP elements of an array field) and COUNT of any fields by using the statistical information bar chart. We can use pg_class.reltuples to determine the data record count of an entire table.

We can use the estimated value of EXPLAIN to determine the number of returned records (for example, when determining pagination) or to determine the estimated value of COUNT (*) (simply convert the SQL statement to select 1 from ...).

When determining the number of unique values for multiple fields, we can obtain very accurate estimates by defining custom statistics. When determining the estimated values that meet certain conditions, for example when we are required to list TOP N movie stars of a province, we can take samples first and then compute using the samples.

This article describes how to take data samples and estimate values.

I have previously written about a scenario for pivot analysis of a pan-content website, which involves a large amount of data. The amount of data that needs to be fully scanned is relatively large. Let's see whether the sampling method meets the requirements.

create table tbl (

id int8, -- Sequence

tag1 int[], -- Array

c1 int, -- 1–100

c2 int, -- 1–10000

c3 timestamp -- Timestamp

); Value range $1-$2, taking an array of $3 random values

create or replace function gen_rand_ints(int, int, int) returns int[] as $$

select array(select (random()*($2-$1))::int+$1 from generate_series(1,$3));

$$ language sql strict;

postgres=# select gen_rand_ints(10,25,5);

gen_rand_ints

------------------

{20,19,24,22,21}

(1 row) -- Write a hot spot array of 50 million values

insert into tbl select id, gen_rand_ints(1,1000,10), random()*100, random()*10000, clock_timestamp() from generate_series(1,50000000) t(id);

-- Write non-hot spot arrays of 100 million values

insert into tbl select id, gen_rand_ints(1,1000000,10), random()*100, random()*10000, clock_timestamp() from generate_series(1,100000000) t(id); The data model is as follows

postgres=# select * from tbl limit 10;

id | tag1 | c1 | c2 | c3

----------+-------------------------------------------+----+------+----------------------------

38931521 | {424,448,91,420,382,657,677,60,530,503} | 59 | 6120 | 2017-09-11 14:32:06.610512

38931522 | {66,87,468,207,79,780,307,714,520,149} | 44 | 7848 | 2017-09-11 14:32:06.610522

38931523 | {99,628,798,558,415,74,863,839,522,953} | 26 | 9032 | 2017-09-11 14:32:06.610531

38931524 | {610,935,962,140,438,551,752,503,636,220} | 71 | 7136 | 2017-09-11 14:32:06.61054

38931525 | {998,16,428,518,164,868,303,263,496,102} | 82 | 9102 | 2017-09-11 14:32:06.61055

38931526 | {175,683,749,696,637,8,599,247,942,561} | 39 | 3796 | 2017-09-11 14:32:06.610559

38931527 | {112,138,882,747,356,591,461,355,605,888} | 87 | 7684 | 2017-09-11 14:32:06.610568

38931528 | {756,175,31,252,276,850,162,450,533,910} | 15 | 1691 | 2017-09-11 14:32:06.610578

38931529 | {917,744,416,860,306,801,240,416,937,122} | 16 | 2927 | 2017-09-11 14:32:06.610587

38931530 | {712,623,647,317,511,519,86,267,693,116} | 52 | 9676 | 2017-09-11 14:32:06.610596

(10 rows) Determine the TOP N elements of tag 1 under any conditions.

postgres=# analyze tbl;

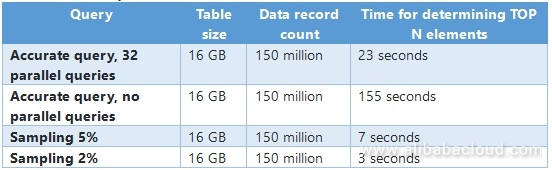

ANALYZE Table size 16 GB.

postgres=# \dt+ tbl

List of relations

Schema | Name | Type | Owner | Size | Description

--------+------+-------+----------+-------+-------------

public | tbl | table | postgres | 16 GB |

(1 row) -- Query time with 32 parallel queries

postgres=# select unnest(tag1) tag1, count(*) from tbl where c1 between 1 and 10 group by 1 order by 2 desc limit 10;

tag1 | count

------+-------

134 | 50935

768 | 50915

663 | 50876

567 | 50821

146 | 50821

332 | 50814

450 | 50807

884 | 50789

58 | 50781

605 | 50774

(10 rows)

Time: 23441.247 ms (00:23.441)

-- Query time without parallel queries

postgres=# select unnest(tag1) tag1, count(*) from tbl where c1 between 1 and 10 group by 1 order by 2 desc limit 10;

tag1 | count

------+-------

134 | 50935

768 | 50915

663 | 50876

567 | 50821

146 | 50821

332 | 50814

450 | 50807

884 | 50789

58 | 50781

605 | 50774

(10 rows)

Time: 154935.686 ms (02:34.936) For sampling methods, refer to the end of this article. PostgreSQL has two built-in common sampling methods, and two built-in extended sampling methods. In total, there are four built-in sampling methods.

Use block-level sampling (currently, parallel sampling is not supported).

postgres=# select unnest(tag1) tag1, (count(*))*20 -- Multiplied by 100/sampling coefficient

from

(select * from tbl TABLESAMPLE system (5)) t

where c1 between 1 and 10 group by 1 order by 2 desc limit 10;

tag1 | ?column?

------+----------

724 | 53380

798 | 52680

24 | 52640

371 | 52480

569 | 52400

531 | 52280

979 | 52160

429 | 52140

980 | 52080

350 | 51920

(10 rows)

-- Sampling 5%, about 7 seconds.

Time: 6887.745 ms (00:06.888)

postgres=# select unnest(tag1) tag1, (count(*))*50 -- Multiplied by 100/sampling coefficient

from

(select * from tbl TABLESAMPLE system (2)) t

where c1 between 1 and 10 group by 1 order by 2 desc limit 10;

tag1 | ?column?

------+----------

324 | 55450

435 | 55150

720 | 55050

943 | 54950

475 | 54750

958 | 54600

13 | 54400

742 | 54300

739 | 54100

301 | 53950

(10 rows)

-- Sampling 2%, about 3 seconds.

Time: 2720.140 ms (00:02.720) A larger number of samples leads to a higher accuracy. The TOP N elements are very accurate when using the sampling method, because the example uses 1000 random values, and the probability of each random value is the same. If it is required to return TOP 1000, then it will be 100% accurate.

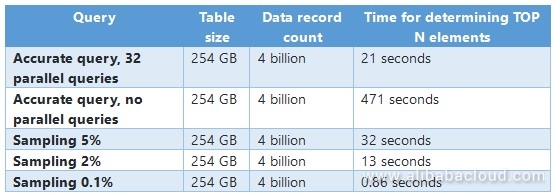

Re-design hot spot data, write 4 billion pieces of test data in total:

Level 1 hot spot data, 1, 500 million

Level 2 hot spot data, 2–4, 500 million

Level 3 hot spot data, 5–10, 500 million

Level 4 hot spot data, 11–30, 500 million

Common data, 1–100000, 2 billion

create table tbl1 (

id int8, -- Sequence

c1 int8, -- Target field

c2 int8, -- 1–100

c3 int8, -- 1–100000

c4 timestamp -- Timestamp

); nohup psql -c "insert into tbl1 select id, 1, random()*100, random()*100000, clock_timestamp() from generate_series(1,500000000) t(id);" >/dev/null 2>&1 &

nohup psql -c "insert into tbl1 select id, random()*(4-2)+2, random()*100, random()*100000, clock_timestamp() from generate_series(1,500000000) t(id);" >/dev/null 2>&1 &

nohup psql -c "insert into tbl1 select id, random()*(10-5)+5, random()*100, random()*100000, clock_timestamp() from generate_series(1,500000000) t(id);" >/dev/null 2>&1 &

nohup psql -c "insert into tbl1 select id, random()*(30-11)+11, random()*100, random()*100000, clock_timestamp() from generate_series(1,500000000) t(id);" >/dev/null 2>&1 &

nohup psql -c "insert into tbl1 select id, random()*100000, random()*100, random()*100000, clock_timestamp() from generate_series(1,2000000000) t(id);" >/dev/null 2>&1 & postgres=# analyze tbl1;

ANALYZE

Time: 502.421 ms

Table size: 254 GB

postgres=# \dt+ tbl1

List of relations

Schema | Name | Type | Owner | Size | Description

--------+------+-------+----------+--------+-------------

public | tbl1 | table | postgres | 254 GB |

(1 row) -- Query time with 32 parallel queries

postgres=# select c1,count(*) from tbl1 where c2 between 1 and 10 group by 1 order by 2 desc limit 30;

c1 | count

----+----------

1 | 49991259

3 | 25006580

2 | 12502559

4 | 12498741

9 | 10004285

6 | 10002597

8 | 9999530

7 | 9999215

5 | 5003219

10 | 4998870

29 | 2636193

18 | 2635457

13 | 2635344

17 | 2634693

26 | 2633965

19 | 2633690

28 | 2633526

14 | 2633512

15 | 2633363

24 | 2633260

20 | 2633014

25 | 2632926

16 | 2632779

22 | 2632508

27 | 2632288

23 | 2632216

21 | 2631443

12 | 2631315

11 | 1318483

30 | 1318451

(30 rows)

Time: 20845.738 ms (00:20.846)

-- Query time without parallel queries

postgres=# select c1,count(*) from tbl1 where c2 between 1 and 10 group by 1 order by 2 desc limit 30;

c1 | count

----+----------

1 | 49991259

3 | 25006580

2 | 12502559

4 | 12498741

9 | 10004285

6 | 10002597

8 | 9999530

7 | 9999215

5 | 5003219

10 | 4998870

29 | 2636193

18 | 2635457

13 | 2635344

17 | 2634693

26 | 2633965

19 | 2633690

28 | 2633526

14 | 2633512

15 | 2633363

24 | 2633260

20 | 2633014

25 | 2632926

16 | 2632779

22 | 2632508

27 | 2632288

23 | 2632216

21 | 2631443

12 | 2631315

11 | 1318483

30 | 1318451

(30 rows)

Time: 471112.827 ms (07:51.113) select c1,(count(*))*20 from -- Multiplied by 100/sampling coefficient

(select * from tbl1 TABLESAMPLE system (5)) t

where c2 between 1 and 10 group by 1 order by 2 desc limit 30;

c1 | ?column?

----+----------

1 | 50068840

3 | 25108820

2 | 12558680

4 | 12513080

7 | 10009300

9 | 10006260

6 | 10005400

8 | 9987220

5 | 5008280

10 | 5007980

17 | 2652940

16 | 2648640

25 | 2646800

28 | 2646600

15 | 2642480

20 | 2642220

14 | 2641620

26 | 2640500

23 | 2639420

29 | 2637740

22 | 2637320

13 | 2636900

19 | 2636100

18 | 2635120

24 | 2634440

12 | 2631480

27 | 2629880

21 | 2624940

11 | 1330140

30 | 1316480

(30 rows)

Time: 31884.725 ms (00:31.885)

-- Sampling 5%, about 32 seconds.

select c1,(count(*))*50 from -- Multiplied by 100/sampling coefficient

(select * from tbl1 TABLESAMPLE system (2)) t

where c2 between 1 and 10 group by 1 order by 2 desc limit 30;

c1 | ?column?

----+----------

1 | 50173200

3 | 24993550

2 | 12487100

4 | 12474100

6 | 9998250

8 | 9980450

7 | 9973950

9 | 9960450

10 | 4999050

5 | 4995000

29 | 2642700

28 | 2640900

16 | 2640300

26 | 2630250

24 | 2627500

23 | 2623700

19 | 2622350

27 | 2622000

18 | 2621200

12 | 2619450

20 | 2616200

17 | 2616050

21 | 2615800

15 | 2613200

22 | 2612200

14 | 2607700

13 | 2605900

25 | 2604150

30 | 1312300

11 | 1311950

(30 rows)

Time: 12942.455 ms (00:12.942)

-- Sampling 2%, about 13 seconds.

postgres=# select c1,(count(*))*1000 from -- Multiplied by 100/sampling coefficient

(select * from tbl1 TABLESAMPLE system (0.1)) t

where c2 between 1 and 10 group by 1 order by 2 desc limit 30;

c1 | ?column?

----+----------

1 | 48077000

3 | 25061000

2 | 12762000

4 | 12262000

8 | 9851000

6 | 9789000

7 | 9718000

9 | 9654000

5 | 4971000

10 | 4885000

18 | 2731000

28 | 2727000

29 | 2710000

23 | 2697000

15 | 2687000

27 | 2681000

22 | 2672000

17 | 2672000

25 | 2670000

19 | 2637000

20 | 2632000

12 | 2628000

14 | 2628000

21 | 2622000

26 | 2618000

13 | 2601000

24 | 2522000

16 | 2513000

11 | 1406000

30 | 1301000

(30 rows)

Time: 863.604 ms

-- Sampling 0.1%, about 0.86 seconds. When the sampling one thousandth of the data (only about 254 MB of data is scanned), it takes less than one second to obtain the TOP 30 with high accuracy.

Using this feature in Greenplum will be amazing, and it is possible to complete data pivoting for one trillion pieces of data from any dimensions within seconds.

Determine the array element TOP N

Determine scalar type TOP N

Although parallel computing is also very fast, it requires more CPU and I/O resources, which directly affect the degree of parallelism. Unless there are enough resources, we still recommend that you use value estimation.

Since the current value estimation methods do not support multi-core parallelism, the processing speed is about 254 MB per second. To respond within 1 second, the sampling rate should be set to 0.1% for a 254 GB table, and 0.025% for a 1 TB table. This means that value estimation from any dimensions can be achieved within seconds for TB level tables.

https://www.postgresql.org/docs/9.6/static/sql-select.html

TABLESAMPLE sampling_method ( argument [, ...] ) [ REPEATABLE ( seed ) ] A TABLESAMPLE clause after a table_name indicates that the specified sampling_method should be used to retrieve a subset of the rows in that table. This sampling precedes the application of any other filters such as WHERE clauses. The standard PostgreSQL distribution includes two sampling methods, BERNOULLI and SYSTEM, and other sampling methods can be installed in the database via extensions.

The BERNOULLI and SYSTEM sampling methods each accept a single argument which is the fraction of the table to sample, expressed as a percentage between 0 and 100. This argument can be any real-valued expression. (Other sampling methods might accept more or different arguments.) These two methods each return a randomly-chosen sample of the table that will contain approximately the specified percentage of the table's rows. The BERNOULLI method scans the whole table and selects or ignores individual rows independently with the specified probability. The SYSTEM method does block-level sampling with each block having the specified chance of being selected; all rows in each selected block are returned. The SYSTEM method is significantly faster than the BERNOULLI method when small sampling percentages are specified, but it may return a less-random sample of the table as a result of clustering effects.

The optional REPEATABLE clause specifies a seed number or expression to use for generating random numbers within the sampling method. The seed value can be any non-null floating-point value. Two queries that specify the same seed and argument values will select the same sample of the table, if the table has not been changed meanwhile. But different seed values will usually produce different samples. If REPEATABLE is not given then a new random sample is selected for each query, based upon a system-generated seed. Note that some add-on sampling methods do not accept REPEATABLE, and will always produce new samples on each use.

https://www.postgresql.org/docs/9.6/static/tsm-system-rows.html

CREATE EXTENSION tsm_system_rows;

SELECT * FROM my_table TABLESAMPLE SYSTEM_ROWS(100);

https://www.postgresql.org/docs/9.6/static/tsm-system-time.html

CREATE EXTENSION tsm_system_time;

SELECT * FROM my_table TABLESAMPLE SYSTEM_TIME(1000);

Note 1: PostgreSQL also supports many other value estimation methods.

Note 2: If sampling supports parallelism, it could estimate larger tables with greater accuracy. For example, 1% is a good sampling rate for value estimation, which means 10 GB for a 1 TB table. If parallelism is supported, it could complete the entire sampling and value estimation process within 3 seconds.

Supporting 200 Billion Data Records in a Single RDS PostgreSQL Instance

Performance of PostgreSQL Multi-Field Random Combination Searches

Alibaba Clouder - October 15, 2018

ApsaraDB - August 1, 2022

Alibaba Clouder - December 12, 2017

digoal - December 21, 2020

digoal - June 26, 2019

Alibaba Clouder - July 7, 2020

ApsaraDB RDS for PostgreSQL

ApsaraDB RDS for PostgreSQL

An on-demand database hosting service for PostgreSQL with automated monitoring, backup and disaster recovery capabilities

Learn More PolarDB for PostgreSQL

PolarDB for PostgreSQL

Alibaba Cloud PolarDB for PostgreSQL is an in-house relational database service 100% compatible with PostgreSQL and highly compatible with the Oracle syntax.

Learn More ApsaraDB RDS for MySQL

ApsaraDB RDS for MySQL

An on-demand database hosting service for MySQL with automated monitoring, backup and disaster recovery capabilities

Learn More ApsaraDB RDS for MariaDB

ApsaraDB RDS for MariaDB

ApsaraDB RDS for MariaDB supports multiple storage engines, including MySQL InnoDB to meet different user requirements.

Learn MoreMore Posts by digoal

Raja_KT March 15, 2019 at 1:56 pm

Good one. It will be more fascinating if you can share the infra details.