By Digoal

If you have watched the web series, Candle in the Tomb — Tomb of the Dragon Deception, you may have some questions. What is Hu Bayi's "16-Volume Yin and Yang Feng Shui Secrets"? What is "Tomb of the Dragon Deception"?

Let’s take a look at the following information from the internet.

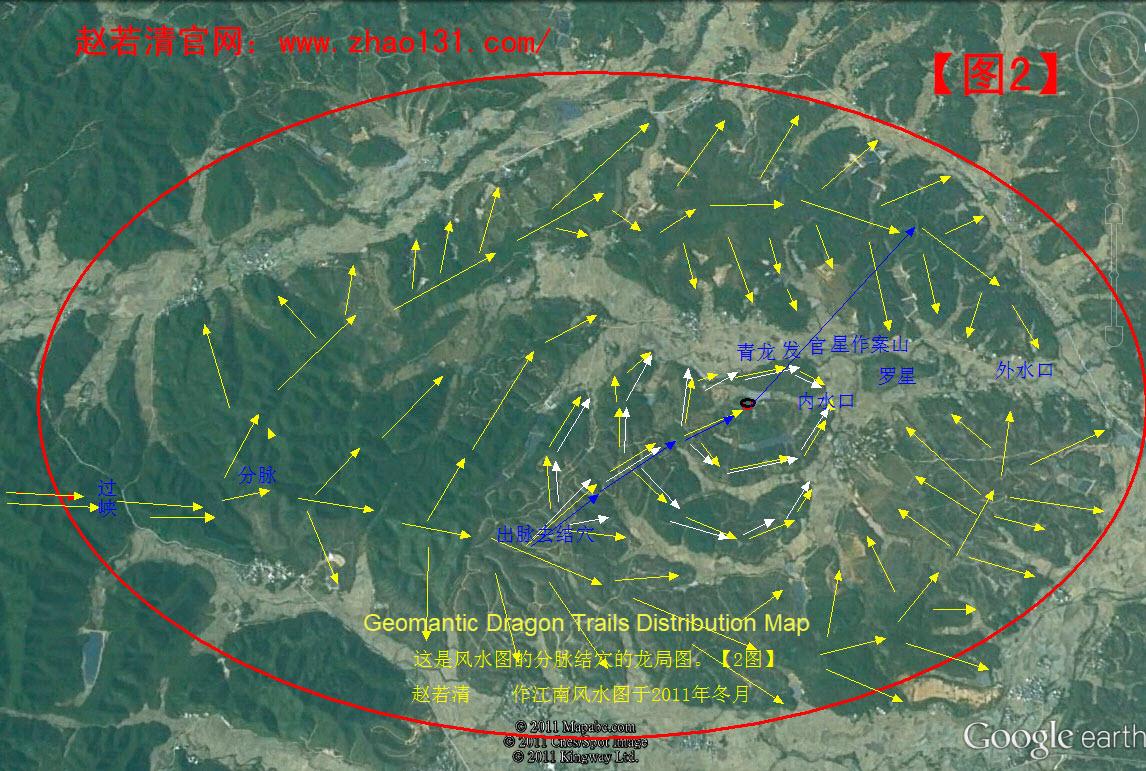

The Chinese name of the movie "Tomb of the Dragon Deception" is used in Feng Shui. Also known as Chinese geomancy, Feng Shui is a traditional practice dating back to ancient China, which claims to use energy forces to harmonize individuals with their environment. The Chinese name of "Tomb of the Dragon Deception" literally means to find the geomantic dragon trail (treasure land) and the auspicious site.

Ancient Chinese believed that it takes three years to find a geomantic treasure land and ten years to find the auspicious site where most of the spirit and energy in this land gather. In other words, it takes a long time to learn how to find a geomantic treasure land. But it is even more challenging to locate the exact auspicious site, which may take ten years. However, if you go wrong, you may not be able to find the auspicious place even in 100 years, and all your efforts will go in vain. It is never easy for beginners, and even some experienced experts, to find the exact site.

Instead of finding a geomantic auspicious site, we may be driven to find something else in real life. For example, finding where potential customers gather.

We must believe in the power of science. Nowadays, big data and AI are so powerful that we can make predictions based on massive amounts of data.

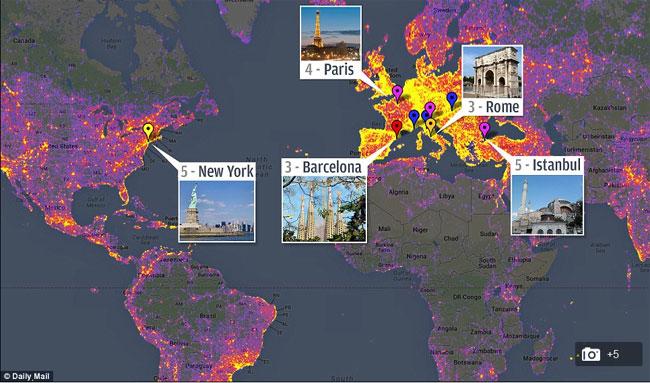

We have a large variety of data, such as human activity, car activity, and sensor data. We can analyze this data to find the most suitable places to live and do business.

Let's get back to the theme of this article: spatial aggregation analysis with PostgreSQL. It discusses two new features of PostGIS 2.3 about spatial data aggregation analysis.

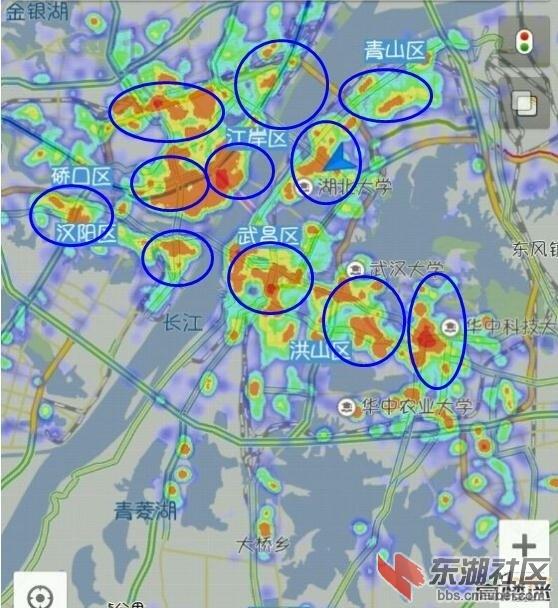

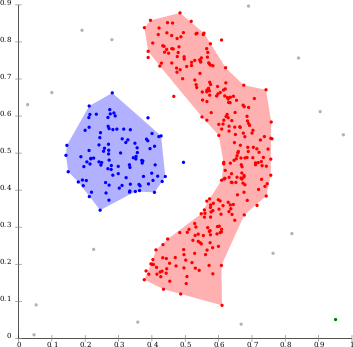

For example, with massive amounts of data, including data points about human activities, we can get a heatmap of areas where people gather during a specified period through spatial aggregation analysis.

Here is a story about the "auspicious turtles' tomb" in "Candle in the Tomb":

A man looked into the sea and suddenly saw a small black "island" moving slowly in the water. He observed it carefully, and it turned out to be a dozen old turtles swimming in the sea, with a giant dead turtle on their backs. These old turtles swam into a cave under a cliff, placed the giant turtle corpse in a safe place, and then swam back to the sea one by one.

The man who saw this special funeral knows how to locate the auspicious site. He immediately knew that these spiritual creatures selected the cave to rest themselves after they were dead. The cave showed intense auspicious energy and was a perfect tomb for his parents, who died a few days ago.

As the turtles did, he placed his parents' bodies inside the cave without covering them with a coffin. After that, the man quickly became rich and powerful. The turtles' cave then became a private tomb of his house. Hundreds of years later, the cave’s spirit and energy were depleted, and it collapsed. A lot of bodies inside the cave were exposed to the sun and wind. All these bodies were covered with birds' feathers and dragons' scales. A day and night later, these bodies disappeared in the wind.

Then what is the relationship between this story and data analysis? I simply want to show you how difficult it is for the ancient people to find an "auspicious turtles' tomb”. Now that we have the data, will it be easier?

Assume that our data covers the following dimensions:

1) Time

2) User location

3) User attributes (such as income, industry, and age)

Aggregate and analyze spatial data by user attributes and time to generate user heatmaps in different analytic dimensions. This is similar to what Feng Shui masters do.

Then how to analyze the aggregation of spatial data?

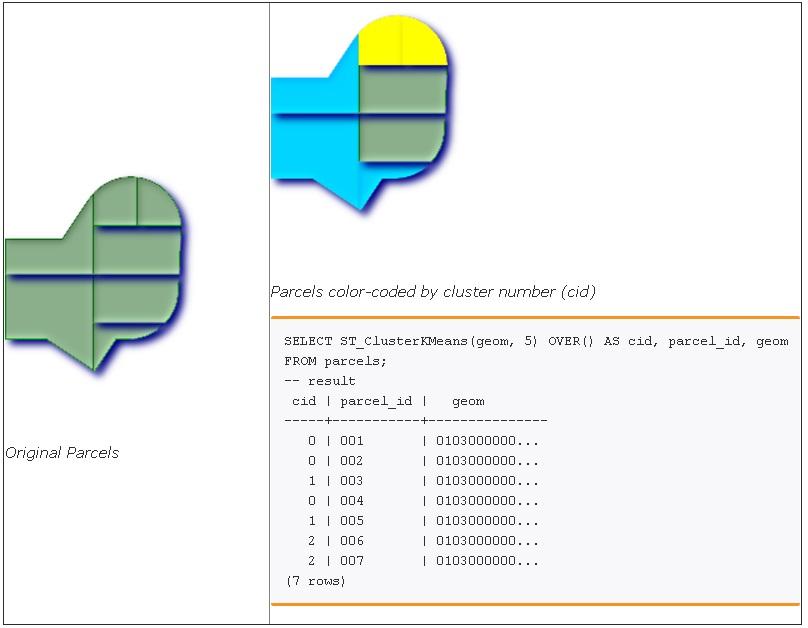

To do this, PostGIS 2.3 provides two new window functions:

1) ST_ClusterDBSCAN, a spatial data aggregation analysis function based on density-based spatial clustering of applications with noise (DBSCAN) algorithm:

2) ST_ClusterKMeans, a spatial data aggregation analysis function based on the K-means algorithm:

Isn't it interesting?

1)

-- Partitioning parcel clusters by type

SELECT ST_ClusterKMeans(geom,3) over (PARTITION BY type) AS cid, parcel_id, type

FROM parcels;

-- result

cid | parcel_id | type

-----+-----------+-------------

1 | 005 | commercial

1 | 003 | commercial

2 | 007 | commercial

0 | 001 | commercial

1 | 004 | residential

0 | 002 | residential

2 | 006 | residential

(7 rows) 2)

SELECT name, ST_ClusterDBSCAN(geom, eps := 50, minpoints := 2) over () AS cid

FROM boston_polys

WHERE name > '' AND building > ''

AND ST_DWithin(geom,

ST_Transform(

ST_GeomFromText('POINT(-71.04054 42.35141)', 4326), 26986),

500); These two window functions generate only the aggregation ID of each record. Aggregate data records by their aggregation IDs into geometric objects (for example, point sets), and then aggregate point sets into planes.

A lot of customer traffic is not always a good thing. Rivers do not always bring fortune to people who live alongside them.

We need to analyze customer traffic from multiple dimensions, the stay time, and the increased number and decreased number of customers.

For example, a subway station has massive customer traffic, but people stay for a short time. It is not wise to open a supermarket in it. However, convenience stores and soft drink shops are popular and generate a lot of revenue.

Decide by using StreamCompute in combination with PostGIS.

1) Create Table 1 (a polygonal table for an administrative region, community, or neighborhood) through spatial data aggregation.

2) Create streams and JOIN Table 1.

3) Create a stream view and group the streams by "polygon and time window" (for example, by 10 minutes, 30 minutes, and 1 hour). Collect statistics of the increased number, decreased number, and people’s sfinal number by polygon and time window.

4) Write the real-time location data of people into the streams.

For more information, see the PipelineDB document. PipelineDB is a plug-in that you can use together with PostgreSQL 10. To use it, you must install and configure it with PostgreSQL 10.

These window functions enable you to create a pivot chart based on people and the monitored objects in multiple dimensions such as time, space, and object attributes.

In addition to these two window functions, PostgreSQL provides the MADlib machine learning library. Call this library using SQL, PivotalR, and Python APIs for data pivoting and easy programming based on databases’ analysis capabilities. Use massively parallel processing (MPP) (for large amounts of data) and multi-core parallel computation, vector computation, and just-in-time (JIT) compilation in PostgreSQL (for middle-volume data) to significantly improve the computation capability of your database.

Technologies Used in Pilotless Driving – Part 2: PostGIS Point Cloud Application

ApsaraDB - October 8, 2024

Alibaba Clouder - February 15, 2018

ApsaraDB - October 22, 2024

digoal - May 16, 2019

digoal - December 18, 2020

digoal - January 21, 2021

PolarDB for PostgreSQL

PolarDB for PostgreSQL

Alibaba Cloud PolarDB for PostgreSQL is an in-house relational database service 100% compatible with PostgreSQL and highly compatible with the Oracle syntax.

Learn More AnalyticDB for PostgreSQL

AnalyticDB for PostgreSQL

An online MPP warehousing service based on the Greenplum Database open source program

Learn More ApsaraDB RDS for PostgreSQL

ApsaraDB RDS for PostgreSQL

An on-demand database hosting service for PostgreSQL with automated monitoring, backup and disaster recovery capabilities

Learn More Database for FinTech Solution

Database for FinTech Solution

Leverage cloud-native database solutions dedicated for FinTech.

Learn MoreMore Posts by digoal