By Digoal

The PipelineDB cluster is a powerful distributed stream computing engine, made popular by its scalability, high availability, load balancing, read and write optimization, and automatic data merging capabilities. In this article, we'll introduce the PipelineDB cluster architecture, and discuss how it optimizes its network and maintains high availability for read/write operations amid shard failures.

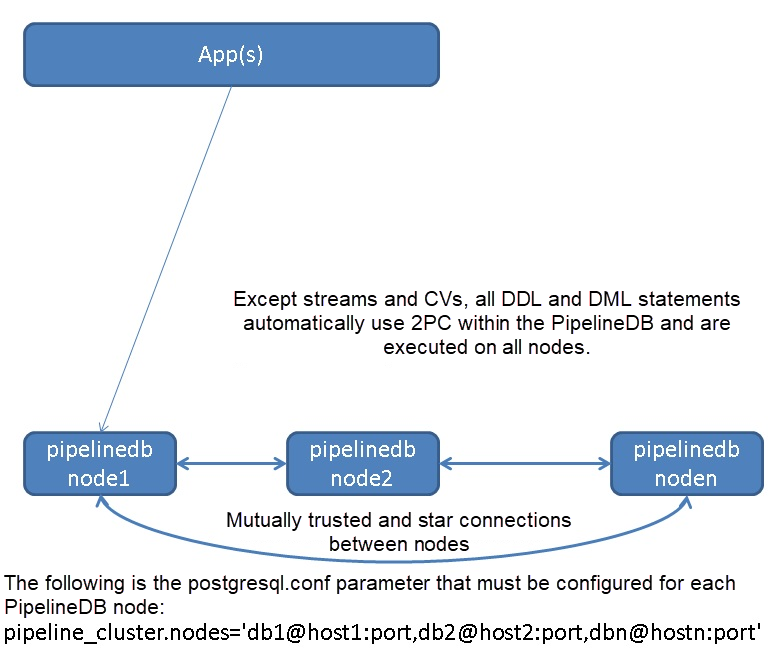

To achieve a master-less architecture, all nodes in a PipelineDB cluster trust one another and communicate directly. This lets them automatically distribute intermediate Continuous View (CV) aggregation results, and apply DML and DDL statements on ordinary data (anything other than streams and CVs) to every node using two-phase commit (2PC).

Thanks to the master-less architecture, clients can connect to any PipelineDB node.

Except for CVs, all other DDL and DML actions use 2PC and are executed on all nodes. This ensures that every node holds a complete copy of the non-CV, non-stream data.

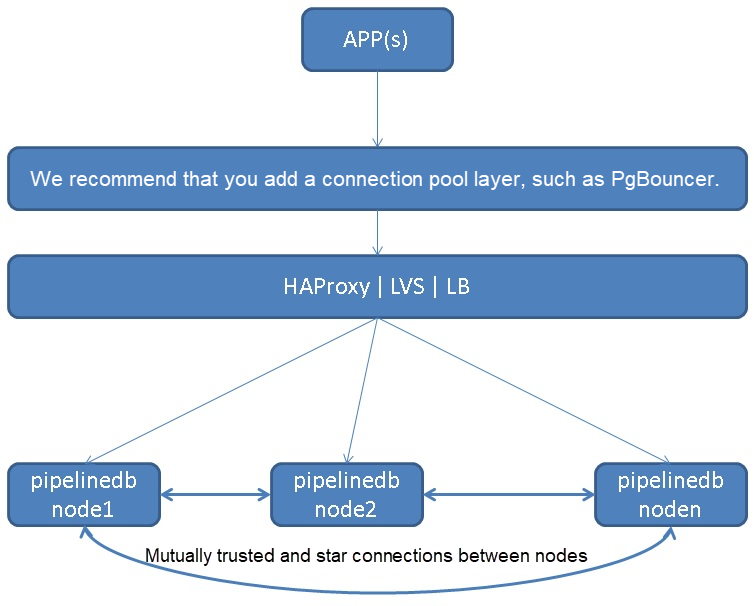

We recommend that applications connect through a connection pool to load balancing software such as HAProxy or LVS, which then forwards connections to the PipelineDB nodes, to achieve maximum throughput. Many drivers, including JDBC and libpq, also support load balancing configurations.

You can use PgBouncer to implement the connection pool. PgBouncer is written in C and is an efficient PostgreSQL connection pooler.

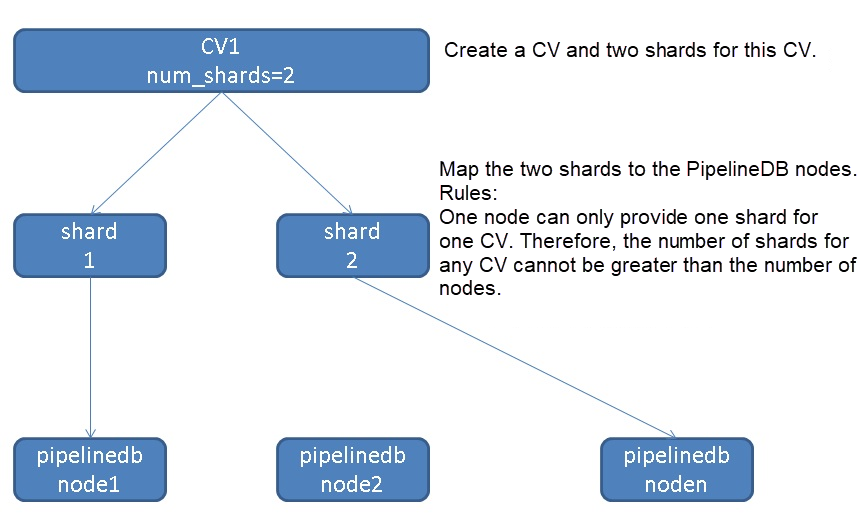

A node refers to a PipelineDB data node. A shard is a logical concept. When creating a CV, you must specify the number of shards to be enabled for this CV.

Note that each node is responsible for only one shard of the same CV to maximize horizontal scaling efficiency. So, what is the maximum number of shards that can be created for a CV? The answer is that the number of shards created for a CV cannot exceed the number of nodes.

You can use the following two methods to define the number of shards for a CV:

    CREATE CONTINUOUS VIEW v WITH (num_shards=8) AS
    SELECT x::integer, COUNT(*) FROM stream GROUP BY x;

    CREATE CONTINUOUS VIEW v WITH (shard_factor=50) AS
    SELECT x::integer, COUNT(*) FROM stream GROUP BY x;

When using shard_factor, you actually specify a percentage value ranging from 1 to 100. Therefore, if you set shard_factor to 50 for a 16-node cluster, eight shards are created.
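The arithmetic behind shard_factor, together with the rule that a CV cannot have more shards than nodes, can be sketched in a few lines of Python. This is only an illustrative model: the function names are hypothetical, and rounding fractional results up is an assumption, since the text only gives the 16-node, 50 percent case.

```python
def shards_from_factor(num_nodes: int, shard_factor: int) -> int:
    """Translate a shard_factor percentage into a shard count."""
    if not 1 <= shard_factor <= 100:
        raise ValueError("shard_factor must be between 1 and 100")
    # Assumption: fractional results round up, so a CV always gets at least one shard.
    return (num_nodes * shard_factor + 99) // 100


def validate_num_shards(num_nodes: int, num_shards: int) -> int:
    """Enforce the rule that a CV cannot have more shards than nodes."""
    if not 1 <= num_shards <= num_nodes:
        raise ValueError("num_shards must be between 1 and the number of nodes")
    return num_shards


print(shards_from_factor(16, 50))  # 8, matching the example in the text
```

Because shard_factor is capped at 100, the resulting shard count can never exceed the number of nodes.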

When you create a CV, metadata is also generated to indicate the location of the CV and sharding policy (read optimization or write optimization). The metadata structure is as follows:

    \d pipeline_cluster.shards
      View "pipeline_cluster.shards"
         Column      |   Type   | Modifiers
    -----------------+----------+-----------
     shard           | text     |
     owner           | text     |
     continuous_view | text     |
     shard_id        | smallint |
     shard_type      | text     |
     sharding_policy | text     |
     is_local        | boolean  |
     balanced        | boolean  |
     reachable       | boolean  |

When creating a CV, you can use sharding_policy to specify the data routing policy.

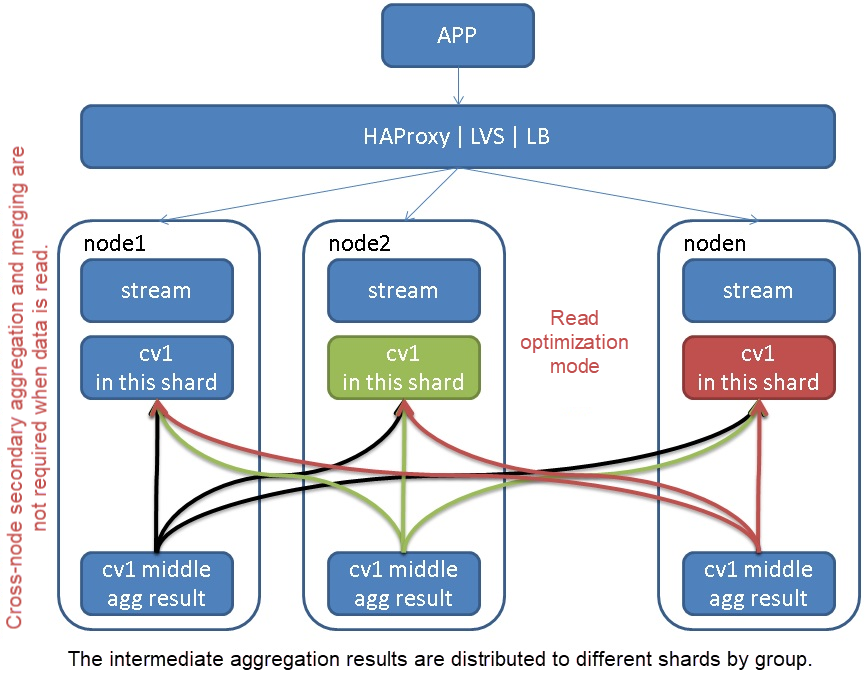

In read optimization mode (read_optimized), all data in the same aggregation group of a CV is routed to a single node, so no second merge is needed at read time. However, writing may involve redistributing data between nodes. This is handled by the PipelineDB shard nodes and is transparent to the application, but the extra network hop reduces write performance.

Note that the data distributed on the network is the aggregated data, that is, the aggregation results. The data is distributed by group to the corresponding shard.

What this means is that only the aggregate result of incoming rows actually needs to be routed. This is designed to both minimize network overhead and distribute work.

    INSERT INTO stream (x) VALUES (0), (0), (0), (0), (0);

Assuming that the worker process reads these five rows all at once fast enough, only the aggregate row (0, 5) would be routed to the grouping's designated node, and subsequently combined with on-disk data as usual.
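The idea of aggregating locally and shipping only the per-group result can be modeled with a short Python sketch. It is a toy illustration, not PipelineDB's implementation: owner_shard is a hypothetical routing rule (a stable hash of the group key modulo the shard count), and the real routing function may differ.

```python
import zlib
from collections import Counter


def owner_shard(group_key, num_shards):
    """Hypothetical routing rule: stable hash of the group key modulo the shard count."""
    return zlib.crc32(repr(group_key).encode()) % num_shards


def preaggregate_and_route(rows, num_shards):
    """Aggregate a batch locally, then route one (group, count) row per group."""
    partials = Counter(rows)  # local COUNT(*) ... GROUP BY x over the batch
    routed = {}
    for group, count in partials.items():
        routed.setdefault(owner_shard(group, num_shards), []).append((group, count))
    return routed


# Five input rows for x = 0 collapse into a single aggregate row before routing.
routed = preaggregate_and_route([0, 0, 0, 0, 0], num_shards=4)
print(routed)
```

However many rows a batch contains for a group, only one aggregate row per group crosses the network.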

    CREATE CONTINUOUS VIEW v0 WITH (sharding_policy='read_optimized') AS
    SELECT x::integer, COUNT(*) FROM stream GROUP BY x;

In write optimization mode (write_optimized), by contrast, each shard maintains its own copy of the CV's groups, so data for the same group may appear on multiple shards. This mode delivers the maximum write throughput.

Partial aggregate results need to be sent over the network only when the local node does not hold any shard of the target CV. It is worth checking the code to confirm how such a batch is distributed: whether it is spread across all shards at random, or sent in its entirety to a single randomly selected shard.

Routing is then only necessary if a node that produces a partial aggregate result for a given group does not have any shards for the group's continuous view.
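A minimal Python sketch of this decision follows. The function name and node labels are hypothetical, and picking one shard-holding node at random when the local node has no shard is an assumption made for illustration only; the text does not confirm how the target is chosen.

```python
import random


def choose_target_node(local_node, shard_nodes, rng=random):
    """Pick the node that stores a partial aggregate under write_optimized."""
    if local_node in shard_nodes:
        # The local node holds a shard of the CV: store locally, no network hop.
        return local_node
    # Assumption: otherwise pick one shard-holding node at random.
    return rng.choice(sorted(shard_nodes))


print(choose_target_node("node1", {"node1", "node2"}))  # node1
```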

    CREATE CONTINUOUS VIEW v1 WITH (sharding_policy='write_optimized') AS
    SELECT x::integer, COUNT(*) FROM stream GROUP BY x;

In write optimization mode, the partial results held by different shards must be merged at read time to produce complete results. PipelineDB must therefore be able to merge every aggregate type used in CVs, including common aggregates (such as count, avg, and sum) and probabilistic ones (such as CMS-TOP and HLL). Fortunately, all of these probabilistic types currently support unions of values of the same type.

    CREATE CONTINUOUS VIEW v WITH (sharding_policy='write_optimized', shards=16) AS
    SELECT x::integer, AVG(y::integer) FROM stream GROUP BY x;

Since it uses a write_optimized grouping policy, multiple local copies for each grouped average may exist. At read time, these groups would be combined with no loss of information, producing a finalized result with exactly one row per group. Please refer to the official documentation to learn more: http://docs.pipelinedb.com/aggregates.html#combine
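To see why per-shard averages can be combined without losing information, consider the following Python sketch. It assumes each shard keeps a partial state of (sum, count) per group rather than the finished average. That state layout and the function name are illustrative assumptions, not PipelineDB's actual storage format.

```python
from collections import defaultdict


def combine_avg(partials_per_shard):
    """Merge per-shard {group: (sum, count)} states into one average per group."""
    totals = defaultdict(lambda: [0, 0])
    for shard in partials_per_shard:
        for group, (partial_sum, partial_count) in shard.items():
            totals[group][0] += partial_sum
            totals[group][1] += partial_count
    return {group: s / c for group, (s, c) in totals.items()}


shard_a = {1: (10, 1), 2: (9, 3)}   # group 1: one row summing to 10
shard_b = {1: (20, 3)}              # group 1: three rows summing to 20
print(combine_avg([shard_a, shard_b]))  # {1: 7.5, 2: 3.0}
```

Averaging the two finished averages for group 1 (10 and about 6.67) would give roughly 8.33, which is wrong. Merging the sums and counts first yields the exact value 7.5, with exactly one row per group.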

PipelineDB provides a "per-shard replication" feature to allow for high availability in case of node failure or downtime. Please refer to the official documentation to learn more: http://enterprise.pipelinedb.com/docs/high-availability.html

Within a CV, PostgreSQL asynchronous logical replication (based on logical decoding) is used to maintain multiple replicas of the CV results. When creating a CV, you can use num_replicas to specify the number of replicas for each shard.

Note that replication is asynchronous. When you create a CV, "num_shards = 3" creates three primary shards, and "num_replicas = 2" gives each primary shard two replicas.

    CREATE CONTINUOUS VIEW v WITH (num_shards=3, num_replicas=2) AS
    SELECT x::integer, COUNT(*) FROM stream GROUP BY x;

If you do not specify num_replicas when creating a CV, the value of the pipeline_cluster.num_replicas parameter is used instead.
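The relationship between the two options can be made concrete with a small Python model. The function names are hypothetical, and the dictionary simply stands in for the WITH (...) options of a CV.

```python
def effective_num_replicas(cv_options, cluster_default):
    """Use the CV's num_replicas if given, else the pipeline_cluster.num_replicas value."""
    return cv_options.get("num_replicas", cluster_default)


def shard_copies(num_shards, num_replicas):
    """Count primary shards, replica shards, and total copies for one CV."""
    return {
        "primaries": num_shards,
        "replicas": num_shards * num_replicas,
        "total": num_shards * (1 + num_replicas),
    }


print(shard_copies(3, effective_num_replicas({"num_shards": 3, "num_replicas": 2}, 0)))
# {'primaries': 3, 'replicas': 6, 'total': 9}
```

With num_shards=3 and num_replicas=2, the CV therefore has three primary shards and six replica shards, nine copies in total.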

When multiple replicas are configured, read load balancing can be implemented among shards:

Read load balancing: If you leave pipeline_cluster.primary_only at its default value FALSE (indicating that reading replica shards is allowed), replica shards are included as read load balancing targets. For example, if a primary shard has three replica shards, the read load can be balanced among the four copies. Note that PipelineDB replicas use asynchronous logical replication, so they may lag behind the primary. Read load balancing may therefore return inconsistent data.

1. Read: As long as you set pipeline_cluster.primary_only to FALSE and num_replicas is greater than or equal to 1, when the node where the primary shard is located fails, the system reads data on the replica shard.

High availability of the read operation depends on the number of replicas. For example, with num_replicas = 3, each shard has four copies (one primary and three replicas), so reads of that shard are not affected even if three of those copies fail.

To achieve high availability of the read operation, you must set pipeline_cluster.primary_only to FALSE.

2. Read: When a primary shard and all its corresponding replica shards are unavailable, the shard is truly unavailable, and its data cannot be read.

However, you can skip the unavailable shard by setting pipeline_cluster.skip_unavailable to TRUE. This means that part of data can be returned.

3. Write: When a primary shard is unavailable, data is written to other available primary shards. The intermediate results of this aggregation are discarded only when all primary shards are unavailable.

High availability of the write operation depends on the number of primary shards. For example, with num_shards = 3, writes are not affected and no data is lost even if two primary shards fail, that is, (num_shards - 1) = 2.

When all primary shards are unavailable but the cluster still has available nodes (which is possible when the num_shards set for the CV is smaller than the number of nodes in the PipelineDB cluster), those nodes continue to compute data, but the results are discarded because no primary shard is available.

4. Write: If the CV adopts the read optimization mode and a primary shard fails, the data can still be routed to other primary shards. However, this disrupts the group-to-shard distribution that read optimization mode relies on, leaving the CV unbalanced. If you read an unbalanced CV without performing COMBINE, the results are inconsistent. The discussion of rebalancing later in this article describes how to address this problem.
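The read and write availability rules in items 1 to 3 can be summarized as a small Python model. This is a sketch of the rules as described in this article, not PipelineDB code: the function names and the dictionary layout are hypothetical, while primary_only and skip_unavailable mirror the pipeline_cluster parameters of the same names.

```python
class ShardUnavailable(Exception):
    """Raised when a shard has no readable copy and skipping is disabled."""


def shard_readable(primary_up, replicas_up, primary_only=False):
    """A shard is readable via its primary, or via a replica unless primary_only is set."""
    if primary_up:
        return True
    return not primary_only and any(replicas_up)


def readable_shards(shards, primary_only=False, skip_unavailable=False):
    """Return the shard ids a query can read; shards maps id -> (primary_up, replicas_up)."""
    result = []
    for shard_id, (primary_up, replicas_up) in shards.items():
        if shard_readable(primary_up, replicas_up, primary_only):
            result.append(shard_id)
        elif not skip_unavailable:
            raise ShardUnavailable(f"shard {shard_id} has no readable copy")
    return result


def writes_accepted(primaries_up):
    """Writes continue as long as at least one primary shard is available."""
    return any(primaries_up)


cluster = {
    0: (True, [True, True]),
    1: (False, [True, False]),    # primary down, one replica still up
    2: (False, [False, False]),   # primary and all replicas down
}
print(readable_shards(cluster, skip_unavailable=True))  # [0, 1]
```

In this example, shard 1 stays readable through its surviving replica, while shard 2 is skipped only because skip_unavailable is enabled. Without it, the query fails.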

When the node where the primary shard is located fails and cannot be recovered, data may be lost. Replica shards use asynchronous logical replication, so they cannot guarantee that no data is lost. In addition, replicas are intended to improve read load balancing and are not designed as failover nodes: replication is one-way, from primary to replica.

You can implement high availability and backup policies for the PostgreSQL instance where a node is located. To achieve zero data loss, you can use multiple replicas at the instance level or shared storage with multiple replicas.

No data will be lost as long as the data on the primary node is not lost.

During reading, how can you skip unavailable shards? That is, when the primary shard of a shard and all its replica shards are unavailable, how can you obtain part of the results? When querying, you can skip unavailable shards and obtain incomplete CV results by setting the following parameter to "true":

    pipeline_cluster.skip_unavailable = true

In read optimization mode (read_optimized), when a primary shard is abnormal, data that should be distributed to the CV group of this primary shard may be written to another primary shard to ensure normal writing and data integrity.

However, in read optimization mode, data is not combined when being read from a CV. Therefore, this part of the data exists but cannot be read.

If the balanced column of the pipeline_cluster.shards metadata view is FALSE for a shard, the data of that shard must be rebalanced. In read optimization mode, this rebalance currently has to be performed manually.

In write optimization mode, pipeline_cluster.shards.balanced is always TRUE, because no rebalance is needed in this mode and the agg columns are combined during query.

In the future, the PipelineDB cluster will automatically check CVs in read optimization mode in the background. If the check indicates that a rebalance is needed, data will be rebalanced automatically to ensure globally consistent query results. This problem occurs only occasionally, and no perfect solution is available yet.

The following provides a core optimization recommendation:

In read optimization mode, you can first check whether rebalance is needed. If yes, use COMBINE for query and notify the rebalance task to enable asynchronous rebalance.
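This recommendation can be sketched as a small decision function in Python. The function name and return shape are hypothetical. The input is assumed to be the rows of pipeline_cluster.shards for a single read_optimized CV, each carrying the balanced column shown earlier.

```python
def plan_read_optimized_query(shard_rows):
    """Decide how to query a read_optimized CV based on its shards' balanced flags."""
    needs_rebalance = any(not row["balanced"] for row in shard_rows)
    return {
        # Combine at read time only while some shard is unbalanced.
        "use_combine": needs_rebalance,
        # Ask the (asynchronous) rebalance task to restore the group-to-shard layout.
        "request_rebalance": needs_rebalance,
    }


rows = [
    {"shard_id": 0, "balanced": True},
    {"shard_id": 1, "balanced": False},
]
print(plan_read_optimized_query(rows))
# {'use_combine': True, 'request_rebalance': True}
```

When every shard is balanced, the query can skip the combine step and keep the read performance that read optimization mode is meant to provide.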

PipelineDB has a certain relationship with Citus. The PipelineDB cluster edition borrows a lot of design concepts from the Citus pg_shard plug-in, and may even reuse a large amount of code.

https://www.citusdata.com/product

https://github.com/citusdata/pg_shard

https://github.com/citusdata/citus

PipelineDB cluster is a distributed stream computing engine that delivers good computing scalability, reliability, and load balancing capabilities. A single CV can be computed across shards in either read optimization mode or write optimization mode, and in write optimization mode the aggregate results are merged automatically at read time.

PipelineDB cluster optimizes the network overhead by distributing intermediate aggregation results.

In terms of read availability, a CV implements multiple replicas through internal logical subscription, achieving read high availability and load balancing.

In terms of write availability, any primary shard can accept data storage (data is combined when being read), so the write operation itself is highly available.

http://enterprise.pipelinedb.com/docs/index.html
