The Performance Challenge Championship (PCC) is an event organized by ArchNotes. After learning about the rules of the competition, I found PostgreSQL is very suitable for this scenario. The scenario is reproduced as it is, implemented with PG, but how does it perform?

The competition is described as follows (page in Chinese): https://github.com/archnotes/PCC

Download the test data: https://github.com/archnotes/PCC/tree/master/data (non-stress testing data)

Uid is in uint64, allowing 100 million data entries.

1,Tom

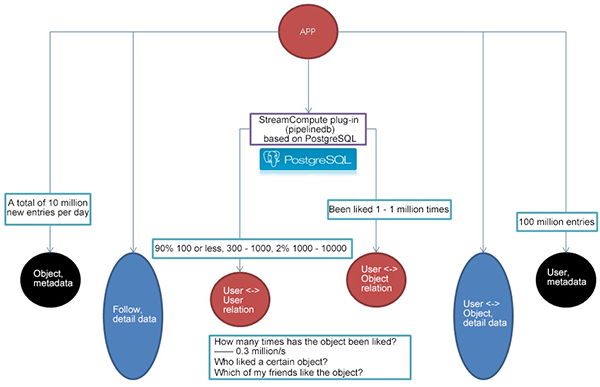

2,Jerry Uid and friend_id is in uint64, and only two-way friend relations exist. Friend relation is usually a long-tailed distribution. For a friend relation of 100 million users * 1000, 100 or less friends in the relation range of 90%, 300 - 1000 friends in the relation range of 8%, and 1000 - 10,000 friends in the relation range of 2%

1,2 Oid and uid is in uint64. 200 million objects, and 1 - 100,000 likes per object

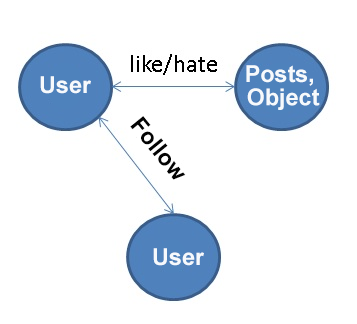

101:[1,2,3,4,5] The relation between users is following (being followed) or mutual following.

The relation between users and objects is liking or disliking.

During the design, detailed data and statistical data are divided. Statistical data is to query the relation and number being followed more quickly.

Detailed data can be recorded in logs or databases. Statistical data (relation, count, been liked) is written into the database in a streaming manner.

create table user_like(uid int8 primary key, like_who int8[]); -- who to follow?

create table user_liked(uid int8 primary key, who_like int8[]); -- being followed by whom? It is not involved in this scenario (you can create a reverse relation table if necessary).

create table obj(id int8 primary key, who_like int8[], like_cnt int8); -- who likes the object, how many users like it altogether?The peak value of like_cnt is queried 300,000 times per second

create table user_like_obj(uid int8 primary key, ids int8[]); -- objects that the user likes. It is not involved in this scenario (you can create a reverse relation table if necessary). 1. Users that the user follows

select like_who from user_like where uid=? ; 2. Users that follow the user. It is not involved in this scenario (you can create a reverse relation table if necessary).

select who_like from user_liked where uid=? ; 3. Objects that the user likes. It is not involved in this scenario (you can create a reverse relation table if necessary).

select ids from user_like_obj where uid=? ; 4. Users that like the object

select who_like from obj where id=? ; 5. The number of times the object has been liked

elect like_cnt from obj where id=? ; 6. Which users who like the object are also my friends?

CREATE FUNCTION array_intersect(anyarray, anyarray)

RETURNS anyarray

language sql

as $FUNCTION$

SELECT ARRAY(

SELECT UNNEST($1)

INTERSECT

SELECT UNNEST($2)

);

$FUNCTION$;

select array_intersect(t1.who_like, t2.like_who) from

(

select who_like from obj where id=?

) t1

,

(

select array[like_who] as like_who from user_like where uid=?

) t2; Create a stream. The "Follow" behavior is written to the stream and also to the detail data (optional).

create STREAM user_like(uid int8, like_who int8); -- who to follow?

create STREAM obj(id int8, who_like int8); -- who likes the object? Create a continuous view and make real-time statistics based on the "Follow" behavior.

create CONTINUOUS VIEW cv_user_like as select uid, array_agg(like_who) as like_who from user_like group by uid; -- who to follow?

create unique index idx1_cv_user_like on cv_user_like(uid);

create CONTINUOUS VIEW cv_obj as select id, array_agg(who_like) as who_like, count(*) as like_cnt from obj group by id; -- who likes the object, how many users like it altogether? The peak value of like_cnt is queried 300,000 times per second

create unique index idx1_cv_obj on cv_obj(id); Activate StreamCompute

pipeline=# activate ;

ACTIVATE The Follow operation function is to determine whether the user is followed or not. If the user is already followed, an exception is returned. Otherwise, the user is followed. (This can also be written in the program, but it needs to interact with the database many times, which is not good)

The function can be adjusted based on actual needs. For example, if you need to return the array after being liked, just query the continue view.

create or replace function f_user_like(i_uid int8, i_like_who int8) returns void as

$$

declare

begin

perform 1 from cv_user_like where uid=i_uid and like_who @> array[i_like_who]; -- follow the user if the user is not followed

if not found then

insert into user_like values (i_uid, i_like_who);

end if;

end;

$$

language plpgsql strict;

create or replace function f_obj(i_id int8, i_who_like int8) returns void as

$$

declare

begin

perform 1 from cv_obj where id=i_id and who_like @> array[i_who_like];

if not found then

insert into obj values (i_id, i_who_like);

end if;

end;

$$

language plpgsql strict; Test

1 likes article 1

pipeline=# select f_obj(1,1);

f_obj

-------

(1 row)

1 likes article 1

pipeline=# select f_obj(1,1);

f_obj

-------

(1 row)

Who likes article 1

pipeline=# select * from cv_obj;

id | who_like | like_cnt

----+----------+----------

1 | {1} | 1

(1 row)

2 likes article 1

pipeline=# select f_obj(1,2);

f_obj

-------

(1 row)

Who likes article 1

pipeline=# select * from cv_obj;

id | who_like | like_cnt

----+----------+----------

1 | {1,2} | 2

(1 row)

100 likes article 1

pipeline=# select f_obj(1,100);

f_obj

-------

(1 row)

Who likes article 1

pipeline=# select * from cv_obj;

id | who_like | like_cnt

----+-----------+----------

1 | {1,2,100} | 3

(1 row) 1. User ID range

1 - 100 million

2. Article ID range

1 - 200 million

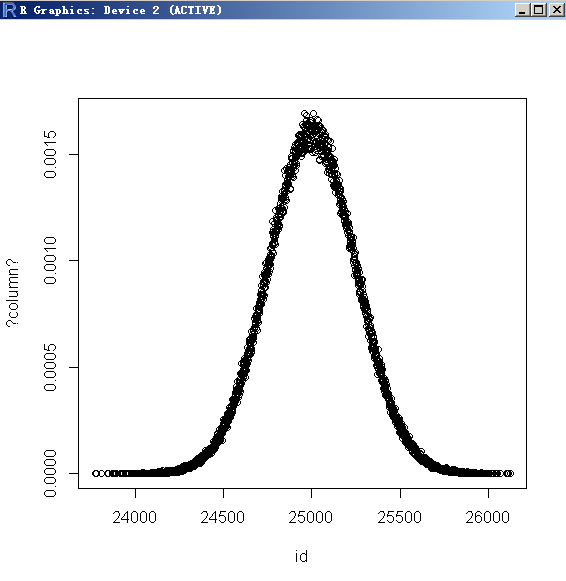

3. Hot article ID range

A total of 200 million articles, using Gaussian distribution for LIKE operation. The article IDs distributed in the range of 2.0/xx centered on the top of the bell-shaped curve cover 95% of the occurrence probability. The article IDs distributed in the range of 1.0/xx cover 67% of the occurrence probability.

The closer the horizontal axis is to the value of the top of the curve (that is, the article ID = 100 million), the higher the probability of occurrence.

The smaller the value of xx, the sharper the curve, that is, the fewer hot articles.

4. Random users like random articles

5. Random users like hot articles

Basic data includes the stress testing script, liked articles, and article IDs generated by using Gaussian distribution. After a long time of stress testing, the number of articles being liked presents Gaussian distribution, and the article IDs at the top of the curve is liked the most times.

xx is set to 10.0, indicating the article IDs in the range of 20% centered on the top of the bell-shaped curve cover 95% of the occurrence probability . The article IDs distributed in the range of 10% cover 67% of the occurrence probability.

The larger the value of xx, the higher the probability of the article IDs at the top of the curve.

vi test.sql

\setrandom uid 1 100000000

\setrandom id 1 200000000 gaussian 10.0

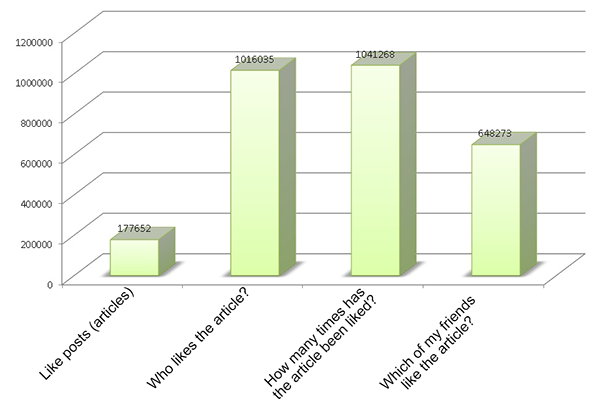

select f_obj(:id,:uid); 256 connections are stress tested, resulting in 177,000 LIKE requests per second.

pgbench -M prepared -n -r -P 1 -f ./test.sql -c 256 -j 256 -T 120

transaction type: Custom query

scaling factor: 1

query mode: prepared

number of clients: 256

number of threads: 256

duration: 120 s

number of transactions actually processed: 21331348

latency average: 1.438 ms

latency stddev: 0.591 ms

tps = 177652.080934 (including connections establishing)

tps = 177665.827969 (excluding connections establishing)

statement latencies in milliseconds:

0.002267 \setrandom uid 1 100000000

0.002384 \setrandom id 1 200000000 gaussian 10.0

1.433405 select f_obj(:id,:uid); Number of articles after staged stress testing

pipeline=# select count(*) from cv_obj;

count

----------

86842876

(1 row)

-- Query the number of times articles near the top of the curve are liked.

pipeline=# select like_cnt from cv_obj where id=100000000;

like_cnt

----------

18317

(1 row)

pipeline=# select like_cnt from cv_obj where id=100000001;

like_cnt

----------

18410

(1 row)

pipeline=# select like_cnt from cv_obj where id=100000002;

like_cnt

----------

18566

(1 row)

pipeline=# select like_cnt from cv_obj where id=100000000-1;

like_cnt

----------

18380

(1 row)

pipeline=# select like_cnt from cv_obj where id=100000000-2;

like_cnt

----------

18399

(1 row)

Articles on the bottom edge of the curve are rarely liked

pipeline=# select * from cv_obj where id>199999990;

id | who_like | like_cnt

-----------+------------+----------

199999991 | {89433428} | 1

(1 row) The results are in line with expectations, and the stress testing can be continued. (Or we can choose the exponential distribution for testing)

CPU usage is as follows when no optimization is performed

Cpu(s): 35.2%us, 17.4%sy, 13.8%ni, 33.2%id, 0.3%wa, 0.0%hi, 0.1%si, 0.0%st

Overhead of other irrelevant processes on the host

PID USER PR NI VIRT RES SHR S %CPU %MEM TIME+ COMMAND

16725 digoal 20 0 18.4g 11m 948 S 320.6 0.0 1:15.63 pgbench -M prepared -n -r -P 1 -f ./test.sql -c 256 -j 256 -T 120

18411 root 20 0 445m 58m 7048 R 98.8 0.0 0:03.25

18434 root 20 0 445m 58m 7040 R 87.5 0.0 0:02.71 The continuous stress testing on the Like operation generates the Like data of 200 million articles, and then stress testing 2 is carried out.

1. User ID range

1 - 100 million

2. User friend distribution

100 or less friends in the relation range of 90%, 300 - 1000 friends in the relation range of 8%, and 1000 - 10,000 friends in the relation range of 2%

Relation table

create table user_like_agg(uid int8 primary key, like_who int8[]);

Generates an array with a specified range of elements

create or replace function gen_uids(c1 int, c2 int) returns int8[] as

$$

select array(select (random()*100000000)::int8 from generate_series(1, c1+(random()*(c2-c1))::int));

$$

language sql strict;

Sequence

create sequence seq cache 100; Generate 90% of user relations

vi gen90.sql

insert into user_like_agg select nextval('seq'), gen_uids(1,100);

pgbench -M prepared -n -r -P 1 -f ./gen90.sql -c 100 -j 100 -t 900000 Generate 8% of user relations

vi gen8.sql

insert into user_like_agg select nextval('seq'), gen_uids(300,1000);

pgbench -M prepared -n -r -P 1 -f ./gen8.sql -c 100 -j 100 -t 80000 Generate 2% of user relations

vi gen2.sql

insert into user_like_agg select nextval('seq'), gen_uids(1000,10000);

pgbench -M prepared -n -r -P 1 -f ./gen2.sql -c 100 -j 100 -t 20000 Finally, 100 million users are generated, occupying 123 GB of space and 2.7 GB of index.

pipeline=#

pipeline=# \dt+ user_like_agg

List of relations

Schema | Name | Type | Owner | Size | Description

--------+---------------+-------+----------+--------+-------------

public | user_like_agg | table | postgres | 123 GB |

(1 row)

pipeline=# \di+ user_like_agg_pkey

List of relations

Schema | Name | Type | Owner | Table | Size | Description

--------+--------------------+-------+----------+---------------+---------+-------------

public | user_like_agg_pkey | index | postgres | user_like_agg | 2706 MB |

(1 row)

pipeline=# select count(*) from user_like_agg ;

count

-----------

100000000

(1 row) 1. Who likes the article?

2. How many times has the article been liked?

3. Which of my friends like the article?

Stress testing script 1: Who likes the article?

vi test1.sql

\setrandom id 1 200000000

select who_like from cv_obj where id=:id;

pgbench -M prepared -n -r -P 1 -f ./test1.sql -c 128 -j 128 -T 120 Stress testing script 2: How many times has the article been liked?

vi test2.sql

\setrandom id 1 200000000

select like_cnt from cv_obj where id=:id;

pgbench -M prepared -n -r -P 1 -f ./test2.sql -c 128 -j 128 -T 120 Stress testing script 3: Which of my friends like the article?

vi test3.sql

\setrandom id 1 200000000

\setrandom uid 1 100000000

select array_intersect(t1.who_like, t2.like_who) from (select who_like from cv_obj where id=:id) t1,(select array[like_who] as like_who from user_like_agg where uid=:uid) t2;

pgbench -M prepared -n -r -P 1 -f ./test3.sql -c 128 -j 128 -T 120 Stress testing result 1: Who likes the article. It is not unexpected to reach 1.01 million/s.

transaction type: Custom query

scaling factor: 1

query mode: prepared

number of clients: 128

number of threads: 128

duration: 120 s

number of transactions actually processed: 121935264

latency average: 0.125 ms

latency stddev: 0.203 ms

tps = 1016035.198013 (including connections establishing)

tps = 1016243.580731 (excluding connections establishing)

statement latencies in milliseconds:

0.001589 \setrandom id 1 1000000000

0.123249 select who_like from cv_obj where id=:id;Stress testing result 2: How many times has the article been liked? The result is 1.04 million/s.

transaction type: Custom query

scaling factor: 1

query mode: prepared

number of clients: 128

number of threads: 128

duration: 120 s

number of transactions actually processed: 124966713

latency average: 0.122 ms

latency stddev: 0.204 ms

tps = 1041268.730790 (including connections establishing)

tps = 1041479.852625 (excluding connections establishing)

statement latencies in milliseconds:

0.001708 \setrandom id 1 1000000000

0.120069 select like_cnt from cv_obj where id=:id;Stress testing result 3: Which of my friends like the article? The result is 648,000/s.

transaction type: Custom query

scaling factor: 1

query mode: prepared

number of clients: 128

number of threads: 128

duration: 120 s

number of transactions actually processed: 77802915

latency average: 0.196 ms

latency stddev: 1.649 ms

tps = 648273.025370 (including connections establishing)

tps = 648368.477278 (excluding connections establishing)

statement latencies in milliseconds:

0.001719 \setrandom id 1 1000000000

0.000695 \setrandom uid 1 100000000

0.193728 select array_intersect(t1.who_like, t2.like_who) from (select who_like from cv_obj where id=:id) t1,(select array[like_who] as like_who from user_like_agg where uid=:uid) t2;1. The longer the array, the larger the space occupied by a record. Using TOAST slicing storage can effectively improve the efficiency of querying non-array fields.

Example

alter table cv_obj alter column who_like set (storage=extended);2. Profiling for targeted optimization.

3. Compression interface to reduce the CPU consumption of PGLZ compression.

https://commitfest.postgresql.org/21/1294/

The most common operations in Weibo and Facebook:

1. Follow a user or like a message or a post.

This is the write operation, requiring fast writing and reflecting immediately after writing (being liked or followed).

2. Query the friends list

To query quickly, the fastest method is PK query, but one user may follow many users. Therefore, if it is to query multiple records, it is obviously slow.

An array can be used to store the friends list.

However, you must consider the write speed when using an array to store the list.

Therefore, it is best to use StreamCompute aggregation, because PG has a StreamCompute plug-in that can complete stream computation in the database.

3. Query the list of friends to be followed

This is the reverse friend relation, which also requires fast query and the same method as the forward relation.

4. Query the number of times articles (posts) have been liked, users who like the articles (posts), and which of them are my friends.

First, for the number of times articles (posts) have been liked, only a counter is actually needed. To increase the query speed, it must be a VALUE, instead of using COUNT(*) for aggregation during query.

For users who like the articles (posts), an array is also considered for storage to improve the query speed. Built-in StreamCompute of PG is used for aggregation.

It is very simple to determine which of the users are my friends. You only need intersect the two arrays, the friends list and users who like the articles (posts).

StreamCompute of PG solves the problem of real-time writing and real-time aggregation.

And, data is aggregated in real time, so several query requirements can be easily implemented.

Performance indicators (not optimized) obtained after tests:

1. Like posts (articles)

177,000 articles/s, which is expected to be optimized to 300,000 articles/s.

2. Who likes the articles?

1,016,000/s

3. How many times has the article been liked?

1,041,000/s

4. Which of my friends like the article?

648,000/s

5. Devices:

(X86 with a price of about 100 thousand yuan, 12*8 TB SATA hard drive, and one SSD as BCACHE)

The built-in StreamCompute feature in the database is great.

PostgreSQL Practices of Elective Selection Relation in Colleges

digoal - April 22, 2021

Apache Flink Community - August 8, 2025

Alibaba Cloud Native Community - March 2, 2023

Alibaba Clouder - November 8, 2018

Alibaba EMR - September 23, 2022

Alibaba Clouder - April 16, 2019

PolarDB for PostgreSQL

PolarDB for PostgreSQL

Alibaba Cloud PolarDB for PostgreSQL is an in-house relational database service 100% compatible with PostgreSQL and highly compatible with the Oracle syntax.

Learn More Database for FinTech Solution

Database for FinTech Solution

Leverage cloud-native database solutions dedicated for FinTech.

Learn More Oracle Database Migration Solution

Oracle Database Migration Solution

Migrate your legacy Oracle databases to Alibaba Cloud to save on long-term costs and take advantage of improved scalability, reliability, robust security, high performance, and cloud-native features.

Learn More Database Migration Solution

Database Migration Solution

Migrating to fully managed cloud databases brings a host of benefits including scalability, reliability, and cost efficiency.

Learn MoreMore Posts by digoal