By Gupu (Liping Zhang)

Why do large systems, such as distributed applications and enterprise software, so often fall into the complexity trap? How can we identify the factors that increase complexity? What principles should we follow as code is developed and evolves? This article shares Gupu's thoughts on software complexity: What is software complexity? What causes it? How can it be solved? We recommend that you add it to your favorites so that you can read it later.

The essence of software design and implementation is that engineers exchange abstract concepts with rich details through "writing" and constantly iterate the process. This article is of little use if the lifetime of your code generally does not exceed six months.

The larger a system is, the more important it is for software designers to ensure its simplicity.

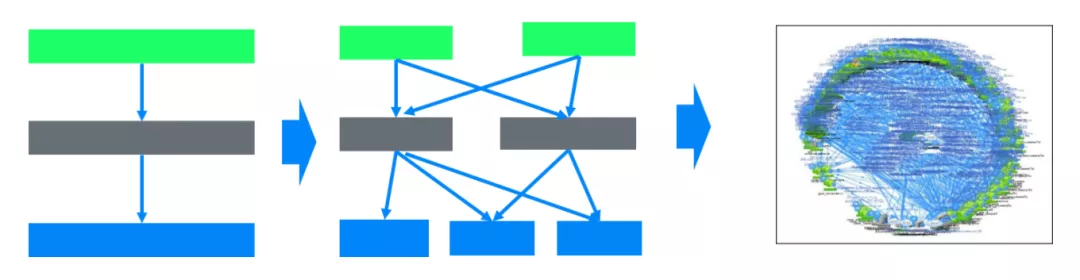

The essential problem of large systems is complexity. Internet-based software is a typical large-scale system. As shown in the following figure, hundreds of microservices call or depend on each other. Together they form a system whose behavior is complex, dynamic, and constantly changing (through releases, configuration changes, and so on). Many aspects of software engineering are also not clearly defined by the code alone, which leads to the saying, "when things work, nobody knows why".

Image source: https://divante.com/blog/10-companies-that-implemented-the-microservice-architecture-and-paved-the-way-for-others/

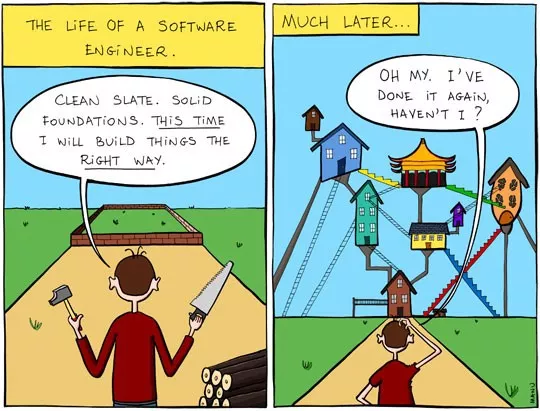

If we only write a piece of standalone code that does not interact with other systems, the design requirements are not very high: whether the code is easy to use, understand, test, and maintain hardly matters. However, once we deal with large-scale software systems such as distributed Internet applications or enterprise-grade software, we often fall into the complexity trap. The "life of a software engineer" cartoon in the following figure illustrates this trap vividly.

Source: http://themetapicture.com/the-life-of-a-software-engineer/

Every insightful software engineer has thought about how to avoid this seemingly inevitable complexity dilemma in their projects.

However, this question is surprisingly hard to answer. Many articles offer advice on software architecture design, but as Brooks's classic essay No Silver Bullet [2] points out, there is no magic solution to this problem. I am not saying that these architecture articles are useless (in fact, most of their methods are useful), but it is difficult for people to really follow and apply their suggestions. Why? Because we first need to thoroughly understand the thinking and logic behind these architectures. Therefore, we must start from the beginning: What is software complexity? What causes it? How can it be solved?

To understand why software complexity increases so rapidly, we need to understand where software comes from. First, we have to answer a question: is large software grown or built?

Software is not built or even designed. Software grows up.

At first glance, this statement seems to contradict common sense. We often talk about software architecture, and the word architecture implies construction and design. However, for software systems, we must realize that what architects design is not the software architecture but the software's genes. How these genes shape the future form of the software is difficult to predict and impossible to control completely.

Why? What is the difference between construction and growth?

A complex software system today may look like a complex building, but comparing software to a skyscraper is inaccurate. No matter how complex a skyscraper is, it can be built according to complete, detailed drawings designed in advance, which ensures its quality. Large software systems are not built this way.

For example, Taobao evolved through four or five generations, starting from a single PHP application, into an e-commerce trading platform that serves billions of users. Alipay, Google Search, and Netflix's microservices all went through similar processes.

Does it really take several generations of evolution to build large software? Even if a team left Taobao, it would be almost impossible for them to reproduce a transaction system with Taobao's current structure from scratch. No startup team has the resources to invest that much in component development, so it is impossible to build a highly complex architecture from day one.

As shown in the preceding figure, software grows dynamically from a simple structure into a complex one. The system grows gradually as the project develops and the R&D team expands.

The core feature of a complex software system is that many engineers develop and maintain it. As Brooks clearly stated in [2], the essence of software is that engineers use programming languages to communicate abstract and complex concepts to one another, not merely to communicate with machines.

If you agree with this definition, imagine how complex software comes into being. No matter how complex a program is, it starts from the first line of code and a few core modules. At that point, its architecture is simple and a small number of programmers can maintain it. Once the program succeeds, its functions must be continuously refined, and scalability features and distributed microservices are added. Meeting more business needs drives business growth, which in turn places more requirements on the system's iteration. The architecture evolves, and the number of developers grows with the program's success. Eventually, continuous iteration means the complex system must be maintained by hundreds or even thousands of engineers.

The core element of large software design is to control complexity [1]. This is very challenging. The fundamental reason is that software is not a combination of mechanical activities. Careful architecture design in advance cannot avoid the risk of high complexity. The same architecture diagram or blueprint may produce completely different software programs. The design and implementation of large software is essentially a process in which a large number of engineers exchange abstract concepts with rich details through "writing" and constantly iterate the process. A slight mistake may cause high system complexity.

Am I just saying how complex software can be? Not exactly. Our conclusion is that the most important job of software architects is not to design the structure of software, but to control its growing complexity through APIs, team design criteria, and attention to detail.

Having analyzed why software complexity increases so rapidly, we naturally want to solve this seemingly eternal challenge. Before doing so, we need to answer a clear question: What is complexity, and how can it be measured?

Is code complexity measured by the number of lines? By the number of classes or files? On reflection, we realize that these are not the core metrics of software complexity. As analyzed earlier, software complexity is largely a subjective concept (bear with me here), because it matters only when programmers need to update, maintain, or troubleshoot the software. For a system that never needs to be iterated or maintained, its architecture and code do not matter much (though such systems are rare in reality).

The essence of software design and implementation is that engineers exchange abstract concepts with rich details through "writing" and constantly iterate the process (this is the third time I have repeated this sentence). Therefore, complexity refers to how difficult it is for people to understand, modify, and maintain the code, and simplicity is whatever makes the code easier to understand and maintain.

"The goal of software architecture is to minimize the manpower required to build and maintain the required system." Robert Martin, Clean Architecture [3].

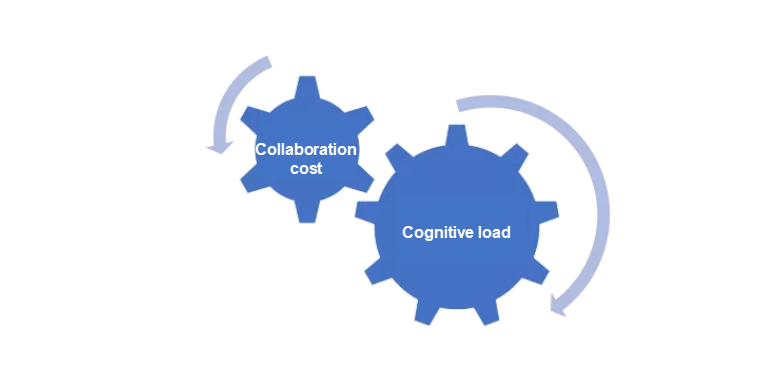

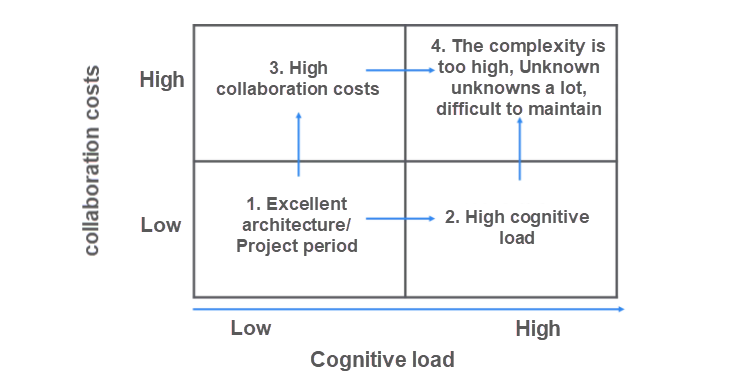

Therefore, we divide software complexity into two dimensions, cognitive load and collaboration cost, which relate to the costs of understanding and maintaining software.

The two dimensions are different but related. High collaboration costs slow the evolution of a software system and reduce its efficiency. Pressure on engineers increases, progress stalls for long periods, and engineers tend to leave the project, eventually creating a vicious circle of quality deterioration. Meanwhile, software modules with high cognitive load are difficult for programmers to understand. This has two consequences:

Cognitive load includes:

Consider the following examples from the Google Testing Blog [7]:

A. Code with too much nesting

```python
response = server.Call(request)

if response.GetStatus() == RPC.OK:
    if response.GetAuthorizedUser():
        if response.GetEnc() == 'utf-8':
            if response.GetRows():
                vals = [ParseRow(r) for r in
                        response.GetRows()]
                avg = sum(vals) / len(vals)
                return avg, vals
            else:
                raise EmptyError()
        else:
            raise ValueError('wrong encoding')
    else:
        raise AuthError('unauthorized')
else:
    raise RpcError(response.GetStatus())
```

B. Code with less nesting

```python
response = server.Call(request)

if response.GetStatus() != RPC.OK:
    raise RpcError(response.GetStatus())

if not response.GetAuthorizedUser():
    raise AuthError('unauthorized')

if response.GetEnc() != 'utf-8':
    raise ValueError('wrong encoding')

if not response.GetRows():
    raise EmptyError()

vals = [ParseRow(r) for r in
        response.GetRows()]
avg = sum(vals) / len(vals)
return avg, vals
```

Compare code A and B. The logic is completely equivalent, but code B is much easier to understand and therefore easier to extend; new features added to it are likely to keep the code in good shape. In code B, each check sits right next to the error it raises, so a mismatched error (for example, raising AuthError for a wrong encoding) would stand out immediately.

Code A, by contrast, is hard to follow: each error is raised in an else branch far from the condition it belongs to. During maintenance, bugs are more likely to be introduced, and quality will keep deteriorating.

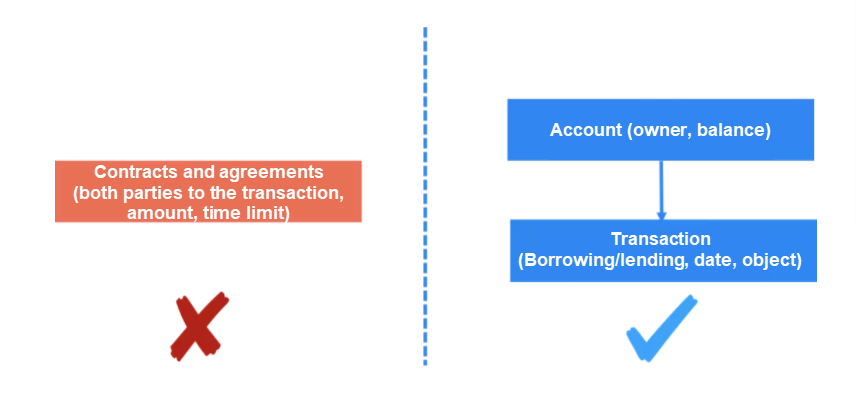

A software model must match how people understand the real world; otherwise, it brings very high cognitive costs. I once encountered a resource management system with such a design. The designer built a model that was very elegant from a mathematical perspective: resource accounts were expressed by contracts (the left section of the following figure), and the account balance was obtained by accumulating past contracts, which ensured data consistency. However, this design was completely inconsistent with how users think. Users only see accounts and transactions, not contracts with complex parameters, so the design brought very high maintenance costs.

The following example from the Google Testing Blog illustrates the understanding cost of an improper API design:
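To make the contrast concrete, here is a minimal Python sketch of the model users actually think in: accounts that record transactions. The classes `Account` and `Transaction` and their methods are hypothetical illustrations, not the design of the system described above.

```python
from dataclasses import dataclass, field
from decimal import Decimal


@dataclass(frozen=True)
class Transaction:
    """One movement of resources: positive amounts add, negative amounts remove."""
    amount: Decimal
    memo: str = ""


@dataclass
class Account:
    """An account as users see it: an owner, a balance, and a history."""
    owner: str
    transactions: list[Transaction] = field(default_factory=list)

    @property
    def balance(self) -> Decimal:
        # Still derived from history, so it stays consistent, but that
        # derivation is an internal detail rather than the user-facing model.
        return sum((t.amount for t in self.transactions), Decimal("0"))

    def deposit(self, amount: Decimal, memo: str = "") -> None:
        if amount <= 0:
            raise ValueError("deposit amount must be positive")
        self.transactions.append(Transaction(amount, memo))

    def withdraw(self, amount: Decimal, memo: str = "") -> None:
        if amount <= 0:
            raise ValueError("withdrawal amount must be positive")
        if amount > self.balance:
            raise ValueError("insufficient balance")
        self.transactions.append(Transaction(-amount, memo))
```

The balance is still computed from history, so consistency is preserved; the difference is that the concepts exposed to users and maintainers match their mental model.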

```cpp
class BufferBadDesign {
 public:
  explicit BufferBadDesign(int size);  // Create a buffer with `size` slots.

  void AddSlots(int num);  // Expand the buffer by `num` slots.

  // Add a value to the end of the buffer. The caller needs to
  // ensure that there is at least one empty slot in the buffer
  // before calling Insert().
  void Insert(int value);

  int getNumberOfEmptySlots();  // Return the number of empty slots.
};
```

I hope our team never designs a module like this. The example shows how an unreasonable API design raises maintenance costs: the buffer exposes details of its internal memory management (slot maintenance). As a result, its most common operation, Insert, becomes a trap: if the caller does not check for empty slots beforehand, Insert behaves incorrectly.

However, from a design perspective, maintaining the underlying slots has nothing to do with the buffer's externally visible behavior; it is purely an implementation detail. A better design simplifies the API: slot management becomes an internal implementation detail that is not exposed to callers. This also completely eliminates problems caused by improper use. The API is easy to understand, and its cognitive cost is lower.
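As an illustration, the same idea can be sketched in Python. The class below is a hypothetical translation, not code from the Google Testing Blog or any library: callers only call `insert` (and read back with the assumed `values` method), while the decision to grow the slot array stays inside the class.

```python
from typing import Optional


class Buffer:
    """A buffer whose public API hides slot management entirely."""

    def __init__(self, size: int) -> None:
        if size < 0:
            raise ValueError("size must be non-negative")
        self._slots: list[Optional[int]] = [None] * size  # pre-allocated slots
        self._count = 0  # number of slots currently in use

    def insert(self, value: int) -> None:
        """Add a value to the end of the buffer, adding slots if necessary."""
        if self._count == len(self._slots):
            # Internal detail: double the capacity (at least one new slot).
            self._slots.extend([None] * max(1, len(self._slots)))
        self._slots[self._count] = value
        self._count += 1

    def __len__(self) -> int:
        return self._count

    def values(self) -> list[int]:
        """Return the stored values in insertion order."""
        return list(self._slots[: self._count])
```

In everyday Python a plain `list` already grows on its own; the explicit slot array is kept here only to mirror the slot concept from the C++ example.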

```cpp
class Buffer {
 public:
  explicit Buffer(int size);  // Create a buffer with `size` slots.

  // Add a value to the end of the buffer. New slots are added
  // if necessary.
  void Insert(int value);
};
```

[1] provides a useful list of signs that indicate improper design:

It is difficult to avoid these problems completely, but we must do our best in design. Sometimes these problems have to be mitigated through documentation, but good engineers and architects must recognize that they are signs of poor design.

Having to make a simple change in multiple places is another common source of maintenance complexity. It mainly affects cognitive load: maintaining and modifying the code takes a lot of effort to ensure that every place is updated.

The simplest case is a "constant" repeated throughout the code: to change it, we must modify the code in multiple places. Programmers know how to resolve this issue, for example, by avoiding magic numbers: define a named constant once and reference it everywhere. Another example is the style or color of web pages. One way is to hard-code the same colors and styles into every page; another is to use a shared CSS stylesheet, which is much easier to maintain. This approach corresponds to the principle of data normalization.

A slightly more complicated case is similar logic or functions being copied multiple times. This happens when slightly different variants are needed in several places and maintenance engineers, instead of extracting the common logic through refactoring (which takes more time and effort), copy and paste to save time. This is what the "Don't Repeat Yourself" (DRY) principle [6][8] addresses:
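A minimal Python sketch of the named-constant fix, assuming a hypothetical retry limit that would otherwise appear as a bare `3` in several places:

```python
# Hypothetical example: the retry limit is defined exactly once, so changing
# it later touches one line instead of every place that used a literal 3.
MAX_RETRIES = 3


def should_retry(attempt: int) -> bool:
    """Return True if another attempt is allowed after `attempt` tries."""
    return attempt < MAX_RETRIES


def retry_message(attempt: int) -> str:
    """Human-readable progress text that stays in sync with the limit."""
    return f"attempt {attempt} of {MAX_RETRIES}"
```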

Every piece of knowledge must have a single, unambiguous, authoritative representation within a system.
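As a sketch of applying DRY, assume (hypothetically) that two features each carried their own copy of the same money-rounding code. Extracting one helper gives that rule a single authoritative representation; the function names below are illustrative only.

```python
from decimal import Decimal, ROUND_HALF_UP


def format_amount(amount: Decimal, currency: str = "USD") -> str:
    """The single authoritative place for how money is rounded and displayed."""
    rounded = amount.quantize(Decimal("0.01"), rounding=ROUND_HALF_UP)
    return f"{rounded} {currency}"


def invoice_line(item: str, amount: Decimal) -> str:
    # Previously held its own copy of the rounding/formatting logic.
    return f"{item}: {format_amount(amount)}"


def refund_notice(amount: Decimal, currency: str) -> str:
    # Previously held a slightly different copy; both now share one helper.
    return f"Refunded {format_amount(amount, currency)}"
```

If the rounding rule ever changes, only `format_amount` needs to be edited.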

The naming of APIs, methods and variables in the software is very important for understanding the logic and scope of the code. It is also essential for the designer to clearly convey the intention. However, in many projects, we did not pay enough attention to naming.

Code is usually associated with projects, but note that projects are abstract while code is concrete. You can name projects or products as you like. For example, Alibaba Cloud favors names from ancient Chinese mythology (Apsara, Fuxi, and Nuwa), and the name Kubernetes comes from the Greek word for helmsman. That is fine. However, APIs, variables, and methods in code should not be named this way.

A bad example is a cluster API named the Trident API. If an object in the code is called Trident, how are we supposed to understand its behavior? Compare this with the resources in Kubernetes: Pod, ReplicaSet, Service, and ClusterIP. They are all clear, simple names that directly describe what the objects are. Good names greatly reduce the cost of understanding objects.

Some people say that naming is the hardest part of software engineering [9][10]. This is not just a joke: naming is hard because it requires in-depth thinking about, and abstraction of, the model.

Note the following points [1]:

(a) Intention vs What It Is

A name should express intention (what it is for) rather than implementation (what it is), because describing what something is tends to expose implementation details. For example, if we use a leaky bucket for rate limiting, it is better to call the class RateLimiter than LeakyBucket: the former states the intention, while the latter describes a specific implementation, which may change. Similarly, Cache is a better name than FixedSizeHashMap.
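The following Python sketch shows a class named for its intention while a leaky bucket stays an implementation detail. The class name, the `allow` method, and the parameters are assumptions for illustration; the leaky bucket is implemented here in its "meter" form, and the injectable `clock` exists only to make the sketch testable.

```python
import time
from typing import Callable


class RateLimiter:
    """Named for its intention: deciding whether a request may proceed."""

    def __init__(self, rate_per_sec: float, capacity: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self._rate = rate_per_sec  # how fast the bucket drains
        self._capacity = capacity  # largest burst the bucket can hold
        self._clock = clock        # injectable for deterministic tests
        self._level = 0.0          # current amount of "water" in the bucket
        self._last = clock()

    def allow(self) -> bool:
        """Return True and record the request if it fits under the limit."""
        now = self._clock()
        # Leaky-bucket detail: drain according to the time elapsed.
        self._level = max(0.0, self._level - (now - self._last) * self._rate)
        self._last = now
        if self._level + 1 <= self._capacity:
            self._level += 1
            return True
        return False
```

Because callers depend only on `RateLimiter.allow()`, the internal algorithm could later be replaced (for example, with a token bucket) without renaming the class or touching its callers.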

(b) Naming Conforms to Current Abstraction Levels

Software needs clear abstractions and layers. In fact, most of the naming difficulties we encounter stem from a lack of clear abstraction and layering.

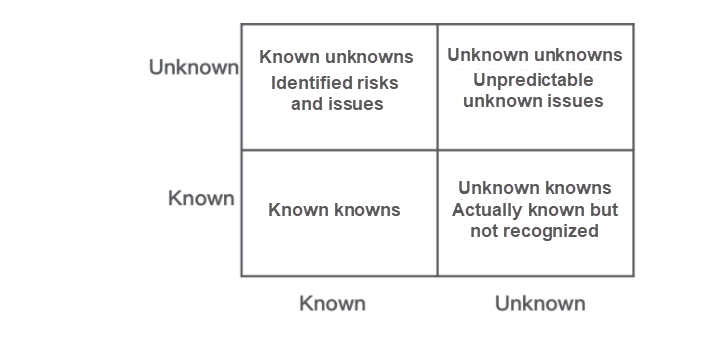

"Unknown unknowns" are the worst of all manifestations of cognitive complexity. Unfortunately, everyone has encountered them.

A typical "unknown unknown" case arises when code has the following issues:

For maintenance engineers, the risk arises when such code is changed (or when a change affects, or is affected by, such code): if the documentation and APIs do not reveal its hidden behavior and test coverage is insufficient, unknown unknown risks occur. At that point, problems are hard to avoid. The best strategy is to prevent the system's quality from deteriorating in the first place.

We should also use cognitive cost as a yardstick when comparing different designs or ways of writing code. Superficial simplification may lead to a substantial increase in complexity.

For example, to express a time period, two options are available:

```
// Time period in seconds.
void someFunction(int timePeriod);

// Time period using Duration.
void someFunction(Duration timePeriod);
```

In the preceding example, the second option is better: use Duration instead of int for the time period. Although Duration has a small learning cost, it avoids common bugs caused by mixing different time units.
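Python's standard `datetime.timedelta` plays the same role as `Duration`. In this sketch, `deadline_from_int` and `deadline` are hypothetical functions that contrast a bare integer with a typed time period:

```python
from datetime import datetime, timedelta


def deadline_from_int(start: datetime, time_period: int) -> datetime:
    # The unit (seconds) is only a convention; a caller who passes
    # milliseconds by mistake gets a deadline 1000x too far away.
    return start + timedelta(seconds=time_period)


def deadline(start: datetime, time_period: timedelta) -> datetime:
    # The unit travels with the value, so whatever unit the caller used
    # when building the timedelta is converted correctly.
    return start + time_period
```

With the typed version, `deadline(start, timedelta(milliseconds=1500))` yields a deadline 1.5 seconds after `start`; passing `1500` to the integer version while intending milliseconds silently yields one 25 minutes later.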

Collaboration cost is the coordination effort that engineers and teams must spend to add a module. Collaboration costs include:

In the microservice era, aligning module/service boundaries with team boundaries improves iteration efficiency. When modules are split without aligning their boundaries with teams, code maintenance becomes more complex, because new features then require joint development, testing, and iteration across multiple teams.

Another expression is:

Any piece of software reflects the organizational structure that produces it (Conway's law).

In other words, organizational structure determines system architecture. Software architecture eventually reshapes itself around organizational boundaries (cultural factors also contribute). When the division of labor in an organization is unreasonable, duplicated work or conflicts may occur.

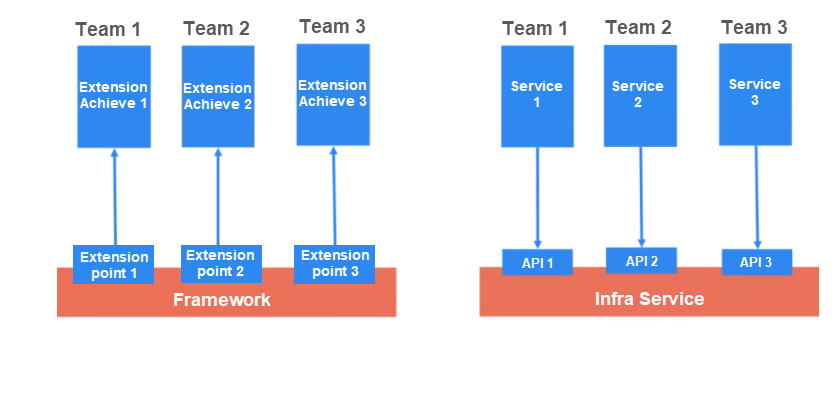

Common service dependency modes include composition and inheritance. These modes apply both to dependencies between local modules or classes and to remote calls.

The left section of the preceding figure is inheritance (inheritance or extension mode). There are four teams: The Framework team is responsible for implementing the framework. The framework has three extension points. Three different teams implement plugin extensions for the three extension points. These plugins are called by the framework, which is similar to the inheritance mode in terms of architecture.

The right section is the composition mode: The underlying system provides APIs in the form of API services, while upper-layer applications or services implement functions by calling these APIs.

These two modes suit different system models. When the framework focuses on the underlying layer, contains no business logic, and is relatively stable, you can adopt the inheritance mode, in which the framework is integrated into the business implementations of teams 1, 2, and 3. An RPC framework is an example of this model: the underlying RPC implementation is provided to the business as shared base code or an SDK, the RPC methods are implemented by the services and called by the framework, and the services do not need to care about the underlying RPC details. Because the services depend on the framework code, they need it to be very stable so that framework changes do not ripple into them. In this situation, the inheritance mode works well.

However, use the inheritance mode with caution, and avoid the following common traps:

(a) Avoid Management Inversion

In this trap, the framework takes over O&M of the entire system (the framework team packages, builds, and releases the code), which adds collaboration complexity and slows system evolution. For example, it would be very inefficient if the gRPC team required all applications that use gRPC to be packaged and released as one large application.

(b) Avoid Disrupting Separation of Service Logic Processes

If the inheritance mode is misused, the logical integrity of the upper-layer services is easily broken. For example, the logic of extension implementation 1 may come to depend on the caller's (the framework's) internal process flow or even its implementation details. This creates dangerous coupling and undermines the logical closure of the services.

If your project uses the plugin/inheritance mode and you observe the management inversion or disrupted separation described above, re-examine whether the current architecture is sound.

The composition mode on the right is more commonly used: Services interact with each other through APIs and are decoupled from each other. The logic integrity of the services is not destroyed, and the encapsulation of the framework/infrastructure can also be guaranteed. This mode is also more flexible and services 1, 2, and 3 can call each other.

For more on this topic, see the item "Favor composition over inheritance" in Effective Java.

Code delivered to other teams (including the test team) must have sufficient unit tests, good encapsulation, and clear API descriptions, and it must be easy to integrate and test. Insufficient unit or module tests raise complexity, failure rates, and rework rates in the integration phase, which drives up collaboration costs. Therefore, the key to reducing collaboration costs and improving iteration efficiency is to write sufficient unit tests and provide good support for integration testing.
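To contrast the two modes in code, here is a minimal Python sketch. All class names (`Framework`, `RequestHandler`, `InventoryService`, `OrderService`) are hypothetical: in the extension mode the framework owns the control flow and calls plugins, while in the composition mode an upper-layer service simply calls a lower-layer API.

```python
from abc import ABC, abstractmethod


# --- Inheritance / extension mode ------------------------------------
class RequestHandler(ABC):
    """Extension point: the framework calls plugins, never the reverse."""

    @abstractmethod
    def handle(self, payload: str) -> str: ...


class Framework:
    """Owns the control flow; teams plug handlers into extension points."""

    def __init__(self) -> None:
        self._handlers: dict[str, RequestHandler] = {}

    def register(self, route: str, handler: RequestHandler) -> None:
        self._handlers[route] = handler

    def dispatch(self, route: str, payload: str) -> str:
        # Stable, business-agnostic plumbing (here just routing) lives here.
        return self._handlers[route].handle(payload)


class UppercaseHandler(RequestHandler):
    def handle(self, payload: str) -> str:
        # Relies only on the extension-point contract, not on Framework
        # internals, which avoids the coupling trap described above.
        return payload.upper()


# --- Composition mode ------------------------------------------------
class InventoryService:
    """Lower-layer service exposed purely through its API."""

    def __init__(self) -> None:
        self._stock = {"disk": 3}

    def reserve(self, item: str) -> bool:
        if self._stock.get(item, 0) > 0:
            self._stock[item] -= 1
            return True
        return False


class OrderService:
    """Upper-layer service that composes InventoryService by calling its API."""

    def __init__(self, inventory: InventoryService) -> None:
        self._inventory = inventory

    def place_order(self, item: str) -> str:
        return "accepted" if self._inventory.reserve(item) else "out of stock"
```

In the composition half, `OrderService` knows nothing about how `InventoryService` stores its stock, so each side can evolve independently as long as the `reserve` API stays stable.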

Insufficient testability increases collaboration costs and often causes the broken window effect: Unknown unknown risks increase.

To reduce collaboration costs, engineers must also provide clear, up-to-date, and consistent API documentation that describes the scenarios and usage of each API. This takes effort, and development teams are sometimes unwilling to do it. However, if users must rely on DingTalk/Slack questions or PR articles to learn an API, collaboration costs become too high and the probability of bugs or misuse greatly increases.

Better solutions:

When complexity deteriorates to a certain degree, many unknown unknown risks appear. Good engineers must be able to recognize this state: if no effort is made to refactor or re-architect the system, it will eventually fail because of these unknown unknowns.

The preceding figure shows that, left unchecked, software evolution drifts toward a state in which the system becomes too complex to maintain. How can we avoid system failure? First, for important, long-lived software, we must not tolerate any incremental growth in complexity.

In software, we say "good enough" to balance efficiency and quality. This is reasonable, because excessive pursuit of perfection hurts efficiency. In most cases, our systems are just good enough and far from perfect.

Introducing new code increases system complexity: once a class or method is created, other code references or calls it, which creates dependencies or coupling (the exception is refactoring previously overcomplicated code, which can reduce complexity). If this article helps you notice this problem and recognize the key factors that increase complexity, it has served its purpose. How to keep a system simple, however, is a very big topic that this article will not explore.

Some may say that timely project delivery is what matters most. I think this is definitely not the case. In most cases, we should adopt a "zero tolerance" attitude toward complexity growth and avoid the "it's not too bad" mindset, for the following reasons:

Zero tolerance does not mean eliminating complexity growth; we all know that is impossible. What we need is to do our best to control it.

Of course, as stressed at the beginning of this article, if the lifetime of your code is only a few months, you do not need to pay much attention to complexity growth. The code may be phased out before it becomes unmaintainable.

Finally, as software engineers, the software we write is our work. I hope everyone believes in the following points:

[1] John Ousterhout, A Philosophy of Software Design

[2] Frederick Brooks, No Silver Bullet: Essence and Accident in Software Engineering

[3] Robert Martin, Clean Architecture

[4] https://medium.com/monsterculture/getting-your-software-architecture-right-89287a980f1b

[5] API design best practices, https://developer.aliyun.com/article/701810

[6] Andrew Hunt and David Thomas, The Pragmatic Programmer: From Journeyman to Master

[7] https://testing.googleblog.com/2017/06/code-health-reduce-nesting-reduce.html

[8] https://en.wikipedia.org/wiki/Don%27t_repeat_yourself

[9] http://www.multunus.com/blog/2017/01/naming-the-hardest-software/

[10] https://martinfowler.com/bliki/TwoHardThings.html
