By Wu Binga (Zuoshi)

This article is a guide for new users who want to split a single database with a single table into multiple databases with multiple tables. It also covers the factors to consider, the corresponding solutions, and common mistakes made while implementing sharding.

There are two questions we need to answer before diving into the topic.

There are two keywords: improving performance and improving availability.

As the amount of data in a single database and the query QPS of a database increase, it takes more time to read and write the database. The performance of database read and write may become a bottleneck for business development. Accordingly, the database performance needs to be optimized. This article discusses the optimization at the database level instead of the application layer, such as cache.

When dealing with an exceedingly high query QPS of a database, database sharding should be taken into consideration to lighten the connection pressure of a single database. For example, if the query QPS is 3,500 and a single database can support 1,000 connections, the query connection pressure can be released by splitting the database into four databases.

If a single table holds more data than a certain threshold, data queries and updates may still perform poorly even after traditional optimizations at the database layer, such as index optimization. This is quantitative change turning into qualitative change. In this case, the problem needs to be solved from a new perspective, such as data production and data processing. Since the amount of data is large, the divide-and-conquer approach breaks the whole into parts, which is table sharding. Table sharding splits data into multiple tables based on certain rules to solve the storage and access performance problems that cannot be solved with a single table.

There may be a loss of all data if an incident occurs in a single database. An incident occurring on the virtual machine or host may cause irreparable losses, especially in the cloud era when many databases are running on virtual machines. Therefore, the reliability problems can be solved at the deployment layer through traditional Master-Slave and Master-Master and also at the data splitting layer.

Let's take database downtime as an example:

Nevertheless, the database cannot be split without limit since the performance and availability are improved at the cost of the limited storage resources.

According to the section above, the sharding solutions can be divided into the following three types:

| Sharding Scheme | Issues to be Addressed |
| --- | --- |
| Database Sharding Only | The database read/write QPS is too high with insufficient database connections. |
| Table Sharding Only | Storage performance bottleneck brought by too much data in a single table |
| Database Sharding and Table Sharding | Storage performance bottleneck caused by insufficient connections and too much data |

Since all the technologies are business-oriented, let's review the business background from the data perspective first.

Let's take the Xspace customer service platform system as an example. It meets members' consulting requests. Currently, the data is constructed mainly based on the synchronous offline work order data as the data source.

Assume that each offline work order correspondingly generates a consultation question from a member (hereafter referred to as question order.) Let's imagine:

The total work orders generated per day are 65,000 (15,000 + 20,000 + 30,000 = 65,000).

Given the new business scenarios that will be covered in the future, together with the extra room needed for expansion, let's imagine there are 80,000 work orders generated per day.

In addition to the question order table, there are another two commonly used business tables, namely, user operation log table and user submission form table.

Each question order generates multiple user operation logs. According to historical statistics, each question order generates about eight operation logs on average. With room reserved, let's imagine each question order generates an average of about ten user operation logs.

If the system has a 5-year service life, the data volume of the question order table is about 146 million (5 × 365 × 80,000 = 146 million). Then, the estimated number of tables works like this:

In database sharding, the peak read/write queries per second (QPS) of businesses, especially businesses during Double 11, should be estimated in advance.

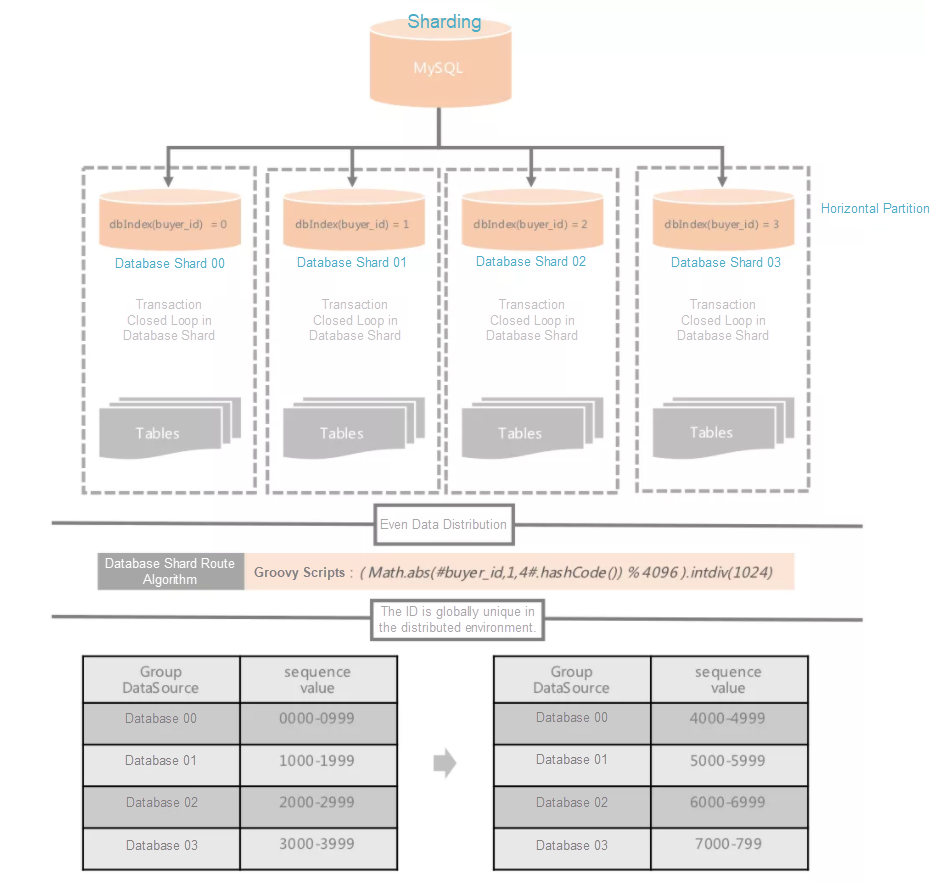

According to the real-world business scenarios, the data for question order queries mainly comes from the Alime homepage. Therefore, let's say only 3,500 database connections are needed based on the historical QPS and RT. The single database can be split into four databases if each bears up to 1,000 database connections.
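
The sizing arithmetic used so far can be collected in one place. The sketch below is only an illustration: the class and method names are hypothetical, and the inputs are the figures assumed in this article (80,000 work orders per day, a 5-year service life, about ten operation logs per question order, 3,500 required connections, and up to 1,000 connections per database).

```java
class ShardingEstimate {

    /** Total rows accumulated over the service life of the system. */
    static long totalRows(long rowsPerDay, int years) {
        return rowsPerDay * 365L * years;
    }

    /** Number of databases needed to carry the connections, rounded up. */
    static int databaseCount(int requiredConnections, int connectionsPerDb) {
        return (requiredConnections + connectionsPerDb - 1) / connectionsPerDb;
    }

    public static void main(String[] args) {
        long orderRows = totalRows(80_000, 5);      // question order table
        long logRows = orderRows * 10;              // about ten logs per question order (assumed)
        int dbCount = databaseCount(3_500, 1_000);  // connections needed vs. per-database limit

        System.out.println("question order rows: " + orderRows);
        System.out.println("operation log rows:  " + logRows);
        System.out.println("databases needed:    " + dbCount);
    }
}
```

With these inputs, the question order table reaches 146 million rows, the operation log table about ten times that, and the connection requirement rounds up to four databases.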

Data is generally partitioned horizontally or vertically, and some complex business scenarios may involve both.

Here, data is partitioned horizontally by business dimension. For example, data partition by membership scatters data related to different members in different databases and tables based on certain rules. Since data is read and written from the member's perspective in business scenarios, the database is partitioned horizontally.

Vertical partition can be understood as splitting different fields of a table into different tables.

Let's use a small-sized e-commerce business as an example. The order information, including product information, buyer information, seller information, and payment information, is stored in a large table. They can also be vertically partitioned into separate tables and associated with the basic order information through order numbers.

Here is another example. If a table has ten fields and only three of them are modified frequently, those three fields can be split out into a sub-table. As a result, updates to them no longer take row locks on the rows that hold the other seven fields.

When a hotspot event occurs, how can you prevent the hotspot data from being stored in a certain database or table to avoid the uneven read and write pressure on different databases and tables?

This problem is similar to a load balancing problem, so we can borrow the solutions used in load balancing. The common load balancing algorithms are listed below:

| Load Balance Algorithm | Advantages | Disadvantages |
| --- | --- | --- |
| Round-Robin or Weighted Round-Robin | Simple and in sequence | Applicable to scenarios where requests are not associated with services, such as gateways and reverse proxies. Not applicable to scenarios where requests are bound to database shard routers, such as databases. |
| ID Modulo | Simple implementation; the formula for database shard route is id % number of database shards | Over-reliance on business ID with imbalance. For example, during Double 11 stress testing, a small number of tests are generally created with users initiating requests through Round-Robin. As a result, the testing traffic is mostly concentrated in a few database shards and table shards, thus, not achieving load balance. |
| Hash Modulo | Simple implementation; the formula for database shard route is hash(id) % number of database shards. Data is more evenly distributed than in the ID modulo mode. | Later scaling and data migration are inconvenient. Each scaling requires fission in multiples of two and migration of 50% of the data. |
| Consistent Hash | More evenly distributed data together with easy scaling. When adding database shards, only up to 1/N of the data needs to be migrated (N is the number of database shards), and the scaling is not limited by multiples of two. | The implementation is a bit complex, but it could be ignored based on the advantages provided. |
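
The scaling cost listed for hash modulo can be checked with a small experiment. The sketch below is an illustration with hypothetical names: it routes 10,000 sequential IDs with hash(id) % shard count, changes the shard count, and counts how many IDs land on a different shard.

```java
class HashModuloRescale {

    /** Route an ID to a shard with hash(id) % shardCount. */
    static int route(long id, int shardCount) {
        return Math.floorMod(Long.hashCode(id), shardCount);
    }

    /** Count the IDs in [0, total) whose shard changes when the shard count changes. */
    static int movedKeys(int total, int oldCount, int newCount) {
        int moved = 0;
        for (long id = 0; id < total; id++) {
            if (route(id, oldCount) != route(id, newCount)) {
                moved++;
            }
        }
        return moved;
    }

    public static void main(String[] args) {
        System.out.println("4 -> 8 shards: " + movedKeys(10_000, 4, 8) + " of 10000 IDs move");
        System.out.println("4 -> 5 shards: " + movedKeys(10_000, 4, 5) + " of 10000 IDs move");
    }
}
```

For this sequential data set, doubling from four to eight shards moves half of the IDs, while going from four to five moves most of them. This is why hash modulo is usually scaled in multiples of two.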

The solution adopted here is a tailored consistent hash algorithm. It differs from the standard consistent hash algorithm in two ways:

1. A difference in the number of hash ring nodes

The standard consistent hash ring has 2^32 positions (0 to 2^32 - 1). Because the data is partitioned by buyerId, which is evenly distributed and very large in number, the ring is reduced to 4,096 nodes.

2. A difference in database index algorithm

The standard consistent hash algorithm places each database on the hash ring using a formula similar to hash(database IP address) % 2^32. If the number of databases is small, virtual nodes have to be added to correct the skew on the ring. In addition, the position of a database may change along with its IP address, especially in the cloud environment.

Here, the position of a record on the hash ring is calculated with Math.abs(buyerId.hashCode()) % 4096, which distributes the data evenly over the ring. The remaining problem is to distribute the databases evenly over the ring. Since Alibaba's TDDL middleware is used, the databases only need to be located by the logical index numbers of the database shards. If the hash ring has 4,096 nodes and the single database is split into four databases, the four databases start at nodes 1, 1,025, 2,049, and 3,073. The index of the database shard can be calculated using the formula (Math.abs(buyerId.hashCode()) % 4096) / (4096 / DB_COUNT).
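
For comparison, the standard consistent hash approach with virtual nodes can be sketched as follows. This is a generic illustration rather than TDDL's implementation: the class name, the CRC32 hash, the `node#i` naming of virtual nodes, and the node names are all assumptions made for the example.

```java
import java.nio.charset.StandardCharsets;
import java.util.TreeMap;
import java.util.zip.CRC32;

class ConsistentHashRing {
    // Position on the ring (0 .. 2^32 - 1) -> physical node name
    private final TreeMap<Long, String> ring = new TreeMap<>();
    private final int virtualNodes;

    ConsistentHashRing(int virtualNodes) {
        this.virtualNodes = virtualNodes;
    }

    /** CRC32 yields an unsigned 32-bit value, which matches the size of the ring. */
    static long hash(String key) {
        CRC32 crc = new CRC32();
        crc.update(key.getBytes(StandardCharsets.UTF_8));
        return crc.getValue();
    }

    /** Place several virtual nodes per physical node to reduce skew. */
    void addNode(String node) {
        for (int i = 0; i < virtualNodes; i++) {
            ring.put(hash(node + "#" + i), node);
        }
    }

    /** Walk clockwise to the first virtual node at or after the key, wrapping around. */
    String locate(String key) {
        Long position = ring.ceilingKey(hash(key));
        return ring.get(position != null ? position : ring.firstKey());
    }

    public static void main(String[] args) {
        ConsistentHashRing ring = new ConsistentHashRing(100);
        for (String db : new String[] {"db0", "db1", "db2", "db3"}) {
            ring.addNode(db);
        }
        String before = ring.locate("buyer-42");
        ring.addNode("db4");
        String after = ring.locate("buyer-42");
        System.out.println("buyer-42: " + before + " -> " + after);
    }
}
```

When a node is added, a key either stays where it was or moves to the new node. Keys never move between the existing nodes, which is what keeps the migration volume small.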

The following is the Java pseudocode for calculating the database shard index:

```java
/**
 * The number of database shards
 */
public static final int DB_COUNT = 4;

/**
 * Obtain the index number of the database shard
 *
 * @param buyerId member ID
 * @return the database shard index, from 0 to DB_COUNT - 1
 */
public static int indexDbByBuyerId(Long buyerId) {
    return (Math.abs(buyerId.hashCode()) % 4096) / (4096 / DB_COUNT);
}
```

In the single-database environment, the auto-increment feature of MySQL is used for the primary key IDs of the question order table. However, after database sharding, this method is likely to produce duplicate primary key IDs across the database shards.

There are many solutions to this problem, such as using UUIDs. However, a UUID is long, takes up a lot of space, and performs poorly in queries. It would also change the primary key type, which is not conducive to smooth application migration.

The IDs can be split further. For example, the IDs are split into different segments, and different database tables use different ID segments. However, this raises a new question: how long should each ID segment be? Once the first database has used up its segment, it may run into the segment of the second database, so the IDs are no longer unique.

This problem can be solved if the ID segments of the database shards form an arithmetic sequence and each shard's next segment advances by a fixed step.

For example, let's say the ID interval for each allocation is 1,000, and the step is 1,000. The starting index and end index for each ID segment can be calculated using the following formula:

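Start index: X * step length + (Y - 1) * (number of databases * step length)

End index: X * step length + (Y - 1) * (number of databases * step length) + (1000 - 1)

Here, X is the number of the database shard and Y is the number of the allocation (first, second, and so on).

If the database is divided into four databases, the eventually allocated ID segments will be: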

| Database | ID Segment Allocated for the First Time | ID Segment Allocated for the Second Time | ID Segment Allocated for the N-th Time |
| --- | --- | --- | --- |
| Shard 1 | From 1,000 to 1,999 | From 5,000 to 5,999 | ... |
| Shard 2 | From 2,000 to 2,999 | From 6,000 to 6,999 | ... |
| Shard 3 | From 3,000 to 3,999 | From 7,000 to 7,999 | ... |
| Shard 4 | From 4,000 to 4,999 | From 8,000 to 8,999 | ... |
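
The segment formula can be written as a small helper to reproduce the table. This is a minimal sketch with a hypothetical class name. It assumes, as read off the table, that shards are numbered from 1 and allocations are counted from 1, and that the segment length equals the step (1,000 in the example).

```java
class IdSegmentAllocator {
    private final int dbCount; // number of database shards
    private final long step;   // step length, also used as the segment length here

    IdSegmentAllocator(int dbCount, long step) {
        this.dbCount = dbCount;
        this.step = step;
    }

    /** First ID of the segment for the given shard (from 1) and allocation (from 1). */
    long segmentStart(int shard, int allocation) {
        return shard * step + (allocation - 1L) * dbCount * step;
    }

    /** Last ID of the same segment. */
    long segmentEnd(int shard, int allocation) {
        return segmentStart(shard, allocation) + step - 1;
    }

    public static void main(String[] args) {
        IdSegmentAllocator allocator = new IdSegmentAllocator(4, 1_000);
        for (int shard = 1; shard <= 4; shard++) {
            for (int allocation = 1; allocation <= 2; allocation++) {
                System.out.printf("shard %d, allocation %d: %d to %d%n",
                        shard, allocation,
                        allocator.segmentStart(shard, allocation),
                        allocator.segmentEnd(shard, allocation));
            }
        }
    }
}
```

Because each shard jumps ahead by the number of databases multiplied by the step on every allocation, the segments of different shards never overlap.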

ID segments are separated first and then lengthened by a fixed step. This method works in the question order database, which is also the TDDL official solution.

Moreover, in practice, some additional information is usually added to the IDs to facilitate troubleshooting and analysis. For example, the question order ID includes the date, version, database shard index, and more.
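
Because the embedded fields sit at fixed positions, an ID can be split back into its parts during troubleshooting. The sketch below is an illustration only: the class name is hypothetical, and it assumes the 18-digit layout used by the ID builder in this article (6-digit date, 2-digit version, 2-digit database index, 8-digit serial number).

```java
class ProblemOrdIdParts {
    final String date;    // yyMMdd
    final String version; // ID rule version
    final int dbIndex;    // database shard index
    final long seqNum;    // serial number within the day

    private ProblemOrdIdParts(String date, String version, int dbIndex, long seqNum) {
        this.date = date;
        this.version = version;
        this.dbIndex = dbIndex;
        this.seqNum = seqNum;
    }

    /** Split an 18-digit question order ID into its fields. */
    static ProblemOrdIdParts parse(long id) {
        // Left-pad with zeros in case the leading digit of the date was dropped by the numeric type
        String digits = String.format("%018d", id);
        return new ProblemOrdIdParts(
                digits.substring(0, 6),
                digits.substring(6, 8),
                Integer.parseInt(digits.substring(8, 10)),
                Long.parseLong(digits.substring(10)));
    }

    public static void main(String[] args) {
        ProblemOrdIdParts parts = parse(180903010300001111L);
        System.out.println("date=" + parts.date + ", version=" + parts.version
                + ", dbIndex=" + parts.dbIndex + ", seqNum=" + parts.seqNum);
    }
}
```

With the database index readable from the ID itself, a record can be traced to its shard without recomputing the route from the member ID.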

The Java pseudocode for generating the question order IDs is listed below:

```java
import lombok.Setter;
import org.apache.commons.lang3.time.DateFormatUtils;

/**
 * Question order ID builder
 * <p>
 * ID format (18 digits): 6-digit date + 2-digit version number + 2-digit database index number + 8-digit serial number
 * Example: 180903010300001111
 * It indicates that the question order was generated on September 3rd, 2018 based on the ID generation rule of
 * version 01. The data is stored in database 03. The last 8 digits, 00001111, are the serial number generated.
 * The advantage of adopting this ID format is that there are 100 million (8-digit) serial numbers available every day.
 * </p>
 */
@Setter
public class ProblemOrdIdBuilder {
    public static final int DB_COUNT = 4;
    private static final String DATE_FORMATTER = "yyMMdd";

    private String version = "01";
    private long buyerId;
    private long timeInMills;
    private long seqNum;

    public Long build() {
        int dbIndex = indexDbByBuyerId(buyerId);
        StringBuilder pid = new StringBuilder(18)
            .append(DateFormatUtils.format(timeInMills, DATE_FORMATTER))
            .append(version)
            .append(String.format("%02d", dbIndex))
            // Keep the serial number within 8 digits (modulo 10^8)
            .append(String.format("%08d", seqNum % 100000000));
        return Long.valueOf(pid.toString());
    }

    /**
     * Obtain the database shard index number
     *
     * @param buyerId member ID
     * @return the database shard index, from 0 to DB_COUNT - 1
     */
    public int indexDbByBuyerId(Long buyerId) {
        return (Math.abs(buyerId.hashCode()) % 4096) / (4096 / DB_COUNT);
    }
}
```

In the distributed environment, a transaction may cover multiple database shards, which is relatively complex to process. Currently, two solutions are available:

How can you make a choice?

First of all, what we need is not a one-size-fits-all solution but one that works for ourselves. Let's take a look at the business scenarios that use transactions.

There are mainly two core actions for the members that come for a consultation, the customer service agents that solve problems for the members, or the synchronization of relevant data from the third-party system:

Question order data and operation logs are queried separately with no distributed correlated queries involved, which can be ignored.

What's left is the data submission scenario where the question order data and operation log data may be written at the same time.

With the scenario determined, the transaction solution can be chosen. Distributed transactions are easy to adopt because the middleware hides the complexity, but the more complexity it hides, the larger the performance loss. Currently, most applications are developed based on Spring Boot and use the embedded Tomcat container by default, unlike heavyweight application servers with built-in distributed transaction managers, such as WebSphere Application Server (provided by IBM) and WebLogic (provided by Oracle). Adopting distributed transactions would therefore require introducing an additional distributed transaction manager, which is more costly, so this option is excluded. Instead, the idea is to split a large transaction into small transactions that can each be handled by a single database.

Now, the point is to write the question order data of the same member and the related operation log data into the same database shard. The solution is simple. Since data is partitioned by member ID, you can use the same sharding route rules.
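
The routing idea can be sketched in Java as follows. This is an illustration with a hypothetical class name, not the TDDL implementation: it mirrors the database and table rules of the configuration shown in this article, assuming 4 database shards, 32 question order tables, and 512 operation log tables.

```java
class ShardRouter {
    static final int SLOTS = 4096;  // nodes on the hash ring
    static final int DB_COUNT = 4;  // database shards

    /** Database shard index, shared by every table that is partitioned by buyerId. */
    static int dbIndex(Long buyerId) {
        // Math.abs(Integer.MIN_VALUE) stays negative, but it is a multiple of 4096,
        // so the remainder is still 0 for that edge case.
        return (Math.abs(buyerId.hashCode()) % SLOTS) / (SLOTS / DB_COUNT);
    }

    /** Global table index: tables are grouped by database, then picked by hash within the group. */
    static int tableIndex(Long buyerId, int totalTables) {
        int hash = Math.abs(buyerId.hashCode());
        int tablesPerDb = totalTables / DB_COUNT;
        return dbIndex(buyerId) * tablesPerDb + hash % tablesPerDb;
    }

    public static void main(String[] args) {
        Long buyerId = 123456789L;
        System.out.println("database shard:      " + dbIndex(buyerId));
        System.out.println("question order table: " + tableIndex(buyerId, 32));
        System.out.println("operation log table:  " + tableIndex(buyerId, 512));
    }
}
```

Both tables derive the database index from the same buyerId with the same rule, so a question order and its operation logs always land in the same database shard and can be written in one local transaction.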

Let's take a look at the final TDDL sharding rule configuration:

```xml
<?xml version="1.0" encoding="UTF-8"?>
<!DOCTYPE beans PUBLIC "-//SPRING//DTD BEAN//EN" "http://www.springframework.org/dtd/spring-beans.dtd">
<beans>
  <bean id="vtabroot" class="com.taobao.tddl.interact.rule.VirtualTableRoot" init-method="init">
    <property name="dbType" value="MYSQL" />
    <property name="defaultDbIndex" value="PROBLEM_0000_GROUP" />
    <property name="tableRules">
      <map>
        <entry key="problem_ord" value-ref="problem_ord" />
        <entry key="problem_operate_log" value-ref="problem_operate_log" />
      </map>
    </property>
  </bean>

  <!-- Question (demand) order table -->
  <bean id="problem_ord" class="com.taobao.tddl.interact.rule.TableRule">
    <property name="dbNamePattern" value="PROBLEM_{0000}_GROUP" />
    <property name="tbNamePattern" value="problem_ord_{0000}" />
    <property name="dbRuleArray" value="((Math.abs(#buyer_id,1,4#.hashCode()) % 4096).intdiv(1024))" />
    <property name="tbRuleArray">
      <list>
        <value>
          <![CDATA[
            def hashCode = Math.abs(#buyer_id,1,32#.hashCode());
            int dbIndex = ((hashCode % 4096).intdiv(1024)) as int;
            int tableCountPerDb = 32 / 4;
            int tableIndexStart = dbIndex * tableCountPerDb;
            int tableIndexOffset = (hashCode % tableCountPerDb) as int;
            int tableIndex = tableIndexStart + tableIndexOffset;
            return tableIndex;
          ]]>
        </value>
      </list>
    </property>
    <property name="allowFullTableScan" value="false" />
  </bean>

  <!-- Operation log table -->
  <bean id="problem_operate_log" class="com.taobao.tddl.interact.rule.TableRule">
    <property name="dbNamePattern" value="PROBLEM_{0000}_GROUP" />
    <property name="tbNamePattern" value="problem_operate_log_{0000}" />
    <!-- 【#buyer_id,1,4#.hashCode()】 -->
    <!-- buyer_id represents the sharding field; 1 represents the database shard step; 4 indicates that there are 4 database shards, which will be used when scanning the whole table -->
    <property name="dbRuleArray" value="((Math.abs(#buyer_id,1,4#.hashCode()) % 4096).intdiv(1024))" />
    <property name="tbRuleArray">
      <list>
        <value>
          <![CDATA[
            def hashCode = Math.abs(#buyer_id,1,512#.hashCode());
            int dbIndex = ((hashCode % 4096).intdiv(1024)) as int;
            int tableCountPerDb = 512 / 4;
            int tableIndexStart = dbIndex * tableCountPerDb;
            int tableIndexOffset = (hashCode % tableCountPerDb) as int;
            int tableIndex = tableIndexStart + tableIndexOffset;
            return tableIndex;
          ]]>
        </value>
      </list>
    </property>
    <property name="allowFullTableScan" value="false" />
  </bean>
</beans>
```

For database replication, Data Transmission Service [3], the database replication and migration solution that Alibaba uses internally, is available on Alibaba Cloud. For more information, please contact Alibaba Cloud customer service or Alibaba Cloud database experts.

For the database shard switchover and release, you can choose between a release with downtime and a release without downtime:

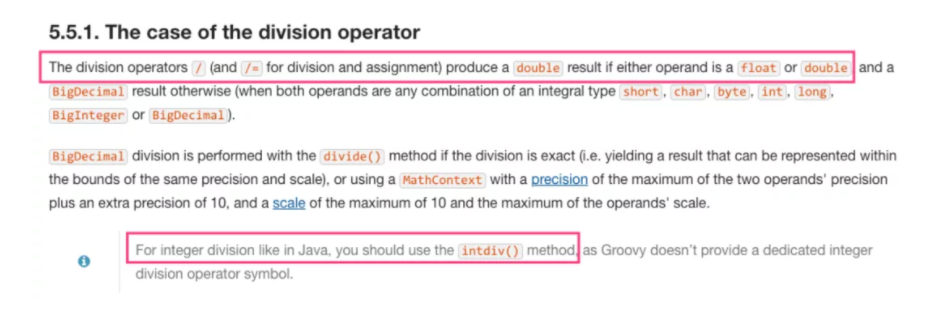

Alibaba's TDDL middleware calculates sharding routes using Groovy scripts. Unlike in Java, the / and /= operators in Groovy do not perform integer division: with two integer operands, they return a BigDecimal (or a double if either operand is a float or double). Therefore, the x.intdiv(y) method must be used for integer division.

```java
// In Java
System.out.println(5 / 3); // the result is 1
```

```groovy
// In Groovy
println (5 / 3); // the result is 1.6666666667
println (5.intdiv(3)); // the result is 1 (the correct usage of Groovy division operation)
```

For more information, please see "The case of the division operator" in the official Groovy documentation [4].

[1] https://baijiahao.baidu.com/s?id=1622441635115622194&wfr=spider&for=pc

[2] http://www.zsythink.net/archives/1182

[3] https://www.alibabacloud.com/product/data-transmission-service

[4] https://docs.groovy-lang.org/latest/html/documentation/core-syntax.html#integer_division
