By Shaumik Daityari, Alibaba Cloud Tech Share Author. Tech Share is Alibaba Cloud's incentive program to encourage the sharing of technical knowledge and best practices within the cloud community.

With the growing number of smart devices, we are creating unimaginable amounts of data: real-time updates of our locations, logs of our browsing history and comments on social networks. Earlier this year, Forbes reported that we create about 2.5 quintillion bytes of data every day (a quintillion is one followed by 18 zeroes), and that 90 percent of the data in existence was created in the last two years alone!

To understand the kind of impact technology has had in our lives in recent years, here's a comparison of scenes at Vatican City when the new Pope was being announced in 2005 and 2013. Notice the exponential growth in smartphone usage in just eight years! Much of this data that we generate is unstructured, which leads to a requirement of processing to generate insights, which further drive new businesses.

This tutorial covers the basics of natural language processing (NLP) in Python. If you have encountered a pile of textual data for the first time, this is the right place for you to begin your journey of making sense of the data. First, you will go through a step by step process of cleaning the text, followed by a few simple NLP tasks. You will conclude the tutorial with Named Entity Recognition (NER) and finding the statistically important words in your data through a metric called TF-IDF (term frequency - inverse document frequency).

This tutorial is based on Python version 3.6.5 and NLTK version 3.3. These come pre-installed in Anaconda version 1.8.7, although Anaconda is not a prerequisite.

The NLTK package can be installed through the pip package manager.

pip install nltk==3.3

Once the installation is done, you may verify its version.

>>> import nltk
>>> nltk.__version__
'3.3'

The demonstrations in this tutorial use sample tweets that are a part of the NLTK package. However, they need to be downloaded first.

>>> import nltk
>>> nltk.download('twitter_samples')
[nltk_data] Downloading package twitter_samples to C:\Users\Shaumik
[nltk_data] Daityari\AppData\Roaming\nltk_data...
[nltk_data] Unzipping corpora/twitter_samples.zip.
True

The path to which the data gets downloaded depends on the environment. The output here is the result of running on Windows. Once the samples are downloaded, you can import them using the following.

>>> from nltk.corpus import twitter_samples
>>> twitter_samples.fileids()
['negative_tweets.json', 'positive_tweets.json', 'tweets.20150430-223406.json']

NLTK's twitter_samples provides three sets of tweets: 5000 tweets each with negative and positive sentiments, and a third set of 20000 tweets. You will use the negative and positive tweets to train your model on sentiment analysis later in the post. To get all the tweets within any set, you can use the following code.

>>> text = twitter_samples.strings(
...     'tweets.20150430-223406.json')
>>> len(text)
20000
>>> text[:3]
['RT @KirkKus: Indirect cost of the UK being in the EU is estimated to be costing Britain £170 billion per year! #BetterOffOut #UKIP',
 'VIDEO: Sturgeon on post-election deals http://t.co/BTJwrpbmOY',
 'RT @LabourEoin: The economy was growing 3 times faster on the day David Cameron became Prime Minister than it is today.. #BBCqt http://t.co…']

Alternatively, if you are interested, you can get tweets from a specific time period, user or hashtag by using the Twitter API. However, this tutorial will focus on NLTK's sample tweets.

In the next section, you will learn how to clean the data before using any statistical tools, and then move on to common NLP techniques.

Textual data in its natural form is difficult to analyze programmatically. You must, therefore, convert text into smaller parts called tokens. A token is a sequence of contiguous characters that makes some logical sense. A simple way of tokenizing is to split the text on all whitespace characters.
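As a rough sketch of that simplest approach, the snippet below splits a made-up tweet on whitespace and compares the result with a small regular-expression tokenizer that also separates punctuation. The sample text and both helper names are illustrative assumptions and are not part of NLTK.

```python
import re


def whitespace_tokenize(text):
    """Split on any run of whitespace (spaces, tabs, newlines)."""
    return text.split()


def simple_regex_tokenize(text):
    """Return runs of word characters, or single non-space symbols."""
    return re.findall(r"\w+|[^\w\s]", text)


sample_text = "RT @user: NLP is fun!  #python"
print(whitespace_tokenize(sample_text))
print(simple_regex_tokenize(sample_text))
```

The whitespace split keeps punctuation glued to words (for example `fun!`), which is one reason dedicated tokenizers are usually preferred.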

NLTK provides a default tokenizer for tweets, and the tokenized method returns a list of lists of tokens.

>>> twitter_samples.tokenized('tweets.20150430-223406.json')[0]
['RT', '@', 'KirkKus', ':', 'Indirect', 'cost', 'of', 'the', 'UK', 'being',
 'in', 'the', 'EU', 'is', 'estimated', 'to', 'be', 'costing', 'Britain',
 '£170', 'billion', 'per', 'year', '!', '#', 'BetterOffOut', '#', 'UKIP']

As you can see, the default method returns all special characters too, which you can then remove through regular expressions. The process of tokenization takes some time because it's not a simple split on white space. For the purpose of this tutorial, you do not need this method.

Although the .tokenized() method can be used on NLTK's Twitter samples, you may also use the word_tokenize method for tokenization of any other text. To use word_tokenize, first download the punkt resource.

>>> nltk.download('punkt')
[nltk_data] Downloading package punkt to C:\Users\Shaumik
[nltk_data] Daityari\AppData\Roaming\nltk_data...
[nltk_data] Unzipping tokenizers\punkt.zip.
True
>>> from nltk.tokenize import word_tokenize
>>> word_tokenize(text[0])
['RT', '@', 'KirkKus', ':', 'Indirect', 'cost', 'of', 'the', 'UK', 'being',
 'in', 'the', 'EU', 'is', 'estimated', 'to', 'be', 'costing', 'Britain',
 '£170', 'billion', 'per', 'year', '!', '#', 'BetterOffOut', '#', 'UKIP']

Words have different grammatical forms: for instance, "swim", "swam", "swims" and "swimming" are various forms of the same verb. Depending on the requirement of your analysis, all of these versions may need to be converted to the same canonical form. In this section, you will explore two techniques to achieve this: stemming and lemmatization.

Stemming is a process of removing affixes from a word. It is a simple algorithm that chops off extra characters from the end of a word based on certain considerations. There are various algorithms for stemming. In this tutorial, let us focus on one of them, the Porter Stemming Algorithm (other algorithms include the Lancaster and Snowball Stemming Algorithms).

>>> from nltk.stem.porter import PorterStemmer
>>> stem = PorterStemmer()
>>> stem.stem('swimming')
'swim'

So far, so good. Let us try some more.

>>> stem.stem('swam')
'swam'

It did not work. Why?

Well, stemming is a process that is performed on a word without context. You can try the stemmer on a sample tweet and compare the changes.
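To make the "chopping off characters" idea concrete, here is a deliberately naive suffix stripper. It is a toy sketch and not the Porter algorithm: the suffix list, the minimum stem length and the function name `toy_stem` are all assumptions chosen for illustration.

```python
SUFFIXES = ("ing", "ed", "s")  # assumption: a tiny, hand-picked suffix list


def toy_stem(word):
    """Strip one known suffix, then undo a doubled final consonant."""
    for suffix in SUFFIXES:
        # Require at least three characters to remain after stripping.
        if word.endswith(suffix) and len(word) - len(suffix) >= 3:
            stem = word[: -len(suffix)]
            # "swimm" -> "swim"; leave doubled l, s and z alone ("pass").
            if len(stem) >= 2 and stem[-1] == stem[-2] and stem[-1] not in "aeioulsz":
                stem = stem[:-1]
            return stem
    return word


for word in ("swimming", "swims", "swam"):
    print(word, "->", toy_stem(word))
```

Because the function only looks at the characters of the word, an irregular form such as "swam" passes through unchanged, which is the same limitation you saw with the Porter stemmer.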

Lemmatization is the process that normalizes a word with context. To use the WordNetLemmatizer, you need to download an additional resource.

>>> nltk.download('wordnet')
[nltk_data] Downloading package wordnet to C:\Users\Shaumik
[nltk_data] Daityari\AppData\Roaming\nltk_data...
[nltk_data] Unzipping tokenizers\wordnet.zip.
True

Once downloaded, you are ready to use the lemmatizer.

>>> from nltk.stem.wordnet import WordNetLemmatizer
>>> lem = WordNetLemmatizer()
>>> lem.lemmatize('swam', 'v')
'swim'

The context here is provided by the second argument, which tells the function to treat swam as a verb. Lemmatization, however, comes at the cost of speed. A comparison of stemming and lemmatization ultimately comes down to a trade-off between speed and accuracy.

When you are running the lemmatizer on your tweets, how do you know the context of the word? You need to perform part-of-speech (POS) tagging to determine the role each word plays in a sentence, after downloading another resource from NLTK.

>>> nltk.download('averaged_perceptron_tagger')
[nltk_data] Downloading package averaged_perceptron_tagger to C:\Users\Shaumik
[nltk_data] Daityari\AppData\Roaming\nltk_data...
[nltk_data] Unzipping help\averaged_perceptron_tagger.zip.
True
>>> from nltk.tag import pos_tag
>>> sample = "Your time is limited, so don’t waste it living someone else’s life."
>>> pos_tag(word_tokenize(sample))
[('Your', 'PRP$'), ('time', 'NN'), ('is', 'VBZ'), ('limited', 'VBN'),
 (',', ','), ('so', 'IN'), ('don', 'JJ'), ('’', 'NN'), ('t', 'NN'),
 ('waste', 'NN'), ('it', 'PRP'), ('living', 'VBG'), ('someone', 'NN'),
 ('else', 'RB'), ('’', 'NNP'), ('s', 'JJ'), ('life', 'NN'), ('.', '.')]

For every word, the tagger returns a tag as a string. How do you make sense of the tags?

>>> nltk.download('tagsets')
[nltk_data] Downloading package tagsets to C:\Users\Shaumik
[nltk_data] Daityari\AppData\Roaming\nltk_data...
[nltk_data] Unzipping help\tagsets.zip.
True
>>> nltk.help.upenn_tagset()

From the full list of tags, here are some common ones and their meanings.

| Tag | Meaning |
|---|---|
| NNP | noun, proper, singular |
| NN | noun, common, singular or mass |
| VBD | verb, past tense |
| VBG | verb, present participle or gerund |
| CD | numeral, cardinal |
| IN | preposition or conjunction, subordinating |

In general, if a tag starts with NN, the word is a noun and if it starts with VB, the word is a verb.
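The prefix rule can be sketched as a small stand-alone helper that maps a Penn Treebank tag to the single-letter part-of-speech codes WordNet uses ('n', 'v', 'a', 'r'). The helper name `penn_to_wordnet`, the extra JJ and RB branches, and the choice to fall back to noun are assumptions made for this sketch; the tutorial's own function treats every remaining tag as an adjective.

```python
def penn_to_wordnet(tag):
    """Map a Penn Treebank tag to a WordNet part-of-speech code."""
    if tag.startswith('NN'):
        return 'n'  # noun
    if tag.startswith('VB'):
        return 'v'  # verb
    if tag.startswith('JJ'):
        return 'a'  # adjective
    if tag.startswith('RB'):
        return 'r'  # adverb
    return 'n'      # assumption: treat everything else as a noun


for tag in ('NNP', 'VBG', 'JJ', 'RB', 'IN'):
    print(tag, '->', penn_to_wordnet(tag))
```

Keeping the mapping in its own function makes it easy to change the fallback later without touching the lemmatization loop.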

The following function uses this rule to lemmatize every word in a sentence.

def lemmatize_sentence(sentence):
    lemmatizer = WordNetLemmatizer()
    lemmatized_sentence = []
    for word, tag in pos_tag(word_tokenize(sentence)):
        # Translate the Penn Treebank tag into a WordNet POS code
        if tag.startswith('NN'):
            pos = 'n'
        elif tag.startswith('VB'):
            pos = 'v'
        else:
            pos = 'a'
        lemmatized_sentence.append(lemmatizer.lemmatize(word, pos))
    return lemmatized_sentence

You are now ready to use the lemmatizer.

>>> lemmatize_sentence(sample)
['Your', 'time', 'be', 'limit', ',', 'so', 'don', '’', 't', 'waste', 'it',
 'live', 'someone', 'else', '’', 's', 'life', '.']

The next step in the processing of textual data is to remove "noise". But what is noise?

Any part of the text that is irrelevant to the processing of the data is noise. Noise does not add meaning or information to data. Do note that noise may be specific to your final objective. For instance, the most common words in a language are called stop words. Some examples of stop words are "is", "the" and "a". They are generally irrelevant when processing language, unless a specific use case warrants their inclusion.

For the sample tweets, you should remove hyperlinks, Twitter handles (@ mentions), punctuation and stop words.

To search for hyperlinks and handles and remove them, you will use the regular expressions library in Python, through the package re.

>>> import re
>>> sample = 'Go to https://alibabacloud.com/campaign/techshare/ for tech tutorials'
>>> re.sub('http[s]?://(?:[a-zA-Z]|[0-9]|[$-_@.&+#]|[!*\(\),]|'\
...        '(?:%[0-9a-fA-F][0-9a-fA-F]))+','', sample)
'Go to  for tech tutorials'

It first searches for a substring that matches a URL: one that starts with http:// or https://, followed by letters, numbers or special characters. Once a pattern is matched, the .sub() method replaces it with an empty string. Note that the spaces on either side of the URL are left in place.

Similarly, you can remove @ mentions with the following code.

>>> sample = 'Go to @alibaba for techshare tutorials'
>>> re.sub('(@[A-Za-z0-9_]+)','', sample)
'Go to  for techshare tutorials'

The code searches for Twitter handles, that is, an @ followed by one or more letters, numbers or underscores, and removes them. In the next step, you can remove any punctuation marks using the string library.

>>> import string
>>> string.punctuation
'!"#$%&\'()*+,-./:;<=>?@[\\]^_`{|}~'

To remove punctuation, you can use the following snippet.

>>> sample = 'Hi!!! How are you?'
>>> sample.translate(str.maketrans('', '', string.punctuation))
'Hi How are you'

This snippet searches for any character that is a part of the list of punctuation marks above and removes it.
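The three steps above can be chained on a raw string. The sketch below is one possible arrangement, not the tutorial's final function: it uses a simplified URL pattern (`https?://\S+`, an assumption rather than the longer expression shown earlier), and the name `clean_text` is made up for this example.

```python
import re
import string

URL_PATTERN = re.compile(r'https?://\S+')        # assumption: simplified URL pattern
MENTION_PATTERN = re.compile(r'@[A-Za-z0-9_]+')  # same handle pattern as above
PUNCTUATION_TABLE = str.maketrans('', '', string.punctuation)


def clean_text(text):
    """Remove URLs, @ mentions and punctuation, then tidy the whitespace."""
    text = URL_PATTERN.sub('', text)
    text = MENTION_PATTERN.sub('', text)
    text = text.translate(PUNCTUATION_TABLE)
    # Collapse the gaps left behind by the removed pieces.
    return ' '.join(text.split())


raw = 'Go to https://alibabacloud.com/campaign/techshare/ for tech tutorials by @alibaba!!!'
print(clean_text(raw))
```

The order matters: stripping punctuation first would delete the `@` and `://` characters that the other two patterns rely on.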

In the last step, you should also remove stop words. You will use a built-in list of stop words in nltk. You need to download the stopwords resource from nltk and use the .words() method to get the list of stop words.

>>> nltk.download('stopwords')
[nltk_data] Downloading package stopwords to C:\Users\Shaumik
[nltk_data] Daityari\AppData\Roaming\nltk_data...
[nltk_data] Unzipping corpora/stopwords.zip.
True
>>> from nltk.corpus import stopwords
>>> stop_words = stopwords.words('english')
>>> len(stop_words)
179
>>> stop_words[:3]
['i', 'me', 'my']

You may combine all the above snippets to build a function that removes noise from text. It takes two arguments: the tokenized tweet, and an optional tuple of stop words.

def remove_noise(tokens, stop_words = ()):
    '''Remove @ mentions, hyperlinks, punctuation, and stop words'''
    clean_tokens = []
    lemmatizer = WordNetLemmatizer()
    for token, tag in pos_tag(tokens):
        # Remove hyperlinks
        token = re.sub('http[s]?://(?:[a-zA-Z]|[0-9]|[$-_@.&+#]|[!*\(\),]|'\
                       '(?:%[0-9a-fA-F][0-9a-fA-F]))+','', token)
        # Remove Twitter handles
        token = re.sub("(@[A-Za-z0-9_]+)","", token)

        if tag.startswith("NN"):
            pos = 'n'
        elif tag.startswith('VB'):
            pos = 'v'
        else:
            pos = 'a'

        # Normalize the token
        token = lemmatizer.lemmatize(token, pos)

        # Keep the token in its original case unless it is empty,
        # a punctuation mark or a stop word
        if len(token) > 0 and token not in string.punctuation and token.lower() not in stop_words:
            clean_tokens.append(token)
    return clean_tokens

You may also use the .lower() string method in Python before splitting the sentence. This function skips that step because lowercasing could cause issues during Named Entity Recognition (NER) later in the tutorial. Additionally, you could also remove the word RT from tweets.
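Dropping RT can be handled like any other stop word. The snippet below is a minimal sketch of that idea using a tiny hand-written stop word set instead of NLTK's list; `SAMPLE_STOP_WORDS`, `drop_stop_words` and the `extra` parameter are hypothetical names introduced only for this example.

```python
SAMPLE_STOP_WORDS = {'is', 'the', 'a', 'of', 'to'}  # hypothetical, tiny subset


def drop_stop_words(tokens, stop_words=SAMPLE_STOP_WORDS, extra=('rt',)):
    """Filter tokens case-insensitively while keeping their original case."""
    blocked = set(stop_words) | set(extra)
    return [token for token in tokens if token.lower() not in blocked]


tokens = ['RT', 'The', 'economy', 'is', 'growing']
print(drop_stop_words(tokens))
```

Comparing on `token.lower()` while appending the untouched token keeps capitalization available for later steps such as NER.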

In this tutorial, we have only used a simple form of removing noise. There are many other complications that may arise while dealing with natural language! It is possible for words to be combined without spaces ("iDontLikeThis"), which will be eventually analyzed as a single word, unless specifically separated. Further, exaggerated words such as "hmm", "hmmm" and "hmmmmmmm" are going to be treated differently too. These refinements in the process of noise removal are specific to your data and can be done only after carefully analyzing the data at hand.
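As a hedged illustration of those two refinements, the regular expressions below split run-together words on capital letters and squeeze exaggerated character runs. Both patterns and both function names are assumptions written for this sketch; real data will usually need rules tuned to it.

```python
import re


def split_camel_case(word):
    """Split 'iDontLikeThis' style words on capital letters."""
    return re.findall(r'[A-Z]?[a-z]+|[A-Z]+(?![a-z])|\d+', word)


def squeeze_repeats(word):
    """Collapse any character repeated three or more times down to two."""
    return re.sub(r'(.)\1{2,}', r'\1\1', word)


print(split_camel_case('iDontLikeThis'))
print([squeeze_repeats(w) for w in ('hmm', 'hmmm', 'hmmmmmmm')])
```

Squeezing to two characters rather than one leaves ordinary words such as "good" untouched, at the cost of not merging forms like "so" and "sooo".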

If you are using the Twitter API, you may want to explore Twitter Entities, which give you the entities related to a tweet directly, grouping them into hashtags, URLs, mentions and media items.

The most basic form of analysis on textual data is to compute word frequencies. A single tweet is too small an entity to find out the distribution of words, so the analysis will be done on all of the 20000 tweets. Let us first create a list of cleaned tokens for each of the tweets in the data.

tokens_list = twitter_samples.tokenized('tweets.20150430-223406.json')
clean_tokens_list = [remove_noise(tokens, stop_words) for tokens in tokens_list]

If your data set is large and you do not require lemmatization, you can change the function above to either use a stemmer or skip normalization altogether.

Next, we create a list of all words in the tweets from our list of cleaned tokens, clean_tokens_list.

all_words = []
for tokens in clean_tokens_list:
    for token in tokens:
        all_words.append(token)

Now that you have compiled all words in the sample of tweets, you can find out which are the most common words using the FreqDist class of NLTK. The .most_common() method lists the words that occur most frequently in the data.

>>> freq_dist = nltk.FreqDist(all_words)

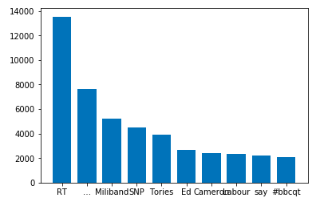

>>> freq_dist.most_common(10)

[('RT', 13539),

('…', 7663),

('Miliband', 5222),

('SNP', 4491),

('Tories', 3923),

('Ed', 2686),

('Cameron', 2419),

('Labour', 2338),

('say', 2208),

('#bbcqt', 2106)]It is not surprising that RT is the most common words. A few years ago, Twitter didn't have the option of adding a comment to a retweet, so an unofficial way of doing so was to follow the structure --- "comment" RT @mention "original tweet". Further, the tweets are from a time when Britain was contemplating leaving the EU, and top terms contain names of political parties and politicians.
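The same kind of count can be sketched with only the standard library, since FreqDist behaves much like Python's collections.Counter. The toy token lists below are invented for illustration and the helper name `count_words` is an assumption; it flattens the lists and counts in one step.

```python
from collections import Counter
from itertools import chain


def count_words(token_lists):
    """Flatten a list of token lists and count each token."""
    return Counter(chain.from_iterable(token_lists))


toy_tokens = [['RT', 'Miliband', 'SNP'], ['RT', 'SNP'], ['RT', 'Cameron']]
counts = count_words(toy_tokens)
print(counts.most_common(2))
```

Using `chain.from_iterable` avoids building the intermediate all_words list, which can matter for larger collections of tweets.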

Next, you may plot the same in a bar chart using matplotlib. This tutorial uses version 2.2.2, which can be installed using pip.

pip install matplotlib==2.2.2

Now that you have installed matplotlib, you are ready to plot the most frequent words.

import matplotlib.pyplot as plt

items = freq_dist.most_common(10)
labels, values = zip(*items)
width = 0.75
plt.bar(labels, values, width, align='center')
plt.show()

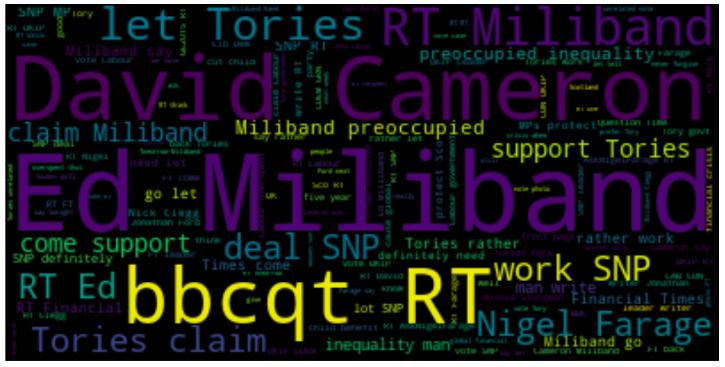

To visualize the distribution of words, you can create a word cloud using the wordcloud package.

from wordcloud import WordCloud

cloud = WordCloud(max_font_size=60).generate(' '.join(all_words))
plt.figure(figsize=(16,12))
plt.imshow(cloud, interpolation='bilinear')
plt.axis('off')
plt.show()

Named Entity Recognition (NER) is the process of detecting the named entities such as persons, locations and organizations from your text. As listed in the NLTK book, here are the various types of entities that the built in function in NLTK is trained to recognize.

| Named Entity | Examples |
|---|---|
| ORGANIZATION | Georgia-Pacific Corp., WHO |
| PERSON | Eddy Bonte, President Obama |
| LOCATION | Murray River, Mount Everest |
| DATE | June, 2008-06-29 |
| TIME | two fifty a m, 1:30 p.m. |
| MONEY | 175 million Canadian Dollars, GBP 10.40 |
| PERCENT | twenty pct, 18.75 % |
| FACILITY | Washington Monument, Stonehenge |
| GPE (Geo Political Entity) | South East Asia, Midlothian |

Before you get on to the process of NER, you need to download the following.

>>> nltk.download('maxent_ne_chunker')
[nltk_data] Downloading package maxent_ne_chunker to C:\Users\Shaumik
[nltk_data] Daityari\AppData\Roaming\nltk_data...
[nltk_data] Unzipping help\maxent_ne_chunker.zip.
True
>>> nltk.download('words')
[nltk_data] Downloading package words to C:\Users\Shaumik
[nltk_data] Daityari\AppData\Roaming\nltk_data...
[nltk_data] Unzipping help\words.zip.
True

To find named entities in your text, you need to create chunks of the data as follows.

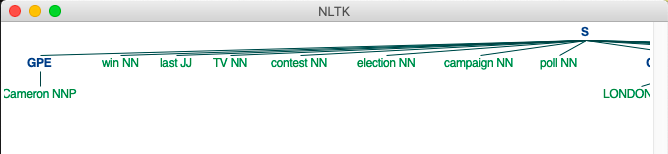

from nltk import ne_chunk, pos_tag

chunked = ne_chunk(pos_tag(clean_tokens_list[15]))

The output of ne_chunk is an nltk.Tree object that you can visualize using its .draw() method.

chunked.draw()

You can see in the image that chunked is a tree structure with the string S as the root node. Every child node of S is either a pair of a word and its part-of-speech tag, or a type of named entity. You can easily pick out which nodes represent named entities in the graph because they are roots of a sub-tree. The child node of a named entity node is another word and tag pair.

To collect the named entities, you can traverse the tree generated by chunked and check whether a node has the type nltk.tree.Tree:

from collections import defaultdict

named_entities = defaultdict(list)
for node in chunked:
    # Named entities are sub-trees; plain (word, tag) leaves are ignored
    if type(node) is nltk.tree.Tree:
        # Get the type of entity
        label = node.label()
        # Keep the first word of the entity
        entity = node[0][0]
        named_entities[label].append(entity)

Once you have created a defaultdict with all named entities, you can verify the output.

>>> named_entities
defaultdict(list,
            {'GPE': ['Cameron'],
             'ORGANIZATION': ['LONDON'],
             'PERSON': ['David']})

The TF-IDF (term frequency - inverse document frequency) is a statistic that signifies how important a term is to a document. Ideally, the terms at the top of the TF-IDF list should play an important role in deciding the topic of the text.
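To see what the statistic measures, here is a small pure-Python sketch of the textbook formulation, where the weight of a term in a document is its relative frequency multiplied by log(N / df), with N the number of documents and df the number of documents containing the term. The toy documents and the function name `tf_idf` are assumptions for illustration. scikit-learn's TfidfTransformer uses a smoothed IDF and normalizes each row by default, so its numbers will differ from this sketch.

```python
import math
from collections import Counter


def tf_idf(documents):
    """Return one {term: weight} dict per tokenized document."""
    n_docs = len(documents)
    # Document frequency: in how many documents does each term appear?
    doc_freq = Counter()
    for tokens in documents:
        doc_freq.update(set(tokens))

    weights = []
    for tokens in documents:
        counts = Counter(tokens)
        total = len(tokens)
        weights.append({
            term: (count / total) * math.log(n_docs / doc_freq[term])
            for term, count in counts.items()
        })
    return weights


toy_docs = [['snp', 'labour', 'labour'], ['snp', 'tories'], ['snp', 'ukip']]
for doc_weights in tf_idf(toy_docs):
    print(doc_weights)
```

In the toy data, 'snp' appears in every document, so its weight is 0.0 everywhere, while 'labour' scores highest in the only document that mentions it.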

There is a TextCollection class of NLTK that computes the TF-IDF of a document. However, as the documentation suggests, this class is a prototype, and therefore may not be efficient. In this tutorial, you will work with the TF-IDF transformer of the scikit-learn package (version 0.19.1) and numpy (version 1.14.3), which you can install through pip.

CountVectorizer converts text to a matrix form, and TfidfTransformer normalizes the matrix to generate the TF-IDF of each term.

from sklearn.feature_extraction.text import CountVectorizer, TfidfTransformer
import numpy as np

cv = CountVectorizer(min_df=0.005, max_df=.5, ngram_range=(1,2))
sentences = [' '.join(tokens) for tokens in clean_tokens_list]
cv.fit(sentences)

When initializing the class, min_df and max_df set thresholds on the minimum and maximum number of documents (in our case, sentences) in which a term must appear. They can be specified as absolute numbers or ratios. Here, you are looking at terms that appear in at least 0.5% of documents and at most 50% of documents. You need to verify how many terms passed your thresholds.

>>> len(cv.vocabulary_)
460

You may tighten the thresholds in case you want fewer terms. You may proceed with this for now. The next step is to create a matrix of the data.

cv_counts = cv.transform(sentences)

This creates a matrix whose dimensions are the number of sentences and the number of terms in the vocabulary. Next, you can calculate the density of the matrix, that is, how much of it is filled with non-zero values.

>>> 100.0 * cv_counts.nnz / (cv_counts.shape[0] * cv_counts.shape[1])
1.8398804347826088

nnz gives you the number of non-zero elements in the matrix. In this case, only about 1.84% of the entries are non-zero, so the matrix is highly sparse. Next, you need to compute the TF-IDF weights and get the terms that are most important to the documents.

transformed_weights = TfidfTransformer().fit_transform(cv_counts)

# Average the TF-IDF weight of each term across all tweets
features = {}
for feature, weight in zip(cv.get_feature_names(),
                           np.asarray(transformed_weights.mean(axis=0)).ravel().tolist()):
    features[feature] = weight

# Sort the terms by weight, highest first
sorted_features = [(key, features[key])
                   for key in sorted(features, key=features.get, reverse=True)]

Here are the top ten terms and their weights that are most important to the set of tweets. Not surprisingly, the list is dominated by politicians (miliband, cameron) and parties (ukip, labour).

>>> sorted_features[:10]
[('snp', 0.05178276636641074),
 ('miliband', 0.05100430290549024),
 ('ukip', 0.04243121435695297),
 ('tories', 0.03728900816044612),
 ('ed', 0.03455733071449128),
 ('labour', 0.033745972243756014),
 ('bbcqt', 0.033517709341470525),
 ('cameron', 0.03308571509950097),
 ('farage', 0.033009781157403516),
 ('tory', 0.03129438215249338)]

Alternatively, topic modelling can be done to determine what a document is about. Here is an implementation of the LDA algorithm using the package gensim, in case you are interested.

This tutorial introduced you to the basics of Natural Language Processing in Python. It explained the pre-processing stages that the data goes through before statistical analysis, and then moved on to common NLP tasks: word frequency, word cloud, NER and TF-IDF.

We hope that you found this tutorial informative. Do you use a different tool for NLP in Python? Do let us know in the comments below.
