By Zongzhi Chen

Unique secondary indexes are commonly used by customers to ensure the uniqueness of records on the indexes. However, many customers have encountered occasional deadlocks or unexpected lock waits, and they have provided feedback on this issue.

Theoretically, PolarDB uses the READ COMMITTED isolation level by default. In most scenarios at this isolation level, gap locks are not used. Therefore, deadlocks are not expected to occur in normal cases. So, why does this issue occur?

In fact, this is an old problem that has remained unresolved for ten years.

The following case describes the bug issue.

-- Prepare test data

CREATE TABLE `ti` (

`session_ref_id` bigint(16) NOT NULL AUTO_INCREMENT,

`customer_id` bigint(16) DEFAULT NULL,

`client_id` int(2) DEFAULT '7',

`app_id` smallint(2) DEFAULT NULL,

PRIMARY KEY (`session_ref_id`),

UNIQUE KEY `uk1` (`customer_id`,`client_id`,`app_id`)

) ENGINE=InnoDB DEFAULT CHARSET=utf8;

INSERT INTO ti (session_ref_id, customer_id, client_id, app_id) VALUES (4000, 8000, 10, 5);

INSERT INTO ti (session_ref_id, customer_id, client_id, app_id) VALUES (4090, 9000, 10, 5);

INSERT INTO ti (session_ref_id, customer_id, client_id, app_id) VALUES (6000, 10000, 10, 5);

INSERT INTO ti (session_ref_id, customer_id, client_id, app_id) VALUES (7000, 14000, 10, 5);In Session 1, delete the record (4090, 9000, 10, 5) and insert the record (5000, 9000, 10, 5), which has the same secondary index.

-- session 1

session1 > start transaction;

Query OK, 0 rows affected (0.00 sec)

session1 > DELETE FROM ti WHERE session_ref_id = 4090;

Query OK, 1 row affected (0.00 sec)

session1 > INSERT INTO ti (session_ref_id, customer_id, client_id, app_id) VALUES (5000, 9000, 10, 5);

Query OK, 1 row affected (0.00 sec)Then comes the issue.

According to the following code, there is a lock wait when inserting the record (NULL, 8001, 10, 5). Theoretically, lock waits should not occur because the primary key is automatically incremental, and the secondary index (8001, 10, 5) does not conflict with any other records. Then why would this lock wait occur?

Meanwhile, the insertion of (NULL, 7999, 10, 5) introduces no issue, and the secondary index (7999, 10, 5) does not conflict with any other secondary indexes, either.

-- session 2

session2 > set innodb_lock_wait_timeout=1;

Query OK, 0 rows affected (0.00 sec)

session2 > start transaction;

Query OK, 0 rows affected (0.00 sec)

session2 > INSERT INTO ti (session_ref_id, customer_id, client_id, app_id) VALUES (NULL, 8001, 10, 5);

ERROR 1205 (HY000): Lock wait timeout exceeded; try restarting transaction

session2 > INSERT INTO ti (session_ref_id, customer_id, client_id, app_id) VALUES (NULL, 7999, 10, 5);

Query OK, 1 row affected (0.00 sec)View the transaction lock information and we can see the following code.

mysql> select ENGINE_TRANSACTION_ID, index_name, lock_type, lock_mode, LOCK_STATUS, lock_data from performance_schema.data_locks;

+-----------------------+------------+-----------+------------------------+-------------+--------------+

| ENGINE_TRANSACTION_ID | index_name | lock_type | lock_mode | LOCK_STATUS | lock_data |

+-----------------------+------------+-----------+------------------------+-------------+--------------+

| 99537179 | NULL | TABLE | IX | GRANTED | NULL |

| 99537179 | uk1 | RECORD | X,GAP,INSERT_INTENTION | WAITING | 9000, 10, 5 |

| 99537176 | NULL | TABLE | IX | GRANTED | NULL |

| 99537176 | PRIMARY | RECORD | X,REC_NOT_GAP | GRANTED | 4090 |

| 99537176 | uk1 | RECORD | X,REC_NOT_GAP | GRANTED | 9000, 10, 5 |

| 99537176 | uk1 | RECORD | S | GRANTED | 9000, 10, 5 |

| 99537176 | uk1 | RECORD | S | GRANTED | 10000, 10, 5 |

| 99537176 | uk1 | RECORD | S,GAP | GRANTED | 9000, 10, 5 |

+-----------------------+------------+-----------+------------------------+-------------+--------------+During Session 1, uk1 holds the next-key locks for (10000, 10, 5) and (9000, 10, 5).

When Session 2 inserts the record (8001, 10, 5), it follows the normal INSERT logic. During the insertion phase, it requests the GAP | insert_intention lock for the next key, which conflicts with the next-key lock for (9000, 10, 5) held by trx1, resulting in a lock wait.

However, if the inserted record is (7999, 10, 5), it requests the GAP | insert_intention lock for the next key (8000, 10, 5), which does not conflict, allowing for a successful insertion.

Now, let's explore why Session 1 needs to hold the next-key lock. To analyze this issue further, we need to understand the unique check process of the secondary index.

The following pseudocode illustrates the process.

find the B-tree page in the secondary index you want to insert the value to

assert the B-tree page is latched

equal-range = the range of records in the secondary index which conflict with your value

if(equal-range is not empty){

release the latches on the B-tree and start a new mini-transaction

for each record in equal-range

lock gap before it, and the record itself (this is what LOCK_S does)

also lock the gap after the last(equal-range)

also (before Bug #32617942 was fixed) lock the record after last(equal-range)

once you are done with all of the above, find the B-tree page again and latch it again

}

insert the record into the page and release the latch on the B-tree page.As you can see, the process of inserting a record into a secondary unique index is divided into two phases.

There must be a lock or a latch between Phases 1 and 2. Otherwise, even though it is confirmed in Phase 1 that no conflict exists and the record can be inserted, if another transaction inserts a conflicting record between these two phases, the record to be inserted in Phase 2 will still encounter a conflict.

Therefore, in the current implementation, if there is at least one identical record (a delete-marked record, in most cases) on the gap, a gap X lock needs to be added to the entire range. Then, other transactions are prohibited from inserting data in this gap range, and the atomicity of Phases 1 and 2 is guaranteed by the lock.

If there is no identical record on the gap, gap locks are unnecessary.

For example, consider a table that contains only primary keys and secondary keys.

The existing secondary index records are <1, 1>, <4, 2>, <10(delete-mark), 3>, <10(d), 8>, <10(d), 11>, <10(d), 21>, <15, 9>. If the secondary index record <10, 6> is to be inserted, a next-key lock need to be added to <10, 3>, <10, 8>, <10, 11>, <10, 21>, <15, 9>.

Note: The record <15, 9> also needs a next-key lock to ensure that records like <10, 100> are not allowed to be inserted. However, if we change <15, 9> to <15000, 9>, the locked gap range will be too large, which will introduce the preceding issue.

The specific implementation is in row_ins_scan_sec_index_for_duplicate().

What would happen if the next-key lock is removed?

This change was once made, but it has brought a serious unique constraint issue. That is, bug#73170, which led to duplicates in unique secondary indexes. Therefore, the official quickly reverted the fix, leaving the problem unsolved. What is the cause?

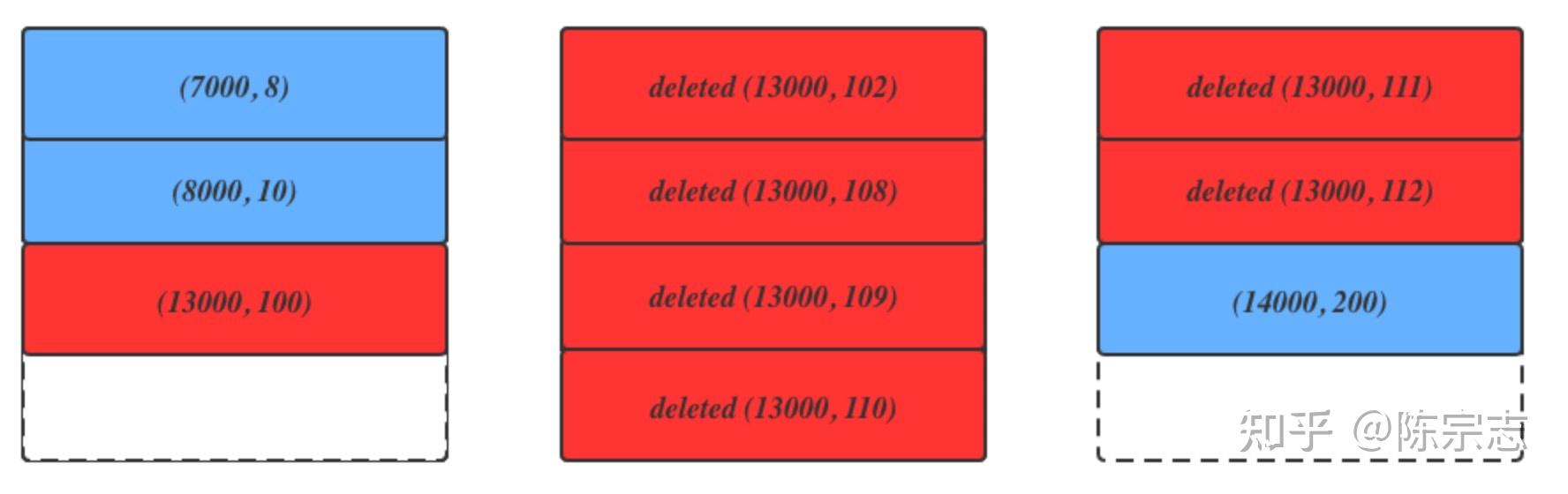

We can simplify the above secondary indexes by changing (9000, 10, 5) to 9000, because the value (10, 5) is always the same. The following figure shows a simplified structure of the secondary indexes on the page.

The red background indicates the deleted records and the blue background indicates records that are not deleted.

Then, if we change the next-key lock to record lock as the official did, and if two records (99, 13000) and (120, 13000) are inserted, the following will occur:

The record (99, 13000) adds a record S lock to all secondary indexes including (13000, 100), (13000, 102), (13000, 108)...(13000, 112) during the unique check phase, and adds a GAP | insert_intention lock to (13000, 100) during the insertion phase.

The record (120, 13000) adds a record S lock to all secondary indexes including (13000, 100), (13000, 102), (13000, 108)...(13000, 112) during the unique check phase, and adds a GAP | inser_intention lock to (13000, 112) during the insertion phase.

Then, both records can be inserted at the same time, failing the unique key constraint.

For specific mtr case, see bug#68021.

How is the official planning to fix this issue?

There are two main ideas.

1. We know that the locks of InnoDB must obey the 2PL (two-phrase locking) rule, which explains why the next-key lock cannot be released immediately after the unique check until the transaction ends. Therefore, the official hopes to distinguish between the locks for transactions and the locks for the unique check. The lifecycles of these two types of locks are supposed to be different. The former cannot be released until the transaction ends. The latter can be released as soon as the current statement ends. Of course, as Fungo proposed in the issue, theoretically, the lock for the unique check should be released immediately after the check is completed, because the statement duration is still too long. For multi-value insertion, the previous insertion could impact the subsequent insertion.

The official has introduced lock_duration_t::AT_LEAST_STATEMENT in certain situations, but challenges remain, due to InnoDB's lock inheritance and reuse. For example, when it is necessary to request for a gap lock, the current transaction already has one applied by the unique check, so the system will directly return true for this application. This is because the current implementation defaults that all locks will be released together when the transaction is committed. However, if the gap lock requested by the unique check is released in advance, a conflict will occur. Therefore, the lifecycle needs to be considered when reusing locks.

Lock inheritance presents another complication: if a record is purged or inserted within the gap, it inherits the statement's lifecycle. Consequently, when the unique check's gap lock is released, the inherited lock must be released as well. These are detailed, nuanced issues, and officials are addressing them incrementally.

2. The other main idea is to use a latch instead of a lock to perform the unique check. We know that a latch has a lifecycle much shorter than a lock. Generally speaking, latches are short-lived, and locks are long-lived. Latches can be released when the mtr is committed.

However, this also introduces an issue. If there are a large number of delete-marked records covering multiple pages, the mtr will hold a large number of latches. It is known that mtr is the smallest unit of InnoDB Btree modification. If the mtr holds too many page latches, the concurrent performance of Btree will inevitably decline.

In addition, as page latches are required for the execution of statements such as UNDO PURGE, the process may become I/O operations. This leads to a long latch duration and as a result, a long unique check duration. Latch conflicts are treated differently from lock conflicts. When a latch conflict occurs, the current thread waits. By contrast, when a lock conflict happens, the current transaction will enter the lock wait. It waits to be awakened after the conflicting lock is released. For more details, see Goetz's article.

This is similar to the PostgreSQL method that Fungo proposed in the issue. When PostgreSQL begins the unique check, an X latch is used for the first page, and latches for the following pages are held and released through latch coupling.

I have also proposed my solution in this issue.

In the function row_ins_scan_sec_index_for_duplicate(), change the next-key lock to a record lock. Then, in the insertion phase, replace the requested LOCK_X | LOCK_GAP | LOCK_INSERT_INTENTION with LOCK_X | LOCK_ORDINARY | LOCK_INSERT_INTENTION;

After these changes, the record lock is held, and LOCK_ORDINARY | LOCK_INSERT_INTENTION is waited. Therefore, Session 2 and Session 3 conflict with each other and cannot be inserted at the same time.

Why is LOCK_GAP held instead of LOCK_ORDINARY during the insertion phase?

Let's consider a scenario where the existing records are 1, 4, and 10, and we want to insert records 6 and 7.

In this case, the transaction requests the lock for record 10. As records 6 and 7 are not yet in the B-tree, record 10 is considered the next record. If we use LOCK_ORDINARY, the simple insertions of records 6 and 7 will wait for each other, leading to a deadlock.

Therefore, we can only add LOCK_GAP.

However, deadlocks are desirable for secondary keys that may conflict with each other. For example, if the existing records are <1, 1>, <4, 2>, <10(delete-mark), 3>, <10(d), 8>, <10(d), 11>, <10(d), 21>, and <15, 9>, and we want to insert <10, 6> in trx1 and <10,7> in trx2. In the first step, record S locks are added to <10, 3>, <10, 8>, <10, 11>, and <10, 21>. During the insertion, we check if the record is to be inserted between <10, 3> and <10, 8>, and if there is a 10. If so, the LOCK_X | LOCK_ORDINARY | insert_intention requested for <10, 8> will conflict with the held record S lock, resulting in a deadlock.

The same situation occurs when inserting <10, 6> and <10, 9>.

In the first step, a record S lock is added to all records of <10, x>.

During the insertion, trx1 requests LOCK_ORDINARY for <10, 8> and holds the record s lock of <10, 11> that trx2 needs. Similarly, trx2 requests LOCK_X | LOCK_ORDINARY of <10, 11> and holds the record S lock of <10, 8> that trx1 needs. This results in a deadlock conflict between the two transactions.

Finally, let's further explore the issue. Primary keys are also unique indexes, but they do not suffer from this problem. Why is that?

The main reason is that in secondary indexes, when a record is deleted, it is marked as "delete-marked" while still being retained when a new record is inserted.

When inserting data after a delete in primary indexes, the delete mark of the deleted record is changed to a non-delete mark. Additionally, a delete-marked record is written into the undo log. If there is a query for the historical version, the data will be restored from the undo log using mvcc. This ensures that the same delete-marked record will not span multiple pages. For example, in the case mentioned, (13000, 100) and (13000, 112) would not be split onto Page 1 and Page 3.

During insertion, similar to the two-phase insertion in secondary indexes, a latch or lock is required for protection. The primary index ensures atomicity of the two phases by holding the page X latch, preventing the two insertions from occurring simultaneously and ensuring uniqueness.

In operations such as DELETE + INSERT, INSERT... ON DUPLICATE KEY UPDATE, and REPLACE INTO, a next-key lock is applied to all matching records and the following record to maintain the atomicity between the phase of unique check and the phase of insertion. As a result, even if the subsequent record to be inserted doesn't have a conflict, it may still be blocked, potentially leading to a deadlock.

About Database Kernel | Transaction System of PolarDB-X Storage Engine – Part2

Common Deadlock Scenarios in MySQL: REPLACE INTO Statement Concurrency

ApsaraDB - January 30, 2024

Alibaba Clouder - February 13, 2019

ApsaraDB - January 16, 2026

ApsaraDB - August 23, 2024

Alibaba Cloud Product Launch - January 22, 2019

Alibaba Clouder - December 12, 2017

PolarDB for MySQL

PolarDB for MySQL

Alibaba Cloud PolarDB for MySQL is a cloud-native relational database service 100% compatible with MySQL.

Learn More Database for FinTech Solution

Database for FinTech Solution

Leverage cloud-native database solutions dedicated for FinTech.

Learn More Oracle Database Migration Solution

Oracle Database Migration Solution

Migrate your legacy Oracle databases to Alibaba Cloud to save on long-term costs and take advantage of improved scalability, reliability, robust security, high performance, and cloud-native features.

Learn More Database Migration Solution

Database Migration Solution

Migrating to fully managed cloud databases brings a host of benefits including scalability, reliability, and cost efficiency.

Learn MoreMore Posts by ApsaraDB