In this article, Lei Chunwei, Senior Development Engineer from Alibaba Cloud, introduces the technologies and stories of MaxCompute and Calcite. The specific content includes:

1) What is a query optimizer?

2) The specific practice of the MaxCompute query optimizer.

3) The follow-up plan of MaxCompute.

4) What kind of personal growth has he experienced, from being recruited as an Alibaba engineer to being a Calcite committer?

The following content is based on his speech and the related PowerPoint slides.

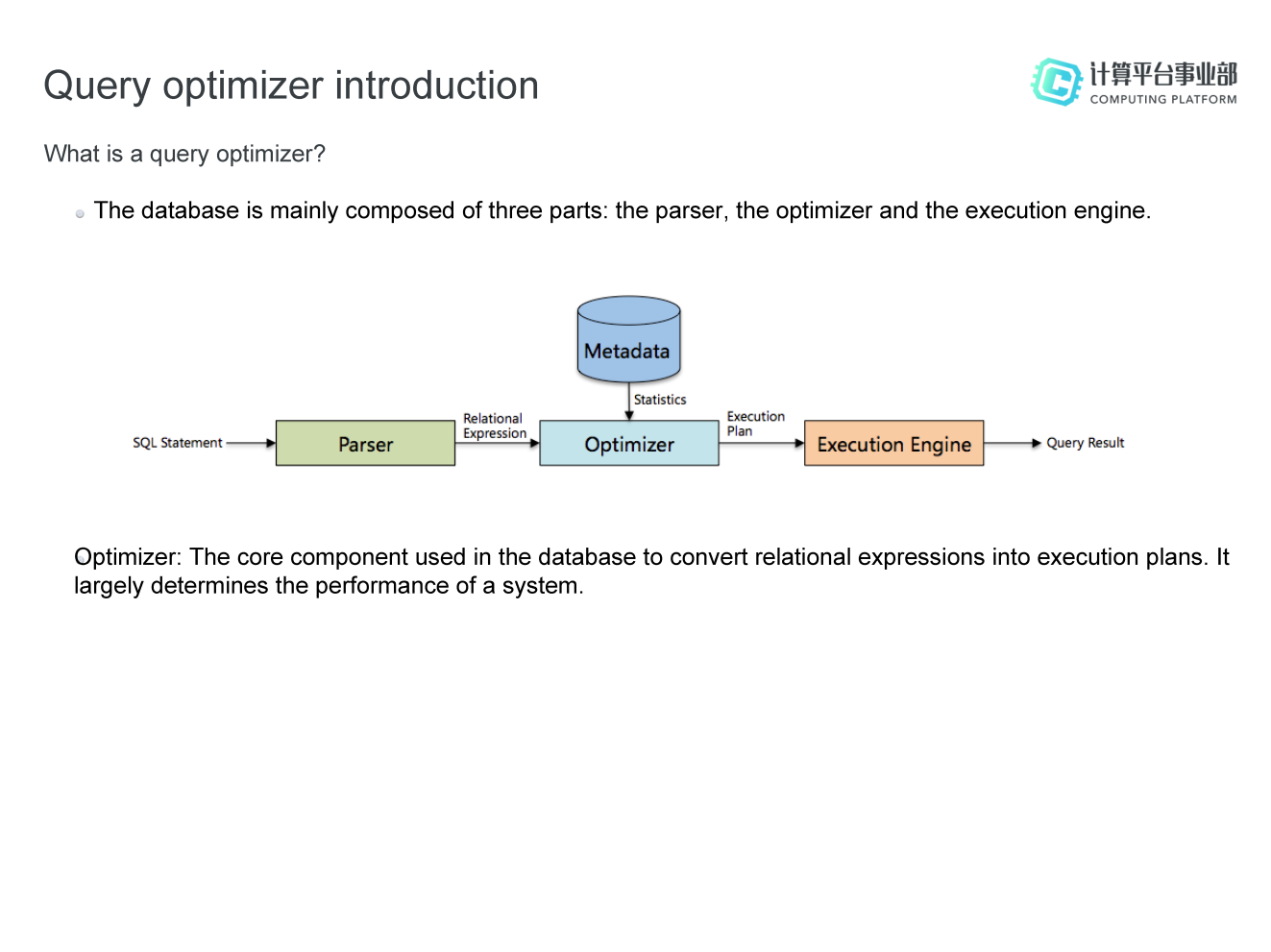

A database is generally composed of three parts: the parser, the optimizer, and the execution engine. After an SQL statement enters the database, the parser first parses it into a relational expression, the optimizer then turns that expression into a physical execution plan, and finally the execution engine executes the plan. The optimizer is the core component that converts relational expressions into execution plans, and it largely determines the performance of the system. If we compare a database to a human body, the optimizer is the brain, which determines how far and how fast the body can go.

The query optimizer can be divided into two types:

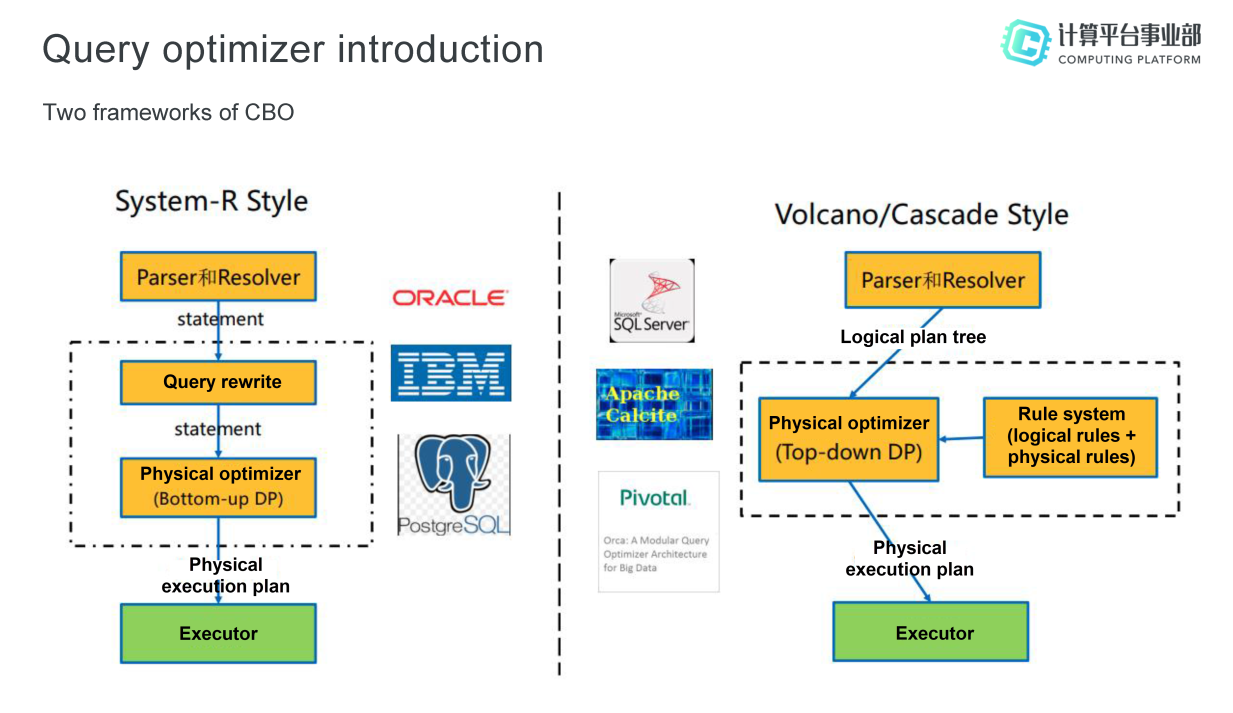

The cost-based optimizer (CBO) mainly has two styles of framework: the System-R style and the Volcano/Cascades style.

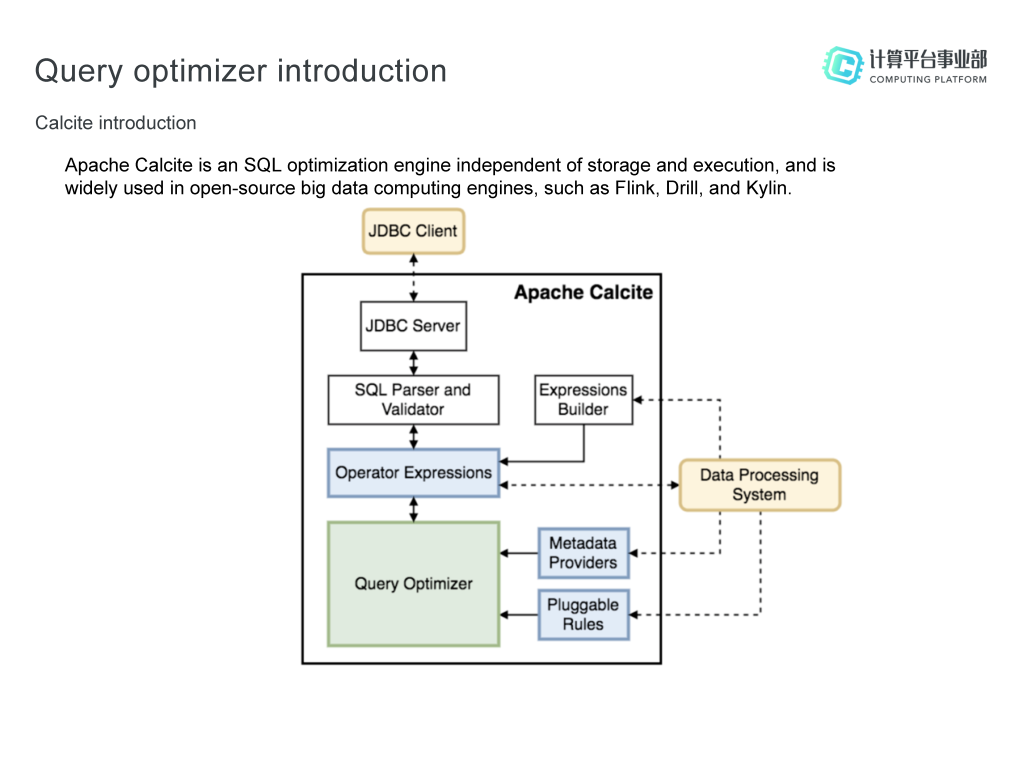

Apache Calcite is an SQL optimization engine that is independent of storage and execution. It is widely used in open-source big data computing engines such as Flink, Drill, and Kylin. The goal of Calcite is "a solution for all demand scenarios": to provide a unified query engine for different computing platforms and data sources. As shown in the preceding figure, the Calcite architecture does not include storage or execution. An SQL statement enters the JDBC Server through the JDBC Client and is processed by the Parser and Validator, which produce a relational algebra expression (operator expression). Alternatively, users can build a relational algebra expression directly with the Expressions Builder, which suits systems that have their own syntax. The relational algebra expression then enters the Query Optimizer, the core engine, which runs the dynamic programming algorithm and computes costs. Calcite also provides pluggable components, such as Metadata Providers (which supply metadata to the optimization engine) and Pluggable Rules (which let users define custom optimization rules).

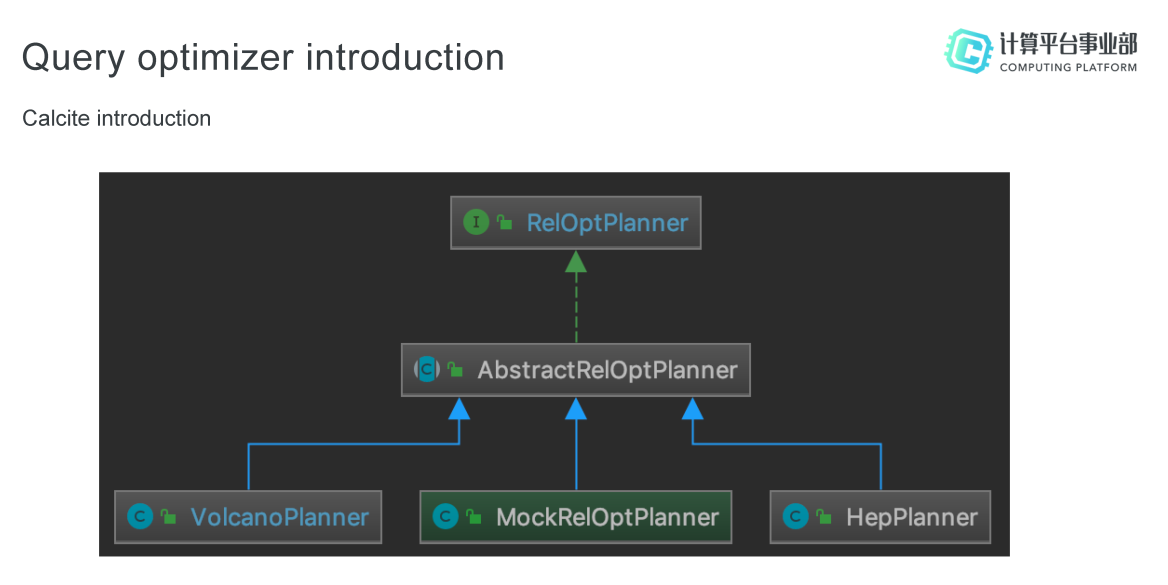

Calcite mainly provides two optimizer implementations:

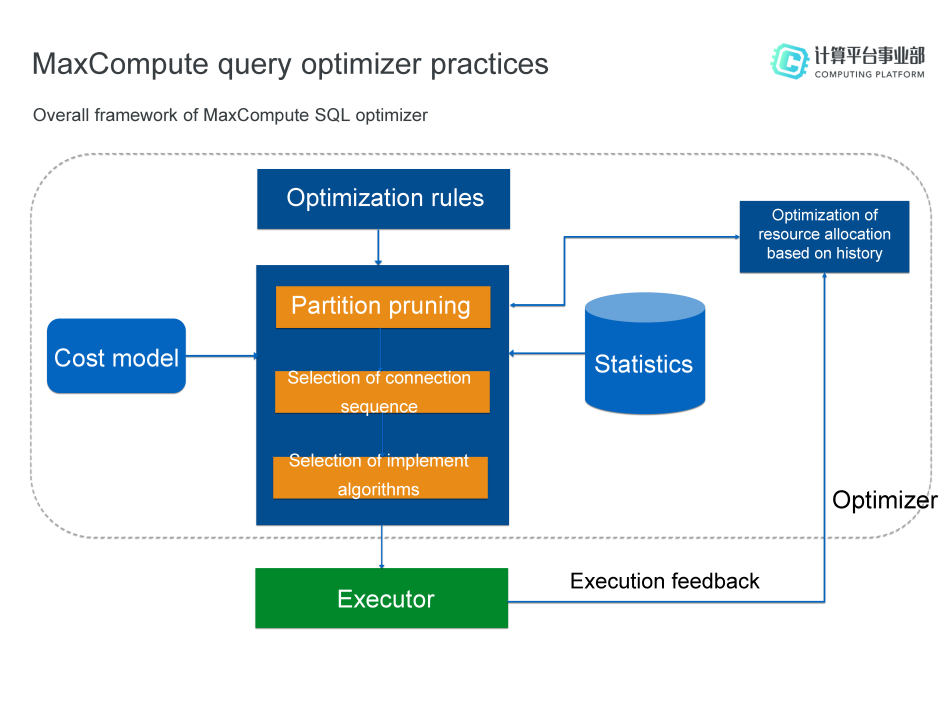

The overall framework of the MaxCompute SQL optimizer is shown in the following figure. It mainly includes five parts:

The MaxCompute SQL optimizer implements the Shuffle Removal feature on top of the preceding optimization engine. Shuffle refers to the process of transferring data from an upstream task to a downstream task, including sharding and sorting. If the output of the upstream task already has the required physical attributes, the Shuffle process is unnecessary.

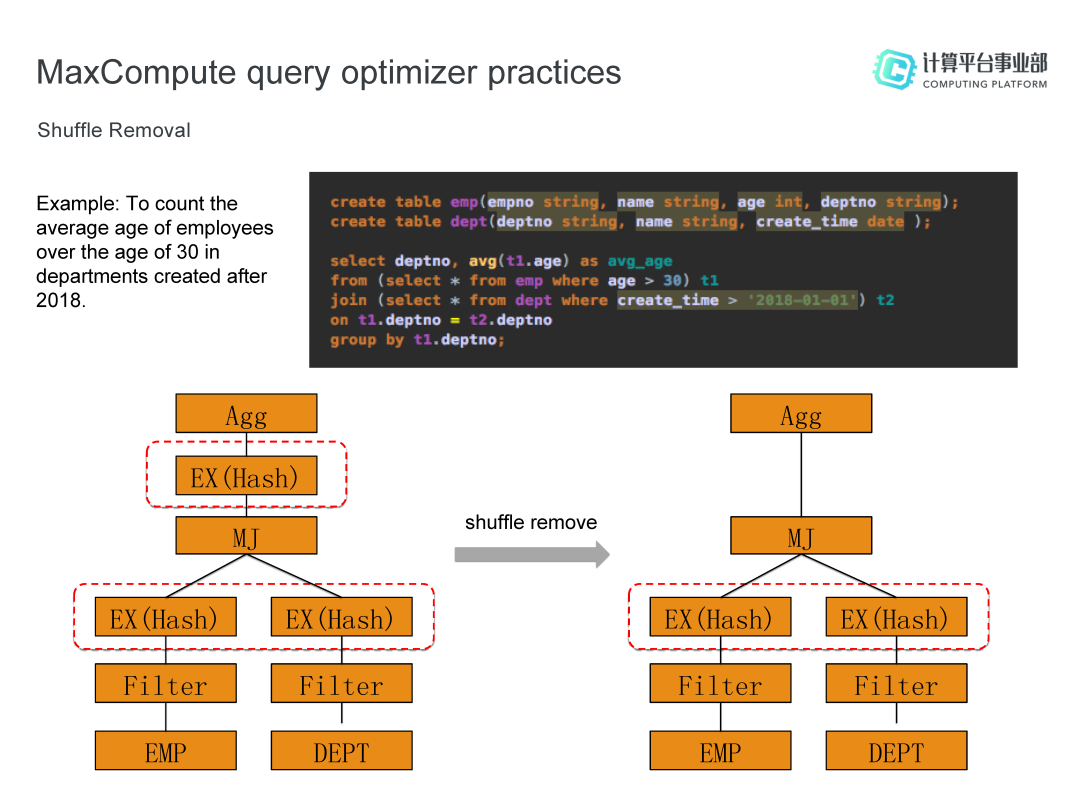

For example, to compute the average age of employees over 30 years old in departments created after 2018, MaxCompute can produce the physical execution plan shown in the above figure. First, employees over 30 are filtered from the employee table "emp" and departments created after 2018 are filtered from the department table "dept"; the two results are then joined. Because the data volume may be large, Merge Join is selected. Merge Join needs rows with the same key to be on the same machine, so a Shuffle process (EX in the figure) is required between each upstream Filter task and the Merge Join task. The joined data must then be aggregated (Agg in the figure) to compute the average age, which would normally require another Shuffle. However, because Merge Join is used, the data has already been distributed and sorted, so the Shuffle between Join and Aggregation does not need to be repeated. The Shuffle Removal feature removes such repeated Shuffle processes.
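The query behind this example can be written down concretely. The following is a minimal sketch that runs it on SQLite through Python's `sqlite3` module rather than on MaxCompute; the column names (`age`, `dept_id`, `created`) and the sample rows are assumptions made for illustration, since the article only names the tables "emp" and "dept".

```python
import sqlite3

# In-memory SQLite database standing in for MaxCompute (illustration only).
conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE dept (id INTEGER PRIMARY KEY, created TEXT);
CREATE TABLE emp  (id INTEGER PRIMARY KEY, age INTEGER, dept_id INTEGER);
INSERT INTO dept VALUES (1, '2017-05-01'), (2, '2018-03-15'), (3, '2019-01-10');
INSERT INTO emp VALUES
  (1, 28, 2), (2, 35, 2), (3, 45, 2),
  (4, 50, 1), (5, 31, 3), (6, 33, 3);
""")

# Filter both tables, join on the department key, then aggregate on the same
# key. Joining and grouping on one key is what makes a second shuffle redundant
# in a distributed engine.
QUERY = """
SELECT e.dept_id, AVG(e.age) AS avg_age
FROM emp e JOIN dept d ON e.dept_id = d.id
WHERE e.age > 30 AND d.created > '2018-01-01'
GROUP BY e.dept_id
ORDER BY e.dept_id
"""
avg_age_by_dept = conn.execute(QUERY).fetchall()
print(avg_age_by_dept)
```

SQLite executes this on a single machine, so no shuffle is involved; the sketch only fixes the logical query that the physical plan in the figure implements.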

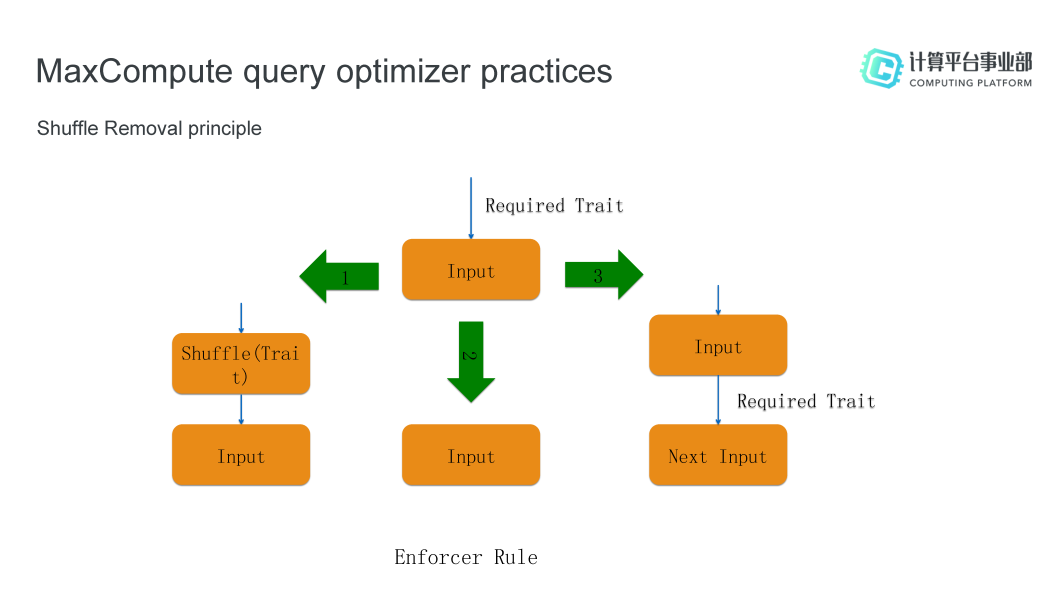

The implementation of the Shuffle Removal feature relies on the Enforcer Rule. The specific process is shown in the following figure. Operators such as Join and Aggregate require their Input data to have certain physical attributes (Traits), which are obtained through operations such as sorting and sharding. The Enforcer Rule guarantees the required physical attributes (Required Trait) of the Input data: 1) If the Input data does not have the Required Trait, a Shuffle process is added; 2) If the Input data already has the Required Trait, no Shuffle process is added; 3) The requirement can be passed along, that is, an operator can push the Required Trait down to its own Input. Since its launch, the Shuffle Removal feature has brought resource savings of about 10% to Alibaba Cloud.
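The first two cases of the Enforcer Rule can be sketched in a few lines. This is a simplified illustration, not the MaxCompute or Calcite implementation: the `Trait`, `Node`, and `enforce` names are hypothetical, a plan node has a single input, and a trait is reduced to partition keys and sort keys.

```python
from dataclasses import dataclass
from typing import Optional, Tuple


@dataclass(frozen=True)
class Trait:
    """Physical property of a data stream: how it is partitioned and sorted."""
    partitioned_by: Tuple[str, ...] = ()
    sorted_by: Tuple[str, ...] = ()

    def satisfies(self, required: "Trait") -> bool:
        # Simplification: exact match on partition keys, prefix match on sort keys.
        return (self.partitioned_by == required.partitioned_by
                and self.sorted_by[:len(required.sorted_by)] == required.sorted_by)


@dataclass(frozen=True)
class Node:
    """A plan node with the trait it delivers (single input, for brevity)."""
    op: str
    delivered: Trait
    child: Optional["Node"] = None


def enforce(node: Node, required: Trait) -> Node:
    """Return the node unchanged if it already delivers the required trait;
    otherwise wrap it in an Exchange (shuffle) that produces the trait."""
    if node.delivered.satisfies(required):
        return node
    return Node("Exchange", required, node)


by_dept = Trait(partitioned_by=("dept_id",), sorted_by=("dept_id",))

# Case 1: the filter output has no useful trait, so a shuffle is added.
filter_emp = Node("Filter(age > 30)", Trait())
join_input = enforce(filter_emp, by_dept)

# Case 2: the merge join already delivers data partitioned and sorted by
# dept_id, so the aggregate's requirement is met without another shuffle.
merge_join = Node("MergeJoin(dept_id)", by_dept, join_input)
agg_input = enforce(merge_join, by_dept)

print(join_input.op, agg_input.op)
```

A real optimizer also explores the third case, pushing the requirement down to the operator's own input, and compares the cost of the alternatives; the sketch leaves that out.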

MaxCompute handles 99% of computing and storage at Alibaba, and also focuses on improving the user experience and computing efficiency for external users. This section introduces the new features of MaxCompute 2.0: the compiler, the development tool Studio, procedural features that improve development efficiency (such as Script Mode and Parameterized View), and custom features (such as UDT and External Table). As these features have not yet been officially released on the public cloud, you can search for the DingTalk group number 11782920 and contact the group owner, Jin Heng, to use them.

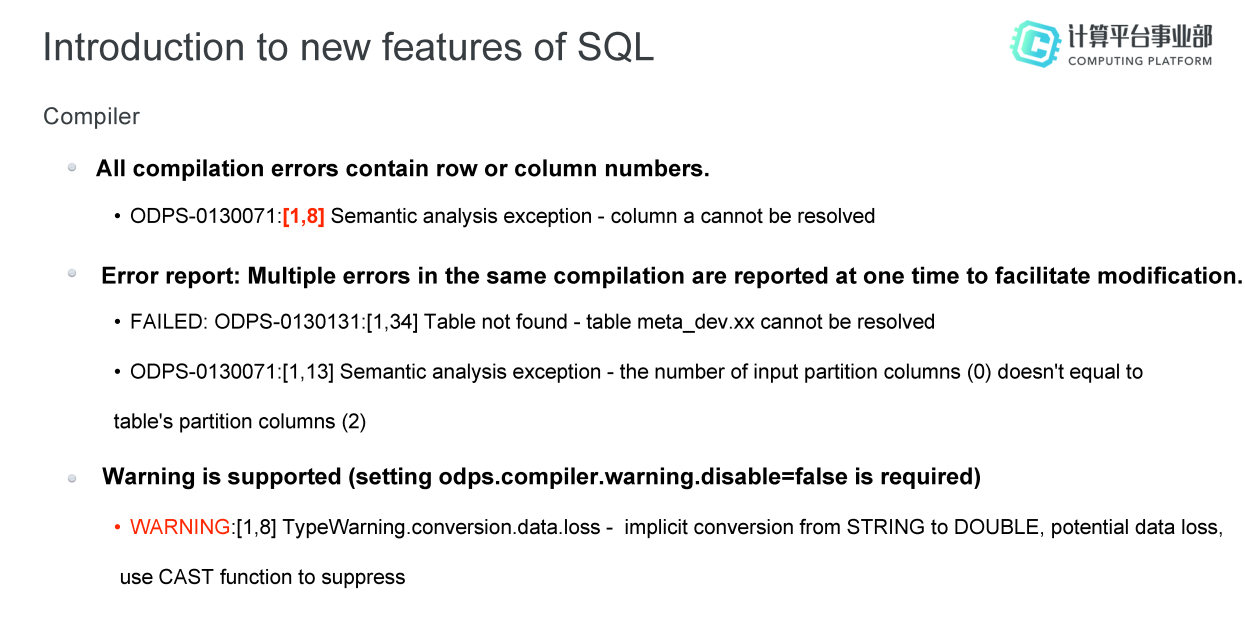

In MaxCompute 2.0, the compiler has three major new features: 1) Every compilation error includes line and column numbers, so users can locate the error precisely; 2) Multiple errors found in the same compilation are reported together in a single error report, so they can all be fixed in one pass; 3) Warnings are supported to alert users to potential problems, such as the loss of precision in a DOUBLE = STRING comparison. Warnings need to be enabled in advance by setting odps.compiler.warning.disable=false. These three features are very practical and greatly improve development efficiency.
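The first two compiler features, positions on every error and all errors reported in one pass, can be illustrated with a toy checker. The sketch below is not the MaxCompute compiler: `check_variables` is a hypothetical function that only looks for uses of undefined `@name` variables, and the `@name := ...` assignment form is assumed here as the script syntax.

```python
import re
from typing import List, NamedTuple


class Diagnostic(NamedTuple):
    line: int      # 1-based line number
    column: int    # 1-based column number
    message: str


def check_variables(script: str) -> List[Diagnostic]:
    """Collect every use of an undefined @variable instead of stopping at the first."""
    defined = set()
    diagnostics = []
    for line_no, text in enumerate(script.splitlines(), start=1):
        definition = re.match(r"\s*@(\w+)\s*:=", text)
        # Only the right-hand side of an assignment is scanned for references.
        body_start = definition.end() if definition else 0
        for ref in re.finditer(r"@(\w+)", text[body_start:]):
            if ref.group(1) not in defined:
                diagnostics.append(Diagnostic(
                    line_no,
                    body_start + ref.start() + 1,
                    f"undefined variable @{ref.group(1)}",
                ))
        if definition:
            defined.add(definition.group(1))
    return diagnostics


script = (
    "@a := SELECT * FROM emp;\n"
    "@c := SELECT * FROM @a JOIN @b;\n"
    "SELECT * FROM @d;"
)
errors = check_variables(script)
for e in errors:
    print(f"{e.line}:{e.column}: {e.message}")
```

Because the checker keeps going after the first problem, both undefined variables are reported in a single run, each with the position where it occurs.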

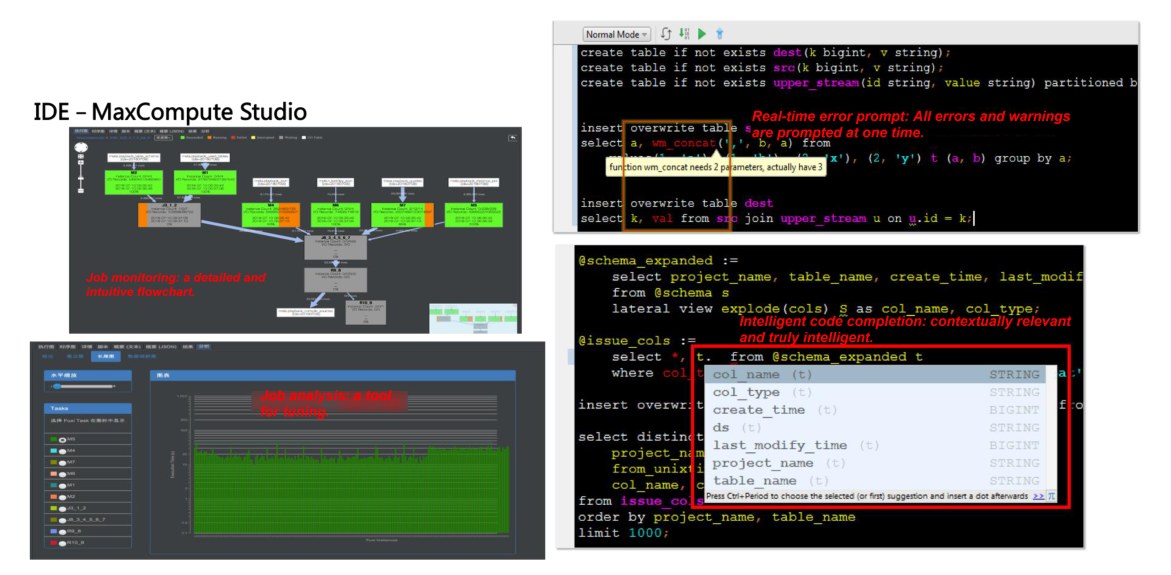

The second highly recommended feature is MaxCompute 2.0 Studio, an IDE in which users can write scripts. Its main benefits are the following (as shown in the following figure):

1) Job monitoring: The Studio provides a detailed and intuitive job flowchart, allowing users to easily view the task flow and overall execution plan (the subgraph in the upper left corner).

2) Job analysis: The Studio provides data for job analysis (the subgraph in the lower left corner), such as the execution time of each operator in each task. With this information, users can see which parts of the plan execute poorly, which consume the most resources, and which take the longest, and can then optimize those parts specifically.

3) Real-time SQL error prompt: The Studio reports SQL errors in real time. It shows all errors and warnings at once, together with their locations in the SQL being written (the subgraph in the upper right corner).

4) Intelligent code completion: The Studio can determine and prompt the commands that a user may want to enter based on some of the letters entered by the user and the context, helping the user to fill in the code automatically and conveniently (the subgraph in the lower right corner).

Problem scenarios: In a project with complex processing logic, you may need to extract data from multiple tables, join them, and then join the results again. In addition, multiple tables may need to be output at different stages of the run, which cannot be expressed even with CTEs (Common Table Expressions). In this case, the steps can only be split into multiple jobs and submitted in sequence. This approach is complex and costly to maintain, and it also hurts performance significantly.

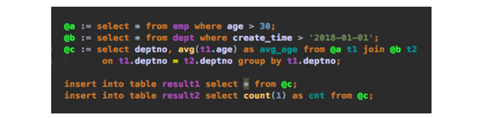

Script mode solution: For this requirement, MaxCompute 2.0 provides the Script Mode feature. We illustrate it with the earlier example (computing the average age of employees over 30 years old in departments created after 2018). With MaxCompute script mode, all commands can be written in a single SQL file. The user can first define a variable A that holds the employees older than 30 from the employee table "emp", define a variable B that holds the departments created after January 1, 2018 from the department table "dept", and then define a variable C that computes the average age of the employees over 30 in these departments. The results can then be processed further within the same script. For example, you can insert the required results into different tables with several script commands (the specific commands are shown in the following figure). For complex queries, this approach makes the query and the subsequent operations clearer and easier to maintain.
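The staged structure described above can be imitated outside MaxCompute. The following sketch uses SQLite temporary views as stand-ins for the script-mode variables A, B, and C and then writes two outputs from them; it is an analogy run through Python's `sqlite3`, not MaxCompute script syntax, and the column names, sample rows, and output table names (`senior_emp`, `dept_avg_age`) are assumptions.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE dept (id INTEGER, created TEXT);
CREATE TABLE emp  (id INTEGER, age INTEGER, dept_id INTEGER);
INSERT INTO dept VALUES (1, '2017-05-01'), (2, '2018-03-15');
INSERT INTO emp  VALUES (1, 28, 2), (2, 35, 2), (3, 45, 2), (4, 50, 1);

-- Hypothetical output tables.
CREATE TABLE senior_emp   (id INTEGER, age INTEGER, dept_id INTEGER);
CREATE TABLE dept_avg_age (dept_id INTEGER, avg_age REAL);

-- Stand-ins for the script-mode variables A, B, and C.
CREATE TEMP VIEW a AS SELECT * FROM emp  WHERE age > 30;
CREATE TEMP VIEW b AS SELECT * FROM dept WHERE created > '2018-01-01';
CREATE TEMP VIEW c AS
  SELECT a.dept_id, AVG(a.age) AS avg_age
  FROM a JOIN b ON a.dept_id = b.id
  GROUP BY a.dept_id;

-- Several outputs written from the same intermediate results.
INSERT INTO senior_emp   SELECT * FROM a;
INSERT INTO dept_avg_age SELECT * FROM c;
""")

senior_count = conn.execute("SELECT COUNT(*) FROM senior_emp").fetchone()[0]
dept_avg = conn.execute("SELECT * FROM dept_avg_age").fetchall()
print(senior_count, dept_avg)
```

The point of the analogy is the shape of the script: each intermediate result is named once and reused, and several tables are written from one file instead of from separately submitted jobs.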

The script mode has the following three main advantages:

Problem scenarios: Suppose a user creates a view for some teams. The view reads a data table and runs a newly written pattern recognition algorithm to provide advertisement recommendations. Other teams find the algorithm useful and want to use it too, but their underlying data tables are different and some pattern recognition parameters also differ, so the user has to create a new view for each of them. If a bug is later discovered in the original view, it has to be fixed in every copy one by one, which makes maintenance considerably more complex.

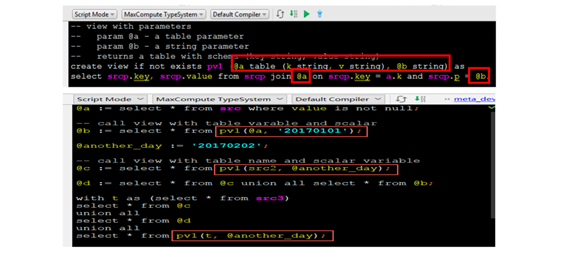

Parameterized view solution: To solve this problem, MaxCompute 2.0 provides the Parameterized View feature. The syntax is similar to that of creating a common view, except that a parameterized view declares parameters. In this example it takes two: the table parameter @a (a table with two columns, k and v) and the String parameter @b. The command to create the parameterized view is shown in the following figure; the view body is written in script mode. A view created in this way can be reused by any team, because the logic stays the same and only the arguments passed in differ.
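The idea of one shared definition with a table argument and a string argument can be approximated with an ordinary function. This is a rough analogy in Python over SQLite, not MaxCompute's parameterized view syntax: `total_for_key` is a hypothetical function, its parameters `a` and `b` mirror @a and @b, and the column types (k as text, v as integer) and table names are assumptions.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE team1_events (k TEXT, v INTEGER);
CREATE TABLE team2_events (k TEXT, v INTEGER);
INSERT INTO team1_events VALUES ('ad', 3), ('ad', 4), ('news', 9);
INSERT INTO team2_events VALUES ('ad', 10), ('video', 1);
""")


def total_for_key(conn: sqlite3.Connection, a: str, b: str) -> int:
    """One shared query; the table (a) and the string parameter (b) are arguments."""
    # Table names cannot be bound as SQL parameters, so validate before formatting.
    if not a.isidentifier():
        raise ValueError(f"invalid table name: {a!r}")
    sql = f"SELECT COALESCE(SUM(v), 0) FROM {a} WHERE k = ?"
    return conn.execute(sql, (b,)).fetchone()[0]


# Two teams reuse the same logic on their own tables.
team1_total = total_for_key(conn, "team1_events", "ad")
team2_total = total_for_key(conn, "team2_events", "ad")
print(team1_total, team2_total)
```

A bug in the shared logic is fixed once, in one place, which is the maintenance benefit the parameterized view is meant to deliver.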

Problem scenarios: Both Hive and MaxCompute let users write UDFs to meet requirements that built-in functions do not cover. For example, suppose you need to parse JSON strings. This is very easy in other languages; in Java, a single call to a JSON function may be enough. However, MaxCompute has no built-in function for it, and writing a UDF for such a simple task is disproportionately complicated.

UDT solution: For this scenario, MaxCompute 2.0 provides the UDT (User Defined Type) feature, which allows users to directly reference a class or object of a third-party language in SQL to read its data or call its methods. For the problem above, only the following SQL is needed. This method avoids creating UDFs frequently and is as convenient as making the call in Java.

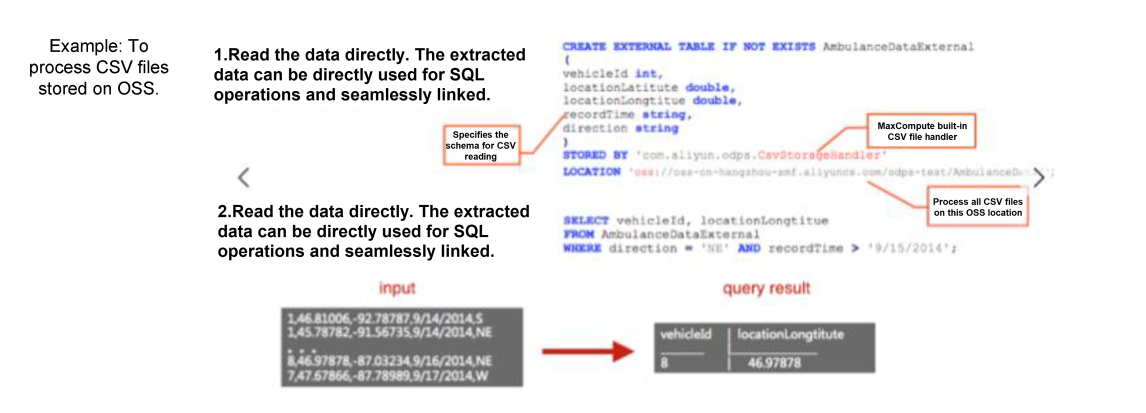

Problem scenarios: Some MaxCompute users store their data on other storage systems (such as HDFS), in formats such as CSV. Can this data be processed with SQL?

External Table solution: The External Table feature in MaxCompute enables users to use SQL to access other data sources and data in other formats. The following figure provides an example of processing CSV files stored on OSS. First, you define an external table. The definition specifies the schema used to read the CSV data, MaxCompute's built-in CSV handler, and the OSS location of the data to be processed. After the external table is defined, the data can be read and processed directly through SQL, just like an internal table.
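The three ingredients of an external table definition (a schema, a format handler, and a location) can be sketched with the Python standard library. This is a conceptual illustration, not MaxCompute's implementation or DDL: the schema, the `read_external_csv` function, and the sample data are hypothetical, and an in-memory string stands in for a CSV file on OSS.

```python
import csv
import io
from typing import Callable, Iterable, Iterator, List, Tuple

# Hypothetical schema: column name plus the converter for that column.
Schema = List[Tuple[str, Callable[[str], object]]]
SCHEMA: Schema = [("vehicle_id", int), ("direction", str), ("speed", float)]


def read_external_csv(source: Iterable[str], schema: Schema) -> Iterator[tuple]:
    """Play the role of the CSV handler: parse each line of a headerless CSV
    and convert every field according to the declared schema."""
    for record in csv.reader(source):
        yield tuple(cast(value) for (_, cast), value in zip(schema, record))


# Stands in for the file at the external location.
csv_file = io.StringIO("1,N,62.5\n2,S,48.0\n3,N,71.5\n")

rows = list(read_external_csv(csv_file, SCHEMA))

# Rough equivalent of: SELECT AVG(speed) FROM <external table> WHERE direction = 'N'
north_speeds = [speed for _, direction, speed in rows if direction == "N"]
north_avg = sum(north_speeds) / len(north_speeds)
print(rows[0], north_avg)
```

The data stays in its original file and format; the schema and the handler are what let a query treat it as a table.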

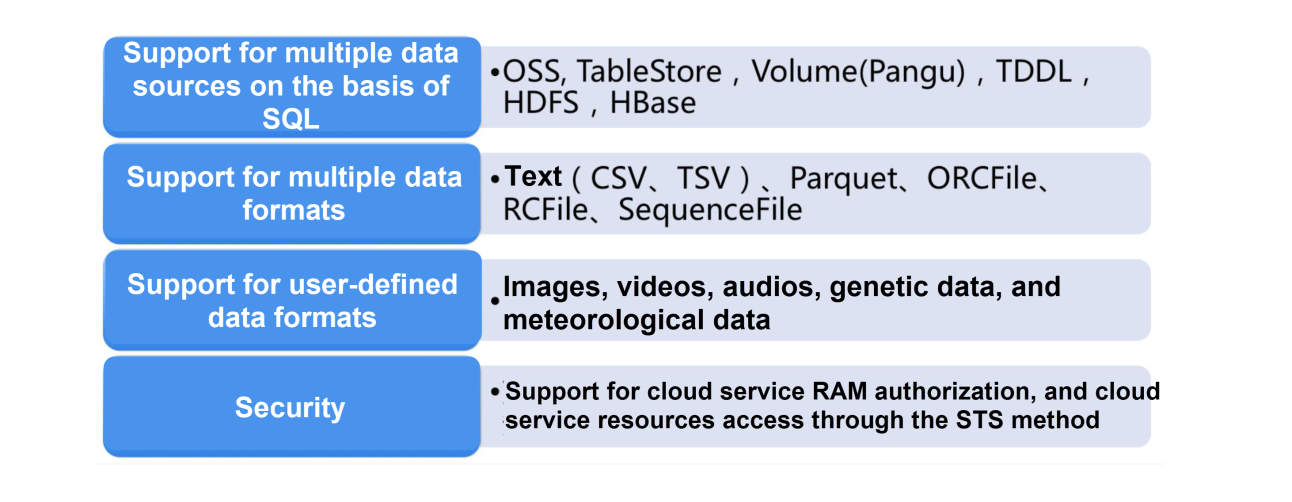

The External Table feature supports multiple data sources and formats, and supports user-defined data formats (as shown in the following figure).

Before introducing the follow-up plan for MaxCompute, let's first summarize its advantages over Hive:

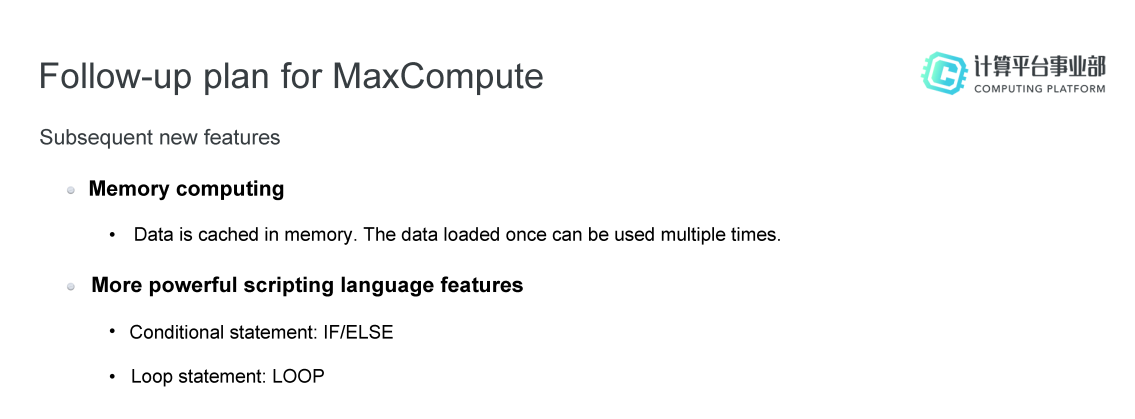

In subsequent versions, MaxCompute will mainly add two new features:

Since I joined the Alibaba MaxCompute team in 2015, I have witnessed the development of MaxCompute from 1.0 to 2.0. Personally speaking, when I came to Alibaba straight after finishing school, I felt like I had arrived at another "campus". The team builds on the open-source project Apache Calcite and is also a heavy user of it. Along the way, the team has contributed some good ideas to the community, and the community has actively accepted them. Becoming a committer therefore followed naturally.

Two years ago, I first came into contact with the open-source community. Speaking from personal experience, I have summarized two main points:

1) Growth is gradual. At the beginning, the requirements I implemented were small. But through active communication with the community and continued learning, the requirements I took on became larger and larger, and my contribution gradually increased.

2) I can learn a lot from the open-source community. The community pays close attention to code quality and to communication between its members, and timely communication avoids many unnecessary conflicts and much rework in advance.
