By Chu'an at Alibaba.

From Deep Blue's victory in chess to AlphaGo's defeat of the reigning Go champion, whenever machine intelligence attains superiority in a field originally dominated by humans, everyone sits up and takes notice. So, if machine intelligence can achieve success in chess and Go, can it understand cybersecurity and beat hackers at their own game? Today, machine intelligence has given rise to many exaggerated expectations, so perhaps we should take a step back and look at the capabilities of machine intelligence, the difficulties involved in cybersecurity, and the challenges of combining the two.

First off, what does security mean? In the past, security simply involved confrontations between people. Now, security also involves attackers and their offensive machines as well as defenders and their defensive machines. In the future, security will come to involve machines alone. From this perspective, the essence of security is the knowledge confrontation between intelligent entities. In the future, it will not be important that we "understand security", as it will be beyond our capabilities. Rather, we must be able to build a machine intelligence that extends human capabilities and can understand security better than we can ourselves.

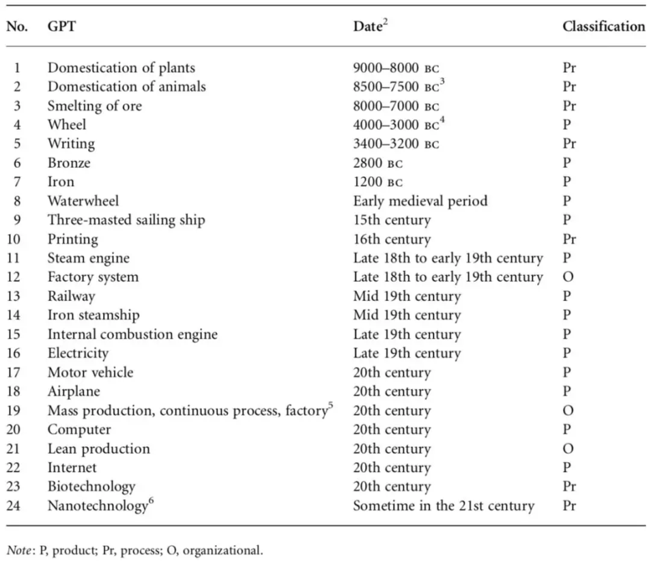

Technologies are an extension of human capabilities, and the invention of technology is the greatest ability of humans. Even before the emergence of civilization, humans had already invented various technologies to give themselves a competitive edge over other animals in the fight for survival. In human history, the core driver of increased productivity and economic development has been the invention of General Purpose Technologies (GPTs). By reshaping existing economic and social structures, GPTs have profoundly influenced human development.

GPTs are individual and identifiable basic common technologies. At present, we can only find about 20 GPTs throughout human history. Such technologies are defined by the following characteristics:

The agricultural revolution in the Neolithic age saw the domestication of plants and animals and, somewhat later, the invention of writing. During the first industrial revolution in the 18th and 19th centuries, the steam engine, factory system, and rail transport emerged. Then, the second industrial revolution was powered by the internal combustion engine, electricity, automobiles, and aircraft. Most recently, the 20th century welcomed the information revolution, involving computers, the Internet, and biotechnology. The interval between the inventions of GPTs is becoming shorter, and the density of these technologies is increasing. More and more such technologies are emerging, with a wider impact on society and a faster effect on productivity.

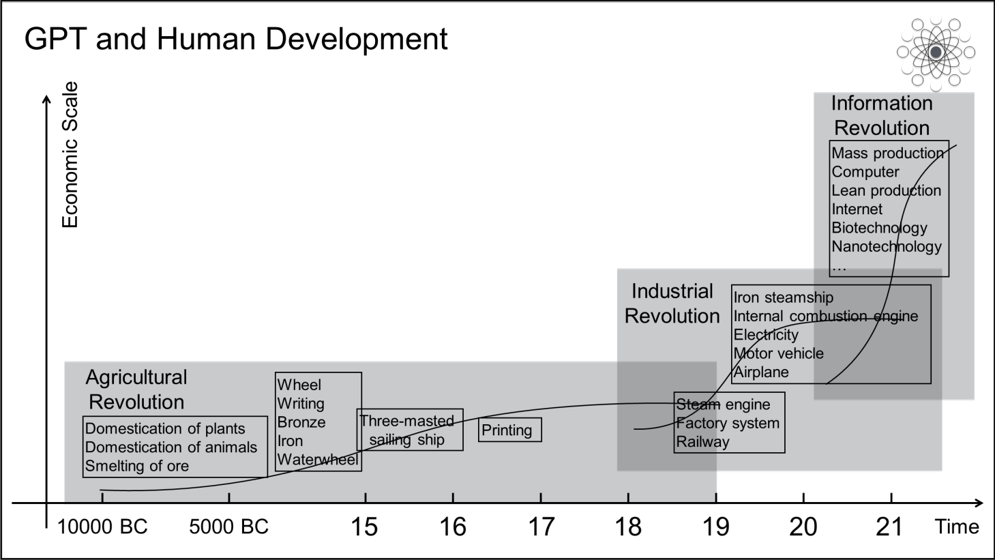

The synergy arising from the convergence among various GPTs emerging in the same era has also served as a catalyst in promoting productivity, economic development, and innovations. In the age of steam, the steam engine provided power and energy. The rail network connected various physical spaces, allowing the transport of steel and other materials and their use in various machine systems. In the age of electricity, central power stations provided electrical power. The power grid connected various physical spaces, allowing the transmission of current and its use in various electrical systems.

In the age of information, personal computers and servers provided computing power, and the Internet transmitted data and connected information systems in various digital spaces. In the age of intelligence, general-purpose computing, such as cloud computing and edge computing, provides computing power. The boundary between physical space and digital space has increasingly blurred, forming a fused space. In this fused space, the Internet of Everything connects various intelligent systems. From this, we can see that GPTs have worked together in similar ways in different ages. In the age of steam, kinetic energy was given to machines. In the age of electricity, electrical energy was given to machines. In the age of information, data was given to machines. In the age of intelligence, knowledge is given to machines.

Among all GPTs, machine intelligence is the most distinctive. This is the first technology that humans have invented to enable machines to acquire knowledge independently. It also marks the first time that humans have the ability to build a non-carbon based intelligent entity.

On a cold afternoon in February 1882, the young Nikola Tesla perfected the idea of an AC generator that he had been working on for five years. Excitedly, he said, "from now on, human beings will no longer be enslaved by manual labor. My machines will free them, and this will happen all over the world."

In 1936, in order to prove the existence of undecidable propositions in mathematics, the 24-year-old Alan Turing put forward the idea of a "Turing Machine". In 1948, his paper "Intelligent Machinery" set out most of the main ideas of connectionism. Then, "Computing Machinery and Intelligence" was published in 1950, proposing the famous "Turing Test". In the same year, Marvin Minsky and his classmate Dean Edmonds built the world's first neural network computer.

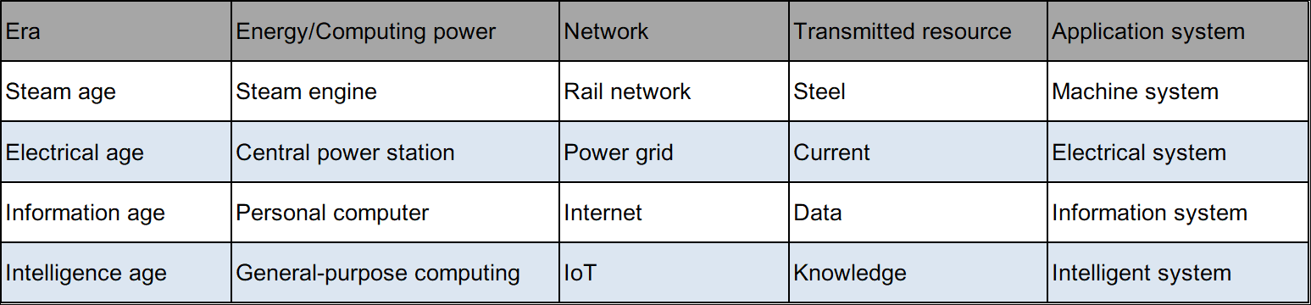

In 1955, John von Neumann was invited to give a lecture series at Yale University. The unfinished lectures were later published as the book "The Computer and the Brain". In 1956, John McCarthy first put forward the concept of "artificial intelligence" at the Dartmouth conference. This marks the formal beginning of the history of machine intelligence. Over the following years, several influential schools were formed in succession: Symbolism, Connectionism, and Actionism (also known as behaviorism).

Like the field of machine intelligence as a whole, the three major schools have gone through several peaks and valleys. Starting in the 1950s, symbolism, represented by expert systems and classic machine learning, long dominated the field. In contrast, the development of connectionism went through many twists and turns, from the proposal of the perceptron to the idea of back propagation in the 1980s and to the success of deep learning with the help of greater computing power and data volumes. It was not until Geoffrey Hinton, Yann LeCun, and Yoshua Bengio won the 2018 Turing Award that the three giants of this school became celebrities in the field. Meanwhile, actionism, represented by reinforcement learning, stepped into the spotlight after the emergence of AlphaGo in 2016 and AlphaZero in 2017 and was hailed as the only way to achieve general-purpose machine intelligence.

Human intelligence evolved over millions of years, but machine intelligence has only been around for just over 60 years. Although general-purpose machine intelligence is still far away, machine intelligence is already gradually surpassing human intelligence in many fields. Over the past 60 years, data computing, storage, and transmission capabilities have increased at least 10 million times over. At the same time, the growth rate of data resources has outstripped Moore's law, and the total global data volume is expected to reach 40 ZB in 2020. Machine intelligence has reached a critical point for GPT development. With the collaboration of other GPTs, the approaching technological revolution will be more intense than ever before.

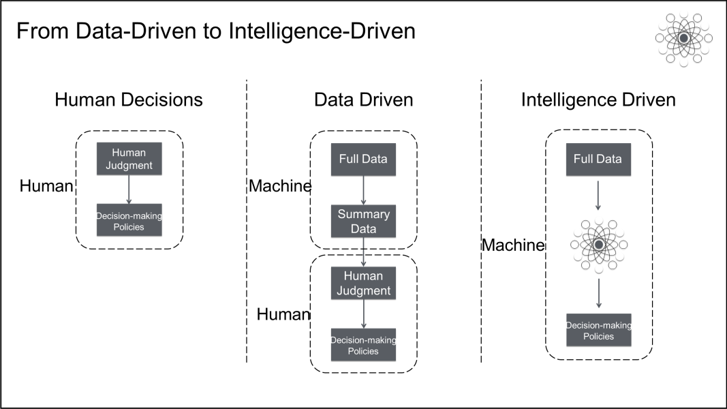

Surely, you have heard of "business intelligence and smart business", "security intelligence and smart security", and many other such terms. The main differences between the first and second terms are that the former is single-instance intelligence and the latter is global intelligence, and the former is data-driven and the latter is intelligence-driven. Data-driven and intelligence-driven operations look similar but have fundamental differences. The most essential difference is the difference between decision-making entities. Data-driven decision-making ultimately relies on humans to make decisions. Data only provides information that helps you make better decisions. Intelligence-driven decision-making allows machines to replace humans in online decision-making.

Human brains are subject to cognitive biases as the result of evolution. Limited by the information transmission bandwidth and information processing speed of the human brain, humans have gradually formed a simple heuristic inference decision-making system since our time as hunter-gatherers. This avoids the high cost of processing large amounts of information. This system allowed humans to make quick and almost unconscious decisions in various dangerous environments, and we have carried this system in our brains ever since. However, our fast and almost unconscious decisions are not always the best and are sometimes not even accurate.

This heuristic method has been passed on from generation to generation, giving us minds pre-installed with cognitive biases. These biases affect human decision-making and cause us to deviate from rationality and objectivity. With the advent of the data-driven era, a wealth of online data became available to provide an auxiliary basis for better decision-making. We use general-purpose computing and massive data processing technologies to reduce the data volume to a size that can be digested by the human brain and assist in decision-making in various application scenarios.

Data-driven decisions have a significant advantage over the intuition-driven or experience-driven decisions of the past, but humans still play the role of the "CPU" in decision-making. Thus, the human brain still imposes limitations on the process. Due to the throughput limit of the human brain, it cannot process all raw data. The full data volume must be converted into summary data or overview data so that the human brain can extract knowledge from it. This process cannot avoid the omission of information, thus losing some implicit relationships, data patterns, and insights contained in the full data.

Intelligence-driven decisions allow machine intelligence to directly make online decisions. Data-driven approaches cannot compare to intelligence-driven decisions in efficiency, scale, objectivity, and evolution speed. Intelligence-driven approaches extract the full knowledge from all data resources and then use this full knowledge for global decision-making. Data-driven decisions are essentially the combination of summary data and human intelligence, while intelligence-driven decisions are full data plus machine intelligence.

However, in current business scenarios, many decisions are not even data-driven, let alone intelligence-driven. The implementation of "perception" by machine intelligence is only the first step, and the implementation of "decision-making" is a more critical step. At present, machine intelligence is at a stage best described by Churchill, when he said, "Now this is not the end. It is not even the beginning of the end. But it is, perhaps, the end of the beginning." So, now, we need to ask: What is a real machine intelligence system?

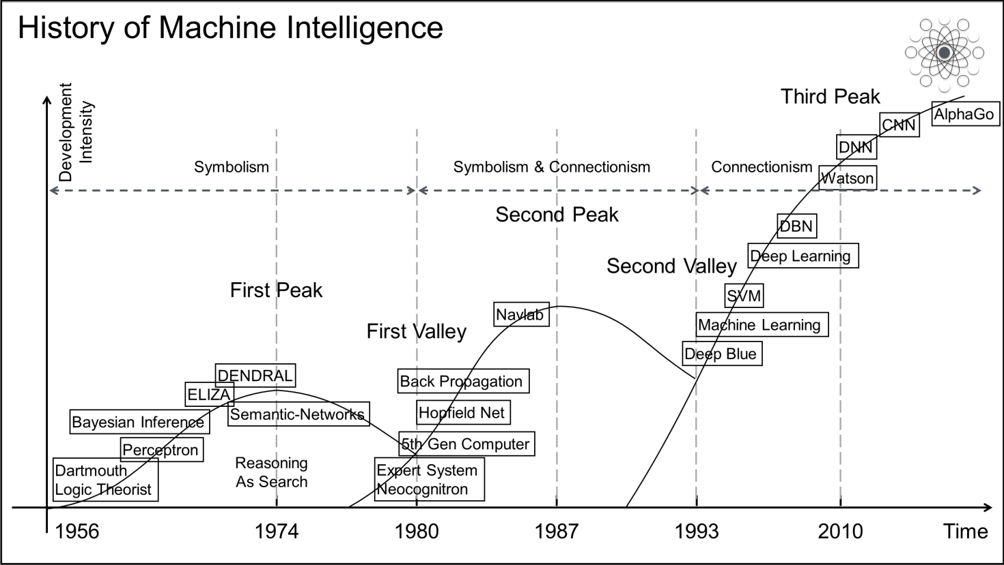

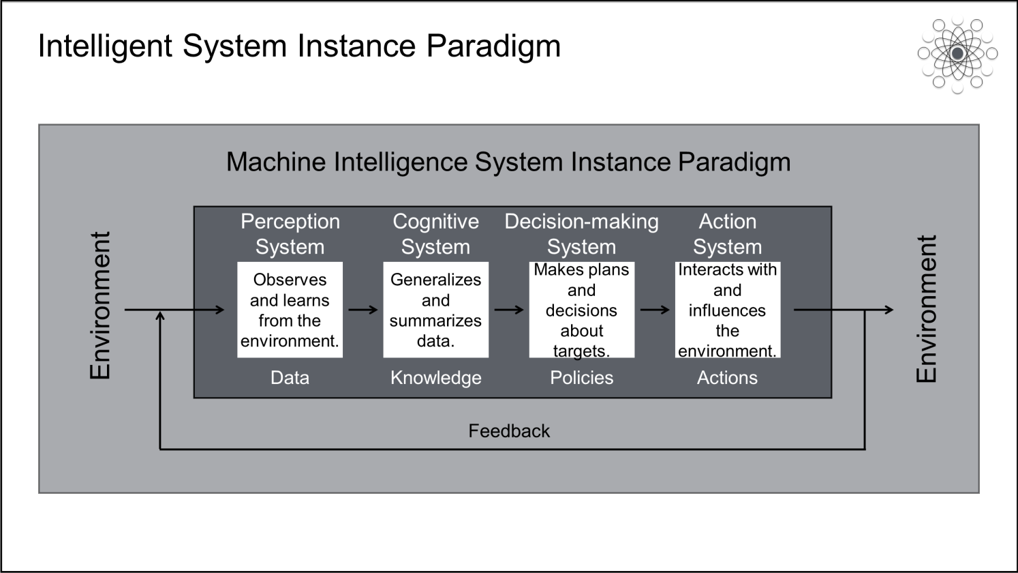

True intelligent systems must contain the following components: a perception system, cognitive system, decision-making system, and action system. At the same time, an intelligent system cannot be separated from its interaction with its environment. In the past, we always focused too much on the internal operations of systems and ignored their interactions with the environment.

In an intelligent system, the perception system is used to observe and learn from the environment and generate data. All data is generated from observation and cumulative insights into the environment. The motivation behind observation and learning is our desire to measure, record, and analyze the world. Information is always stored in an environment, which can be a digital space or a physical space. In different scenarios, we use hardware, software, and algorithms to convert this information into data. Hardware includes sensors and cameras, software includes loggers and data collectors, and algorithms include intelligent vision algorithms and intelligent speech algorithms. One day, we will be able to convert all physical spaces into data and map them onto data spaces.

Cognitive systems are used to generalize and summarize data to extract knowledge. For humans to understand knowledge, it must be expressed in a natural language. Machines, in contrast, must be trained on the knowledge using datasets that represent the problem space. Then, the trained model can be used to make inferences in new data spaces. As long as knowledge can be used to solve a specific target task, it makes no difference whether the knowledge is expressed as vectors, graphs, or natural language. The expressions of feature spaces are themselves a kind of knowledge.

Decision-making systems are used to make plans and decisions for target tasks and generate policies to accomplish the target tasks. Action systems perform specific actions based on policies, interact with the environment, and influence the environment. Actions produce feedback from the environment, and this feedback helps perception systems perceive more data and continuously obtain more knowledge. With more knowledge, cognitive systems can make better decisions for target tasks. This process forms a closed loop of continuous iteration and evolution.
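The closed loop described above can be sketched in a few lines of Python. This is only a minimal illustration under stated assumptions, not an implementation from this article: the `Environment` and `Agent` classes, the numeric "level" being regulated, and the three-sample average used as "knowledge" are all hypothetical stand-ins.

```python
from dataclasses import dataclass, field
from statistics import mean


@dataclass
class Environment:
    """Hypothetical environment: exposes a reading and reacts to actions."""
    level: float = 10.0

    def observe(self) -> float:
        return self.level

    def apply(self, action: str) -> None:
        # Actions change the environment, which changes the next observation.
        if action == "reduce":
            self.level -= 2.0
        else:
            self.level += 1.0


@dataclass
class Agent:
    """Hypothetical intelligent instance with the four subsystems."""
    target: float = 5.0
    history: list = field(default_factory=list)

    def perceive(self, env: Environment) -> float:
        # Perception: observe the environment and turn it into data.
        reading = env.observe()
        self.history.append(reading)
        return reading

    def cognize(self) -> float:
        # Cognition: summarize recent data into knowledge (a recent average).
        return mean(self.history[-3:])

    def decide(self, knowledge: float) -> str:
        # Decision-making: turn knowledge into a policy for the target task.
        return "reduce" if knowledge > self.target else "increase"

    def act(self, env: Environment, action: str) -> None:
        # Action: execute the policy and influence the environment.
        env.apply(action)


def run_loop(steps: int = 10):
    """Run perceive -> cognize -> decide -> act repeatedly."""
    env, agent = Environment(), Agent()
    actions = []
    for _ in range(steps):
        agent.perceive(env)
        action = agent.decide(agent.cognize())
        agent.act(env, action)
        actions.append(action)
    return env.level, actions
```

Each action changes what the next `perceive` call returns, which is the feedback path that closes the loop.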

From this perspective, machine intelligence is essentially an autonomous machine that monitors the environment to accumulate data, aggregates data to extract knowledge, plans targets and makes online decisions, and takes actions to influence the environment. The biggest difference between an autonomous machine and an automated machine is that an autonomous machine can autonomously obtain the knowledge that helps it solve the target task.

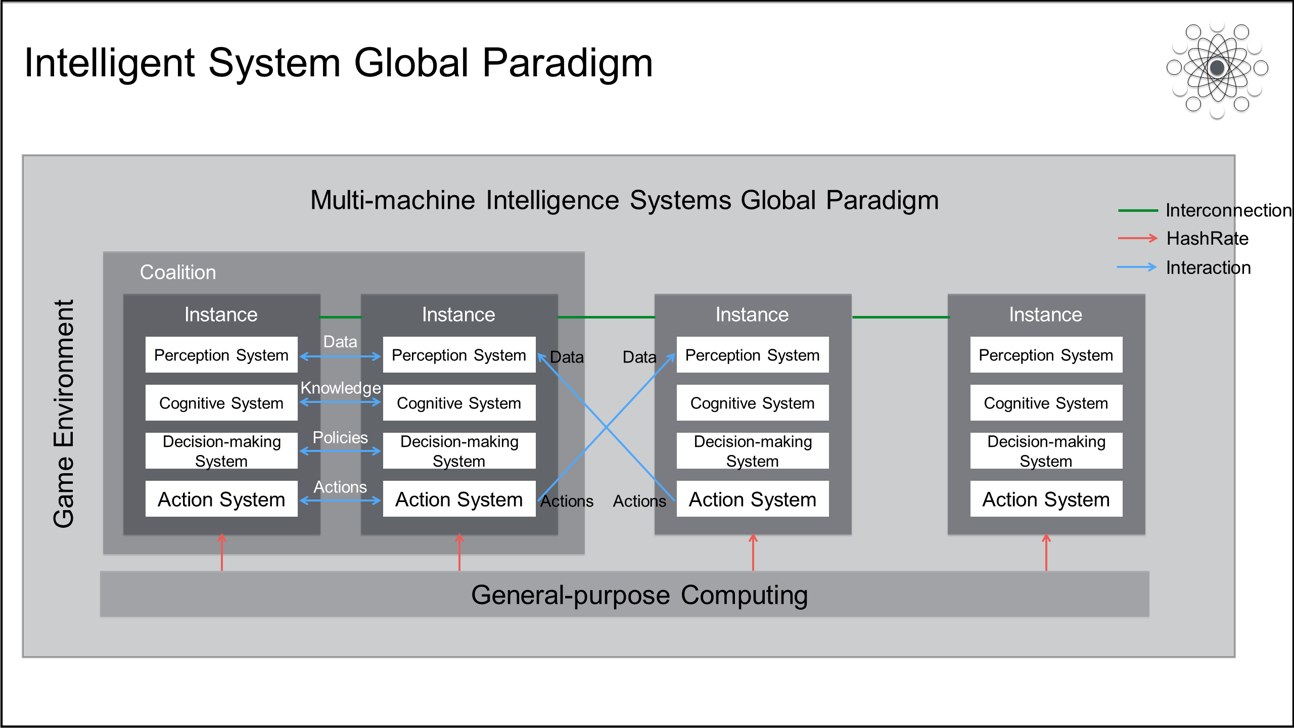

Today, most smart systems are isolated individual intelligent instances that solve single isolated problems. The essence of cloud computing is online computing, while the essence of big data is online data. Machine intelligence eventually needs to achieve online intelligence so that intelligent instances can autonomously interact with each other online.

A single intelligent instance is an autonomous system consisting of perception, cognitive, decision-making, and action systems. It has its own world representation and can independently accomplish its target tasks. In the same dynamic and complex game environment, instances become online instances through interconnection and can interact with each other. As such, they can cooperate, compete, compete and cooperate at the same time, or neither cooperate nor compete. Changes to the policies of one instance not only affect the environment of this instance, but also affect the policy changes of other instances.

In cooperation, multiple smart instances can choose to share data, knowledge, policies, or actions in order to coordinate and collaborate to complete more complex target tasks and jointly form higher-level smart instances. When the coverage and density of smart instances in a unit space are high enough, single-instance intelligence begins to evolve into multi-instance intelligence.

Security is a special type of technology. Strictly speaking, security cannot even be called a technology. Security has been a component in various human activities long before we invented any technology. So far, no technology is exclusive to the security field or has emerged from the security field. Rather, security has always accompanied and complemented other technologies.

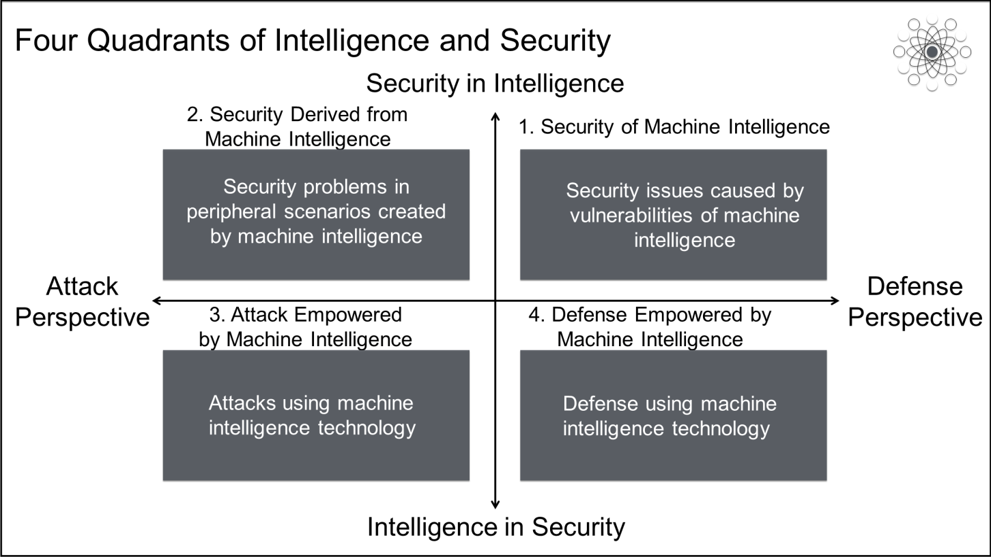

Any GPT can be combined with security in the four ways shown in the preceding figure. Machine intelligence technology is no exception. The vertical direction of the preceding diagram is "security in intelligence" and "intelligence in security," while the horizontal direction is "attack perspective" and "defense perspective." Security in intelligence refers to the new security problems introduced by machine intelligence itself, such as the security problems caused by the weakness of machine intelligence and the security problems in the peripheral fields created by machine intelligence. Intelligence in security refers to the application of machine intelligence in security scenarios, where attackers use machine intelligence to empower attacks and defenders use machine intelligence to empower defense.

Among these four quadrants, the time and maturity of the intersection of new technologies and security are different. Attackers have stronger motivation and interests than defenders. Therefore, the attack-related quadrant is often quicker to explore and apply new technologies. Defenders generally lag behind attackers and often believe in the illusion of security created by old technology and human experience. As a result, the fourth quadrant is the area that develops most slowly. This is also due to the attributes and difficulties of the defense perspective.

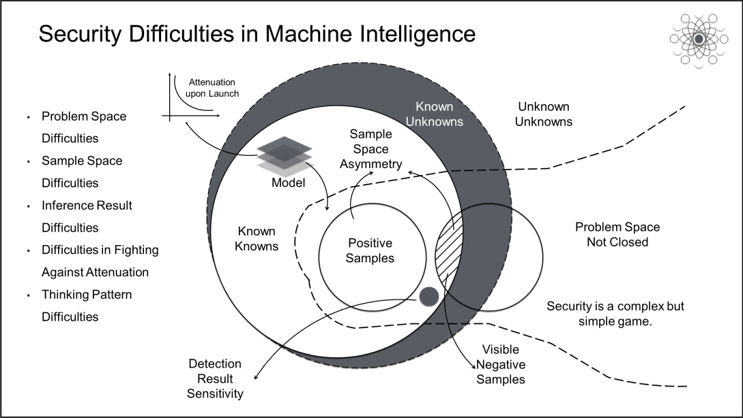

Go is a simple but complex game, while security is a complex but simple game. In 1994, cognitive scientist Steven Pinker wrote in his book "The Language Instinct" that, for machine intelligence, "the hard problems are easy and the easy problems are hard." This observation is commonly known as Moravec's paradox. Simple but complex problems are problems with a closed problem space but high complexity. Complex but simple problems are problems with an infinitely open problem space but low inherent complexity. Today, machine intelligence technology generally exceeds human capabilities in simple but complex problems. However, for complex but simple problems, machine intelligence often fails because of the curse of dimensionality that arises from open-ended generalization boundaries.

Security is a typical complex but simple problem, so Moravec's paradox is very obvious in the security field. High uncertainty is the most prominent feature of security, and the biggest security difficulty is how to deal with unknown unknowns. Most of the failures of machine intelligence in the security field are due to our rush to use machine intelligence to solve problems that are not clearly defined. Today, in the security field, there is no need to break through the limits of intelligent technologies. Instead, we need to clearly define problems. This means finding ways to close the problem space.

In the security field, problem spaces are usually unbounded, while the sample spaces of positive and negative samples corresponding to the problem space are seriously asymmetric. The serious lack of negative data, such as attack data and risk data, caused by unknown unknowns leads to feature space asymmetry. As a result, the feature space cannot truly characterize the problem space. A model is an assumption about the world that is formed in an existing data space and then used for inference in a new data space. Today, machine intelligence technology can effectively model complex nonlinear relationships between input and output, but it is still relatively weak in bridging the huge gaps between sample spaces and problem spaces.

In the 1970s, the Bell-LaPadula model established that a system is only secure when it starts in a secure state and never enters an insecure state. The essence of security is confrontation. As a result, the performance of most machine intelligence models in the security field begins to degrade as soon as the model goes online. A model that performs well on training sets, by its very release, forces its adversaries to improve their methods. Therefore, as soon as it is released, even the best model will become increasingly ineffective. Model attenuation is inevitable, just like the increase of entropy in a closed system.

In addition, the accuracy and interpretability of detection results are extremely important in security scenarios. Compared with rule-based and policy-based detection technologies commonly used in traditional security systems, the advantage of machine intelligence lies in its powerful representation capabilities. However, the poor interpretability and ambiguity of its inferences mean that they cannot be directly applied in decision-making scenarios. At present, most intelligent security systems have only achieved perception, and the vast majority serve only to assist in decision-making.

However, these are not the biggest difficulties. The biggest challenge to machine intelligence in the security field concerns thinking patterns. The thinking pattern in the security field is "stick to the routine but be ready for surprises," while the thinking pattern in machine intelligence is "Model the World." These two thinking patterns are not only very different, but also extremely difficult to reconcile. On the one hand, few people can master both ways of thinking at the same time. On the other hand, it is extremely difficult for people who think in one way to work with people who think in the other. The essential problem is the inability to convert security issues into algorithm issues or vice versa, together with the lack of mutually intelligible definitions.

Together, the problem space, sample space, inference result, fight against attenuation, and thinking pattern problems are the reasons for the poor performance of most current intelligent security systems. Or, we could even say that, in the present security field, there are no true intelligent security systems.

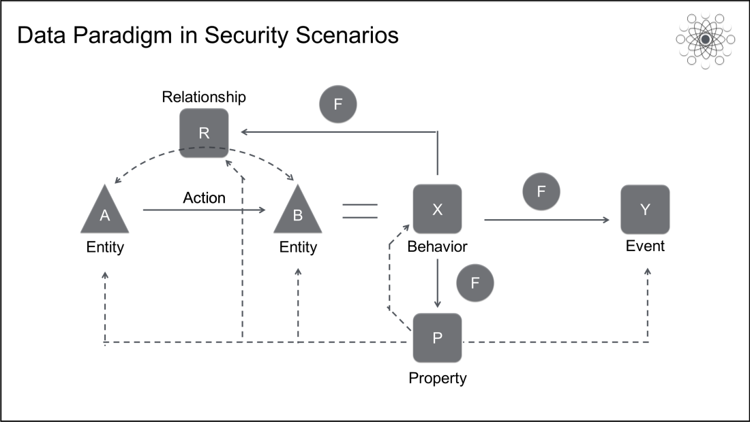

First, let's take a look at the common data paradigm in general security scenarios. Plato once wrote that the world we perceive is a projection on the wall of a cave. By this, he meant that the phenomenal world is a reflection of the rational world, which is the true world. The analogy of the cave points to the existence of an external and objective system of knowledge that is not dependent on the cognition or even the existence of humans. Humans gain knowledge by constantly observing the phenomena of the real world and imperfectly trying to understand this objective system of knowledge. Aristotle went further by establishing ontology, the science of existence, as a basic branch of metaphysics. In the 17th century, the philosopher Rudolph Goclenius first used the term "ontology." By the 1960s, the concept of ontology began to be introduced in the field of machine intelligence, driving the further evolution of semantic networks and knowledge graphs.

The confrontation involved in security is essentially a confrontation of knowledge. The party who obtains more knowledge will enjoy an asymmetric advantage. Threat analysis, intelligence-based judgment, attack detection, and event tracking are all essentially knowledge-gathering processes. This is why Palantir's Gotham, IBM's i2, UEBA products, and many other threat intelligence products all draw on the ideas of ontology.

The common data paradigm in security scenarios is likewise closely related to ontology. Using five main metadata types (entities, properties, behaviors, events, and relationships), we can build data architectures for all security scenarios, including basic, business, data, public, and urban security. Note: In the public security field, we also pay attention to the trajectory data type. Because trajectory is a special type of behavior data, we include it in behavior here.

Most of the raw data accumulated in the security field is behavior data, whether in the form of network traffic logs, host command logs, business logs, camera data streams, or sensing device data streams. Entities, properties, relationships, and events are extracted from behavior data by running different functions on different behavior data.
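As a rough illustration of this paradigm, the sketch below models the five metadata types as Python dataclasses and derives entities, properties, relationships, and events from raw behavior records. All class names, field names, the `first_seen` property, and the "three failed logins" rule are hypothetical choices made for the example, not definitions taken from this article.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Entity:
    entity_id: str


@dataclass(frozen=True)
class Property:
    entity_id: str
    name: str
    value: float


@dataclass(frozen=True)
class Relationship:
    source_id: str
    relation: str
    target_id: str


@dataclass(frozen=True)
class Behavior:
    actor_id: str      # who acted
    action: str        # what they did, e.g. "login"
    target_id: str     # what they acted on
    timestamp: float   # when, in seconds


@dataclass(frozen=True)
class Event:
    name: str
    entity_id: str
    count: int


def extract_entities(behaviors):
    """Every actor and target seen in the behavior data is an entity."""
    ids = {b.actor_id for b in behaviors} | {b.target_id for b in behaviors}
    return {Entity(i) for i in ids}


def extract_properties(behaviors):
    """Derive one illustrative property per actor: when it was first seen."""
    first_seen = {}
    for b in behaviors:
        first_seen[b.actor_id] = min(b.timestamp, first_seen.get(b.actor_id, b.timestamp))
    return [Property(actor, "first_seen", ts) for actor, ts in sorted(first_seen.items())]


def extract_relationships(behaviors):
    """Each distinct (actor, action, target) triple is a relationship."""
    return {Relationship(b.actor_id, b.action, b.target_id) for b in behaviors}


def extract_events(behaviors, action="login_failed", threshold=3):
    """Repeated occurrences of one action by one actor become an event."""
    counts = {}
    for b in behaviors:
        if b.action == action:
            counts[b.actor_id] = counts.get(b.actor_id, 0) + 1
    return [Event(f"repeated_{action}", actor, n)
            for actor, n in sorted(counts.items()) if n >= threshold]


# Hypothetical host login log, already parsed into behavior records.
logs = [
    Behavior("user-a", "login_failed", "host-1", 1.0),
    Behavior("user-a", "login_failed", "host-1", 2.0),
    Behavior("user-a", "login_failed", "host-1", 3.0),
    Behavior("user-b", "login", "host-1", 4.0),
]
```

Here the same behavior log feeds four different extraction functions, one per derived metadata type.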

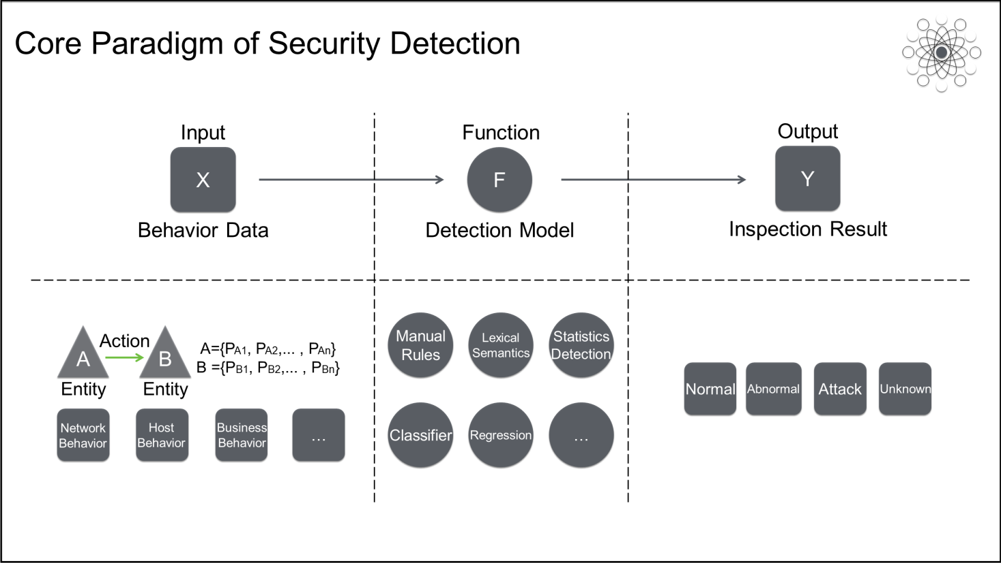

When functions generate events, this involves security detection problems, such as attack, threat, risk, and anomaly detection. The atomic paradigm of most security detection problems can be abstracted as Y = F(X), where X is the behavior data of an entity, Y is the detection result, and F is the detection model. F can be a rule-based, policy-based, syntax-based, statistical detection, machine learning, or deep neural network model. Y can be normal, abnormal, attack, or unknown.
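The Y = F(X) paradigm can be made concrete with a small sketch in which every detection model shares one call signature. The example assumes, purely for illustration, that X is a sequence of per-minute request counts for one entity. The `Verdict` enum, the `rule_based` and `statistical` factories, and the thresholds are hypothetical.

```python
from enum import Enum
from statistics import mean, pstdev
from typing import Callable, Sequence


class Verdict(Enum):
    """Possible values of Y."""
    NORMAL = "normal"
    ABNORMAL = "abnormal"
    ATTACK = "attack"
    UNKNOWN = "unknown"


# F: maps the behavior data X of one entity to a detection result Y.
Detector = Callable[[Sequence[float]], Verdict]


def rule_based(limit: float) -> Detector:
    """A rule-based F: any value above a fixed limit is treated as an attack."""
    def f(x: Sequence[float]) -> Verdict:
        if not x:
            return Verdict.UNKNOWN
        return Verdict.ATTACK if max(x) > limit else Verdict.NORMAL
    return f


def statistical(baseline: Sequence[float], z_threshold: float = 3.0) -> Detector:
    """A statistical F: flag the latest value if it is far from the baseline mean."""
    mu, sigma = mean(baseline), pstdev(baseline)

    def f(x: Sequence[float]) -> Verdict:
        if not x or sigma == 0:
            return Verdict.UNKNOWN
        z = abs(x[-1] - mu) / sigma
        return Verdict.ABNORMAL if z > z_threshold else Verdict.NORMAL
    return f
```

Because both factories return the same `Detector` type, a rule, a statistical test, or a trained model can be swapped in without changing the calling code.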

More complex detection scenarios can also be assembled by using basic F models and various operators. Each type of F model has its own advantages and disadvantages and is suited to different scenarios. There is no absolutely superior detection technology. In fact, what an algorithm should pay most attention to in security detection is not the detection model itself. Instead, it should be able to automatically generate optimal detection models based on various scenarios and automatically and continuously iterate the detection model.
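One way to picture "basic F models plus operators" is as higher-order functions that take detectors and return a new detector. The sketch below is an assumption-laden illustration: the operator names `worst_of` and `escalate`, the severity ordering, and the two toy detectors are all hypothetical.

```python
from typing import Callable, Sequence

# A detector maps behavior data to "unknown", "normal", "abnormal", or "attack".
Detector = Callable[[Sequence[float]], str]

# Assumed ordering used to compare verdicts; higher means more severe.
SEVERITY = {"unknown": 0, "normal": 1, "abnormal": 2, "attack": 3}


def worst_of(*detectors: Detector) -> Detector:
    """Parallel operator: run every detector and keep the most severe verdict."""
    def f(x: Sequence[float]) -> str:
        return max((d(x) for d in detectors), key=SEVERITY.__getitem__)
    return f


def escalate(first: Detector, second: Detector) -> Detector:
    """Cascade operator: run `second` only on data that `first` did not clear."""
    def f(x: Sequence[float]) -> str:
        verdict = first(x)
        if verdict == "normal":
            return verdict
        return max(verdict, second(x), key=SEVERITY.__getitem__)
    return f


def spike(x: Sequence[float]) -> str:
    """Toy basic model: a single very large value is abnormal."""
    return "abnormal" if max(x) > 100 else "normal"


def sustained(x: Sequence[float]) -> str:
    """Toy basic model: three or more large values look like an attack."""
    return "attack" if sum(v > 100 for v in x) >= 3 else "normal"


# A composite detector assembled from two basic models.
spike_then_sustained = escalate(spike, sustained)
```

With this composition, a single spike stays "abnormal", while a sustained run of spikes is escalated to "attack".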

A true intelligent security system must also have a perception, cognitive, decision-making, and action system. It must also form a closed feedback loop with the environment. A perception system at least includes an anomaly, attack, false negative, and false positive perceptron. The anomaly perceptron must retain the ability to detect unknown unknowns while also solving sample space difficulties by defining a normal state in order to detect anomalies. The attack perceptron detects attacks based on anomaly data. It is used to address inference result difficulties and significantly reduce the range of false positives and negatives in inference results. The false positive and false negative perceptrons are designed to solve the difficulties in fighting against attenuation. From this, we can see that the most common algorithm-based attack detection systems in the industry represent only a small improvement in the perception systems of intelligent systems.

The cognitive system accumulates security-related knowledge, including at least normal, attack, false negative, and false positive knowledge. Security knowledge can be based on expert rules, vectors, models, graphs, natural languages, and other information. However, no matter what form the information takes, it must be refined into personalized knowledge. This process accumulates a set of knowledge about perceived anomalies, attacks, false negatives, and false positives for the protected objects, such as users, systems, assets, domain names, or data. The decision-making system includes at least the interception policies for target tasks and the launch and deprecation policies for various models. It can independently determine which behaviors should be intercepted and which models have attenuated and should be retrained or replaced.

The action system outputs various actions that affect the environment, such as allow, block, retrain, and release. An intelligent security instance contains thousands of agents, and each agent acts only on its corresponding protected object. Finally, the solution to problem space difficulties is to converge open problem spaces into small closed risk scenarios. This depends on the in-depth detection formed by the cascade of the four perceptrons as well as the personalized agents for protected objects.
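The following sketch shows how one per-object agent might chain the four perceptrons and choose among the actions named above. It is a deliberately simplified illustration under several assumptions: behavior is reduced to a single request rate, "normal knowledge" is a list of past rates, "attack knowledge" is a fixed limit, and the class name, thresholds, and feedback mechanism are hypothetical.

```python
from collections import deque
from dataclasses import dataclass, field
from statistics import mean, pstdev


@dataclass
class ProtectedObjectAgent:
    """Hypothetical agent that acts only on its own protected object."""
    object_id: str
    baseline: list                 # normal knowledge: past request rates
    attack_limit: float            # attack knowledge: a hard upper bound
    feedback: deque = field(default_factory=lambda: deque(maxlen=50))

    def is_anomaly(self, rate: float) -> bool:
        # Anomaly perceptron: deviation from this object's own normal state.
        mu, sigma = mean(self.baseline), pstdev(self.baseline)
        return sigma > 0 and abs(rate - mu) / sigma > 3.0

    def is_attack(self, rate: float) -> bool:
        # Attack perceptron: applied only to data already flagged as anomalous.
        return rate > self.attack_limit

    def record_feedback(self, predicted_attack: bool, was_attack: bool) -> None:
        # False positive and false negative perceptrons: compare each verdict
        # with later ground truth and remember whether it was correct.
        self.feedback.append(predicted_attack == was_attack)

    def has_attenuated(self, min_accuracy: float = 0.8) -> bool:
        # Decision on model lifecycle: too many recent mistakes means decay.
        if len(self.feedback) < 10:
            return False
        return sum(self.feedback) / len(self.feedback) < min_accuracy

    def act(self, rate: float) -> str:
        # Action system: emit one of the actions that affect the environment.
        if self.has_attenuated():
            return "retrain"
        if self.is_anomaly(rate) and self.is_attack(rate):
            return "block"
        return "allow"


# One agent per protected object, each with its own personalized knowledge.
agents = {
    "host-1": ProtectedObjectAgent("host-1", [10, 12, 11, 9, 10], attack_limit=100),
    "host-2": ProtectedObjectAgent("host-2", [900, 950, 1000, 920], attack_limit=5000),
}
```

A rate of 500 would be blocked for `host-1` but is below the normal range for `host-2`, which is the point of keeping knowledge per protected object rather than globally.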

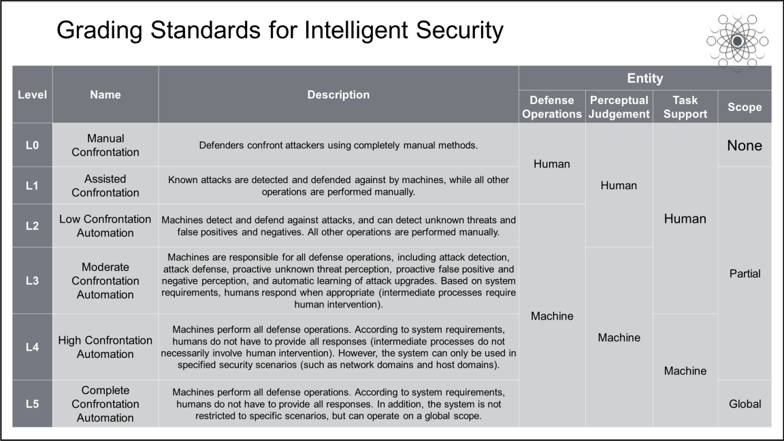

So far, whenever we find a way to eliminate problems in the security field, the solution produces new problems. Currently, we need to use new technologies to truly solve old problems. The popularity of machine intelligence in various industries has attracted the attention of the security industry. However, the capabilities of intelligent technologies in the security field vary greatly, and it is difficult to separate the true from the false. At present, any security system that uses algorithms is inevitably called an AI-based security system. As in the early years of intelligent driving, today's intelligent security also requires a unified grading standard to clarify the differences between different levels of intelligent security technologies. Security is essentially a confrontation between intelligent entities. Therefore, we have divided intelligent security technologies into six levels (L0-L5) based on their degree of autonomous confrontation.

Intelligent driving uses completely different technology stacks at different levels. In contrast, progressing from L0 to L5 in intelligent security requires gradual construction and development. According to this division, most current security systems in the industry are L1 systems, and very few are L2 systems. No intelligent security system has truly reached L3 or higher. As the system level increases, defenders will gradually be freed from low-level operations and can pay more attention to advanced operations. L3 will mark a watershed in the industry and is expected to be achieved within five years. After starting from Go and then moving to security, what will machine intelligence ultimately achieve in the security field? We expect that the network, host, application, business, and data layers will all have their own intelligent instances. Instances at different layers will be interconnected online for truly collaborative defense and intelligence sharing. On the day we fuse intelligent capabilities with intelligence information, we will see that intelligence has reshaped the security field.

Currently, the Alibaba Cloud Intelligent Security Lab is building L3 intelligent security systems in multiple fields. We are committed to the application of intelligent technologies in cloud security and are looking for security algorithm and security data experts. We hope to find like-minded people to apply intelligence to reshape the security field. After less than a year, we have already achieved some preliminary successes.
