Written by Xia Jie, nicknamed Chuzhao at Alibaba. Xia works in the BUC, ACL, and SSO teams of Alibaba's Enterprise Intelligence Business Unit. He focuses on providing permission control and the secure access and governance of application data for the several different business operations of Alibaba Group. With existing technologies and domain models, he is committed to creating the infrastructural SaaS product MOZI of the application digitalization architecture in the To B and To G fields.

According to Moore's Law, the performance of computers continues to soar while the associated costs of the computing infrastructure continue to drop over time. CPUs specifically have evolved from simple single-core systems to multi-core systems, and cache performance has also increased dramatically. With the emergence of multi-core CPUs, computers can run multiple tasks at the same time. And, with the significant efficiency improvements brought by these hardware enhancements, multi-thread programming at the software level has become an inevitable trend. However, multi-thread programming has also introduced several data security issues.

With all of these trends in motion, the industry has come to recognize that where there is a security vulnerability, there must also be a corresponding shield. Accordingly, the virtual "lock" was invented to address the security problems of threads. In this post, we're going to look at several of the typical JVM-level locks in Java that have come about over the years.

The synchronized keyword is a classic and very typical lock in Java. In fact, it's also the most commonly used one. Before JDK 1.6, "synchronized" was a rather "heavyweight" lock. However, with the newer upgrades of Java, this lock has been continuously optimized. Today, this lock has become much less "heavy." And, in fact, in some scenarios, its performance is even better than that of the typical lightweight lock. Moreover, in methods and code blocks with the synchronized keyword, only one thread is allowed to access a specific code segment at a time, preventing multiple threads from concurrently modifying the same piece of data.

Before JDK 1.5 (inclusive), the underlying implementation of the synchronized keyword was rather heavy, and therefore it was called a "heavyweight lock." After JDK 1.5, various improvements made the "synchronized" lock less heavyweight, and its implementation became the lock upgrade process described in this section. Let's first see how the "synchronized" lock was implemented after JDK 1.5. To understand the principle of synchronized locking, we must first understand the layout of a Java object in memory.

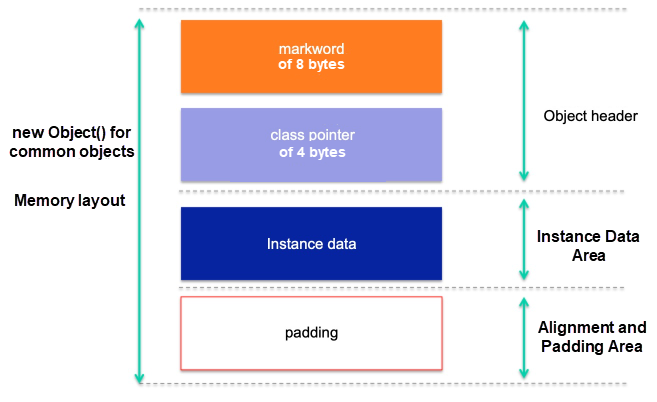

As shown above, after an object is created, its storage layout in the memory of the HotSpot JVM is divided into three areas: the object header, the instance data, and the alignment padding.

The first area is the object header. The information stored in this area is divided into two parts: the MarkWord, which holds runtime data such as the lock state, and the pointer to the object's class metadata.

The second area is the instance data. This area stores the valid information of the object, such as the content of all the fields in the object.

The third area is the alignment padding. The implementation of JVM HotSpot specifies that the starting address of the object must be an integral multiple of 8 bytes. In other words, a 64-bit operating system reads data of an integral multiple of 64 bits at a time, or 8 bytes. Therefore, HotSpot performs "alignment" to efficiently read objects. If the actual memory size of an object is not an integral multiple of 8 bytes, HotSpot "pads" the object to be an integral multiple of 8 bytes. Therefore, the size of the alignment and padding area is dynamic.
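The padding rule comes down to a little arithmetic, which the following sketch illustrates. It is not HotSpot code: the class name and the `alignUp` and `padding` helpers are hypothetical, and the raw size of 20 bytes is an assumed example value.

```java
// Illustrative arithmetic only; HotSpot performs this alignment internally.
class ObjectAlignmentSketch {
    static final int ALIGNMENT = 8; // bytes, as stated in the text above

    // Rounds size up to the next multiple of ALIGNMENT.
    static long alignUp(long size) {
        return (size + ALIGNMENT - 1) & ~(long) (ALIGNMENT - 1);
    }

    // Number of padding bytes needed to reach the aligned size.
    static long padding(long size) {
        return alignUp(size) - size;
    }

    public static void main(String[] args) {
        long rawSize = 20; // hypothetical: header plus fields
        System.out.println(alignUp(rawSize)); // 24
        System.out.println(padding(rawSize)); // 4
    }
}
```

An object whose size is already a multiple of 8 bytes needs no padding, which is why the size of this area varies from object to object.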

When a thread enters the synchronized state and tries to obtain the lock, the upgrade process of the synchronized lock is as follows.

In summary, the upgrade sequence of the synchronized lock is: biased lock > lightweight lock > heavyweight lock. The detailed triggering of lock upgrade in each step is as follows.

In JDK 1.8, biased locking only takes effect after a startup delay, so an object that is synchronized right after the JVM starts uses a lightweight lock by default. However, by setting -XX:BiasedLockingStartupDelay=0, a biased lock is applied as soon as an object is synchronized. When the lock is in the biased locking state, MarkWord records the ID of the current thread.

When another thread competes for the biased lock, the system first checks if the thread ID stored in MarkWord is consistent with the ID of this thread. If it is not, the system immediately revokes the biased lock and upgrades it to the lightweight lock. Each thread generates a LockRecord (LR) in its own thread stack. Then, each thread tries to set MarkWord in the lock object header to a pointer that points to its own LR through the CAS operation (spin). If a thread successfully sets MarkWord, the thread has obtained the lock. In HotSpot, the CAS operation executed for "synchronized" is done through the C++ code in the bytecodeInterpreter.cpp file.

If the lock competition intensifies, for example, the number of thread spins or the number of spinning threads exceeds a threshold, which is controlled by JVM itself for JDK versions later than version 1.6, the lock is upgraded to a heavyweight lock. Then, the heavyweight lock requests resources from the operating system.

Meanwhile, the thread is also suspended and enters the waiting queue of the operating system kernel state, and waits for the operating system to schedule it and map it back to the user state. In the heavyweight lock, the transition from the kernel state to the user state is required, and this process takes a relatively long time, which is one of the reasons it is characterized as being "heavyweight."

The synchronized lock is an intrinsic lock that enforces atomicity, and it is reentrant. When a thread is inside a synchronized method, it can call another synchronized method of the same object without blocking. That is, after a thread obtains an object lock, the thread can always obtain the lock once it requests the object lock again. In Java, the operation of acquiring an object lock is thread-based, not call-based.

The MarkWord in the object header of the synchronized lock records the thread holder and the counter of the lock. When a thread request succeeds, JVM records the thread that holds the lock and sets the count to 1. At this time, if another thread requests the lock, this thread must wait.

When the thread that holds the lock requests the lock again, the lock can be obtained again and the count increments accordingly. And the count decrements when a thread exits a synchronized method or block. Last, the lock is released if the count is 0.
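The reentrancy described above can be observed with a small sketch. The class and method names below are hypothetical; `Thread.holdsLock` is the standard API that reports whether the current thread owns an object's monitor.

```java
// Minimal sketch: a synchronized method calling another synchronized
// method on the same object re-enters the monitor instead of blocking.
class ReentrantSyncSketch {
    private int depth = 0;

    synchronized int outer() {
        depth++;
        return inner(); // second acquisition of the same monitor by the same thread
    }

    synchronized int inner() {
        depth++;
        if (!Thread.holdsLock(this)) {
            throw new IllegalStateException("monitor not held");
        }
        return depth;
    }

    public static void main(String[] args) {
        System.out.println(new ReentrantSyncSketch().outer()); // 2
    }
}
```

If the lock were call-based rather than thread-based, the call to `inner()` would wait for a lock that its own caller holds and never return.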

The synchronized lock is a pessimistic lock, or more precisely an exclusive lock. In other words, if the current thread acquires the lock, any other threads that need the lock have to wait. The lock competition resumes only after the lock-holding thread releases the lock.

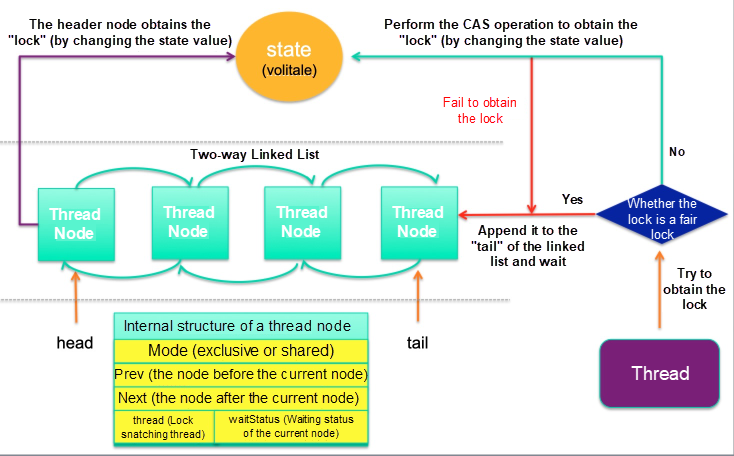

ReentrantLock is similar to the synchronized lock, but its implementation method is quite different from that of the synchronized lock. To be more specific, it is implemented based on the classic AbstractQueuedSynchronizer (AQS). AQS is implemented based on volatile and CAS. AQS maintains a state variable of the volatile type to count the number of reentrant acquisitions of the reentrant lock. Similarly, acquiring and releasing the lock are also based on this variable. ReentrantLock provides some additional features that the synchronized lock does not have, which makes it the more flexible choice when those features are needed.

ReentrantLock and the synchronized keyword are the same in that they are both locks that support reentering. However, their implementation methods are slightly different. ReentrantLock uses the state of AQS to determine whether resources have been locked. For the same thread, each lock operation increments the state value by 1, and each unlock operation decrements it by 1. Note that only the thread that currently holds the lock exclusively can unlock it; otherwise, an IllegalMonitorStateException is thrown. When the state value returns to 0, the lock is fully released.

The synchronized keyword is automatically locked and unlocked. Comparatively, ReentrantLock requires the lock() and unlock() methods together with the try/finally statement block to manually lock and unlock.
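As a minimal sketch of this manual style, the hypothetical class below wraps a counter with `lock()` and `unlock()` in try/finally, and uses the standard `getHoldCount()` method to show the reentrant count growing on nested acquisition.

```java
import java.util.concurrent.locks.ReentrantLock;

// Hypothetical example class; only ReentrantLock itself is JDK API.
class ManualLockSketch {
    private final ReentrantLock lock = new ReentrantLock();
    private int counter = 0;

    int increment() {
        lock.lock(); // manual acquire
        try {
            return ++counter;
        } finally {
            lock.unlock(); // always release, even if the body throws
        }
    }

    // Acquires the lock twice on the same thread and reports the hold count.
    int nestedHoldCount() {
        lock.lock();
        try {
            lock.lock(); // reentrant acquire
            try {
                return lock.getHoldCount();
            } finally {
                lock.unlock();
            }
        } finally {
            lock.unlock();
        }
    }

    boolean isLocked() {
        return lock.isLocked();
    }
}
```

Placing `unlock()` in the finally block is what replaces the automatic release that synchronized performs when a block exits.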

The synchronized keyword cannot set a lock timeout period. If the thread that holds the lock is deadlocked, other threads remain blocked indefinitely. ReentrantLock provides the tryLock method, which allows a thread to set a timeout period for obtaining the lock. If the timeout period expires, tryLock returns false and the thread can skip the operation instead of waiting forever, which helps avoid deadlock.
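A timed acquisition might look like the sketch below. The class, the `runWithTimeout` helper, and the 50 ms timeout are assumptions made for illustration; `tryLock(long, TimeUnit)` is the real ReentrantLock method.

```java
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.locks.ReentrantLock;

class TryLockSketch {
    // Runs task under the lock if it can be acquired within timeoutMs.
    // Returns false when the wait timed out or was interrupted.
    static boolean runWithTimeout(ReentrantLock lock, long timeoutMs, Runnable task) {
        boolean acquired;
        try {
            acquired = lock.tryLock(timeoutMs, TimeUnit.MILLISECONDS);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt(); // preserve the interrupt flag
            return false;
        }
        if (!acquired) {
            return false; // gave up instead of blocking forever
        }
        try {
            task.run();
            return true;
        } finally {
            lock.unlock();
        }
    }

    // Another thread holds the lock for the whole attempt, so the timed tryLock fails.
    static boolean contendedAttempt() {
        ReentrantLock lock = new ReentrantLock();
        CountDownLatch held = new CountDownLatch(1);
        CountDownLatch release = new CountDownLatch(1);
        Thread holder = new Thread(() -> {
            lock.lock();
            try {
                held.countDown();
                release.await(); // keep holding until told to release
            } catch (InterruptedException ignored) {
                // fall through and unlock
            } finally {
                lock.unlock();
            }
        });
        holder.start();
        try {
            held.await();
            return runWithTimeout(lock, 50, () -> { });
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            return false;
        } finally {
            release.countDown();
            try {
                holder.join();
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        }
    }
}
```

The caller decides what to do when `false` comes back, for example retrying later or reporting the failure, which is not possible with a thread blocked on synchronized.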

The synchronized keyword is an unfair lock. The first thread that grabs the lock runs first. By setting true or false in the construction method of ReentrantLock, you can implement fair and unfair locks. If the setting is true, the thread needs to follow the rule of "first come, first served". Every time a thread wants to obtain the lock, a thread node is constructed and then appended to the "tail" of the two-way linked list for queuing, to wait for the previous node in the queue to release the lock resource.
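The constructor flag can be sketched as follows. The class and factory method names are hypothetical; the `ReentrantLock(boolean)` constructor and `isFair()` are JDK API.

```java
import java.util.concurrent.locks.ReentrantLock;

class FairnessSketch {
    // true: waiting threads acquire the lock in FIFO order.
    static ReentrantLock fairLock() {
        return new ReentrantLock(true);
    }

    // The no-argument constructor is equivalent to new ReentrantLock(false).
    static ReentrantLock unfairLock() {
        return new ReentrantLock();
    }

    public static void main(String[] args) {
        System.out.println(fairLock().isFair());   // true
        System.out.println(unfairLock().isFair()); // false
    }
}
```

Fair mode trades throughput for predictable ordering, so the unfair default is usually kept unless starvation is a concern.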

The lockInterruptibly() method in ReentrantLock allows a thread to respond to an interruption while it is blocked waiting for the lock. For example, thread t1 holds a ReentrantLock and runs a long task, and thread t2 calls lockInterruptibly() and blocks. Another thread can call t2.interrupt() so that t2 stops waiting immediately and receives an InterruptedException instead of the lock. By contrast, a thread that is blocked in the lock() method of ReentrantLock or is waiting for a synchronized lock does not stop waiting when it is interrupted; it keeps waiting until the holder releases the lock.
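The following sketch shows a thread that is waiting in `lockInterruptibly()` being released from the wait by `interrupt()`. The class and method names are hypothetical, and the scenario (the calling thread holds the lock throughout) is an assumption chosen to keep the example deterministic.

```java
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.atomic.AtomicBoolean;
import java.util.concurrent.locks.ReentrantLock;

class InterruptibleLockSketch {
    // Returns true if the waiter abandoned its wait because of interrupt().
    static boolean waiterWasInterrupted() {
        ReentrantLock lock = new ReentrantLock();
        AtomicBoolean interrupted = new AtomicBoolean(false);
        CountDownLatch ready = new CountDownLatch(1);
        lock.lock(); // the calling thread holds the lock for the whole demo
        try {
            Thread waiter = new Thread(() -> {
                ready.countDown();
                try {
                    lock.lockInterruptibly(); // cannot succeed: the lock is held
                    lock.unlock();            // not reached in this demo
                } catch (InterruptedException e) {
                    interrupted.set(true);    // stopped waiting for the lock
                }
            });
            waiter.start();
            ready.await();
            waiter.interrupt(); // cancel the waiter's attempt
            waiter.join();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        } finally {
            lock.unlock();
        }
        return interrupted.get();
    }
}
```

Had the waiter called `lock()` instead, the interrupt would only set its interrupt flag and the thread would keep waiting until the lock became free.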

The ReentrantReadWriteLock (read-write lock) is actually two locks: a WriteLock (write lock) and a ReadLock (read lock). The rules of the read-write lock are: read-read non-exclusive, read-write exclusive, and write-write exclusive. In some actual scenarios, the frequency of reads is much higher than that of writes. If a common lock is used for concurrency control, all reads and writes are mutually exclusive, which is inefficient.

To optimize the operation efficiency in this scenario, the read-write lock emerged. In general, the low efficiency of exclusive locks comes from the fierce competition for critical sections under high concurrency, resulting in thread context switching. When the concurrency is not very high, the read-write lock may be less efficient than the exclusive lock because additional maintenance is required. Therefore, you need to pick the appropriate lock based on the actual situation.
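A typical read-heavy use is a small cache, sketched below. The class name and its `get` and `put` methods are hypothetical; `readLock()` and `writeLock()` are the ReentrantReadWriteLock API.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.concurrent.locks.ReentrantReadWriteLock;

class ReadMostlyCacheSketch {
    private final Map<String, String> data = new HashMap<>();
    private final ReentrantReadWriteLock rw = new ReentrantReadWriteLock();

    String get(String key) {
        rw.readLock().lock(); // shared: many readers may hold it at once
        try {
            return data.get(key);
        } finally {
            rw.readLock().unlock();
        }
    }

    void put(String key, String value) {
        rw.writeLock().lock(); // exclusive: blocks readers and other writers
        try {
            data.put(key, value);
        } finally {
            rw.writeLock().unlock();
        }
    }
}
```

Whether this beats a plain exclusive lock depends on the read/write ratio and the contention level, as noted above.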

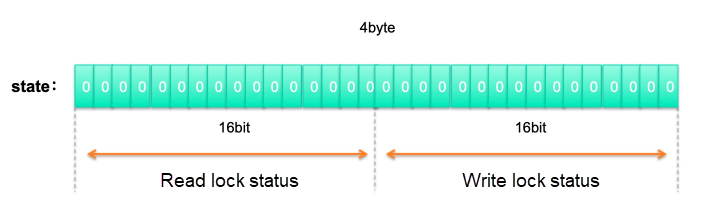

ReentrantReadWriteLock is also implemented based on AQS. The difference between ReentrantLock and ReentrantReadWriteLock is that the latter has both shared lock and exclusive lock attributes. Locking and unlocking in the read-write lock is based on Sync, which is inherited from AQS. It is implemented mainly by the state in AQS and the waitStatus variable in Node.

The major difference between the implementation of the read-write lock and that of the ordinary mutex is that the read lock status and the write lock status need to be recorded respectively, and the waiting queue needs to differently handle both types of lock operations. In the ReentrantReadWriteLock, the int-type state in AQS is divided into the high 16 bits and low 16 bits to respectively record the read lock and write lock states, as shown below.

By calculating state&((1<<16)-1), all the high 16 bits of the state are erased. Therefore, the low 16 bits of the state record the reentrant count of the write lock.
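The bit split can be reproduced outside the JDK with plain integer arithmetic. In the sketch below the class is hypothetical, the constant and helper names mirror those in the `Sync` class of ReentrantReadWriteLock, and the packed example value is made up purely to show the masks at work.

```java
class StateBitsSketch {
    static final int SHARED_SHIFT = 16;
    static final int SHARED_UNIT = 1 << SHARED_SHIFT;           // one read hold
    static final int MAX_COUNT = (1 << SHARED_SHIFT) - 1;       // 65535
    static final int EXCLUSIVE_MASK = (1 << SHARED_SHIFT) - 1;  // low 16 bits

    // High 16 bits: number of read holds.
    static int sharedCount(int c) {
        return c >>> SHARED_SHIFT;
    }

    // Low 16 bits: reentrant count of the write lock.
    static int exclusiveCount(int c) {
        return c & EXCLUSIVE_MASK;
    }

    public static void main(String[] args) {
        int state = 3 * SHARED_UNIT + 2; // illustrative packed value
        System.out.println(sharedCount(state));    // 3
        System.out.println(exclusiveCount(state)); // 2
    }
}
```

Adding `SHARED_UNIT` bumps the read count without touching the low half, which is why the read-lock code adds `SHARED_UNIT` to the state while the write-lock code adds the plain acquire count.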

Below is the source code for acquiring the write lock.

    // ReentrantReadWriteLock.WriteLock

    /**
     * Acquires the write lock.
     *
     * <p>Acquires the write lock if neither the read nor write lock
     * are held by another thread
     * and returns immediately, setting the write lock hold count to
     * one.
     *
     * <p>If the current thread already holds the write lock then the
     * hold count is incremented by one and the method returns
     * immediately.
     *
     * <p>If the lock is held by another thread then the current
     * thread becomes disabled for thread scheduling purposes and
     * lies dormant until the write lock has been acquired, at which
     * time the write lock hold count is set to one.
     */
    public void lock() {
        sync.acquire(1);
    }

    // AbstractQueuedSynchronizer

    /**
     * Acquires in exclusive mode, ignoring interrupts. Implemented
     * by invoking at least once {@link #tryAcquire},
     * returning on success. Otherwise the thread is queued, possibly
     * repeatedly blocking and unblocking, invoking {@link
     * #tryAcquire} until success. This method can be used
     * to implement method {@link Lock#lock}.
     *
     * In ReentrantReadWriteLock, tryAcquire is implemented in Sync;
     * FairSync and NonfairSync differ only in writerShouldBlock()
     * and readerShouldBlock().
     *
     * @param arg the acquire argument. This value is conveyed to
     *        {@link #tryAcquire} but is otherwise uninterpreted and
     *        can represent anything you like.
     */
    public final void acquire(int arg) {
        if (!tryAcquire(arg) &&
            acquireQueued(addWaiter(Node.EXCLUSIVE), arg))
            selfInterrupt();
    }

    // ReentrantReadWriteLock.Sync

    protected final boolean tryAcquire(int acquires) {
        /*
         * Walkthrough:
         * 1. If read count nonzero or write count nonzero
         *    and owner is a different thread, fail.
         * 2. If count would saturate, fail. (This can only
         *    happen if count is already nonzero.)
         * 3. Otherwise, this thread is eligible for lock if
         *    it is either a reentrant acquire or
         *    queue policy allows it. If so, update state
         *    and set owner.
         */
        Thread current = Thread.currentThread();
        // Combined state of the read-write lock
        int c = getState();
        // Reentrant count of the write lock (low 16 bits)
        int w = exclusiveCount(c);
        // A nonzero state means some thread holds the read lock or the write lock
        if (c != 0) {
            // w == 0: only read locks are held; read-write is exclusive, so fail.
            // w != 0 and the owner is another thread: write-write is exclusive, so fail.
            // (Note: if c != 0 and w == 0 then shared count != 0)
            if (w == 0 || current != getExclusiveOwnerThread())
                return false;
            // Throw if the write hold count would exceed the maximum (65535)
            if (w + exclusiveCount(acquires) > MAX_COUNT)
                throw new Error("Maximum lock count exceeded");
            // Reentrant acquire: the current thread already owns the write lock,
            // so add to the count and succeed
            setState(c + acquires);
            return true;
        }
        // State is 0: neither the read lock nor the write lock is held.
        // Fail if the queue policy says this writer should block or the CAS fails;
        // otherwise record the current thread as the owner and succeed.
        if (writerShouldBlock() ||
            !compareAndSetState(c, c + acquires))
            return false;
        setExclusiveOwnerThread(current);
        return true;
    }

Below is the source code for releasing the write lock:

    /*
     * Note that tryRelease and tryAcquire can be called by
     * Conditions. So it is possible that their arguments contain
     * both read and write holds that are all released during a
     * condition wait and re-established in tryAcquire.
     */
    protected final boolean tryRelease(int releases) {
        // Throw if the current thread does not hold the write lock
        if (!isHeldExclusively())
            throw new IllegalMonitorStateException();
        // Subtract the released holds from the state
        int nextc = getState() - releases;
        // The write lock is fully released once its reentrant count reaches 0
        boolean free = exclusiveCount(nextc) == 0;
        if (free)
            // Clear the owner so the lock no longer references the thread
            setExclusiveOwnerThread(null);
        // Publish the updated state
        setState(nextc);
        return free;
    }

By calculating state>>>16, an unsigned right shift that fills the vacated high bits with zeros, the low 16 bits of the state are discarded. Therefore, the high 16 bits of the state record the reentrant count of the read lock.

The process of obtaining a read lock is slightly more complicated than acquiring a write lock. First, the system determines whether the count of the write lock is 0 and the current thread does not hold an exclusive lock. If yes, the system directly returns the result. If not, the system checks whether the read thread needs to be blocked, and whether the number of read locks is less than the threshold and whether the comparison of the set status is successful.

If no read lock currently exists, firstReader and firstReaderHoldCount of the first read thread are set. If the current thread is the first read thread, the firstReaderHoldCount value increments. Otherwise, the value of the HoldCounter object corresponding to the current thread is set. After the successful update, the reentrant count of the current thread is recorded in readHolds (of the ThreadLocal type) of firstReaderHoldCount in the copy of the current thread. This is to implement the getReadHoldCount() method added in JDK 1.6. This method can obtain the number of times the current thread re-enters the shared lock. That is the total reentrant count of multiple threads is recorded in the state.

Introducing this method makes the code a lot more complicated, but the principle is still very simple: if there is only one thread, you do not need to use ThreadLocal, and you can directly store the reentrant count in the firstReaderHoldCount member variable. When another thread occurs, you need to use the ThreadLocal variable, readHolds. Each thread has its own copy, which is used to save its own reentrant count.

Below is the source code for acquiring the read lock:

    // ReentrantReadWriteLock.ReadLock

    /**
     * Acquires the read lock.
     *
     * <p>Acquires the read lock if the write lock is not held by
     * another thread and returns immediately.
     *
     * <p>If the write lock is held by another thread then
     * the current thread becomes disabled for thread scheduling
     * purposes and lies dormant until the read lock has been acquired.
     */
    public void lock() {
        sync.acquireShared(1);
    }

    // AbstractQueuedSynchronizer

    /**
     * Acquires in shared mode, ignoring interrupts. Implemented by
     * first invoking at least once {@link #tryAcquireShared},
     * returning on success. Otherwise the thread is queued, possibly
     * repeatedly blocking and unblocking, invoking {@link
     * #tryAcquireShared} until success.
     *
     * This mirrors acquire(int) on the write-lock path. In
     * ReentrantReadWriteLock, tryAcquireShared is implemented in Sync;
     * FairSync and NonfairSync differ only in readerShouldBlock()
     * and writerShouldBlock().
     *
     * @param arg the acquire argument. This value is conveyed to
     *        {@link #tryAcquireShared} but is otherwise uninterpreted
     *        and can represent anything you like.
     */
    public final void acquireShared(int arg) {
        if (tryAcquireShared(arg) < 0)
            doAcquireShared(arg);
    }

    // ReentrantReadWriteLock.Sync

    protected final int tryAcquireShared(int unused) {
        /*
         * Walkthrough:
         * 1. If write lock held by another thread, fail.
         * 2. Otherwise, this thread is eligible for
         *    lock wrt state, so ask if it should block
         *    because of queue policy. If not, try
         *    to grant by CASing state and updating count.
         *    Note that step does not check for reentrant
         *    acquires, which is postponed to full version
         *    to avoid having to check hold count in
         *    the more typical non-reentrant case.
         * 3. If step 2 fails either because thread
         *    apparently not eligible or CAS fails or count
         *    saturated, chain to version with full retry loop.
         */
        // The thread that is trying to acquire the read lock
        Thread current = Thread.currentThread();
        // Combined state of the read-write lock
        int c = getState();
        // Fail if the write lock is held by a thread other than the current one
        if (exclusiveCount(c) != 0 &&
            getExclusiveOwnerThread() != current)
            return -1;
        // Total number of read holds (high 16 bits)
        int r = sharedCount(c);
        // If the reader should not block, the count is below the maximum, and the
        // CAS that adds one read hold succeeds, update this thread's own hold
        // count and return success
        if (!readerShouldBlock() &&
            r < MAX_COUNT &&
            compareAndSetState(c, c + SHARED_UNIT)) {
            // No thread held the read lock yet: record the current thread as
            // firstReader with a hold count of 1
            if (r == 0) {
                firstReader = current;
                firstReaderHoldCount = 1;
            } else if (firstReader == current) {
                // The current thread is firstReader: increment firstReaderHoldCount
                firstReaderHoldCount++;
            } else {
                // At least two threads share the read lock. Use the cached
                // HoldCounter if it belongs to the current thread; otherwise fetch
                // the thread-local one from readHolds. Then increment its count.
                HoldCounter rh = cachedHoldCounter;
                if (rh == null || rh.tid != getThreadId(current))
                    cachedHoldCounter = rh = readHolds.get();
                else if (rh.count == 0)
                    readHolds.set(rh);
                rh.count++;
            }
            return 1;
        }
        // If any of the conditions above is not met, retry in the full loop version
        return fullTryAcquireShared(current);
    }

    /**
     * Full version of acquire for reads, that handles CAS misses
     * and reentrant reads not dealt with in tryAcquireShared.
     */
    final int fullTryAcquireShared(Thread current) {
        /*
         * This code is in part redundant with that in
         * tryAcquireShared but is simpler overall by not
         * complicating tryAcquireShared with interactions between
         * retries and lazily reading hold counts.
         */
        HoldCounter rh = null;
        // Retry loop
        for (;;) {
            // Combined state of the read-write lock
            int c = getState();
            // Some thread holds the write lock
            if (exclusiveCount(c) != 0) {
                // Fail if the write lock is held by another thread
                if (getExclusiveOwnerThread() != current)
                    return -1;
                // else we hold the exclusive lock; blocking here
                // would cause deadlock.
            } else if (readerShouldBlock()) { // No writer, but the reader should block
                // Make sure we're not acquiring read lock reentrantly
                // The current thread is the first thread that acquired the read lock
                if (firstReader == current) {
                    // assert firstReaderHoldCount > 0;
                } else { // The current thread is not the first reader
                    if (rh == null) {
                        rh = cachedHoldCounter;
                        if (rh == null || rh.tid != getThreadId(current)) {
                            rh = readHolds.get();
                            if (rh.count == 0)
                                readHolds.remove();
                        }
                    }
                    if (rh.count == 0)
                        return -1;
                }
            }
            // Reached when no other thread holds the write lock and the current
            // thread is not required to block.
            // Throw if the read hold count has reached the maximum
            if (sharedCount(c) == MAX_COUNT)
                throw new Error("Maximum lock count exceeded");
            // If the CAS that adds one read hold to the state succeeds, increment
            // the current thread's own hold count and return success
            if (compareAndSetState(c, c + SHARED_UNIT)) {
                if (sharedCount(c) == 0) {
                    firstReader = current;
                    firstReaderHoldCount = 1;
                } else if (firstReader == current) {
                    firstReaderHoldCount++;
                } else {
                    if (rh == null)
                        rh = cachedHoldCounter;
                    if (rh == null || rh.tid != getThreadId(current))
                        rh = readHolds.get();
                    else if (rh.count == 0)
                        readHolds.set(rh);
                    rh.count++;
                    cachedHoldCounter = rh; // cache for release
                }
                return 1;
            }
        }
    }

Next, this is the source code for releasing the read lock:

    // AbstractQueuedSynchronizer

    /**
     * Releases in shared mode. Implemented by unblocking one or more
     * threads if {@link #tryReleaseShared} returns true.
     *
     * @param arg the release argument. This value is conveyed to
     *        {@link #tryReleaseShared} but is otherwise uninterpreted
     *        and can represent anything you like.
     * @return the value returned from {@link #tryReleaseShared}
     */
    public final boolean releaseShared(int arg) {
        if (tryReleaseShared(arg)) { // Try to release one shared hold
            doReleaseShared();       // Wake up waiting successors
            return true;
        }
        return false;
    }

    // ReentrantReadWriteLock.Sync

    /**
     * Releases one read hold for the current thread.
     * If the current thread is firstReader: when firstReaderHoldCount is 1,
     * firstReader is cleared; otherwise firstReaderHoldCount is decremented.
     * If the current thread is not firstReader: the cached counter (that of
     * the last thread to acquire the read lock) is fetched first; if it is
     * null or its tid differs from the current thread's, the current
     * thread's own counter is fetched. If the count is less than or equal
     * to 1, the counter is removed, and if the count is less than or equal
     * to 0, an exception is thrown; then the count is decremented.
     * In every case the method then loops until the CAS on state succeeds.
     */
    protected final boolean tryReleaseShared(int unused) {
        // The current thread
        Thread current = Thread.currentThread();
        if (firstReader == current) { // The current thread is the first reader
            // assert firstReaderHoldCount > 0;
            if (firstReaderHoldCount == 1) // It holds the read lock exactly once
                firstReader = null;
            else // Decrement its hold count
                firstReaderHoldCount--;
        } else { // The current thread is not the first reader
            // The cached counter
            HoldCounter rh = cachedHoldCounter;
            // The cache is empty or belongs to another thread
            if (rh == null || rh.tid != getThreadId(current))
                // Fetch the counter of the current thread
                rh = readHolds.get();
            // The current hold count
            int count = rh.count;
            if (count <= 1) { // This is the last hold (or there is none)
                // Remove the thread-local counter
                readHolds.remove();
                if (count <= 0) // No hold to release: throw
                    throw unmatchedUnlockException();
            }
            // Decrement the hold count
            --rh.count;
        }
        for (;;) { // Retry loop
            // Current state
            int c = getState();
            // State after releasing one read hold
            int nextc = c - SHARED_UNIT;
            if (compareAndSetState(c, nextc)) // CAS the new state in
                // Releasing the read lock has no effect on readers,
                // but it may allow waiting writers to proceed if
                // both read and write locks are now free.
                return nextc == 0;
        }
    }

    // AbstractQueuedSynchronizer

    /**
     * Release action for shared mode -- signals successor and ensures
     * propagation. (Note: For exclusive mode, release just amounts
     * to calling unparkSuccessor of head if it needs signal.)
     */
    private void doReleaseShared() {
        /*
         * Ensure that a release propagates, even if there are other
         * in-progress acquires/releases. This proceeds in the usual
         * way of trying to unparkSuccessor of head if it needs
         * signal. But if it does not, status is set to PROPAGATE to
         * ensure that upon release, propagation continues.
         * Additionally, we must loop in case a new node is added
         * while we are doing this. Also, unlike other uses of
         * unparkSuccessor, we need to know if CAS to reset status
         * fails, if so rechecking.
         */
        for (;;) {
            Node h = head;
            if (h != null && h != tail) {
                int ws = h.waitStatus;
                if (ws == Node.SIGNAL) {
                    if (!compareAndSetWaitStatus(h, Node.SIGNAL, 0))
                        continue;            // loop to recheck cases
                    unparkSuccessor(h);
                }
                else if (ws == 0 &&
                         !compareAndSetWaitStatus(h, 0, Node.PROPAGATE))
                    continue;                // loop on failed CAS
            }
            if (h == head)                   // loop if head changed
                break;
        }
    }

From this analysis, we can see that a thread that holds a read lock cannot obtain the write lock: the attempt to obtain the write lock fails whenever the read lock is occupied, regardless of whether the read lock is held by the current thread.

Next, when a thread holds a write lock, the thread can continue to obtain the read lock. During the acquisition of the read lock, if the write lock is occupied, the read lock can be obtained only if the current thread occupies the write lock.
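Both rules can be checked with non-blocking `tryLock()` calls, as in the sketch below. The class and method names are hypothetical; `tryLock()` is used instead of `lock()` so that the failed upgrade attempt returns `false` rather than blocking the thread on itself.

```java
import java.util.concurrent.locks.ReentrantReadWriteLock;

class UpgradeDowngradeSketch {
    // While holding the read lock, try to take the write lock (upgrade).
    static boolean canUpgrade() {
        ReentrantReadWriteLock rw = new ReentrantReadWriteLock();
        rw.readLock().lock();
        try {
            boolean got = rw.writeLock().tryLock();
            if (got) {
                rw.writeLock().unlock();
            }
            return got;
        } finally {
            rw.readLock().unlock();
        }
    }

    // While holding the write lock, try to take the read lock (downgrade path).
    static boolean canDowngrade() {
        ReentrantReadWriteLock rw = new ReentrantReadWriteLock();
        rw.writeLock().lock();
        try {
            boolean got = rw.readLock().tryLock();
            if (got) {
                rw.readLock().unlock();
            }
            return got;
        } finally {
            rw.writeLock().unlock();
        }
    }

    public static void main(String[] args) {
        System.out.println(canUpgrade());   // false
        System.out.println(canDowngrade()); // true
    }
}
```

In real code, a writer that wants to keep reading after its update typically acquires the read lock before releasing the write lock, so no other writer can slip in between.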

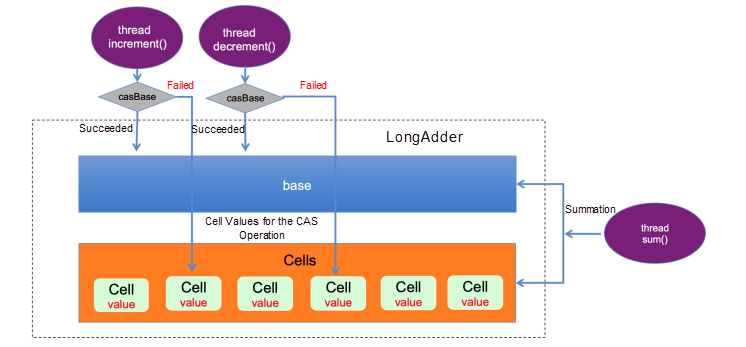

In high concurrency scenarios, directly performing i++ on an int or Integer variable does not guarantee the atomicity of the operation, resulting in thread security problems. For this reason, we use AtomicInteger in java.util.concurrent (JUC), which is an integer class that provides atomic operations. Internally, thread security is achieved through CAS. However, when a large number of threads update the same value at the same time, many of them fail to perform the CAS operation and have to spin.

This results in excessive CPU resource consumption and low execution efficiency. Doug Lea was not satisfied with this, so he optimized CAS in JDK 1.8 and provided LongAdder, which was based on the idea of the CAS segment lock.

LongAdder is implemented based on CAS, which is provided by Unsafe, and volatile. A base variable and a cell array are maintained in Striped64, the parent class of LongAdder. When threads update the value, the CAS operation is performed on the base variable first. When contention is detected, that is, when the casBase method fails to update the base value, the cell array is used: each thread is mapped to a cell, and the CAS operation is performed on that cell instead.

This spreads the update pressure on a single value across multiple values and reduces the "heat" of the single value. It also reduces the spin of a large number of threads, improving the concurrency efficiency and distributing the concurrency pressure. This type of segment lock requires additional memory cells, but the costs are almost negligible in high-concurrency scenarios. The segment lock is an outstanding optimization approach. In JDK versions before 1.8, ConcurrentHashMap in java.util.concurrent was also based on the segment lock to ensure thread security for reads and writes.
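From the caller's side, LongAdder is used much like an atomic counter. In the sketch below the class, the `countConcurrently` helper, and the thread and iteration counts are assumptions for illustration; `increment()` and `sum()` are the LongAdder API.

```java
import java.util.concurrent.atomic.LongAdder;

class LongAdderSketch {
    // Starts the given number of threads, each incrementing a shared LongAdder.
    static long countConcurrently(int threads, int incrementsPerThread) {
        LongAdder adder = new LongAdder();
        Thread[] workers = new Thread[threads];
        for (int i = 0; i < threads; i++) {
            workers[i] = new Thread(() -> {
                for (int j = 0; j < incrementsPerThread; j++) {
                    adder.increment(); // updates base or one of the cells
                }
            });
            workers[i].start();
        }
        for (Thread worker : workers) {
            try {
                worker.join();
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
                throw new IllegalStateException("interrupted while waiting", e);
            }
        }
        return adder.sum(); // base plus all cells; exact once the writers are done
    }

    public static void main(String[] args) {
        System.out.println(countConcurrently(4, 100_000)); // 400000
    }
}
```

Note that `sum()` is only guaranteed to be exact when no updates are in flight, which is part of the trade-off for spreading writes across cells.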

The views expressed herein are for reference only and don't necessarily represent the official views of Alibaba Cloud.
