By Chaochao Zhou (Huicheng)

Many articles can be found on SQL tuning parameters and data skew. This article mainly explains common SQL development scenarios, distinctive SQL writing, and execution plans in depth. It will show you how to write efficient SQL quickly.

Multiple pieces of data need to be merged in some business scenarios. For example, when we want to obtain customer information, and the information overlaps in two tables (assuming that the overlap part in the two tables is consistent), we must merge the two pieces of data first.

Since the data in the two tables overlaps, the data needs to be deduplicated and then join. Common deduplication methods are:

SELECT cst_id,cst_info

FROM (

SELECT cst_id,cst_info

FROM @cst_info_a

WHERE dt = '${bizdate}'

UNION

SELECT cst_id,cst_info

FROM cst_info_b

WHERE dt = '${bizdate}'

)cst_info

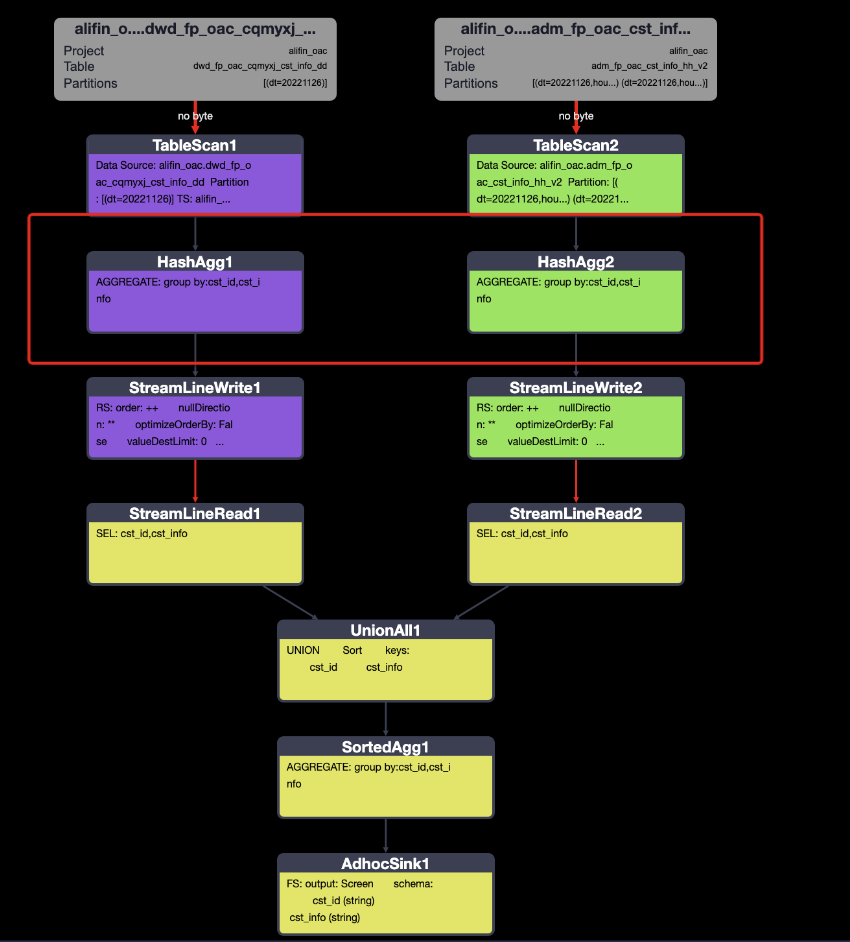

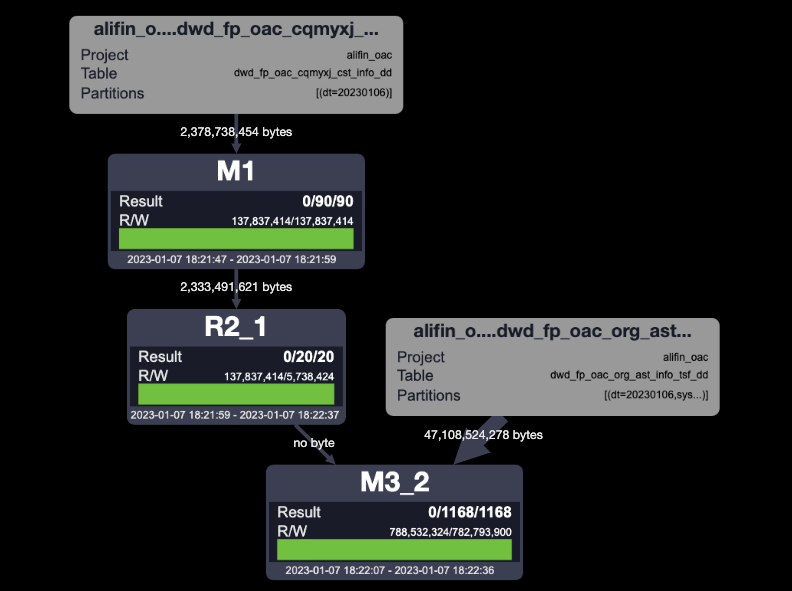

;As such, it will be understood that two pieces of data are merged without task processing, resulting in shuffle, and the amount of data temporarily written and the amount of data read are consistent with the data source. Then, deduplication is performed. Since the amount of data is not reduced in the process, the efficiency is relatively low. Now, let's look at the execution plan.

It is found that the execution plan has been optimized and is already the optimal execution plan.

Next, look at the execution plan according to efficient SQL writing.

-- Method 1

SELECT cst_id,cst_info

FROM (

SELECT cst_id,cst_info

FROM @cst_info_a

WHERE dt = '${bizdate}'

GROUP BY cst_id,cst_info

UNION

SELECT cst_id,cst_info

FROM @cst_info_b

WHERE dt = '${bizdate}'

GROUP BY cst_id,cst_info

)cst_info;

-- Method 2

SELECT cst_id,cst_info

FROM (

SELECT cst_id,cst_info

FROM @cst_info_a

WHERE dt = '${bizdate}'

GROUP BY cst_id,cst_info

UNION ALL

SELECT cst_id,cst_info

FROM @cst_info_b

WHERE dt = '${bizdate}'

GROUP BY cst_id,cst_info

)cst_info

GROUP BY

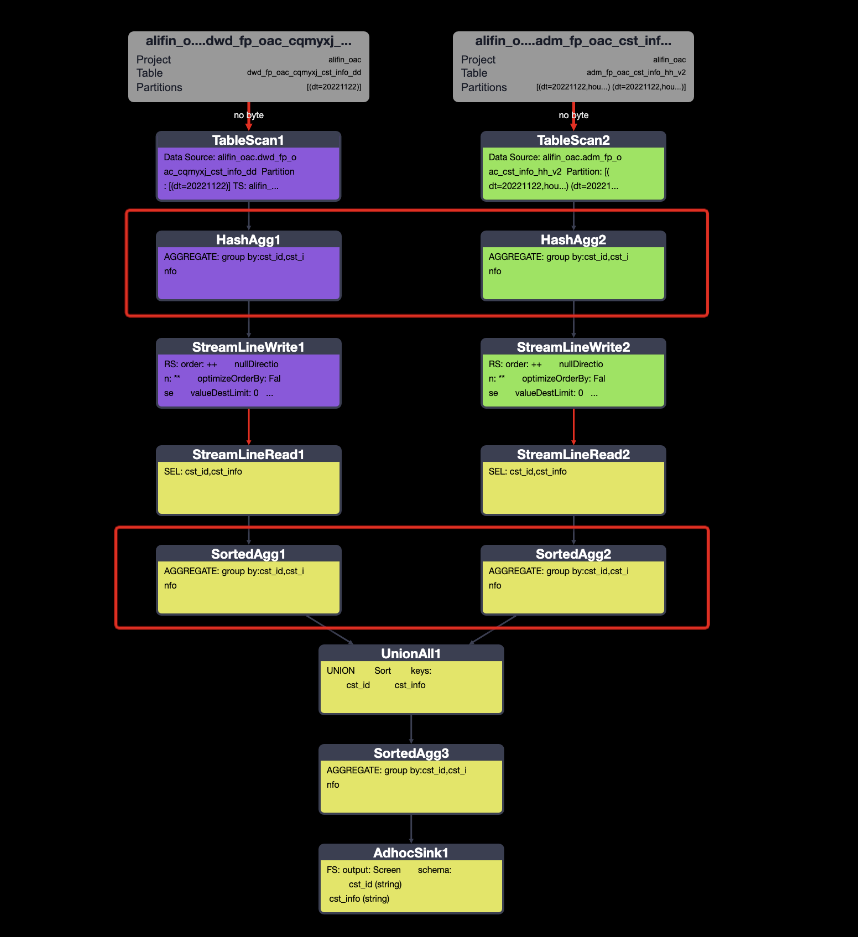

cst_id,cst_info;The execution plan of the two writings is consistent.

The additional aggregation processing increases complexity.

ODPS has optimized union and can be used directly. When performing union for three or more (X) tables, the execution plan is X MAP tasks +1 REDUCE task. Unlike Hive, it is X MAP tasks + (X-1) REDUCE tasks, and SQL needs to be adjusted to achieve the optimal execution plan.

Data exploration is often encountered in the development process, such as the number of users in the asset information table. Count distinct is often used in the exploration process, but how efficient is it?

Here are the common writing and optimized writing to explore the number of users in the last five days in the asset information table.

-- Select assets in the last 5 days

-- Common writing, count distinct

SELECT

COUNT(DISTINCT cst_id) AS cst_cnt

FROM @pc_bill_bal

WHERE dt BETWEEN '${bizdate-5}' AND '${bizdate}'

;

-- Optimized writing

SELECT COUNT(1) AS cst_cnt

FROM (

SELECT

cst_id

FROM @pc_bill_bal

WHERE dt BETWEEN '${bizdate-5}' AND '${bizdate}'

GROUP BY

cst_id

)base

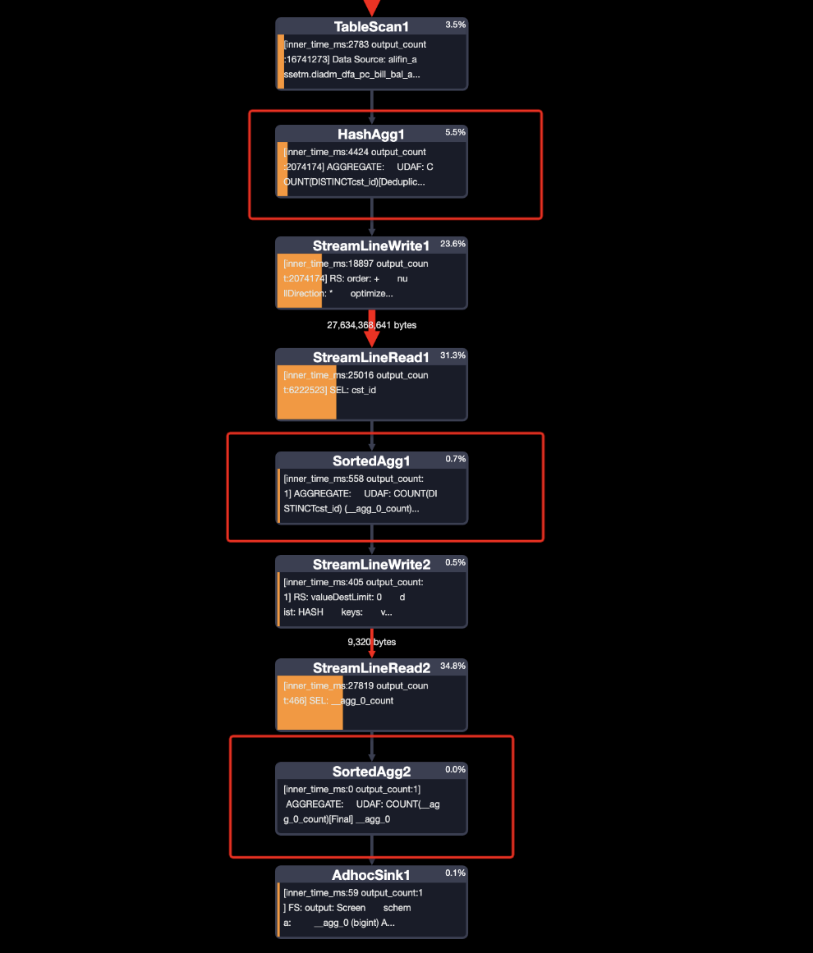

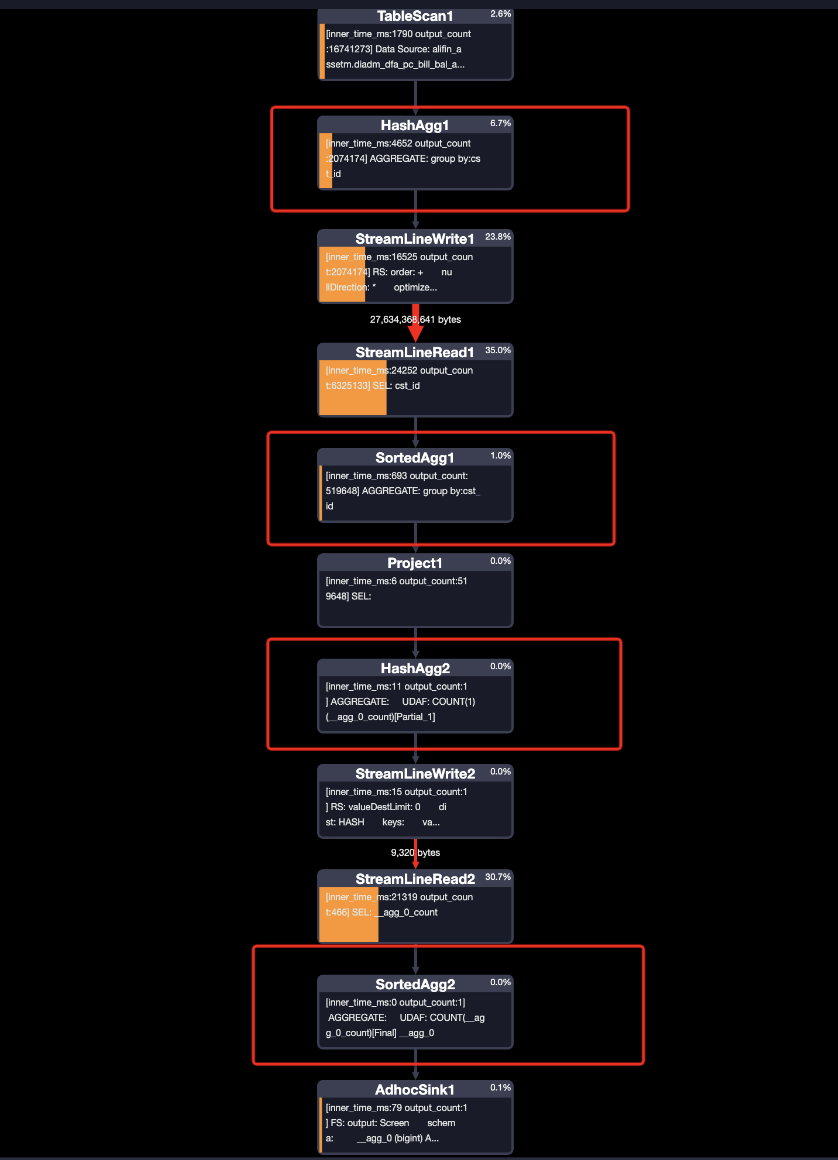

;It is generally believed that direct count distinct is inefficient. Is that right? Look at the comparison of the two execution plans.

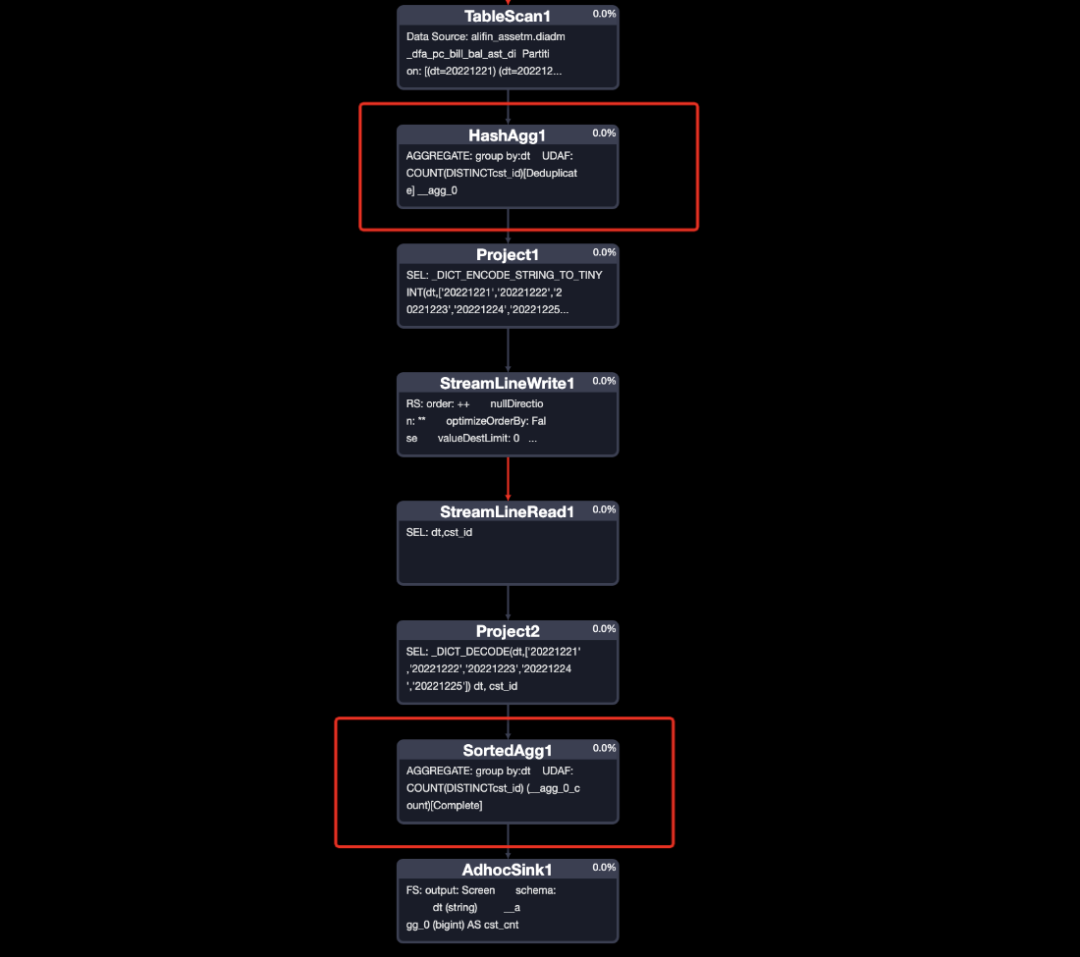

Common Writing:

Optimized Writing:

As shown in the execution plan, direct count distinct writing is optimized into two deduplication processing and one sum computing instead of full deduplication computing. Looking at the optimized method, it has two rounds of deduplication processing and two rounds of summation calculation, which is one step more than count distinct. However, the running efficiency is still very fast. Finally, look at the running time and consumption of resources. The common writing is 28% (62s, 86s) faster than the optimized writing, and the resource consumption is 28% less.

Can count distinct be used without limit?

Next, look at another scenario. Explore the number of users per day in the asset information table in the past five days. The common writing and the optimized writing:

-- Select assets in the last 5 days

-- Common writing, count distinct

SELECT

dt

,COUNT(DISTINCT cst_id) AS cst_cnt

FROM @pc_bill_bal

WHERE dt BETWEEN '${bizdate-5}' AND '${bizdate}'

GROUP BY

dt

;

-- Optimized writing

SELECT

dt

,COUNT(cst_id) AS cst_cnt

FROM (

SELECT

dt

,cst_id

FROM @pc_bill_bal

WHERE dt BETWEEN '${bizdate-5}' AND '${bizdate}'

GROUP BY

dt

,cst_id

)base

GROUP BY

dt

;Look at the comparison of the two execution plans in this scenario.

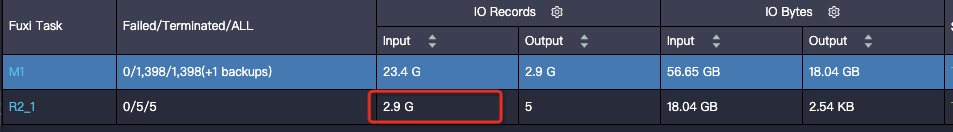

Common writing (also look at the assigned task):

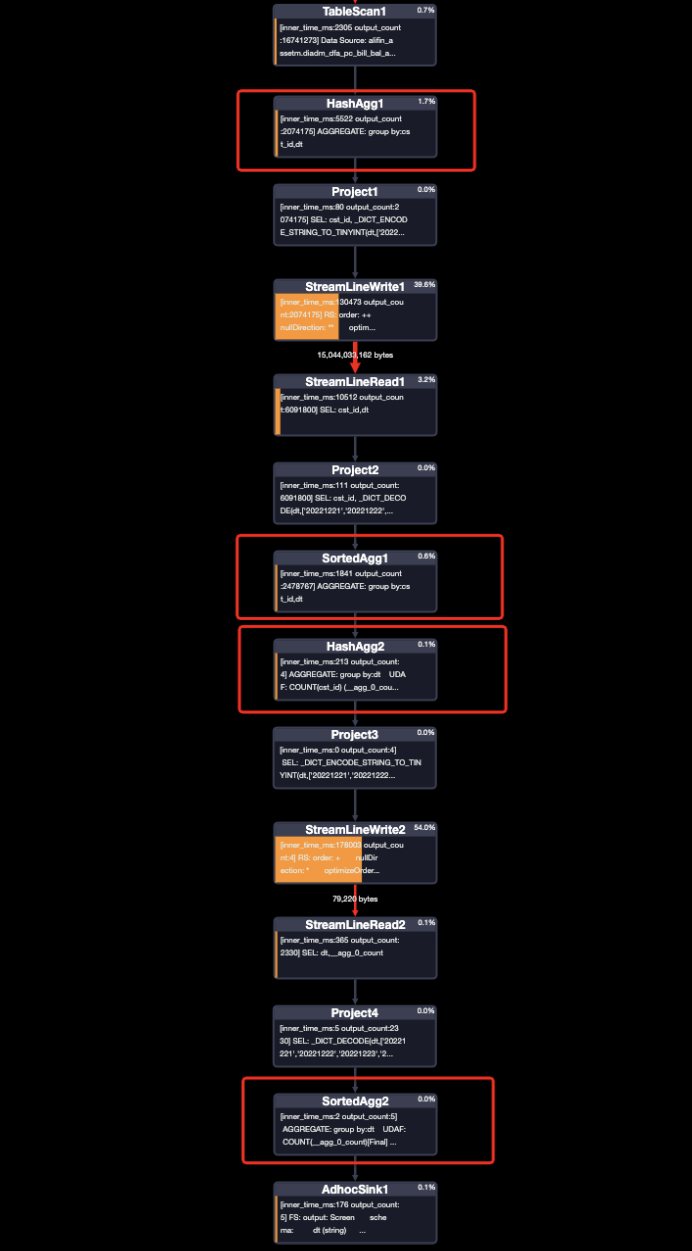

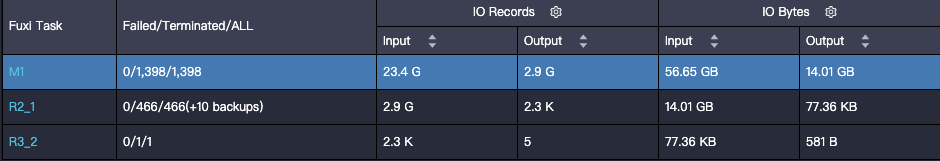

Optimized Writing:

As shown in the execution plan, direct count distinct writing carries out one deduplication, and 0.3 billion pieces of data are given to five tasks to perform deduplication and sum computing. Each task is under considerable pressure. The optimized writing has two deduplication processing and two sum computing, and each step runs fast without the long tail. Finally, looking at the running time and consumption of resources. The regular method is 26 times slower than the optimized method and consumes twice as much resources.

ODPS only optimizes the execution plan for count distinct when reading one field from the data source. When multiple fields are read from the data source, count distinct writing should be changed to group by count.

In daily development work, it is often encountered that multiple tables are associated to take attributes, such as computing the number of times a user has behaved A, B, C...N in the past period, or in the field of asset management, counting all assets in an asset pool (assets at the beginning of the day + lending assets + assets bought).

Assuming three pieces of data need to be associated to obtain attributes, the common writing is to use full outer join + coalesce twice to associate the values; or first, merge the 3 data bodies and then use left join three times.

-- For example, get all the assets of each user for the asset pool.

-- Use full outer join + coalesce

SELECT

COALESCE(tt1.cst_id, tt2.cst_id) as cst_id

,COALESCE(tt1.bal_init_prin, 0) AS bal_init_prin

,COALESCE(tt1.amt_retail_prin, 0) AS amt_retail_prin

,COALESCE(tt2.amt_buy_prin, 0) AS amt_buy_prin

FROM (

SELECT

COALESCE(t1.cst_id, t2.cst_id) as cst_id

,COALESCE(t1.bal_init_prin, 0) AS bal_init_prin

,COALESCE(t2.amt_retail_prin, 0) AS amt_retail_prin

FROM @bal_init t1 - Assets at the beginning of the day

FULL OUTER JOIN @amt_retail t2 - Same-day lending assets

ON t1.cst_id = t2.cst_id

)tt1

FULL OUTER JOIN @amt_buy tt2 - Same-day buying assets

ON tt1.cst_id = tt2.cst_id

;Next, look at the optimized writing:

-- Writing method 1

SELECT

cst_id

,SUM(bal_init_prin) as bal_init_prin

,SUM(amt_retail_prin) as amt_retail_prin

,SUM(amt_buy_prin) as amt_buy_prin

FROM (

SELECT cst_id, bal_init_prin, 0 AS amt_retail_prin, 0 AS amt_buy_prin

FROM @bal_init - Assets at the beginning of the day

union ALL

SELECT cst_id, 0 AS bal_init_prin, amt_retail_prin, 0 AS amt_buy_prin

FROM @amt_retail - Same-day lending assets

UNION ALL

SELECT cst_id, 0 AS bal_init_prin, 0 AS amt_retail_prin, amt_buy_prin

FROM @amt_buy - Same-day buying assets

)t1

GROUP BY

cst_id

;

-- Optimized writing 2

SELECT

cst_id

,SUM(IF(flag = 1, prin, 0)) as bal_init_prin

,SUM(IF(flag = 2, prin, 0)) as amt_retail_prin

,SUM(IF(flag = 3, prin, 0)) as amt_buy_prin

FROM (

SELECT cst_id, bal_init_prin AS prin, 1 AS flag

FROM @bal_init - Assets at the beginning of the day

union ALL

SELECT cst_id, amt_retail_prin AS prin, 2 AS flag

FROM @amt_retail - Same-day lending assets

UNION ALL

SELECT cst_id, amt_buy_prin AS prin, 3 AS flag

FROM @amt_buy - Same-day buying assets

)t1

GROUP BY

cst_id

;Compare the execution plans of join writing and optimized writing (the two execution plans do the same thing, and their task names can be understood as the same, so they will not be explained in detail).

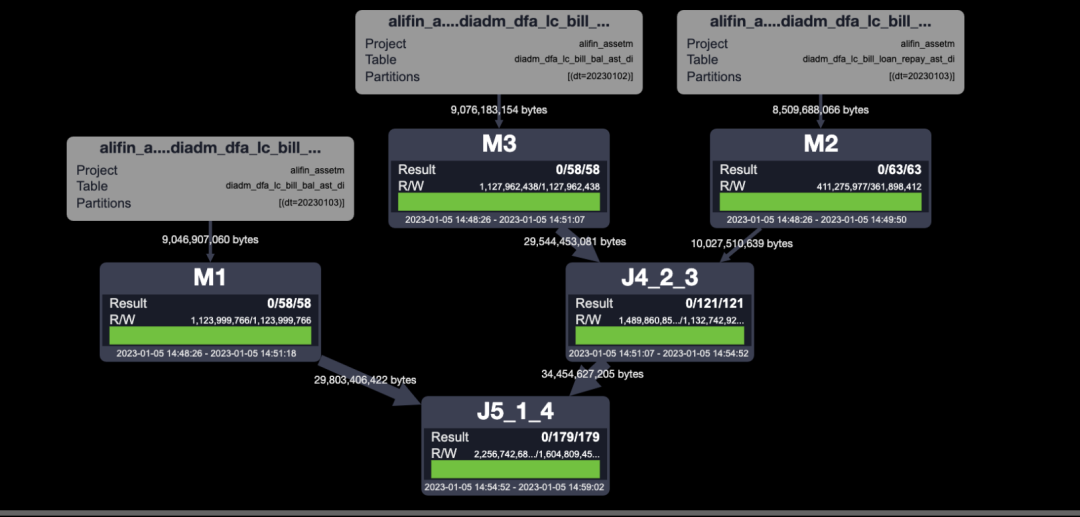

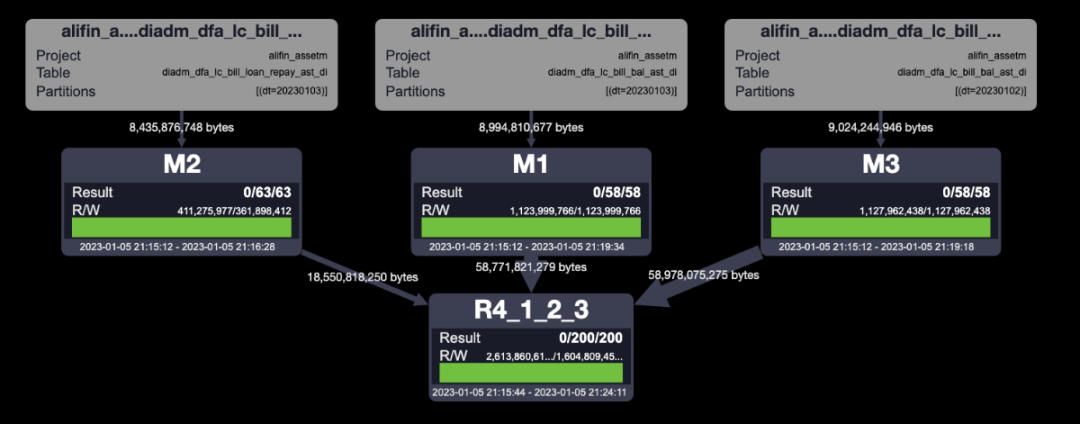

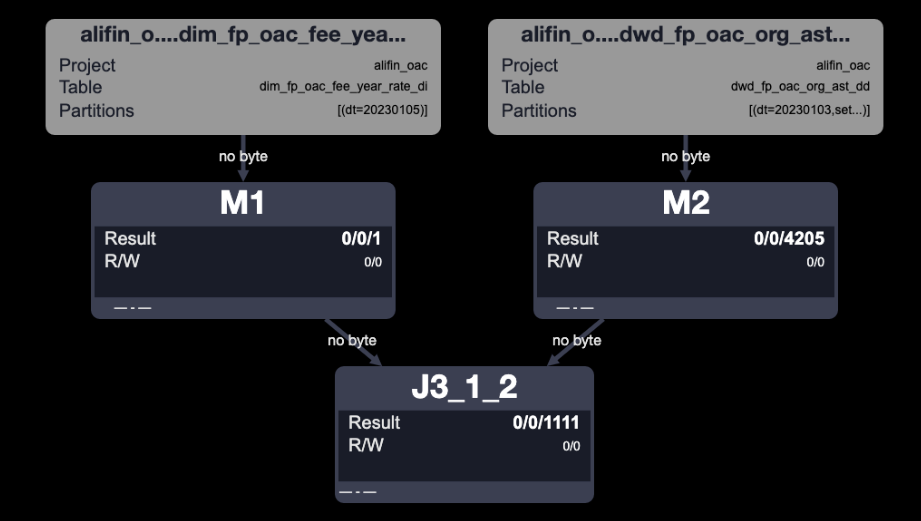

Join Writing:

Optimized Writing:

As shown in the execution plan, join writing requires more execution steps. Multiple shuffles consume more resources, and serial running takes longer. The optimized writing only needs to reduce once after reading all the data. Finally, compare running time and resource consumption. The optimized writing's running time is 20% faster, and resource usage is 30% less. (The more complex the scenario, the better the effect.)

Since JOIN is the most inefficient part of offline data development, it is better to get rid of JOIN.

When multiple tables have the same association key of the int type and aggregate values, the union all + group by method runs faster, saves resources, and simplifies code development and maintenance. In addition, the union all + group by method has more advantages when the number of table rows, the number of associated tables, and the number of associated keys are more.

Regarding the two optimized writings, the second writing is more flexible, easier to be maintained, and occupies fewer resources. However, the first writing is more suitable for scenarios that require placeholder data (such as aggregate map).

In daily development, it is often encountered that attributes are taken from multiple tables of one body (such as data related to customer information). Address in table A, phone number in table B, uv in table C, identity information in table D, and preference in table E.

Assuming that three pieces of data need to be associated to obtain attributes, the common writing is to use full outer join + coalesce twice to associate the values; or first, merge the 3 data bodies and then use left Join three times.

-- This case is similar to the previous case. Merge the bodies first and use left join three times.

SELECT

base.cst_id AS cst_id

,t1.bal_init_prin AS bal_init_prin

,t2.amt_retail_prin AS amt_retail_prin

,t3.amt_buy_prin AS amt_buy_prin

FROM (

SELECT

cst_id

FROM @bal_init - Assets at the beginning of the day

UNION

SELECT

cst_id

FROM @amt_retail - Same-day lending assets

UNION

SELECT

cst_id

FROM @amt_buy - Same-day buying assets

)base

LEFT JOIN @bal_init t1 - Assets at the beginning of the day

ON base.cst_id = t1.cst_id

LEFT JOIN @amt_retail t2 - Same-day lending assets

ON base.cst_id = t2.cst_id

LEFT JOIN @amt_buy t3 - Same-day buying assets

ON base.cst_id = t3.cst_id

;Next, look at the optimized writing:

-- Use JSON to implement the STRING data type.

SELECT

cst_id

,GET_JSON_OBJECT(all_val, '$.bal_init_prin') AS bal_init_prin

,GET_JSON_OBJECT(all_val, '$.amt_retail_prin') AS amt_retail_prin

,GET_JSON_OBJECT(all_val, '$.amt_buy_prin') AS amt_buy_prin

FROM (

SELECT

cst_id

,CONCAT('{',CONCAT_WS(',', COLLECT_SET(all_val)) , '}') AS all_val

FROM (

SELECT

cst_id

,CONCAT('\"bal_init_prin\":\"', bal_init_prin, '\"') AS all_val

FROM @bal_init - Assets at the beginning of the day

UNION ALL

SELECT

cst_id

,CONCAT('\"amt_retail_prin\":\"', amt_retail_prin, '\"') AS all_val

FROM @amt_retail - Same-day lending assets

UNION ALL

SELECT

cst_id

,CONCAT('\"amt_buy_prin\":\"', amt_buy_prin, '\"') AS all_val

FROM @amt_buy - Same-day buying assets

)t1

GROUP BY

cst_id

)tt1

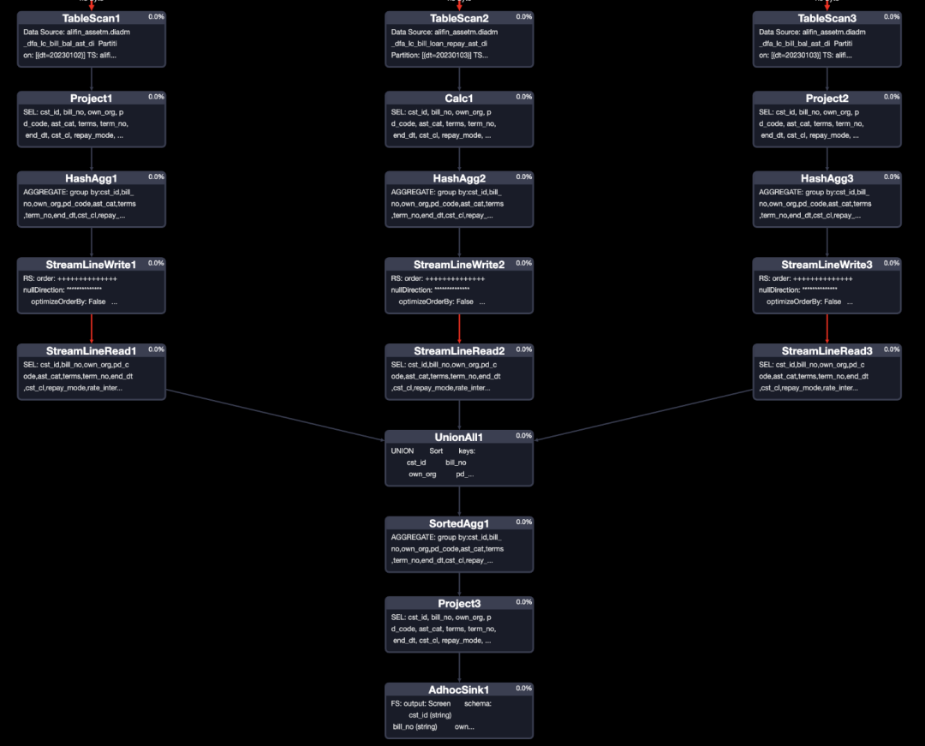

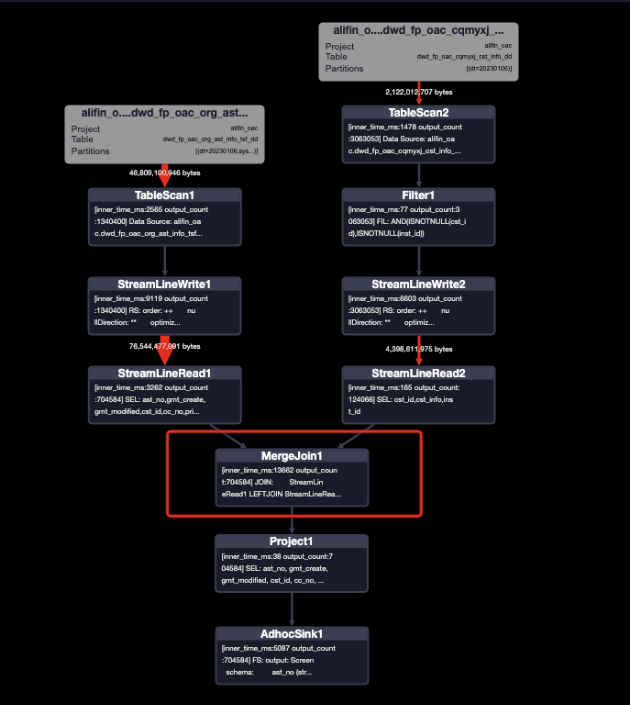

;Compare the execution plans of join writing and optimized writing

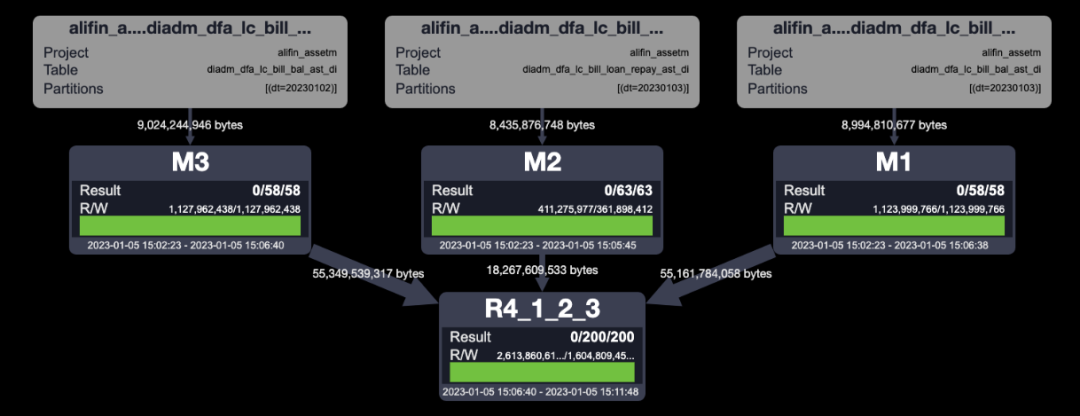

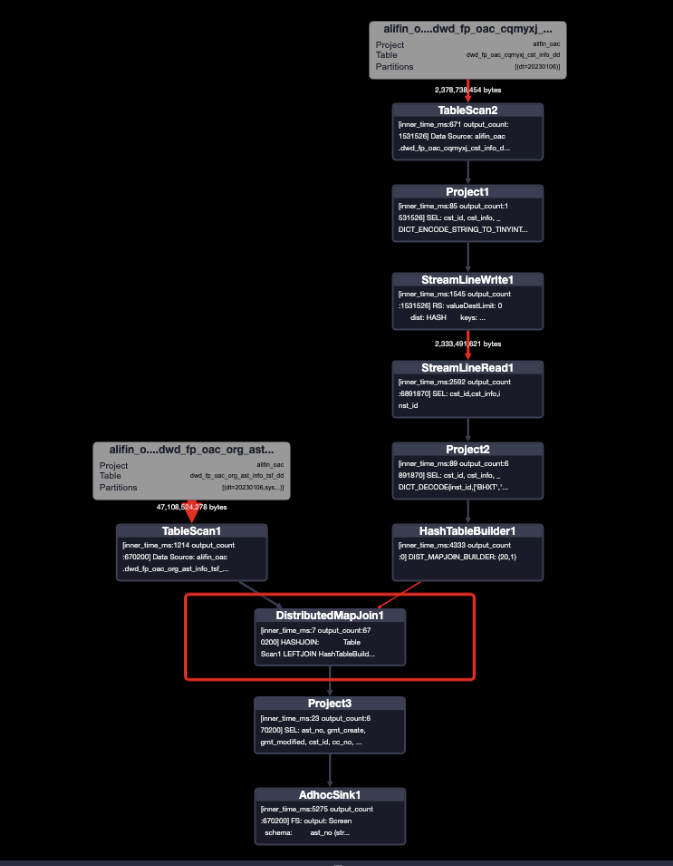

The execution plan of the Join writing:

Optimized Writing:

Compared with the two execution plans, the Join writing is used twice for the data of each table, which are the construction body and the value, so the complexity of each MAP and JOIN task is still high. However, the optimized writing MAP and REDUCE tasks are concise and clear. In addition, as the number of tables increases, the responsibility of JOIN tasks for JOIN writing becomes higher. Comparing running time and resource consumption, the optimized writing runs 20% faster and reduces resource consumption by 20%. (The more complex the scenario, the better the effect.)

Since collect_set is used, you need to consider whether the node has excessive memory and adjust the memory. This scenario does not occur in most cases.

It is the same as the large table join (aggregate type). The difference is that this method applies to the STRING type. Note the memory usage of the collect_set function.

In daily development, we often encounter the situation where large tables join small tables. Mapjoin is a common processing method, but you must pay attention to the writing and adjust the memory parameters of small tables to ensure Mapjoin takes effect.

Currently, ODPS has optimized mapjoin to be automatically enabled. You do not need to manually write /* + mapjoin(a,b)*/ to enable it. Inner join and left join of large tables with small tables can directly make mapjoin effective.

-- Base is a large table and fee_year_rate is a small table.

-- Method 1: inner join

SELECT

base.*

,fee_year_rate.*

FROM @base base

INNER JOIN @fee_year_rate fee_year_rate

ON (base.terms = fee_year_rate.terms)

;

-- Method 2: LEFT join

SELECT

base.*

,fee_year_rate.*

FROM @base base

LEFT JOIN @fee_year_rate fee_year_rate

ON (base.terms = fee_year_rate.terms)

;Mapjoin – ineffective writing:

-- Method 3: right join

SELECT

base.*

,fee_year_rate.*

FROM @base base

RIGHT JOIN @fee_year_rate fee_year_rate

ON (base.terms = fee_year_rate.terms)

;

-- Method 4: full outer join

SELECT

base.*

,fee_year_rate.*

FROM @base base

FULL OUTER JOIN @fee_year_rate fee_year_rate

ON (base.terms = fee_year_rate.terms)

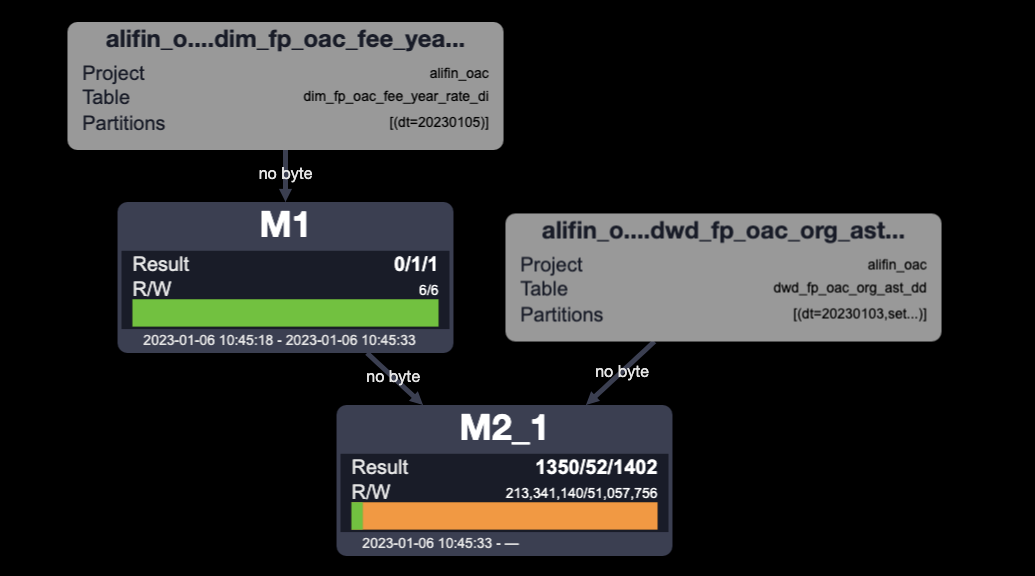

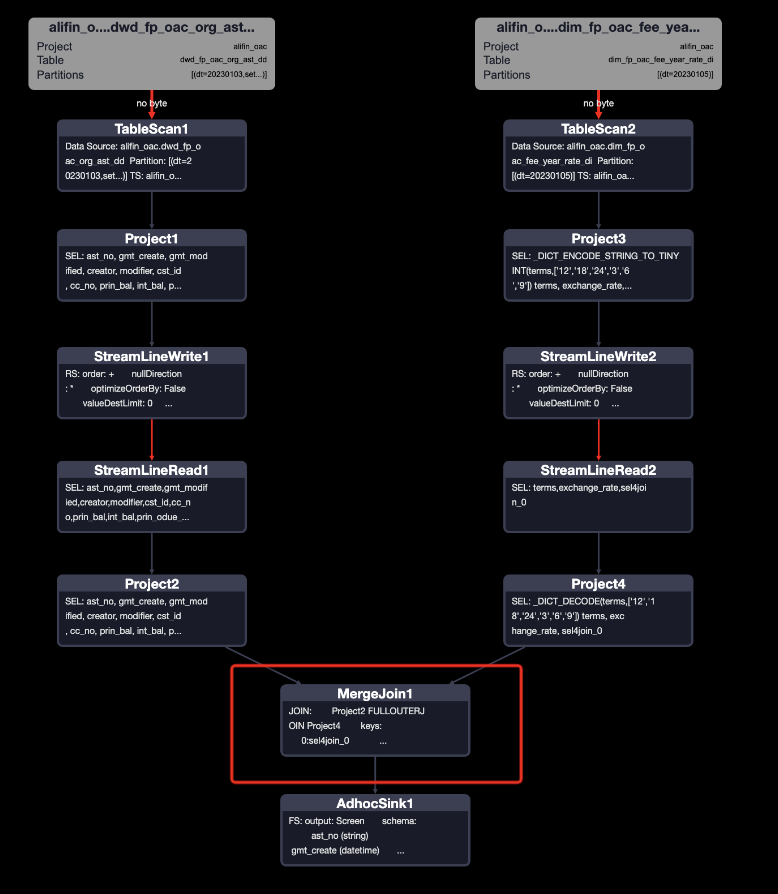

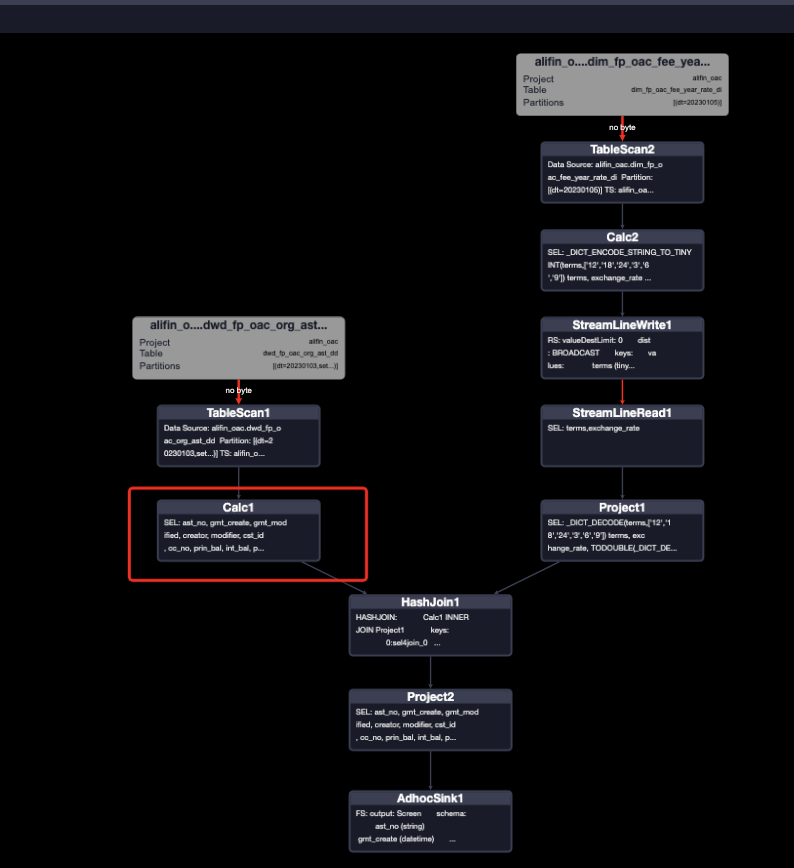

;Compare the execution plan

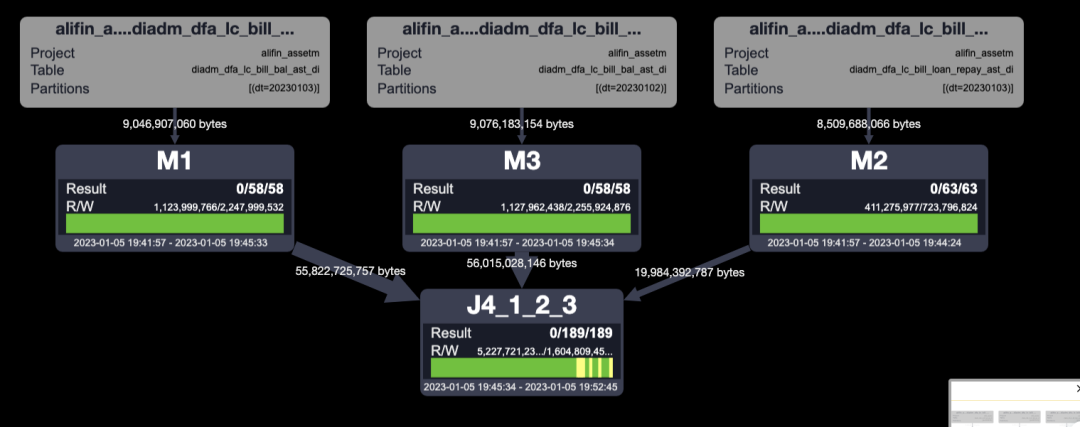

Mapjoin – effective execution plan:

Mapjoin – ineffective execution plan:

Mapjoin simply reads small tables into the memory in the Map phase and scans large tables sequentially to complete the join.

Compare the two execution plans. After mapjoin takes effect, there are only two MAP tasks and no JOIN tasks, which is equivalent to saving one JOIN.

Whether mapjoin takes effect can be determined by whether it is hashjoin or mergejoin.

After mapjoin is enabled, the running efficiency is significantly improved, but it does not take effect because the writing and small table are too large. You must determine from the execution plan and adjust the parameters to ensure mapjoin takes effect.

Small table size tuning parameter: set odps.sql.mapjoin.memory.max=2048 (M)

Small tables often exceed the maximum memory of mapjoin for large and small table join scenarios. Then, mapjoin will not take effect. ODPS provides a solution to store medium-sized tables in the memory, which is called distmapjoin. The usage is similar to mapjoin. You can use the hint /+ distmapjoin((shard_count=,replica_count=)/ in the SELECT statement to perform distmapjoin. shard_count (the number of shards, default [200M,500M]) and replica_count (the number of replicas, default 1) determine the concurrency of a task, which is concurrency=shard_count * replica_count.

Common Writing:

SELECT

base.*

,cst_info.*

FROM @base base

LEFT JOIN @cst_info cst_info

ON (base.cst_id = cst_info.cst_id

AND base.origin_inst_code = cst_info.inst_id)

;Optimized Writing:

SELECT /*+distmapjoin(cst_info(shard_count=20))*/

base.*

,cst_info.*

FROM @base base

LEFT JOIN @cst_info cst_info

ON (base.cst_id = cst_info.cst_id

AND base.origin_inst_code = cst_info.inst_id)

;Compare Execution Plans

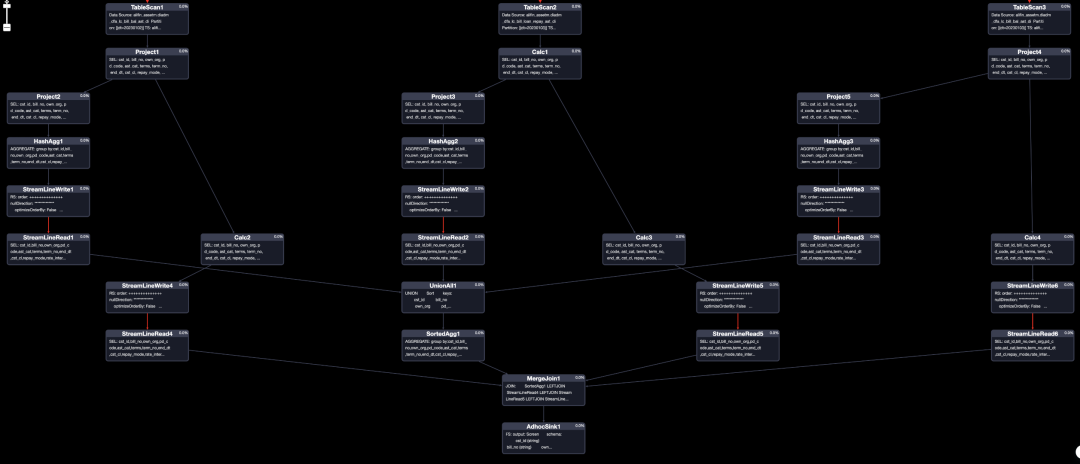

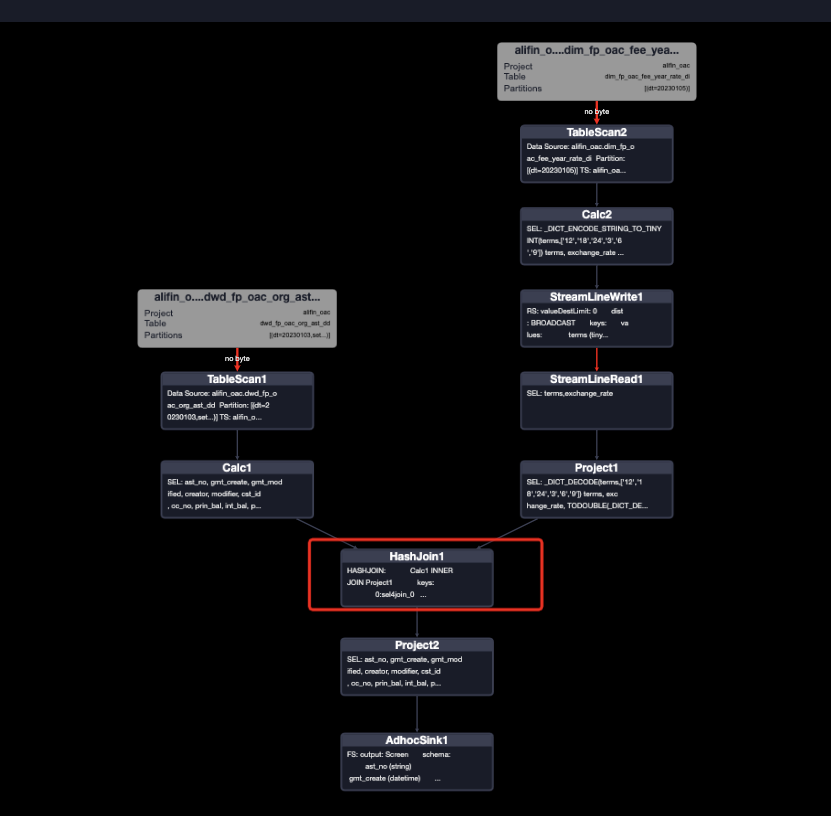

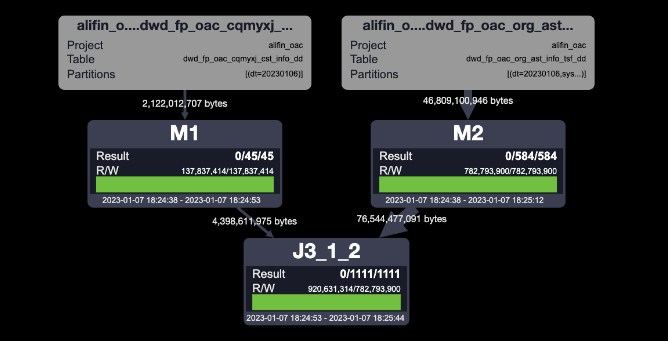

Common Writing:

Optimized Writing:

After comparing the two execution plans with the mapjoin execution plan, we found that the optimized writing eliminates the JOIN task, which speeds up the running and reduces resource consumption. The distmapjoin writing has one more REDUCE task than the mapjoin writing, which is the sharding of small tables.

Whether distmapjoin takes effect can be determined by if it is DistributedMapJoin1 or MergeJoin.

The same as mapjoin summary

In daily development, we habitually follow the filter criteria after the read table, which can reduce the amount of data to reduce task running time.

The standard writing of filter conditions after reading tables and the non-standard writing of filter conditions after multi-table join:

-- Standard writing

SELECT

base.*

,fee_year_rate.*

FROM (

SELECT *

FROM @base

where terms = '12'

)base

INNER JOIN @fee_year_rate fee_year_rate

ON (base.terms = fee_year_rate.terms)

;

-- Non-standard writing

SELECT

base.*

,fee_year_rate.*

FROM @base base

INNER JOIN @fee_year_rate fee_year_rate

ON (base.terms = fee_year_rate.terms)

WHERE base.terms = '12'

;In my impression, the operation efficiency of the standard writing will be higher. If you look at the execution plans, you will find that the execution plans of the two writing methods are the same, and both are filtered before Join.

ODPS has optimized the preposition of predicates, but daily development tries to follow the filter conditions after the reading table, which is more standardized, and the code will have better readability.

You must learn to read the execution plan to do a good job in SQL development and optimization. Practicing more can help you quickly master this skill. (The execution plan mentioned in this article will change with the optimization of ODPS.)

1 posts | 0 followers

FollowAlibaba Cloud Community - June 9, 2023

Aliware - April 10, 2020

Alibaba Clouder - December 21, 2020

Alibaba Cloud MaxCompute - May 5, 2019

Apache Flink Community China - June 15, 2021

Alibaba Cloud Native Community - October 10, 2025

1 posts | 0 followers

Follow ApsaraDB RDS for SQL Server

ApsaraDB RDS for SQL Server

An on-demand database hosting service for SQL Server with automated monitoring, backup and disaster recovery capabilities

Learn More