By Zhucha

Problems Encountered by a ReplicaSet

A ReplicaSet helps address issues such as read scaling and high availability. However, as business workloads grow, the following issues may arise:

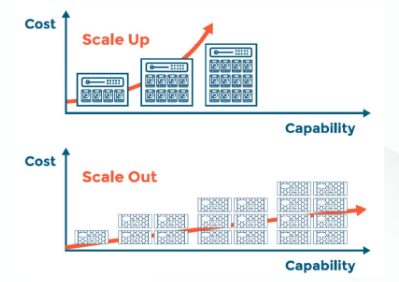

Scale Up Compared to Scale Out

What Is a MongoDB Sharded Cluster?

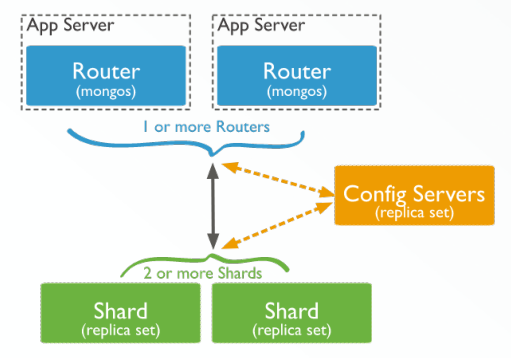

Mongos

ConfigServer

Shard

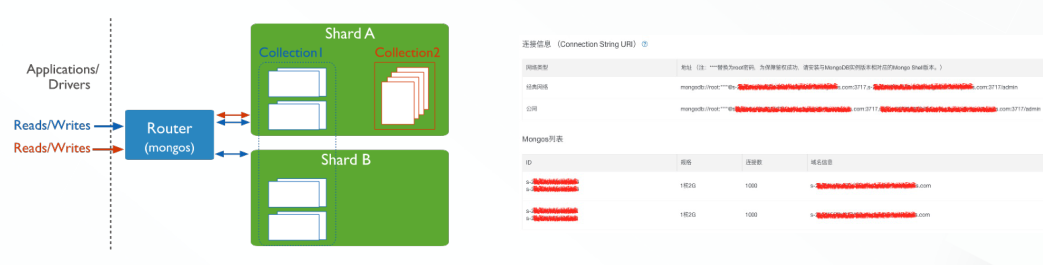

In a sharded cluster, drivers connect to mongos instances to interact with the entire cluster. mongos then routes each client request to the appropriate shards at the backend. For example, to read and write Collection 1, mongos interacts with Shard A and Shard B; to read and write Collection 2, mongos interacts with Shard A only.

The following figure shows a sharded cluster instance of Alibaba Cloud ApsaraDB for MongoDB. The connection address of each mongos instance is listed, and a ConnectionStringURI is assembled from these addresses. Connecting to a single mongos instance introduces a single point of failure, so we recommend that you access the cluster through the ConnectionStringURI.

The ConnectionStringURI consists of the following parts:

mongodb://[username:password@]host1[:port1][,host2[:port2],...[,hostN[:portN]]][/[database][?options]]

mongodb://: The prefix, indicating a connection string URI.

username:password@: The username and password used to connect to the MongoDB instance, separated by a colon.

hostX:portX: The connection address and port number of each instance.

/database: The authentication database, that is, the database to which the database account belongs.

?options: Additional connection options.

Example: mongodb://user:password@mongos1:3717,mongos2:3717/admin

In the ConnectionStringURI above, the username is user and the password is password. The client connects to mongos1 and mongos2, both on port 3717, and the authentication database is admin.
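To show how the parts listed above fit together, the following minimal JavaScript sketch splits a ConnectionStringURI into its components. This is not how MongoDB drivers parse the URI. parseConnectionString is a hypothetical helper, and it assumes that credentials contain no reserved characters (no percent-encoding), that the mongodb+srv:// scheme is not used, and that hosts are not IPv6 literals.

```javascript
// Hypothetical helper: split a mongodb:// ConnectionStringURI into its parts.
// Assumptions: no percent-encoded credentials, no mongodb+srv://, no IPv6 hosts.
function parseConnectionString(uri) {
  const prefix = "mongodb://";
  if (!uri.startsWith(prefix)) {
    throw new Error("not a mongodb:// connection string URI");
  }
  let rest = uri.slice(prefix.length);

  // ?options: key=value pairs after the first "?", separated by "&"
  const options = {};
  const q = rest.indexOf("?");
  if (q !== -1) {
    for (const pair of rest.slice(q + 1).split("&")) {
      if (!pair) continue;
      const [k, v = ""] = pair.split("=");
      options[k] = v;
    }
    rest = rest.slice(0, q);
  }

  // /database: the authentication database after the first "/"
  let database = null;
  const slash = rest.indexOf("/");
  if (slash !== -1) {
    database = rest.slice(slash + 1) || null;
    rest = rest.slice(0, slash);
  }

  // username:password@: credentials before the last "@"
  let username = null;
  let password = null;
  const at = rest.lastIndexOf("@");
  if (at !== -1) {
    const cred = rest.slice(0, at);
    const colon = cred.indexOf(":");
    username = colon === -1 ? cred : cred.slice(0, colon);
    password = colon === -1 ? null : cred.slice(colon + 1);
    rest = rest.slice(at + 1);
  }

  // hostX:portX, comma-separated; 27017 is MongoDB's default port
  const hosts = rest.split(",").map((h) => {
    const [host, port] = h.split(":");
    return { host, port: port ? Number(port) : 27017 };
  });

  return { username, password, hosts, database, options };
}

const parsed = parseConnectionString(
  "mongodb://user:password@mongos1:3717,mongos2:3717/admin"
);
console.log(parsed);
```

Listing both mongos hosts in one URI gives the driver more than one entry point, which is why the article recommends this URI over a single mongos address.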

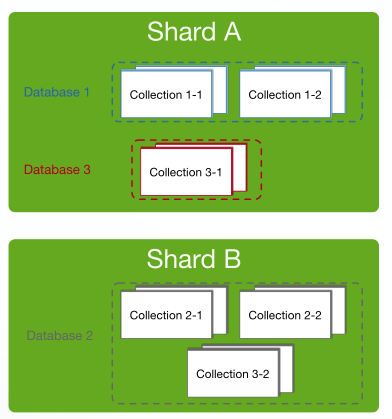

Primary Shard Definition

By default, the collections in each database are not sharded. They are stored on a single fixed shard, which is called the primary shard of that database.

Primary Shard Selection

When a new database is created, the system checks how much data each shard currently stores and selects the shard with the least data as the primary shard of the new database.
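The selection rule above can be sketched in a few lines of JavaScript. The dataSizeBytes field and the choosePrimaryShard helper are hypothetical stand-ins for the per-shard statistics the cluster actually consults. The sketch assumes that ties go to the first shard in the list.

```javascript
// Hypothetical helper: pick the shard that currently stores the least data.
// Ties are resolved in favor of the earlier shard in the list (an assumption).
function choosePrimaryShard(shards) {
  if (shards.length === 0) throw new Error("cluster has no shards");
  let best = shards[0];
  for (const shard of shards.slice(1)) {
    if (shard.dataSizeBytes < best.dataSizeBytes) best = shard;
  }
  return best.name;
}

console.log(
  choosePrimaryShard([
    { name: "shardA", dataSizeBytes: 500e9 },
    { name: "shardB", dataSizeBytes: 120e9 },
    { name: "shardC", dataSizeBytes: 300e9 },
  ])
); // shardB
```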

ApsaraDB for MongoDB supports collection-level data sharding. A sharded collection is split into multiple chunks, which are stored across the shards.

sh.enableSharding("<database>")

Sharding Key

The following example illustrates this.

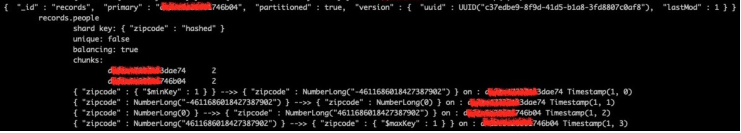

Example: sh.shardCollection("records.people", { zipcode: 1 } ) shards the records.people collection using ranged sharding on the zipcode field.

Ranged Sharding Compared to Hashed Sharding

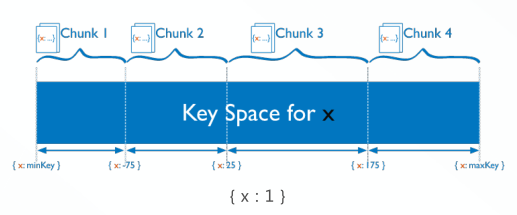

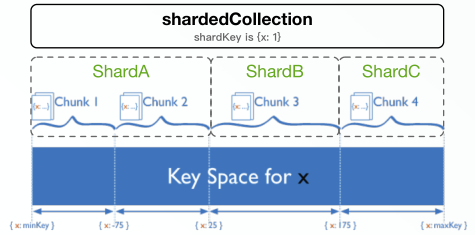

The preceding figure shows ranged sharding on x. The data is divided into four parts, with split points at x: -75, x: 25, and x: 175. Documents with similar shard key values are stored next to each other, which serves range queries well. However, if documents are written with a monotonically increasing shard key, write capacity cannot scale out, because all writes land in the last chunk.
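The trade-off described above can be seen in a small JavaScript sketch that uses the split points from the figure. Chunk indexes 0 to 3 correspond to the four chunks. minKey and maxKey are modeled implicitly as the open ends of the first and last ranges. rangedChunkFor and chunksForRange are hypothetical helpers for illustration, not MongoDB functions.

```javascript
// Split points from the figure: x = -75, 25, 175.
// Chunks are half-open ranges [lower, upper); index 0 is the first chunk.
const SPLIT_POINTS = [-75, 25, 175];

// Return the index of the chunk whose range contains x.
function rangedChunkFor(x, splitPoints = SPLIT_POINTS) {
  let i = 0;
  while (i < splitPoints.length && x >= splitPoints[i]) i++;
  return i;
}

// Range query lo <= x <= hi: only the chunks overlapping that range are read,
// because adjacent values are stored in the same or neighboring chunks.
function chunksForRange(lo, hi, splitPoints = SPLIT_POINTS) {
  const chunks = [];
  const last = rangedChunkFor(hi, splitPoints);
  for (let c = rangedChunkFor(lo, splitPoints); c <= last; c++) chunks.push(c);
  return chunks;
}

// Monotonically increasing keys all fall into the last chunk.
const writes = [1000, 1001, 1002, 1003].map((x) => rangedChunkFor(x));
console.log(writes); // every write goes to chunk 3
console.log(chunksForRange(0, 30)); // chunks 1 and 2 only
```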

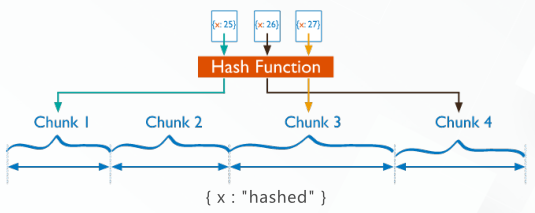

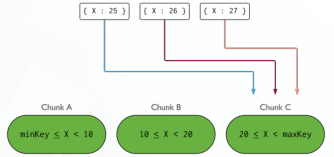

As shown in the figure above, after hashing, x: 25, x: 26, and x: 27 are scattered across different chunks. Hashing breaks the ordering of the shard key. As a result, writes with a monotonically increasing shard key are spread across shards and write capacity scales out. Range queries, however, become inefficient, because adjacent values no longer reside in the same chunk.
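The following JavaScript sketch contrasts this with the ranged example. It makes two explicit simplifications. First, a 32-bit FNV-1a hash of the key's decimal string stands in for MongoDB's own 64-bit hash function. Second, the chunk is chosen as hash % chunkCount, whereas MongoDB assigns ranges of hashed values to chunks. fnv1a32 and hashedChunkFor are hypothetical helpers.

```javascript
// 32-bit FNV-1a hash (a stand-in for MongoDB's real hash function).
function fnv1a32(str) {
  let h = 0x811c9dc5; // FNV offset basis
  for (let i = 0; i < str.length; i++) {
    h ^= str.charCodeAt(i);
    h = Math.imul(h, 0x01000193) >>> 0; // multiply by the FNV prime, keep 32 bits
  }
  return h >>> 0;
}

// Simplification: chunk index = hash modulo the number of chunks.
function hashedChunkFor(x, chunkCount = 4) {
  return fnv1a32(String(x)) % chunkCount;
}

// Consecutive keys are scattered across chunks, so monotonic writes spread out.
console.log([25, 26, 27].map((x) => hashedChunkFor(x)));
// A range query such as 25 <= x <= 27 must now visit every chunk those keys hashed to.
```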

Hashed sharding supports only a single hashed field:

{ x : "hashed" }

{ x : 1 , y : "hashed" } // new in 4.4

Starting from version 4.4, ApsaraDB for MongoDB supports compound shard keys that combine a single hashed field with one or more ranged fields. For example, in { x : 1, y : "hashed" }, x is ranged and y is hashed.

Cardinality: the higher, the better.

For example, suppose a log collection uses the log generation time as its shard key. This value increases monotonically, so with ranged sharding all data is written only to the last shard.

The shard key must be backed by an index. For a non-empty collection, you must create the index before running shardCollection. For an empty collection, shardCollection creates the index automatically.
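The index requirement can be illustrated with a simplified JavaScript check. In this sketch, an index supports the shard key if its key pattern starts with the shard key fields, in the same order and with the same values (1 or "hashed"). hasSupportingIndex is a hypothetical helper. It deliberately ignores the additional index restrictions that MongoDB enforces.

```javascript
// Hypothetical check: does any index key pattern have the shard key as a prefix?
// Simplified: ignores the extra index restrictions MongoDB also enforces.
function hasSupportingIndex(indexKeyPatterns, shardKey) {
  const keyFields = Object.entries(shardKey);
  return indexKeyPatterns.some((pattern) => {
    const indexFields = Object.entries(pattern);
    return (
      indexFields.length >= keyFields.length &&
      keyFields.every(
        ([field, type], i) => indexFields[i][0] === field && indexFields[i][1] === type
      )
    );
  });
}

const indexes = [{ _id: 1 }, { zipcode: 1, name: 1 }];
console.log(hasSupportingIndex(indexes, { zipcode: 1 })); // true
console.log(hasSupportingIndex(indexes, { name: 1 })); // false: create the index first
```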

Before version 4.4:

After version 4.4:

Before version 4.2, the shard key value of a document cannot be modified. Starting from version 4.2, the shard key value of a document can be modified, unless the shard key field is the immutable _id field.

Version 4.4 adds a command that lets you refine a shard key by adding suffix fields to the existing shard key:

db.adminCommand( {
  refineCollectionShardKey: "<database>.<collection>",
  key: { <existing key specification>, <suffix1>: <1|"hashed">, ... }
} )

Instructions for refineCollectionShardKey
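The constraint on the new key can be sketched in JavaScript. The existing shard key must be a prefix of the new key. Each added suffix field is either 1 or "hashed", and the sketch also enforces at most one hashed field. isValidRefinement is a hypothetical helper. It does not check the index that must support the refined key.

```javascript
// Hypothetical check of a refineCollectionShardKey request.
// Does not verify that a supporting index exists for the new key.
function isValidRefinement(currentKey, newKey) {
  const cur = Object.entries(currentKey);
  const next = Object.entries(newKey);
  if (next.length <= cur.length) return false; // must add at least one suffix field
  const prefixMatches = cur.every(([f, t], i) => next[i][0] === f && next[i][1] === t);
  const suffixOk = next.slice(cur.length).every(([, t]) => t === 1 || t === "hashed");
  const hashedCount = next.filter(([, t]) => t === "hashed").length;
  return prefixMatches && suffixOk && hashedCount <= 1;
}

console.log(isValidRefinement({ customer_id: 1 }, { customer_id: 1, order_id: 1 })); // true
console.log(isValidRefinement({ customer_id: 1 }, { order_id: 1, customer_id: 1 })); // false
```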

Targeted Operations Compared to Broadcast Operations

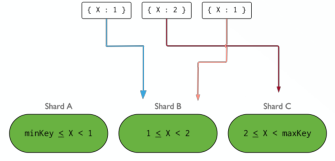

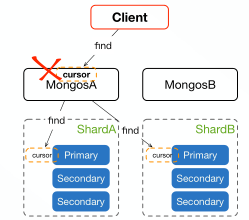

Based on the shard key information in a request, mongos forwards the request in one of two ways: as a targeted operation or as a broadcast operation.

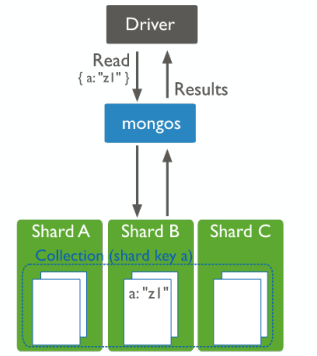

As shown in the figure above, suppose a is the shard key. If the request contains field a, mongos can identify the target shard. If that shard is Shard B, mongos interacts directly with Shard B, gets the result, and returns it to the client.

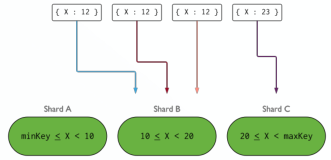

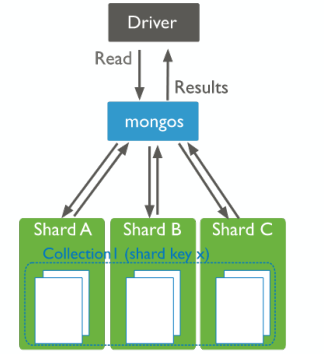

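If the request does not contain the shard key, mongos cannot identify a target shard. It therefore broadcasts the request to all shards, merges their results, and returns the merged result to the client. The following figure shows the broadcast operation procedure.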

The preceding figure shows ranged sharding on x. The data is divided into four chunks, each a left-closed, right-open interval: Chunk 1: [minKey, -75); Chunk 2: [-75, 25); Chunk 3: [25, 175); Chunk 4: [175, maxKey). Shard A holds Chunk 1 and Chunk 2, while Shard B and Shard C hold Chunk 3 and Chunk 4, respectively.
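The following JavaScript sketch combines this chunk layout with the targeted and broadcast routing described earlier. It assumes that minKey and maxKey can be modeled as -Infinity and Infinity, and that only an equality condition on the shard key x is used for targeting. route and CHUNKS are hypothetical names, not part of mongos.

```javascript
// Chunk layout from the figure: shard key x, half-open ranges [min, max).
const CHUNKS = [
  { min: -Infinity, max: -75, shard: "ShardA" }, // Chunk 1
  { min: -75, max: 25, shard: "ShardA" }, // Chunk 2
  { min: 25, max: 175, shard: "ShardB" }, // Chunk 3
  { min: 175, max: Infinity, shard: "ShardC" }, // Chunk 4
];

// Decide whether a query can be targeted or must be broadcast.
function route(query, shardKeyField = "x", chunks = CHUNKS) {
  const allShards = [...new Set(chunks.map((c) => c.shard))];
  if (!(shardKeyField in query)) {
    // No shard key in the request: send it to every shard and merge results.
    return { type: "broadcast", shards: allShards };
  }
  const v = query[shardKeyField];
  const chunk = chunks.find((c) => v >= c.min && v < c.max);
  if (!chunk) throw new Error(`no chunk covers ${shardKeyField}=${v}`);
  return { type: "targeted", shards: [chunk.shard] };
}

console.log(route({ x: 100 })); // targeted to ShardB
console.log(route({ y: 1 })); // broadcast to ShardA, ShardB and ShardC
```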

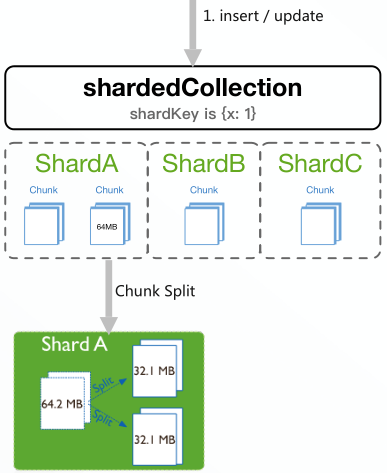

As data is written, a chunk that grows to the specified size (64 MB by default) is split by ApsaraDB for MongoDB.

Manual trigger

Automatic trigger: Automatic chunk splits can be triggered only by operations such as insert and update. Reducing the chunk size does not cause existing chunks to split immediately.

The following figure shows this process.

Chunk split management includes manual chunk splits and chunk size adjustment.

Scenario: The business needs to insert a large amount of data into a collection whose data is distributed across only a small number of chunks.

Inserting the data directly cannot use multiple shards for concurrent writes. Chunks are split only after the data has been inserted, which then triggers chunk migrations and generates a large amount of inefficient I/O. Splitting the chunks manually before the insert avoids this.

sh.splitAt(namespace, query): Splits a chunk at the specified split point.

Example: x was [0, 100). After sh.splitAt(ns, {x: 70}), the chunk becomes [0, 70) and [70, 100).

sh.splitFind(namespace, query): Finds the chunk that matches the query and splits it at its median point.

Example: x was [0, 100). After sh.splitFind(ns, {x: 70}), the chunk becomes [0, 50) and [50, 100).
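The difference between the two split helpers can be simulated on a list of half-open chunks in JavaScript. splitAt, splitFind and findChunk here are hypothetical local functions that mimic the behavior described above; they are not the shell helpers themselves. The sketch assumes that splitFind takes the median of the shard key values of the documents in the chunk, passed in as the hypothetical docKeys array.

```javascript
// Chunks are { min, max } half-open ranges sorted by min.
function findChunk(chunks, value) {
  const i = chunks.findIndex((c) => value >= c.min && value < c.max);
  if (i === -1) throw new Error(`no chunk contains ${value}`);
  return i;
}

// Like sh.splitAt: split the chunk containing `value` exactly at `value`.
function splitAt(chunks, value) {
  const i = findChunk(chunks, value);
  const c = chunks[i];
  if (value === c.min) return chunks; // already a chunk boundary
  return [
    ...chunks.slice(0, i),
    { min: c.min, max: value },
    { min: value, max: c.max },
    ...chunks.slice(i + 1),
  ];
}

// Like sh.splitFind: find the chunk containing `value`, split it at the median key.
function splitFind(chunks, value, docKeys) {
  const c = chunks[findChunk(chunks, value)];
  const inChunk = docKeys.filter((k) => k >= c.min && k < c.max).sort((a, b) => a - b);
  if (inChunk.length < 2) return chunks; // nothing to split
  const median = inChunk[Math.floor(inChunk.length / 2)];
  return splitAt(chunks, median);
}

const start = [{ min: 0, max: 100 }];
const docs = Array.from({ length: 100 }, (_, i) => i); // keys 0..99, evenly spread
console.log(splitAt(start, 70)); // [0, 70) and [70, 100)
console.log(splitFind(start, 70, docs)); // [0, 50) and [50, 100)
```

With evenly spread keys, the median of 0 to 99 is 50, which reproduces the article's splitFind example. With skewed data, the split point would follow the data rather than the midpoint of the range.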

Example: use config; db.settings.save( { _id:"chunksize", value: } );

To adjust the chunk size, save a document whose _id is "chunksize" into the settings collection of the config database, as in the example above. The value is specified in MB.

Notes:

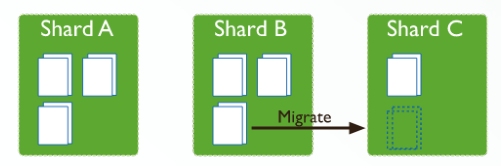

To keep data load balanced, ApsaraDB for MongoDB supports migrating data between shards. This is called chunk migration.

Chunk migration methods:

sh.moveChunk(namespace, query, destination)

Example: sh.moveChunk("records.people", { zipcode: "53187" }, "shard0019") moves the chunk that contains zipcode "53187" to shard0019.

Chunk migration influence:

Chunk migration constraints:

| Number of chunks | Migration threshold |
| --- | --- |
| Fewer than 20 | 2 |
| 20-79 | 4 |
| 80 or more | 8 |

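As shown in the table above, when a collection has fewer than 20 chunks, the migration threshold is 2. As the number of chunks grows, the threshold increases to 4 and then to 8. The balancer migrates chunks only when the difference in chunk count between the shard with the most chunks and the shard with the fewest reaches the threshold.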
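The threshold rule can be expressed as a short JavaScript sketch. migrationThreshold reproduces the table. needsMigration applies the rule to a hypothetical map of shard name to chunk count for a single collection. Both are illustrative helpers, not balancer internals.

```javascript
// Migration threshold from the table, based on the collection's total chunk count.
function migrationThreshold(totalChunks) {
  if (totalChunks < 20) return 2;
  if (totalChunks < 80) return 4;
  return 8;
}

// chunkCounts: hypothetical map of shard name -> number of chunks of one collection.
function needsMigration(chunkCounts) {
  const counts = Object.values(chunkCounts);
  const total = counts.reduce((a, b) => a + b, 0);
  const gap = Math.max(...counts) - Math.min(...counts);
  return gap >= migrationThreshold(total);
}

console.log(needsMigration({ shardA: 10, shardB: 8 })); // true: 18 chunks, gap 2 >= 2
console.log(needsMigration({ shardA: 30, shardB: 27 })); // false: 57 chunks, gap 3 < 4
```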

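sh.enableAutoSplit() / sh.disableAutoSplit(): enable or disable automatic chunk splitting.

sh.getBalancerState(): returns whether the balancer is enabled.

sh.isBalancerRunning(): reports whether the balancer is currently migrating chunks.

sh.startBalancer() / sh.setBalancerState(true): enable the balancer.

sh.stopBalancer() / sh.setBalancerState(false): disable the balancer.

sh.enableBalancing(namespace) / sh.disableBalancing(namespace): enable or disable balancing for a single collection.

To allow chunk migration only within a time window, set activeWindow on the balancer document in the config database:

use config;
db.settings.updateOne(
  { _id: "balancer" },
  { $set: { activeWindow : { start : "<start-time>", stop : "<stop-time>" } } },
  { upsert: true }
);

JumboChunk definition: A jumbo chunk is a chunk that has grown beyond the configured chunk size but cannot be split. This typically happens because all of its documents share a single shard key value, which is the smallest range a chunk can cover.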

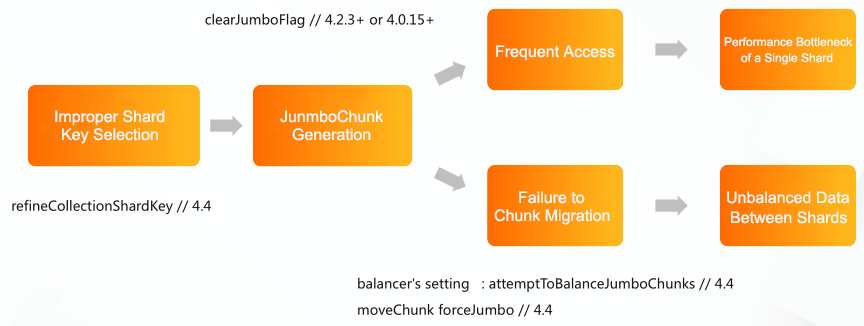

JumboChunk generation: Jumbo chunks are typically generated when the shard key is poorly chosen, for example when it has low cardinality. If these chunks are frequently accessed, they create a performance bottleneck on a single shard. In addition, the balancer cannot migrate jumbo chunks, so the data remains unbalanced across shards.
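The following JavaScript sketch shows why a poorly chosen shard key leads to jumbo chunks. A chunk over the size limit is split if a split point exists. If every document in it has the same shard key value, no split point exists and the chunk is flagged as jumbo. classifyChunk is a hypothetical helper. It assumes sizes in MB with the 64 MB default limit, and it takes the shard key values of the chunk's documents as a plain array.

```javascript
// Hypothetical classification of a chunk by size and shard key diversity.
function classifyChunk(shardKeys, sizeMB, maxChunkSizeMB = 64) {
  if (sizeMB <= maxChunkSizeMB) return "ok";
  const distinct = new Set(shardKeys).size;
  return distinct > 1 ? "split" : "jumbo";
}

console.log(classifyChunk(["2024-01-01", "2024-01-02"], 80)); // split
console.log(classifyChunk(["CN", "CN", "CN"], 80)); // jumbo: low-cardinality key
```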

These problems have been gradually addressed as ApsaraDB for MongoDB has evolved. For example, version 4.4 introduces the refineCollectionShardKey command, which lets you refine the shard key. The same version also adds balancer settings and moveChunk options that support migrating jumbo chunks.

Recent minor releases of 4.2 and 4.0 also provide a command (clearJumboFlag) for clearing the jumbo flag on chunks in a sharded cluster.

Balancer

sh.setBalancerState(state) (true: sh.startBalancer(); false: sh.stopBalancer())
sh.getBalancerState()
sh.isBalancerRunning()
sh.disableBalancing(namespace)
sh.enableBalancing(namespace)

Chunk

sh.disableAutoSplit()
sh.enableAutoSplit()
sh.moveChunk( … )
sh.splitAt( … )
sh.splitFind( … )

Sharding

sh.shardCollection()
sh.enableSharding()

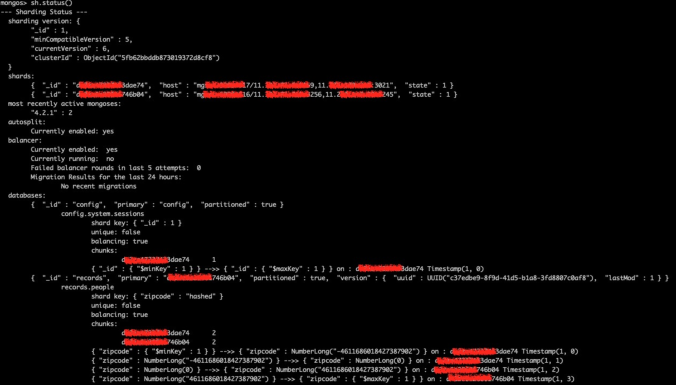

The figure shows the sharding status of the cluster as reported by sh.status(), which includes the following information:

Sharding version: The version of the sharded cluster instance.

Shards: There are currently two shards in the sharded cluster, each with a name, link information, and current status.

Most recently active mongos: There are currently two mongos instances of version 4.2.1 in the sharded cluster.

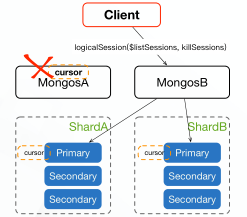

Starting from version 3.6, ApsaraDB for MongoDB drivers associate all operations with a LogicalSession.

For versions 3.4 and earlier, as shown in the following figure:

For versions 3.6 and later, as shown in the following figure:

{

// The unique identifier. Either generated by the client or by the server


"id" : UUID("32415755-a156-4d1c-9b14-3c372a15abaf"),

// Current Login User ID

"uid" : BinData(0,"47DEQpj8HBSa+/TImW+5JCeuQeRkm5NMpJWZG3hSuFU=")

}

Self-cleaning mechanism

Operation methods

use config;
db.system.sessions.aggregate( [ { $listSessions: { allUsers: true } } ] )
db.runCommand( { killSessions: [ { id : }, ... ] } )
startSession / refreshSessions / endSessions ...
