By Yu Renjie, Product Expert of SOFAStack at Ant Financial

From February 19 to February 26, Ant Financial live-streamed a series of digital classes themed "Fight Against the Epidemic with a Breakthrough in Technologies". Senior experts from Ant Financial shared their practical experience with cloud native, development efficiency, and databases, and answered questions online. They analyzed how the Platform-as-a-Service (PaaS) architecture is implemented in financial scenarios and how Alipay's elastic, dynamic architecture works on mobile devices. They also shared the features and practices of OceanBase 2.2. We have compiled these talks into a series of articles posted on the WeChat official account "Ant Financial Technology" (WeChat ID: Ant-Techfin). You are welcome to follow the account and read them.

In this blog, we recap the talk given in Ant Financial's digital classroom by Yu Renjie, product expert of SOFAStack at Ant Financial, on the practices of building a cloud-native application PaaS architecture at Ant Financial.

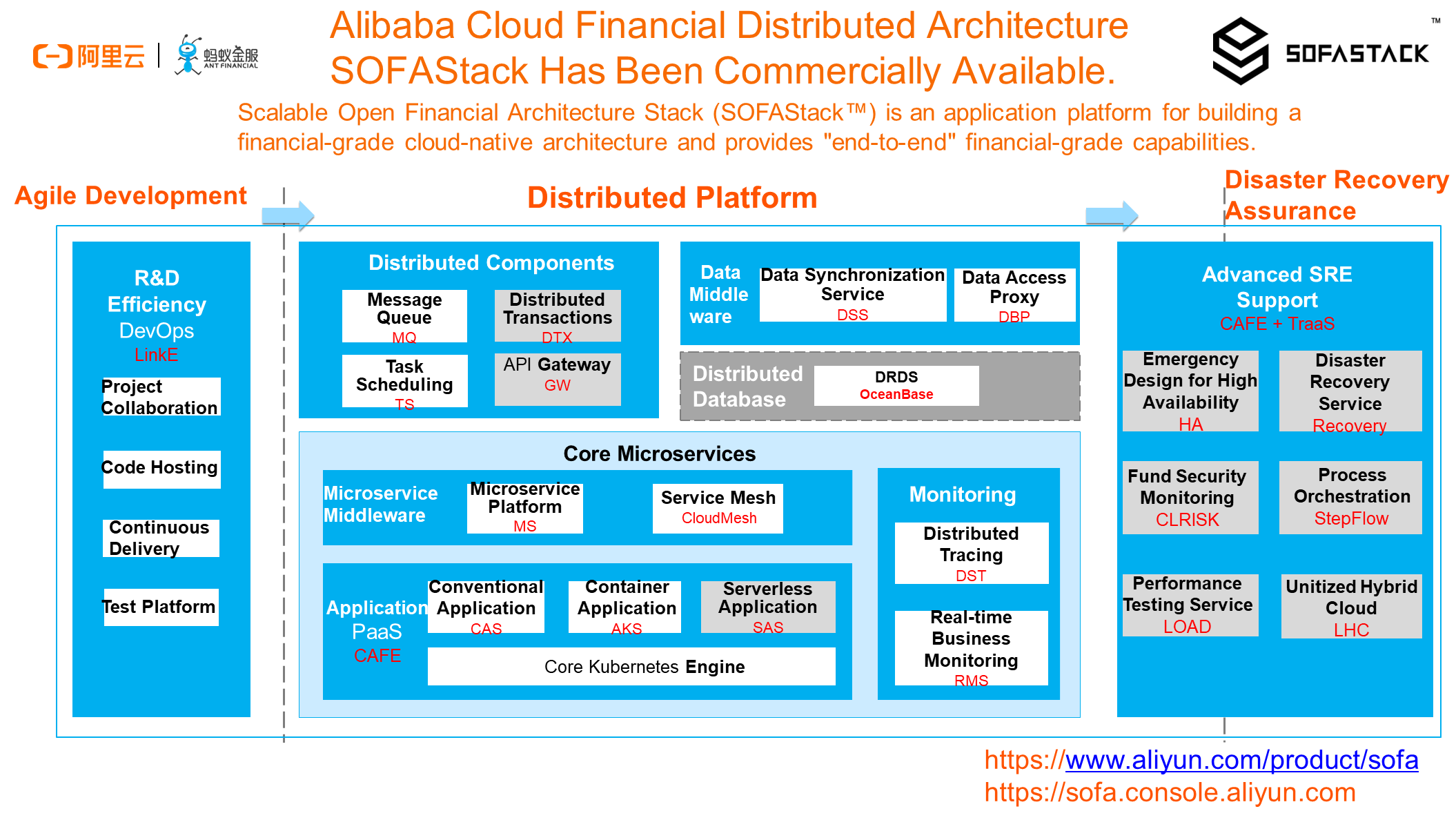

Hello, everyone. Welcome to Ant Financial's digital livestreaming classroom. In February, we commercially released our Scalable Open Financial Architecture Stack (SOFAStack) on Alibaba Cloud. To help more people understand the capabilities, positioning, and design ideas behind this financial-grade distributed architecture, we will hold a series of livestreamed classes about it. Today's topic is Practices of Building the Cloud-native Application PaaS Architecture. It focuses on how to apply PaaS product capabilities in financial scenarios where innovation must proceed steadily, so that you can better connect PaaS products to a cloud-native architecture.

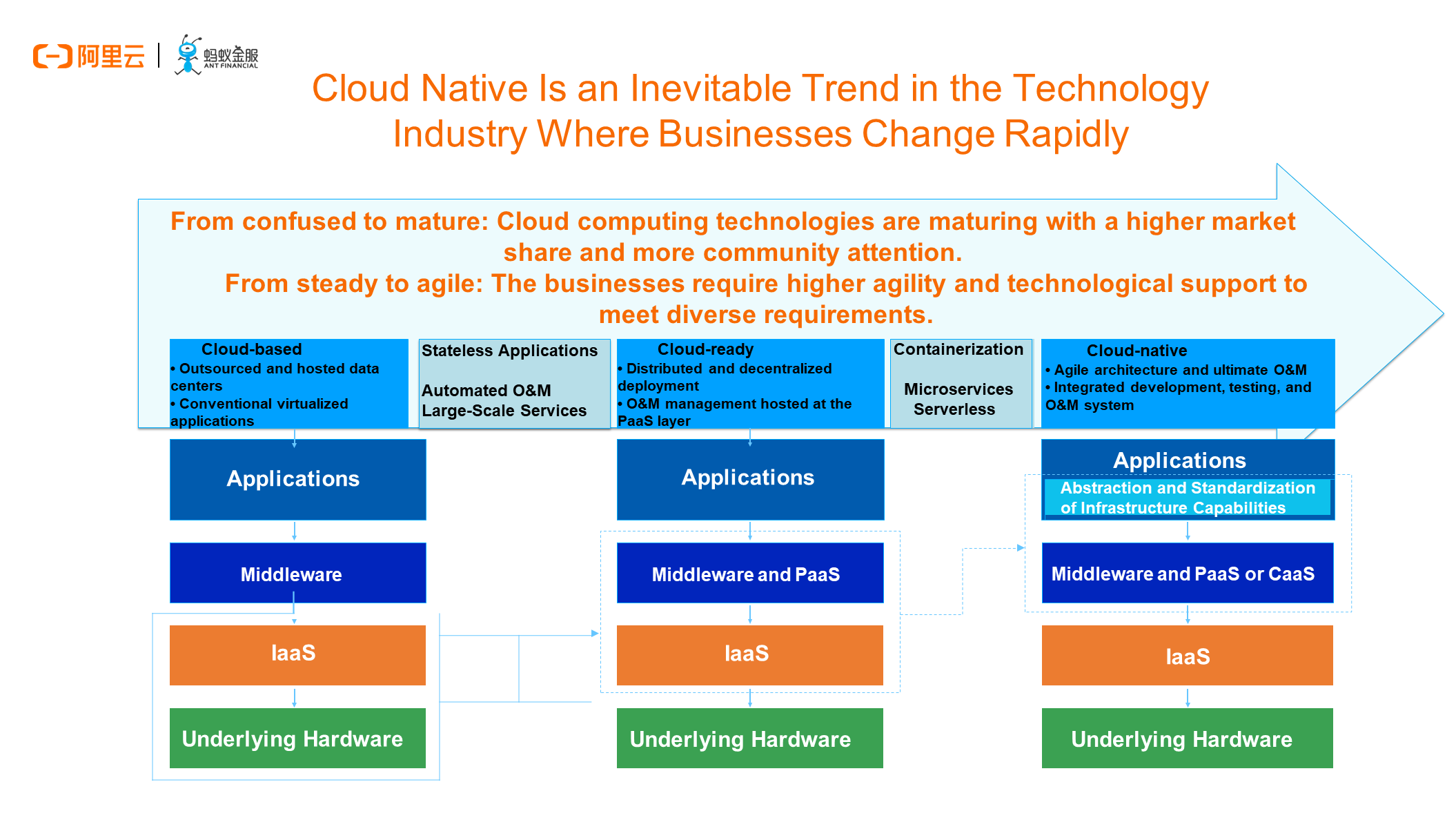

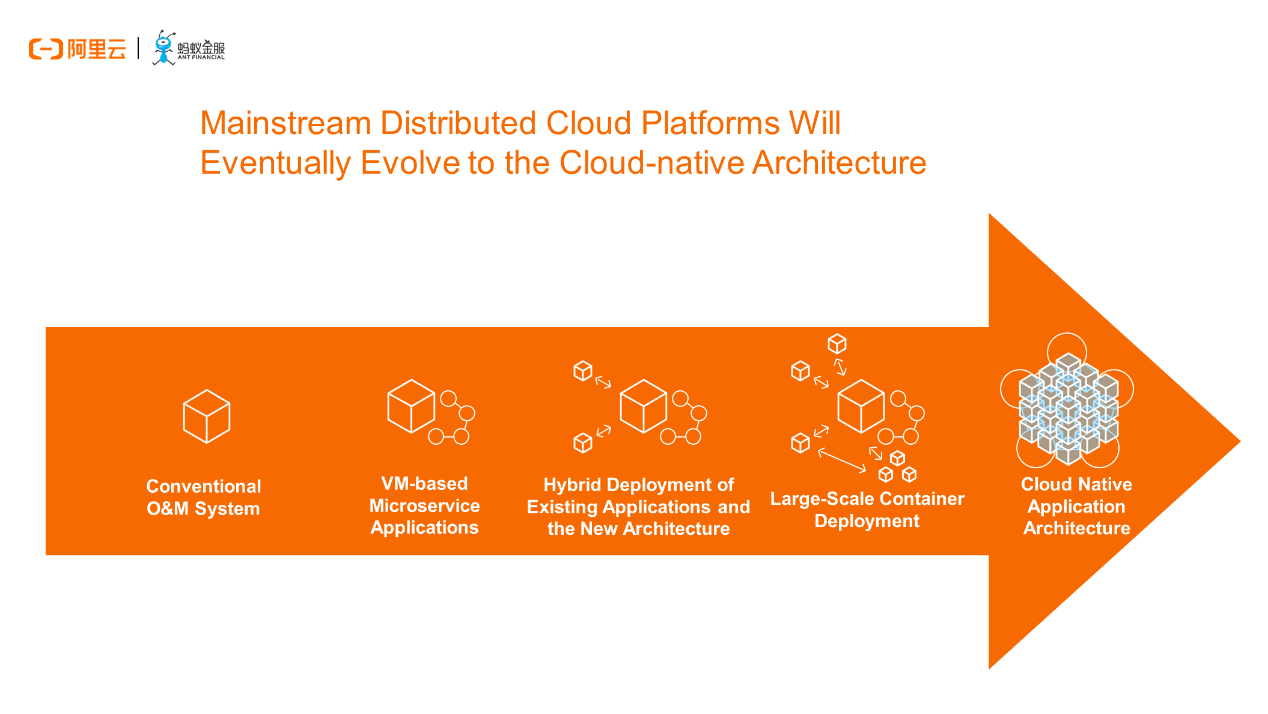

Cloud computing has been divided into Infrastructure as a Service (IaaS), PaaS, and Software as a Service (SaaS) for more than a decade. Over the development of the cloud computing industry, enterprises have clearly gone through three stages of cloud strategy: Cloud-based, Cloud-ready, and Cloud-native. The shift between these stages is driven by businesses becoming increasingly agile, which requires enterprises to move their focus up the stack: they invest more energy and talent in business logic and hand the unfamiliar and increasingly complex infrastructure and middleware at the lower layers over to cloud computing vendors. In this way, professionals can focus on what they do best.

This essentially further refines the social division of labor and complies with the law of the development of human society. In the era of cloud native, the container technology, service mesh technology, and serverless technology proposed in the industry are all intended to decouple business R&D from base technologies to make it easier to drive innovations both in businesses and base technologies.

Cloud native is an inevitable trend in an industry where businesses change rapidly. What substantively drives this trend is container technology, represented by Kubernetes and Docker, which fundamentally changes how applications are delivered. To truly apply the new application delivery mode advocated by the industry and the community in real enterprise environments, we need an application-centric platform that covers the whole lifecycle of application O&M.

In fact, the community and the industry have produced a lot of discussion and material around the keyword "cloud native". It focuses on best practices for Docker and Kubernetes, continuous integration and continuous delivery (CI/CD) in DevOps, the design of container networking and storage, integration with logging and monitoring, and so on. Today, we want to demonstrate the product value of building a PaaS platform on top of Kubernetes. Kubernetes is an excellent orchestration and scheduling framework and the key contributor to standardizing application orchestration and resource scheduling. It also provides a highly extensible architecture that lets the upper layer build custom controllers and schedulers. However, Kubernetes is not itself a PaaS. The bottom layer of a PaaS can be built on Kubernetes, but to truly use Kubernetes in production, especially in the financial industry, you must add many capabilities at the upper layer.

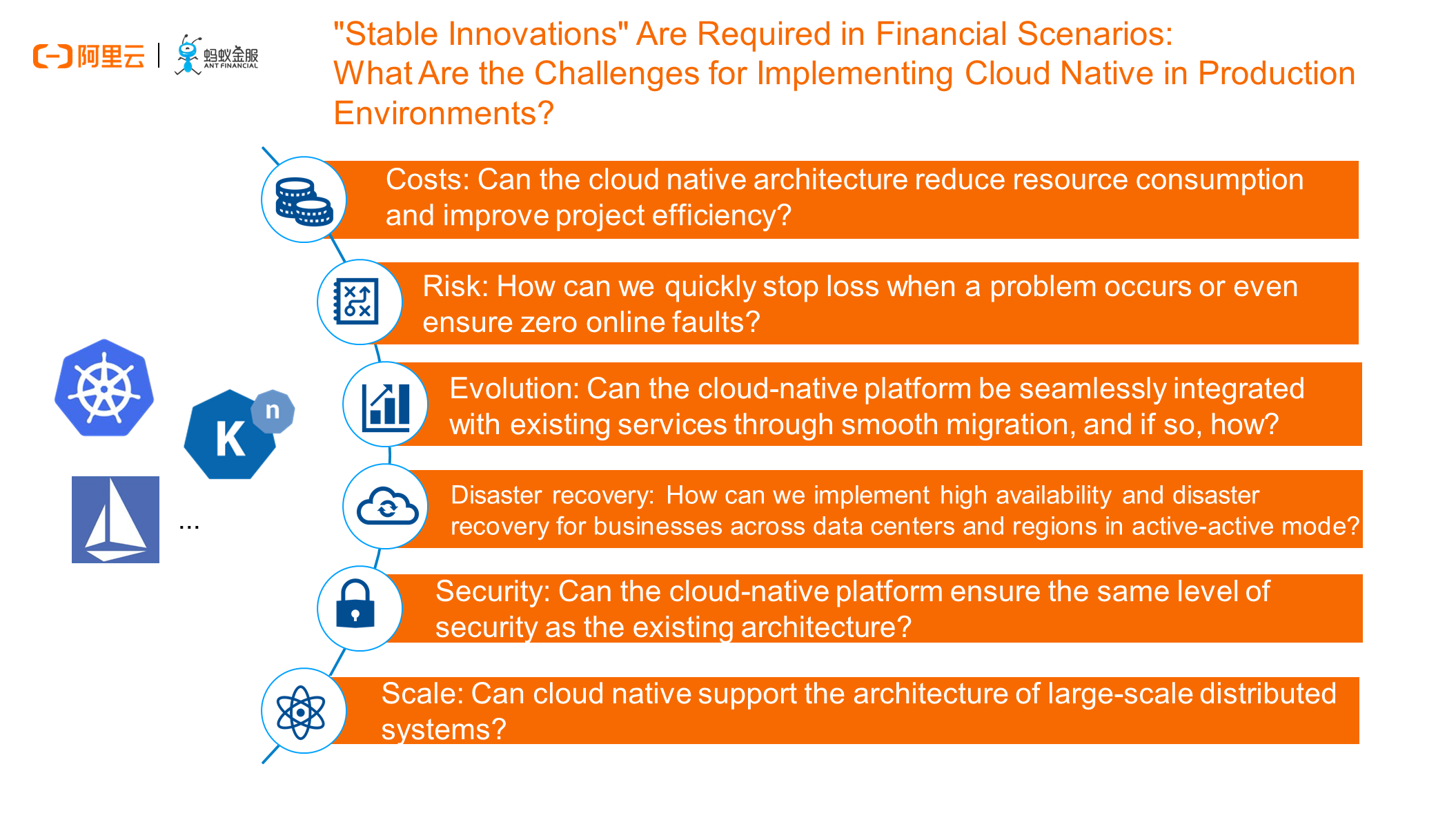

What are the challenges for implementing cloud native in production environments?

We have previously conducted research and customer interviews. As of 2020, the vast majority of financial institutions had expressed great interest in technologies such as Kubernetes and containers, and many had built open-source or commercial clusters for non-critical businesses or for development and testing environments. Their motivation is simple: they hope this new delivery mode can help their businesses evolve rapidly. In sharp contrast, however, few of them dare to implement cloud-native architectures in core production environments, because innovation in financial businesses is premised on guaranteed stability.

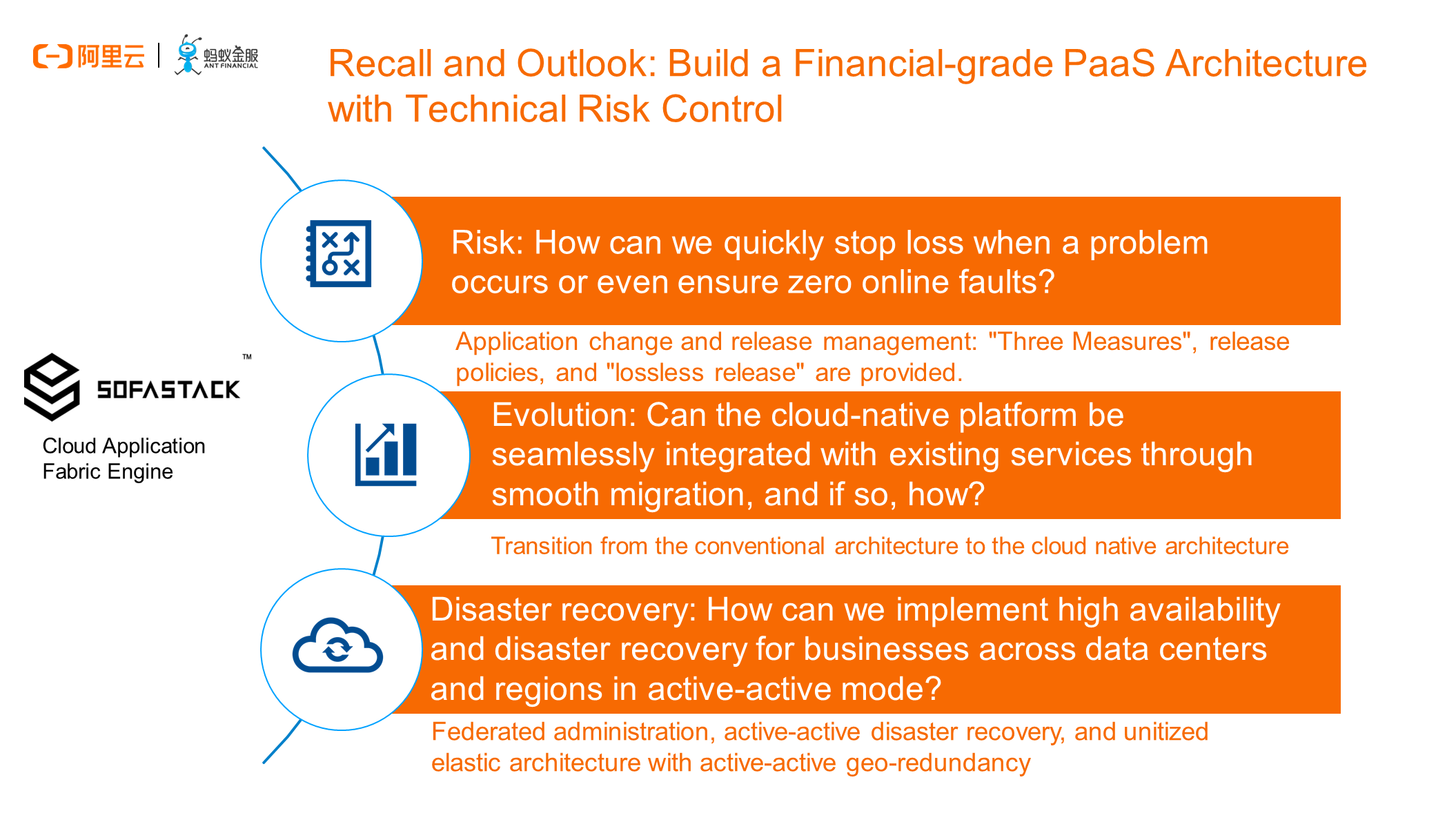

Our team has summarized the six challenges shown in the preceding figure, which Ant Financial faces when serving both internal businesses and external financial institutions. Our internal site reliability engineering (SRE) team faces the same challenges. In today's talk and in future sessions, we will gradually summarize and refine the product ideas we use to address them.

One of the core ideas we will share today is how we control application change risks at the product level. Around this topic, we will introduce the background of the "three measures" for application changes, the native deployment capability of Kubernetes, the extensions we made to our products to meet change requirements, and our open-source plans.

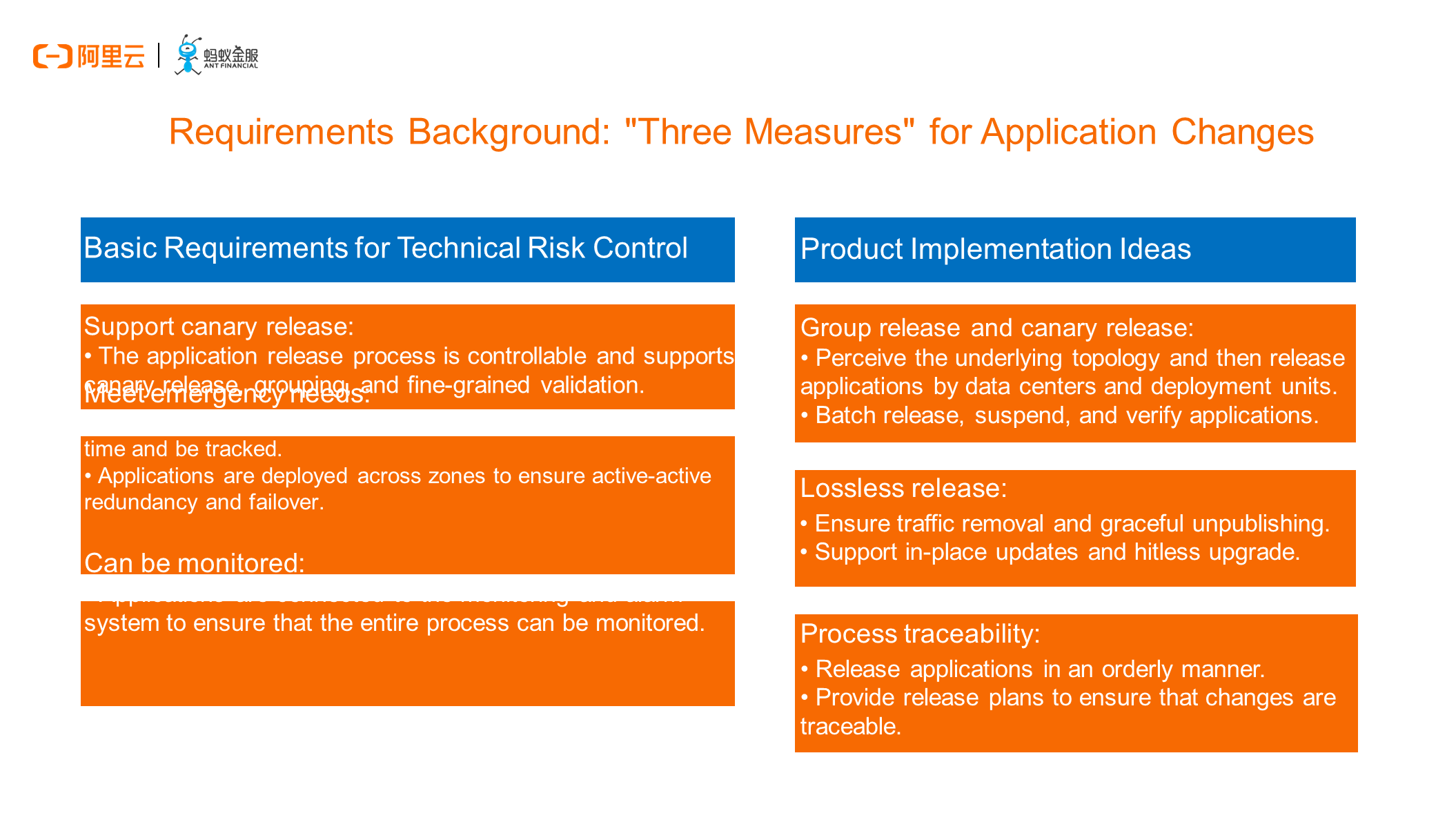

The so-called "three measures" require that every application change supports canary release, can be monitored, and can be handled in an emergency. This is a red-line rule for internal SREs at Ant Financial: all changes must comply with it, even minor or thoroughly tested ones. To meet this rule, we have designed a variety of fine-grained release policies at the PaaS product layer, such as group release, beta release, canary release, and blue-green release. These policies are similar to those used in conventional O&M, but many container users find them difficult to implement in Kubernetes.

Sometimes, because of demanding business continuity requirements, users are reluctant to accept the standard model of native Kubernetes. For example, a canary release with a native Deployment is neither completely lossless nor controllable on demand, because by default we lack control over pod changes and traffic governance. We therefore made customizations at the PaaS product level and added custom resources at the Kubernetes level. This lets us keep fine-grained control over the entire release process in cloud-native scenarios, making the deployment, canary release, and rollback of applications in a large-scale cluster more graceful and in line with the "three measures" against technical risks.

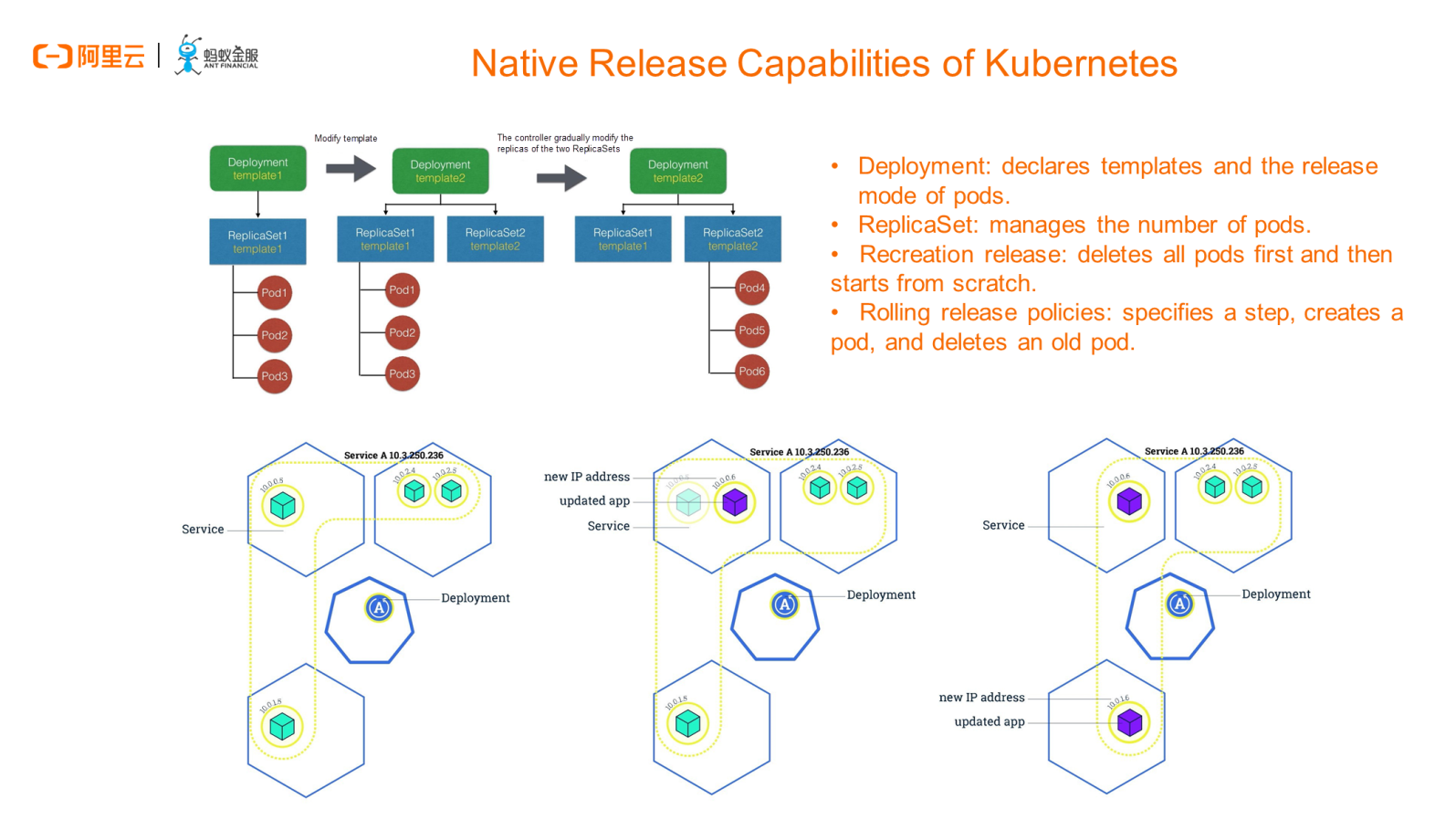

The native Deployment object of Kubernetes and its corresponding ReplicaSet have stabilized over recent major versions. Put simply, in the most common Kubernetes release scenario, we use a Deployment to declare the pod specification and the expected release strategy, either RollingUpdate (the default) or Recreate. While the pods are running, the ReplicaSet owned by the Deployment keeps the expected number of pods.

The lower part of the preceding figure shows the rolling release of an application based on a Deployment. We will not go into detail here. Essentially, the process creates new pods and deletes old ones in steps whose size is set according to O&M requirements (through the maxSurge and maxUnavailable parameters), so there is always an available container serving external requests throughout the version change. In most scenarios, a Deployment is sufficient and the whole process is easy to understand. In practice, apart from pods and nodes, Deployments are the most common objects in the Kubernetes system.
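To make the step size concrete, here is a minimal Python simulation of how a Deployment bounds a RollingUpdate with maxSurge and maxUnavailable. Both default to 25%, and Kubernetes rounds maxSurge up and maxUnavailable down when they are percentages. The simulation is a sketch under a simplifying assumption, not the Deployment controller's code: every new pod is assumed to become Ready exactly one tick after it is created, and readiness failures are ignored. The names `resolve` and `rolling_update` are hypothetical.

```python
import math


def resolve(value, replicas, round_up):
    """Turn an int or a percentage string such as "25%" into a pod count."""
    if isinstance(value, str) and value.endswith("%"):
        scaled = int(value[:-1]) * replicas / 100
        return math.ceil(scaled) if round_up else math.floor(scaled)
    return int(value)


def rolling_update(replicas, max_surge="25%", max_unavailable="25%"):
    """Simulate a simplified RollingUpdate.

    Returns one (old_pods, new_pods, available_pods) tuple per tick.
    Assumption: a new pod becomes Ready exactly one tick after it is created.
    """
    surge = resolve(max_surge, replicas, round_up=True)  # surge is rounded up
    unavailable = resolve(max_unavailable, replicas, round_up=False)  # rounded down
    if surge == 0 and unavailable == 0:
        unavailable = 1  # both zero would never progress; Kubernetes also falls back to 1
    min_available = replicas - unavailable

    old, new_ready, new_pending = replicas, 0, 0
    history = []
    while old > 0 or new_ready < replicas:
        new_ready += new_pending  # pods created in the previous tick are Ready now
        # 1. Scale up the new ReplicaSet, never exceeding replicas + surge pods.
        total = old + new_ready
        new_pending = max(0, min(replicas - new_ready, replicas + surge - total))
        # 2. Scale down old pods while keeping at least min_available pods Ready.
        old -= max(0, min(old, old + new_ready - min_available))
        history.append((old, new_ready + new_pending, old + new_ready))
    return history
```

For 10 replicas with the defaults, `rolling_update(10)` never lets availability drop below 8 pods or the pod count rise above 13, which is exactly the "always an available container" guarantee described above.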

After reviewing the Deployment object, let's look at CafeDeployment, a CustomResourceDefinition (CRD) extension we developed to meet actual requirements. Cloud Application Fabric Engine (CAFE) is the name of our SOFAStack PaaS product line. We will briefly describe CAFE at the end of this article.

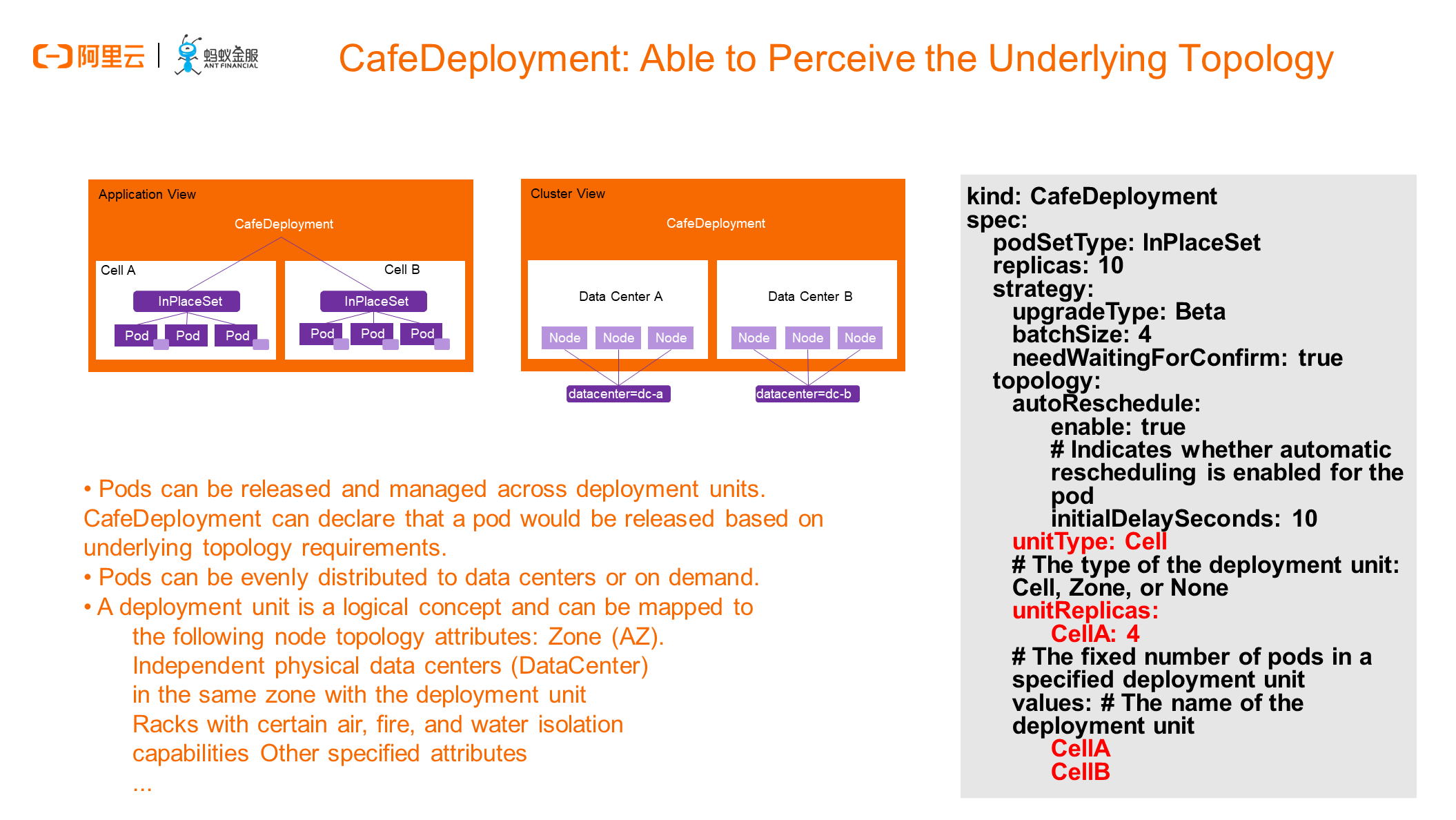

An important capability of CafeDeployment is that it perceives the underlying topology. What does topology mean here? It means CafeDeployment knows the specific node to which each pod is published. Rather than simply binding pods to nodes with affinity rules, it brings scenario information such as high availability, disaster recovery, and deployment policies into the release-centric domain model. To this end, we proposed a domain model called the deployment unit. It is a logical concept, called a cell in the YAML file. In practice, a cell can correspond to a zone, a physical data center, or a rack, each representing a different level of the high-availability topology.
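The cell schema of CafeDeployment is not shown in this talk, so the Python sketch below only illustrates the idea under stated assumptions. A hypothetical `Cell` type maps a deployment unit to a node label (here the well-known `topology.kubernetes.io/zone` label; a data center or rack label would work the same way), and replicas are split as evenly as possible across cells. `Cell`, `node_selector`, and `spread_across_cells` are illustrative names, not part of CafeDeployment.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Cell:
    """Hypothetical deployment unit: one slice of the high-availability topology."""
    name: str
    topology_key: str    # node label that defines the topology level
    topology_value: str  # the label value this cell maps to


def node_selector(cell):
    """Pods of a cell are pinned to nodes carrying the cell's topology label."""
    return {cell.topology_key: cell.topology_value}


def spread_across_cells(replicas, cells):
    """Split replicas as evenly as possible; earlier cells absorb the remainder."""
    if not cells:
        raise ValueError("at least one cell is required")
    base, extra = divmod(replicas, len(cells))
    return {cell.name: base + (1 if i < extra else 0) for i, cell in enumerate(cells)}


ZONE = "topology.kubernetes.io/zone"  # well-known Kubernetes zone label
cells = [Cell("cell-a", ZONE, "zone-a"), Cell("cell-b", ZONE, "zone-b")]
```

With the two cells above, `spread_across_cells(10, cells)` puts 5 pods in each zone, matching the two-zone example used in the release process that follows. Swapping the topology key is all it takes to model data-center or rack-level cells in this sketch.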

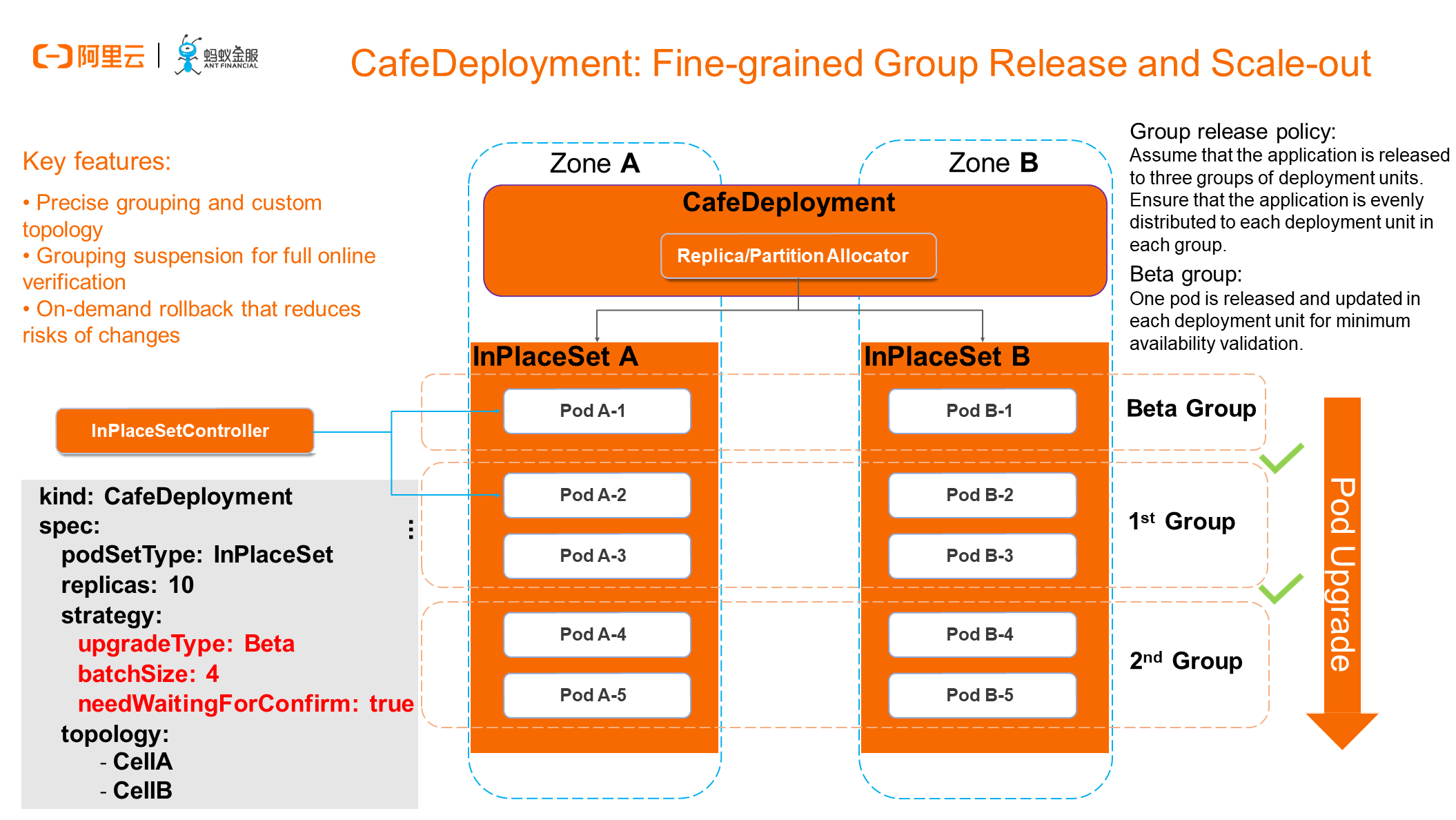

Let's look at the typical release process of a topology-aware CafeDeployment. We will demonstrate it later through the product console and the command line. The figure shows a fine-grained group release process in which changes at the container instance level are fully controllable and support canary release: each stage can be paused, verified, resumed, or rolled back.

In the preceding example, we want to release or change 10 pods evenly distributed across two zones to ensure high availability at the application level. We also introduce group release into the process: each data center first releases one instance, and the release then pauses for verification before continuing with the next group. As a result, the beta group has 1 instance on each side, group 1 has 2 instances on each side, and group 2 has the remaining 2 instances on each side. In production, when making major changes to containers, we monitor the business and many other dimensions to make sure every step meets expectations and passes verification. This fine-grained control at the application instance layer acts as a brake on online releases, allowing SREs to roll back an application in time when needed.
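The group layout in this example can be derived mechanically. The Python sketch below uses hypothetical function names (it is not the CafeDeployment API): `plan_groups` computes the beta batch plus N evenly sized groups per cell, and `run_release` walks the batches with a `verify` callback standing in for the pause, verify, resume, or roll back decision made at each stage.

```python
def plan_groups(per_cell, beta=1, groups=2):
    """Build a release plan: a beta batch, then `groups` batches per cell.

    per_cell maps a cell name to the number of pods to change in that cell.
    Each batch is a dict {cell: pods_to_change_in_this_batch}.
    """
    beta_batch = {cell: min(beta, n) for cell, n in per_cell.items()}
    remaining = {cell: n - beta_batch[cell] for cell, n in per_cell.items()}
    plan = [beta_batch]
    for g in range(groups):
        batch = {}
        for cell, left in list(remaining.items()):
            share = -(-left // (groups - g))  # ceiling division over the groups left
            batch[cell] = share
            remaining[cell] = left - share
        plan.append(batch)
    return plan


def run_release(plan, verify):
    """Apply the plan batch by batch, pausing after each batch for verification.

    verify(index, batch) stands in for the check an SRE or monitoring system
    performs while the release is paused: True resumes, False rolls back.
    """
    changed = 0
    for index, batch in enumerate(plan):
        changed += sum(batch.values())  # placeholder for updating these pods
        if not verify(index, batch):
            return ("RolledBack", changed)  # revert every pod changed so far
    return ("Succeeded", changed)
```

For 5 pods per zone, `plan_groups({"zone-a": 5, "zone-b": 5})` yields batches of 1, 2, and 2 pods per zone, the same beta, group 1, and group 2 split described above. If verification fails after group 1, `run_release` stops with 6 changed pods to roll back instead of touching all 10.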

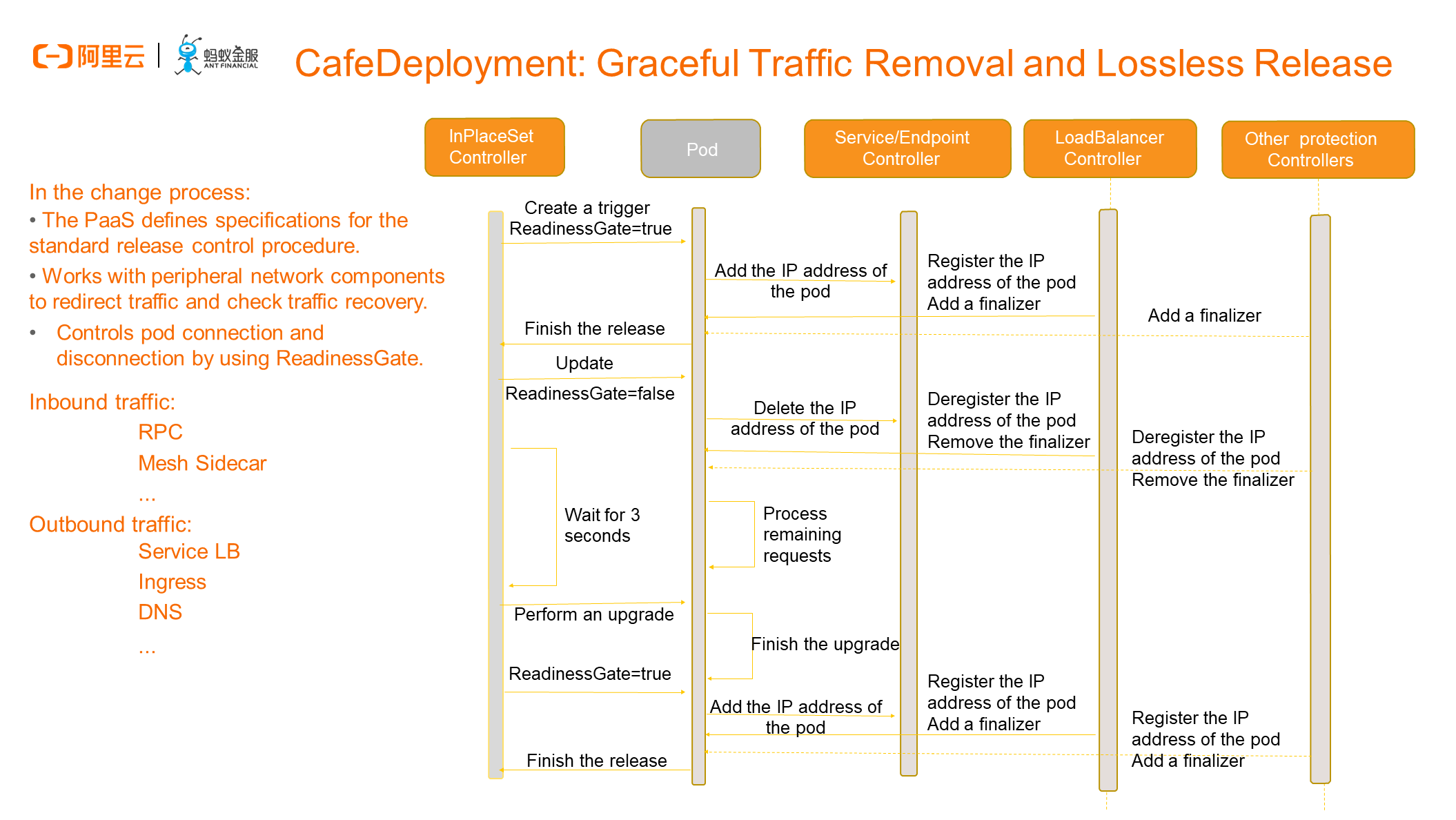

Now that we've described the entire fine-grained release process, let's talk about the fine-grained traffic removal process. To ensure lossless release, we must gracefully remove the network traffic in both the north-south and east-west directions so that online services are not affected when a container is stopped, restarted, or scaled in.

The preceding figure shows the standard control procedure when a changed pod is released. The sequence diagram includes the pod and the controllers of its associated components. CafeDeployment mainly works with network-related components, such as the service controller and the LoadBalancer controller, to remove traffic and then check that traffic is restored, which makes the release lossless.

In conventional, command-based O&M, we run commands in sequence to perform atomic operations on each component, ensuring that all inbound and inter-application traffic has been removed before making the actual change. In the cloud-native Kubernetes scenario, by contrast, the platform performs these complex operations and SREs only need to write a simple declaration. During deployment, we pass the traffic components associated with the application, including but not limited to the Service LoadBalancer, RPC, and DNS, to the CafeDeployment, add the corresponding finalizers, and use a ReadinessGate to indicate whether the pod can carry traffic.

For example, to update a specified pod in place, the InPlaceSet controller sets the condition referenced by the pod's ReadinessGate to false. On perceiving this change, the associated components deregister the pod's IP address in turn, which triggers the actual traffic removal. After all related finalizers are removed, the system automatically updates the pod. Once the new version of the pod is deployed, the InPlaceSet controller sets the ReadinessGate condition back to true, which in turn triggers the associated components to load traffic again. The pod is considered released only when the traffic types detected through the finalizers match those declared in the CafeDeployment.
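The ordering above can be modeled as a small state machine. The Python sketch below is only a model, not the InPlaceSet or CafeDeployment code. In real Kubernetes, a pod lists `spec.readinessGates[].conditionType` and a controller sets the matching entry in `status.conditions`; here a boolean stands in for that condition. `Pod`, `reconcile_component`, `in_place_update`, and the `traffic.example.com/` finalizer prefix are all hypothetical, and the model assumes each traffic component keeps one finalizer on the pod while it routes traffic to it.

```python
from dataclasses import dataclass, field

PREFIX = "traffic.example.com/"  # hypothetical finalizer prefix


@dataclass
class Pod:
    version: str
    ready_gate: bool = True  # stands in for the condition the ReadinessGate references
    finalizers: set = field(default_factory=set)  # one per attached traffic component
    log: list = field(default_factory=list)


def reconcile_component(pod, component):
    """One traffic component (LB, RPC, DNS, ...) reacting to the gate condition."""
    finalizer = PREFIX + component
    if not pod.ready_gate and finalizer in pod.finalizers:
        pod.finalizers.discard(finalizer)  # deregister the pod IP, then drop the marker
        pod.log.append(f"{component}: deregistered")
    elif pod.ready_gate and finalizer not in pod.finalizers:
        pod.finalizers.add(finalizer)  # register the pod IP and mark traffic attached
        pod.log.append(f"{component}: registered")


def in_place_update(pod, new_version, components):
    """Drain traffic, update in place, restore traffic, then check it matches."""
    pod.ready_gate = False
    for component in components:
        reconcile_component(pod, component)
    if pod.finalizers:
        raise RuntimeError("traffic still attached; update is blocked")
    pod.version = new_version  # only now is it safe to change the container
    pod.ready_gate = True
    for component in components:
        reconcile_component(pod, component)
    declared = {PREFIX + c for c in components}
    return pod.finalizers == declared  # released only if traffic matches the spec
```

In this model, a finalizer left behind by a component that was not drained blocks the update entirely, which is the property that keeps the release lossless.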

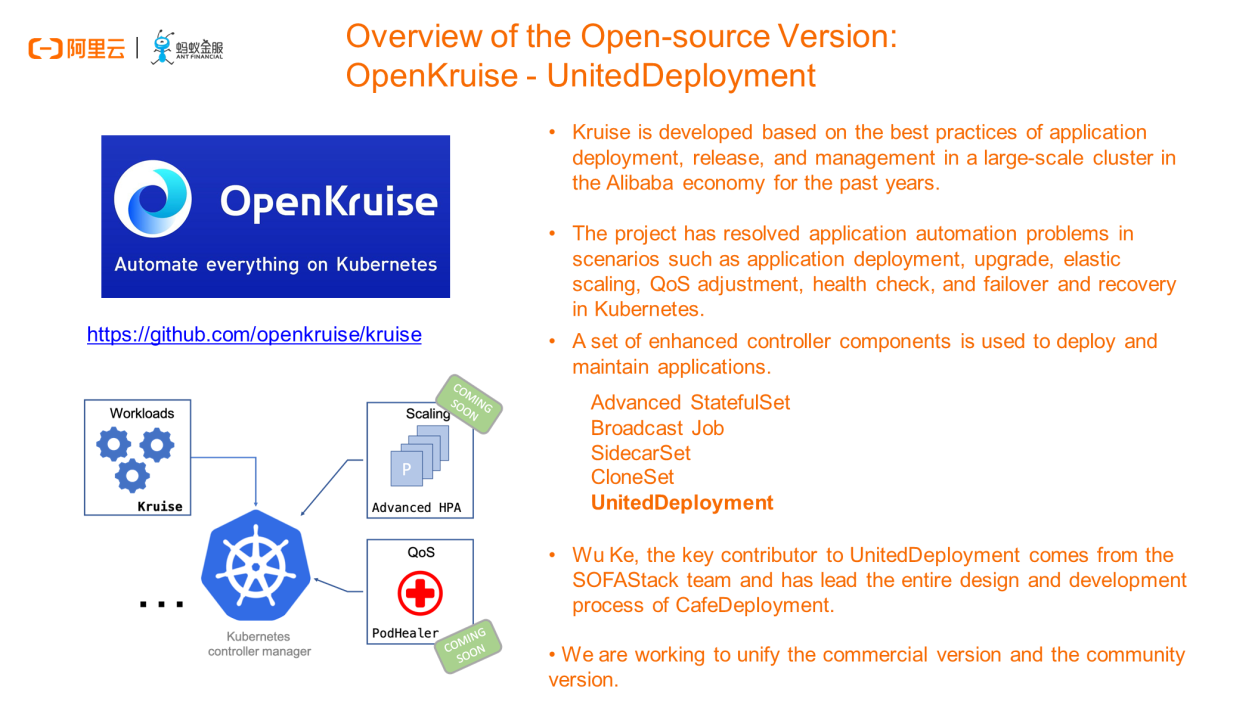

Back to the slides. The CafeDeployment we just discussed is part of the commercial CAFE product. While commercializing CAFE, we have also contributed some open-source projects to the community. Here, I would like to introduce OpenKruise, which we developed based on massive cloud-native O&M practices in the Alibaba economy. It open-sources many Kubernetes-based automated O&M operations as standard Kubernetes extensions, supplementing capabilities that native workloads cannot provide. The project addresses application automation problems in Kubernetes scenarios such as deployment, upgrade, elastic scaling, QoS adjustment, health check, and failover and recovery.

Currently, OpenKruise provides a set of controller components. In particular, UnitedDeployment can be considered the open-source version of CafeDeployment. In addition to basic replica maintenance and release capabilities, UnitedDeployment can release pods to multiple deployment units, which is one of the main features of CafeDeployment. UnitedDeployment also manages pods through different underlying workloads, currently the community StatefulSet and OpenKruise's AdvancedStatefulSet, so it inherits the features of the corresponding workload.

Wu Ke (Haotian, GitHub ID: wu8685), the key contributor to UnitedDeployment, is from the SOFAStack CAFE team and led the entire design and development of CafeDeployment. We are working to bring more capabilities into the open-source version through standardized methods once they have been verified at scale, gradually narrowing the gap between the two versions.

Due to time constraints, that's all for the technical details today. As the CafeDeployment release policy described above shows, our key product design principle is to provide a steady evolution path while helping integrate emerging technology architectures into applications and businesses. Both conventional O&M systems based on virtual machines (VMs) and cloud-native architectures with large-scale container deployment need fine-grained technical risk control and a path toward the most advanced architectures.

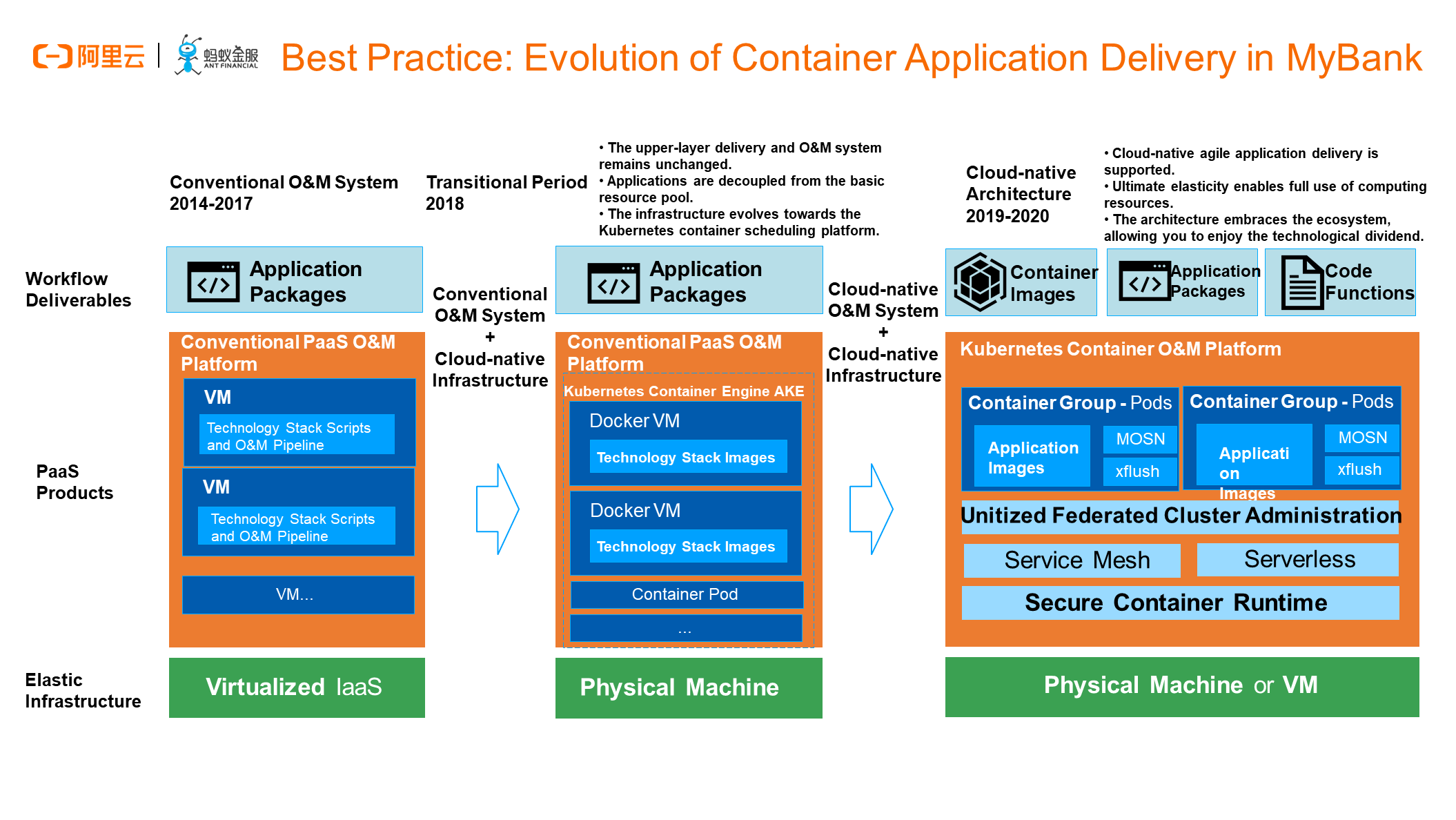

The following example shows the containerization journey of an Internet bank. Since its founding, the bank has run a distributed system with microservices built on cloud computing infrastructure. In terms of delivery mode, however, it initially adopted a PaaS management model based on conventional VMs. From 2014 through 2017, developers deployed application packages to VMs through Buildpack. During these three years, we helped upgrade the architecture from zone-level active-active, to three data centers across two zones, and then to unitized active geo-redundancy.

In 2018, as Kubernetes matured, we built the underlying platform on physical machines and Kubernetes, and used containers to simulate VMs so that the whole infrastructure was containerized. This change was transparent to the application owners: we served upper-layer applications through "rich containers", that is, pods running on the underlying Kubernetes. From 2019 to 2020, as the business grew, requirements for O&M efficiency, scalability, migratability, and fine-grained management drove us to evolve the infrastructure toward a more cloud-native O&M system and gradually implement capabilities such as service mesh, serverless, and unitized federated cluster management.

Through productization and commercialization, we are making the capabilities we have accumulated over the years available to others. We hope to help more financial institutions quickly replicate cloud-native architecture capabilities proven in Internet financial scenarios and create value for their businesses.

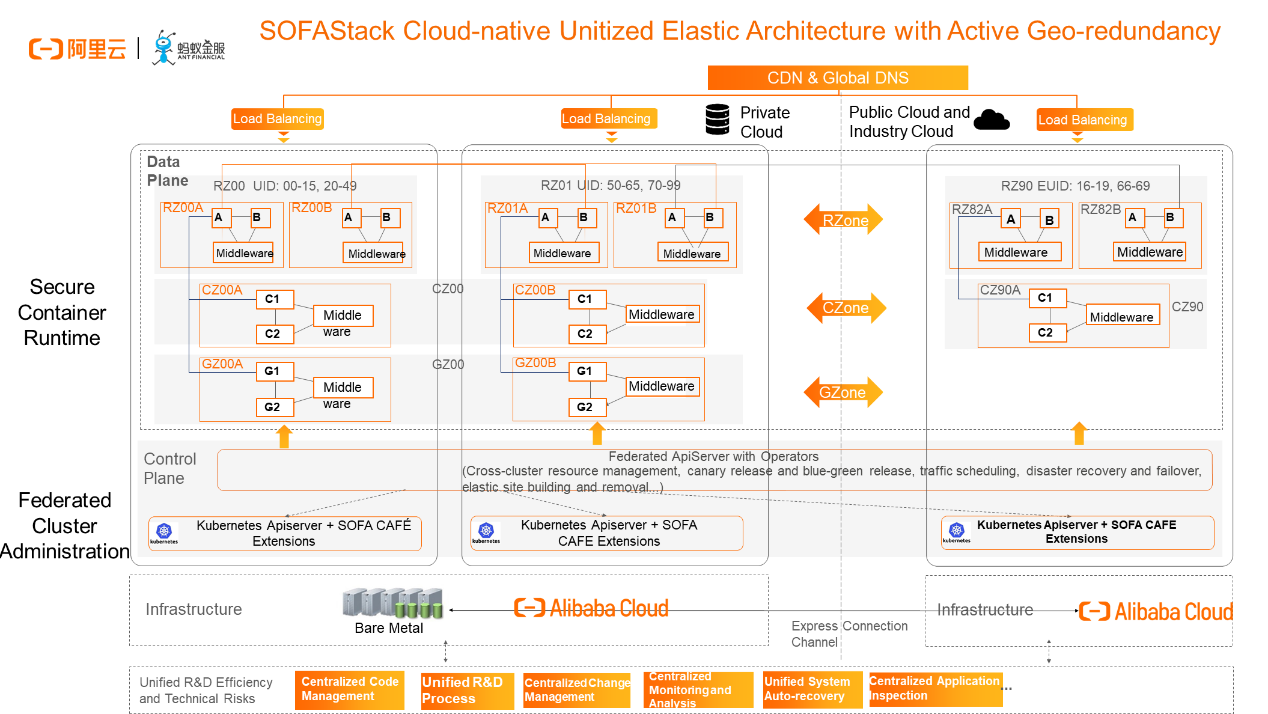

You may have heard about Ant Financial's unitized architecture and the elasticity and disaster recovery capabilities of its active geo-redundancy mode through many channels. The figure shows the abstract architecture of a solution that we are currently building for a large bank and plan to implement within months. At the PaaS level, we build federation capabilities on top of Kubernetes, with an independent Kubernetes cluster in each data center, because a single Kubernetes cluster deployed across data centers and regions cannot meet disaster recovery requirements. Multi-cloud federated management also requires us to extend Kubernetes capabilities in PaaS-layer products, for example by defining logical units and federation-layer resources. The result is a unitized architecture that spans multiple data centers, regions, and clusters. We have made a large number of extensions for this, including CafeDeployment, ReleasePipeline, and several federated objects at the federation layer. The ultimate goal is to provide unified release management, disaster recovery, and emergency management for businesses in these complex scenarios.

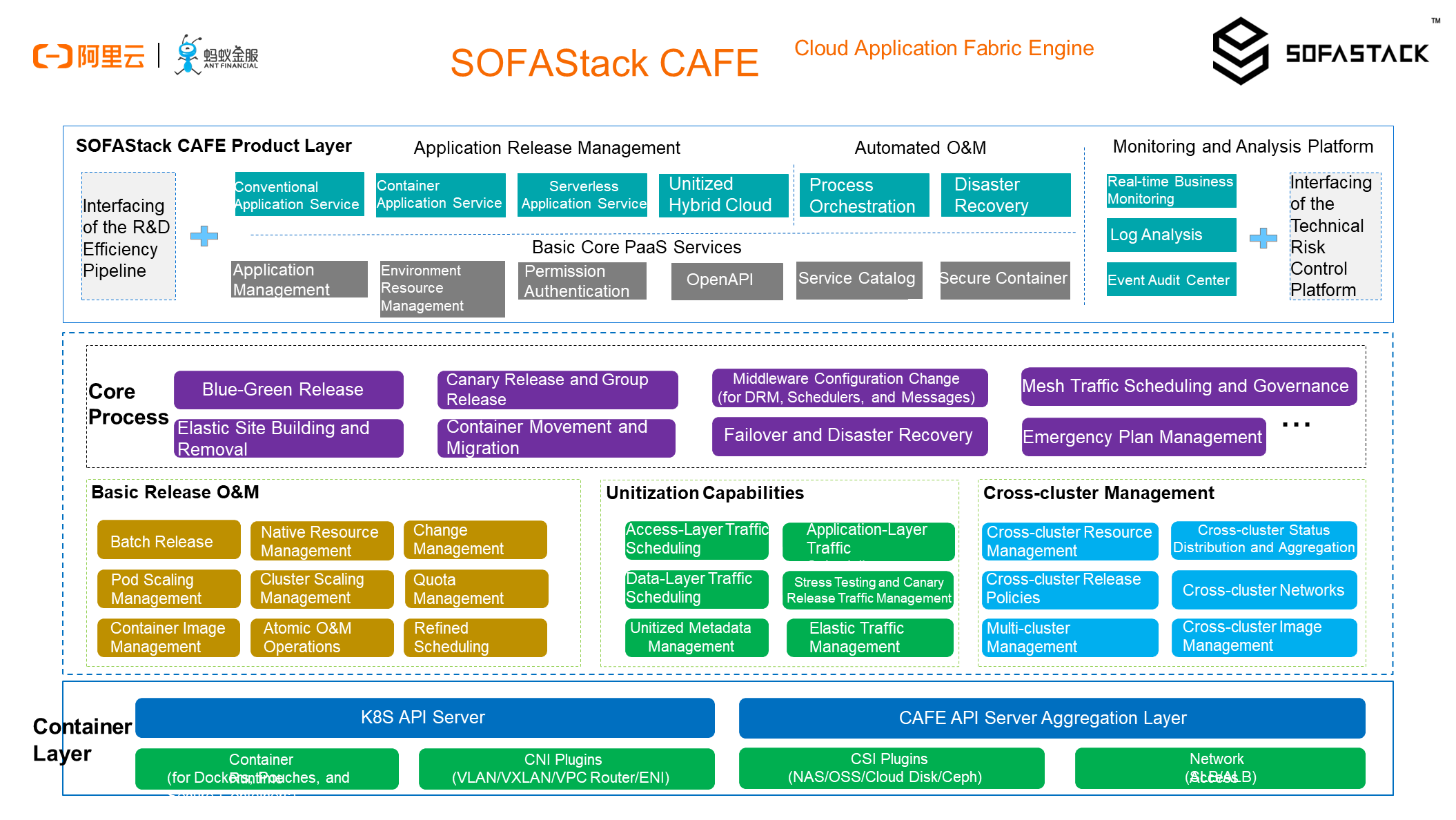

Now let's return to CAFE, which was mentioned much earlier. CAFE stands for Cloud Application Fabric Engine. It is the cloud-native application PaaS of Ant Financial's SOFAStack. Besides the cloud-native capabilities standardized by Kubernetes, it provides production-proven, financial-grade O&M capabilities at the upper layer, including application management, release and deployment, O&M and orchestration, monitoring and analysis, disaster recovery, and emergency management. CAFE is also deeply integrated with SOFAStack middleware, service mesh, and Alibaba Cloud Container Service for Kubernetes (ACK).

The differentiated application lifecycle management capabilities provided by CAFE include release management, disaster recovery, and emergency management, as well as an evolution path toward unitized hybrid cloud capabilities. CAFE is a key foundation for implementing distributed, cloud-native, and hybrid cloud architectures in financial scenarios.

The last slide sums up today's core theme. The CAFE we described today is part of SOFAStack, our financial-grade distributed architecture product, which is now commercially available on Alibaba Cloud. You are welcome to apply for a trial and discuss it further with us. For more information, search for us online, follow the product link provided in this article, or visit the official Alibaba Cloud website.
