By Li Shan

Want to reduce AI training time from months to days?

Have you considered how to cut expensive GPU costs?

Are you sure your evaluation of whether an AI model meets your business requirements is correct?

...

If any of these AI challenges trouble you, this solution is your best helper.

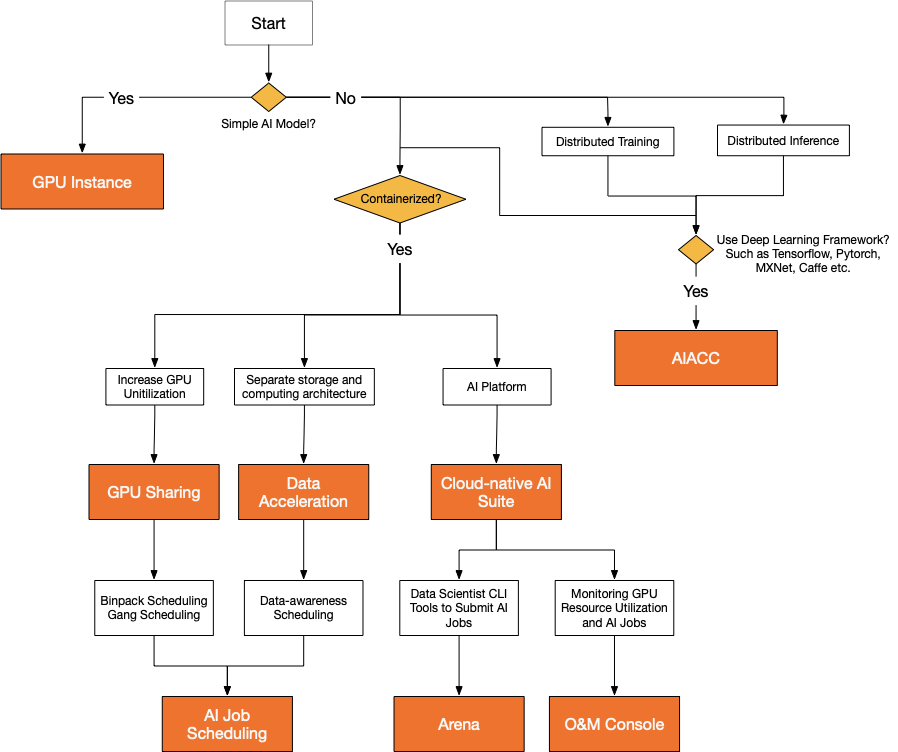

In this article, I briefly introduce AI Acceleration for AI training and inference. Alibaba Cloud provides six layers of services and components that speed up AI model training and inference and reduce their cost. The accompanying diagram can help you choose the option that suits your scenario.

1. AIACC

AIACC is short for Apsara AI Acceleration, a unified distributed deep learning training and inference acceleration engine provided by Alibaba Cloud. AIACC supports widely used distributed training frameworks, including TensorFlow, PyTorch, MXNet, Caffe, and Kaldi, and applies to large-scale image classification and recognition, click-through rate (CTR) estimation, natural language processing (NLP), speech and face recognition, and many other fields. AIACC broke the world record in the DAWNBench ImageNet training benchmark and is officially ranked first on the performance list. The training was performed on a cluster of 128 V100 GPUs provided by 16 ecs.gn6e-c12g1.24xlarge instances, which communicated over a 32 Gbit/s virtual private cloud (VPC) network. The cluster that set the previous world record also used 128 V100 GPUs but communicated over 100 Gbit/s InfiniBand, so AIACC set the new record with roughly a third of the network bandwidth.
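This article does not describe AIACC's communication internals. As a hedged illustration of why network bandwidth matters when 128 GPUs train one model, here is a minimal simulation of ring all-reduce, a standard technique that data-parallel training uses to sum gradients across workers. It is a generic sketch, not AIACC's implementation, and the function name `ring_allreduce` is hypothetical.

```python
from typing import List


def ring_allreduce(grads: List[List[float]]) -> List[List[float]]:
    """Simulate ring all-reduce (sum) across len(grads) workers.

    Generic illustration only; not AIACC's actual algorithm.
    """
    n = len(grads)
    length = len(grads[0])
    assert all(len(g) == length for g in grads), "gradients must have equal length"
    # Split each vector into n contiguous chunks; chunk c covers [bounds[c], bounds[c+1]).
    bounds = [c * length // n for c in range(n + 1)]
    buf = [list(g) for g in grads]  # each worker's local copy

    def chunk(c: int) -> range:
        return range(bounds[c], bounds[c + 1])

    # Reduce-scatter: in step s, worker i sends chunk (i - s) % n to its ring
    # neighbour (i + 1) % n, which adds it into its own copy of that chunk.
    for s in range(n - 1):
        sends = [(i, (i - s) % n, [buf[i][k] for k in chunk((i - s) % n)]) for i in range(n)]
        for i, c, data in sends:
            dst = (i + 1) % n
            for k, v in zip(chunk(c), data):
                buf[dst][k] += v

    # Worker i now holds the fully summed chunk (i + 1) % n.
    # All-gather: pass the finished chunks around the ring, overwriting stale copies.
    for s in range(n - 1):
        sends = [(i, (i + 1 - s) % n, [buf[i][k] for k in chunk((i + 1 - s) % n)]) for i in range(n)]
        for i, c, data in sends:
            dst = (i + 1) % n
            for k, v in zip(chunk(c), data):
                buf[dst][k] = v
    return buf


workers = [
    [1.0, 2.0, 3.0, 4.0, 5.0],
    [10.0, 20.0, 30.0, 40.0, 50.0],
    [100.0, 200.0, 300.0, 400.0, 500.0],
]
print(ring_allreduce(workers)[0])  # every worker ends with the element-wise sum
```

In this pattern each worker sends about 2(n - 1)/n times its gradient size per synchronization, so traffic per worker stays nearly constant as the cluster grows, but every step still waits on the network link. That is why completing training on a 32 Gbit/s VPC rather than 100 Gbit/s InfiniBand is notable.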

2. GPU Sharing

GPUs are expensive and are often deployed at large scale to support workloads. The key challenge in GPU scheduling is therefore to raise overall resource utilization across a large GPU cluster so that every GPU's potential is realized. Consider two common situations: cluster administrators who want to improve GPU utilization need a more flexible scheduling strategy, while application developers need to run model training tasks on multiple GPUs at the same time.

GPU sharing is built on Alibaba Cloud Container Service for Kubernetes (ACK). At the cluster level, it allows multiple pods to share the same GPU, a single pod to use multiple GPUs, or a pod to use exactly one GPU. GPU isolation isolates both GPU memory and tasks to ensure the stability and security of AI inference workloads.
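To make "multiple pods share the same GPU" concrete, here is a minimal scheduling sketch. It assumes, for illustration only, that each pod declares how much GPU memory (in GiB) it needs and that pods are placed with a simple first-fit rule. The function `first_fit_gpu_share` and the first-fit policy are hypothetical; this is not ACK's scheduler, and it models neither the multi-GPU-per-pod case nor isolation.

```python
from typing import Dict, List, Optional, Tuple


def first_fit_gpu_share(
    gpu_mem_gib: List[int], pods: List[Tuple[str, int]]
) -> Dict[str, Optional[int]]:
    """Place each pod on the first GPU with enough free memory.

    Hypothetical illustration of memory-based GPU sharing, not ACK's algorithm.
    Returns pod name -> GPU index, or None if no GPU has room.
    """
    free = list(gpu_mem_gib)  # remaining memory per GPU
    placement: Dict[str, Optional[int]] = {}
    for name, request in pods:
        placement[name] = None  # stays None if no GPU can fit the request
        for idx, available in enumerate(free):
            if request <= available:
                free[idx] -= request  # reserve memory on this shared GPU
                placement[name] = idx
                break
    return placement


print(first_fit_gpu_share([16, 16], [("a", 8), ("b", 6), ("c", 10), ("d", 4), ("e", 12)]))
```

Here pods `a` and `b` share GPU 0 and pods `c` and `d` share GPU 1, while `e` cannot be placed. Without sharing, the same four pods would need four whole GPUs. First-fit is chosen only for simplicity; other placement policies trade packing density against spreading load.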

3. Data Acceleration

Fluid was developed by Alibaba Cloud and has been accepted by the CNCF as an official sandbox project. Fluid is a scalable, distributed data orchestration and acceleration system that runs on Kubernetes. It introduces a range of innovations in data caching, in the collaborative orchestration of cloud-native applications and their data, and in scheduling optimization. Fluid improves task efficiency through features such as dataset preloading, optimized metadata management, small-file I/O optimization, and auto scaling.
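The benefit of caching and dataset preloading can be shown with a small sketch. The class below is a hypothetical in-process LRU read-through cache that stands in for a distributed cache layer; it is not Fluid's API, which is configured through Kubernetes resources rather than Python. It counts how often the slow backing store is actually read.

```python
from collections import OrderedDict
from typing import Callable, Iterable


class ReadThroughCache:
    """Hypothetical LRU read-through cache with preloading (not Fluid's API)."""

    def __init__(self, fetch: Callable[[str], bytes], capacity: int) -> None:
        self._fetch = fetch  # slow remote read, e.g. object storage
        self._capacity = capacity
        self._data: "OrderedDict[str, bytes]" = OrderedDict()
        self.hits = 0
        self.misses = 0

    def _put(self, key: str, value: bytes) -> None:
        self._data[key] = value
        self._data.move_to_end(key)  # mark as most recently used
        while len(self._data) > self._capacity:
            self._data.popitem(last=False)  # evict least recently used

    def preload(self, keys: Iterable[str]) -> None:
        """Warm the cache before training starts."""
        for key in keys:
            if key not in self._data:
                self._put(key, self._fetch(key))

    def get(self, key: str) -> bytes:
        if key in self._data:
            self.hits += 1
            self._data.move_to_end(key)
            return self._data[key]
        self.misses += 1
        value = self._fetch(key)
        self._put(key, value)
        return value


remote_reads = []


def slow_fetch(key: str) -> bytes:
    remote_reads.append(key)  # record every trip to the remote store
    return key.encode()


cache = ReadThroughCache(slow_fetch, capacity=3)
cache.preload(["a", "b"])
for epoch in range(2):  # two training epochs over the same three files
    for name in ["a", "b", "c"]:
        cache.get(name)
print(cache.hits, cache.misses, len(remote_reads))
```

Only three remote reads occur across two epochs: two from preloading and one miss for `c`. Every later access is served from the cache, which is the effect that preloading and caching aim for when a training job reads the same dataset repeatedly.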

4. Cloud Native Suite

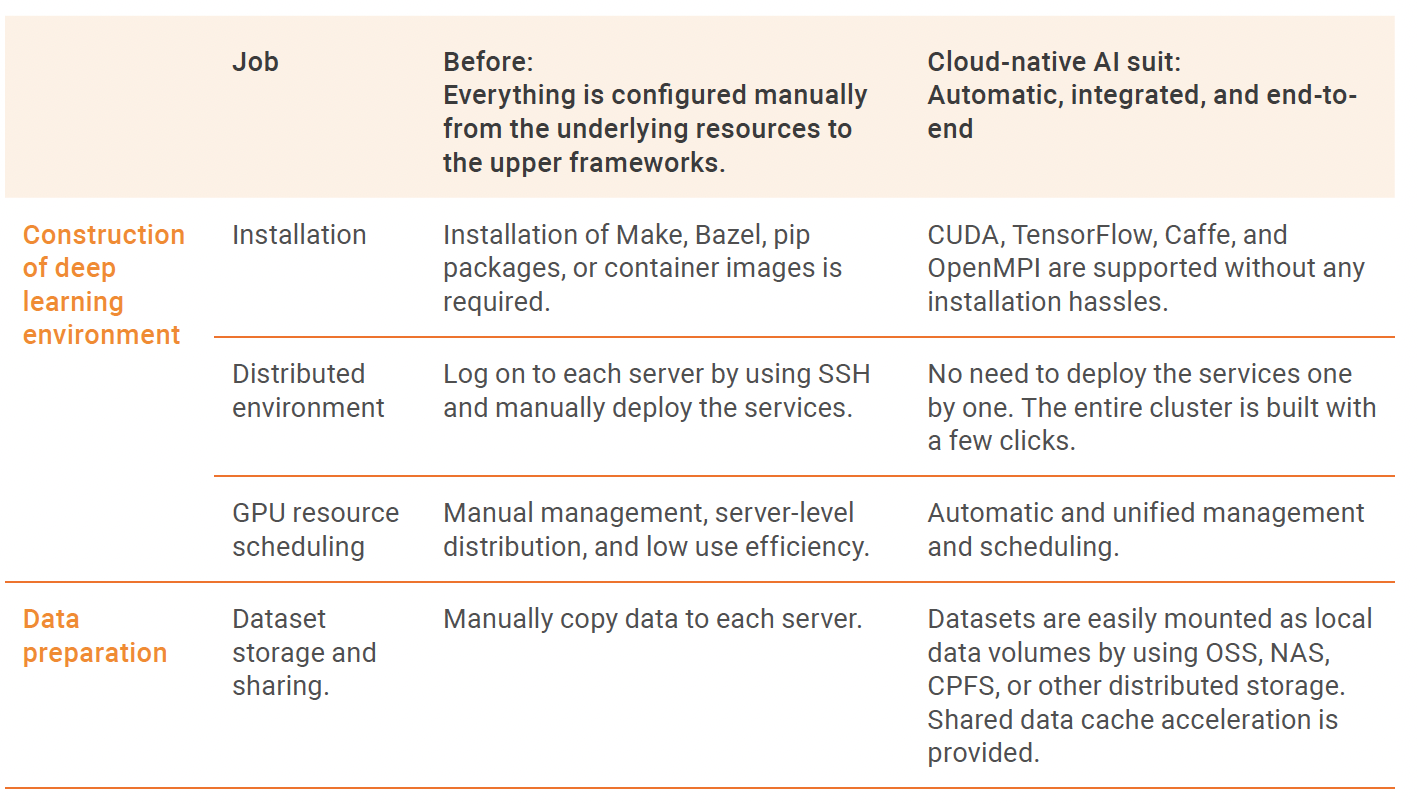

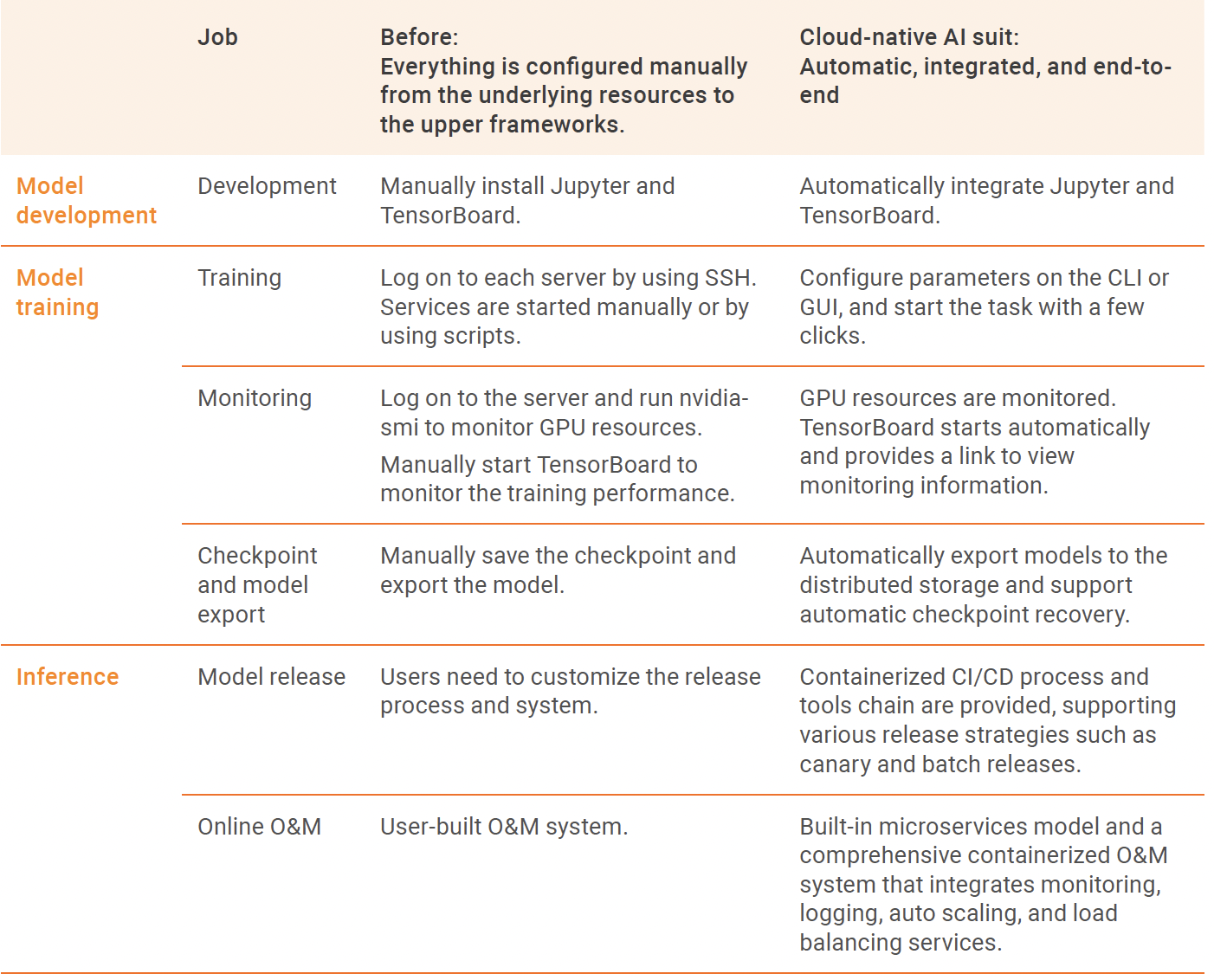

The cloud-native AI suite provides a range of tools and components that improve AI engineering efficiency across every stage of deep learning: environment setup, data preparation, model development, model training, and inference. The accompanying table describes the benefits of the suite at each stage.

To learn more, see the whitepaper, demos, solution pages, and related products at: https://www.alibabacloud.com/solutions/ai-acceleration
