By Xuanheng

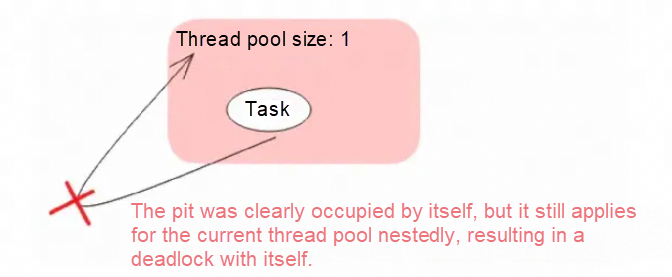

Java has a classic deadlock: a task that is already running on a thread pool submits more work to the same pool and then waits for the result. The pool ends up waiting on itself, as shown in the figure and the following code:

// This code will keep deadlocking.

final ExecutorService executorService = Executors.newSingleThreadExecutor();

executorService.submit(() -> {

Future<?> subTask = executorService.submit(() -> System.out.println("Hello dead lock"));

try {

subTask.get();

} catch (ExecutionException | InterruptedException ignore) { }

});

Compared with other deadlocks, the trouble with this one is that, because of how the thread pool is implemented, JVM tools such as jstack cannot diagnose it automatically: the worker thread is just waiting on a Future rather than on a lock held by another thread. It can only be spotted by reading the code.
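One way to make this self-wait observable without hanging the process is to bound the inner wait with a timeout. The following is only a sketch: the helper name `innerTaskStarves` and the 500 ms timeout are assumptions made for illustration and are not part of the original example.

```kotlin
import java.util.concurrent.Callable
import java.util.concurrent.Executors
import java.util.concurrent.TimeUnit
import java.util.concurrent.TimeoutException

// Hypothetical helper: returns true when the inner task could not run
// because the outer task was occupying the pool's only worker thread.
fun innerTaskStarves(timeoutMs: Long = 500): Boolean {
    val pool = Executors.newSingleThreadExecutor()
    try {
        val outer = pool.submit(Callable {
            // The only worker is busy running this lambda, so the inner task stays queued.
            val inner = pool.submit(Runnable { println("Hello dead lock") })
            try {
                inner.get(timeoutMs, TimeUnit.MILLISECONDS)
                false
            } catch (e: TimeoutException) {
                // Cancel the queued task so it does not run after we give up.
                inner.cancel(false)
                true
            }
        })
        return outer.get()
    } finally {
        pool.shutdown()
    }
}

fun main() {
    println("inner task starved: ${innerTaskStarves()}")
}
```

With an unbounded `get()`, as in the original code, the outer task would wait forever. The timeout only turns the hang into a visible signal; it does not remove the design problem.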

With Kotlin coroutines, the underlying thread pool usage is hidden in a black box, so it is easy to fall into this trap if you do not know how it works.

This article does not repeat the basic syntax of Kotlin coroutines, but focuses on the topic of deadlocks.

Do you think there is a deadlock risk in the following two pieces of code?

• The first piece of code looks complex, but it carries no deadlock risk.

runBlocking {

runBlocking {

runBlocking {

runBlocking {

runBlocking {

println("Hello Coroutine")

}

}

}

}

}

• The second piece of code looks simple, but it actually carries a deadlock risk.

runBlocking(Dispatchers.IO) {

runBlocking {

launch (Dispatchers.IO) {

println("hello coroutine")

}

}

}

As soon as 64 requests enter this code block at the same time, the deadlock is inevitable. Because the coroutine thread pool is shared, other coroutines that use it can no longer run either. For example, the following code can lock up the entire application:

// Use the traditional Java thread pool to simulate 64 requests.

val threadPool = Executors.newFixedThreadPool(64)

repeat(64) {

threadPool.submit {

runBlocking(Dispatchers.IO) {

println("hello runBlocking $it")

// Thread.sleep should not normally be called inside a coroutine. It is used here only to simulate a time-consuming blocking call.

// A coroutine should normally pause with delay instead.

Thread.sleep(5000)

runBlocking {

launch (Dispatchers.IO) {

// Because of deadlock, the following line will never be printed.

println("hello launch $it")

}

}

}

}

}

Thread.sleep(5000)

runBlocking(Dispatchers.IO) {

// Other coroutines cannot be executed, and the following line will never be printed.

println("hello runBlocking2")

}

This article delves into the root cause behind Kotlin coroutine deadlock and how to completely avoid it.

My experience primarily lies in server-side development, so the insights in this article might be more pertinent to server development scenarios. If the mobile scenario is different, you are welcome to discuss it in the comments.

runBlocking starts a coroutine and blocks the current thread until that coroutine ends.

Developers used to Java threading tend to assume that runBlocking executes its code block asynchronously on a new thread, which is not the case. If no parameter is passed to runBlocking, the current thread is used by default:

fun main() {

println("External Thread name: ${Thread.currentThread().name}")

runBlocking {

println("Inner Thread name: ${Thread.currentThread().name}")

}

}

The output is as follows:

External Thread name: main

Inner Thread name: main

If launch/async is used without parameters, it also runs on the current main thread:

runBlocking {

val result = async {

println("async Thread name: ${Thread.currentThread().name}")

1 + 1

}

// Complete the calculation of 1+1 in another coroutine

val intRes = result.await()

println("result:$intRes, thread: ${Thread.currentThread().name}")

}Print the results.

async Thread name: main

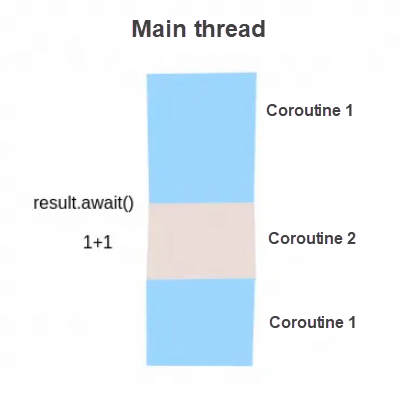

result:2, thread: mainFrom the perspective of thread thinking, it is easy to mistakenly think that the above code will deadlock. In fact, it will not, because the await will not block the thread, but directly use the main thread to continue to run the code block in the async. The entire scheduling process is as follows:

Therefore, for runBlocking/launch/async without parameters, no matter how you use them, they won't cause a deadlock. For example, the first example above looks complicated but will not deadlock:

// Always in the current thread. There is no thread switching at all and of course, there will be no deadlock.

runBlocking {

runBlocking {

runBlocking {

runBlocking {

runBlocking {

println("Hello Coroutine")

}

}

}

}

}

Output:

Hello Coroutine

Although there is no deadlock, everything runs on a single thread, so I/O-intensive tasks cannot be sped up by running them in parallel.

To execute asynchronously, pass a parameter to runBlocking. Commonly used parameters are Dispatchers.Default and Dispatchers.IO:

println("current thread:${Thread.currentThread().name}")

runBlocking(Dispatchers.Default) {

println("Default thread:${Thread.currentThread().name}")

}

runBlocking(Dispatchers.IO) {

println("IO thread:${Thread.currentThread().name}")

}Printout:

current thread:main

Default thread:DefaultDispatcher-worker-1

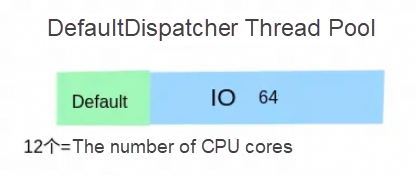

IO thread:DefaultDispatcher-worker-1runBlocking is no longer running on the main thread, but on a DefaultDispatcher thread pool built into Kotlin. It is strange that even though I used two different Dispatchers of Default and I/O, but it ended up executing on the same thread. This indicates an ambiguous relationship between them.

Default and I/O actually allocate threads from the same thread pool, and each takes a share of it for its own use: by default, 64 threads are for I/O (or as many threads as there are CPU cores, if that number is larger), and as many threads as there are CPU cores are for Default. So the DefaultDispatcher thread pool can hold at most 64 + (number of CPU cores) threads. For example, my personal computer has 12 cores, so it can have at most 64 + 12 = 76 threads.

The design idea is that Default serves CPU-intensive tasks. For such tasks the ideal concurrency equals the number of CPU cores, and anything higher only adds context-switching overhead. As the name implies, I/O serves I/O-intensive tasks. Such tasks tolerate higher concurrency, and the default is 64.
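The arithmetic above can be written down as a small helper. This is an illustrative sketch, not the library's internal sizing logic: `maxSharedPoolThreads` is a hypothetical name, and the two limits are the defaults documented for Dispatchers.Default and Dispatchers.IO.

```kotlin
// Illustrative arithmetic only; maxSharedPoolThreads is a hypothetical helper.
fun maxSharedPoolThreads(cores: Int = Runtime.getRuntime().availableProcessors()): Int {
    // Dispatchers.Default: one thread per CPU core, but at least two (documented default).
    val defaultLimit = maxOf(cores, 2)
    // Dispatchers.IO: 64 threads or the number of cores, whichever is larger (documented default).
    val ioLimit = maxOf(64, cores)
    return defaultLimit + ioLimit
}

fun main() {
    println(maxSharedPoolThreads(12)) // 76, matching the 12-core example
    println(maxSharedPoolThreads())   // depends on the machine
}
```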

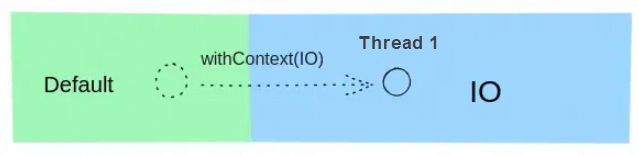

Since the DefaultDispatcher thread pool is divided into two parts that are used separately, why put them in one thread pool at all? Because Kotlin can then switch a coroutine between I/O and Default on the current thread, which reduces the switching overhead. Use the withContext method to move a task from Default to I/O without switching threads:

runBlocking(Dispatchers.Default) {

println("default thread name ${Thread.currentThread().name}")

withContext(Dispatchers.IO) {

println("io thread name ${Thread.currentThread().name}")

}

}

The output is as follows:

default thread name DefaultDispatcher-worker-1

io thread name DefaultDispatcher-worker-1

Therefore, the previous figure isn't completely accurate. No part of the DefaultDispatcher pool is dedicated to Default, and no part is dedicated to I/O. Threads are like supermarket cashiers who serve customers one by one, whoever they are.

The only limit is on the number of coroutines running concurrently: at most 64 I/O coroutines can run at the same time, and at most as many Default coroutines as there are CPU cores. The same thread may run a Default coroutine at one moment and an I/O coroutine the next.
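The concurrency limit can be observed with a small experiment. The sketch below assumes default dispatcher settings; the helper name `peakIoConcurrency` and its parameters are made up for illustration. It launches more blocking tasks than the limit allows and records how many were running at once.

```kotlin
import kotlinx.coroutines.Dispatchers
import kotlinx.coroutines.launch
import kotlinx.coroutines.runBlocking
import java.util.concurrent.atomic.AtomicInteger

// Hypothetical helper: returns the highest number of tasks observed running at the same time.
fun peakIoConcurrency(tasks: Int = 200, blockMs: Long = 100): Int {
    val running = AtomicInteger(0)
    val peak = AtomicInteger(0)
    runBlocking {
        repeat(tasks) {
            launch(Dispatchers.IO) {
                val now = running.incrementAndGet()
                peak.updateAndGet { prev -> maxOf(prev, now) }
                // Blocking on purpose, so that each task holds on to its thread.
                Thread.sleep(blockMs)
                running.decrementAndGet()
            }
        }
    }
    return peak.get()
}

fun main() {
    println("peak concurrent I/O tasks: ${peakIoConcurrency()}")
}
```

On a machine with at most 64 cores, the reported peak should not exceed 64, even though 200 tasks were submitted.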

Does sharing one thread pool mean poor isolation between Default and I/O tasks? No. Kotlin isolates them well, and from the caller's point of view there might as well be two dedicated thread pools, one for Default and one for I/O.

For example, once all 64 I/O slots are taken, a coroutine running on Default cannot switch to I/O either, even with withContext:

val threadPool = Executors.newFixedThreadPool(64)

// Block 64 I/O threads

repeat(64) {

threadPool.submit {

runBlocking(Dispatchers.IO) {

// In the coroutine, delay should be used instead of sleep. Here, the wrong approach is taken for demonstration purposes.

Thread.sleep(Long.MAX_VALUE)

}

}

}

runBlocking(Dispatchers.Default) {

println("in default thread ${Thread.currentThread().name}")

withContext(Dispatchers.IO) {

// Never printed, because no I/O slot can be acquired.

println("in io thread ${Thread.currentThread().name}")

}

}Printout:

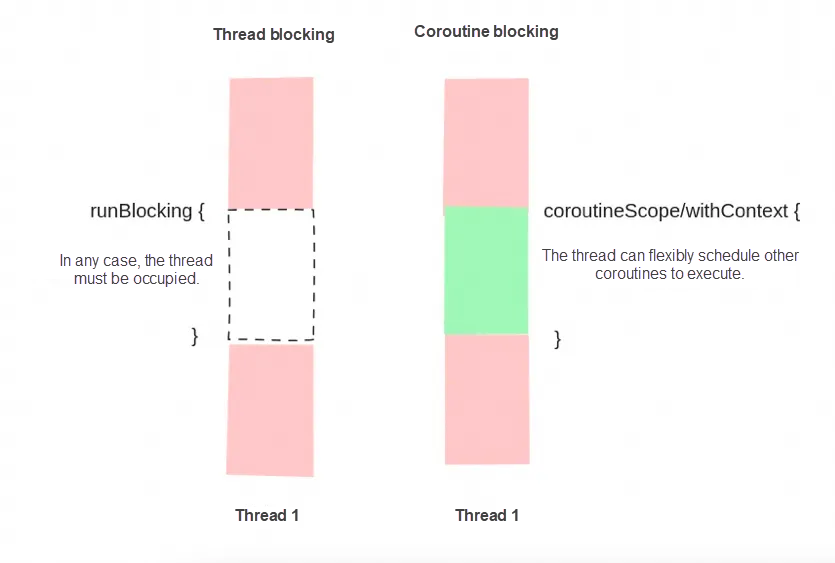

in default thread DefaultDispatcher-worker-1In Kotlin, there is also an API similar to runBlocking, called coroutineScope, which also starts a coroutine to run a code block and waits for it to end. The difference is:

• coroutineScope is a suspend function and can only be used in the context of a coroutine (such as a runBlocking code block or other suspend function);

• runBlocking blocks the thread, whereas coroutineScope only suspends the coroutine.

For example, the code at the beginning with the risk of deadlock:

runBlocking(Dispatchers.IO) {

runBlocking {

launch (Dispatchers.IO) {

println("hello coroutine")

}

}

}

Changing it to coroutineScope solves the problem:

runBlocking(Dispatchers.IO) {

coroutineScope {

launch (Dispatchers.IO) {

println("hello coroutine")

}

}

}

An experiment shows that it does not deadlock:

// Use the traditional Java thread pool to simulate 64 requests.

val threadPool = Executors.newFixedThreadPool(64)

repeat(64) {

threadPool.submit {

runBlocking(Dispatchers.IO) {

println("hello runBlocking $it")

Thread.sleep(5000)

coroutineScope {

launch (Dispatchers.IO) {

// Print it after 5 sec.

println("hello launch $it")

}

}

}

}

}

runBlocking(Dispatchers.IO) {

// Print it out smoothly.

println("hello runBlocking2")

}

Why does this solve the problem? Because runBlocking blocks the thread while it waits, whereas coroutineScope only suspends the coroutine, so the thread is free to run the launched child.
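The difference can be reproduced on a much smaller scale with a dispatcher that owns a single thread. The following is a minimal sketch under stated assumptions: `finishesInTime` is a hypothetical helper, and a one-thread executor stands in for an exhausted pool so that the effect shows up with one request instead of 64.

```kotlin
import kotlinx.coroutines.CoroutineScope
import kotlinx.coroutines.asCoroutineDispatcher
import kotlinx.coroutines.async
import kotlinx.coroutines.coroutineScope
import kotlinx.coroutines.launch
import kotlinx.coroutines.runBlocking
import kotlinx.coroutines.withTimeoutOrNull
import java.util.concurrent.Executors

// Hypothetical helper: runs a parent coroutine on a one-thread dispatcher and
// reports whether it finished within timeoutMs.
fun finishesInTime(nestedRunBlocking: Boolean, timeoutMs: Long = 1000): Boolean {
    val executor = Executors.newSingleThreadExecutor()
    val single = executor.asCoroutineDispatcher()
    try {
        val parent = CoroutineScope(single).async {
            if (nestedRunBlocking) {
                // Blocks the only thread while waiting for a child that needs that same thread.
                runBlocking { launch(single) { } }
            } else {
                // Suspends instead, so the only thread is free to run the child.
                coroutineScope { launch(single) { } }
            }
        }
        // Wait from the calling thread and give up after timeoutMs.
        return runBlocking { withTimeoutOrNull(timeoutMs) { parent.join() } != null }
    } finally {
        // Interrupts the worker if it is still blocked, so the sketch itself does not hang.
        executor.shutdownNow()
    }
}

fun main() {
    println("coroutineScope finished: ${finishesInTime(nestedRunBlocking = false)}")
    println("runBlocking finished:    ${finishesInTime(nestedRunBlocking = true)}")
}
```

The coroutineScope variant completes, while the nested runBlocking variant only ends because the helper gives up after the timeout.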

withContext, mentioned above, behaves like coroutineScope, except that it also supports switching the coroutine context.

Thread.sleep and delay differ in the same way: Thread.sleep blocks the thread, whereas delay only suspends the coroutine.
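A small single-threaded experiment makes this visible. In the sketch below, `eventOrder` is a hypothetical helper: it launches a child inside runBlocking and then pauses the parent either with delay or with Thread.sleep, recording which of the two gets to run first.

```kotlin
import kotlinx.coroutines.delay
import kotlinx.coroutines.launch
import kotlinx.coroutines.runBlocking

// Hypothetical helper: returns the order in which parent and child recorded their events.
fun eventOrder(useDelay: Boolean): List<String> {
    val events = mutableListOf<String>()
    runBlocking {
        // The child is queued on the same thread and cannot start until the parent gives the thread up.
        launch { events += "child" }
        if (useDelay) delay(50) else Thread.sleep(50)
        events += "parent"
    }
    return events
}

fun main() {
    println(eventOrder(useDelay = true))  // [child, parent]
    println(eventOrder(useDelay = false)) // [parent, child]
}
```

With delay the parent suspends and the thread runs the child in the meantime. With Thread.sleep the thread is held by the parent, so the child only runs after the parent's block has finished.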

Seen this way, runBlocking is an oddity. If you fully embrace coroutines, in theory you never need to think at the thread level. In Go, for example, which has coroutines built in, I have never come across thread-level concepts. However, the JVM ecosystem carries a lot of baggage, and a large amount of existing code is written against threads, so Kotlin provides this method. The documentation also describes it as designed to bridge regular blocking code to code written in suspending style [1].

I believe the official Kotlin team sees it the same way and relies on programmers to avoid it consciously. To pause and wait for a block of code to finish, choose the method that fits the scenario:

| Scenario | Method |
| --- | --- |
| Thread Context | runBlocking |
| Coroutine Context | coroutineScope/withContext |

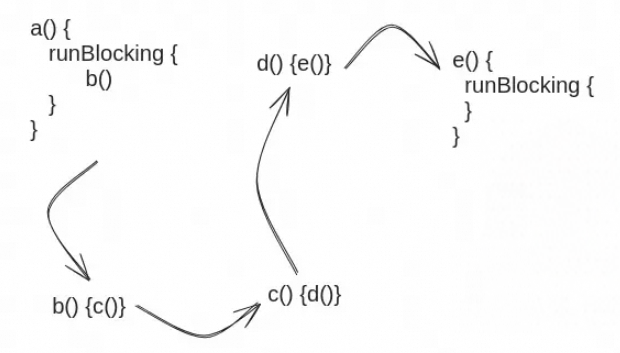
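As a sketch of how the two rows of the table can coexist in one code base, the logic can live in a suspend function that waits with coroutineScope, with a thin runBlocking wrapper only at the boundary to thread-based callers. The function names and the fake `user-$id` lookup are assumptions made for illustration.

```kotlin
import kotlinx.coroutines.async
import kotlinx.coroutines.awaitAll
import kotlinx.coroutines.coroutineScope
import kotlinx.coroutines.runBlocking

// Coroutine context: suspend and wait for the children with coroutineScope.
suspend fun loadNames(ids: List<Int>): List<String> = coroutineScope {
    ids.map { id -> async { "user-$id" } }.awaitAll()
}

// Thread context: runBlocking is used only here, at the boundary with blocking code.
fun loadNamesBlocking(ids: List<Int>): List<String> = runBlocking { loadNames(ids) }

fun main() {
    println(loadNamesBlocking(listOf(1, 2, 3))) // [user-1, user-2, user-3]
}
```

Code that is already inside a coroutine calls `loadNames` directly and never needs the blocking wrapper.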

This is easy in theory but hard in practice. Real code nests and reuses functions layer upon layer, and a runBlocking call may be hiding anywhere in those layers.

In the article How I Fell in Kotlin's RunBlocking Deadlock Trap, and How You Can Avoid It [2], the author suggests banning runBlocking from the project entirely. That means the project must fully embrace coroutines and use suspend methods right from the entry point, which requires support from the framework.

Spring WebFlux supports declaring Controller methods as suspend directly [3]:

@RestController

class UserController(private val userRepository: UserRepository) {

@GetMapping("/{id}")

suspend fun findOne(@PathVariable id: String): User? {

//....

}

}

However, most of Alibaba's applications expose HSF interfaces instead of HTTP, and HSF does not currently support provider methods declared as suspend. HSF does support asynchronous calls, though, so a wrapper can be built on top of them.
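Such a wrapper could look roughly like the following sketch. The HSF API is not shown in this article, so a CompletableFuture stands in for the asynchronous call: `fakeRpcAsync`, `awaitResult`, and `callRpc` are hypothetical names, not HSF functions.

```kotlin
import kotlinx.coroutines.runBlocking
import kotlinx.coroutines.suspendCancellableCoroutine
import java.util.concurrent.CompletableFuture
import kotlin.coroutines.resume
import kotlin.coroutines.resumeWithException

// Hypothetical stand-in for an asynchronous RPC call; this is not the HSF API.
fun fakeRpcAsync(request: Int): CompletableFuture<Int> =
    CompletableFuture.supplyAsync { request * 2 }

// Adapts a future-based asynchronous call to a suspend function.
suspend fun <T> CompletableFuture<T>.awaitResult(): T {
    val future = this
    return suspendCancellableCoroutine { cont ->
        future.whenComplete { value, error ->
            if (error != null) cont.resumeWithException(error) else cont.resume(value)
        }
        // Propagate coroutine cancellation to the underlying call.
        cont.invokeOnCancellation { future.cancel(true) }
    }
}

suspend fun callRpc(request: Int): Int = fakeRpcAsync(request).awaitResult()

fun main() = runBlocking {
    println(callRpc(21)) // 42
}
```

kotlinx.coroutines also ships a future integration with a similar await extension. The hand-written adapter is shown only to make the mechanism visible: the coroutine suspends while the call is in flight, and no thread is blocked.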

Although the biggest advantage of coroutines is non-blocking I/O, most applications do not need that level of performance.

In that case, we can simply write coroutine code the same way we would write thread code. Suppose a method calls an interface asynchronously ten times and returns only after all the calls have finished. How would we write it with traditional threads?

public class ThreadExample {

private final static Executor EXECUTOR = Executors.newFixedThreadPool(64);

public void example(String[] args) throws InterruptedException {

CountDownLatch cd = new CountDownLatch(10);

for (int i = 0; i < 10; i++) {

EXECUTOR.execute(() -> {

try {

invokeRpc();

} finally {

// Count down even if the call fails, so that await() cannot hang.

cd.countDown();

}

});

}

// Wait for 10 parallel tasks to end and return.

cd.await();

}

}

The traditional thread-based approach has the following characteristics:

• Each kind of task has its own dedicated thread pool that nothing else shares, so code further down the call chain never requests a thread from this pool again, and no deadlock can occur.

• The current thread is blocked, waiting for the other ten threads to finish.

Both points can also be achieved with coroutines:

• Use asCoroutineDispatcher to turn a thread pool into a Dispatcher dedicated to the current task, and pass it to launch.

• runBlocking without parameters runs on the current thread by default, which gives a similar effect to CountDownLatch.

class CoroutineExample {

companion object {

val THREAD_POOL = Executors.newFixedThreadPool(64).asCoroutineDispatcher()

}

fun example() {

runBlocking {

repeat(10) {

launch(THREAD_POOL) {

invokeRpc()

}

}

}

}

}

This way, no deadlock occurs regardless of whether runBlocking is nested upstream or downstream, because coroutines are used here merely as syntactic sugar for threads.

Finally, let’s fix a deadlock problem:

fun main() {

// Use the traditional Java thread pool to simulate 64 requests.

val threadPool = Executors.newFixedThreadPool(64)

repeat(64) {

threadPool.submit {

runBlocking {

// Still on the calling thread here (a worker thread of threadPool), not yet in the DefaultDispatcher thread pool.

println("hello runBlocking $it")

launch(Dispatchers.IO) {

// Because of Dispatchers.IO, it has already entered the DefaultDispatcher thread pool.

// If runBlocking is nested downstream, there is a deadlock risk.

Thread.sleep(5000)

// Hide the nested runBlocking in the child method to make it more undetectable.

subTask(it)

}

}

}

}

Thread.sleep(5000)

runBlocking(Dispatchers.IO) {

// Other coroutines cannot be executed, and the following line will never be printed.

println("hello runBlocking2")

}

}

fun subTask(i: Int) {

runBlocking {

launch (Dispatchers.IO) {

// Because of deadlock, the following line will never be printed.

println("hello launch $i")

}

}

}

Following the principles above, the problem can be fixed like this:

val TASK_THREAD_POOL = Executors.newFixedThreadPool(20).asCoroutineDispatcher()

fun main() {

// Use the traditional Java thread pool to simulate 64 requests

val threadPool = Executors.newFixedThreadPool(64)

repeat(64) {

threadPool.submit {

runBlocking {

println("hello runBlocking $it")

launch(TASK_THREAD_POOL) {

Thread.sleep(5000)

subTask2(it)

}

}

}

}

Thread.sleep(5000)

runBlocking(TASK_THREAD_POOL) {

// Successfully print

println("hello runBlocking2")

}

}

val SUB_TASK_THREAD_POOL = Executors.newFixedThreadPool(20).asCoroutineDispatcher()

fun subTask2(i: Int) {

runBlocking {

launch (SUB_TASK_THREAD_POOL) {

// Successfully print

println("hello launch $i")

}

}

}

Although each pool here has fewer threads than the 64 that DefaultDispatcher reserves for I/O, the code above does not deadlock.

[1] https://kotlinlang.org/api/kotlinx.coroutines/kotlinx-coroutines-core/kotlinx.coroutines/run-blocking.html

[2] https://betterprogramming.pub/how-i-fell-in-kotlins-runblocking-deadlock-trap-and-how-you-can-avoid-it-db9e7c4909f1

[3] https://spring.io/blog/2019/04/12/going-reactive-with-spring-coroutines-and-kotlin-flow

Disclaimer: The views expressed herein are for reference only and don't necessarily represent the official views of Alibaba Cloud.
