By Jiachun

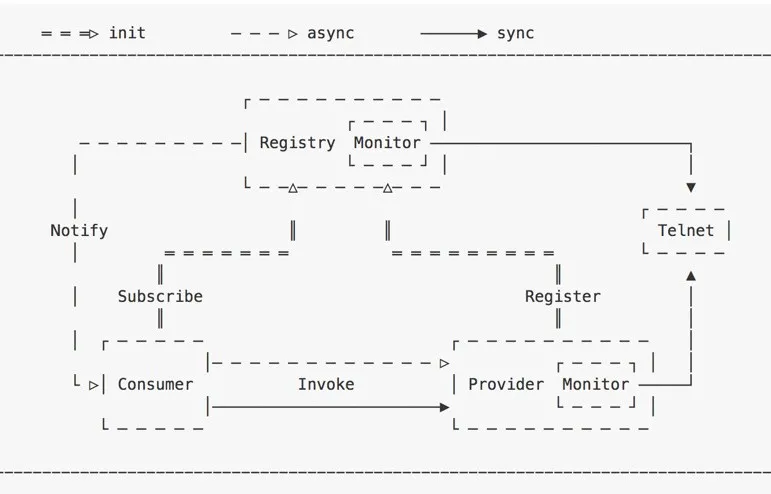

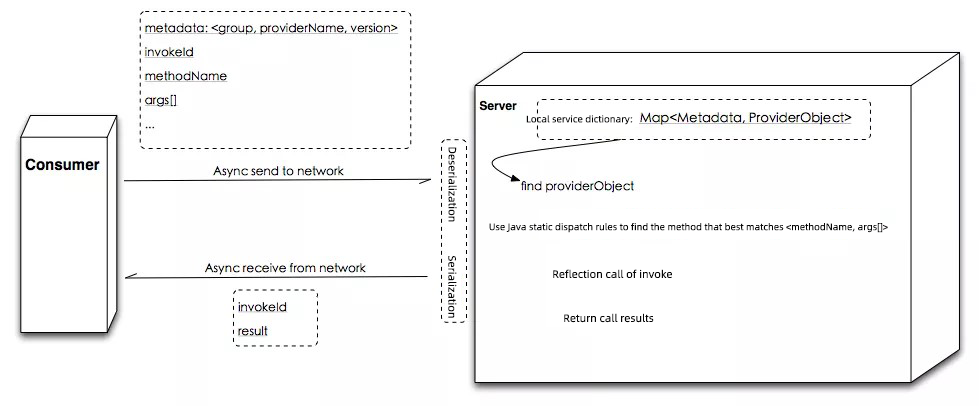

The client serializes the metadata <group, providerName, version>, methodName, and args[] into a byte array and sends it to the target address over the network.

The server looks up the providerObject in the local service dictionary by <group, providerName, version>, calls the specified method through reflection based on <methodName, args[]>, and serializes the method's return value into a byte array to return to the client.

The process above is transparent to the method caller, and everything looks like a local call.

Important concept: the RPC triple <ID, Request, Response>.

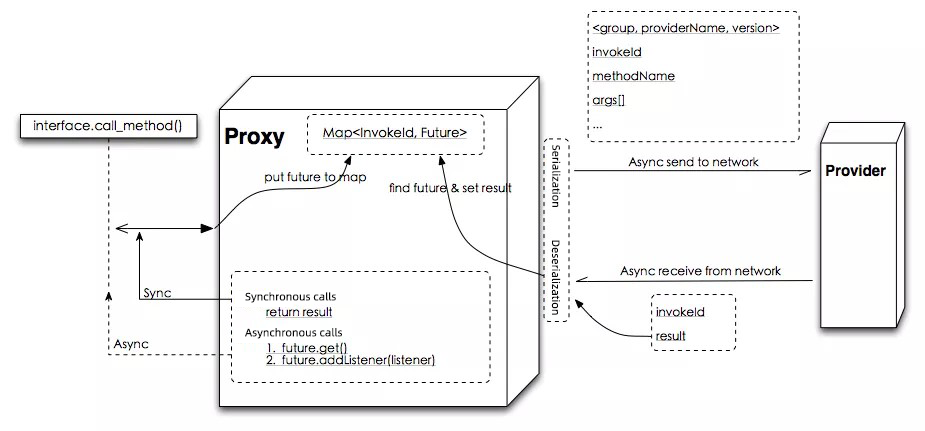

Note: In Netty 4.x, thread contention can be better avoided by replacing the global Map<InvokeId, Future> with one Map<InvokeId, Future> per I/O thread (worker).
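To make the <ID, Request, Response> triple concrete, here is a minimal sketch of the client-side bookkeeping, assuming every response carries back the invoke ID of its request. PendingCalls and its methods are hypothetical names. It uses a single global ConcurrentHashMap, which is the baseline that the note above proposes to shard per I/O thread.

```java
import java.util.Map;
import java.util.concurrent.CompletableFuture;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.TimeoutException;
import java.util.concurrent.atomic.AtomicLong;

// Hypothetical sketch: correlates each response with its request through the invoke ID.
final class PendingCalls {
    private static final AtomicLong ID_GENERATOR = new AtomicLong();

    // Global map shared by all threads (the baseline; the note suggests one map per I/O thread).
    private final Map<Long, CompletableFuture<Object>> futures = new ConcurrentHashMap<>();

    /** Registers a pending call and returns the invoke ID to put into the request. */
    long register(CompletableFuture<Object> future) {
        long invokeId = ID_GENERATOR.incrementAndGet();
        futures.put(invokeId, future);
        return invokeId;
    }

    /** Completes the call matching the response's invoke ID; false if unknown or already done. */
    boolean onResponse(long invokeId, Object response) {
        CompletableFuture<Object> future = futures.remove(invokeId);
        return future != null && future.complete(response);
    }

    /** Fails the call on timeout and removes it so the map does not grow forever. */
    boolean onTimeout(long invokeId) {
        CompletableFuture<Object> future = futures.remove(invokeId);
        return future != null && future.completeExceptionally(new TimeoutException("invokeId=" + invokeId));
    }

    int pendingCount() {
        return futures.size();
    }
}
```

A real framework would also schedule the timeout path (for example from a timer), so that entries whose responses never arrive are removed.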

1) metadata:<group, providerName, version>

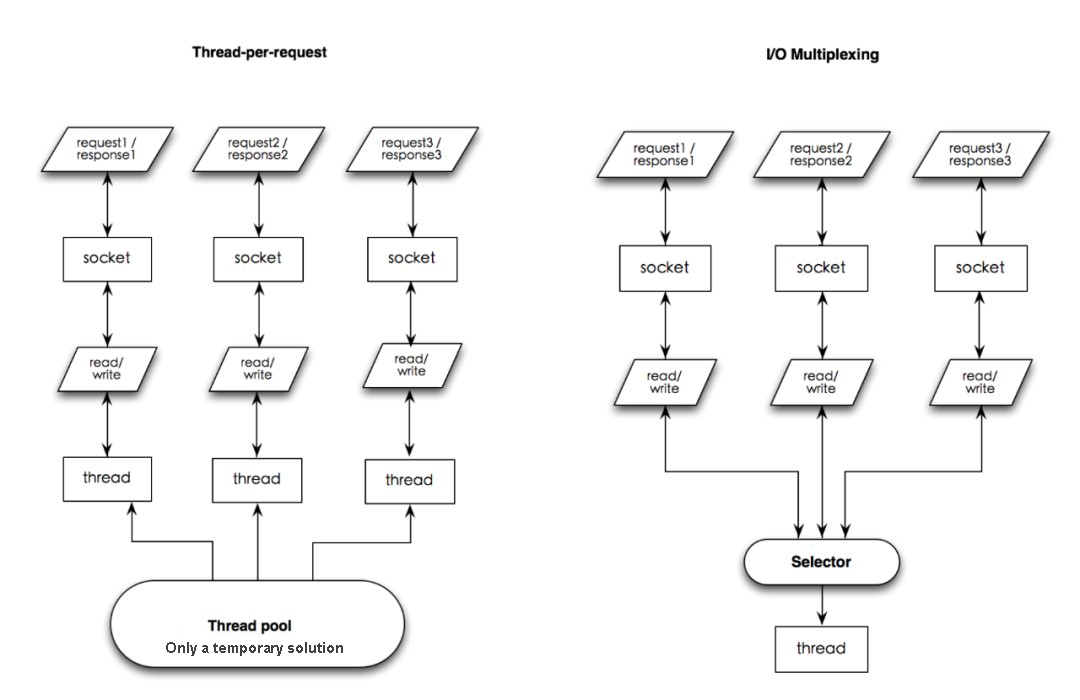

2) methodName

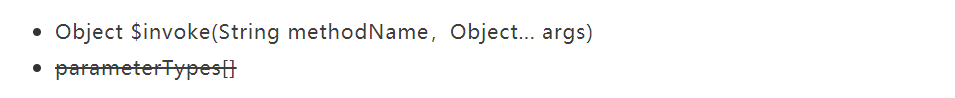

3) Is parameterTypes[] necessary?

a) What's the problem?

ClassLoader.loadClass() has to be called during deserialization.

b) Can they be solved?

c) args[]

d) Other: traceId, appName ...

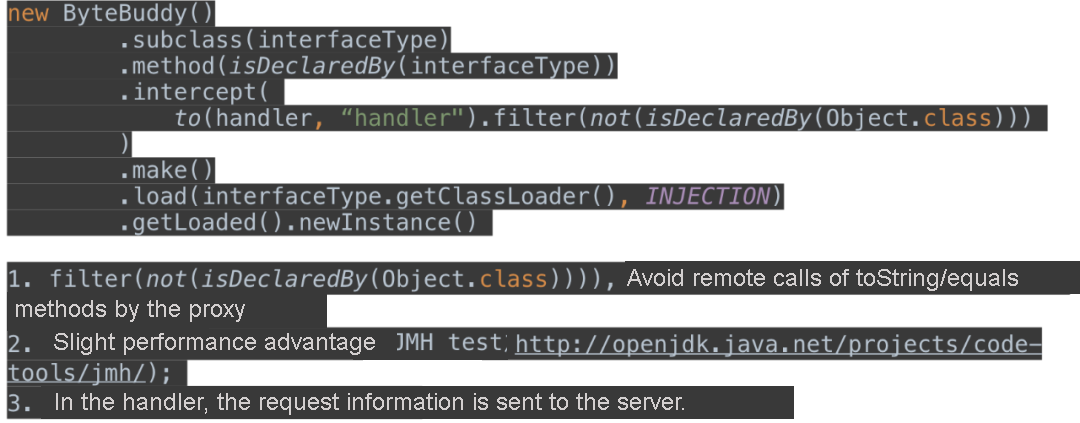

1) What does the Proxy do?

2) What methods can be used to create a Proxy?

3) What are the most important aspects?

Handling of toString, equals, hashCode, and other methods.

4) Recommendation (Byte Buddy):

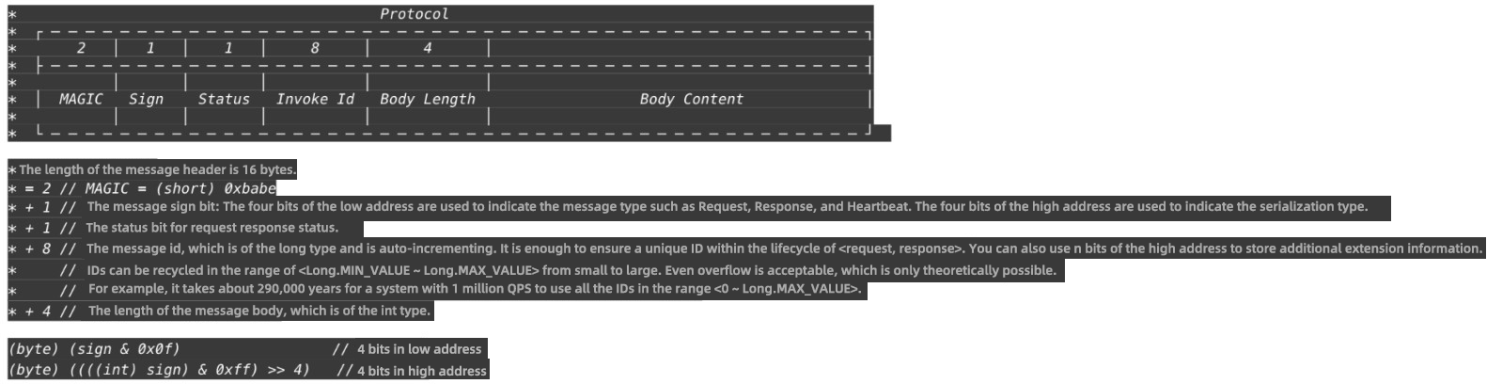
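Byte Buddy is not part of the JDK, so the sketch below swaps in the JDK's own java.lang.reflect.Proxy to show what an RPC proxy typically does: it turns a method call into (methodName, args) and hands it to a transport, while answering toString, equals, and hashCode locally. RpcProxyFactory and the BiFunction standing in for the transport are hypothetical.

```java
import java.lang.reflect.InvocationHandler;
import java.lang.reflect.Proxy;
import java.util.function.BiFunction;

// Sketch with the JDK dynamic proxy (swapped in for Byte Buddy, which the text recommends).
final class RpcProxyFactory {
    /**
     * Creates a client-side proxy for iface. The hypothetical remoteInvoker stands in for
     * the transport: it receives (methodName, args) and returns the decoded result.
     */
    static <T> T create(Class<T> iface, BiFunction<String, Object[], Object> remoteInvoker) {
        InvocationHandler handler = (proxy, method, args) -> {
            // Answer Object methods locally; they must never trigger a network call.
            if (method.getDeclaringClass() == Object.class) {
                switch (method.getName()) {
                    case "toString":
                        return iface.getName() + "$RpcProxy@" + Integer.toHexString(System.identityHashCode(proxy));
                    case "hashCode":
                        return System.identityHashCode(proxy);
                    case "equals":
                        return proxy == args[0];
                    default:
                        throw new UnsupportedOperationException(method.getName());
                }
            }
            // Everything else becomes a remote call described by methodName and args[].
            return remoteInvoker.apply(method.getName(), args == null ? new Object[0] : args);
        };
        ClassLoader loader = iface.getClassLoader() != null
                ? iface.getClassLoader() : RpcProxyFactory.class.getClassLoader();
        return iface.cast(Proxy.newProxyInstance(loader, new Class<?>[] {iface}, handler));
    }
}
```

Handling the Object methods locally avoids, for example, a remote round trip every time the proxy is logged or used as a HashMap key.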

The protocol header carries the serializer type, so multiple serialization types are supported.
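As an illustration of a header that carries the serializer type, the following sketch encodes a hypothetical 16-byte layout with java.nio.ByteBuffer. The field order, the magic number 0xBABE, and the serializer codes are assumptions made for the example, not a format defined by this article.

```java
import java.nio.ByteBuffer;

// Hypothetical 16-byte header (layout is an assumption for illustration):
// magic(2) | serializerCode(1) | messageType(1) | invokeId(8) | bodyLength(4)
final class ProtocolHeader {
    static final short MAGIC = (short) 0xBABE;
    static final int LENGTH = 16;

    final byte serializerCode; // tells the receiver which deserializer to use for the body
    final byte messageType;    // e.g. request, response, heartbeat (codes are illustrative)
    final long invokeId;       // the ID of the <ID, Request, Response> triple
    final int bodyLength;      // number of body bytes that follow the header

    ProtocolHeader(byte serializerCode, byte messageType, long invokeId, int bodyLength) {
        this.serializerCode = serializerCode;
        this.messageType = messageType;
        this.invokeId = invokeId;
        this.bodyLength = bodyLength;
    }

    void writeTo(ByteBuffer out) {
        out.putShort(MAGIC).put(serializerCode).put(messageType).putLong(invokeId).putInt(bodyLength);
    }

    static ProtocolHeader readFrom(ByteBuffer in) {
        if (in.getShort() != MAGIC) {
            throw new IllegalArgumentException("not an RPC frame: bad magic");
        }
        byte serializerCode = in.get();
        byte messageType = in.get();
        long invokeId = in.getLong();
        int bodyLength = in.getInt();
        return new ProtocolHeader(serializerCode, messageType, invokeId, bodyLength);
    }
}
```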

Java SPI:

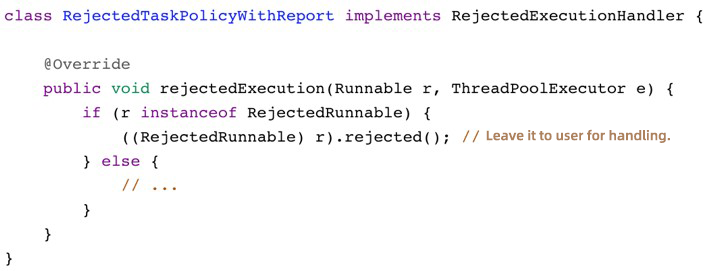

The failure of one thread pool does not affect other thread pools.

Many extensions need to start from here.

OpenTracing

An extension capability is necessary so that third-party throttling middleware can be integrated easily.
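One way to provide that extension capability is a small interface that the framework calls before each invocation, with a third-party throttling middleware supplying the implementation. In the sketch below, FlowController and TokenBucketFlowController are hypothetical names; the built-in token bucket is only a fallback and, for brevity, ignores the resource argument and uses one bucket for everything. The clock is injected so that the behavior is deterministic in tests.

```java
import java.util.function.LongSupplier;

/** Hypothetical extension point; a third-party throttling middleware would implement it. */
interface FlowController {
    /** Returns true if the call on this resource may proceed now. */
    boolean tryAcquire(String resource);
}

/** Minimal fallback: one token bucket shared by all resources (resource is ignored). */
final class TokenBucketFlowController implements FlowController {
    private final long capacity;
    private final long permitsPerSecond;
    private final LongSupplier nanoClock;
    private double tokens;
    private long lastRefillNanos;

    TokenBucketFlowController(long capacity, long permitsPerSecond, LongSupplier nanoClock) {
        this.capacity = capacity;
        this.permitsPerSecond = permitsPerSecond;
        this.nanoClock = nanoClock;
        this.tokens = capacity; // start with a full bucket
        this.lastRefillNanos = nanoClock.getAsLong();
    }

    @Override
    public synchronized boolean tryAcquire(String resource) {
        long now = nanoClock.getAsLong();
        // Refill proportionally to the elapsed time, never beyond capacity.
        double refill = (now - lastRefillNanos) * (double) permitsPerSecond / 1_000_000_000.0;
        tokens = Math.min(capacity, tokens + refill);
        lastRefillNanos = now;
        if (tokens >= 1.0) {
            tokens -= 1.0;
            return true;
        }
        return false;
    }
}
```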

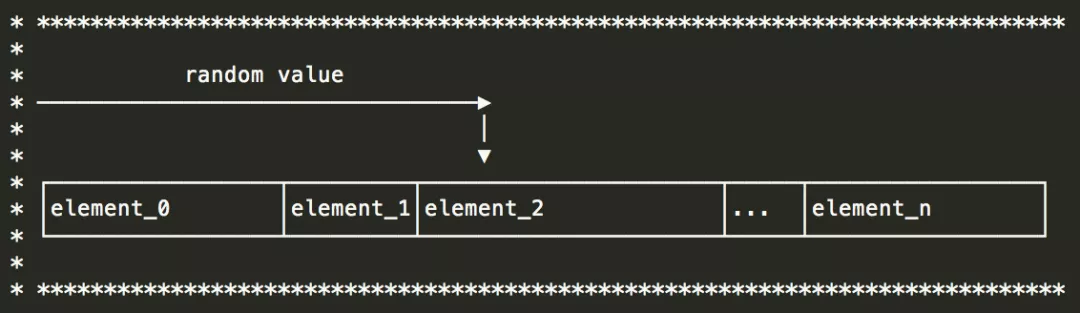

1) Weighted Random (Binary Search Instead of Linear Traversal)

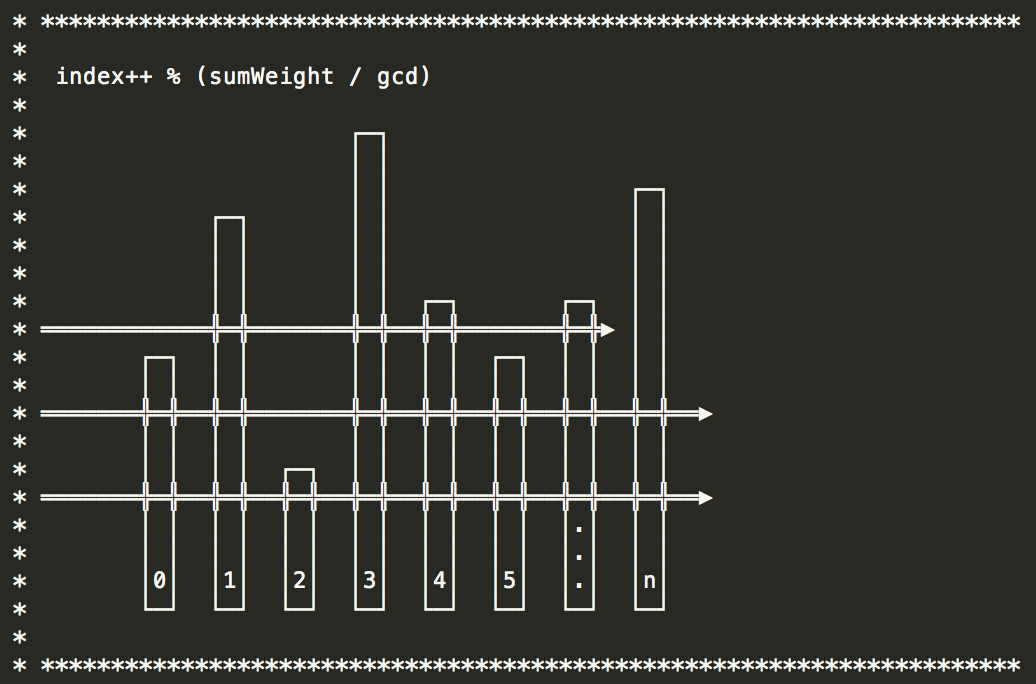
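The "binary search instead of traversal" idea can be sketched as follows: precompute the prefix sums of the weights once, draw a random number in [0, total), and binary-search for the first prefix sum that exceeds it. Selection is then O(log n) instead of an O(n) linear scan. WeightedRandomSelector is a hypothetical name.

```java
import java.util.concurrent.ThreadLocalRandom;

final class WeightedRandomSelector {
    private final int[] prefixSums; // prefixSums[i] = weights[0] + ... + weights[i]

    WeightedRandomSelector(int[] weights) {
        prefixSums = new int[weights.length];
        int sum = 0;
        for (int i = 0; i < weights.length; i++) {
            if (weights[i] < 0) throw new IllegalArgumentException("negative weight at " + i);
            sum += weights[i];
            prefixSums[i] = sum;
        }
        if (sum <= 0) throw new IllegalArgumentException("total weight must be positive");
    }

    /** Picks an index with probability weights[i] / total. */
    int select() {
        return indexFor(ThreadLocalRandom.current().nextInt(prefixSums[prefixSums.length - 1]));
    }

    /** Binary search: the first index whose prefix sum is greater than r, for r in [0, total). */
    int indexFor(int r) {
        int lo = 0, hi = prefixSums.length - 1;
        while (lo < hi) {
            int mid = (lo + hi) >>> 1;
            if (prefixSums[mid] > r) {
                hi = mid;
            } else {
                lo = mid + 1;
            }
        }
        return lo;
    }
}
```

With weights {1, 2, 3}, the prefix sums are {1, 3, 6}: a draw of 0 maps to node 0, draws 1 and 2 map to node 1, and draws 3 to 5 map to node 2.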

2) Weighted Round Robin (Greatest Common Divisor)
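The greatest-common-divisor variant of weighted round robin (the scheme popularized by LVS) can be sketched like this. The weight threshold starts at the maximum weight and drops by the GCD of all weights after every full pass, so a node with weight w is picked w / gcd times per cycle. GcdWeightedRoundRobin is a hypothetical name, and the synchronized next() is a simplification.

```java
final class GcdWeightedRoundRobin {
    private final int[] weights;
    private final int gcd;
    private final int maxWeight;
    private int index = -1;        // last selected position
    private int currentWeight = 0; // threshold a node's weight must reach to be picked

    GcdWeightedRoundRobin(int[] weights) {
        if (weights.length == 0) throw new IllegalArgumentException("no nodes");
        int g = 0, max = 0;
        for (int w : weights) {
            if (w <= 0) throw new IllegalArgumentException("weights must be positive");
            g = gcd(g, w);
            max = Math.max(max, w);
        }
        this.weights = weights.clone();
        this.gcd = g;
        this.maxWeight = max;
    }

    private static int gcd(int a, int b) {
        return b == 0 ? a : gcd(b, a % b);
    }

    /** Returns the index of the next node to call. */
    synchronized int next() {
        while (true) {
            index = (index + 1) % weights.length;
            if (index == 0) {
                // A full pass is done: lower the threshold by the GCD, wrapping to the max weight.
                currentWeight -= gcd;
                if (currentWeight <= 0) {
                    currentWeight = maxWeight;
                }
            }
            if (weights[index] >= currentWeight) {
                return index;
            }
        }
    }
}
```

For weights {4, 2, 2} the GCD is 2, and the selection sequence repeats as 0, 0, 1, 2, which preserves the 4:2:2 ratio.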

3) Minimum Load

4) Consistent Hash (Stateful Service Scenarios)
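For stateful services, a consistent hash ring keeps most keys on the same provider when providers join or leave. The sketch below uses a TreeMap (a red-black tree) as the ring, virtual nodes to even out the distribution, and the first four bytes of an MD5 digest as the hash. The hash choice and the class name ConsistentHashRing are assumptions, and collisions between virtual nodes are ignored for brevity.

```java
import java.nio.charset.StandardCharsets;
import java.security.MessageDigest;
import java.security.NoSuchAlgorithmException;
import java.util.Map;
import java.util.TreeMap;

final class ConsistentHashRing {
    private final TreeMap<Long, String> ring = new TreeMap<>(); // position -> node
    private final int virtualNodes;

    ConsistentHashRing(int virtualNodes) {
        this.virtualNodes = virtualNodes;
    }

    void addNode(String node) {
        for (int i = 0; i < virtualNodes; i++) {
            ring.put(hash(node + "#" + i), node);
        }
    }

    void removeNode(String node) {
        for (int i = 0; i < virtualNodes; i++) {
            ring.remove(hash(node + "#" + i));
        }
    }

    /** Routes a key to the first virtual node clockwise from the key's position. */
    String route(String key) {
        if (ring.isEmpty()) throw new IllegalStateException("no nodes");
        Map.Entry<Long, String> e = ring.ceilingEntry(hash(key));
        return (e != null ? e : ring.firstEntry()).getValue(); // wrap around the ring
    }

    /** Unsigned 32-bit position taken from the first four bytes of an MD5 digest. */
    private static long hash(String s) {
        try {
            byte[] d = MessageDigest.getInstance("MD5").digest(s.getBytes(StandardCharsets.UTF_8));
            return ((long) (d[0] & 0xFF) << 24) | ((d[1] & 0xFF) << 16) | ((d[2] & 0xFF) << 8) | (d[3] & 0xFF);
        } catch (NoSuchAlgorithmException e) {
            throw new IllegalStateException("MD5 not available", e);
        }
    }
}
```

Removing a node only remaps the keys that were routed to it; keys owned by the other nodes keep their provider.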

5) Others

Note: Warm-up logic is required.
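A common way to implement warm-up, assumed here rather than taken from the article, is to scale a provider's weight linearly with its uptime until a warm-up period has elapsed, so that a freshly started JVM (cold JIT, empty caches) receives less traffic at first. The class, method, and parameter names are hypothetical.

```java
final class WarmUp {
    /**
     * Effective weight of a provider that has been up for uptimeMillis, ramping
     * linearly to the configured weight over warmUpMillis (never below 1 while warming).
     */
    static int effectiveWeight(int weight, long uptimeMillis, long warmUpMillis) {
        if (weight <= 0) return 0;
        if (uptimeMillis >= warmUpMillis) return weight; // fully warmed up
        if (uptimeMillis <= 0) return 1;                // just started
        long scaled = (long) weight * uptimeMillis / warmUpMillis;
        return (int) Math.max(1, Math.min(scaled, weight));
    }
}
```

The result would be passed to the load balancer in place of the configured weight; for example, a provider with weight 100 and a 60-second warm-up period gets weight 50 after 30 seconds.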

1) Fail-Fast

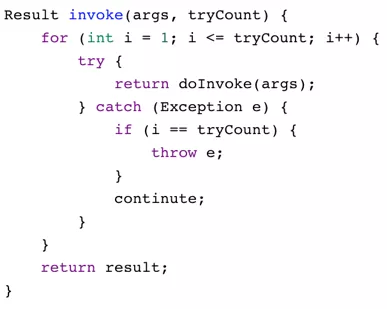

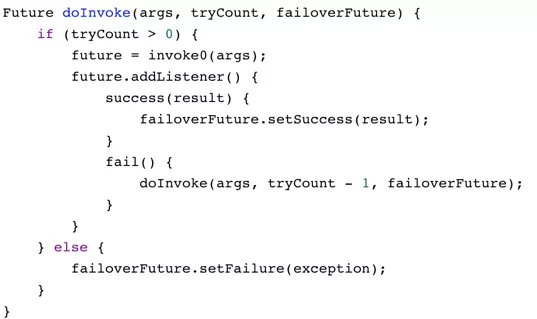

2) Failover

How do we handle asynchronous calls?
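For asynchronous calls, failover cannot be a simple retry loop around a blocking call, because the failure only shows up later, when the future completes. One approach, sketched below with CompletableFuture, is to chain the next attempt onto the failure of the previous one. AsyncFailover and the call function (assumed to send the request to one address and return its future) are hypothetical.

```java
import java.util.List;
import java.util.concurrent.CompletableFuture;
import java.util.function.Function;

final class AsyncFailover {
    /**
     * Calls addresses in order; when one attempt's future fails, the next address is
     * tried. The returned future fails only after every address has failed.
     */
    static <T> CompletableFuture<T> invoke(List<String> addresses,
                                           Function<String, CompletableFuture<T>> call) {
        if (addresses.isEmpty()) {
            CompletableFuture<T> failed = new CompletableFuture<>();
            failed.completeExceptionally(new IllegalArgumentException("no addresses"));
            return failed;
        }
        return attempt(addresses, 0, call);
    }

    private static <T> CompletableFuture<T> attempt(List<String> addresses, int i,
                                                    Function<String, CompletableFuture<T>> call) {
        // Note: an exception thrown synchronously by call.apply is not handled here.
        return call.apply(addresses.get(i))
                .handle((value, error) -> {
                    if (error == null) {
                        return CompletableFuture.completedFuture(value);
                    }
                    if (i + 1 < addresses.size()) {
                        return attempt(addresses, i + 1, call); // fail over to the next address
                    }
                    CompletableFuture<T> failed = new CompletableFuture<>();
                    failed.completeExceptionally(error);
                    return failed;
                })
                .thenCompose(Function.identity()); // flatten CompletableFuture<CompletableFuture<T>>
    }
}
```

No thread blocks while waiting for an attempt; the next address is contacted from the completion callback of the failed one.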

3) Fail-Safe

4) Fail-Back

5) Forking

6) Others

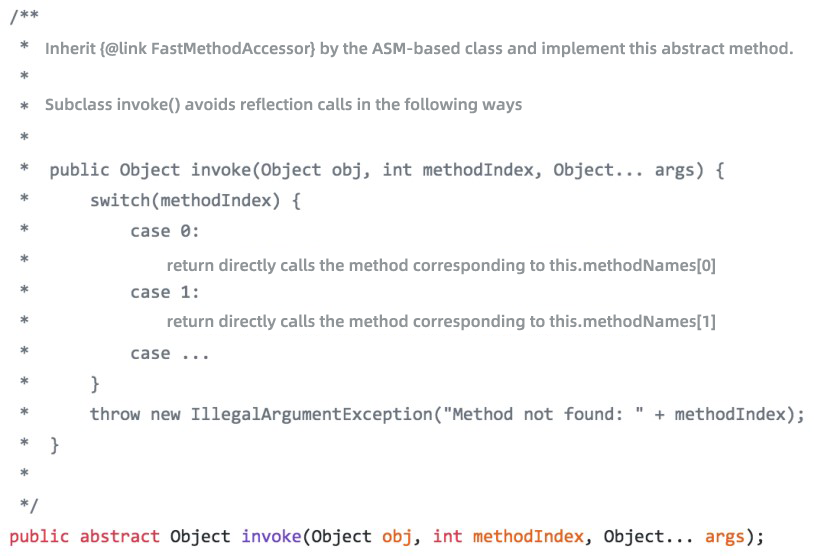

1) Write a FastMethodAccessor using ASM to replace the reflection call on the server.

2) Serialization/Deserialization

Serialize and deserialize in business threads to avoid occupying I/O threads:

loadClass has a serious lock contention problem, which can be observed through JMC.

Select an efficient serialization/deserialization framework:

Selecting a framework is only the first step. If the serialization framework falls short, extend and optimize it:

Without this optimization, data goes through an intermediate byte[]: byte[] -> off-heap memory when writing, and off-heap memory -> byte[] -> java object when reading. The optimization skips the byte[] step and reads from/writes to the off-heap memory directly, which requires extending the corresponding serialization framework:

Multiple writeBytes calls are merged into writeShort/writeInt/writeLong.

UnsafeNioBufInput reads from the off-heap memory directly, and UnsafeNioBufOutput writes to the off-heap memory directly.

3) Bind the I/O threads to CPUs.

4) Client coroutines: a client that makes synchronous blocking calls easily runs into a bottleneck. Implementation options:

| Name | Description |
|---|---|
| Kilim | Bytecode enhancement at compile time |
| Quasar Agent | Dynamic bytecode enhancement |
| ali_wisp | Implemented in the underlying layer of ali_jvm |

5) Netty Native Transport and PooledByteBufAllocator:

6) Release the I/O thread as soon as possible so that it can do its own work, and minimize thread context switching.

Poor Stability with Multiple Problems

EPollArrayWrapper.epollWait has a bug where empty polling drives CPU usage to 100%. Netty works around it by rebuilding the selector.

Some Disadvantages of NIO Code Implementation

1) Selector.selectedKeys() produces too much garbage.

Netty replaces, via reflection, the HashSet that sun.nio.ch.SelectorImpl uses to store selectedKeys with a structure backed by two arrays:

NIO code is synchronized in many places, for example when allocating a direct buffer and in Selector.wakeup():

Netty's PooledByteBuf has a front-end TLAB (thread-local allocation buffer) that effectively reduces lock contention.

(… fd_set in Windows, we can only compromise and simulate it with two TCP connections.) If there are too few wakeup calls, the select operation blocks unnecessarily. (If this is confusing, just use Netty directly, which has the corresponding optimization logic.)

2) fdToKey mapping

EPollSelectorImpl#fdToKey maintains the mapping from every connected fd (file descriptor) to its SelectionKey, and it is a HashMap.

Each worker thread has its own selector and therefore its own fdToKey. These fdToKeys roughly split all the connections among themselves.

3) On Linux, Selector is implemented with epoll in LT (level-triggered) mode.

4) Direct buffers are managed by the GC.

DirectByteBuffer.cleaner: this phantom reference is responsible for freeing the direct memory. DirectByteBuffer itself is just a shell. If the shell survives past the age threshold of the young generation and is promoted to the old generation, the direct memory behind it is released only when the old generation is collected, which is bad news.

When direct memory runs short, Bits.reserveMemory() -> { System.gc() } is called. First, the whole process is paused by the GC, and the code then sleeps for 100 milliseconds. If direct memory is still insufficient after the code wakes up, an OutOfMemoryError is thrown.

If the -XX:+DisableExplicitGC parameter is set, there will be unexpected trouble, because that System.gc() call then has no effect.

The alternative is Netty's UnpooledUnsafeNoCleanerDirectByteBuf: the Netty framework releases the memory promptly by maintaining a reference count.

EventLoop

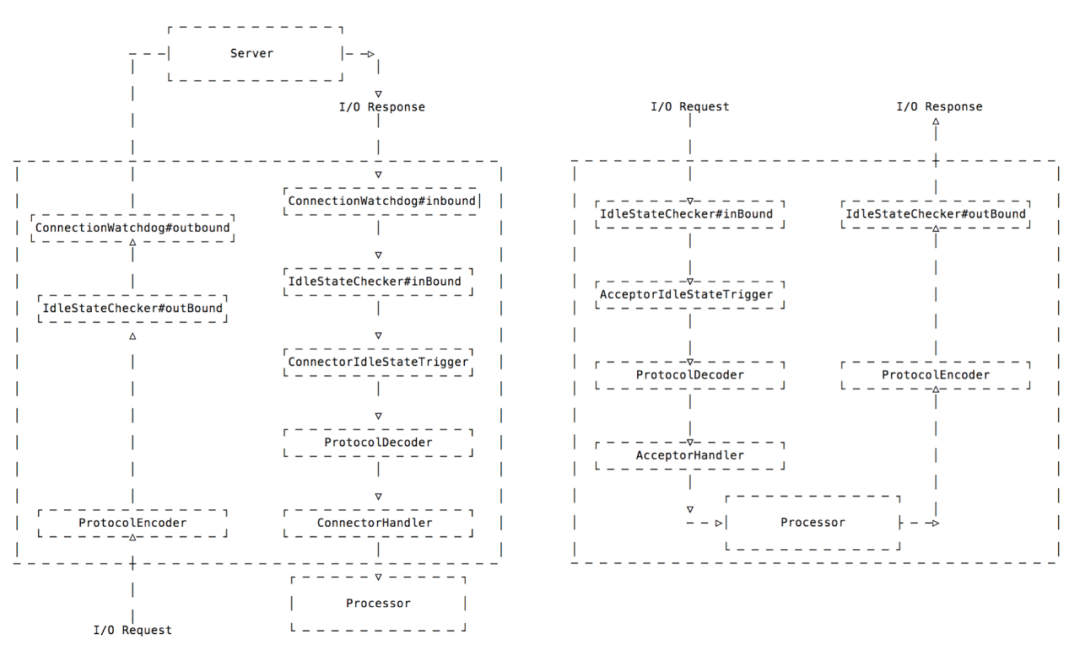

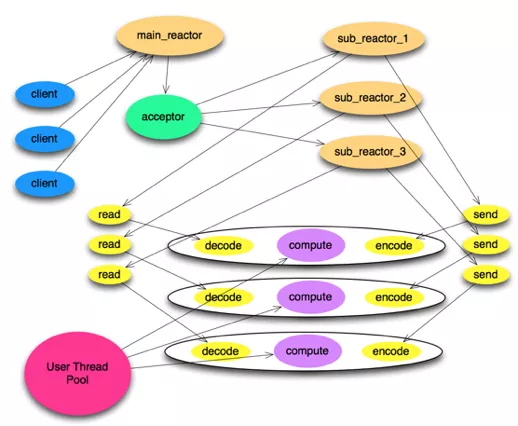

Boss: the mainReactor; Worker: the subReactor

Only one EventLoop needs to be included in BossEventLoopGroup, since only one can be used.

WorkerEventLoopGroup generally contains multiple EventLoops, typically twice the number of CPU cores. The most important thing is to find the best value for your scenario.

There are two kinds of channel: ServerChannel and Channel. ServerChannel corresponds to ServerSocketChannel, and Channel corresponds to a network connection.
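Each new Channel is bound to one EventLoop of the WorkerEventLoopGroup for its lifetime, and the default group picks that EventLoop round-robin. The sketch below shows such a chooser; it is similar in spirit to Netty's default chooser, which uses a bitmask instead of a modulo when the group size is a power of two. RoundRobinChooser is a hypothetical name, and plain strings stand in for EventLoops in the usage example.

```java
import java.util.concurrent.atomic.AtomicInteger;

final class RoundRobinChooser<E> {
    private final E[] loops;
    private final AtomicInteger idx = new AtomicInteger();
    private final boolean powerOfTwo;

    RoundRobinChooser(E[] loops) {
        if (loops.length == 0) throw new IllegalArgumentException("no event loops");
        this.loops = loops.clone();
        this.powerOfTwo = (loops.length & (loops.length - 1)) == 0;
    }

    /** Picks the EventLoop for the next registered Channel. */
    E next() {
        int i = idx.getAndIncrement();
        // The mask is cheaper than a modulo; floorMod keeps the index non-negative after overflow.
        return powerOfTwo ? loops[i & (loops.length - 1)] : loops[Math.floorMod(i, loops.length)];
    }
}
```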

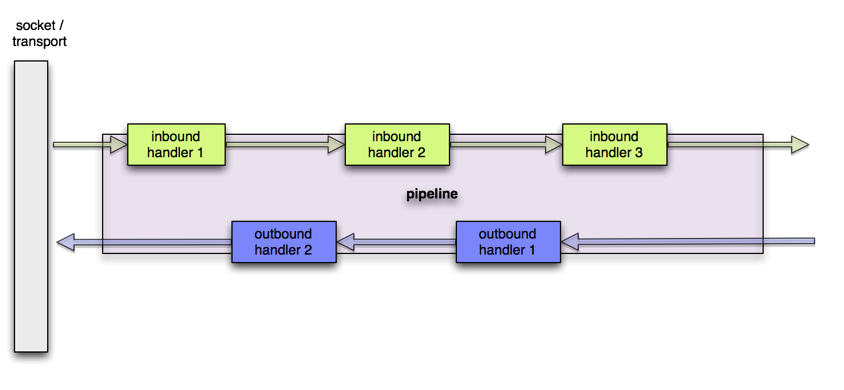

ChannelPipeline

Pooling & Reuse

PooledByteBufAllocator

… mpsc_queue while sacrificing a little bit of performance.

Recycler

… WeakOrderQueue and associated with the stack. If the stack is empty at the next pop, all WeakOrderQueues associated with the current stack are scanned first.

WeakOrderQueue is a linked list of multiple arrays. The default size of each array is 16.

Netty creates fewer objects and puts less pressure on the GC than NIO.
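The core idea of a thread-local object pool can be sketched in a few lines: each thread keeps its own stack of free objects, so get and recycle need no locks. This simplified ThreadLocalPool (a hypothetical name) deliberately omits what WeakOrderQueue solves in Netty's Recycler: here, an object recycled by a different thread simply lands on that thread's stack instead of going back to its owner.

```java
import java.util.ArrayDeque;
import java.util.function.Supplier;

final class ThreadLocalPool<T> {
    private final Supplier<T> factory;
    private final int maxPerThread;
    // Each thread owns its own stack, so get/recycle need no locks.
    private final ThreadLocal<ArrayDeque<T>> stacks = ThreadLocal.withInitial(ArrayDeque::new);

    ThreadLocalPool(Supplier<T> factory, int maxPerThread) {
        this.factory = factory;
        this.maxPerThread = maxPerThread;
    }

    /** Pops a pooled object from the current thread's stack, or creates a new one. */
    T get() {
        T obj = stacks.get().pollFirst();
        return obj != null ? obj : factory.get();
    }

    /**
     * Pushes obj onto the current thread's stack. Unlike Netty's Recycler, an object
     * recycled by a different thread is not handed back to the thread that allocated it.
     */
    void recycle(T obj) {
        ArrayDeque<T> stack = stacks.get();
        if (stack.size() < maxPerThread) {
            stack.push(obj); // beyond the cap, the object is simply left to the GC
        }
    }
}
```

Callers are expected to reset an object's state before recycling it.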

The following part describes some specific features for the optimization on Linux:

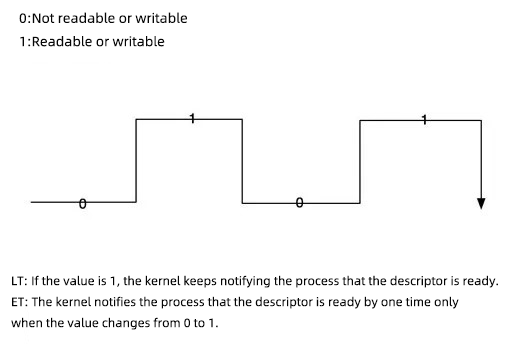

SO_REUSEPORT: port reuse. Multiple sockets are allowed to listen on the same IP address and port, and combining this with RPS/RFS improves performance further. RPS and RFS simulate multi-queue network interface cards (NICs) at the software layer and provide load balancing. This prevents packet-reception interrupts and NIC delivery from all landing on a single CPU core, which would hurt performance.

TCP_FASTOPEN: data can also be exchanged during the three-way handshake.

EDGE_TRIGGERED: epoll ET (edge-triggered) mode is supported.

select/poll

The fd_set has to be copied between user space and kernel space repeatedly.

Epoll

When epoll_wait is called, only the ready file descriptors are returned.

Concepts:

Readable:

Writable:

Diagram:

Three Epoll Methods

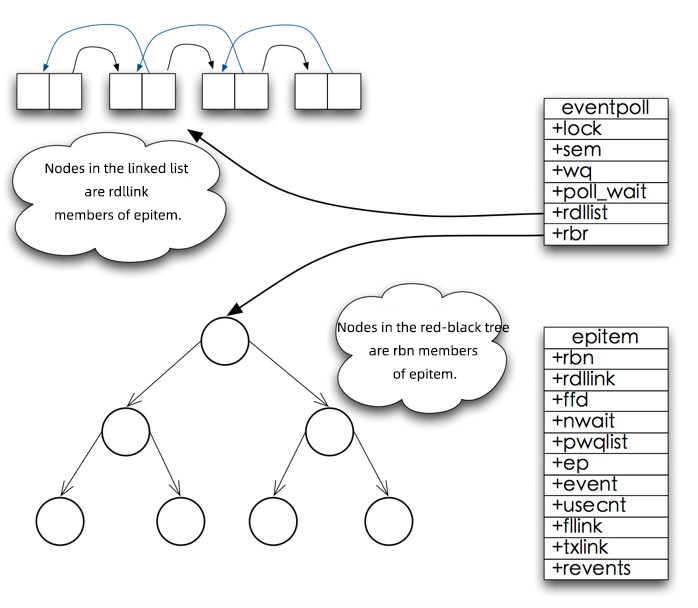

1) Main Code: linux-2.6.11.12/fs/eventpoll.c

2) int epoll_create(int size)

Create an rb-tree (red-black tree) and a ready-list (ready linked list):

3) int epoll_ctl(int epfd, int op, int fd, struct epoll_event *event)

Insert the epitem into the rb-tree and register ep_poll_callback with the kernel interrupt handler. When the callback is triggered, put the epitem in the ready-list.

4) int epoll_wait(int epfd, struct epoll_event *events, int maxevents, int timeout)

ready-list -> events[]
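To make the interplay between the rb-tree and the ready-list concrete, here is a toy, single-threaded Java model of the bookkeeping described above; it is not kernel code and never blocks. TreeMap stands in for the rb-tree (it is a red-black tree), and onEvent plays the role of ep_poll_callback. The model is level-triggered: an fd that is still ready after being reported goes back on the ready-list, so it is reported again until the application consumes it. All names are hypothetical.

```java
import java.util.ArrayDeque;
import java.util.ArrayList;
import java.util.List;
import java.util.TreeMap;

/** Toy, single-threaded model of epoll's bookkeeping (not kernel code; never blocks). */
final class ToyEpoll {
    static final class EpItem {
        final int fd;
        boolean ready;       // the fd currently has a pending event (e.g. unread data)
        boolean onReadyList; // already linked into the ready-list
        EpItem(int fd) { this.fd = fd; }
    }

    private final TreeMap<Integer, EpItem> rbTree = new TreeMap<>(); // TreeMap is a red-black tree
    private final ArrayDeque<EpItem> readyList = new ArrayDeque<>();

    /** epoll_ctl(ADD): insert an epitem into the tree (the kernel also hooks ep_poll_callback). */
    void ctlAdd(int fd) { rbTree.putIfAbsent(fd, new EpItem(fd)); }

    /** epoll_ctl(DEL): remove the epitem from the tree and from the ready-list. */
    void ctlDel(int fd) {
        EpItem item = rbTree.remove(fd);
        if (item != null) readyList.remove(item);
    }

    /** Plays the role of ep_poll_callback: the fd became ready, so link its epitem. */
    void onEvent(int fd) {
        EpItem item = rbTree.get(fd);
        if (item == null) return;
        item.ready = true;
        if (!item.onReadyList) {
            item.onReadyList = true;
            readyList.add(item);
        }
    }

    /** The application drained the fd, so it is no longer ready. */
    void consumed(int fd) {
        EpItem item = rbTree.get(fd);
        if (item != null) item.ready = false;
    }

    /** Non-blocking epoll_wait in level-triggered mode: ready-list -> txlist -> events[]. */
    List<Integer> waitLevelTriggered(int maxEvents) {
        List<EpItem> txList = new ArrayList<>(readyList); // move everything to the txlist
        readyList.clear();
        List<Integer> events = new ArrayList<>();
        for (EpItem item : txList) {
            item.onReadyList = false;
            if (!item.ready) continue;                   // re-polling finds no event: drop it
            if (events.size() < maxEvents) events.add(item.fd);
            item.onReadyList = true;                     // LT: still ready, so put it back
            readyList.add(item);
        }
        return events;
    }
}
```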

Data Structure of Epoll

epoll_wait Workflow Overview

Code for reference: linux-2.6.11.12/fs/eventpoll.c:

1) epoll_wait calls ep_poll

When rdlist (the ready-list) is empty (no fd is ready), the current thread is suspended; it is woken up only when rdlist becomes non-empty.

2) The event status of file descriptor fd changes.

This triggers ep_poll_callback on the corresponding fd.

3) ep_poll_callback is triggered.

The epitem of the corresponding fd is added to the rdlist. The rdlist is now non-empty, so the thread is woken up and epoll_wait can continue.

4) Run the ep_events_transfer function

Move each epitem in rdlist to txlist and clear rdlist.

… the epitem is returned to rdlist.

5) Run the ep_send_events function

Scan each epitem in txlist and call the poll method of its associated fd to obtain the latest events.

1) The necessity of the business thread pool

2) WriteBufferWaterMark
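The high/low watermark mechanism is a form of hysteresis: a channel becomes unwritable once its pending outbound bytes exceed the high watermark, and becomes writable again only after they drop below the low watermark. The plain-Java model below (WritabilityTracker is a hypothetical name, not a Netty class) shows that behavior. The byte counts fed into it are only as accurate as the message size estimates, which is why the MessageSizeEstimator matters.

```java
final class WritabilityTracker {
    private final long lowWaterMark;
    private final long highWaterMark;
    private long pendingBytes;
    private boolean writable = true;

    WritabilityTracker(long lowWaterMark, long highWaterMark) {
        if (lowWaterMark < 0 || highWaterMark < lowWaterMark) {
            throw new IllegalArgumentException("need 0 <= low <= high");
        }
        this.lowWaterMark = lowWaterMark;
        this.highWaterMark = highWaterMark;
    }

    /** A message of (estimated) size bytes was queued for writing. */
    void onEnqueued(long bytes) {
        pendingBytes += bytes;
        if (writable && pendingBytes > highWaterMark) {
            writable = false; // producers should stop writing until the buffer drains
        }
    }

    /** The given number of bytes was flushed to the socket. */
    void onFlushed(long bytes) {
        pendingBytes -= bytes;
        if (!writable && pendingBytes < lowWaterMark) {
            writable = true; // drained below the low mark: resume writing
        }
    }

    boolean isWritable() {
        return writable;
    }
}
```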

3) Customize the MessageSizeEstimator so that the high and low watermarks reflect the real size

When an object is written, its size is estimated as it passes through the outboundHandler. At that point the object has not yet been encoded into a ByteBuf, so the size estimate is inaccurate (too small).

4) Pay attention to the EventLoop#ioRatio setting, which is 50 by default.

This is the ratio of time the EventLoop spends executing I/O tasks versus non-I/O tasks.

5) Who schedules idle link detection?

By default, the delayQueue of the EventLoop (a priority queue implemented as a binary heap, with O(log N) complexity) is used. Each worker handles the detection for its own links, which helps reduce context switching, but network I/O and idle link detection affect each other.

Consider a custom IdleStateHandler based on HashedWheelTimer when the number of connections is large. Its complexity is O(1), and it keeps network I/O and idle link detection independent of each other, but it incurs context-switching overhead.

6) ctx.writeAndFlush or channel.writeAndFlush?

ctx.write goes directly to the next outbound handler. Be careful not to let it bypass idle link detection, which is not what you want.

channel.write starts from the tail and moves toward the head, passing through every outbound handler in the pipeline one by one.

7) Use ByteBuf.forEachByte() instead of looping over ByteBuf.readByte(), which avoids a rangeCheck() on every call

8) Use CompositeByteBuf to avoid unnecessary memory copying

9) To read an int, use ByteBuf.readInt() instead of ByteBuf.readBytes(buf, 0, 4).

10) Configure UnpooledUnsafeNoCleanerDirectByteBuf to replace the JDK's DirectByteBuffer so that the Netty framework releases off-heap memory based on the reference count.

io.netty.maxDirectMemory:

< 0: No cleaner is used, and Netty directly inherits the maximum direct memory size configured for the JDK. The JDK's own direct memory limit is independent, so the total direct memory can reach twice the JDK configuration.

== 0: A cleaner is used, and Netty does not set a maximum direct memory size.

> 0: No cleaner is used, and this value limits Netty's maximum direct memory size. (The JDK's direct memory limit is independent and is not limited by this parameter.)
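The reference-counting approach mentioned in item 10 can be sketched as follows: every holder calls retain(), every holder calls release() when done, and the memory is freed deterministically by whoever drops the count to zero, instead of waiting for the GC to process a Cleaner. In this toy RefCountedResource (a hypothetical name), the deallocator is just a callback; in a no-cleaner buffer, that is where the native memory would actually be freed.

```java
import java.util.concurrent.atomic.AtomicInteger;

final class RefCountedResource {
    private final AtomicInteger refCnt = new AtomicInteger(1); // the creator holds one reference
    private final Runnable deallocator;

    RefCountedResource(Runnable deallocator) {
        this.deallocator = deallocator;
    }

    /** Adds a reference; fails if the resource has already been freed. */
    RefCountedResource retain() {
        for (;;) {
            int cnt = refCnt.get();
            if (cnt <= 0) throw new IllegalStateException("already released");
            if (refCnt.compareAndSet(cnt, cnt + 1)) return this;
        }
    }

    /** Drops a reference; the caller that drops it to zero frees the memory immediately. */
    boolean release() {
        for (;;) {
            int cnt = refCnt.get();
            if (cnt <= 0) throw new IllegalStateException("already released");
            if (refCnt.compareAndSet(cnt, cnt - 1)) {
                if (cnt == 1) {
                    deallocator.run(); // hypothetical hook: native memory would be freed here
                    return true;
                }
                return false;
            }
        }
    }

    int refCnt() {
        return refCnt.get();
    }
}
```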

11) Optimal Number of Connections

12) When using PooledByteBuf, make good use of the -Dio.netty.leakDetection.level parameter.

Use the grep command to check the logs from time to time. Once "LEAK:" appears, change the level to ADVANCED immediately and run again; this way, you can see where the leaked object was accessed.

13) Channel.attr(): attach your own objects to the channel

1) Use AtomicIntegerFieldUpdater instead of AtomicInteger in scenarios with a large number of objects

An AtomicInteger object is 16 bytes in size, and an AtomicLong is 24 bytes.

An AtomicIntegerFieldUpdater is held in a static field and operates on a volatile int field of each object.

2) Netty's FastThreadLocal implementation is faster than the JDK's ThreadLocal.

3) IntObjectHashMap / LongObjectHashMap
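IntObjectHashMap-style maps store primitive keys in arrays, which avoids boxing every key into an Integer and allocating an entry object per mapping. The simplified open-addressing map below (linear probing, no removal; IntObjectMap is a hypothetical class, not Netty's implementation) illustrates the idea.

```java
final class IntObjectMap<V> {
    private int[] keys;
    private Object[] values;
    private boolean[] used;
    private int size;

    IntObjectMap(int initialCapacity) {
        if (initialCapacity <= 0 || (initialCapacity & (initialCapacity - 1)) != 0) {
            throw new IllegalArgumentException("capacity must be a power of two");
        }
        keys = new int[initialCapacity];
        values = new Object[initialCapacity];
        used = new boolean[initialCapacity];
    }

    private static int slot(int key, int mask) {
        int h = key * 0x9E3779B9; // spread nearby keys across the table
        return (h ^ (h >>> 16)) & mask;
    }

    /** Stores value under the primitive key (no Integer boxing); returns the old value. */
    @SuppressWarnings("unchecked")
    V put(int key, V value) {
        if ((size + 1) * 2 > keys.length) {
            resize(); // keep the load factor <= 0.5 so probing always finds a free slot
        }
        int mask = keys.length - 1;
        for (int i = slot(key, mask); ; i = (i + 1) & mask) {
            if (!used[i]) {
                used[i] = true;
                keys[i] = key;
                values[i] = value;
                size++;
                return null;
            }
            if (keys[i] == key) {
                V old = (V) values[i];
                values[i] = value;
                return old;
            }
        }
    }

    @SuppressWarnings("unchecked")
    V get(int key) {
        int mask = keys.length - 1;
        for (int i = slot(key, mask); used[i]; i = (i + 1) & mask) {
            if (keys[i] == key) return (V) values[i];
        }
        return null;
    }

    int size() {
        return size;
    }

    @SuppressWarnings("unchecked")
    private void resize() {
        int[] oldKeys = keys;
        Object[] oldValues = values;
        boolean[] oldUsed = used;
        int newCapacity = oldKeys.length * 2;
        keys = new int[newCapacity];
        values = new Object[newCapacity];
        used = new boolean[newCapacity];
        size = 0;
        for (int i = 0; i < oldKeys.length; i++) {
            if (oldUsed[i]) put(oldKeys[i], (V) oldValues[i]);
        }
    }
}
```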

4) RecyclableArrayList

… ArrayList.

5) JCTools

… NonBlockingHashMap (comparable to ConcurrentHashMapV6/V8), which is not available in the JDK.

We are the Ant Intelligent Monitoring Technology Middle Platform Storage Team. We are using Rust, Go, and Java to build a new-generation low-cost time-series database with high performance and real-time analysis capability. You are welcome to transfer positions or recommend other applicants to our team. Please contact Feng Jiachun via email (jiachun.fjc@antgroup.com) for more information.

