
By Meng Qingyi.

In 2011, two senior technical experts, Bi Xuan and Zhu Zhuang, introduced HBase into Alibaba Group's technical system. In 2014, Tianwu took over the leadership and became the first HBase committer based in China. Over the years, many of Alibaba's business units adopted HBase, including Taobao, TradeManager, Cainiao, Alipay, AMAP, Alibaba Digital Media and Entertainment Group, and Alimama. Alibaba Cloud's implementation of HBase is known internally as Ali-HBase. It supports core Alibaba businesses such as the Double 11 dashboards, Alipay bills, Alipay risk control, and logistics details. During the 2018 Double 11 shopping festival, Ali-HBase handled requests for 2.4 trillion rows in a single day, and the throughput of a single cluster reached tens of millions. Thanks to the trust of our customers, Ali-HBase overcame many early challenges and grew from a humble prototype into a robust product. In 2017, Ali-HBase became a commercial Alibaba Cloud product, ApsaraDB for HBase, which is currently available in China. Alibaba Cloud plans to gradually deliver more of Ali-HBase's internal high availability (HA) capabilities to external customers. The same-city (zone-level) disaster recovery feature has already been developed and will serve as the foundation for subsequent HA development.

This article outlines the high availability (HA) development of Ali-HBase in four parts: large clusters, mean time to failure (MTTF) and mean time to repair (MTTR), disaster recovery, and the ultimate user experience.

In the early stages, a one-cluster-per-business model is simple and easy to manage. As the business grows and O&M becomes increasingly difficult, however, this model can no longer manage resources effectively. First, every cluster needs three dedicated machines for the ZooKeeper, Master, and NameNode roles. Second, some businesses are compute-intensive while others are storage-intensive. Keeping them in separate clusters makes it impossible to shift load between them. For these reasons, Ali-HBase has used the large cluster model since 2013, with a single cluster containing more than 700 nodes.

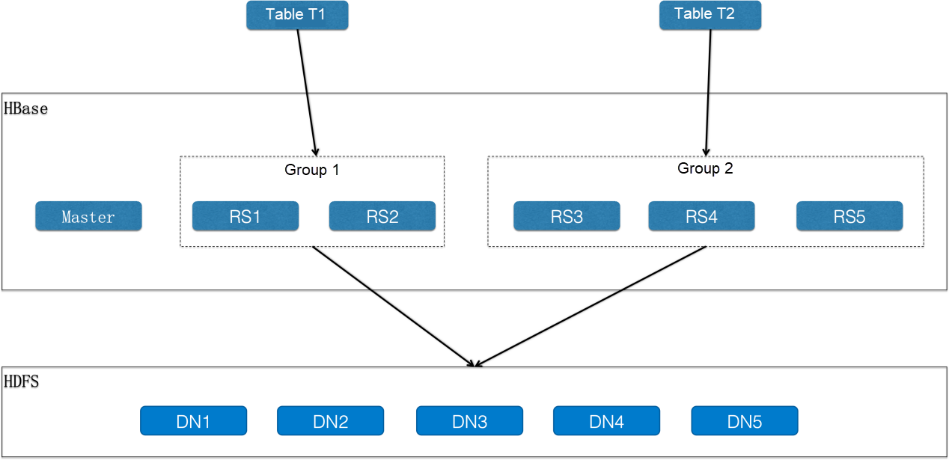

Isolation is a critical challenge for large clusters. For users to accept the large cluster model, Ali-HBase must ensure that traffic bursts from business A do not affect business B. To that end, Ali-HBase introduced the concept of a group, designed around shared storage and isolated computing.

As shown in the preceding figure, a cluster is divided into multiple groups, and each group contains at least one server. A server belongs to only one group at any given time but can move between groups, so groups can be scaled out or in. Likewise, a table is deployed in only one group at a time but can move between groups. As the figure shows, the RegionServers that read and write T1 are completely separate from those that read and write T2, so their CPU and memory resources are physically isolated. However, all of these RegionServers share the same underlying Hadoop Distributed File System (HDFS). This lets multiple businesses share one large storage pool and maximizes storage utilization. Relatedly, the open-source community introduced RegionServerGroup in HBase 2.0.
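The group rules above can be modeled as a pair of mappings: each server belongs to one group, each table belongs to one group, and both can move. The Python sketch below is a toy model built on that assumption. The class and method names are hypothetical and are not the Ali-HBase or community RSGroup API.

```python
from dataclasses import dataclass, field


@dataclass
class GroupedCluster:
    """Toy model of the group idea: compute is isolated per group, while all
    groups share one storage layer (HDFS in Ali-HBase). Hypothetical names."""

    server_group: dict = field(default_factory=dict)  # RegionServer -> group
    table_group: dict = field(default_factory=dict)   # table -> group

    def move_server(self, server: str, group: str) -> None:
        # A dict key maps to exactly one value, so a server is in one group
        # at a time; reassigning it is how a group scales out or in.
        self.server_group[server] = group

    def move_table(self, table: str, group: str) -> None:
        # Likewise, a table is deployed in exactly one group at a time.
        self.table_group[table] = group

    def servers_for(self, table: str) -> set:
        # Only RegionServers in the table's group may serve its regions,
        # so one group's CPU and memory never serve another group's tables.
        group = self.table_group[table]
        return {s for s, g in self.server_group.items() if g == group}


if __name__ == "__main__":
    c = GroupedCluster()
    for rs in ("rs1", "rs2"):
        c.move_server(rs, "A")
    c.move_server("rs3", "B")
    c.move_table("T1", "A")
    c.move_table("T2", "B")
    print(c.servers_for("T1"), c.servers_for("T2"))
```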

Next, consider the impact of failed disks on shared storage. In HDFS, three nodes are selected, largely at random, to form a pipeline for each block write. A machine with a failed disk can therefore appear in many pipelines at once, so a single point of failure (SPOF) causes jitter across the whole cluster. In practice, one failed disk can mean dozens of customers texting or calling you at the same time. Writes may even be blocked if slow or failed disks are not handled quickly. Ali-HBase currently runs on more than 10,000 machines and sees an average of 22 disk failures every week.

To solve this problem, we did two things. First, we shortened the impact time by monitoring and alerting on slow and failed disks, and we built an automatic processing platform for them. Second, we added a software safeguard against single-disk failures. When data is written to HDFS, the three replicas are written concurrently. The write counts as successful once two replicas succeed, even if the third is slow or fails, and the third replica is discarded if it times out. In addition, if the system detects a write-ahead log (WAL) error (fewer than three replicas written), it automatically rolls to a new log file. This starts a new round of pipeline selection that avoids the failed disk. Finally, newer versions of HDFS can also identify and automatically remove failed disks.
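The replica-write safeguard can be modeled as a concurrent quorum write. In this minimal Python sketch, each replica is represented by a plain write function. `quorum_write`, the 2-of-3 rule exposed as a parameter, and the timeout value are illustrative assumptions, not HDFS client code.

```python
import concurrent.futures as cf
import time


def quorum_write(replicas, data, required=2, timeout=1.0):
    """Send `data` to every replica at once instead of through a chain.

    `replicas` maps a replica name to a write function. The call waits at most
    `timeout` seconds; replicas that fail or are still running by then are
    dropped. Returns (acked_names, needs_new_log). Raises IOError when fewer
    than `required` replicas acknowledged.
    """
    pool = cf.ThreadPoolExecutor(max_workers=len(replicas))
    futures = {pool.submit(fn, data): name for name, fn in replicas.items()}
    acked = []
    try:
        for fut in cf.as_completed(futures, timeout=timeout):
            if fut.exception() is None:
                acked.append(futures[fut])
    except cf.TimeoutError:
        pass  # the remaining replica(s) are too slow; discard them
    finally:
        pool.shutdown(wait=False)  # do not block on the slow replica
    if len(acked) < required:
        raise IOError(f"only {len(acked)} of {len(replicas)} replicas acknowledged")
    # Fewer acks than replicas means a degraded pipeline: signal the caller to
    # roll to a new log file so the next write picks a fresh set of disks.
    return acked, len(acked) < len(replicas)


if __name__ == "__main__":
    def healthy(d):
        return True

    def slow_disk(d):
        time.sleep(0.5)  # simulates a slow disk
        return True

    print(quorum_write({"dn1": healthy, "dn2": healthy, "dn3": slow_disk}, b"edit", timeout=0.2))
```

The write waits up to the timeout for the third replica rather than returning after the second ack. This follows the text's description that the third replica is discarded only if it times out.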

Now consider the impact of client connections on ZooKeeper. When a client accesses HBase, it establishes a persistent connection to ZooKeeper, and HBase RegionServers also keep persistent connections to ZooKeeper. A large cluster means a large number of services and client connections. Failures usually occur when so many client connections pile up that the heartbeats between RegionServers and ZooKeeper are disrupted, which can take machines down. Our workaround was first to limit the number of connections from a single IP address, and then to provide HBASE-20159, which lets clients and servers use different ZooKeeper quorums. With this in place, RegionServers do not abort due to lost ZooKeeper sessions when client connections exhaust the client-facing ZooKeeper quorum.
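The first workaround, a per-IP connection cap, might look like the following minimal Python sketch. The class is hypothetical and only models the bookkeeping. On a real ensemble, ZooKeeper's `maxClientCnxns` server setting applies a similar per-IP limit.

```python
import threading
from collections import defaultdict


class PerIpConnectionLimiter:
    """Caps how many concurrent connections one client IP may hold.
    Hypothetical sketch, not ZooKeeper or HBase code."""

    def __init__(self, max_per_ip: int):
        self.max_per_ip = max_per_ip
        self._open = defaultdict(int)  # ip -> currently open connections
        self._lock = threading.Lock()

    def try_acquire(self, ip: str) -> bool:
        with self._lock:
            if self._open[ip] >= self.max_per_ip:
                return False  # reject: this IP already uses its whole quota
            self._open[ip] += 1
            return True

    def release(self, ip: str) -> None:
        with self._lock:
            if self._open[ip] > 0:
                self._open[ip] -= 1
```

A misbehaving client host can exhaust only its own quota. Server-side sessions, including RegionServer heartbeats, keep their headroom.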

In many ways, stability is the lifeblood of business and of cloud computing. As Alibaba Group's business units have grown, HBase has supported more and more online scenarios, and the stability they demand rises every year. Mean time to failure (MTTF) and mean time to repair (MTTR) are common metrics for measuring system reliability and stability.

To understand these metrics, let's first look at some of the main causes of system failure:

Next, here are several typical stability issues that Alibaba Cloud ApsaraDB for HBase has encountered:

While supporting Cainiao's logistics details service, we found that a machine aborted about every two months. Memory fragmentation caused promotion failures, which in turn triggered full garbage collection (FGC). Because we use a lot of memory, the FGC pauses lasted longer than the ZooKeeper session timeout. The ZooKeeper session then expired and the HBase process killed itself. We traced the problem to the BlockCache. Because of compression and encoding, blocks in memory have different sizes. As the cache swaps blocks in and out, it gradually cuts memory into very small fragments. We developed BucketCache and SharedBucketCache to fix this. BucketCache effectively solves memory fragmentation. SharedBucketCache allows objects deserialized from the BlockCache to be shared and reused, which reduces the number of objects created at runtime and completely solves the FGC problem.
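A toy size-class allocator shows why fixed-size buckets avoid fragmentation. This Python sketch is a simplification written for illustration. It assumes one slot size per bucket and smallest-fitting size classes. It is not the BucketCache implementation, and the sizes in the test are arbitrary.

```python
import bisect


class SizeClassAllocator:
    """Toy allocator in the spirit of BucketCache (hypothetical, simplified).

    Memory is carved into equal buckets. Each bucket is dedicated to one slot
    size, and a block goes into the smallest slot size that fits it. A freed
    slot can be reused by any later block of the same size class, so blocks
    of varying sizes never chop memory into unusable fragments."""

    def __init__(self, total_bytes, bucket_bytes, slot_sizes):
        self.slot_sizes = sorted(slot_sizes)
        self.bucket_bytes = bucket_bytes
        self.free_buckets = total_bytes // bucket_bytes  # not yet given a size
        self.free_slots = {size: [] for size in self.slot_sizes}
        self._next_id = 0

    def size_class(self, n):
        i = bisect.bisect_left(self.slot_sizes, n)  # smallest slot >= n
        if i == len(self.slot_sizes):
            raise ValueError(f"{n}-byte block is larger than the largest slot")
        return self.slot_sizes[i]

    def allocate(self, n):
        """Return a (slot_size, slot_id) handle, or None if the cache is full."""
        size = self.size_class(n)
        slots = self.free_slots[size]
        if not slots:
            if self.free_buckets == 0:
                return None  # caller must evict a block of this size class
            # Dedicate a fresh bucket to this size class and split it into slots.
            self.free_buckets -= 1
            count = self.bucket_bytes // size
            slots.extend((size, self._next_id + k) for k in range(count))
            self._next_id += count
        return slots.pop()

    def free(self, handle):
        size, _ = handle
        self.free_slots[size].append(handle)
```

The design trades some internal waste, such as a 5,000-byte block occupying an 8 KB slot, for the guarantee that a freed slot is always reusable as-is.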

HBase relies on two external components: ZooKeeper and HDFS. ZooKeeper is highly available by design, and HDFS also supports an HA deployment mode. However, code written on the assumption that a component is reliable can hide potential failures. When such a "reliable" component does fail, HBase handles the exception by aborting its own process and trying to recover through failover. Sometimes HDFS is only temporarily unavailable. For example, it may enter safe mode because some blocks have not been reported, or there may be brief network jitter. If HBase then restarts on a large scale, a 10-minute disruption can stretch into hours. We solved this by optimizing exception handling. Problems that can be prevented are handled directly, and exceptions that cannot be prevented are handled with a "retry and wait" strategy.
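A "retry and wait" strategy can be expressed as bounded retries with exponential backoff around an operation that may hit a transient failure. In this minimal Python sketch, `TransientError` and all delay values are hypothetical. Deciding which real exceptions count as transient is the hard part, and the sketch leaves it to the caller.

```python
import time


class TransientError(Exception):
    """Hypothetical marker for failures worth waiting out, such as brief
    network jitter or HDFS being temporarily unavailable."""


def retry_and_wait(op, attempts=5, base_delay=0.1, max_delay=2.0, sleep=time.sleep):
    """Call op(). On a TransientError, wait with exponential backoff and retry
    instead of aborting the process. Any other exception propagates at once.
    If every attempt fails, the last TransientError is re-raised so the
    caller can fall back to failover."""
    delay = base_delay
    for attempt in range(1, attempts + 1):
        try:
            return op()
        except TransientError:
            if attempt == attempts:
                raise
            sleep(delay)
            delay = min(delay * 2, max_delay)
```

Capping the number of attempts keeps a genuinely dead dependency from stalling the process forever. Failover still happens, just not on the first hiccup.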

Large queries in HBase usually refer to scans with filters that read and filter a large number of data blocks on a RegionServer. If most of the data is not in the cache, such queries easily overload I/O. If most of it is in the cache, operations such as decompression and serialization easily overload the CPU. When dozens of these queries run concurrently on a server, its load rises rapidly and the system responds slowly or appears to hang. To solve this, we built monitoring and throttling for large requests. When a request's resource consumption exceeds a threshold, the request is marked as large and logged. Each server allows only a limited number of concurrent large requests, and large requests beyond that limit are throttled. If a request keeps running on the server after the client has already given up on it because of a timeout, the server actively terminates it. This feature solved the performance volatility that hotspot queries caused in Alipay's billing system.
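The three large-request rules can be sketched as a small governor: mark and log a request above a cost threshold, cap concurrent large requests, and stop requests whose client has timed out. This Python code is a hypothetical illustration. The cost unit, the choice to reject rather than queue throttled requests, and all names are assumptions.

```python
import logging
import threading
import time

log = logging.getLogger("large_request")


class LargeRequestGovernor:
    """Hypothetical sketch: a request becomes "large" once its accumulated
    cost crosses `cost_threshold`. At most `max_large` large requests run at
    once (extra ones are rejected here; queueing would be another option).
    A large request is stopped once the client's timeout has passed."""

    def __init__(self, cost_threshold, max_large, clock=time.monotonic):
        self.cost_threshold = cost_threshold
        self.clock = clock
        self.slots = threading.BoundedSemaphore(max_large)

    def start(self, client_timeout):
        return RequestTracker(self, client_timeout)


class RequestTracker:
    def __init__(self, gov, client_timeout):
        self.gov = gov
        self.deadline = gov.clock() + client_timeout
        self.cost = 0
        self.large = False

    def charge(self, cost):
        """Record work done (e.g. blocks read). False means: stop this request."""
        self.cost += cost
        if not self.large and self.cost > self.gov.cost_threshold:
            if not self.gov.slots.acquire(blocking=False):
                return False  # throttled: too many large requests running
            self.large = True
            log.warning("large request detected, cost=%s", self.cost)
        if self.large and self.gov.clock() > self.deadline:
            return False  # the client has already timed out; stop wasting work
        return True

    def finish(self):
        """Call when the request ends, whether it completed or was stopped."""
        if self.large:
            self.gov.slots.release()
            self.large = False
```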

In online scenarios, we occasionally see a single partition grow to anywhere from dozens of GBs to several TBs. This usually happens when the partitioning is inappropriate and a large amount of data is written in a short period. Such a partition not only holds a lot of data but often has a large number of files, so any read against it is a large request. If it is not promptly split into smaller partitions, the impact is serious, and splitting is very slow. HBase can only split one partition into two, and it can split again only after a round of compaction. For example, a 1 TB partition must go through 7 rounds of splitting before it is divided into 128 partitions smaller than 10 GB. Each round is accompanied by a compaction that reads 1 TB and writes 1 TB. Because each partition can be compacted by only one machine, only 2 machines work in the first round, 4 in the second, 8 in the third, and so on. In practice, manual intervention is needed to balance the load. Even in an ideal environment, with no new data being written and a normally loaded system, the whole process can take more than 10 hours. To address this, we designed "cascading splitting", which moves on to the next round of splitting without waiting for a compaction. The partition is first split quickly into its final pieces, and compaction is then performed once.
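A rough cost model reproduces the split arithmetic above. This Python sketch assumes that each compaction rewrites the whole region once, that no new data arrives, and that cascading splitting compacts exactly once at the end, with all final regions compacting in parallel. The function names are hypothetical.

```python
def split_rounds(region_gb, target_gb):
    """Rounds of one-into-two splitting until every daughter is below target_gb."""
    rounds, size = 0, region_gb
    while size >= target_gb:
        size /= 2
        rounds += 1
    return rounds


def split_plan(region_gb, target_gb, cascading):
    """Rough, hypothetical cost model of classic vs. cascading splitting."""
    rounds = split_rounds(region_gb, target_gb)
    compactions = 1 if cascading else rounds  # cascading: compact once at the end
    return {
        "rounds": rounds,
        "regions": 2 ** rounds,
        "compaction_read_gb": compactions * region_gb,
        "compaction_write_gb": compactions * region_gb,
        # Classic: only 2, 4, 8, ... machines can compact in round k.
        # Cascading: one compaction pass over all final regions at once.
        "compaction_parallelism": (
            [2 ** rounds] if cascading else [2 ** k for k in range(1, rounds + 1)]
        ),
    }
```

For a 1,024 GB region and a 10 GB target, the classic plan has 7 rounds that read and write 7,168 GB in total. The cascading plan touches the data once.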

Slow and failed disks are another source of instability, but we will not repeat that discussion here because it was covered in the section on large clusters.

Above, we discussed how we solved several specific problems. However, many different situations can cause a system failure, which makes troubleshooting extremely difficult, especially when a failure involves several problems at once. By analogy, modern medicine holds that hospitals should invest more in prevention than in treatment, and that people should have regular physical examinations to detect early signs of latent disease. A mild condition can become serious if it is not treated promptly. The same idea applies to distributed systems. Because systems are complex and self-healing, minor problems such as memory leaks, compaction backlogs, and queue backlogs may have no immediate consequences, but they will eventually trigger a crash. To address this, we built the "Health Diagnosis" system, which raises alerts on metrics that have not yet caused a failure but clearly exceed their normal thresholds. Health Diagnosis has intercepted a large number of abnormal cases, and its diagnostic intelligence continues to evolve.
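A threshold rule of the kind Health Diagnosis applies might look like this minimal Python sketch. The metric names, the thresholds, and the requirement that a metric stay high for several samples are assumptions made for illustration. They are not the system's actual diagnostic rules.

```python
from dataclasses import dataclass


@dataclass
class Rule:
    metric: str        # hypothetical metric name
    warn_above: float  # upper bound of the "normal" range


def diagnose(history, rules, sustain=3):
    """Flag metrics whose last `sustain` samples all exceed their normal range,
    even though nothing has failed yet. `history` maps a metric name to its
    recent samples, oldest first. Requiring several samples filters out
    one-off spikes."""
    findings = []
    for rule in rules:
        recent = history.get(rule.metric, [])[-sustain:]
        if len(recent) == sustain and all(v > rule.warn_above for v in recent):
            findings.append(f"{rule.metric}: last {sustain} samples above {rule.warn_above}")
    return findings
```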

However robust it is, a system will fail at some point. What we need to do is tolerate failures, reduce their impact, and speed up recovery. HBase is a self-healing system. When a single node fails, a failover is triggered and other active nodes take over the partitions that node served. Before a partition can serve requests again, its logs must be replayed to keep reads and writes consistent. The complete process consists of three steps: Split Log, Assign Region, and Replay Log. HBase has no redundancy for compute nodes, so when a node goes down, all of the state in its memory must be replayed, typically 10 GB to 20 GB. Suppose the data replay rate of the entire cluster is R GB/s and M GB must be restored for each failed node. Recovering N failed nodes then takes M × N / R seconds. If R is not large enough, the more nodes fail, the less predictable the recovery time becomes, so the factors that affect R are crucial. Of the three steps, Split Log and Assign Region usually limit scalability because each depends on a single point. Split Log splits WAL files into small per-partition files and creates a large number of new files, and every file creation goes through the single NameNode, which is inefficient. Assign Region is managed by the HBase Master (HMaster), which is also a single point. The core of Ali-HBase's failover optimization is a new MTTR2 architecture. It removes the Split Log step and applies several optimizations to Assign Region, such as prioritizing the meta partition, bulk assignment, and timeout tuning. Together, these improve failover efficiency by more than 200% compared with the community failover function.
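A worked version of the recovery-time estimate uses the paragraph's own symbols: M GB per failed node, N failed nodes, and a cluster-wide replay rate of R GB/s. M is taken from the upper end of the 10 GB to 20 GB range quoted above. The values of N and R are purely hypothetical, chosen only to make the arithmetic concrete.

```latex
T_{\text{recover}} \approx \frac{M \times N}{R},
\qquad M = 20\,\text{GB},\; N = 5,\; R = 0.5\,\text{GB/s}
\;\Rightarrow\; T_{\text{recover}} \approx \frac{20 \times 5}{0.5} = 200\,\text{s}
```

If R is capped by a single point, such as one NameNode creating split files or one HMaster assigning regions, adding machines does not raise R, so recovery time grows linearly with N. Removing Split Log and speeding up Assign Region targets exactly that cap.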

From a customer's point of view, which is more serious: traffic dropping to zero for 2 minutes, or traffic dropping by 5% for 10 minutes? Most likely the former. The client has a limited thread pool, so recovering from a single HBase node failure can cause a sharp drop in business traffic as threads block on the failed machine. A failure that takes down 2% of machines and causes a 90% drop in business traffic is unacceptable. We developed a client-side Fast Fail mechanism that actively detects abnormal servers and quickly rejects requests sent to them. This frees thread resources without affecting access to other RegionServers. The project is named DeadServerDetective.
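Client-side fast fail is essentially a circuit breaker keyed by server. This Python sketch is hypothetical and is not DeadServerDetective itself. The consecutive-failure threshold, the cooldown, and the method names are assumptions chosen for illustration.

```python
import time


class DeadServerDetector:
    """Hypothetical client-side fast-fail sketch. After `failure_threshold`
    consecutive failures, a server is treated as dead for `cooldown` seconds,
    and requests to it are rejected immediately instead of tying up a
    client thread."""

    def __init__(self, failure_threshold=3, cooldown=10.0, clock=time.monotonic):
        self.failure_threshold = failure_threshold
        self.cooldown = cooldown
        self.clock = clock
        self._failures = {}    # server -> consecutive failures
        self._dead_until = {}  # server -> time when requests may flow again

    def allow(self, server):
        """False means: fail this request fast and free the thread."""
        until = self._dead_until.get(server)
        return until is None or self.clock() >= until

    def record_success(self, server):
        self._failures.pop(server, None)
        self._dead_until.pop(server, None)

    def record_failure(self, server):
        n = self._failures.get(server, 0) + 1
        self._failures[server] = n
        if n >= self.failure_threshold:
            # Once past the threshold, every failed probe re-arms the cooldown.
            self._dead_until[server] = self.clock() + self.cooldown
```

After the cooldown, one request is let through as a probe. A success clears the server's record, and a failure puts it back on the dead list.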

Disaster recovery is a survival mechanism for major incidents. These include destruction caused by natural disasters such as earthquakes and tsunamis, software changes that make recovery time completely uncontrollable, and network outages that crash services or leave recovery time unknown. In reality, most of us experience a natural disaster perhaps once in a lifetime and a network outage perhaps less than once a year, but problems caused by software changes may happen every month. Software changes carry risk: operations in the change process may go wrong, and the new release may contain unknown bugs. A change is therefore a comprehensive test of a system's O&M, kernel, and testing capabilities. In addition, continuously meeting business needs requires faster kernel iteration, which brings even more changes.

Disaster recovery is essentially isolation-based redundancy. It requires physical isolation at the resource level, version isolation at the software level, and operation isolation at the O&M level. Redundant services share as little as possible, so that at least one replica survives a disaster. A few years ago, Ali-HBase began developing zone-disaster recovery and active geo-redundancy. Today, 99% of clusters have at least one secondary cluster. The primary/secondary model gives HBase a strong guarantee for supporting online businesses. Its two core issues are data replication and traffic failover.

Data replication is complex and depends heavily on business requirements. For example, whether to replicate synchronously or asynchronously, and whether to preserve data order, both depend on what the business needs. Some businesses require strong consistency, some require session consistency, and some can accept eventual consistency. From HBase's perspective, many of our services can accept eventual consistency in disaster scenarios. We have also developed a synchronous replication mechanism, but it applies only to a few scenarios, so this article focuses on asynchronous replication. For a long time, we used the community's asynchronous replication mechanism, HBase Replication, which is built into HBase.

The first challenge is identifying the root cause of synchronization latency. A synchronization link involves three parts, the sender, the channel, and the receiver, which makes problems difficult to troubleshoot. We enhanced the monitoring and alerting related to synchronization.

The second challenge is synchronization latency caused by hotspots. HBase Replication uses a push model: it reads WAL entries and then forwards them. The sending thread runs in the same process as the HBase write engine, on the same RegionServer. A RegionServer that receives hotspot writes needs more sending capacity, yet the hotspot writes themselves consume more system resources, so writing and synchronization compete for resources. Ali-HBase made two optimizations. First, it improved synchronization performance and reduced the resources consumed per MB synchronized. Second, it developed a remote consumer, which lets other idle machines help hotspot machines synchronize logs.

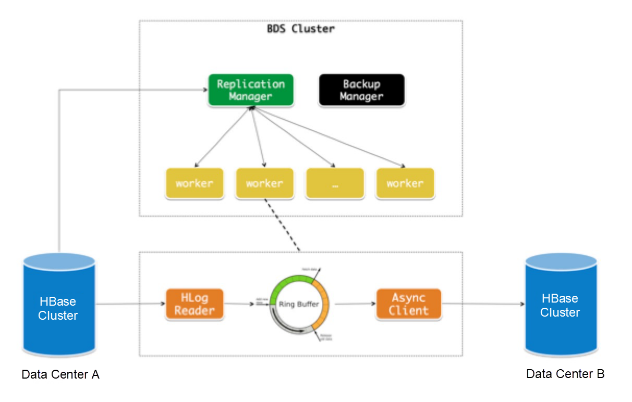

The third challenge is a mismatch in resource requirements and iteration methods. Data replication does not need disk I/O and consumes only bandwidth and CPU, whereas HBase depends heavily on disk I/O. A data replication worker is essentially stateless: restarting it is not a concern, and it can resume transfers where it left off. HBase, however, is stateful, and partitions must be moved away before a restart to avoid triggering a failover. When a lightweight synchronization component is tightly coupled to a heavyweight storage engine, every iteration or upgrade of the synchronization component requires restarting HBase. A synchronization problem that a simple restart could fix instead requires restarting HBase together with the synchronization component, which affects online reads and writes. And what could have been solved by adding CPU or bandwidth now requires scaling out the entire HBase cluster.

For these reasons, Ali-HBase eventually split the synchronization components out into an independent service, which solves both the hotspot and coupling problems. On Alibaba Cloud, this service is called BDS Replication. As active geo-redundancy developed, the data synchronization relationships between clusters became more complex. To address this, we developed monitoring for topology relationships and per-link synchronization latency, and we eliminated repeated data transmission in ring topologies.

BDS Replication

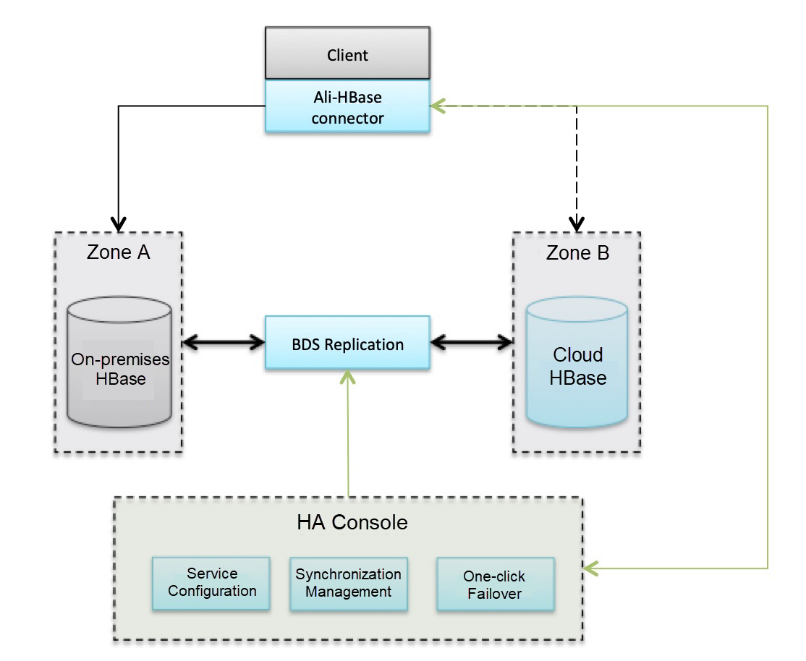
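A common way to stop an edit from circulating forever in a ring is to record which clusters have already applied it and never ship it back to them. Community HBase replication tracks cluster IDs on WAL entries for a similar purpose. The article does not say how Ali-HBase implements its optimization, so the Python model below is a hypothetical sketch of that general technique.

```python
from dataclasses import dataclass, field


@dataclass
class Edit:
    row: str
    value: str
    visited: list = field(default_factory=list)  # clusters that already applied it


class ClusterNode:
    """Hypothetical cluster in a replication topology."""

    def __init__(self, cluster_id):
        self.cluster_id = cluster_id
        self.peers = []     # clusters this one replicates to
        self.data = {}
        self.received = 0   # deliveries to this cluster, including duplicates

    def write(self, row, value):
        self.apply(Edit(row, value))

    def apply(self, edit):
        self.received += 1
        if self.cluster_id in edit.visited:
            return  # already applied here: drop it instead of looping
        self.data[edit.row] = edit.value
        stamped = Edit(edit.row, edit.value, edit.visited + [self.cluster_id])
        for peer in self.peers:
            if peer.cluster_id not in stamped.visited:  # skip clusters that have it
                peer.apply(stamped)
```

In a ring A to B to C to A, an edit written at A stops at C because A is already in its visited list. Each cluster receives the edit exactly once.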

For traffic failover, once primary and secondary clusters are in place, business traffic must be switched to the secondary cluster quickly during a disaster. Ali-HBase modified the HBase client so that traffic switching happens inside the client. The switch command reaches the client through a highly available channel. The client then closes the old link, opens a link to the secondary cluster, and retries the request.
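The client-side switch can be sketched in a few lines. In this hypothetical Python model, `connect` stands in for whatever opens a cluster connection. The switch command is delivered by a direct method call rather than a real control channel.

```python
class FailoverClient:
    """Hypothetical sketch of client-side traffic switching. `connect` is any
    callable that opens a connection to a cluster address and returns an
    object with a close() method."""

    def __init__(self, connect, primary, secondary):
        self._connect = connect
        self.addresses = {"primary": primary, "secondary": secondary}
        self.active = "primary"
        self._conn = connect(primary)

    def on_switch_command(self, target):
        """Called when a switch command arrives over the control channel."""
        if target == self.active:
            return
        self._conn.close()                                  # close the old link
        self._conn = self._connect(self.addresses[target])  # open the new one
        self.active = target

    def call(self, op):
        """Run op(connection). If the link was switched while the request was
        in flight, retry once on the new cluster."""
        conn = self._conn
        try:
            return op(conn)
        except ConnectionError:
            if self._conn is conn:
                raise  # no switch happened; report the failure
            return op(self._conn)
```

Keeping the switch inside the client means application code keeps calling the same object and never needs to know which cluster is serving it.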

Zone-disaster recovery

Now consider the impact of instantaneous failover on the meta service. Before accessing a partition for the first time, the HBase client must query the meta service for the partition's address. During an instantaneous failover, all clients query the meta service at once. In practice, there may be hundreds of thousands of concurrent requests or more, which overloads the service. When requests time out, clients retry, so the server keeps working without producing results and the failover fails. To address this, we modified the caching mechanism of the meta table, greatly improving its throughput so that it can handle millions of requests. We also isolated meta partitions from data partitions at run time so that they cannot affect each other.

Next is the evolution from one-click switchover to automatic failover. One-click switchover relies on an alert system and manual operations. In practice, responding takes a few minutes, and more than 10 minutes if the incident happens at night. Two ideas run through the evolution of Ali-HBase's automatic failover. The first is third-party arbitration, which scores the health of each system in real time. When a system's health score falls below a threshold and the secondary system is healthy, the failover command runs automatically. This arbitration system is complex. It must be deployed on an independent network, it must itself be highly reliable, and the validity of its health scores must be guaranteed. It also checks health from the server's perspective, yet from the client's perspective a server that is alive is not necessarily working well. It may be stuck in sustained FGC or suffering network jitter. The second idea is to perform automatic failover on the client, which judges availability based on the failure rate or other rules and fails over when the failure rate exceeds a threshold.
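The second idea, a client-side failure-rate rule, could be as simple as a sliding window. The window size, threshold, and minimum sample count in this Python sketch are hypothetical parameters, not values used by Ali-HBase.

```python
from collections import deque


class FailureRateMonitor:
    """Hypothetical client-side rule: request a failover when the failure rate
    over the last `window` requests exceeds `threshold`."""

    def __init__(self, window=100, threshold=0.5, min_samples=20):
        self.results = deque(maxlen=window)  # True = success, False = failure
        self.threshold = threshold
        self.min_samples = min_samples

    def record(self, ok: bool) -> None:
        self.results.append(ok)

    def failure_rate(self) -> float:
        if not self.results:
            return 0.0
        return self.results.count(False) / len(self.results)

    def should_failover(self) -> bool:
        # Require a minimum sample size so a handful of errors cannot trigger a switch.
        return len(self.results) >= self.min_samples and self.failure_rate() > self.threshold
```

Because the rule runs where requests actually fail, it also catches cases such as sustained FGC in which the server still looks alive to a health checker.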

Now let's talk about how we provide the ultimate experience. In risk control and recommendation scenarios, the lower the request response time (RT), the more rules a service can apply per unit of time, and the more accurate its analysis. The storage engine must deliver high concurrency, low latency, and few latency glitches, while staying fast and stable. In the Ali-HBase kernel, the team developed CCSMAP to optimize the write cache, SharedBucketCache to optimize the read cache, and IndexEncoding to optimize in-block search. These are combined with other techniques such as lock-free queues, coroutines, and ThreadLocal counters, and with the ZGC garbage collector provided by the Alibaba JDK team. As a result, Ali-HBase achieves online P999 latency below 15 ms on a single cluster. From another angle, risk control and recommendation scenarios do not require strong consistency. Some of their data is read-only and imported offline, so reading from multiple replicas is acceptable as long as latency is low. If glitches on the primary and secondary replicas are independent events, then in theory accessing both at the same time can lower the glitch rate by an order of magnitude. Based on this, we developed DualService on top of the existing primary/secondary architecture. It lets clients access the primary and secondary clusters concurrently. Typically, the client reads from the primary cluster first. If the primary does not respond within a certain period, the client sends a concurrent request to the secondary cluster and uses whichever response arrives first. DualService has been a great success, and services now run with nearly zero jitter.
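The DualService read path matches the general "hedged request" pattern. The client sends to the primary first and, only if the primary is slow, also sends to the secondary and takes the first good answer. This Python sketch illustrates that assumption. The function name, the `hedge_after` delay, and the thread-pool plumbing are not Ali-HBase code.

```python
import concurrent.futures as cf
import time


def hedged_read(read_primary, read_secondary, hedge_after, pool):
    """Read from the primary cluster. If it has not answered successfully
    within `hedge_after` seconds, also ask the secondary and return the first
    successful answer as (value, source)."""
    first = pool.submit(read_primary)
    try:
        return first.result(timeout=hedge_after), "primary"
    except Exception:
        pass  # primary is slow (timeout) or failed fast: hedge
    second = pool.submit(read_secondary)
    for fut in cf.as_completed([first, second]):
        if fut.exception() is None:
            return fut.result(), "primary" if fut is first else "secondary"
    raise first.exception()  # both failed: surface the primary's error


if __name__ == "__main__":
    def slow_primary():
        time.sleep(0.5)  # simulates a glitch on the primary
        return "value-from-primary"

    def secondary():
        return "value-from-secondary"

    with cf.ThreadPoolExecutor(max_workers=4) as pool:
        print(hedged_read(slow_primary, secondary, hedge_after=0.05, pool=pool))
```

Waiting `hedge_after` before contacting the secondary keeps the extra load small, because only slow or failed requests are duplicated.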

The primary/secondary model still has problems. Failover has a large impact because it happens at the cluster level. Partition-level failover is not possible because the partitions in the primary and secondary clusters do not match. And with only eventual consistency available, some services find it hard to write their business logic. With these concerns in mind, along with other considerations such as indexing capability and access models, the Ali-HBase team independently developed the Lindorm engine, which provides a built-in dual-zone deployment model. Its data replication combines push and pull, greatly improving synchronization efficiency. Partitions in the two zones are coordinated by GlobalMaster and stay consistent most of the time, which enables partition-level failover. Lindorm offers multiple consistency levels, such as strong consistency, session consistency, and eventual consistency, to simplify business logic. Most of Alibaba's internal services have now moved to the Lindorm engine.

With zero jitter as the ultimate goal, we must recognize that glitches can have almost any cause, and a problem must be correctly identified before it can be solved. Explaining the cause of every glitch is therefore both what users need and a demonstration of our capabilities. Ali-HBase developed distributed tracing that follows requests across clients, networks, and servers. It provides extensive, detailed profiling of request paths, resource access, and time consumption, which helps developers locate problems quickly.

In this article, we have introduced some of the ways Ali-HBase ensures high availability in a variety of scenarios. In conclusion, I would like to share some thoughts on building high availability capabilities, in the hope of inspiring more discussion in this area.
