By Alibaba Cloud Table Store Development Team

In the previous article, we discussed cluster composition, node discovery, Master election, error detection, cluster scaling, and so on. Building on that, this article analyzes the consistency issues of meta updates in ES. To aid understanding, it also describes how the Master manages the cluster, what the meta consists of, and how it is stored. The article covers, in order: how the Master manages the cluster, Meta composition, storage, and recovery, the ClusterState update process, the consistency issues in that process, and ways to resolve them.

In the previous article, we introduced the ES cluster composition, node discovery, and Master election. So, how does the Master manage the cluster after it is successfully elected? Two questions need to be addressed: how the Master informs the other nodes of what to do, and how Meta changes stay consistent if the Master fails partway through a change.

To manage the cluster, the Master node needs some way of informing other nodes to execute the actions that accomplish a task. For example, when a new Index is created, its Shards must be assigned to some nodes. On those nodes, the directory corresponding to each Shard must be created, and the data structures corresponding to the Shard must be created in memory.

In ES, the Master node informs other nodes by publishing ClusterState. The Master node publishes the new ClusterState to all the other nodes. When these nodes receive the new ClusterState, they pass it to the relevant modules. Each module then determines the actions to take based on the new ClusterState, for example, creating a Shard. This is an example of coordinating the operations of every module through meta data.
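The publish-and-apply pattern described above can be sketched as follows. This is a minimal Python model, not Elasticsearch code: `ClusterState`, `ShardModule`, and `NodeApplier` here are hypothetical, heavily simplified stand-ins, and the state is reduced to a version number plus a shard-to-node assignment.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List


@dataclass(frozen=True)
class ClusterState:
    """Hypothetical, simplified cluster state (assumption, not the ES class)."""
    version: int
    # shard name -> id of the node the shard is assigned to
    shard_assignment: Dict[str, str] = field(default_factory=dict)


class ShardModule:
    """Hypothetical module that creates the shards assigned to its own node."""

    def __init__(self, node_id: str) -> None:
        self.node_id = node_id
        self.local_shards: List[str] = []

    def on_new_state(self, old: ClusterState, new: ClusterState) -> None:
        for shard, node in new.shard_assignment.items():
            if node == self.node_id and shard not in self.local_shards:
                # Stands in for creating the shard directory and in-memory structures.
                self.local_shards.append(shard)


class NodeApplier:
    """Receives a published state and hands it to every registered module."""

    def __init__(self) -> None:
        self.current = ClusterState(version=0)
        self.listeners: List[Callable[[ClusterState, ClusterState], None]] = []

    def apply(self, new_state: ClusterState) -> None:
        if new_state.version <= self.current.version:
            return  # ignore stale or duplicate states
        old, self.current = self.current, new_state
        for listener in self.listeners:
            listener(old, new_state)


node = NodeApplier()
shards = ShardModule(node_id="node-1")
node.listeners.append(shards.on_new_state)
node.apply(ClusterState(version=1, shard_assignment={"idx[0]": "node-1", "idx[1]": "node-2"}))
```

After the call to `apply`, `shards.local_shards` contains only the shard assigned to `node-1`. The module decides what to do purely from the state it is handed, which is the point of coordinating through meta data.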

When the Master makes Meta changes and informs all other nodes, the consistency of those changes must be considered. If the Master crashes during the process, only some of the nodes may have performed operations in accordance with the new Meta. When a new Master is elected, it must ensure that all nodes operate in accordance with the new Meta and that the Meta is not rolled back. Since some nodes may have already performed operations in accordance with the new Meta, a rollback would cause inconsistency.

In ES, as long as the new Meta is committed on a node, the corresponding operations are performed. So, we must make sure that once a new Meta is committed on a node, no matter which node is the master, it must generate a newer meta based on this commit; otherwise, inconsistencies may occur. This article analyzes this problem and the strategy ES adopted to handle it.

Before introducing the Meta update process, we will introduce Meta composition, storage, and recovery. If you are familiar with this topic, you can skip to the next one directly.

Meta is the data used to describe data. In ES, the Index mapping structure, configuration, and persistence are meta data, and some configuration information of the cluster belongs to meta as well. Such meta data is very important. If the meta data that records an index is missing, the cluster thinks the index no longer exists. In ES, meta data can only be updated by the master, so the master is essentially the brain of the cluster.

ClusterState

Each node in the cluster maintains a current ClusterState in memory, which indicates various states within the current cluster. ClusterState contains a MetaData structure. The contents stored in MetaData are more in line with the meta characteristics, and the information to be persisted is in MetaData. In addition, some variables can be considered temporary states that are dynamically generated while the cluster is operating.

ClusterState contains the following information:

long version: current version number, which increments by 1 for every update

String stateUUID: the unique id corresponding to the state

RoutingTable routingTable: routing table for all indexes

DiscoveryNodes nodes: current cluster nodes

MetaData metaData: meta data of the cluster

ClusterBlocks blocks: used to block some operations

ImmutableOpenMap<String, Custom> customs: custom configuration

ClusterName clusterName: cluster name

MetaData

As mentioned above, MetaData is more in line with meta characteristics and must be persisted, so, next, we take a look at what mostly comprises MetaData.

The following must be persisted in MetaData:

String clusterUUID: the unique id of the cluster.

long version: current version number, which increments by 1 for every update

Settings persistentSettings: persistent cluster settings

ImmutableOpenMap<String, IndexMetaData> indices: Meta of all Indexes

ImmutableOpenMap<String, IndexTemplateMetaData> templates: Meta of all templates

ImmutableOpenMap<String, Custom> customs: custom configuration

As you see, MetaData mostly includes cluster configurations, Meta of all Indexes in the cluster, and Meta of all Templates. Next, we analyze the IndexMetaData, which will also be discussed later. Although IndexMetaData is also a part of MetaData, they are stored separately.
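To make the nesting concrete, here is a small Python sketch of how ClusterState contains MetaData, which in turn contains the per-Index meta. It is an illustration only: the classes mirror a subset of the fields listed above with Python-style names, and `with_new_index` is a hypothetical helper showing how every update yields a new immutable state with incremented version numbers.

```python
import uuid
from dataclasses import dataclass, field, replace
from typing import Dict


@dataclass(frozen=True)
class IndexMetaData:
    version: int = 1
    settings: Dict[str, str] = field(default_factory=dict)


@dataclass(frozen=True)
class MetaData:
    cluster_uuid: str
    version: int = 0
    persistent_settings: Dict[str, str] = field(default_factory=dict)
    indices: Dict[str, IndexMetaData] = field(default_factory=dict)


@dataclass(frozen=True)
class ClusterState:
    cluster_name: str
    meta_data: MetaData
    version: int = 0
    state_uuid: str = field(default_factory=lambda: uuid.uuid4().hex)


def with_new_index(state: ClusterState, name: str, index: IndexMetaData) -> ClusterState:
    """Hypothetical helper: return a new state and leave the old one untouched."""
    indices = dict(state.meta_data.indices)
    indices[name] = index
    meta = replace(state.meta_data, indices=indices, version=state.meta_data.version + 1)
    # Both the meta version and the state version increment by 1 per update.
    return replace(state, meta_data=meta, version=state.version + 1,
                   state_uuid=uuid.uuid4().hex)


s0 = ClusterState(cluster_name="demo", meta_data=MetaData(cluster_uuid="demo-uuid"))
s1 = with_new_index(s0, "logs", IndexMetaData(settings={"number_of_shards": "1"}))
```

Here `s1` carries version 1 and a fresh `state_uuid`, while `s0` is unchanged, so a node can always compare the old and new states when deciding what to do.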

IndexMetaData

IndexMetaData refers to the Meta of an Index, such as the shard count, replica count, and mapping of the Index. The following must be persisted in IndexMetaData:

long version: current version number, which increments by 1 for every update.

int routingNumShards: the shard count used for routing; it must be a multiple of the numberOfShards of this Index and is used for split.

State state: Index status, and it is an enum with the values OPEN or CLOSE.

Settings settings: configurations, such as numberOfShards and numberOfReplicas.

ImmutableOpenMap<String, MappingMetaData> mappings: mapping of the Index

ImmutableOpenMap<String, Custom> customs: custom configuration.

ImmutableOpenMap<String, AliasMetaData> aliases: alias

long[] primaryTerms: primaryTerm increments by 1 whenever a Shard switches the Primary, used to maintain the order.

ImmutableOpenIntMap<Set<String>> inSyncAllocationIds: the AllocationIds that are in the InSync state; it is used to ensure data consistency, which is described in later articles.

First, when an ES node is started, a data directory is configured, which is similar to the following. This node only has one Index with a single Shard.

$ tree
.
`-- nodes
    `-- 0
        |-- _state
        |   |-- global-1.st
        |   `-- node-0.st
        |-- indices
        |   `-- 2Scrm6nuQOOxUN2ewtrNJw
        |       |-- 0
        |       |   |-- _state
        |       |   |   `-- state-0.st
        |       |   |-- index
        |       |   |   |-- segments_1
        |       |   |   `-- write.lock
        |       |   `-- translog
        |       |       |-- translog-1.tlog
        |       |       `-- translog.ckp
        |       `-- _state
        |           `-- state-2.st
        `-- node.lock

You can see that the ES process writes Meta and Data to this directory, where the directory named _state indicates that the directory stores the meta file. Based on the file level, there are three types of meta storage:

nodes/0/_state/

This directory is at the node level. The global-1.st file under this directory stores the MetaData contents mentioned above except for the IndexMetaData part, that is, cluster-level configurations and templates. node-0.st stores the NodeId.

nodes/0/indices/2Scrm6nuQOOxUN2ewtrNJw/_state/

This directory is at the index level, 2Scrm6nuQOOxUN2ewtrNJw is IndexId, and the state-2.st file under this directory stores the IndexMetaData mentioned above.

nodes/0/indices/2Scrm6nuQOOxUN2ewtrNJw/0/_state/

This directory is at the shard level, and state-0.st under this directory stores ShardStateMetaData, which contains information such as allocationId and whether it is primary. ShardStateMetaData is managed in the IndexShard module, which is not that relevant to other Meta, so we will not discuss details here.

As you see, the cluster-related MetaData and the Index MetaData are stored in different directories. In addition, the cluster-related Meta is stored on all MasterNodes and DataNodes, while the Index Meta is stored on all MasterNodes and the DataNodes that already store this Index data.
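The three storage levels can be told apart from the path alone. The following Python sketch classifies a state file by its position in the data directory shown above; `meta_level` is a hypothetical helper written for this article's example layout and is not part of ES.

```python
from pathlib import PurePosixPath


def meta_level(state_file: str) -> str:
    """Classify a state file path (relative to the data directory) as
    'node', 'index', or 'shard' level, following the layout shown above."""
    parts = PurePosixPath(state_file).parts
    # Expected shapes:
    #   nodes/<n>/_state/<file>
    #   nodes/<n>/indices/<index-id>/_state/<file>
    #   nodes/<n>/indices/<index-id>/<shard>/_state/<file>
    if len(parts) < 4 or parts[0] != "nodes" or parts[-2] != "_state":
        raise ValueError(f"not a meta state file: {state_file}")
    middle = parts[2:-2]  # everything between nodes/<n> and _state/<file>
    if len(middle) == 0:
        return "node"
    if len(middle) == 2 and middle[0] == "indices":
        return "index"
    if len(middle) == 3 and middle[0] == "indices":
        return "shard"
    raise ValueError(f"unexpected layout: {state_file}")


levels = {
    path: meta_level(path)
    for path in (
        "nodes/0/_state/global-1.st",
        "nodes/0/indices/2Scrm6nuQOOxUN2ewtrNJw/_state/state-2.st",
        "nodes/0/indices/2Scrm6nuQOOxUN2ewtrNJw/0/_state/state-0.st",
    )
}
```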

The question here is, since MetaData is managed by the Master, why is MetaData also stored on DataNodes? This is mainly for data safety: many users do not plan for Master high availability or data reliability and configure only one MasterNode when deploying the ES cluster. In this situation, if that node fails, the Meta may be lost, which could have a severe impact on their businesses.

If an ES cluster restarts, some role must recover the Meta, because all processes have lost the Meta they previously held; this role is the Master. First, Master election must take place in the ES cluster, and then the failure recovery can begin.

When Master is elected, the Master process waits for specific conditions to be met, such as having enough current nodes in the cluster, which can prevent unnecessary data recovery situations due to disconnection of some DataNodes.

When the Master process decides to recover the Meta, it sends requests to the MasterNodes and DataNodes to obtain the MetaData stored on each of them. For the cluster, the Meta with the latest version number is selected. Likewise, for each Index, the Meta with the latest version number is selected. The cluster Meta and the Meta of each Index are then combined to form the latest Meta.
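The selection rule above, newest cluster-level Meta plus newest Meta per Index, can be sketched in a few lines of Python. `NodeMeta` and `recover_meta` are hypothetical names, and each node's report is reduced to version numbers only.

```python
from typing import Dict, List, NamedTuple, Tuple


class NodeMeta(NamedTuple):
    """What one node reports: its cluster-level meta version and per-index versions."""
    node_id: str
    global_version: int
    index_versions: Dict[str, int]


def recover_meta(
    reports: List[NodeMeta],
) -> Tuple[Tuple[str, int], Dict[str, Tuple[str, int]]]:
    """Pick the newest cluster-level meta and, independently, the newest meta
    of each index. Results are (node_id, version) pairs naming the source node."""
    if not reports:
        raise ValueError("no node reported any meta")
    best_global = max(reports, key=lambda r: r.global_version)
    best_index: Dict[str, Tuple[str, int]] = {}
    for report in reports:
        for index, version in report.index_versions.items():
            if index not in best_index or version > best_index[index][1]:
                best_index[index] = (report.node_id, version)
    return (best_global.node_id, best_global.global_version), best_index


reports = [
    NodeMeta("master-1", global_version=5, index_versions={"a": 3}),
    NodeMeta("data-1", global_version=4, index_versions={"a": 4, "b": 1}),
]
global_meta, index_meta = recover_meta(reports)
```

In this example the cluster-level Meta comes from `master-1`, while the Meta of both Indexes comes from `data-1`, which shows why the two kinds of Meta are selected independently before being combined.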

Now, we take a look at the ClusterState update process to see how ES guarantees consistency during ClusterState updates based on this process.

First, atomicity must be guaranteed when different threads change ClusterState within the master process. Imagine that two threads are modifying the ClusterState at the same time, each making its own changes. Without concurrency protection, the modification committed last overwrites the modification committed first, or the result is an invalid state.

To resolve this issue, ES submits a Task to MasterService whenever a ClusterState update is required, and MasterService uses a single thread to handle the Tasks sequentially. When a Task is handled, the current ClusterState is passed as the parameter of its execute function. This guarantees that every Task makes its change based on the current ClusterState and that different Tasks are executed sequentially.
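The single-threaded task queue described above can be modeled in Python as follows. This is a sketch of the idea only: `MasterServiceSketch` and `bump_version` are hypothetical names, and the state is a plain dictionary rather than a ClusterState.

```python
import queue
import threading
from typing import Callable, Dict

State = Dict[str, int]
Task = Callable[[State], State]


class MasterServiceSketch:
    """Hypothetical model: all state updates run on one worker thread."""

    def __init__(self, initial: State) -> None:
        self.state: State = initial
        self._tasks: queue.Queue = queue.Queue()
        self._worker = threading.Thread(target=self._run, daemon=True)
        self._worker.start()

    def submit(self, task: Task) -> None:
        """May be called from any thread; the task itself runs on the worker."""
        self._tasks.put(task)

    def _run(self) -> None:
        while True:
            task = self._tasks.get()
            if task is None:  # sentinel: stop the worker
                break
            # Each task receives the current state and returns the next one.
            self.state = task(self.state)

    def close(self) -> None:
        """Process everything submitted so far, then stop the worker."""
        self._tasks.put(None)
        self._worker.join()


def bump_version(state: State) -> State:
    return {**state, "version": state["version"] + 1}
```

Because only the worker thread ever reads and replaces `state`, callers on other threads cannot overwrite each other's changes; the order of the queue is the order of the updates.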

As you know, once the new Meta is committed on a node, the node performs the corresponding actions, such as deleting a Shard, and these actions cannot be rolled back. Therefore, if the Master node crashes while publishing a change, the newly elected Master must make its changes based on the new Meta, and the Meta must not be rolled back. Otherwise, the Meta is rolled back while the actions are not, which means the Meta update is no longer consistent.

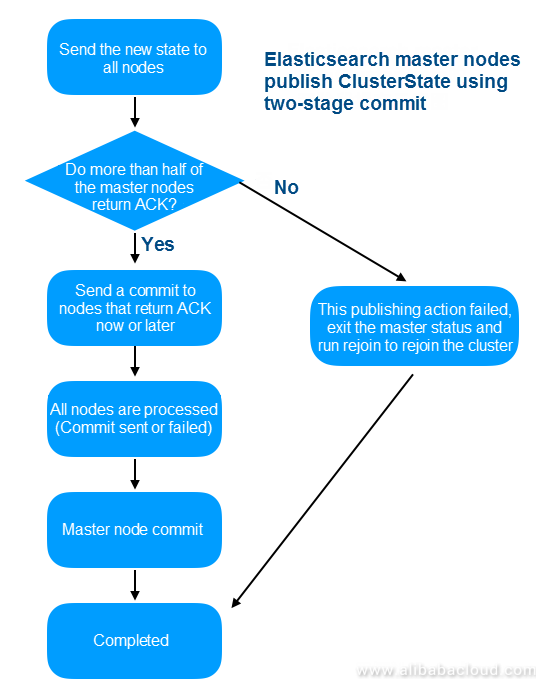

In the earlier ES versions, this issue was not resolved. Later, two-phase commit was introduced (Add two-phased commit to Cluster State publishing). Two-phase commit splits the process of the Master publishing ClusterState into two steps: first, the latest ClusterState is sent to all nodes; second, once more than half of the master nodes return ACK, the commit request is sent, requiring the nodes to commit the received ClusterState. If ACK is not returned by more than half of the nodes, the publish fails. In that case, the Master also gives up its master status and executes rejoin to rejoin the cluster.
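The two steps above can be sketched as a small Python model. It is a simplification under stated assumptions: `Node` and `publish` are hypothetical names, the list passed to `publish` is assumed to contain all master-eligible nodes including the Master itself, and a received state is only held in memory, as discussed later in this article.

```python
from typing import List, Optional


class Node:
    """Hypothetical master-eligible node holding a pending and a committed version."""

    def __init__(self, name: str, reachable: bool = True) -> None:
        self.name = name
        self.reachable = reachable
        self.pending: Optional[int] = None  # received, not yet committed (memory only)
        self.committed: int = 0

    def receive(self, version: int) -> bool:
        """Phase 1: queue the new state and return ACK (True) if reachable."""
        if not self.reachable:
            return False
        self.pending = version
        return True

    def commit(self, version: int) -> bool:
        """Phase 2: commit the state that was received in phase 1."""
        if not self.reachable or self.pending != version:
            return False
        self.committed, self.pending = version, None
        return True


def publish(version: int, master_eligible: List[Node]) -> bool:
    """Send the state to every node, then commit only if a majority ACKed."""
    quorum = len(master_eligible) // 2 + 1
    acks = sum(1 for node in master_eligible if node.receive(version))
    if acks < quorum:
        return False  # publish fails; a real Master would step down and rejoin
    for node in master_eligible:
        node.commit(version)
    return True


cluster = [Node("a"), Node("b"), Node("c", reachable=False)]
published = publish(1, cluster)
```

With two of three nodes reachable the publish succeeds and the two reachable nodes commit version 1. With only one reachable node, `publish` returns `False` and nothing is committed.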

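Two-phase commit solves part of the consistency issue, because a ClusterState is committed only after more than half of the master nodes have acknowledged receiving it. This approach, however, still has consistency issues, which we cover next.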

The principle in ES is that the Master sends a commit request once more than half of the MasterNodes (master-eligible nodes) have received the new ClusterState. The assumption is that if more than half of the nodes have received the new ClusterState, it can definitely be committed and will not be rolled back in any situation.

Issue one

In the first phase, the master node sends the new ClusterState, and the nodes that receive it simply queue it in memory and then return ACK. Nothing is persisted at this point, so even when the master node has received ACKs from more than half of the nodes, we cannot assume that the new ClusterState is safely held by those nodes.

Issue two

Suppose a network partition occurs during the commit phase, when the master has committed the new ClusterState on only a few nodes, and the master and those nodes end up in the same partition while the other nodes can still reach each other. If the other nodes are in the majority, they elect a new master. Since none of these nodes has committed the new ClusterState, the new master continues from the ClusterState that was in place before the update, causing meta inconsistency.

ES is still tracking this bug:

https://www.elastic.co/guide/en/elasticsearch/resiliency/current/index.html

Repeated network partitions can cause cluster state updates to be lost (STATUS: ONGOING)

... This problem is mostly fixed by #20384 (v5.0.0), which takes committed cluster state updates into account during master election. This considerably reduces the chance of this rare problem occurring but does not fully mitigate it. If the second partition happens concurrently with a cluster state update and blocks the cluster state commit message from reaching a majority of nodes, it may be that the in flight update will be lost. If the now-isolated master can still acknowledge the cluster state update to the client, this will amount to the loss of an acknowledged change. Fixing that last scenario needs considerable work. We are currently working on it but have no ETA yet.

Under what circumstances will problems occur?

In a two-phase commit, one of the most important conditions is that more than half of the nodes return ACK, indicating that they have received this ClusterState. In ES, however, this condition does not guarantee anything. On one hand, a received ClusterState is not persisted; on the other hand, a received ClusterState has no influence on future Master elections, because only committed ClusterStates are considered.

During the two-phase process, the master is in a safe state only after it has committed the new ClusterState on more than half of the MasterNodes (master-eligible nodes) and these nodes have all persisted it. If the master fails, or its commit requests fail to be delivered, at any point between the beginning of the commit and this state, meta inconsistency may occur.
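The partition scenario from issue two can be traced step by step with a small Python simulation. Everything here is an illustrative assumption: five master-eligible nodes with made-up names, committed state reduced to a version number and a description, and a new master that starts from the newest committed state visible in its partition.

```python
from typing import Dict, List, Tuple

Committed = Tuple[int, str]  # (version, description of the meta content)


def has_quorum(partition: List[str], master_eligible_count: int) -> bool:
    """A partition can elect a master only if it holds more than half of the nodes."""
    return len(partition) > master_eligible_count // 2


def newest_committed(partition: List[str], committed: Dict[str, Committed]) -> Committed:
    """Only committed states count in the election, so pick the highest committed version."""
    return max((committed[n] for n in partition), key=lambda c: c[0])


nodes = ["m", "n1", "n2", "n3", "n4"]
committed: Dict[str, Committed] = {n: (1, "index A exists") for n in nodes}

# The commit phase of version 2 is interrupted: only the master "m" and "n1" commit.
for n in ["m", "n1"]:
    committed[n] = (2, "index A deleted")

# The network splits; the old master ends up in the minority.
minority, majority = ["m", "n1"], ["n2", "n3", "n4"]

# The majority elects a new master, which builds on what its partition committed.
base = newest_committed(majority, committed)
new_state: Committed = (base[0] + 1, "index B created")
for n in majority:
    committed[n] = new_state

# Same version number on both sides, but different content.
diverged = (committed["n1"][0] == committed["n2"][0]
            and committed["n1"][1] != committed["n2"][1])
```

At the end, `n1` has already acted on a version 2 that deleted index A, while `n2` holds a different version 2 built on top of version 1. This is the divergence that the safe-state condition above is meant to exclude.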

Since ES has some consistency problems with meta updates, here are some ways to resolve these problems.

The first approach is to implement a standard consistency algorithm, such as Raft. In the previous article, we explained the similarities and differences between the ES election algorithm and the Raft algorithm. Now, we continue by comparing the ES meta update process with the Raft log replication process.

Similarities:

Commit is performed after receiving a response from more than half of the nodes.

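Differences:

In Raft, a follower persists a received log entry before acknowledging it. In ES, a node that receives a ClusterState only queues it in memory before returning ACK.

In Raft, the log entries a node has received are taken into account in leader election, whether or not they are committed. In ES, only committed ClusterStates are considered in Master election.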

The comparison above shows that the meta update algorithms of ES and Raft are similar. However, Raft has additional mechanisms to ensure consistency, while ES has some consistency problems. ES has officially said that considerable work is needed to fix these problems.

The second approach is to use an additional component to ensure meta consistency, for example, saving the meta in ZooKeeper. This does solve the consistency problems, but performance still needs to be considered, for example, whether the meta can still be saved in ZooKeeper when the meta volume is large, and whether the full meta or only diff data needs to be requested each time.

The third approach is to save the meta in shared storage. First, ensure that no split-brain can occur, then save the meta in shared storage to avoid consistency problems. This approach requires a shared storage system that is highly reliable and available.

As the second article in this series about Elasticsearch distributed consistency principles, this article shows how the master node in an ES cluster publishes meta updates and analyzes the potential consistency problems. It also describes what the meta data consists of and how it is stored. The next article explains the methods used to ensure data consistency in ES, as well as the data-writing process and algorithm models.

To learn more about Elasticsearch on Alibaba Cloud, visit https://www.alibabacloud.com/product/elasticsearch

Elasticsearch Resiliency Status

Elasticsearch Distributed Consistency Principles Analysis (1) - Node

Elasticsearch Distributed Consistency Principles Analysis (3) - Data
