By Priyankaa Arunachalam, Alibaba Cloud Community Blog author.

Understanding how Big Data analytics works on Alibaba Cloud E-MapReduce is important, and so is managing the environment you run it on. Managing a Hadoop cluster, which includes maintaining high availability, starting and stopping services, and scaling out when computing resources run short, is essential for processing big data smoothly and without interruption. Alibaba Cloud makes these tasks easier because you can manage everything from the web console.

This article covers EMR cluster management for people who are new to Alibaba Cloud E-MapReduce. The previous article, Diving into Big Data: Getting Started with OSS and EMR, showed how to create a cluster in EMR as an initial step. This article looks at the different ways to create an EMR cluster, the services that run once a cluster starts, and how to expand and release a cluster, among other things.

The advantages of creating a cluster using EMR:

So now, let's take a look at how to use some of these features in the Alibaba Cloud E-MapReduce console.

As seen earlier, you can easily build a Big Data environment with Alibaba Cloud E-MapReduce (EMR). You can create and launch a cluster in a few minutes, so you spend less time on cluster creation and more on processing and insights.

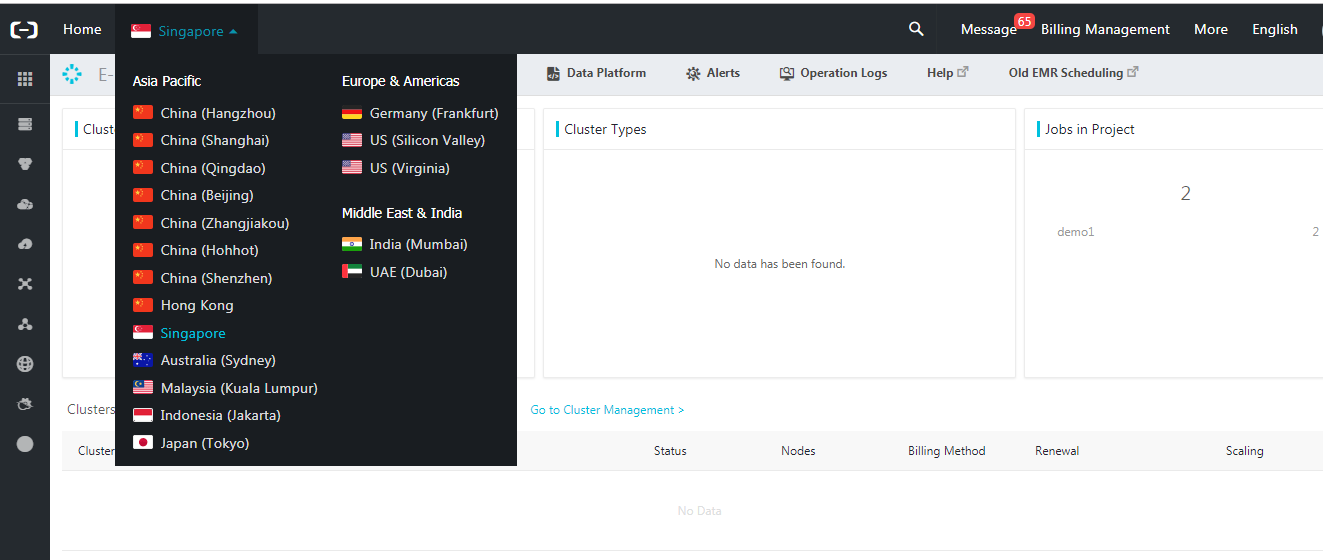

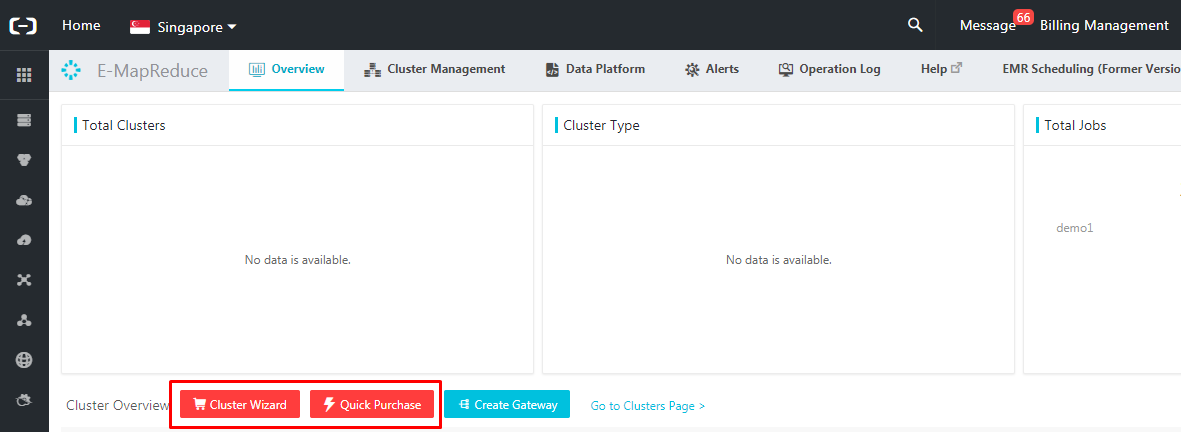

The following are the steps for creating a cluster in the EMR console.

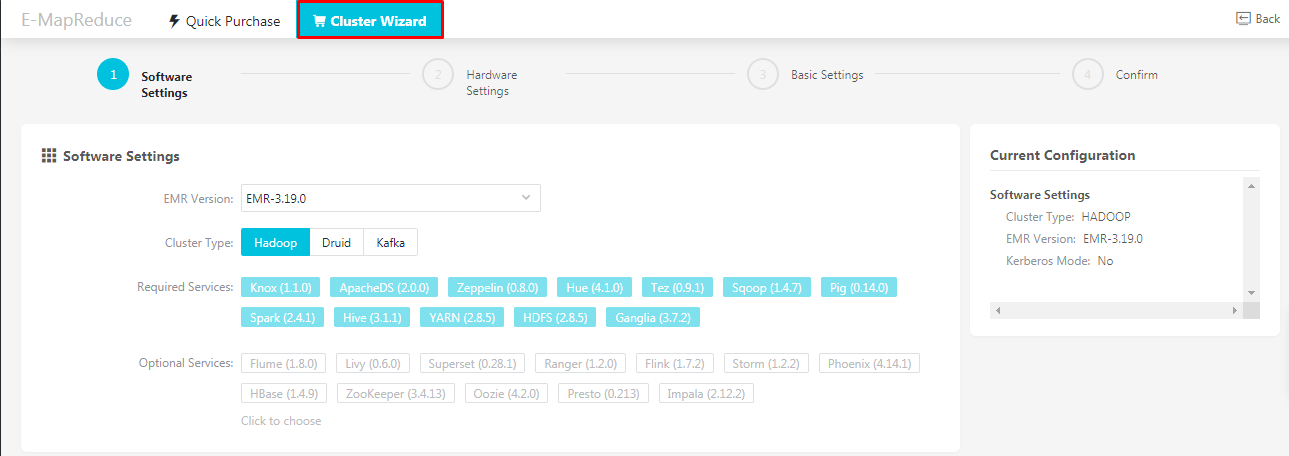

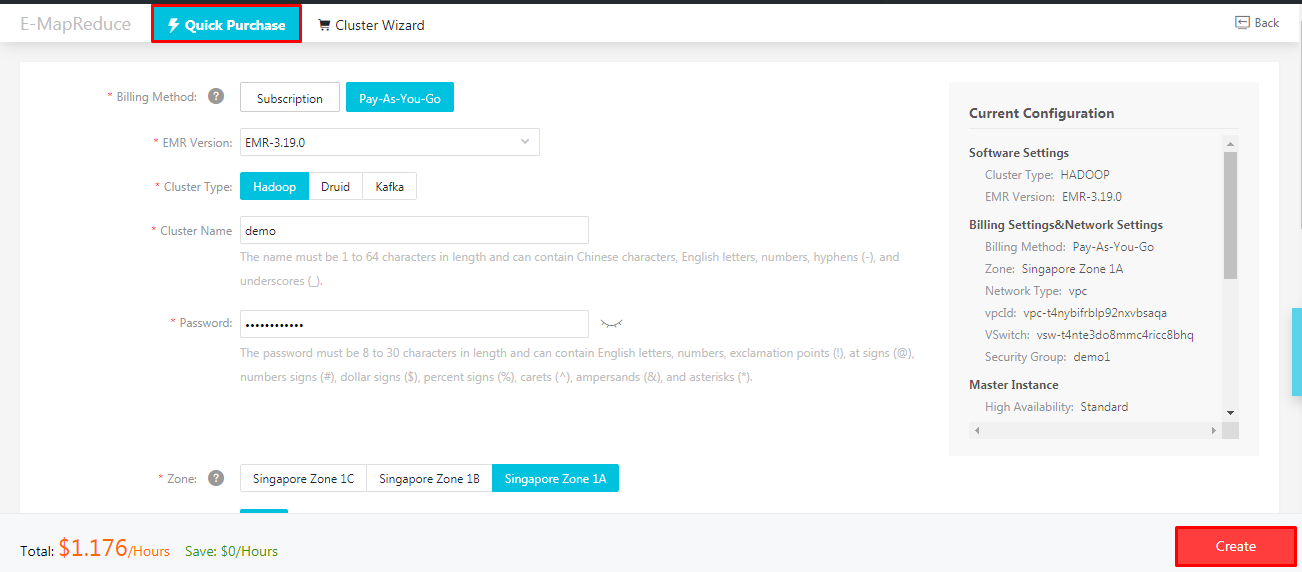

You can use one of the following to create a cluster in EMR:

Cluster Wizard is a custom purchase option in which you choose a cluster type, such as Hadoop or Kafka, and select optional services from a list in addition to the built-in services. For detailed information about this type of purchase, refer to the previous article, Diving into Big Data: Getting Started with OSS and EMR.

A newer feature of the EMR console is quick purchase, in which a pre-built set of services and configurations is filled in by default. Just specify a cluster name, password, and security group, and click Create. You can also see a price estimate based on the selected configuration. This creates a basic cluster. Relax for a few minutes while the cluster is being launched.

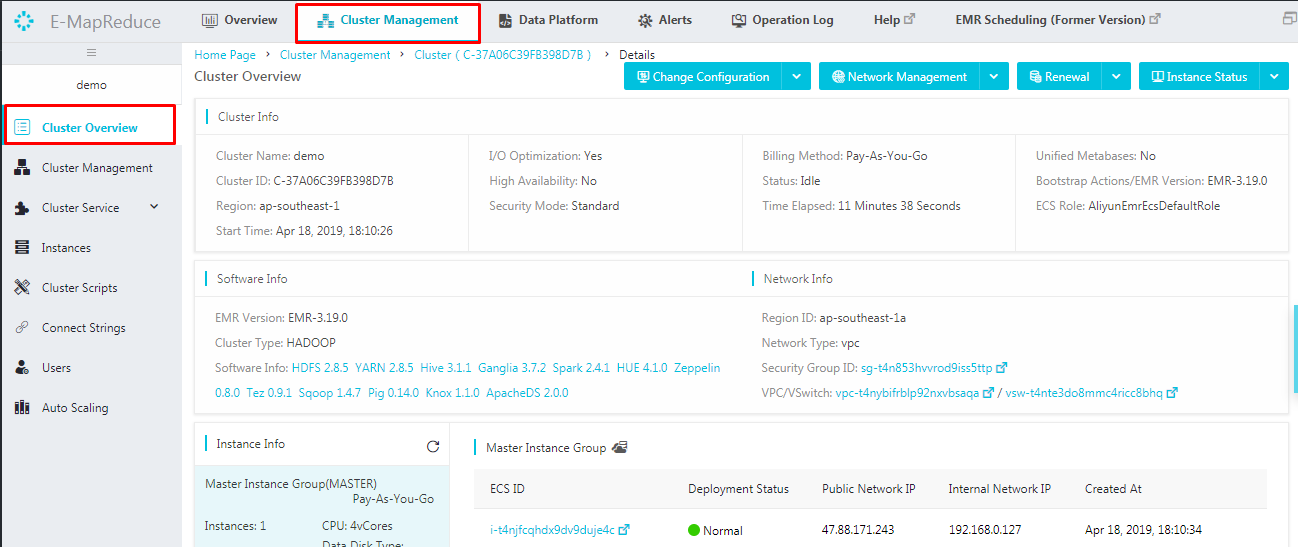

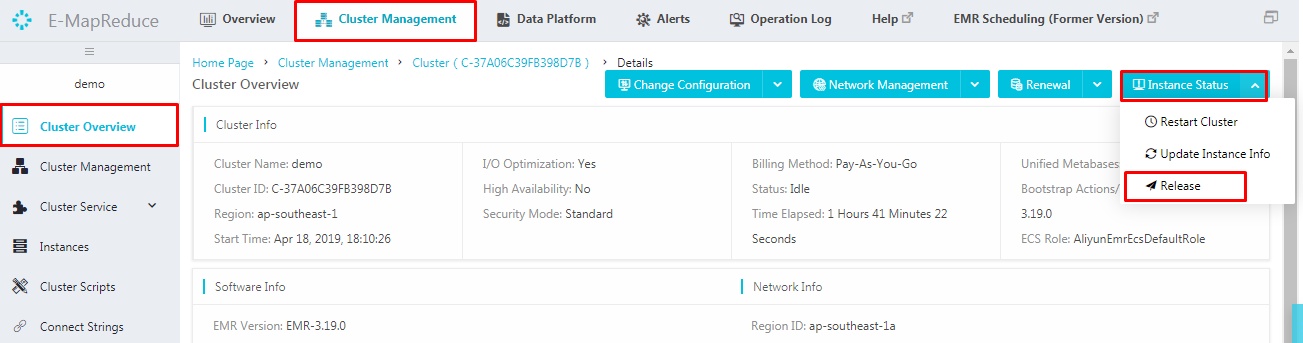

Once the cluster is created, go to the Cluster Management tab and click the cluster you created. On the left pane, click Cluster Overview. This opens a screen where you can see details such as the software information and instance information. From here you can change configurations, change the instance status, and adjust other settings.

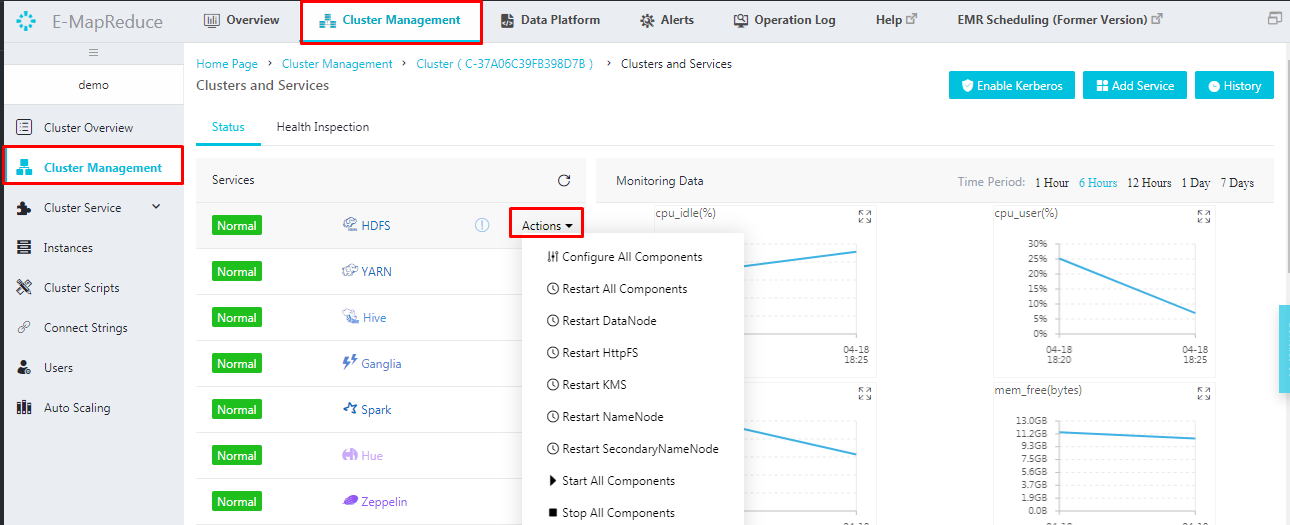

Navigate to Cluster Management next. This shows the list of services that are up and running, such as HDFS, YARN, Hive, Spark, and others. You can start, stop, or restart a component at any time by clicking Actions next to the corresponding service.

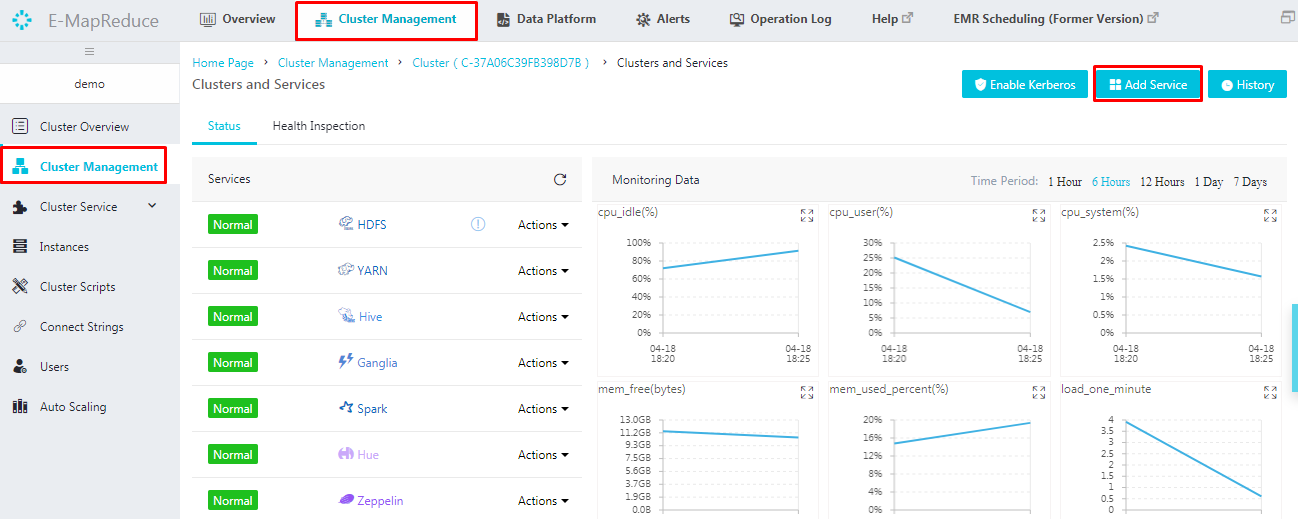

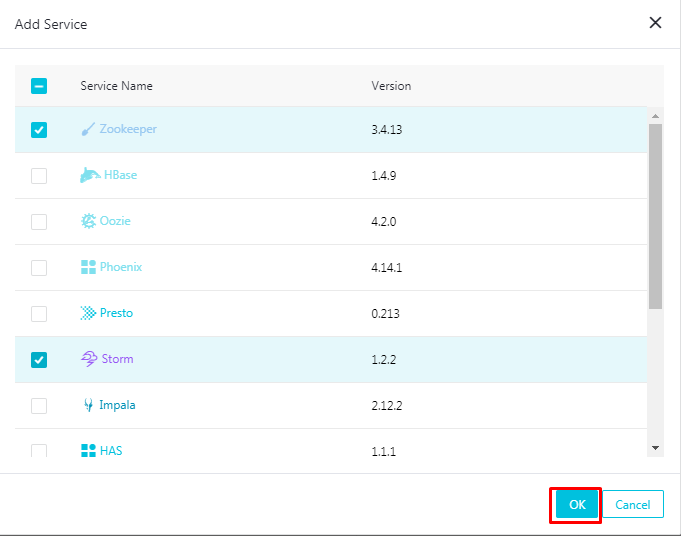

We now have a list of running services, and at some point we may need to add more services to the cluster. This is possible at any time with a single click. To add one or more services to the cluster, click Add Service.

In the Add Service wizard that pops up, various services with their corresponding versions will be displayed. Select the required services and click OK.

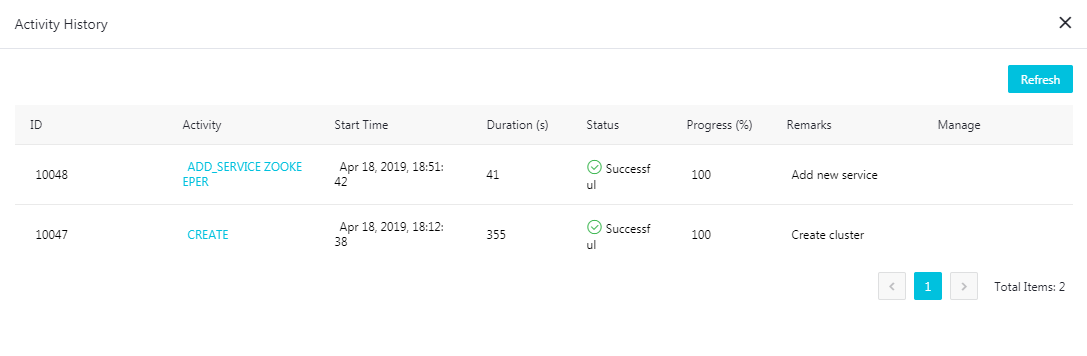

To see the status of the added service, open the history to view the activity history. Here the added service appears in the executing state, and once it is up, the status changes to successful, as shown below.

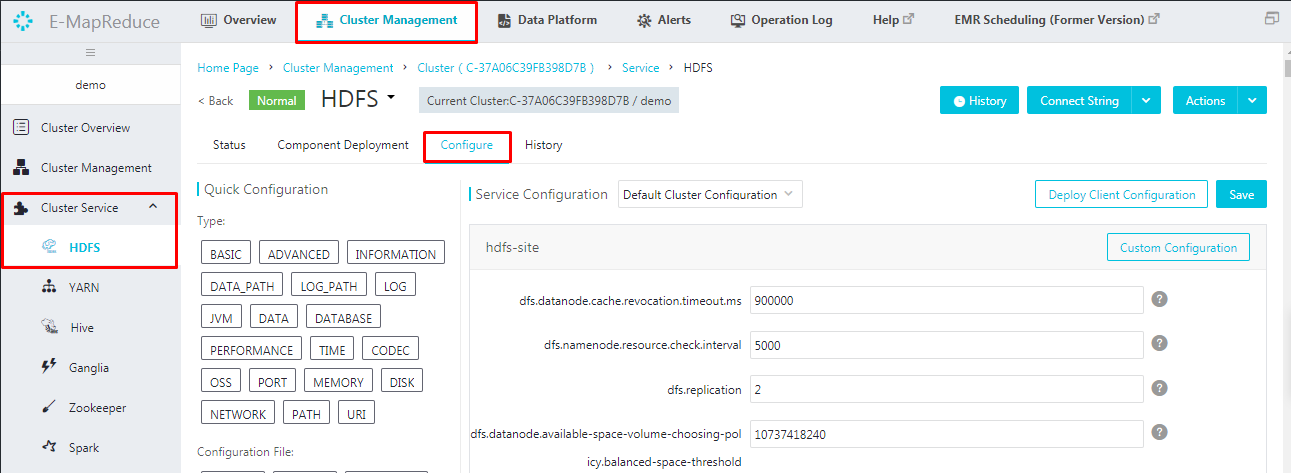

Click a service under Cluster service to view the corresponding tabs, such as Status, Component Deployment, Configuration, and History. To change the configuration of a service, you can use the E-MapReduce interface. Major configuration files such as hdfs-site and core-site are available under Configuration.
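To make the configuration files mentioned above more concrete, here is a minimal Python sketch of the `name`/`value` property layout that Hadoop `*-site.xml` files use. The helper names `render_hadoop_site` and `parse_hadoop_site` and the example values are illustrative assumptions, not part of the EMR console or any Alibaba Cloud API. In EMR you would normally edit these values through the Configuration tab rather than by hand.

```python
import xml.etree.ElementTree as ET


def render_hadoop_site(properties):
    """Render a dict of settings in the Hadoop *-site.xml property layout."""
    root = ET.Element("configuration")
    for name, value in sorted(properties.items()):
        prop = ET.SubElement(root, "property")
        ET.SubElement(prop, "name").text = name
        ET.SubElement(prop, "value").text = str(value)
    if hasattr(ET, "indent"):  # pretty-printing is available on Python 3.9+
        ET.indent(root)
    return ET.tostring(root, encoding="unicode")


def parse_hadoop_site(xml_text):
    """Read the name/value pairs back into a dict of strings."""
    root = ET.fromstring(xml_text)
    return {p.findtext("name"): p.findtext("value") for p in root.iter("property")}


# Example values are assumptions chosen for illustration only.
hdfs_site = {"dfs.replication": 2}
core_site = {"fs.defaultFS": "hdfs://example-master:9000"}  # hypothetical host

print(render_hadoop_site(hdfs_site))
print(render_hadoop_site(core_site))
```

Reading a file back with `parse_hadoop_site` is a quick way to check which values a service is actually configured with.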

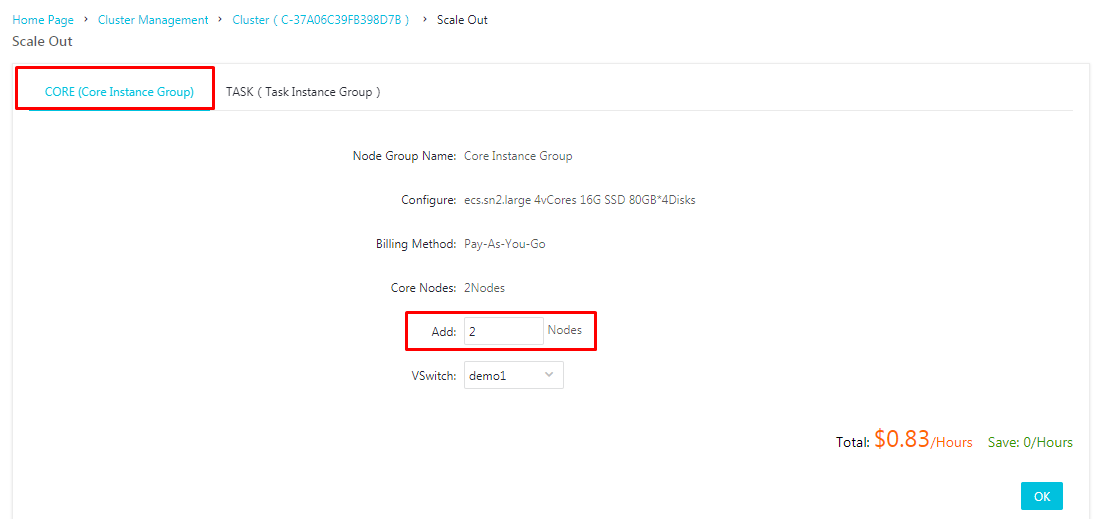

Scaling out a cluster means expanding it, either because HDFS disk usage is high or because you need more processing capacity. For example, suppose we create a 3-node cluster with one master node and two worker nodes. Scaling out this cluster means adding worker or client nodes to increase computing capacity.

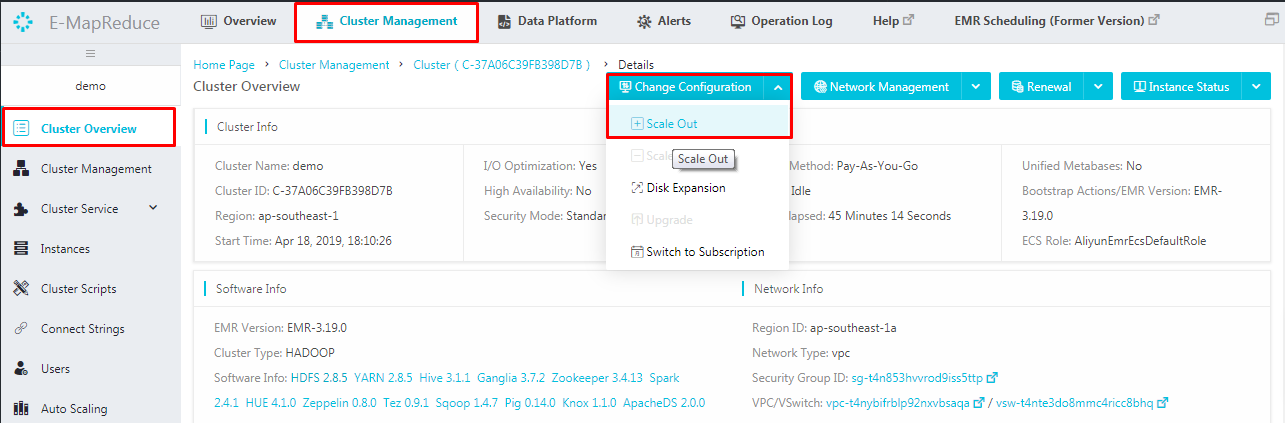

With Alibaba E-MapReduce, you can expand a cluster horizontally when your resources are insufficient for your needs. So what will be the configuration of the newly added nodes? When you expand a cluster, the new nodes default to the same configuration as the ECS instances that were purchased previously. You can expand a cluster but cannot reduce it. To add worker nodes to a cluster, navigate to Cluster Overview under the Cluster Management tab. Click Change Configuration and choose Scale Out from the list.

In the scale out wizard, you can add either core nodes or task nodes. Choose the type of node you want to add, specify the number of nodes to add to the cluster, and click OK.

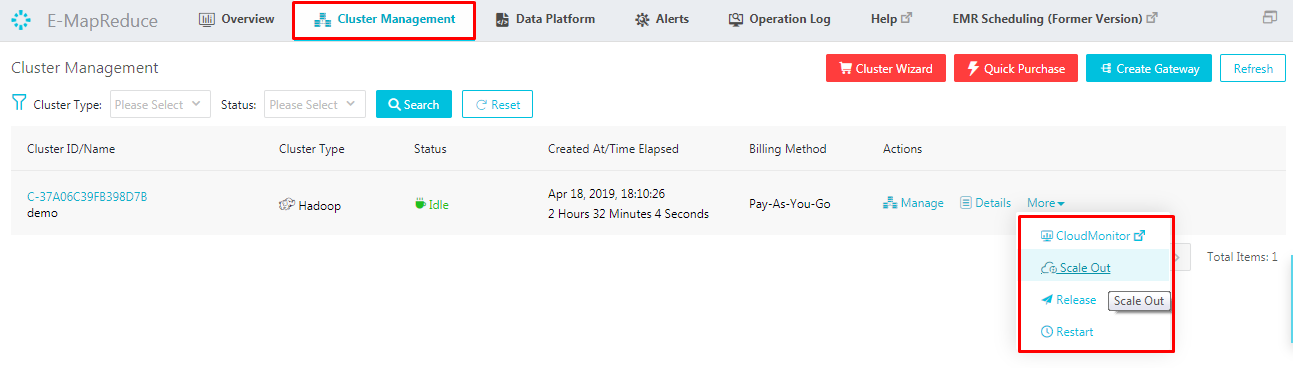

You can also add nodes by clicking More next to the cluster and selecting Scale Out from the More options contextual menu.

To view the expansion status of a cluster, click on Core Instance Group under Cluster Overview. Nodes that are being added to the cluster are displayed as Scaling Up/Out. When the status of an ECS instance changes to Normal, it means the instance has been added to the cluster and can now be used in your services.
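The scale-out rules described above can be summarized in a small model. This is a minimal sketch in Python, not the EMR API: the `InstanceGroup` class, its methods, and the instance type label are hypothetical names introduced for illustration. It captures three points from the text: nodes can only be added, new nodes share the group's existing instance type, and a node is usable only once its status is Normal.

```python
from dataclasses import dataclass, field


@dataclass
class InstanceGroup:
    """Hypothetical model of a core or task instance group."""
    name: str                 # "core" or "task"
    instance_type: str        # new nodes reuse this type by default
    statuses: list = field(default_factory=list)  # one status string per node

    def scale_out(self, count):
        # The console lets you expand a cluster but not reduce it.
        if count <= 0:
            raise ValueError("a cluster can be expanded but not reduced")
        self.statuses.extend(["Scaling Up/Out"] * count)

    def mark_ready(self):
        # Simulates the ECS instances finishing their join to the cluster.
        self.statuses = ["Normal"] * len(self.statuses)

    def usable_nodes(self):
        return sum(1 for status in self.statuses if status == "Normal")


core = InstanceGroup("core", "example.instance.type", ["Normal", "Normal"])
core.scale_out(2)
print(core.statuses)        # two Normal nodes plus two still scaling out
core.mark_ready()
print(core.usable_nodes())  # all four nodes are now usable
```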

The autoscaling feature of Alibaba E-MapReduce is used to reduce costs and improve execution efficiency because:

To enable autoscaling, navigate to Autoscaling under Cluster Management. To do this through an Alibaba Cloud account for the first time, you will need to assign a default role to the EMR service. Click OK on the authorization page of Auto Scaling.

You can also see the Disk Expansion and Switch to Subscription options in the Change Configuration drop-down. Because I am using the Pay-As-You-Go model in this tutorial, I can switch to the Subscription model at any time with this option. Similarly, when storage space runs low, you can expand the disk size at any time by using the Disk Expansion option. As with node expansion, you can increase the disk size but cannot reduce it, and the expansion applies only to data disks. Specify the size you want to expand to and restart the cluster for the changes to take effect.

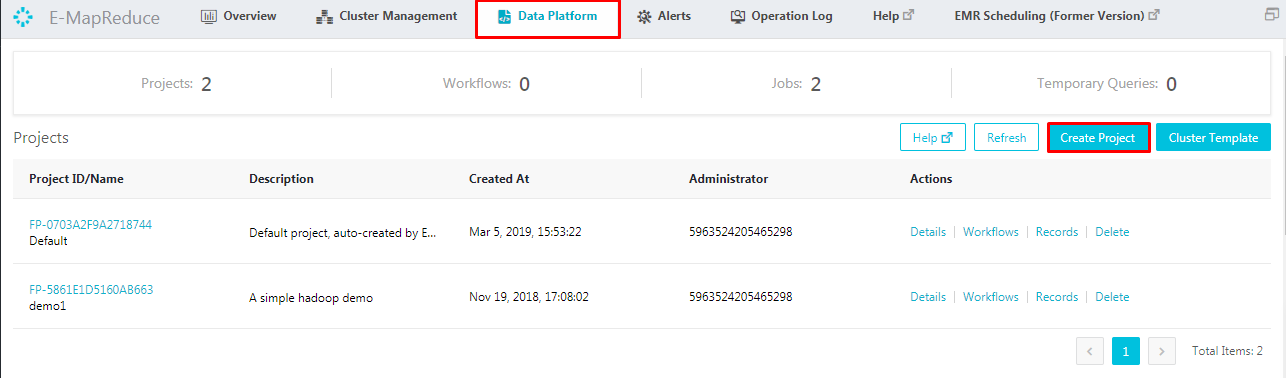

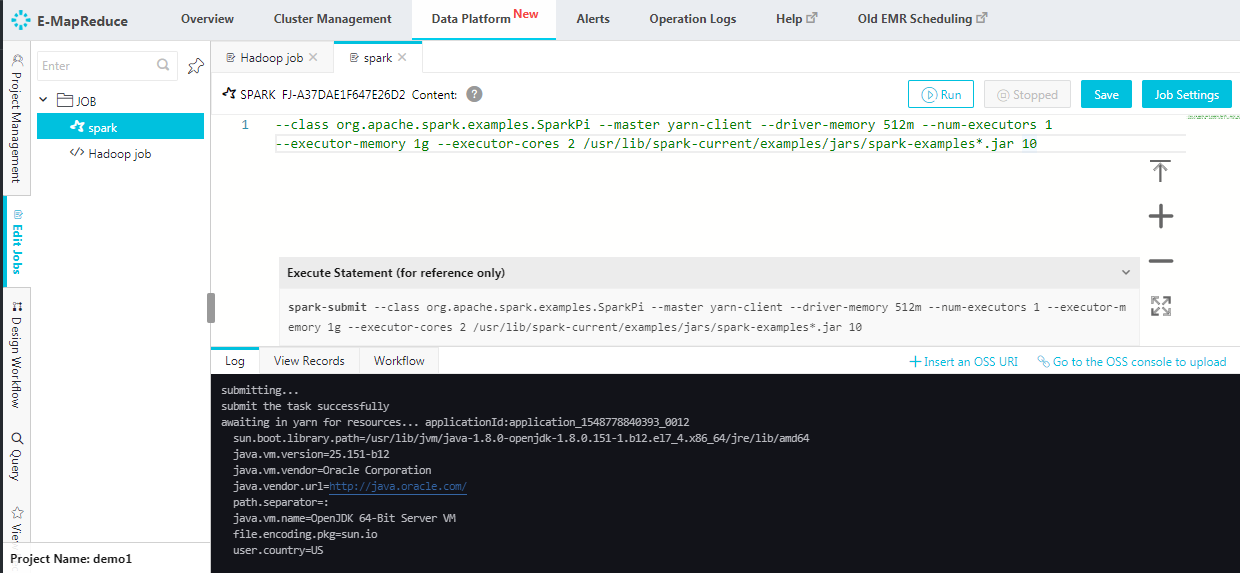

Navigate to the Data Platform tab to create jobs using the user interface. Projects that were already created are listed under Data Platform. Either edit an existing project or create a new one by clicking Create Project.

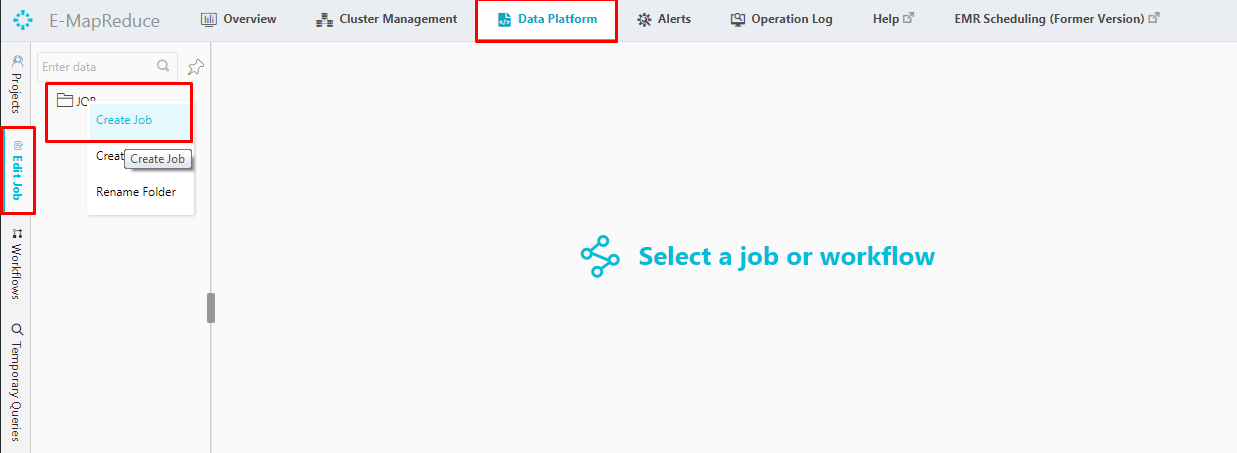

Clicking Workflows takes you to the workflows and jobs page. Edit jobs that were already created, or right-click Jobs and select Create Job.

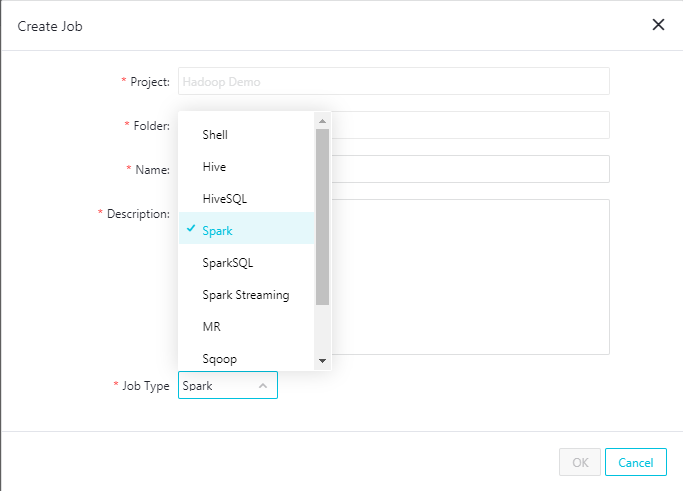

In the create job wizard, choose the project under which the job should be created. Give the job a name and a description, and choose the job type from the options. For example, if you want to submit a Spark job, choose Spark as the job type. The other Hadoop job types are listed here in the Alibaba E-MapReduce interface as well.

Once you have selected the job type, submit the Spark job and click Run.

In the Pay-As-You-Go model, you can release the cluster at any time once all your jobs are done, which saves money. To release a cluster, navigate to Cluster Overview under Cluster Management. Click Instance Status and choose Release.
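Outside the console, a Spark job is typically launched with `spark-submit`. As a rough illustration of what such a job definition contains, here is a minimal Python sketch that assembles a `spark-submit` command line for a YARN cluster. The helper `build_spark_submit`, the jar path, and the resource values are assumptions for illustration; the exact job content that the EMR job editor expects may differ.

```python
import shlex


def build_spark_submit(main_class, jar, app_args=(), num_executors=2,
                       executor_memory="2g"):
    """Assemble a spark-submit argument list for a job running on YARN."""
    argv = [
        "spark-submit",
        "--master", "yarn",
        "--deploy-mode", "cluster",
        "--class", main_class,
        "--num-executors", str(num_executors),
        "--executor-memory", executor_memory,
        jar,
    ]
    argv.extend(str(arg) for arg in app_args)
    return argv


# The jar path is a placeholder; point it at the jar you actually uploaded.
cmd = build_spark_submit(
    "org.apache.spark.examples.SparkPi",
    "/path/to/spark-examples.jar",
    app_args=["100"],
)
print(shlex.join(cmd))
```

Building the command as a list rather than a single string avoids quoting problems when arguments contain spaces.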

Before releasing a cluster, there will be a prompt to confirm the operation. When you confirm the release,

If you no longer need logs and want to immediately terminate running clusters, then choose forcible release. This will terminate the cluster immediately without collecting the logs.

In this tutorial, you learned how to navigate through the Alibaba E-MapReduce interface, which can make creating and managing clusters easier and can ultimately help you to gain insights from your data.
