
By Shengyu Zhang, Ziqi Tan, Jin Yu, Zhou Zhao, Kun Kuang, Tan Jiang, Jingren Zhou, Hongxia Yang, Fei Wu

Video recommendation in e-commerce plays an important role in acquiring new customers. For example, many consumers upload videos in the product comment area to share their shopping experiences. These videos, which present the product or the buyer's experience with it, may attract potential buyers to purchase the same or similar products. Unlike professionally produced videos, such as ads, consumer-generated videos usually come in large quantities and depict consumers' individual preferences. Therefore, we can improve the effectiveness of e-commerce video recommendation by pushing consumer-generated videos to potential consumers who are interested in the same product.

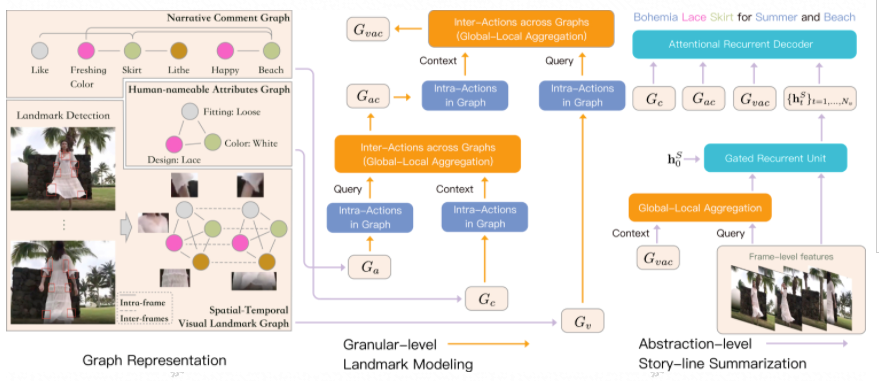

To this end, we designed a model that automatically generates titles for consumer-generated videos. The model extracts, associates, and aggregates meaningful information from three kinds of facts: (1) the consumer-generated videos, which visually illustrate the detailed characteristics and story-line topics of products; (2) the comments written by consumers, which mainly describe consumers' preferences for different aspects of products and their shopping experiences, but are too noisy to be used directly as titles; and (3) the attributes of the associated products, for example, mid-length or long for dresses, which specify the human-nameable qualities of the products. These attributes can be extracted as model inputs because each consumer-generated video uploaded to the product comment area is associated with a particular product. The following sections explain the architecture of our model, Gavotte, and present the major experiments and analysis.

The paper, "Comprehensive Information Integration Modeling Framework for Video Titling", has been accepted by KDD 2020.

This section explains how the Graph-based Video Title generator (Gavotte), the new learning schema we proposed, represents the three types of inputs as graph structures.

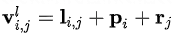

Product video information: We used landmark detection (Liu et al. 2018) to detect the features of the various parts of a garment. Each part in each frame is treated as a graph node. We fully connected the nodes within the same frame, and also fully connected the nodes that represent the same part across different frames. The within-frame connections capture the interactions between parts of the product and the overall style of the product. The cross-frame connections capture the dynamic changes of each part along the timeline and give a comprehensive view of each part from different viewpoints. To enhance the temporal nature and local features of the spatial-temporal visual landmark graph, we applied position embedding (Gehring et al. 2017) and type embedding to each node. The final node representation is as follows:

Video comment information: We used each word in a comment as a graph node and connected the nodes that have a syntactic dependency between them. For video titling, we found that modeling these dependencies is more useful for capturing product-related semantic information in comments than modeling the temporal relation between words.

Associated product attributes: We used each attribute value, such as the color white, as a graph node and fully connected all nodes. Because there is no temporal relation between the attributes, graph modeling is better suited to exploring the specific interactions between them.
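The three graph structures described above can be sketched in a few lines. This is a minimal illustration rather than the authors' implementation: the function names, the `(frame, landmark)` node encoding, and the `heads` list standing in for a dependency parser's output are all assumptions made for the example.

```python
from itertools import combinations


def landmark_graph(num_frames, num_landmarks):
    """Undirected edges of a spatial-temporal landmark graph.

    A node is a (frame, landmark) pair (hypothetical encoding).
    """
    edges = set()
    for t in range(num_frames):
        # Spatial edges: fully connect all landmarks within one frame.
        for a, b in combinations(range(num_landmarks), 2):
            edges.add(((t, a), (t, b)))
    for k in range(num_landmarks):
        # Temporal edges: fully connect the same landmark across frames.
        for s, t in combinations(range(num_frames), 2):
            edges.add(((s, k), (t, k)))
    return edges


def comment_graph(heads):
    """Undirected edges between syntax-dependent words.

    heads[i] is the index of the word that word i depends on, or -1 for
    the root. The parse itself is assumed to come from an external parser.
    """
    return {(min(i, h), max(i, h)) for i, h in enumerate(heads) if h >= 0}


def attribute_graph(values):
    """Fully connected graph over product attribute values."""
    return set(combinations(values, 2))
```

For instance, `landmark_graph(3, 4)` yields 18 within-frame edges plus 12 cross-frame edges, which makes the two kinds of connection in the landmark graph easy to inspect separately.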

As shown in the preceding figure, granular-level interaction modeling is the process of modeling the intra-actions in each of the three heterogeneous graphs and the inter-actions across these graphs.

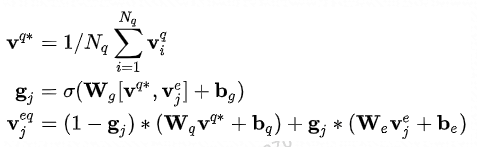

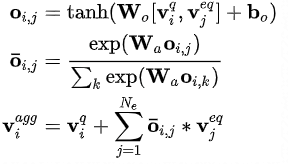

Modeling the intra-actions within each graph aims to identify granular-level, product-related features. Following common practice, we used graph neural networks (GNNs) as a trainable schema for this purpose. Unlike traditional GNNs, we modeled the root node and its neighbor nodes separately during information propagation. We also used self-gating.

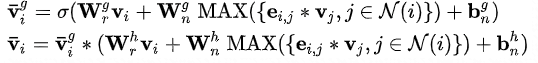

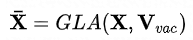
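As a rough illustration of the idea of treating the root node and its neighbors separately and then applying self-gating, the sketch below runs one propagation step over a small graph. It is not the exact formulation from the paper: the mean aggregation over neighbors, the `tanh` nonlinearity, the form of the gate, and the weight names `W_root`, `W_nbr`, and `W_gate` are all assumptions chosen for brevity.

```python
import math


def matvec(W, x):
    """Multiply matrix W (list of rows) by vector x."""
    return [sum(w * v for w, v in zip(row, x)) for row in W]


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def propagate(h, neighbors, W_root, W_nbr, W_gate):
    """One propagation step with separate root and neighbor transforms.

    h: dict mapping node -> feature vector.
    neighbors: dict mapping node -> list of neighbor nodes.
    """
    out = {}
    for i, h_i in h.items():
        dim = len(h_i)
        nbrs = neighbors.get(i, [])
        # Assumed aggregation: mean of neighbor features (zeros if isolated).
        if nbrs:
            mean_nbr = [sum(h[j][d] for j in nbrs) / len(nbrs) for d in range(dim)]
        else:
            mean_nbr = [0.0] * dim
        # The root node and its neighbors use different weight matrices.
        root_msg = matvec(W_root, h_i)
        nbr_msg = matvec(W_nbr, mean_nbr)
        cand = [math.tanh(r + n) for r, n in zip(root_msg, nbr_msg)]
        # Assumed self-gating: the candidate gates itself elementwise.
        gate = [sigmoid(z) for z in matvec(W_gate, cand)]
        out[i] = [g * c for g, c in zip(gate, cand)]
    return out
```

Keeping `W_root` and `W_nbr` distinct lets the update weigh a node's own features differently from what it receives from its neighbors, which is the distinction the paragraph above draws against a standard GNN layer.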

Modeling the inter-actions across graphs aims to associate and aggregate granular-level, product-related features across the heterogeneous graphs. The module that models these inter-actions is called global-local aggregation (GLA), a name that reflects how it works. GLA consists of two sub-modules: global gated access (GGA) and local attention (LA). GLA takes a query graph and a context graph as input and outputs an aggregated graph that has the same structure as the query graph.

GGA enhances the information in the context graph that is globally related to the query graph and suppresses irrelevant information. This process can be viewed as preliminary filtering.

LA selects the content of the context graph that is related to each node of the query graph at the local level, and then aggregates that content into the nodes of the query graph.

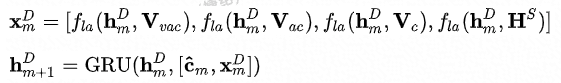

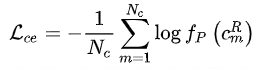
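The two-stage behavior of GLA can be sketched as follows. This is a simplified stand-in under stated assumptions, not the paper's parameterization: the global query summary is a plain mean, the gate is a sigmoid of a dot product, the local attention is unparameterized dot-product attention with a residual sum, and query and context nodes are assumed to share one feature dimension.

```python
import math


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def softmax(scores):
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def global_gated_access(query, context):
    """Scale each context node by its relevance to the whole query graph."""
    dim = len(query[0])
    # Assumed global summary: mean of all query node features.
    q_global = [sum(q[d] for q in query) / len(query) for d in range(dim)]
    return [[sigmoid(dot(c, q_global)) * x for x in c] for c in context]


def local_attention(query, context):
    """Attend from each query node to the context nodes and aggregate."""
    out = []
    for q in query:
        weights = softmax([dot(q, c) for c in context])
        ctx = [sum(w * c[d] for w, c in zip(weights, context)) for d in range(len(q))]
        # Assumed aggregation: add the attended context to the query node.
        out.append([a + b for a, b in zip(q, ctx)])
    return out


def gla(query, context):
    """Global filtering first, then node-level aggregation."""
    return local_attention(query, global_gated_access(query, context))
```

Because `local_attention` emits exactly one vector per query node, the result keeps the node set of the query graph regardless of how many context nodes there are, which matches the input-output contract described above.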

The abstraction-level story-line summarization modeling focuses on frame-level and video-level information. Therefore, it leverages a recurrent neural network (RNN) to conduct sequence modeling with frame features. First, we use the GLA module to merge granular-level information and frame information. The reason is that video frame modeling (such as product-background interaction modeling) and video modeling (such as video story-line topic modeling) are closely related to product details.

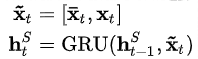

Then, we use an RNN to model the sequence of video frames.

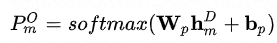
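To make the frame-sequence step concrete, here is a minimal recurrent encoder over per-frame feature vectors. It uses a plain Elman-style cell purely for illustration; the specific RNN cell used in the paper is not stated here, and the parameter names `W_x`, `W_h`, and `b` are hypothetical.

```python
import math


def rnn_encode(frames, W_x, W_h, b):
    """Run a simple RNN over frame features and return every hidden state.

    frames: list of feature vectors, one per video frame, in time order.
    W_x: hidden_size x input_size matrix (list of rows).
    W_h: hidden_size x hidden_size matrix (list of rows).
    b: bias vector of length hidden_size.
    """
    hidden = [0.0] * len(b)  # initial state h_0 = 0
    states = []
    for x in frames:
        # h_t = tanh(W_x x_t + W_h h_{t-1} + b)
        pre = [
            sum(wx * xi for wx, xi in zip(W_x[k], x))
            + sum(wh * hi for wh, hi in zip(W_h[k], hidden))
            + b[k]
            for k in range(len(b))
        ]
        hidden = [math.tanh(p) for p in pre]
        states.append(hidden)
    return states
```

The list of per-frame states can serve as frame-level information, while the last state gives one summary vector for the whole video.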

In the decoder, we use an attention-enhanced RNN structure (Yao et al. 2015). At each decoding step, the decoder attends to both the granular-level graph information and the frame information.

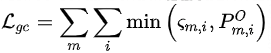

We use the commonly used cross-entropy loss for training.

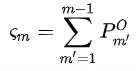
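For reference, the cross-entropy objective over a generated title can be computed as below. This is a generic sketch: averaging over decoding steps (rather than summing) and the small `eps` clamp are choices made for the example, not details taken from the paper.

```python
import math


def cross_entropy(step_probs, target_ids):
    """Mean negative log-likelihood of the reference title tokens.

    step_probs[t] is the predicted distribution over the vocabulary at
    decoding step t; target_ids[t] is the index of the reference word.
    """
    eps = 1e-12  # avoids log(0) when a target gets zero probability
    nll = [-math.log(max(p[y], eps)) for p, y in zip(step_probs, target_ids)]
    return sum(nll) / len(nll)
```

The loss is zero only when every reference word receives probability 1, and it grows as the decoder assigns less probability to the reference words.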

We found that repeated words tended to make video titles less attractive. To solve this problem, we incorporated a coverage-based generation loss, similar to the coverage-based attention loss used in text summarization (See et al. 2017). This loss penalizes and suppresses repetition in the generated words.

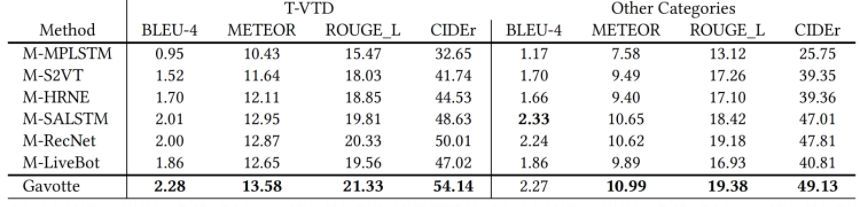
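One way to picture a coverage-style penalty on generated words is to carry over the coverage loss of See et al. (2017), which is defined over attention weights, to the decoder's output word distributions. The sketch below does exactly that; treat this transfer to output distributions as an assumption about the general idea rather than the paper's exact loss.

```python
def coverage_loss(step_probs):
    """Penalize probability mass placed on words that were already covered.

    step_probs[t] is the output distribution over the vocabulary at step t.
    Coverage is the running sum of all earlier distributions; each step
    contributes sum_w min(p_t(w), coverage_t(w)) to the loss.
    """
    coverage = [0.0] * len(step_probs[0])
    total = 0.0
    for p in step_probs:
        total += sum(min(pw, cw) for pw, cw in zip(p, coverage))
        # Update coverage only after scoring the current step.
        coverage = [cw + pw for cw, pw in zip(coverage, p)]
    return total
```

A decoder that puts all its mass on the same word twice incurs a penalty of 1 under this definition, while one that moves to a different word incurs none, so minimizing the term discourages repetition.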

The following table lists the main experiment results.

Our model achieved the best results on two product datasets from Taobao: the T-VTD clothing dataset and a dataset of other categories. The improvements were significant on T-VTD and slight on the other categories. This is reasonable because product parts are more clearly defined in the clothing category. For the other categories, a similar approach was applied.

The preceding figure shows two scenarios that compare the results of Gavotte with those of two other typical models: the RNN-based SOTA model M-RecNet (Wang et al. 2018) and the Transformer-based SOTA model M-LiveBot (Ma et al. 2019). In the first scenario, the title generated by M-RecNet contains little meaningful information, whereas the title generated by Gavotte contains fashion buzzwords, such as "hanging out" and "steal the show", and reads as more fluent and attractive. In addition, Gavotte identifies the product detail "ripped", the product information "jeans", the product-background interaction "steal the show when hanging out", and the video story-line information "dresses this way." In the second scenario, the title generated by M-LiveBot is incomplete and corrupted.

This paper presents the Gavotte model, which automatically generates attractive titles for buyer-uploaded videos in product comment areas in e-commerce scenarios. Experiments show that Gavotte achieves significantly better results than other video captioning methods. Gavotte can capture product details, overall quality, product-background interactions, and video story-line information.

Liu, Jingyuan, and Hong Lu. "Deep fashion analysis with feature map upsampling and landmark-driven attention." In Proceedings of the European Conference on Computer Vision (ECCV), pp. 0-0. 2018.

Gehring, Jonas, Michael Auli, David Grangier, Denis Yarats, and Yann N. Dauphin. "Convolutional sequence to sequence learning." In Proceedings of the 34th International Conference on Machine Learning-Volume 70, pp. 1243-1252. JMLR.org, 2017.

Yao, Li, Atousa Torabi, Kyunghyun Cho, Nicolas Ballas, Christopher Pal, Hugo Larochelle, and Aaron Courville. "Describing videos by exploiting temporal structure." In Proceedings of the IEEE international conference on computer vision, pp. 4507-4515. 2015.

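See, Abigail, Peter J. Liu, and Christopher D. Manning. "Get to the point: Summarization with pointer-generator networks." In Proceedings of the 55th Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers), pp. 1073-1083. 2017.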

Wang, Bairui, Lin Ma, Wei Zhang, and Wei Liu. "Reconstruction network for video captioning." In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pp. 7622-7631. 2018.

Ma, Shuming, Lei Cui, Damai Dai, Furu Wei, and Xu Sun. "Livebot: Generating live video comments based on visual and textual contexts." In Proceedings of the AAAI Conference on Artificial Intelligence, vol. 33, pp. 6810-6817. 2019.

The views expressed herein are for reference only and don't necessarily represent the official views of Alibaba Cloud.
