By Xiyang

Cloud-native technology is becoming an accelerator for enterprise business innovation and for solving the challenges of scale.

The changes brought about by cloud-native are not limited to the technical aspects of infrastructure and application architecture. They also extend to R&D concepts, delivery processes, and the way enterprise IT is organized, including its processes and culture. DevOps culture, together with the automation tools and platform capabilities that put it into practice, has played a key role in the popularization of cloud-native architecture.

Compared with cloud-native, DevOps is nothing new; its practices have long since permeated modern enterprise application architecture. DevOps emphasizes communication and quick feedback between teams. Through automated continuous delivery and pipeline-based application release, it enables rapid response to business needs, faster product delivery, and higher delivery quality. As enterprises adopt container technology at scale, capabilities such as programmable cloud infrastructure and Kubernetes declarative APIs have accelerated the convergence of development and O&M roles.

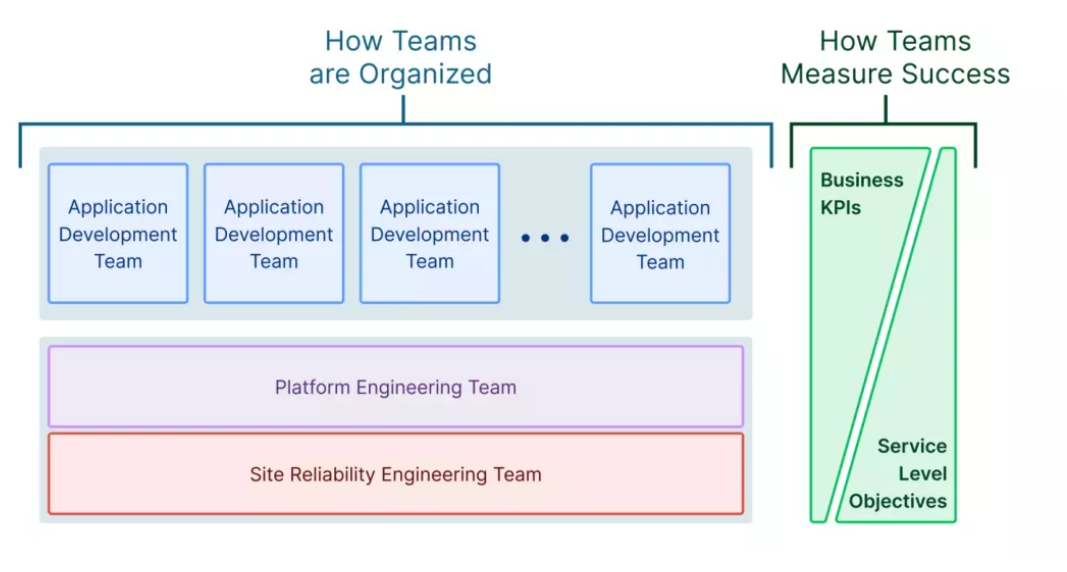

The shift to cloud-native has made cloud migration standard practice for enterprises. Defining the next generation of R&D platforms around cloud-native is inevitable, and this has forced further changes in IT organizations, including the emergence of dedicated platform engineering teams. In this context, practicing DevOps efficiently in a cloud-native environment has become both a new topic and a new requirement.

As the Kubernetes ecosystem matures from the infrastructure layer up to the application layer, platform engineering teams can readily build application platforms tailored to specific business scenarios and user needs. However, this also creates challenges for the application developers who work on top of those platforms.

The Kubernetes ecosystem offers a rich pool of capabilities. However, the community lacks a scalable, convenient way to introduce consistent upper-layer abstractions that model application delivery across the hybrid and distributed deployment environments of a cloud-native architecture. Without such an abstraction in the delivery process, Kubernetes remains hard for application developers to use.

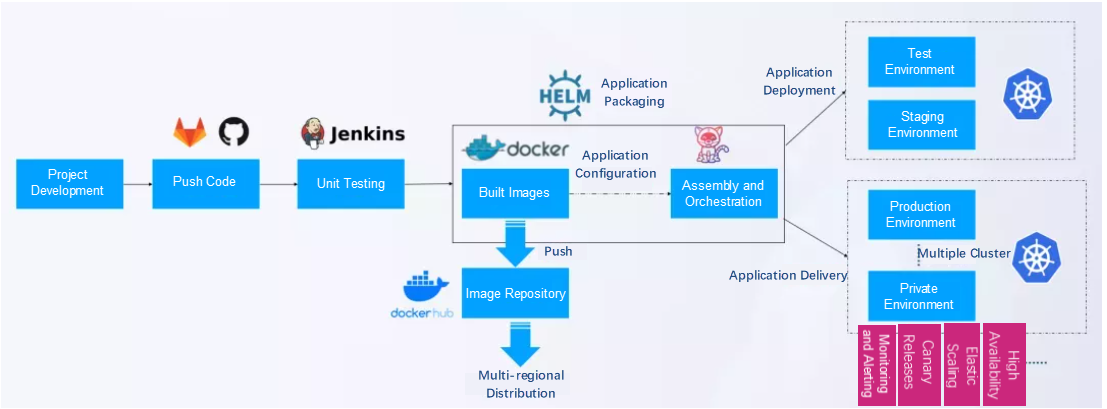

The following figure shows a typical DevOps pipeline in a cloud-native environment. It starts with code development: the code is hosted on GitHub and connected to Jenkins, which runs the unit tests. At this point, the basic development work is complete. The next step is building the image, which involves configuration and orchestration; in cloud-native environments, applications can be packaged with Helm. The packaged application is then deployed to various environments. However, the whole process presents many challenges.
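To make the stages concrete, here is a minimal Python sketch of such a linear pipeline. The `Pipeline` class, the stage functions, and the `registry.example.com` address are hypothetical; each stage only records what a real tool (the Git checkout, Jenkins unit tests, image build, Helm packaging, deployment) would produce. What it illustrates is that every stage depends on the output of the one before it, and a run stops at the first failing stage.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Tuple

# A stage reads and updates a shared context dict and returns True on success.
Stage = Callable[[dict], bool]


@dataclass
class Pipeline:
    stages: List[Tuple[str, Stage]] = field(default_factory=list)

    def add(self, name: str, stage: Stage) -> "Pipeline":
        self.stages.append((name, stage))
        return self

    def run(self, ctx: dict) -> List[str]:
        """Run stages in order and stop at the first failure; return completed stage names."""
        completed = []
        for name, stage in self.stages:
            if not stage(ctx):
                ctx["failed_stage"] = name
                break
            completed.append(name)
        return completed


# Hypothetical stand-ins for the real tools used in each stage.
def checkout(ctx: dict) -> bool:
    ctx["commit"] = "abc123"  # code pulled from the Git host
    return True


def unit_test(ctx: dict) -> bool:
    return ctx.get("tests_pass", True)  # result reported by the CI server


def build_image(ctx: dict) -> bool:
    ctx["image"] = f"registry.example.com/demo:{ctx['commit']}"
    return True


def package_chart(ctx: dict) -> bool:
    ctx["chart"] = {"name": "demo", "values": {"image": ctx["image"]}}
    return True


def deploy(ctx: dict) -> bool:
    ctx["deployed_to"] = list(ctx.get("environments", []))
    return True


pipeline = (
    Pipeline()
    .add("checkout", checkout)
    .add("unit-test", unit_test)
    .add("build-image", build_image)
    .add("package-chart", package_chart)
    .add("deploy", deploy)
)

if __name__ == "__main__":
    print(pipeline.run({"environments": ["dev", "staging", "prod"]}))
```

If the unit tests fail, the run ends after `checkout`, and no image is built or deployed.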

First, different environments require different O&M capabilities. Second, during configuration you need to open a separate console to create a database, and you also need to configure Server Load Balancer (SLB). After the application starts, you need to configure additional features such as logging, policies, and security protection. Cloud resources are managed separately from the DevOps platform, so the process is full of steps that must be carried out on external platforms. This is painful for newcomers.

The DevOps patterns that preceded containers relied on different processes and workflows. Container technology was built with DevOps in mind, and the abstractions that containers provide change how we view DevOps, because traditional architectures evolve as microservices emerge. In practice, this means following best practices for running containers on Kubernetes, extending DevOps into GitOps and DevSecOps, and making DevOps in cloud-native environments more efficient, secure, stable, and reliable.

The Open Application Model (OAM) provides a modeling language for cloud-native applications that separates the R&D and O&M perspectives, so the complexity of Kubernetes does not have to be passed on to developers. O&M supports complex application delivery scenarios by providing modular, portable, and extensible capability components. This makes cloud-native application delivery agile and platform-independent. KubeVela, the complete implementation of OAM on Kubernetes, has been recognized by the industry as a core tool for building next-generation continuous delivery and DevOps practices.
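The separation of concerns can be illustrated with a small Python sketch that assembles an OAM-style application: the developer describes only the component, and the operator attaches operational traits to it. The object layout (`apiVersion: core.oam.dev/v1beta1`, `kind: Application`, `spec.components[].traits`) follows the shape of a KubeVela Application; the component type `webservice` and the trait `scaler` serve as examples and may differ from the definitions installed in a given cluster, and the helper functions are hypothetical.

```python
import copy
import json


def developer_component(name: str, image: str, port: int) -> dict:
    """R&D view: what to run, with no Kubernetes plumbing."""
    return {"name": name, "type": "webservice", "properties": {"image": image, "port": port}}


def with_trait(component: dict, trait_type: str, **properties) -> dict:
    """O&M view: return a copy of the component with an operational trait attached."""
    result = copy.deepcopy(component)
    result.setdefault("traits", []).append({"type": trait_type, "properties": properties})
    return result


def application(name: str, components: list) -> dict:
    """Combine components into a single declarative Application object."""
    return {
        "apiVersion": "core.oam.dev/v1beta1",
        "kind": "Application",
        "metadata": {"name": name},
        "spec": {"components": components},
    }


web = developer_component("frontend", "nginx:1.21", 80)
operated = with_trait(web, "scaler", replicas=3)
app = application("demo-app", [operated])

if __name__ == "__main__":
    print(json.dumps(app, indent=2))
```

Because the developer's component is never mutated, the same definition can be combined with different traits for different environments.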

At the 2021 Apsara Conference, Alibaba Cloud released AppStack, the application delivery platform of Alibaba Cloud DevOps (Yunxiao), which aims to help enterprises scale cloud-native DevOps faster. According to the AppStack R&D team, AppStack was designed from the start to support native Kubernetes and OAM/KubeVela, so it neither locks in nor intrudes on the application deployment architecture, and enterprises do not have to worry about migration or technical transformation costs. This also signals that KubeVela is becoming the cornerstone of application delivery in the cloud-native era.

As cloud-native ideas spread rapidly, deployment across hybrid environments (hybrid cloud, multi-cloud, distributed cloud, and edge) has become an inevitable choice for most enterprise applications, SaaS services, and continuous delivery platforms. Cloud-native technology is likewise evolving toward consistent application delivery across clouds and environments.

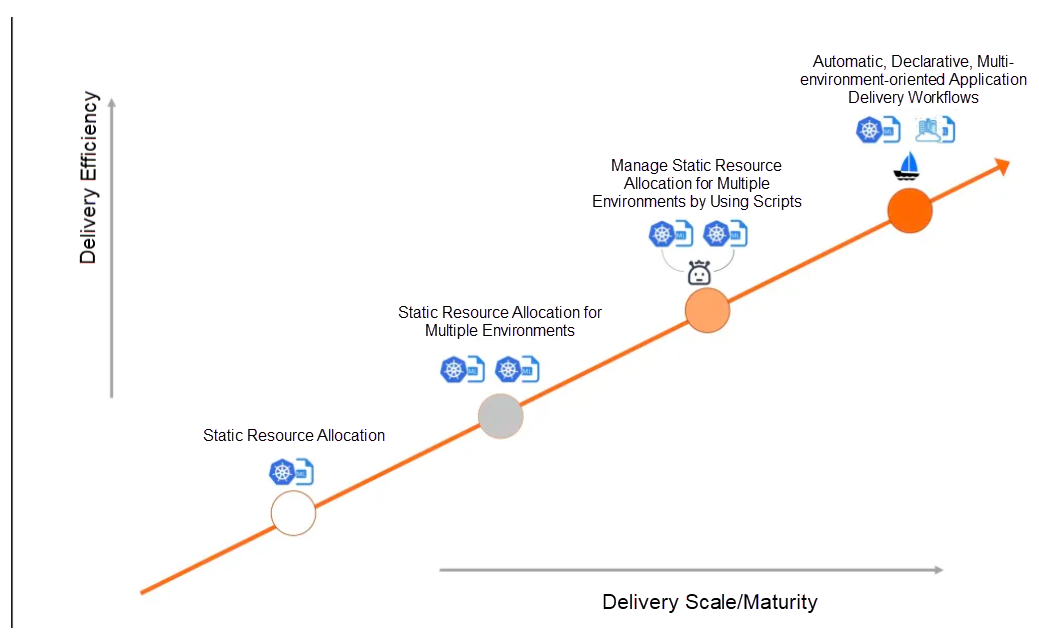

KubeVela, an out-of-the-box application delivery and management platform for modern microservice applications, has released version 1.1. This version focuses on the application delivery process in hybrid environments. It brings multiple out-of-the-box capabilities, such as multi-cluster delivery, delivery process definition, canary release, and public cloud resource access, along with a more user-friendly experience. Together, these help developers move beyond static configuration, templates, and glue code toward a next-generation delivery experience that is automated, declarative, and unified, natively targets multiple environments, and is built around workflow.
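Canary release, one of the capabilities mentioned above, boils down to a simple control loop: shift a growing share of traffic to the new version and roll back as soon as a health check fails. The following Python sketch shows that general technique only; it is not KubeVela's implementation, and `canary_rollout` and the `healthy` callback (a stand-in for real metric checks) are hypothetical.

```python
from typing import Callable, List, Tuple


def canary_rollout(weights: List[int], healthy: Callable[[int], bool]) -> Tuple[List[int], str]:
    """Shift traffic to the new version through the increasing percentages in `weights`.

    After each shift, `healthy(weight)` is consulted. On the first failed check,
    traffic to the new version drops back to 0% (rollback). Returns the sequence
    of weights applied and the final outcome.
    """
    if not weights or weights[-1] != 100:
        raise ValueError("weights must end at 100 (full promotion)")
    if any(b <= a for a, b in zip(weights, weights[1:])):
        raise ValueError("weights must be strictly increasing")

    applied = []
    for weight in weights:
        applied.append(weight)  # e.g. route `weight` percent of traffic to the new version
        if not healthy(weight):
            applied.append(0)  # roll back: send all traffic to the stable version
            return applied, "rolled-back"
    return applied, "promoted"


if __name__ == "__main__":
    print(canary_rollout([10, 50, 100], lambda w: True))
```

Validating that the weights rise strictly and end at 100 keeps a misconfigured plan from "promoting" a version that never received full traffic.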

Based on KubeVela, users can easily handle the following scenarios:

Multi-environment and multi-cluster delivery for Kubernetes is a standard requirement. Since version 1.1, KubeVela supports multi-cluster application delivery. It can work on its own and manage multiple clusters directly, or it can integrate with multi-cluster management tools (such as OCM and Karmada) to perform more complex delivery operations. On top of the multi-cluster delivery policy, you can define workflows that control the order of delivery to different clusters, the conditions for each delivery, and other workflow steps.
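A workflow that controls the order and conditions of multi-cluster delivery can be sketched in Python as follows. `Target`, `deliver_to_clusters`, `after`, and the `apply`/`health` callbacks are hypothetical stand-ins for a real cluster client; the sketch only shows how a per-cluster precondition gates delivery based on the results from earlier clusters.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Optional

Status = Dict[str, str]  # cluster name -> "healthy" | "unhealthy" | "blocked"


@dataclass
class Target:
    cluster: str
    # Precondition evaluated against the results so far; None means "always deliver".
    condition: Optional[Callable[[Status], bool]] = None


def deliver_to_clusters(
    app: str,
    targets: List[Target],
    apply: Callable[[str, str], None],
    health: Callable[[str], bool],
) -> Status:
    """Deliver `app` to each target cluster in order, honoring each target's precondition."""
    status: Status = {}
    for target in targets:
        if target.condition is not None and not target.condition(status):
            status[target.cluster] = "blocked"  # precondition not met; do not deploy
            continue
        apply(app, target.cluster)
        status[target.cluster] = "healthy" if health(target.cluster) else "unhealthy"
    return status


def after(cluster: str) -> Callable[[Status], bool]:
    """Precondition helper: deliver only once `cluster` has reported healthy."""
    return lambda status: status.get(cluster) == "healthy"


targets = [
    Target("staging"),
    Target("prod-us", after("staging")),
    Target("prod-eu", after("prod-us")),
]

if __name__ == "__main__":
    deployed: List[str] = []
    print(deliver_to_clusters("demo", targets, lambda app, c: deployed.append(c), lambda c: True))
```

If `staging` turns out unhealthy, both production clusters are marked `blocked` and nothing is applied to them.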

Workflows apply to many specific scenarios. For example, in multi-environment application delivery, users can define the order of delivery to different environments and the preconditions for each. The KubeVela workflow is designed for the CD process and is declarative, so it can either connect directly to a CI system (such as Jenkins) as the CD system or be embedded into an existing CI/CD system as an enhancement. This makes adoption flexible.
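One way to picture the CI-to-CD handoff: the CI job (for example, in Jenkins) ends by producing an updated declarative application spec rather than running deployment commands itself, and the declarative CD side then converges the environments to that spec. The Python sketch below shows only that handoff step. `bump_image` is a hypothetical helper, the image tags are placeholders, and the spec layout mirrors a KubeVela-style Application.

```python
import copy


def bump_image(app: dict, component_name: str, image: str) -> dict:
    """Return a copy of the application spec with one component's image replaced.

    The input spec is left untouched, so the change can be reviewed or committed
    as a diff before the CD side picks it up.
    """
    updated = copy.deepcopy(app)
    for component in updated["spec"]["components"]:
        if component["name"] == component_name:
            component["properties"]["image"] = image
            return updated
    raise KeyError(f"component {component_name!r} not found")


current = {
    "apiVersion": "core.oam.dev/v1beta1",
    "kind": "Application",
    "metadata": {"name": "demo-app"},
    "spec": {
        "components": [
            {"name": "frontend", "type": "webservice", "properties": {"image": "demo:1.0.0"}}
        ]
    },
}

# Result of a successful CI build (hypothetical tag), handed to the CD system as data.
next_spec = bump_image(current, "frontend", "demo:1.0.1")
```

Keeping the CI output as data rather than as deployment commands is what lets the same CD workflow serve either as the whole CD system or as an add-on to an existing one.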

In the model, a workflow is composed of a series of steps. Each step is implemented as an independent capability module, and its type and parameters determine what it does. In version 1.1, KubeVela provides a rich set of built-in steps that are easy to extend, helping users connect to existing platform capabilities and migrate seamlessly.
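This step model (an ordered list of steps whose type selects an independent implementation and whose parameters configure it) can be sketched in Python as a registry of step types plus a small runner. The type names echo the kind of steps a workflow engine such as KubeVela's provides, but the implementations, `step_type`, and `run_workflow` are hypothetical simplifications. A suspended run resumes on the next call without repeating completed steps.

```python
from typing import Callable, Dict, List, Optional, Tuple

# A step implementation receives its properties and the shared context and returns
# "succeeded", "suspended", or "failed".
StepImpl = Callable[[dict, dict], str]
STEP_TYPES: Dict[str, StepImpl] = {}


def step_type(name: str) -> Callable[[StepImpl], StepImpl]:
    """Register an implementation under a step type name (the extension point)."""
    def register(impl: StepImpl) -> StepImpl:
        STEP_TYPES[name] = impl
        return impl
    return register


@step_type("apply-component")
def apply_component(props: dict, ctx: dict) -> str:
    ctx.setdefault("applied", []).append(props["component"])
    return "succeeded"


@step_type("suspend")
def suspend(props: dict, ctx: dict) -> str:
    # Wait for an external approval recorded in the context.
    return "succeeded" if ctx.get("approved") else "suspended"


@step_type("notify")
def notify(props: dict, ctx: dict) -> str:
    ctx.setdefault("messages", []).append(props["message"])
    return "succeeded"


def run_workflow(steps: List[dict], ctx: dict) -> Tuple[Optional[str], str]:
    """Run steps in order, skipping those finished in earlier runs.

    Returns (name of the step that stopped the run, its outcome), or
    (None, "succeeded") once every step has completed.
    """
    done = ctx.setdefault("completed", [])
    for step in steps:
        if step["name"] in done:
            continue
        outcome = STEP_TYPES[step["type"]](step.get("properties", {}), ctx)
        if outcome != "succeeded":
            return step["name"], outcome
        done.append(step["name"])
    return None, "succeeded"


steps = [
    {"name": "deploy-db", "type": "apply-component", "properties": {"component": "db"}},
    {"name": "approval", "type": "suspend"},
    {"name": "deploy-web", "type": "apply-component", "properties": {"component": "web"}},
    {"name": "announce", "type": "notify", "properties": {"message": "released"}},
]
```

Adding a new capability means registering one more function with `@step_type(...)`; the runner itself does not change.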

KubeVela is designed from an application-centric perspective to help developers manage cloud resources conveniently in a serverless way, rather than struggling with various cloud products and consoles. In terms of implementation, KubeVela integrates Terraform as its orchestration tool for cloud resources, so it can deploy, bind, and manage hundreds of types of cloud services from various cloud vendors with a unified application model.
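The benefit of a unified model is that one application can declare both cloud resources and the workloads that use them, and the platform can derive the provisioning order and the bindings. The Python sketch below works under that assumption. The `depends_on` field, the environment-variable naming scheme, and component types such as `alibaba-rds` are illustrative; in KubeVela the cloud resources would be provisioned through Terraform, which is simulated here by a dictionary of placeholder outputs.

```python
from graphlib import TopologicalSorter  # Python 3.9+
from typing import Dict, List

components = [
    # Cloud resources (provisioned through Terraform in the real system).
    {"name": "orders-db", "type": "alibaba-rds"},
    {"name": "orders-cache", "type": "alibaba-redis"},
    # Workload that consumes both (hypothetical `depends_on` field).
    {"name": "orders-api", "type": "webservice", "depends_on": ["orders-db", "orders-cache"]},
]


def provisioning_order(comps: List[dict]) -> List[str]:
    """Order components so that every dependency is provisioned before its consumers."""
    graph = {c["name"]: set(c.get("depends_on", [])) for c in comps}
    return list(TopologicalSorter(graph).static_order())


def bind_env(comps: List[dict], outputs: Dict[str, Dict[str, str]]) -> Dict[str, Dict[str, str]]:
    """Turn each dependency's outputs into environment variables for its consumer,
    e.g. output `host` of `orders-db` becomes ORDERS_DB_HOST."""
    env: Dict[str, Dict[str, str]] = {}
    for c in comps:
        for dep in c.get("depends_on", []):
            prefix = dep.upper().replace("-", "_")
            for key, value in outputs.get(dep, {}).items():
                env.setdefault(c["name"], {})[f"{prefix}_{key.upper()}"] = value
    return env


# Simulated outputs of the provisioned cloud resources (placeholder values).
outputs = {
    "orders-db": {"host": "db.example.internal", "port": "3306"},
    "orders-cache": {"host": "cache.example.internal"},
}
```

The developer never opens a cloud console here: declaring the dependency is enough for the platform to provision first and inject connection details afterwards.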

In terms of usage, KubeVela divides cloud resources into the following three categories:

As a declarative application delivery control plane, KubeVela fits naturally into GitOps, either on its own or together with tools such as Argo CD. It provides additional end-to-end capabilities and enhancements for GitOps scenarios, making GitOps more approachable and helping it solve practical problems in the enterprise. These capabilities include:
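At its core, the GitOps model that KubeVela plugs into is a reconcile loop: the desired state is read from Git, compared with what is running, and the difference is applied until the two match. The Python sketch below shows one pass of that loop in the abstract. It is not KubeVela's or Argo CD's code; resources are simplified to dictionaries keyed by a hypothetical `Kind/name` string, and `prune` mirrors the common option of deleting resources that were removed from Git.

```python
import copy
from typing import Dict, List, Tuple

Resources = Dict[str, dict]  # "Kind/name" -> spec


def plan(desired: Resources, live: Resources, prune: bool = True) -> List[Tuple[str, str]]:
    """Compare the desired state (from Git) with the live state and list the needed actions."""
    actions = []
    for key in sorted(desired):
        if key not in live:
            actions.append(("create", key))
        elif desired[key] != live[key]:
            actions.append(("update", key))
    if prune:
        for key in sorted(live.keys() - desired.keys()):
            actions.append(("delete", key))
    return actions


def reconcile(desired: Resources, live: Resources, prune: bool = True) -> Resources:
    """Apply the plan to `live` in place so that it converges on `desired`."""
    for action, key in plan(desired, live, prune):
        if action == "delete":
            del live[key]
        else:
            live[key] = copy.deepcopy(desired[key])
    return live
```

Running `plan` again after `reconcile` returns an empty list, which is the convergence property that makes the loop safe to repeat on every Git change.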

You can learn more about KubeVela and the OAM project using the following materials:

1) Project code library: https://github.com/oam-dev/kubevela

2) Official homepage and documents of the project: https://kubevela.io/

Documentation is available in Chinese and English (starting with version 1.1). Developers are welcome to contribute translations into other languages.

3) Slack: CNCF #kubevela Channel
