After large language model (LLM) applications were released in late 2022, their monthly active users exceeded 100 million within just two months. This growth rate set a new record for AI applications and brought the world into the era of LLMs.

This new technology wave has brought innovation to business scenarios. The participation of large companies (such as Google, and Microsoft with Bing) has made conversational search a new field for enterprises to explore. The primary problem to solve is how to combine the logical reasoning and conversational capabilities of LLMs with the business data of an enterprise's specific field to create a conversational search service dedicated to that enterprise.

LLMs appear to understand and discuss almost any topic because of the general world knowledge they learn during training, so they can answer general questions. However, when LLMs are used directly to answer professional questions in a specific field, the results are often wrong or irrelevant because that general knowledge does not contain enterprise-specific data.

The following figure shows an example. Havenask is an open-source large-scale search engine developed by Alibaba Cloud and the underlying engine of Alibaba Cloud OpenSearch. It was open-sourced in November 2022, but the LLM in the example was unaware of this information. Enterprises therefore need a combined solution that allows LLMs to better serve their needs when they build conversational search services based on their own data.

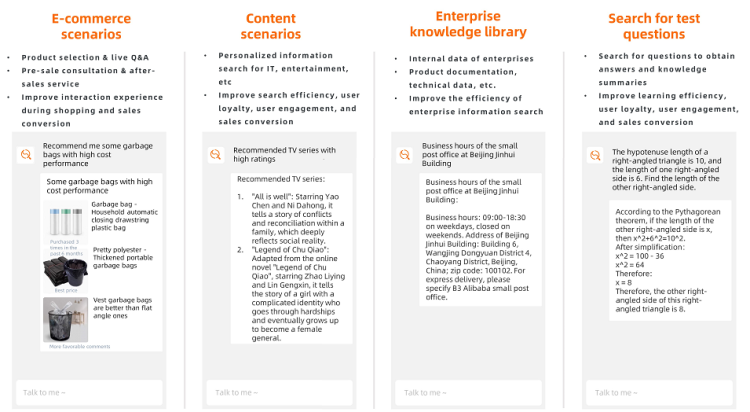

Conversational search can be applied to e-commerce, content, education, internal search services of enterprises, and other fields. Based on customer characteristics and questions, conversational search provides accurate Q&A results, so users can efficiently obtain information.

Most enterprises build conversational search for enterprise-specific fields with a three-part solution: document slicing, vector search, and an LLM for question answering.

With this solution, vector features are extracted from enterprise data and conversational interaction information and then stored in a vector search engine, which builds indexes and performs similarity-based recall. The returned top-N results are passed to the LLM, which integrates the information in a conversational manner and returns the results to users. This solution has advantages in cost, effectiveness, and business flexibility, making it the preferred solution for enterprises.
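
The document slicing step can be sketched as follows. This is a minimal illustration, not the method used by OpenSearch: the function name `slice_document`, the character-based window, and the `max_chars` and `overlap` parameters are all assumptions made for the example. Production systems often slice on sentence or paragraph boundaries instead.

```python
def slice_document(text, max_chars=200, overlap=50):
    """Split text into overlapping character windows (hypothetical helper).

    The overlap keeps a sentence that straddles a boundary visible in
    both neighbouring slices, so it can still be recalled as a whole.
    """
    if max_chars <= 0:
        raise ValueError("max_chars must be positive")
    if not 0 <= overlap < max_chars:
        raise ValueError("overlap must satisfy 0 <= overlap < max_chars")

    step = max_chars - overlap
    chunks = []
    for start in range(0, len(text), step):
        chunks.append(text[start:start + max_chars])
        # Stop once the current window already reaches the end of the text.
        if start + max_chars >= len(text):
            break
    return chunks


if __name__ == "__main__":
    for chunk in slice_document("abcdefghij", max_chars=4, overlap=1):
        print(chunk)
```

Each slice is later vectorized and indexed on its own, so the slice size is a trade-off: smaller slices give more precise recall, while larger slices give the LLM more context per result.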

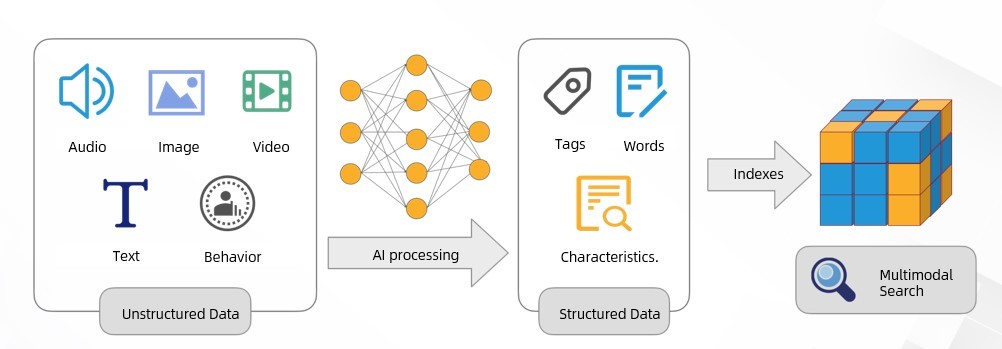

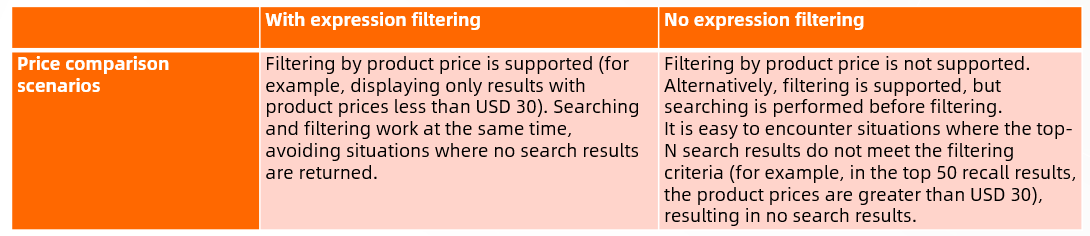

Unstructured data generated in the physical world (such as images, audio, video, and dialogue information) is converted into structured multidimensional vectors. These vectors are used to identify entities and the relationships between entities. Then, the distance between the vectors is calculated. Generally, the shorter the distance, the higher the similarity. The top-N results with the highest similarity are recalled to complete the search. Vector search is common in daily life, for example, in image search, price comparison during shopping, personalized search, and semantic understanding.
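
The distance calculation and top-N recall described above can be sketched in a few lines. The example below assumes cosine similarity as the measure and scans every vector by brute force. Both are choices made for illustration, and the names `cosine_similarity` and `top_n` are hypothetical. A large-scale engine would use an approximate nearest neighbor index rather than a full scan.

```python
import math


def cosine_similarity(a, b):
    """Cosine similarity of two equal-length vectors (1.0 = same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0  # a zero vector has no direction; treat it as dissimilar
    return dot / (norm_a * norm_b)


def top_n(query, vectors, n):
    """Return the keys of the n stored vectors most similar to the query."""
    scored = [(cosine_similarity(query, vec), key) for key, vec in vectors.items()]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [key for _, key in scored[:n]]


if __name__ == "__main__":
    # Toy 2-dimensional vectors; real embeddings have hundreds of dimensions.
    stored = {"a": [1.0, 0.0], "b": [0.0, 1.0], "c": [1.0, 1.0]}
    print(top_n([1.0, 0.1], stored, n=2))
```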

One typical application scenario of vector search is natural semantic understanding. Similarly, the core of conversational search is also the semantic understanding of questions and answers.

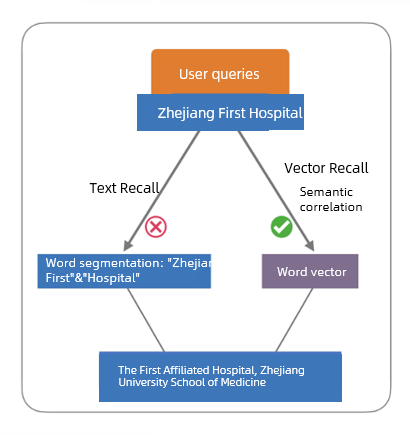

The following figure shows an example. When a user searches for "Zhejiang First Hospital", a traditional keyword-based search finds no results because the database does not contain the keyword "Zhejiang First Hospital". In this case, vector analysis is introduced to analyze the correlation between expressions and clicks in historical search behavior, establish a semantic correlation model, and express data features as high-dimensional vectors. Comparing vector distances shows that "Zhejiang First Hospital" and "The First Affiliated Hospital, Zhejiang University School of Medicine" are highly correlated, so the desired information can be retrieved.

Vectors therefore play an important role in semantic analysis and in returning relevant results in conversational search solutions.

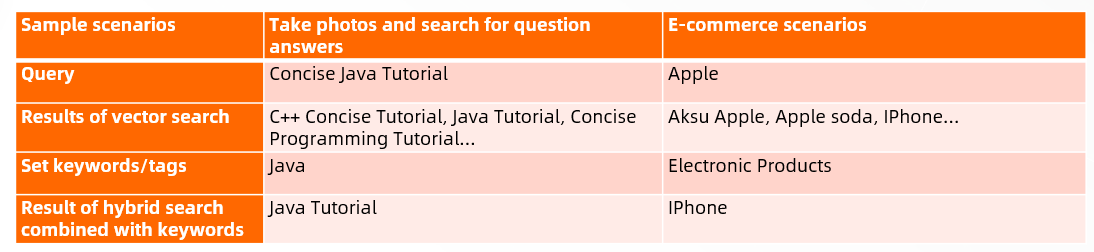

OpenSearch Vector Search Edition is a large-scale distributed search engine developed by Alibaba Cloud. Its core capabilities are widely used by Alibaba Group and Ant Group in many services. OpenSearch Vector Search Edition focuses on vector search scenarios and allows you to query data within milliseconds, update data within seconds, and write data in real time. It also supports hybrid search that combines tag-based search and vector search. The same platform can return vector search results for Q&A scenarios across different enterprises and their specific business scenarios.
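
To make the idea of hybrid search concrete, the sketch below first filters documents by tags and then ranks the remaining candidates by vector distance. It is a plain in-memory illustration and does not use or represent the OpenSearch API. The `Doc` class, the `hybrid_search` function, and the filter-then-rank order are all assumptions made for the example.

```python
import math
from dataclasses import dataclass


@dataclass
class Doc:
    """Hypothetical indexed document: an ID, a set of tags, and a vector."""
    doc_id: str
    tags: frozenset
    vector: tuple


def hybrid_search(query_vector, required_tags, docs, n):
    """Keep docs that carry every required tag, then rank by distance."""
    required = frozenset(required_tags)
    candidates = [doc for doc in docs if required <= doc.tags]
    # Shorter Euclidean distance means higher similarity.
    candidates.sort(key=lambda doc: math.dist(query_vector, doc.vector))
    return [doc.doc_id for doc in candidates[:n]]


if __name__ == "__main__":
    docs = [
        Doc("d1", frozenset({"faq"}), (0.0, 0.0)),
        Doc("d2", frozenset({"faq", "billing"}), (1.0, 1.0)),
        Doc("d3", frozenset({"billing"}), (0.1, 0.1)),
    ]
    # d1 is closest to the query but lacks the "billing" tag, so it is excluded.
    print(hybrid_search((0.0, 0.0), {"billing"}, docs, n=2))
```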

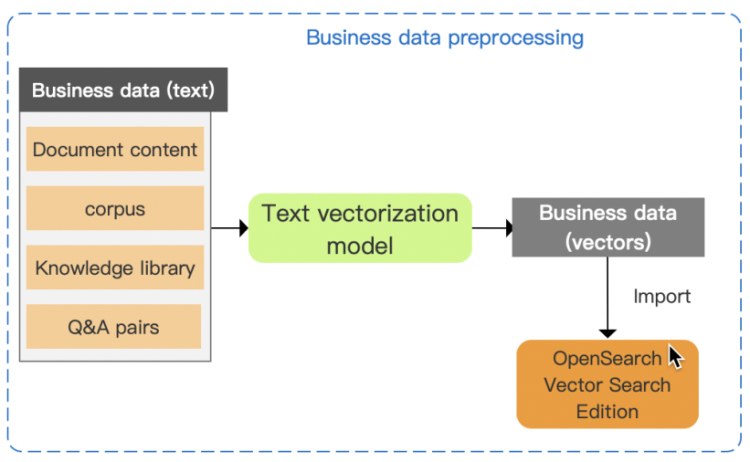

A solution that integrates OpenSearch Vector Search Edition with an LLM works in two stages. In the first stage, OpenSearch Vector Search Edition vectorizes business data. In the second stage, the online search service of OpenSearch Vector Search Edition searches for the required content and returns search results.

You must preprocess the business data and build a vector index for vector search to allow end users to search for content based on their requirements.

Step 1: Import the business data in the TEXT format into the text vectorization model to obtain business data in the form of vectors.

Step 2: Import business data in the form of vectors to OpenSearch Vector Search Edition to build a vector index.
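
The two steps above can be sketched as follows. Everything in this example is a stand-in: `toy_embed` is a hypothetical hashing-based bag-of-words function that only imitates the shape of a text vectorization model's output, and `build_index` builds a plain Python list rather than an OpenSearch vector index. A real deployment would call an actual embedding model and the product's own data import interface.

```python
import hashlib
import math


def toy_embed(text, dim=64):
    """Stand-in for a text vectorization model (assumption, not a real model).

    Each token is hashed into one of `dim` buckets, and the resulting
    count vector is normalized to unit length.
    """
    vec = [0.0] * dim
    for token in text.lower().split():
        digest = hashlib.sha256(token.encode("utf-8")).digest()
        bucket = int.from_bytes(digest[:4], "big") % dim
        vec[bucket] += 1.0
    norm = math.sqrt(sum(v * v for v in vec))
    return [v / norm for v in vec] if norm else vec


def build_index(chunks):
    """Step 1: vectorize each text chunk. Step 2: store it with its vector."""
    return [
        {"id": i, "text": chunk, "vector": toy_embed(chunk)}
        for i, chunk in enumerate(chunks)
    ]


if __name__ == "__main__":
    index = build_index([
        "Havenask is an open-source search engine.",
        "OpenSearch supports hybrid search.",
    ])
    print(len(index), len(index[0]["vector"]))
```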

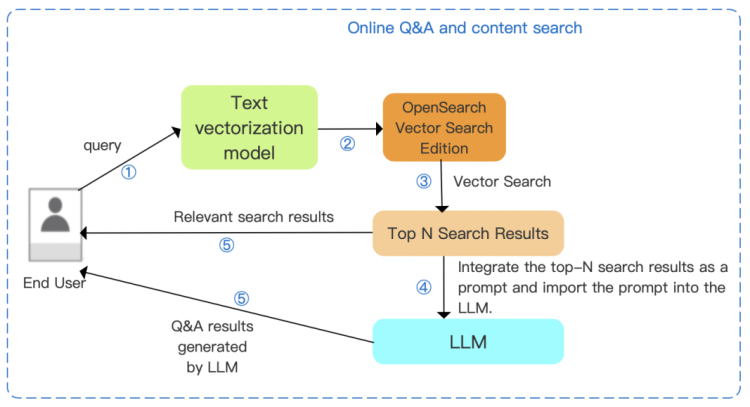

After the vector search feature is implemented, OpenSearch Vector Search Edition obtains the top-N search results and returns the Q&A results using the LLM.

Step 1: Import the query of an end user to the text vectorization model to obtain the query in the form of vectors.

Step 2: Import the query in the form of vectors to OpenSearch Vector Search Edition.

Step 3: The built-in vector search engine of OpenSearch Vector Search Edition obtains the top-N search results from the business data.

Step 4: OpenSearch Vector Search Edition integrates the top-N search results as a prompt and imports the prompt into the LLM.

Step 5: The system returns the Q&A results generated by the LLM and the search results retrieved based on vectors to the end user.
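
Steps 4 and 5 can be illustrated with a short sketch that turns the top-N passages into a prompt and returns the generated answer together with the passages it was based on. The prompt wording, the function names `build_prompt` and `answer_with_references`, and the `llm` callable are assumptions made for the example. The source does not specify the prompt template or the LLM interface.

```python
def build_prompt(question, passages):
    """Combine the recalled top-N passages and the question into one prompt."""
    context = "\n".join(f"[{i}] {p}" for i, p in enumerate(passages, start=1))
    return (
        "Answer the question using only the numbered passages below.\n"
        "If the passages do not contain the answer, say so.\n\n"
        f"Passages:\n{context}\n\n"
        f"Question: {question}\n"
        "Answer:"
    )


def answer_with_references(question, passages, llm):
    """Return the LLM answer plus the search results that back it.

    `llm` is any callable that maps a prompt string to a reply string;
    it is a placeholder for whichever model the service integrates.
    """
    prompt = build_prompt(question, passages)
    return {"answer": llm(prompt), "references": list(passages)}


if __name__ == "__main__":
    passages = ["Havenask was open-sourced in November 2022."]
    # A stub stands in for the real model so the sketch runs offline.
    result = answer_with_references(
        "When was Havenask open-sourced?", passages, llm=lambda prompt: "stub answer"
    )
    print(result)
```

Returning the references alongside the answer lets the end user check the generated text against the retrieved business data.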

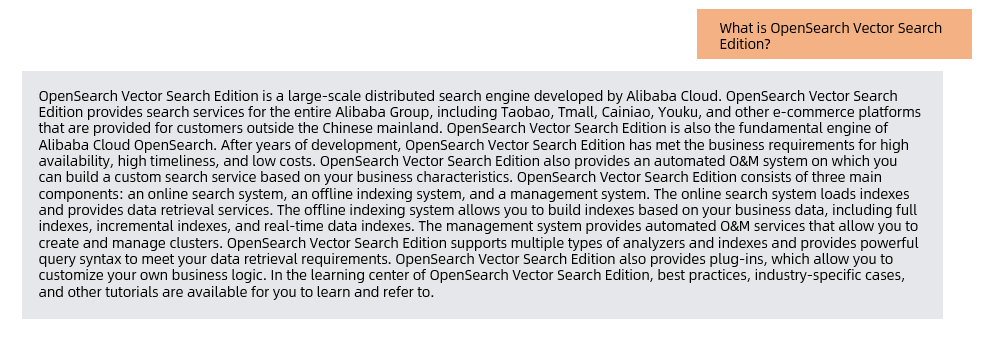

The following figure shows the result of conversational search built using this solution and using OpenSearch product documentation as business data.

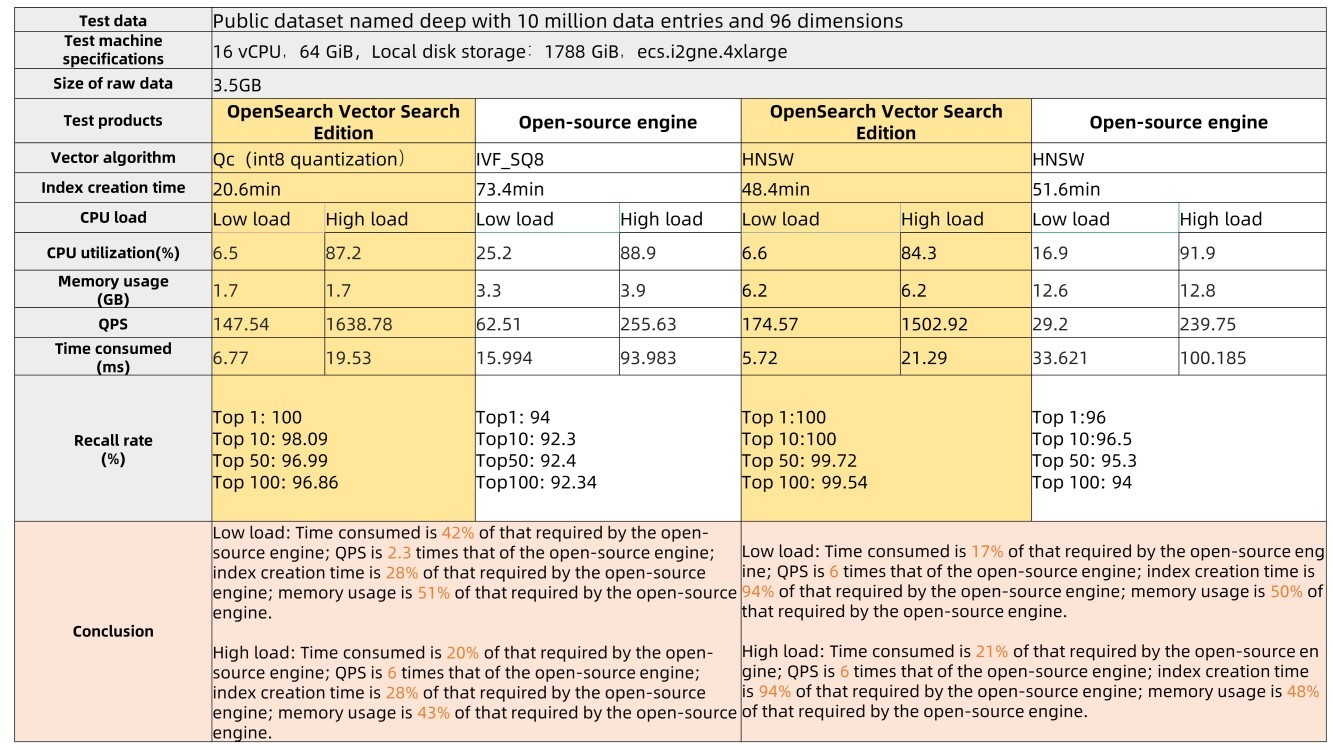

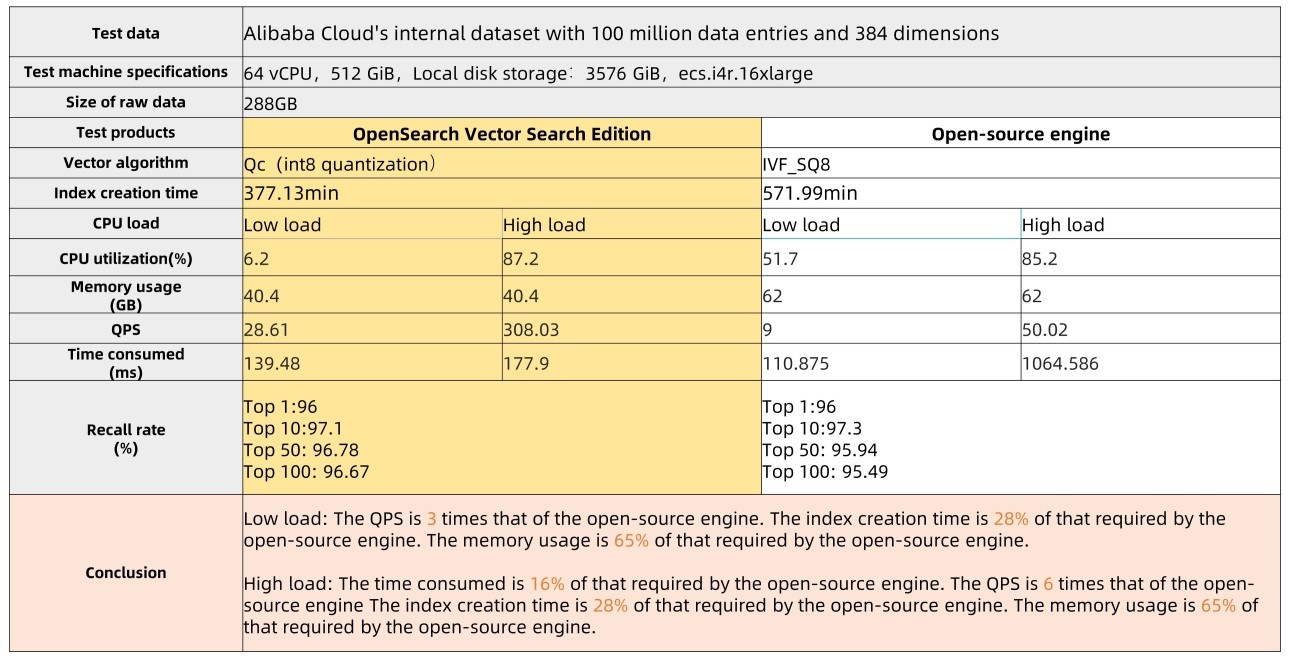

OpenSearch Vector Search Edition vs. Open-Source Vector Search Engine: Medium Data Scenarios

OpenSearch Vector Search Edition vs. Open-Source Vector Search Engine: Big Data Scenarios

Data Source: Alibaba AI Engine Division Team, November 2022

Read the Detailed Tutorial:

https://www.alibabacloud.com/help/en/opensearch/latest/opensearch-big-model-enterprise-specific-intelligent-question-and-answer-scheme

Learn More about OpenSearch:

https://www.alibabacloud.com/product/opensearch

Buy OpenSearch:

https://common-buy-intl.alibabacloud.com/?commodityCode=opensearch_ha3post_public_intl

Note: The open-source vector embedding model and LLM mentioned in this solution come from third parties (collectively referred to as third-party models). Alibaba Cloud cannot guarantee the compliance and accuracy of third-party models and assumes no responsibility for third-party models or your behavior and the results of using third-party models. Therefore, proceed with caution before visiting or using third-party models. In addition, we remind you that third-party models come with agreements (such as Open Source License and License). Please carefully read and strictly abide by the provisions of these agreements.

