I originally intended to name this article "Setting up a Kafka Message Queue Cluster." However, unlike RabbitMQ, Kafka does not implement a message queue protocol such as the Advanced Message Queuing Protocol (AMQP). AMQP is an open standard application-layer protocol designed for message-oriented middleware. Therefore, although Kafka is used much like a queue, it is not strictly a message queue. So I decided to give this article a more generic name: "An Overview of Kafka Distributed Message System."

Kafka was originally developed at LinkedIn in Java and Scala. Its source code was opened up in 2011, and it became a top-level project of the Apache Software Foundation in 2012. In 2014, several of Kafka's creators founded a new company named Confluent, which specializes in Kafka.

The purpose of the Kafka project is to provide a unified, high-throughput, and low-latency platform for real-time data processing. Kafka delivers the following three functions:

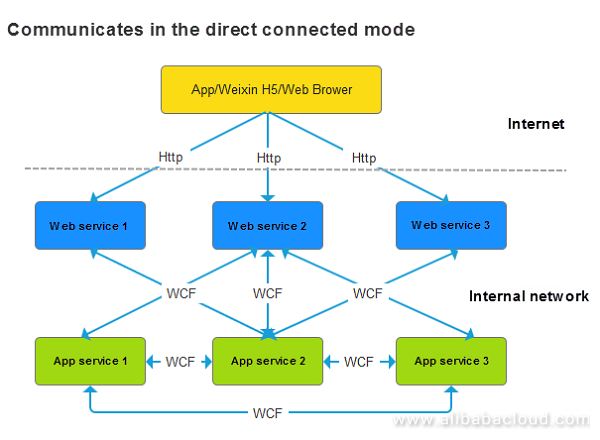

Kafka is a message system. Let us look at what a message system is and which problems it solves. Take the currently popular microservice architecture as an example. Assume that there are three terminal-facing web services (over HTTP), namely Web1, Web2, and Web3, which serve a WeChat official account, a mobile app, and a browser, and three internal application services, App1, App2, and App3 (exposed through remote procedure calls, for example, WCF or gRPC). If there is no message system and the services connect to each other directly, the communication between them may look as follows:

Figure 1: Structure of the System that Communicates in the Direct Connected Mode

The following issues exist while adopting this mode:

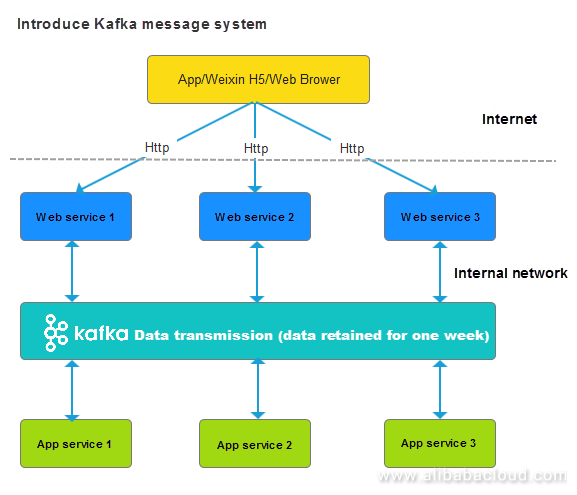

However, after the introduction of the message system, the structure changes as below:

Figure 2: Structure of the System After the Introduction of the Message System

After the introduction of the message system, all the issues mentioned previously get resolved.

Producer/Consumer Model:

A producer is an application that produces messages at one end of a data pipeline. A consumer is an application that consumes messages at the other end of the data pipeline.

Outlined below are the two scenarios when the producer sends messages to a queue:

Publisher/Subscriber Model:

Publisher: an application that generates events at one end of a data pipeline.

Subscriber: an application that responds to events at one end of a data pipeline.

In the Publisher/Subscriber model, the data sent to a queue takes the form of events instead of messages. In this case, data is processed by subscribing to events rather than by consuming messages.

If no subscriber is connected to the queue when the publisher publishes an event, the event is lost, i.e., no application responds to it. A subscriber that comes online later will not receive the event.

If multiple subscribers are connected to the queue when the publisher publishes an event, the event is broadcast to all the subscribers, and each subscriber receives the same event. Therefore, there is no load balancing.
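The two publisher/subscriber behaviors described above can be illustrated with a minimal in-memory sketch. The `Channel` class and its method names are hypothetical and exist only for this illustration; they are not part of Kafka or of any messaging library.

```python
class Channel:
    """Hypothetical in-memory pub/sub channel (illustration only)."""

    def __init__(self):
        self.subscribers = []  # each subscriber is a list acting as an inbox

    def subscribe(self):
        inbox = []
        self.subscribers.append(inbox)
        return inbox

    def publish(self, event):
        # Broadcast: every subscriber connected right now gets its own copy.
        # With no subscribers connected, the event is simply dropped.
        for inbox in self.subscribers:
            inbox.append(event)
        return len(self.subscribers)


channel = Channel()
delivered_first = channel.publish("order-created")  # nobody is listening yet

sub_a = channel.subscribe()
sub_b = channel.subscribe()
delivered_second = channel.publish("order-paid")    # both subscribers get it
```

The first event reaches no one and is never replayed to the late subscribers, while the second event is copied to every subscriber rather than being shared out among them.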

There is a difference between batch processing applications and stream processing applications. The most significant difference is whether the data has a visible boundary. If it does, the processing is called batch processing. For example, a client collects data once every hour and sends it to the server for statistics, and the server then saves the statistical results in the statistics database.

If there is no such boundary, the processing is called stream processing. For example, logs and orders are generated continuously on a large website, like a flow of data. If each log or order is processed within a few hundred milliseconds to a few seconds of being generated, the application is called a stream application. If the logs and orders are instead collected once every hour and transmitted together, the original stream data becomes batch data.
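A small sketch may make the boundary distinction concrete. The function names and the order amounts are made up for illustration: the batch function needs the whole bounded input before it produces one result, while the stream function emits an updated result as each record arrives.

```python
from typing import Iterable, Iterator, List


def process_batch(orders: List[float]) -> float:
    # Batch: the input has a boundary, so one result is computed at the end.
    return sum(orders)


def process_stream(orders: Iterable[float]) -> Iterator[float]:
    # Stream: no boundary is assumed; a running result is emitted per record.
    total = 0.0
    for amount in orders:
        total += amount
        yield total


hourly_orders = [10.0, 25.5, 4.5]  # hypothetical order amounts
batch_total = process_batch(hourly_orders)
running_totals = list(process_stream(iter(hourly_orders)))
```

Both approaches arrive at the same final total; the difference is that the stream version has a usable figure after every single order.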

Sometimes stream processing is essential. For example, Jack Ma wanted to display the orders and sales on Tmall for November 11 on a large screen. If the data center worked in T+1 mode and could only provide the data for November 11 on November 12, Jack Ma would not accept it.

The method for processing stream data is different from the method for processing batch data. Kafka provides a dedicated component, Kafka Streams, to process stream data. Other projects in the Hadoop ecosystem offer their own components; for example, Spark uses Spark Streaming to process stream data. Storm was one of the earliest systems built exclusively to process stream data.

Apart from data boundaries, processing time can also be used to distinguish stream processing from batch processing. The processing cycle for batch processing is generally hours or days, while the processing cycle for stream processing is usually seconds. Correspondingly, batch processing is referred to as offline data processing, whereas stream processing is referred to as real-time data processing. Processing with a cycle measured in minutes is referred to as near-line data processing. However, near-line processing is seldom discussed, and such data is generally processed offline unless the processing cycle is shortened.

Kafka stores data safely in a distributed, fault-tolerant cluster. The default retention period is one week. Kafka also supports clustering natively: you can conveniently add or remove machines and specify the number of copies (replicas) of the data. This ensures that the cluster keeps providing uninterrupted service even when individual servers in the cluster break down.

Kafka is primarily used to transmit data in our data center project. Let me first introduce the background of this project and then explain the issues that Kafka solves:

At present, 10 applications are running on the front end, and this number may increase over time. The front-end applications send data to the back-end data center (to an application called the data collector, or collector for short). One collector serves multiple applications. It is idle most of the time, but when numerous applications send data at the same time, it cannot keep up. A buffering mechanism is therefore needed so that the collector is neither too idle nor too busy. Kafka serves as a buffer pool for the data in such situations.
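The buffering effect can be sketched with a simple simulation. Everything here is a simplifying assumption made for illustration: time is divided into ticks, the collector handles a fixed number of records per tick, and an in-memory `deque` stands in for the role Kafka plays in the real project.

```python
from collections import deque


def run_collector(arrivals, capacity):
    """Simulate a collector that handles at most `capacity` records per tick.

    `arrivals[i]` is the number of records the front-end applications send
    in tick i. Records wait in `buffer` until the collector is free.
    Returns the number of records processed in each tick.
    """
    buffer = deque()
    processed_per_tick = []
    for tick, count in enumerate(arrivals):
        buffer.extend((tick, n) for n in range(count))
        handled = 0
        while buffer and handled < capacity:
            buffer.popleft()
            handled += 1
        processed_per_tick.append(handled)
    # Drain whatever is still buffered after the last arrival.
    while buffer:
        handled = 0
        while buffer and handled < capacity:
            buffer.popleft()
            handled += 1
        processed_per_tick.append(handled)
    return processed_per_tick


# Hypothetical load: a burst of 9 records, two quiet ticks, then 1 record.
load = run_collector([9, 0, 0, 1], capacity=3)
```

The burst is spread over the following quiet ticks, so the collector never handles more than its capacity in one tick and no record is discarded.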

In this example, I selected Kafka instead of a traditional message queue component such as RabbitMQ. This is because Kafka was designed from the start to cope with large volumes of data and provides better performance.

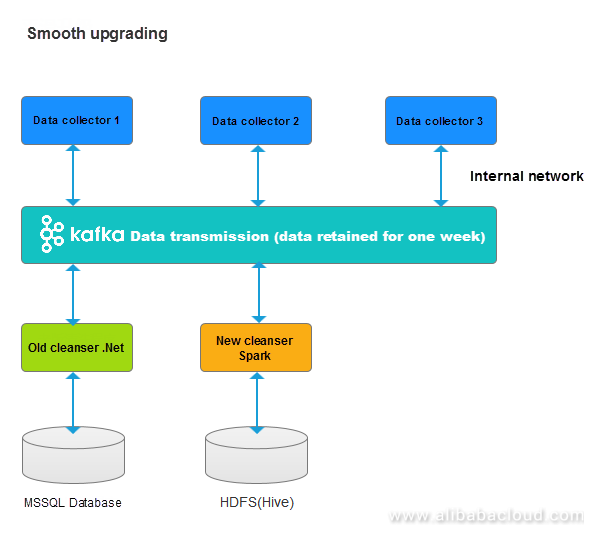

In addition to buffering data, Kafka also enables "smooth upgrading" in a data center. The following diagram illustrates this:

Figure 3: Smooth Upgrading

In the previous system, the front-end, data collection, and data cleaning applications were developed in .NET, and the data was stored in MS SQL. With the move to big data technology, large amounts of data are stored on HDFS, and Spark is used to compute statistics.

There was no need to change the previous versions of the front-end, data collection, or data cleaning applications after introducing Kafka. The new versions of the collection and cleaning applications can be connected alongside them, because Kafka allows data to be extracted at any point in time.

Once the new versions pass testing, switching to the new system is as simple as stopping the previous versions of the applications.

Every coin has two sides. After introducing Kafka, the following changes take place:

Although the applications in the system no longer depend on each other, they all depend heavily on Kafka. The stability of Kafka therefore becomes very important (similar to infrastructure such as Microsoft SQL Server).

In a simplified sense, a broker is a Kafka server. It is a service process that runs Kafka.

A topic is a data pipeline. Messages are produced and events are published at one end of the pipeline, and messages are consumed and events are responded to at the other end. The data pipeline stores, routes, and sends messages or events.

By default, a topic retains data for one week.

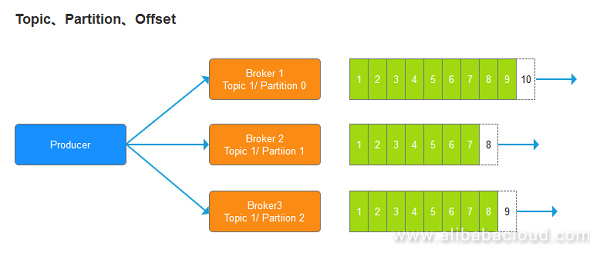

A topic can be divided into multiple partitions.

Figure 4: Kafka Topic, Partition, Offset

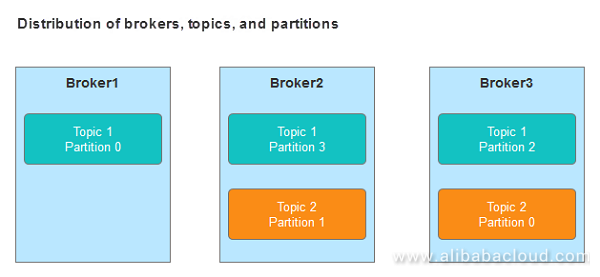

Different topics can have different numbers of partitions. If there are multiple nodes in a cluster, partitions are randomly distributed across the nodes. As shown in the following figure, topic 1 has three partitions, and topic 2 has two partitions:

Figure 5: Distribution of Brokers, Topics, and Partitions

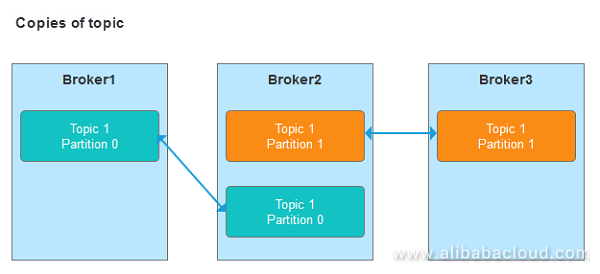

Generally, a topic has 2 or 3 copies. The main reason is that if a node fails and goes offline, the topic remains available, and the other nodes in the cluster continue to provide the service.

Figure 6: Copies of Topic 2

It is advisable to keep the number of copies small. As the number of copies increases, the time taken to synchronize the data increases and disk utilization decreases.

Note: For both Kafka and Hadoop clusters, more nodes do not mean higher fault tolerance. Adding nodes lowers the probability that the specific nodes holding the copies all fail, but the fault tolerance itself stays the same. Say there are 100 nodes in a Hadoop cluster and the number of copies is set to 2. The data becomes inaccessible if the two nodes that store these copies both fail, no matter how many other nodes are still running. What changes is only the likelihood: among 100 nodes, the probability that the failed nodes are exactly the two holding the copies is lower than in a cluster of three or five nodes.
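The arithmetic behind this note can be checked with a short calculation. It rests on a simplification that is not part of the original text: exactly two nodes fail at the same time, and every pair of nodes is equally likely to be the failing pair.

```python
from math import comb


def both_copies_lost_probability(nodes: int, copies: int = 2) -> float:
    # Assumption: exactly `copies` nodes fail together, every set of nodes is
    # equally likely, and the data lives on one specific set of `copies` nodes.
    return 1 / comb(nodes, copies)


p_3 = both_copies_lost_probability(3)      # 1 / 3
p_5 = both_copies_lost_probability(5)      # 1 / 10
p_100 = both_copies_lost_probability(100)  # 1 / 4950
```

In every case the data survives the loss of only one of its two holders, so the tolerance is unchanged; only the chance of hitting exactly those two nodes shrinks as the cluster grows.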

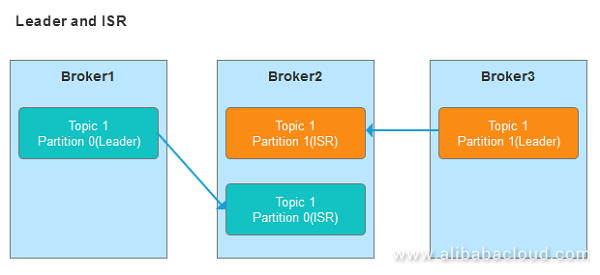

For a partition with multiple copies, there is exactly one leader and one or more in-sync replicas (ISRs). The leader handles reads and writes, while the ISRs serve as backups.

Figure 7: Copies of Topic 2 (Actual Diagram)

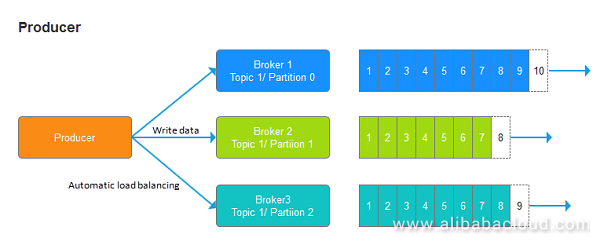

After the producer specifies the topic name and connects to any node in the cluster, Kafka automatically performs load balancing and routes each write to the correct partition (the partitions are on different nodes in the cluster).

Figure 8: Producer is Used to Write Data

In the above figure, I have omitted the ISR partitions to simplify the drawing.

The producer chooses how it is notified that data has been written by selecting one of the following acknowledgment (ACK) modes.

Set ACKs to all. This mode is the slowest. The producer waits for acknowledgment from the leader and the ISRs, so no data is lost.
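As a rough sketch of what the ACK setting controls, the function below returns how many copies of a record must be written before the producer is told the write succeeded. Only the "all" mode appears in the text above; the values `0` and `1` are taken from Kafka's standard producer `acks` setting, and the function itself is a hypothetical model, not Kafka code.

```python
def copies_written_before_ack(acks, in_sync_followers):
    """Model of the producer `acks` setting (illustration only).

    `acks` is 0, 1, or "all". `in_sync_followers` is the number of follower
    replicas that are currently in sync with the leader.
    """
    if acks == 0:
        return 0                      # the producer does not wait at all
    if acks == 1:
        return 1                      # the leader has written the record
    if acks == "all":
        return 1 + in_sync_followers  # the leader plus every in-sync follower
    raise ValueError(f"unsupported acks value: {acks!r}")


fastest = copies_written_before_ack(0, in_sync_followers=2)
leader_only = copies_written_before_ack(1, in_sync_followers=2)
safest = copies_written_before_ack("all", in_sync_followers=2)
```

The more copies the producer waits for, the longer each write takes and the less likely a broker failure is to lose the record.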

A key is specified when the producer transmits data. For example, an e-commerce order would carry data such as Order Number, Retailer, and Customer. How would you set the key in this case?

Set the key as:

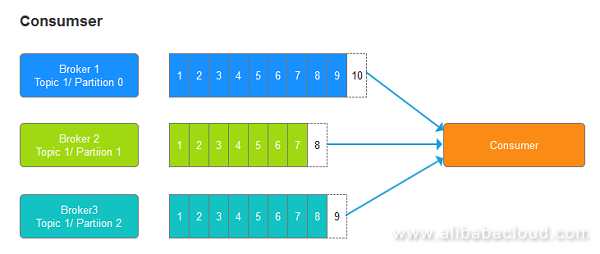
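Whichever field is chosen, the practical effect of a key is that records with the same key are routed to the same partition, as long as the number of partitions stays the same. The sketch below illustrates the idea with a stand-in hash; the function name and the sample key are hypothetical, and Kafka's own default partitioner uses a different hash function.

```python
import zlib


def choose_partition(key: str, num_partitions: int) -> int:
    # Stand-in hash: zlib.crc32 is used here only because it is deterministic
    # and in the standard library. Kafka's own default partitioner uses a
    # different hash function, so real partition numbers will differ.
    return zlib.crc32(key.encode("utf-8")) % num_partitions


# Hypothetical key: every record keyed by this customer maps to one partition.
first = choose_partition("customer-42", 3)
second = choose_partition("customer-42", 3)
```

Because data is ordered within a partition, choosing the key decides which records are guaranteed to be read in the order they were written.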

Consumers read data from topics. As with the producer, a consumer only needs to connect to any node in the cluster and specify the topic name; Kafka automatically fetches data from the correct broker and partition and sends it to the consumer.

Data is ordered within each partition, as shown in the figure below:

Figure 9: Consumer is Used to Read Data
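The ordering guarantee can be pictured by treating a partition as an append-only list in which each record receives the next offset. The `Partition` class below is a hypothetical illustration, not Kafka's implementation.

```python
class Partition:
    """Hypothetical append-only log: each record gets the next offset."""

    def __init__(self):
        self.records = []

    def append(self, value):
        self.records.append(value)
        return len(self.records) - 1  # offset of the record just written

    def read_from(self, offset):
        # Records come back in exactly the order they were appended.
        return self.records[offset:]


partition = Partition()
offsets = [partition.append(v) for v in ("a", "b", "c")]
tail = partition.read_from(1)
```

The guarantee applies to one partition at a time; nothing in this model orders records across different partitions of the same topic.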

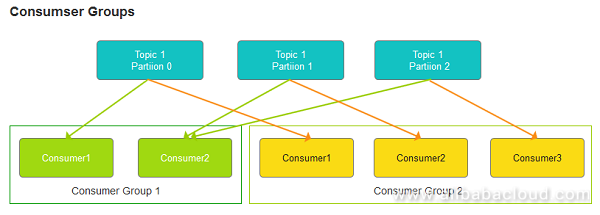

Kafka uses the group concept to integrate the producer/consumer and publisher/subscriber models.

One topic may have multiple groups, and one group may include multiple consumers. Within a group, each message is consumed by only one consumer. Across groups, consumption follows the publisher/subscriber model: every group receives each message. The following figure describes the relationship:

Figure 10: Consumer Groups

Note: Within a group, a partition is allocated to only one consumer. If there are three partitions and four consumers in one of the groups, one consumer is redundant and cannot receive any data.
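The three-partitions, four-consumers case from the note can be reproduced with a small sketch. Round-robin assignment is an assumption made here for simplicity; Kafka's actual assignment strategy is configurable, and the function and names below are hypothetical.

```python
def assign_partitions(partitions, consumers):
    """Give each partition to exactly one consumer, round-robin."""
    assignment = {consumer: [] for consumer in consumers}
    for index, partition in enumerate(partitions):
        owner = consumers[index % len(consumers)]
        assignment[owner].append(partition)
    return assignment


group = assign_partitions(["p0", "p1", "p2"], ["c1", "c2", "c3", "c4"])
idle = [consumer for consumer, owned in group.items() if not owned]
```

With more consumers than partitions, the extra consumer owns nothing, which is why adding consumers beyond the partition count does not increase throughput.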

Note that the consumer offsets mentioned here are different from the offsets discussed in the earlier section on topics. There, offsets belong to topics (more precisely, to partitions), whereas here they belong to consumers. Here are some more points to consider:

Timing of offset commits:

Generally, "at least once" is selected, and the application is made idempotent so that processing a message more than once does not affect the result.
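A minimal sketch of this combination follows. It assumes that the offset is committed only after a record has been handled and that each record carries a unique id the application can use to detect repeats; the function names, the record layout, and the simulated crash are all hypothetical.

```python
def consume_at_least_once(log, committed_offset, handle, crash_before_commit_at=None):
    """Process records from `committed_offset`, committing after each one.

    Returns the new committed offset. If `crash_before_commit_at` is set, the
    consumer stops after handling that offset but before committing it, so the
    record is delivered again on the next run.
    """
    for offset in range(committed_offset, len(log)):
        handle(log[offset])
        if offset == crash_before_commit_at:
            return committed_offset    # simulated crash: commit never happened
        committed_offset = offset + 1  # commit only after successful handling
    return committed_offset


balances = {}
seen_ids = set()


def apply_payment(record):
    # Idempotent handler: a record id that was already applied is skipped.
    record_id, account, amount = record
    if record_id in seen_ids:
        return
    seen_ids.add(record_id)
    balances[account] = balances.get(account, 0) + amount


log = [(1, "alice", 10), (2, "alice", 5)]
position = consume_at_least_once(log, 0, apply_payment, crash_before_commit_at=1)
position = consume_at_least_once(log, position, apply_payment)
```

The second record is delivered twice because the first run stopped before committing it, yet the balance is only updated once thanks to the id check.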

Note: According to the CAP theorem, a distributed system can satisfy at most two of Consistency, Availability, and Partition Tolerance at the same time.

ZooKeeper is a distributed service registration, discovery, and governance component. Many components in the big data ecosystem, HDFS for example, use ZooKeeper. Kafka depends on ZooKeeper. The Kafka installation package directly includes its compatible ZooKeeper version.

Kafka uses ZooKeeper for the following:

This document discusses Kafka's main concepts and mechanisms. I believe that you will have a preliminary understanding of Kafka after reading this article. In the following chapters, we will perform actual operations to see how Kafka works. If you feel that Kafka is stable and robust during use, I hope you will like it.

To learn more about message queueing on Alibaba Cloud, visit www.alibabacloud.com/product/mq
