Automatic question answering (QA) systems have been in use for decades. However, the success of Apple's Siri and IBM's Watson in 2011 captured the whole industry's attention. Since then, QA systems have stepped further into the limelight as standalone practical applications.

This success can be attributed to considerable progress in machine learning and natural language processing technology, as well as the emergence of large-scale knowledge bases, such as Wikipedia, and the wealth of information available on the web. However, the problems facing existing QA systems are far from solved. Analyzing the question and identifying the matching relationship between the question and the answer remain two key problems that restrict QA systems. This article is the first in a two-part series that explores the ins and outs, problems, and opportunities of QA systems.

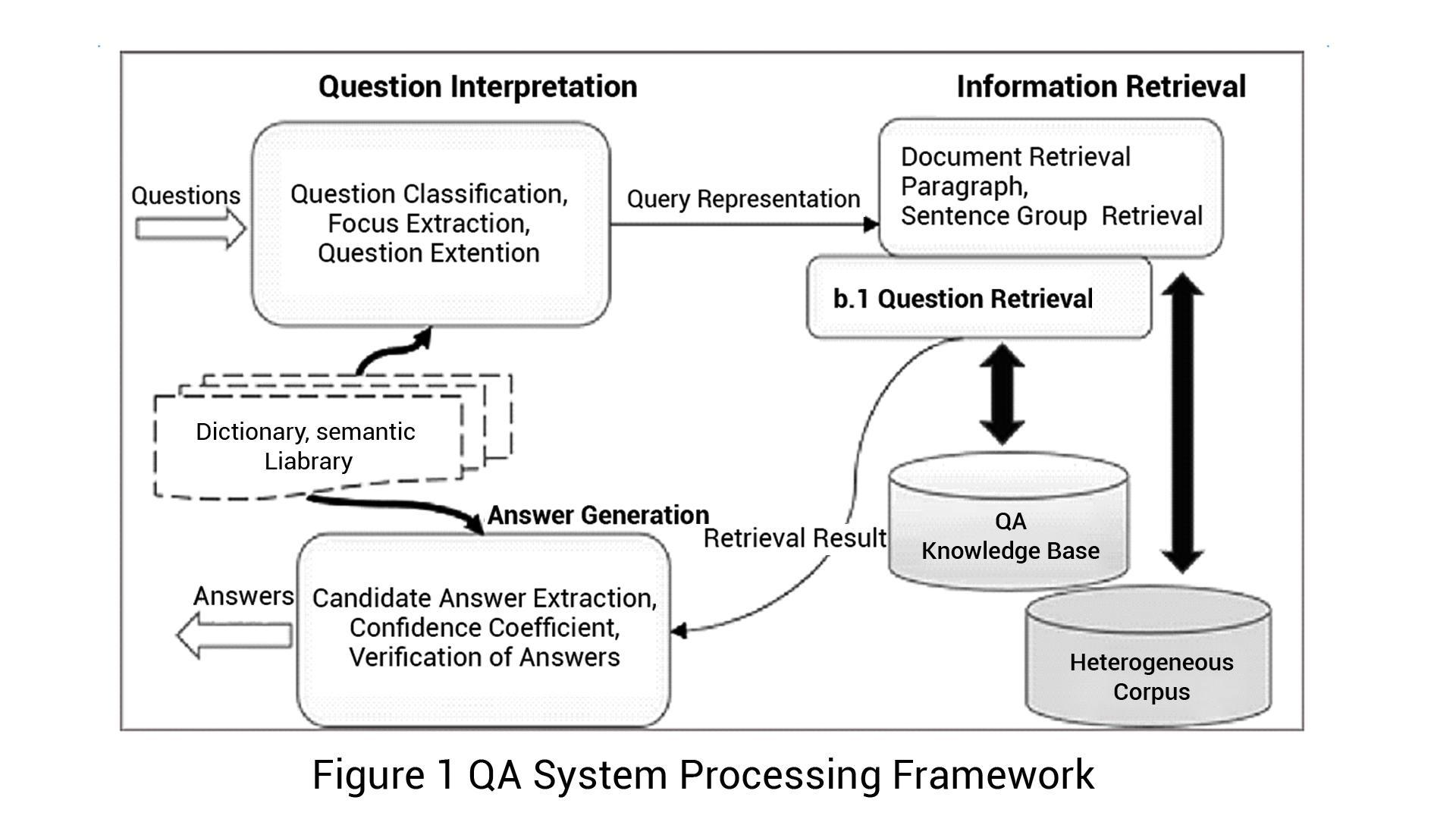

QA systems interpret a user's questions expressed in natural language and return concise, accurate answers by searching heterogeneous corpora or, in more common terms, QA knowledge bases. Compared with search engines, QA systems better interpret the intended meaning of the user's questions and can therefore meet the user's information requirements more efficiently.

The Turing test, which tests whether a machine can exhibit human-like intelligence, can be regarded as the earliest prototype of a QA system: it requires the computer to answer a series of questions posed by human testers within 5 minutes. As related technologies such as artificial intelligence (AI) and natural language processing developed, QA systems came to use different types of data. Due to the limitations of intelligent technologies and of domain data scale, early QA systems were mainly restricted to AI systems or expert systems for a limited domain, such as the STUDENT [1] and LUNAR [2] systems. During this period, QA systems processed structured data: the system translated the input question into a database query, executed the query against the database, and returned the result. With the rapid development of the internet and the rise of natural language processing technology, QA systems entered the stage of open-domain, free-text-based development, exemplified by English QA retrieval systems such as Ask Jeeves (http://www.ask.com) and START (http://start.csail.mit.edu). The processing flow of such QA systems mainly includes question analysis, document and paragraph retrieval, candidate answer extraction, and answer validation. The introduction of the Question Answering Track (QA Track) at the Text Retrieval Conference (TREC) in 1999 promoted research and development based on natural language processing technology in the QA field.

Later, internet-based community question answering (CQA) sites provided question-answer pairs (QA pairs) derived from massive user interactions, which became a stable and reliable data source for QA-pair-based systems. With the advent of Apple's Siri, QA systems entered the intelligent interactive question answering stage, allowing users to experience more natural human-computer interaction and making information services more convenient and practical.

The data objects of a QA system are the user's questions and the answers. According to the domain of the user's questions, QA systems can be classified into those oriented to a restricted domain, an open domain, and frequently asked questions (FAQ). QA systems can also be categorized, according to how answers are generated and returned, into retrieval-based and generation-based systems. This article mainly describes the processing frameworks of the various retrieval-based QA systems.

Different types of QA systems process data in different ways. For example:

● FAQ-oriented QA systems: obtain candidate answers directly from question retrieval.

● Open-domain-oriented QA systems: first retrieve relevant documents and text segments according to the question analysis results, and then extract candidate answers from them.

Although different types of QA systems vary in their functional divisions, the processing framework of a QA system typically includes three functional components:

● Question interpretation

● Information retrieval

● Answer generation
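To make the division of labor concrete, the three components can be wired together as a toy pipeline. This is a minimal sketch, not a real QA system: the stop-word list, the three-sentence corpus, and the keyword-overlap ranking are illustrative assumptions, and each function stands in for the much richer techniques discussed in the rest of this article.

```python
import re

# Hypothetical stop-word list, for illustration only
STOP_WORDS = {"what", "who", "when", "where", "is", "the", "of", "a", "an", "in", "was", "did"}

def interpret(question):
    """Question interpretation: reduce the question to its content keywords."""
    tokens = re.findall(r"[a-z0-9]+", question.lower())
    return [t for t in tokens if t not in STOP_WORDS]

def retrieve(keywords, corpus):
    """Information retrieval: keep sentences sharing at least one keyword,
    ranked by how many distinct keywords they contain."""
    scored = []
    for sentence in corpus:
        words = set(re.findall(r"[a-z0-9]+", sentence.lower()))
        overlap = len(words & set(keywords))
        if overlap:
            scored.append((overlap, sentence))
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [sentence for _, sentence in scored]

def generate(candidates):
    """Answer generation: return the top-ranked candidate, or None if there is none."""
    return candidates[0] if candidates else None

def answer(question, corpus):
    return generate(retrieve(interpret(question), corpus))

corpus = [
    "Paris is the capital of France.",
    "The Eiffel Tower is in Paris.",
    "Berlin is the capital of Germany.",
]
print(answer("What is the capital of Germany?", corpus))  # prints: Berlin is the capital of Germany.
```

Because each stage only consumes the previous stage's output, any one of them (for example, the retrieval step) can be replaced by a stronger model without touching the others.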

Question interpretation is the crucial step in which a QA system interprets the user's intention, and the performance of the question interpretation module directly limits the effectiveness of the subsequent processing modules. The user's intention is an abstract concept that must be translated into a form the machine can interpret and use as the basis for answer retrieval. The user's retrieval intention gives rise to information requirements, which represent that intention during retrieval. In terms of semantic structure, a question can be represented by two aspects: its class and its content. Natural language processing techniques, including named entity recognition, dependency parsing, and word sense disambiguation, are used to interpret the questions in depth.

Question interpretation includes question classification, topic and focus extraction, and question extension processing. Question classification assigns the user's questions to different classes so that different answer feedback mechanisms can be applied to different types of questions to obtain candidate answer sets. QA systems usually rely on machine-learning-based question classifiers [3, 4] to classify the user's questions.
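As an illustration of a learned question classifier, the sketch below trains a multinomial naive Bayes model with Laplace smoothing. This is a swapped-in technique, chosen only because it fits in a few lines of standard-library Python; [3] and [4] use other learners (for example, [3] uses support vector machines). The class labels (PERSON, DATE, LOCATION) and the six training questions are hypothetical toy data.

```python
import math
import re
from collections import Counter, defaultdict

def tokenize(text):
    """Lowercase the text and split it into alphanumeric tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())

class NaiveBayesQuestionClassifier:
    def __init__(self):
        self.class_counts = Counter()          # number of training questions per class
        self.word_counts = defaultdict(Counter)  # per-class word frequencies
        self.vocab = set()

    def fit(self, questions, labels):
        for question, label in zip(questions, labels):
            self.class_counts[label] += 1
            for word in tokenize(question):
                self.word_counts[label][word] += 1
                self.vocab.add(word)
        return self

    def predict(self, question):
        total = sum(self.class_counts.values())
        best_label, best_score = None, -math.inf
        for label, count in self.class_counts.items():
            # log prior + sum of Laplace-smoothed log likelihoods of each word
            score = math.log(count / total)
            denom = sum(self.word_counts[label].values()) + len(self.vocab)
            for word in tokenize(question):
                score += math.log((self.word_counts[label][word] + 1) / denom)
            if score > best_score:
                best_label, best_score = label, score
        return best_label

# Hypothetical toy training data
train = [
    ("Who wrote Hamlet", "PERSON"),
    ("Who invented the telephone", "PERSON"),
    ("When did World War II end", "DATE"),
    ("When was the Eiffel Tower built", "DATE"),
    ("Where is Mount Everest", "LOCATION"),
    ("Where was Einstein born", "LOCATION"),
]
clf = NaiveBayesQuestionClassifier().fit([q for q, _ in train], [y for _, y in train])
print(clf.predict("When was penicillin discovered"))  # prints: DATE
```

With realistic training data, the predicted class would then select the answer feedback mechanism, for example by preferring date expressions when extracting answers to DATE questions.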

Topic and focus extraction pinpoints the information requirements of the user's question. The topic represents the question's main background or the object the user is interested in, while the focus represents the content of the question related to that topic, usually information about the topic such as its properties, actions, and instances.

Using the queries produced by question interpretation, the information retrieval module retrieves relevant information from heterogeneous corpora and QA knowledge bases and passes it to the answer generation module. The retrieval model and the form of the retrieved data differ across QA systems. For free-text-data-based QA systems, information retrieval is a filtering process that gradually narrows the scope of answers, covering the retrieval of documents and then of paragraphs and sentence groups. For QA-pair-based QA systems, information retrieval uses question retrieval to obtain candidate questions similar to the user's question and returns the corresponding list of candidate answers.

First, document retrieval retrieves the document sets related to the user's question according to the question interpretation results. The simplest method is to use an existing retrieval system (e.g., SMART or Lucene) to build a full-text index and query it directly with the non-stop words in the question, obtaining the set of documents related to the user's question. The document retrieval models used in QA systems include the Boolean model, vector space model, language model, and probability model.
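The "index the non-stop words, then look them up" approach can be sketched with a small in-memory inverted index. This only mimics the idea behind systems such as SMART or Lucene and does not use their APIs; the stop-word list and the documents are hypothetical.

```python
import re
from collections import defaultdict

# Hypothetical stop-word list
STOP_WORDS = {"the", "a", "an", "of", "is", "in", "on", "to", "what", "who", "when", "where", "did", "was"}

def terms(text):
    """Lowercase, tokenize, and drop stop words."""
    return [t for t in re.findall(r"[a-z0-9]+", text.lower()) if t not in STOP_WORDS]

def build_index(docs):
    """Map each non-stop term to the set of document IDs that contain it."""
    index = defaultdict(set)
    for doc_id, text in docs.items():
        for term in terms(text):
            index[term].add(doc_id)
    return index

def retrieve_documents(question, index):
    """Return IDs of documents containing any non-stop word of the question,
    ordered by the number of matching query terms (ties broken by ID)."""
    hits = defaultdict(int)
    for term in set(terms(question)):
        for doc_id in index.get(term, ()):
            hits[doc_id] += 1
    return sorted(hits, key=lambda d: (-hits[d], d))

docs = {
    "d1": "Neil Armstrong was the first person to walk on the Moon.",
    "d2": "The Moon orbits the Earth.",
    "d3": "Mount Everest is the highest mountain on Earth.",
}
index = build_index(docs)
print(retrieve_documents("Who was the first person on the Moon?", index))  # prints: ['d1', 'd2']
```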

The Boolean model is the simplest retrieval model. It organizes the keywords into a Boolean expression and returns only the documents whose keywords satisfy that expression. The vector space model represents both documents and queries as vectors and ranks the documents by the similarity between each document vector and the query vector (usually the cosine of the angle between the two vectors). The probability model estimates the probability that a document is relevant to the query and ranks the documents by that probability. The language model approach represents the query and each document as separate language models and estimates their similarity from the Kullback-Leibler (KL) divergence between the two models.
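A minimal sketch of the vector space model follows. The paragraph does not fix a weighting scheme, so this assumes TF-IDF weights (raw term frequency times log(N/df)) and ranks documents by the cosine of the angle between the query vector and each document vector.

```python
import math
import re
from collections import Counter

def tokenize(text):
    return re.findall(r"[a-z0-9]+", text.lower())

def tfidf_vectors(docs):
    """Build sparse TF-IDF vectors (dict term -> weight) for a list of texts,
    and return the IDF table so the query can be weighted the same way."""
    tokenized = [Counter(tokenize(d)) for d in docs]
    n = len(docs)
    df = Counter(term for tf in tokenized for term in tf)
    idf = {term: math.log(n / count) for term, count in df.items()}
    vectors = [{t: c * idf[t] for t, c in tf.items()} for tf in tokenized]
    return vectors, idf

def cosine(u, v):
    """Cosine of the angle between two sparse vectors (0.0 if either is all zeros)."""
    dot = sum(w * v.get(t, 0.0) for t, w in u.items())
    norm_u = math.sqrt(sum(w * w for w in u.values()))
    norm_v = math.sqrt(sum(w * w for w in v.values()))
    return dot / (norm_u * norm_v) if norm_u and norm_v else 0.0

def rank(query, docs):
    """Return document indices sorted by cosine similarity, plus the raw scores."""
    vectors, idf = tfidf_vectors(docs)
    # Query terms unseen in the collection get weight 0
    q = {t: c * idf.get(t, 0.0) for t, c in Counter(tokenize(query)).items()}
    scores = [cosine(q, d) for d in vectors]
    order = sorted(range(len(docs)), key=lambda i: scores[i], reverse=True)
    return order, scores

docs = [
    "the cat sat on the mat",
    "dogs chase cats in the park",
    "the stock market fell today",
]
order, scores = rank("cat on a mat", docs)
print(order[0], round(scores[0], 3))  # prints: 0 0.866
```

Note that "cats" does not match "cat" here; real systems typically add stemming or lemmatization before weighting.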

Paragraph and sentence group retrieval retrieves the paragraphs (natural paragraphs or document fragments) that contain the answers from the candidate document sets and further filters out noise to obtain more accurate answers. Three widely used paragraph retrieval algorithms are the MultiText algorithm [6], IBM's algorithm [7, 8], and the SiteQ algorithm [9]. The experimental results of Tellex et al. [10] show that density-based algorithms obtain relatively good results. A density-based algorithm determines the relevance of a paragraph from how often the keywords occur in it and how close together they appear. By contrast, the retrieval algorithm proposed by Hang Cui et al. parses both the question and the answer into syntactic trees and measures their correlation from the structure of those trees.
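A simplified density-based passage score can illustrate the idea: the score grows with the number of keyword occurrences in a passage and with how close together they appear. The specific formula (keyword count plus the sum of 1/gap between consecutive keyword positions) is an illustrative assumption, not the exact scoring used by MultiText, IBM, or SiteQ, and the passages are hypothetical.

```python
import re

def density_score(passage, keywords):
    """Score = number of keyword occurrences + sum of 1/gap over consecutive
    keyword occurrences, so tightly clustered keywords score higher."""
    tokens = re.findall(r"[a-z0-9]+", passage.lower())
    keys = {k.lower() for k in keywords}
    positions = [i for i, tok in enumerate(tokens) if tok in keys]
    proximity = sum(1.0 / (b - a) for a, b in zip(positions, positions[1:]))
    return len(positions) + proximity

def best_passage(passages, keywords):
    """Return the passage with the highest density score."""
    return max(passages, key=lambda p: density_score(p, keywords))

keywords = ["Armstrong", "Moon", "1969"]
passages = [
    "Armstrong studied engineering for years before NASA chose him, and the Moon landing came in 1969.",
    "Armstrong landed on the Moon in 1969.",
]
print(best_passage(passages, keywords))  # prints: Armstrong landed on the Moon in 1969.
```

Both passages contain all three keywords, so the raw count ties; the proximity term is what prefers the passage in which the keywords sit close together.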

The main problem for question retrieval is how to narrow the semantic gap between the user's question and the questions in the knowledge base. In recent years, researchers have used methods based on the translation model, which calculate a translation probability to "translate" the user's question into a question in the knowledge base and then retrieve questions by this similarity. For example, the algorithms in [11-14] regard the two questions as the same statement expressed in different ways and calculate the translation probability between them. To calculate this probability, the word-to-word translation probabilities must be estimated. This method first obtains a set of synonymous or near-synonymous QA pairs by calculating the similarity of their answers, and then estimates the translation probabilities from that set. Experiments show that this model can achieve better results than the language model, Okapi BM25, and the vector space model.
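A minimal sketch of translation-based question retrieval, in the spirit of [11], follows. Each archived question D is scored by the smoothed probability of generating the user's question q word by word, where each query word w can be produced by any word t in D with translation probability T(w | t), mixed (weight lam) with the word's frequency in the whole archive. The translation table, the smoothing weight, and the archive are hypothetical; in practice T(w | t) is estimated from QA pairs with similar answers, as described above.

```python
import math
import re
from collections import Counter

def tokenize(text):
    return re.findall(r"[a-z0-9]+", text.lower())

def t_prob(w, t, table):
    """T(w | t): probability that candidate word t 'translates' to query word w.
    Words absent from the (hypothetical) table translate only to themselves."""
    if t in table:
        return table[t].get(w, 0.0)
    return 1.0 if w == t else 0.0

def translation_log_likelihood(query, candidate, table, collection, lam=0.2):
    """log P(query | candidate) under a word translation model with
    Jelinek-Mercer smoothing against collection word frequencies."""
    cand = Counter(tokenize(candidate))
    cand_len = sum(cand.values())
    coll = Counter(tokenize(" ".join(collection)))
    coll_len = sum(coll.values())
    score = 0.0
    for w in tokenize(query):
        p_trans = sum(t_prob(w, t, table) * c / cand_len for t, c in cand.items())
        p = (1 - lam) * p_trans + lam * coll[w] / coll_len
        score += math.log(max(p, 1e-12))  # floor avoids log(0) for unseen words
    return score

# Hypothetical translation table: each inner dict sums to 1
table = {
    "price": {"price": 0.6, "cost": 0.4},
    "cost": {"cost": 0.6, "price": 0.4},
}
archive = ["What is the price of the iPhone", "What is the size of the iPhone"]
query = "How much does the iPhone cost"
ranked = sorted(archive, key=lambda q: translation_log_likelihood(query, q, table, archive), reverse=True)
print(ranked[0])  # prints: What is the price of the iPhone
```

The word "cost" never appears in the archive, so a purely lexical model cannot tell the two questions apart on it; the translation table bridges "cost" to "price".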

Based on the retrieved information, the answer generation module performs candidate answer extraction and answer confidence calculation, and finally returns a concise and correct answer. According to the granularity of the answers, candidate answer extraction is classified into paragraph answer extraction, sentence answer extraction, and lexical phrase answer extraction.

Paragraph answer extraction summarizes and compresses multiple relevant answers to a question into one complete and concise answer. Sentence answer extraction refines the candidate answers, using matching calculations to filter out wrong answers that seem related but are semantically mismatched. Lexical phrase answer extraction uses deep linguistic structure analysis to extract the exact answer words or phrases from the candidate answers.

Answer confidence calculation validates the question against the candidate answers at both the syntactic and semantic levels to ensure that the returned answer is the one that best matches the user's question. The most widely used confidence calculation method is based on statistical machine learning. This method defines a series of lexical, syntactic, semantic, and other relevant features (e.g., edit distance and BM25) to represent how well the question and a candidate answer match.
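The sketch below computes the two example features the paragraph names, word-level edit distance and Okapi BM25 (with the common defaults k1 = 1.5 and b = 0.75 and a common non-negative IDF variant), and combines them with a logistic function into a confidence in (0, 1). The feature weights and bias are hypothetical placeholders for parameters that a real system would learn from labeled QA pairs; the question and candidate answers are toy data.

```python
import math
import re
from collections import Counter

def tokenize(text):
    return re.findall(r"[a-z0-9]+", text.lower())

def edit_distance(a, b):
    """Word-level Levenshtein distance between token lists a and b."""
    prev = list(range(len(b) + 1))
    for i, x in enumerate(a, 1):
        cur = [i]
        for j, y in enumerate(b, 1):
            cur.append(min(prev[j] + 1,              # deletion
                           cur[j - 1] + 1,           # insertion
                           prev[j - 1] + (x != y)))  # substitution (0 if equal)
        prev = cur
    return prev[-1]

def bm25(query, doc, corpus, k1=1.5, b=0.75):
    """Okapi BM25 score of doc for query; corpus supplies IDF and average length."""
    docs = [tokenize(d) for d in corpus]
    n = len(docs)
    avgdl = sum(len(d) for d in docs) / n
    tf = Counter(tokenize(doc))
    dl = sum(tf.values())
    score = 0.0
    for term in set(tokenize(query)):
        df = sum(1 for d in docs if term in d)
        idf = math.log((n - df + 0.5) / (df + 0.5) + 1)  # non-negative IDF variant
        f = tf[term]
        score += idf * f * (k1 + 1) / (f + k1 * (1 - b + b * dl / avgdl))
    return score

# Hypothetical weights; a real system would learn them, e.g., by logistic regression
WEIGHTS = {"bm25": 1.0, "edit": -0.3}
BIAS = 0.0

def confidence(question, answer, corpus):
    """Map the feature vector to a (0, 1) confidence via a logistic function."""
    q, a = tokenize(question), tokenize(answer)
    features = {
        "bm25": bm25(question, answer, corpus),
        "edit": edit_distance(q, a) / max(len(q), len(a), 1),  # length-normalized
    }
    z = BIAS + sum(WEIGHTS[name] * value for name, value in features.items())
    return 1.0 / (1.0 + math.exp(-z))

question = "What is the highest mountain in the world?"
candidates = [
    "Mount Everest is the highest mountain in the world.",
    "The Pacific is the largest ocean in the world.",
]
for c in candidates:
    print(round(confidence(question, c, candidates), 3), c)
```

Adding a feature (for example, a semantic similarity score) only means adding one entry to the feature dictionary and one learned weight.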

In recent years, QA matching validation based on natural language processing has used shallow parsing of sentences to obtain shallow syntactic and grammatical information, and then calculated the similarity between the syntactic trees (phrase structure or dependency trees) of the question and the answers [17-20]. However, accurate answers also require semantic matching between the question and the answer. For example, if the question is "what is the latest promotion price of Apple 6S Plus" and the answer is "Red Fuji apples drop to RMB 12", the answer is irrelevant even though both mention "apple" and a price. A standard method is to model semantic QA matching with external semantic resources, such as semantic dictionaries (WordNet) and semantic knowledge bases (Freebase) [21-23], to improve the matching performance between questions and answers.

The machine learning models built into traditional QA systems are shallow models, such as the support vector machine (SVM) [24], conditional random field (CRF) [25], and logistic regression (LR). QA systems based on shallow models depend heavily on human effort and generalize poorly to data from different fields. This dependency comes from the feature engineering that shallow models require: because shallow models lack the capability to learn data representations, researchers must annotate data for each field and QA task and hand-craft effective features according to their observations and experience, which makes QA systems hard to port to new domains.

With the rapid development of the internet, the amount of available information is growing exponentially. Traditional search engines do help users search for information conveniently to some extent, but the retrieved information mixes relevant and irrelevant results. QA systems cater to this growing need for more intelligent information retrieval.

QA systems provide a human-machine interface that supports natural language, which conforms to a more natural style of asking questions. This gives them a clear advantage over traditional search engines, which lack an understanding of the intent behind a question. Across a range of functions, QA systems can retrieve accurate answers more efficiently than traditional search engines.

[1] Terry Winograd. Five Lectures on Artificial Intelligence [J]. Linguistic Structures Processing, volume 5 of Fundamental Studies in Computer Science, pages 399–520, North Holland, 1977.

[2] Woods W A. Lunar rocks in natural English: explorations in natural language question answering [J]. Linguistic Structures Processing, 1977, 5: 521–569.

[3] Dell Zhang and Wee Sun Lee. Question classification using support vector machines. In SIGIR, pages 26–32. ACM, 2003.

[4] Xin Li and Dan Roth. Learning question classifiers. In COLING, 2002.

[5] Hang Cui, Min-Yen Kan, and Tat-Seng Chua. Unsupervised learning of soft patterns for generating definitions from online news. In Stuart I. Feldman, Mike Uretsky, Marc Najork, and Craig E. Wills, editors, Proceedings of the 13th international conference on World Wide Web, WWW 2004, New York, NY, USA, May 17-20, 2004, pages 90–99. ACM, 2004.

[6] Clarke C, Cormack G, Kisman D, et al. Question answering by passage selection (multitext experiments for TREC-9) [C]//Proceedings of the 9th Text Retrieval Conference(TREC-9), 2000.

[7] Ittycheriah A, Franz M, Zhu W-J, et al. IBM's statistical question answering system[C]//Proceedings of the 9th Text Retrieval Conference (TREC-9), 2000.

[8] Ittycheriah A, Franz M, Roukos S. IBM's statistical question answering system—TREC-10[C]//Proceedings of the 10th Text Retrieval Conference (TREC 2001), 2001.

[9] Lee G, Seo J, Lee S, et al. SiteQ: engineering high performance QA system using lexico-semantic pattern.

[10] Tellex S, Katz B, Lin J, et al. Quantitative evaluation of passage retrieval algorithms for question answering[C]// Proceedings of the 26th Annual International ACM SIGIR Conference on Research and Development in Information Retrieval (SIGIR '03). New York, NY, USA: ACM, 2003:41–47.

[11] Jiwoon Jeon, W. Bruce Croft, and Joon Ho Lee. Finding similar questions in large question and answer archives. In Proceedings of the 2005 ACM CIKM International Conference on Information and Knowledge Management, Bremen, Germany, October 31 – November 5, 2005, pages 84–90. ACM, 2005.

[12] S. Riezler, A. Vasserman, I. Tsochantaridis, V. Mittal, Y. Liu, Statistical machine translation for query expansion in answer retrieval, in: Proceedings of the 45th Annual Meeting of the Association of Computational Linguistics, Association for Computational Linguistics, Prague, Czech Republic, 2007, pp. 464–471.

[13] M. Surdeanu, M. Ciaramita, H. Zaragoza, Learning to rank answers on large online qa collections, in: ACL, The Association for Computer Linguistics, 2008, pp. 719–727.

[14] A. Berger, R. Caruana, D. Cohn, D. Freitag, V. Mittal, Bridging the lexical chasm: statistical approaches to answer-finding, in: SIGIR '00: Proceedings of the 23rd annual international ACM SIGIR conference on Research and development in information retrieval, ACM, New York, NY, USA, 2000, pp. 192–199.

[15] Gondek, D. C., et al. "A framework for merging and ranking of answers in DeepQA." IBM Journal of Research and Development 56.3.4 (2012): 14-1.

[16] Wang, Chang, et al. "Relation extraction and scoring in DeepQA." IBM Journal of Research and Development 56.3.4 (2012): 9-1.

[17] Kenneth C. Litkowski. Question-Answering Using Semantic Triples[C]. Eighth Text Retrieval Conference (TREC-8). Gaithersburg, MD. November 17-19, 1999.

[18] H. Cui, R. Sun, K. Li, M.-Y. Kan, T.-S. Chua, Question answering passage retrieval using dependency relations, in: R. A. Baeza-Yates, N. Ziviani, G. Marchionini, A. Moffat, J. Tait (Eds.), SIGIR, ACM, 2005, pp. 400–407.

[19] M. Wang, N. A. Smith, T. Mitamura, What is the Jeopardy model? A quasi-synchronous grammar for QA, in: J. Eisner (Ed.), EMNLP-CoNLL, The Association for Computer Linguistics, 2007, pp. 22–32.

[20] K. Wang, Z. Ming, T.-S. Chua, A syntactic tree matching approach to finding similar questions in community-based qa services, in: Proceedings of the 32nd International ACM SIGIR Conference on Research and Development in Information Retrieval, SIGIR '09, 2009, pp. 187–194.

[21] Hovy, E.H., U. Hermjakob, and Chin-Yew Lin. 2001. The Use of External Knowledge of Factoid QA. In Proceedings of the 10th Text Retrieval Conference (TREC 2001) [C], Gaithersburg, MD, U.S.A., November 13-16, 2001.

[22] Jongwoo Ko, Laurie Hiyakumoto, Eric Nyberg. Exploiting Multiple Semantic Resources for Answer Selection. In Proceedings of LREC(Vol. 2006).

[23] Kasneci G, Suchanek F M, Ifrim G, et al. Naga: Searching and ranking knowledge. IEEE, 2008:953-962.

[24] Zhang D, Lee W S. Question Classification Using Support Vector Machines[C]. Proceedings of the 26th Annual International ACM SIGIR Conference on Research and Development in Information Retrieval. 2003. New York, NY, USA: ACM, SIGIR'03.

[25] X. Yao, B. V. Durme, C. Callison-Burch, P. Clark, Answer extraction as sequence tagging with tree edit distance, in: HLT-NAACL, The Association for Computer Linguistics, 2013, pp. 858–867.

[26] C. Shah, J. Pomerantz, Evaluating and predicting answer quality in community qa, in: Proceedings of the 33rd International ACM SIGIR Conference on Research and Development in Information Retrieval, SIGIR '10, 2010, pp. 411–418.

[27] T. Mikolov, K. Chen, G. Corrado, J. Dean, Efficient estimation of word representations in vector space, CoRR abs/1301.3781.

[28] Socher R, Lin C, Manning C, et al. Parsing natural scenes and natural language with recursive neural networks[C]. Proceedings of International Conference on Machine Learning. Haifa, Israel: Omnipress, 2011: 129-136.

[29] A. Graves, Generating sequences with recurrent neural networks, CoRR abs/1308.0850.

[30] Kalchbrenner N, Grefenstette E, Blunsom P. A Convolutional Neural Network for Modelling Sentences[C]. Proceedings of ACL. Baltimore and USA: Association for Computational Linguistics, 2014: 655-665.

[31] Bahdanau D, Cho K, Bengio Y. Neural machine translation by jointly learning to align and translate [J]. arXiv, 2014.

[32] Sutskever I, Vinyals O, Le Q V. Sequence to Sequence Learning with Neural Networks[M]. Advances in Neural Information Processing Systems 27. 2014: 3104-3112.

[33] Socher R, Pennington J, Huang E H, et al. Semi-supervised recursive auto encoders for predicting sentiment distributions[C]. EMNLP 2011.

[34] Tang D, Wei F, Yang N, et al. Learning Sentiment-Specific Word Embedding for Twitter Sentiment Classification[C]. Proceedings of the 52nd Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers). Baltimore, Maryland: Association for Computational Linguistics, 2014: 1555-1565.

[35] Li J, Luong M T, Jurafsky D. A Hierarchical Neural Autoencoder for Paragraphs and Documents[C]. Proceedings of ACL. 2015.

[36] Kim Y. Convolutional Neural Networks for Sentence Classification[C]. Proceedings of the 2014 Conference on Empirical Methods in Natural Language Processing (EMNLP). Doha, Qatar: Association for Computational Linguistics, 2014: 1746–1751.

[37] Zeng D, Liu K, Lai S, et al. Relation Classification via Convolutional Deep Neural Network[C]. Proceedings of COLING 2014, the 25th International Conference on Computational Linguistics: Technical Papers. Dublin, Ireland: Association for Computational Linguistics, 2014: 2335–2344.

[38] L. Yu, K. M. Hermann, P. Blunsom, and S. Pulman. Deep learning for answer sentence selection. CoRR, 2014.

[39] B. Hu, Z. Lu, H. Li, Q. Chen, Convolutional neural network architectures for matching natural language sentences, in: Z. Ghahramani, M. Welling, C. Cortes, N. D. Lawrence, K. Q. Weinberger (Eds.), NIPS, 2014, pp. 2042–2050.

[40] A. Severyn, A. Moschitti, Learning to rank short text pairs with convolutional deep neural networks, in: R. A. Baeza-Yates, M. Lalmas, A. Moffat, B. A. Ribeiro-Neto (Eds.), SIGIR, ACM, 2015, pp. 373–382.

[41] Wen-tau Yih, Xiaodong He, and Christopher Meek. 2014. Semantic parsing for single-relation question answering. In Proceedings of the 52nd Annual Meeting of the Association for Computational Linguistics, pages 643–648. Association for Computational Linguistics.

[42] Li Dong, Furu Wei, Ming Zhou, and Ke Xu. 2015. Question Answering over Freebase with Multi-Column Convolutional Neural Networks. In Proceedings of the 53rd Annual Meeting of the Association for Computational Linguistics (ACL) and the 7th International Joint Conference on Natural Language Processing.

[43] Hochreiter S, Bengio Y, Frasconi P, et al. Gradient flow in recurrent nets: the difficulty of learning long-term dependencies[M]. A Field Guide to Dynamical Recurrent Neural Networks. New York, NY, USA: IEEE Press, 2001.

[44] Hochreiter S, Schmidhuber J. Long Short-Term Memory[J]. Neural Comput., 1997, 9(8): 1735-1780.

[45] Graves A. Generating Sequences With Recurrent Neural Networks[J]. CoRR, 2013, abs/1308.0850.

[46] Chung J, Gülçehre Ç, Cho K, et al. Gated Feedback Recurrent Neural Networks[C]. Proceedings of the 32nd International Conference on Machine Learning (ICML-15). Lille, France: JMLR Workshop and Conference Proceedings, 2015: 2067-2075.

[47] D. Wang, E. Nyberg, A long short-term memory model for answer sentence selection in question answering, in: ACL, The Association for Computer Linguistics, 2015, pp. 707–712.

[48] Malinowski M, Rohrbach M, Fritz M. Ask your neurons: A neural-based approach to answering questions about images[C]//Proceedings of the IEEE International Conference on Computer Vision. 2015: 1-9.

[49] Gao H, Mao J, Zhou J, et al. Are You Talking to a Machine? Dataset and Methods for Multilingual Image Question[C]//Advances in Neural Information Processing Systems. 2015: 2287-2295.

[50] Sun M S. Natural Language Processing Based on Naturally Annotated Web Resources [J]. Journal of Chinese Information Processing, 2011, 25(6): 26-32.

[51] Hu B, Chen Q, Zhu F. LCSTS: a large scale chinese short text summarization dataset[J]. arXiv preprint arXiv:1506.05865, 2015.

[52] Shang L, Lu Z, Li H. Neural Responding Machine for Short-Text Conversation[C]. Proceedings of the 53rd Annual Meeting of the Association for Computational Linguistics and the 7th International Joint Conference on Natural Language Processing. Beijing, China: Association for Computational Linguistics, 2015: 1577-1586.

[53] O. Vinyals, and Q. V. Le. A Neural Conversational Model. arXiv:1506.05869, 2015.

[54] Kumar A, Irsoy O, Su J, et al. Ask me anything: Dynamic memory networks for natural language processing[J]. arXiv preprint arXiv:1506.07285, 2015.

[55] Sukhbaatar S, Weston J, Fergus R. End-to-end memory networks[C]//Advances in Neural Information Processing Systems. 2015: 2431-2439.

Original link: https://yq.aliyun.com/articles/58745#
