Neural networks are inspired by the biological neural networks of the brain. But do our brains learn the same way a computer does in deep learning? Answering this question could lead to more powerful deep learning models and, at the same time, help us better understand human intelligence.

On December 5th, Blake A. Richards, a CIFAR researcher at the University of Toronto, and his colleagues published an article in eLife titled "Towards deep learning with segregated dendrites". They describe an algorithm that models how deep learning could work in the brain. The network they built indicates that certain mammalian neurons have the right shape and electrical properties to make them suitable for deep learning.

Moreover, their simulations model learning in a way that is surprisingly close to real biology. This offers hope of better understanding how our ability to learn evolved. If the connection between neurons and deep learning is confirmed, it could lead to better brain-computer interfaces and a range of new capabilities, from treating various diseases to augmenting intelligence. The possibilities seem endless.

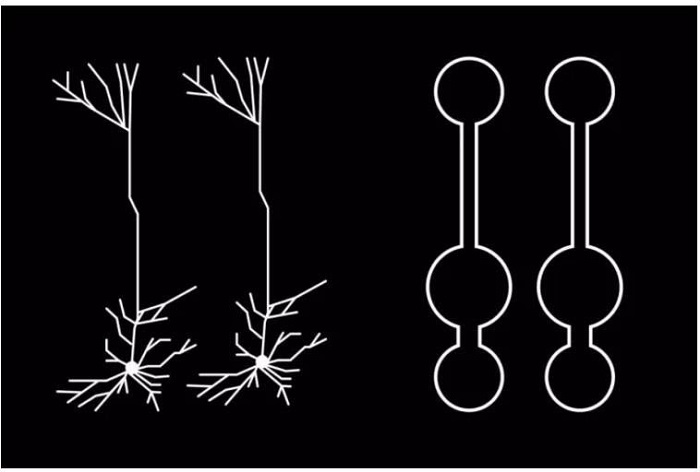

This research was done by Richards and his graduate student Jordan Guerguiev, along with DeepMind's Timothy Lillicrap. The neurons modeled in the study are pyramidal neurons from the mouse cortex, with a focus on their dendrites. The cortex is responsible for higher-order functions such as sensation, movement, spatial reasoning, consciousness and, in humans, language. Dendrites are the branch-like projections that extend from a neuron. Under the microscope, they look somewhat like the branches of a tree. Dendrites are the input channels of a neuron: they deliver electrical signals from other neurons to the cell body.

The "multi-compartment neural network" model constructed and studied in this paper: a pyramidal neuron from the mouse primary visual cortex on the left and a simplified model neuron on the right. Source: CIFAR

Using knowledge of this neuronal structure, Richards and Guerguiev built a model called the "multi-compartment neural network model". In this network, neurons receive signals in separate compartments. Because the signals are kept apart in this way, different layers of simulated neurons can cooperate to achieve deep learning.
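To make the idea of segregated inputs more concrete, here is a minimal Python sketch of a single toy neuron with two compartments. It is an illustration only and does not reproduce the equations of the paper: the class name `TwoCompartmentNeuron`, the method names, the sigmoid nonlinearity and the learning rate are all assumptions made for this example. Feedforward input arrives at one compartment and drives the output, while feedback arrives at a separate compartment and only acts as a teaching signal.

```python
import math


def sigmoid(x):
    """Squash a potential into a rate between 0 and 1."""
    return 1.0 / (1.0 + math.exp(-x))


class TwoCompartmentNeuron:
    """Toy neuron (hypothetical) with segregated input compartments.

    basal compartment:  receives feedforward input and drives the output.
    apical compartment: receives feedback and is used only for learning.
    """

    def __init__(self, basal_weights, apical_weights):
        self.basal_weights = list(basal_weights)
        self.apical_weights = list(apical_weights)

    def basal_potential(self, inputs):
        return sum(w * x for w, x in zip(self.basal_weights, inputs))

    def apical_potential(self, feedback):
        return sum(w * f for w, f in zip(self.apical_weights, feedback))

    def firing_rate(self, inputs):
        # Feedback does not enter here: the two signals stay separate.
        return sigmoid(self.basal_potential(inputs))

    def learn(self, inputs, feedback_forward, feedback_target, lr=0.1):
        # Teaching signal: change in apical activity between a phase
        # without a target and a phase with a target (an assumption
        # of this sketch, not the paper's exact rule).
        signal = (sigmoid(self.apical_potential(feedback_target))
                  - sigmoid(self.apical_potential(feedback_forward)))
        for i, x in enumerate(inputs):
            self.basal_weights[i] += lr * signal * x
        return signal


neuron = TwoCompartmentNeuron(basal_weights=[0.0, 0.0], apical_weights=[1.0])
inputs = [1.0, 1.0]
rate_before = neuron.firing_rate(inputs)
teaching_signal = neuron.learn(inputs, feedback_forward=[0.0], feedback_target=[2.0])
rate_after = neuron.firing_rate(inputs)
print(rate_before, teaching_signal, rate_after)
```

In this sketch the feedback never mixes with the feedforward signal when the output is computed. It only changes the feedforward weights, which is the property that lets several layers be trained together.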

The results indicate that, when recognizing handwritten digits, a multi-layer network performs significantly better than a single-layer network.

Algorithms that use multi-layer network structures to identify higher-order representations are at the core of deep learning. This suggests that mouse brain neurons may be able to do deep learning just like artificial neurons. "It's just a set of simulations, so it does not accurately reflect what the brain is doing, but if the brain can use the algorithms that AI uses, that's enough to prove that we can conduct further experiments," said Richards.

In the early 2000s, Richards and Lillicrap took Hinton's classes at the University of Toronto. They were convinced that the deep learning model reflects, to some extent, the mechanics of the human brain. However, there were several obstacles to validating this idea at the time. First, it was not yet certain whether deep learning could reach the level of complexity of the human brain. Second, deep learning algorithms typically violated biological facts that neuroscientists had already demonstrated.

The patterns of activity that occur in deep learning networks are similar to the patterns seen in the human brain. However, some features of deep learning seem to be incompatible with the way the human brain works. Moreover, the neurons in artificial networks are much simpler than biological neurons.

Now, Richards and other researchers are actively seeking ways to bridge the gap between neuroscience and artificial intelligence. The paper builds on a study by Yoshua Bengio's team on how to train neural networks in a more biologically plausible way.

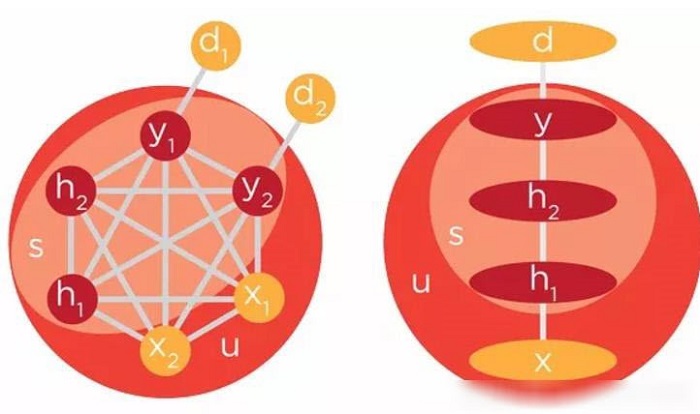

Equilibrium propagation diagram: how information flows through a neural network trained with equilibrium propagation.

In the figure above, both backpropagation (BP) and equilibrium propagation can be applied to the simple, layer-by-layer network on the right. The complex network on the left is more similar in structure to a biological neural network, and BP is not suitable for it. This is one of the reasons why deep learning is generally considered unable to reflect the mechanisms of the human brain. Equilibrium propagation, however, can be applied to networks with such multiple connections.

Bengio and his graduate student Benjamin Scellier invented a new neural network training method, known as "equilibrium propagation", as an alternative to the commonly used BP. Equilibrium propagation uses a single feedback loop and a single type of computation both for inference and for propagating errors backward. The process of equilibrium propagation may therefore be more similar to the learning process in the brain's biological neural circuits.
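As a rough sketch of how this works, equilibrium propagation is usually described in terms of an energy function. The symbols below do not appear in this article and are assumptions borrowed from the usual presentation of the method: $\theta$ stands for the network weights, $s$ for the neuron states, $E$ for the network's energy, $C$ for the cost that measures the output error, and $\beta$ for a small "nudging" strength.

```latex
\begin{aligned}
F(\theta, \beta, s) &= E(\theta, s) + \beta\, C(s) \\
s^{0} &: \ \frac{\partial F}{\partial s}(\theta, 0, s^{0}) = 0 \quad \text{(free phase)} \\
s^{\beta} &: \ \frac{\partial F}{\partial s}(\theta, \beta, s^{\beta}) = 0 \quad \text{(nudged phase)} \\
\Delta\theta &\propto -\frac{1}{\beta}\left( \frac{\partial F}{\partial \theta}(\theta, \beta, s^{\beta}) - \frac{\partial F}{\partial \theta}(\theta, 0, s^{0}) \right)
\end{aligned}
```

The network first settles to a fixed point $s^{0}$ with no error signal, then settles again to $s^{\beta}$ while its outputs are gently pushed toward the target. Both phases run the same settling dynamics, and the weight change depends only on the difference between the two fixed points, which is why no separate backward pass is needed.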

The study also uses algorithms developed by Timothy Lillicrap of DeepMind to further relax some of the training rules for neural networks. (Incidentally, Timothy Lillicrap is also one of the authors of the paper on AlphaZero, DeepMind's most powerful general-purpose AI.)
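The article does not name these algorithms. Assuming it refers to feedback alignment, the technique Lillicrap and colleagues proposed, the relaxed rule is that the backward pass no longer has to reuse the forward weights: errors are sent back through a fixed random matrix instead. The following Python sketch illustrates that idea on a tiny regression task. The function name `train_feedback_alignment`, the network size, the learning rate and the toy data are all assumptions chosen for illustration, not details from the paper.

```python
import math
import random


def train_feedback_alignment(samples, hidden_size=4, lr=0.05, epochs=200, seed=0):
    """Train a 1-hidden-layer network with feedback alignment (toy sketch).

    Returns the mean loss before training and after every epoch.
    """
    rng = random.Random(seed)
    n_in = len(samples[0][0])
    # Forward weights: input -> hidden (W1) and hidden -> output (W2).
    W1 = [[rng.uniform(-0.5, 0.5) for _ in range(n_in)] for _ in range(hidden_size)]
    W2 = [0.0] * hidden_size
    # Fixed random feedback weights. They are never updated and stand in
    # for W2 when the error is sent back to the hidden layer.
    B = [rng.uniform(-0.5, 0.5) for _ in range(hidden_size)]

    def forward(x):
        h = [math.tanh(sum(w * xi for w, xi in zip(row, x))) for row in W1]
        y = sum(w * hi for w, hi in zip(W2, h))
        return h, y

    def mean_loss():
        return sum(0.5 * (forward(x)[1] - t) ** 2 for x, t in samples) / len(samples)

    losses = [mean_loss()]
    for _ in range(epochs):
        for x, t in samples:
            h, y = forward(x)
            e = y - t  # output error
            for j in range(hidden_size):
                # Backpropagation would use W2[j] * e here;
                # feedback alignment uses the random B[j] * e instead.
                delta_h = B[j] * e * (1.0 - h[j] ** 2)
                W2[j] -= lr * e * h[j]
                for i in range(n_in):
                    W1[j][i] -= lr * delta_h * x[i]
        losses.append(mean_loss())
    return losses


# Toy data: a simple linear target on a small grid of inputs.
grid = [-1.0, -0.5, 0.0, 0.5, 1.0]
samples = [((a, b), 0.5 * a - 0.3 * b) for a in grid for b in grid]
losses = train_feedback_alignment(samples)
print(f"loss before: {losses[0]:.4f}, after: {losses[-1]:.4f}")
```

The only difference from ordinary backpropagation is the single line that computes `delta_h`. This matters biologically because a real neuron has no obvious way to know the exact forward weights of the neurons downstream of it.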

The paper also incorporates Matthew Larkum's study of the structure of cortical neurons. By combining neuroscience findings with existing algorithms, Richards' team created a better, more realistic algorithm to model the learning process in the brain.

Cortical pyramidal neurons are just one of many cell types in the brain. Richards says future research should simulate different types of brain cells and study how they interact to enable deep learning. In the long run, he hopes researchers can overcome major challenges, such as learning through experience without receiving feedback.

Richards thinks that in the next 10 years we may see a virtuous circle between neuroscience and artificial intelligence. Upcoming discoveries in neuroscience will help us develop new artificial intelligence technologies. In turn, AI will help us make sense of the experimental data from neuroscientific research.

Alibaba Cloud, one of the world's largest cloud computing companies, has always been at the forefront of Big Data and AI technologies. At the Mobile World Congress 2018 in Barcelona, Spain, Alibaba Cloud launched eight new Big Data and artificial intelligence (AI) products. These products are intended to meet enterprises' surging demand for powerful and reliable cloud computing services as well as advanced AI solutions. Read more about the launch in the official press release.

Original article:

https://mp.weixin.qq.com/s/li_z4EVy3iztU3Hp01cFew
