Sharding is a common method for distributing data across multiple machines to meet the very real demands of big data growth. In this article, I focus on the routing policy for write operations in a MongoDB sharded cluster and discuss some of the challenges that arise after the config server is converted into a replica set.

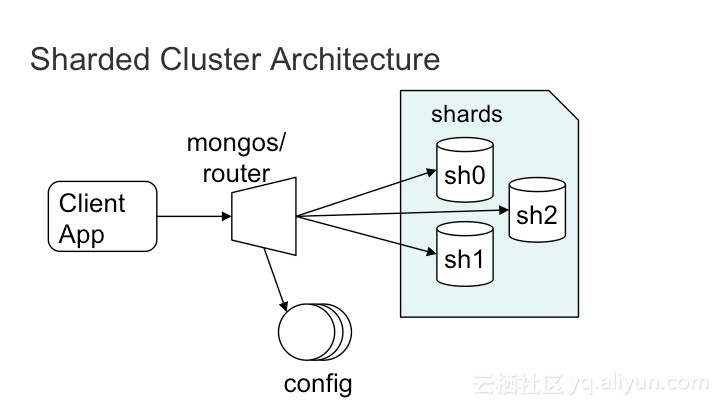

To set the tone, here’s a basic visual illustration of a MongoDB Sharded Cluster:

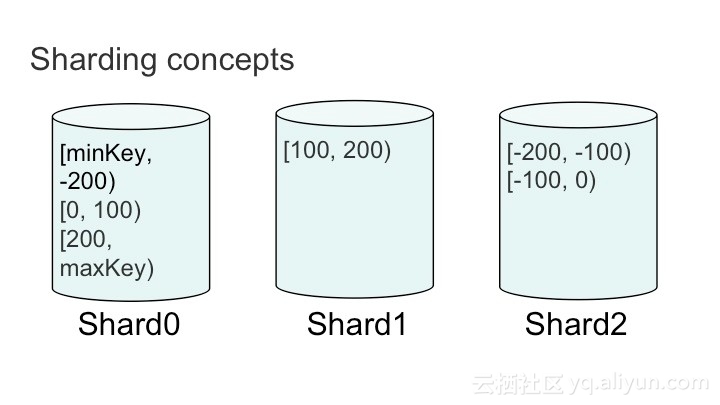

In a sharded cluster, a user can distribute cluster data as chunks to multiple shards. As shown in the following figure, cluster data is divided by shardKey into the [minKey, -200), [-200, -100), [-100, 0), [0, 100), [100, 200), [200, maxKey) chunk ranges and stored in shard0, shard1, and shard2.

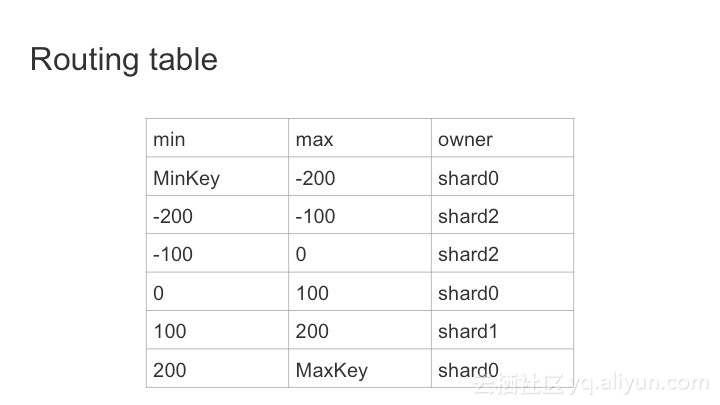

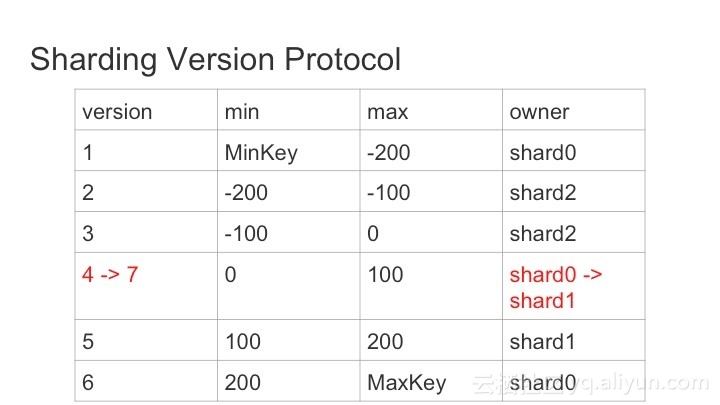

The following figure shows a route table similar to that of the config server.

When a new document is written, Mongos fetches the route table from the config server and keeps a local copy. For example, a document with {shardKey: 150} falls in the [100, 200) chunk, so the request is routed to Shard1 and the data is written there.
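The lookup described above can be sketched as a search over sorted chunk ranges. This is only an illustration, not how Mongos is implemented. The article places [0, 100) on shard0 and routes key 150 to shard1; every other chunk-to-shard assignment, and the names `ROUTE_TABLE` and `route`, are assumptions made for the example.

```python
from bisect import bisect_right

MIN_KEY = float("-inf")  # stands in for MongoDB's minKey
MAX_KEY = float("inf")   # stands in for MongoDB's maxKey

# (lower bound inclusive, upper bound exclusive, owning shard), sorted by lower bound.
# Only [0, 100) -> shard0 and [100, 200) -> shard1 come from the article;
# the remaining assignments are assumed for illustration.
ROUTE_TABLE = [
    (MIN_KEY, -200, "shard0"),
    (-200, -100, "shard1"),
    (-100, 0, "shard2"),
    (0, 100, "shard0"),
    (100, 200, "shard1"),
    (200, MAX_KEY, "shard2"),
]

LOWER_BOUNDS = [low for low, _, _ in ROUTE_TABLE]


def route(shard_key):
    """Return the shard that owns the chunk containing shard_key."""
    # The owning chunk is the last one whose lower bound is <= shard_key.
    index = bisect_right(LOWER_BOUNDS, shard_key) - 1
    low, high, shard = ROUTE_TABLE[index]
    assert low <= shard_key < high
    return shard


print(route(150))
```

Because the ranges are contiguous and half-open, every key maps to exactly one chunk, and a boundary value such as -200 belongs to the chunk that starts at it.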

After obtaining the route table from the config server, Mongos stores it in local memory so that it does not need to fetch it again for every write or query request. In a sharded cluster, Mongos automatically migrates chunks among shards for load balancing (you can also run the moveChunk command to migrate chunks manually). After a chunk is migrated, the route table cached by Mongos becomes stale, and a request may be routed to the wrong shard. How is this problem solved?

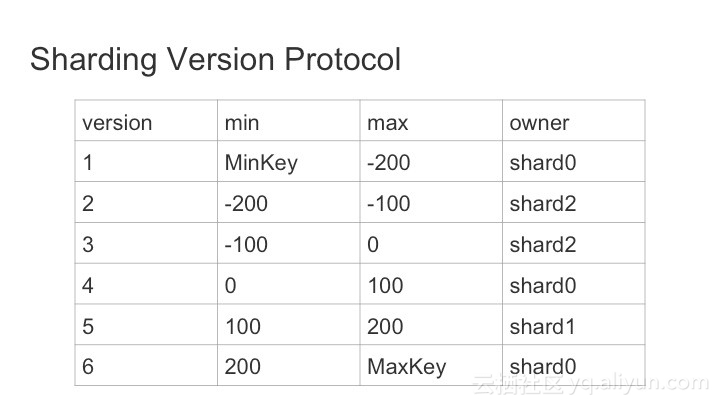

MongoDB handles this by adding a version to the route table. Let’s assume that the initial route table records 6 chunks and the route table version is v6 (the maximum version value among the shards).

After the chunks in the [0, 100) range are migrated from shard0 to shard1, the version value increases by 1 to 7. This information is recorded in the shard and updated to the config server.

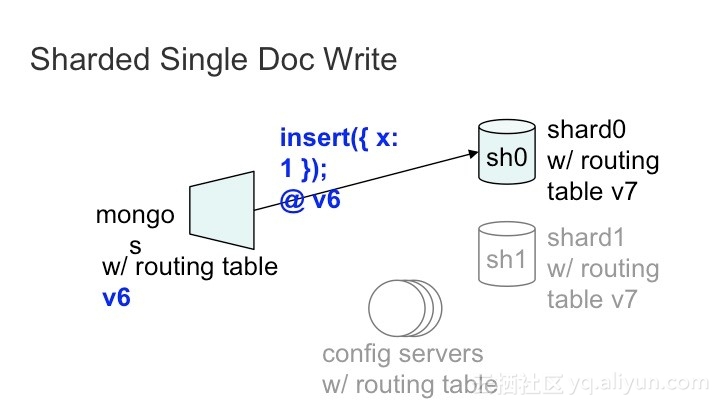

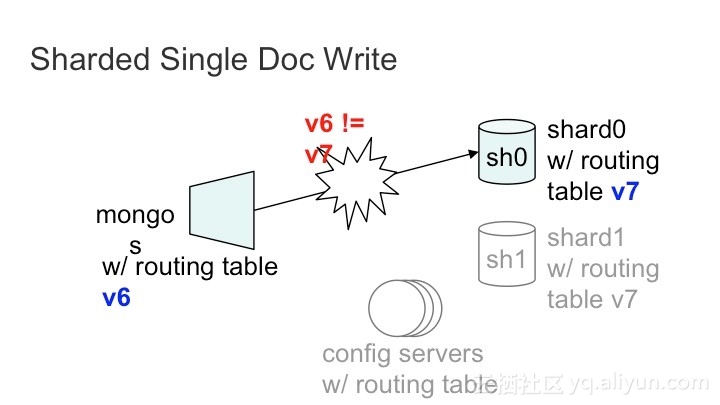

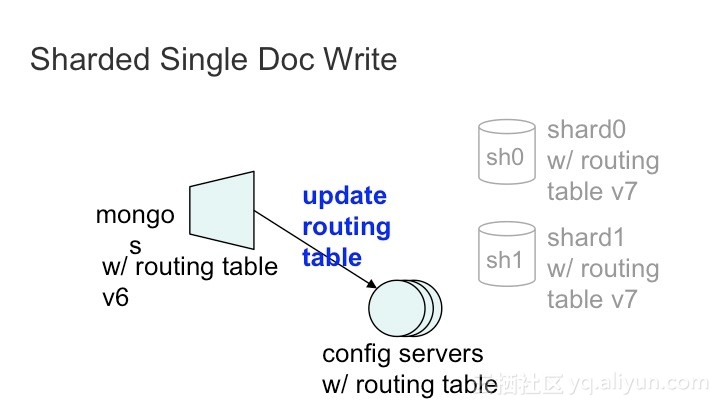

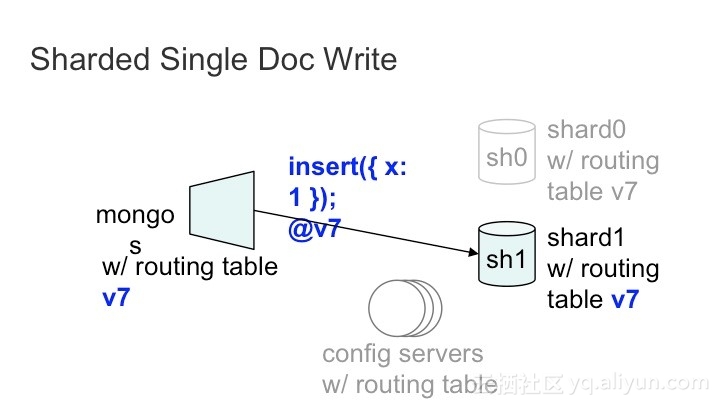

When Mongos sends a write request to a shard, the request carries the route table version that Mongos holds. If the shard finds that its own route table version is later than the one in the request, it infers that the route table has changed and that the copy held by Mongos is stale. In this case, Mongos obtains the latest route table from the config server and routes the request again accordingly.
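A minimal simulation of this exchange, using the single integer version from the simplified description, might look as follows. The classes `ConfigServer`, `Shard`, and `Router` and the exception `StaleRouteTable` are hypothetical names for this sketch and do not correspond to MongoDB internals. The single retry is also an assumption.

```python
class StaleRouteTable(Exception):
    """Signals that the router's route table version is older than the shard's."""


class ConfigServer:
    def __init__(self, chunks, version):
        self.chunks = list(chunks)  # (low, high, shard_name) tuples
        self.version = version


class Shard:
    def __init__(self, name, version):
        self.name = name
        self.version = version
        self.documents = []

    def write(self, document, router_version):
        # Reject the write if the router is working from an older route table.
        if router_version < self.version:
            raise StaleRouteTable(self.name)
        self.documents.append(document)


class Router:
    """Stands in for Mongos: caches the route table, refreshes it when stale."""

    def __init__(self, config, shards):
        self.config = config
        self.shards = shards
        self.refreshes = 0
        self._load()

    def _load(self):
        self.chunks = list(self.config.chunks)
        self.version = self.config.version

    def write(self, document):
        for _ in range(2):  # first attempt, then one retry after a refresh
            key = document["shardKey"]
            owner = next(s for low, high, s in self.chunks if low <= key < high)
            try:
                self.shards[owner].write(document, self.version)
                return owner
            except StaleRouteTable:
                self.refreshes += 1
                self._load()
        raise RuntimeError("route table still stale after refresh")


def demo():
    config = ConfigServer([(0, 100, "shard0"), (100, 200, "shard1")], version=6)
    shards = {"shard0": Shard("shard0", 6), "shard1": Shard("shard1", 6)}
    router = Router(config, shards)  # caches the v6 table

    # Chunk [0, 100) moves from shard0 to shard1; the version becomes 7.
    config.chunks = [(0, 100, "shard1"), (100, 200, "shard1")]
    config.version = 7
    shards["shard0"].version = 7
    shards["shard1"].version = 7

    owner = router.write({"shardKey": 50})
    return owner, router.version, router.refreshes
```

In `demo()`, the first attempt goes to shard0 with version 6 and is rejected, the router reloads the v7 table, and the retry lands on shard1.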

Here are a few diagrams to illustrate this:

The version numbers above are simplified to introduce the principle. In MongoDB 3.2, a version is expressed as a (majorVersion, minorVersion) 2-tuple. After a chunk is split, the values of all the chunk minor versions increase. When a chunk is migrated between shards, the major version of the migrated chunk increases on the destination shard, and the major version of one chunk selected on the source shard increases as well. As a result, Mongos learns that the version has increased whenever it accesses either the source or the destination shard.
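The effect of the 2-tuple can be shown with a small model. It is a simplification under stated assumptions: a split is taken to bump the minor version of the chunks it produces, a shard's version is taken to be the maximum version among its chunks, and the names `Chunk`, `split`, `migrate`, and `shard_version` are invented for the sketch.

```python
from dataclasses import dataclass


@dataclass
class Chunk:
    low: int
    high: int
    shard: str
    version: tuple  # (major, minor)


def collection_version(chunks):
    return max(c.version for c in chunks)


def shard_version(chunks, shard):
    # Assumed here: a shard's version is the highest version among its chunks.
    return max(c.version for c in chunks if c.shard == shard)


def split(chunks, chunk, at):
    """Split one chunk in two; only minor versions move forward."""
    major, minor = collection_version(chunks)
    chunks.remove(chunk)
    chunks.append(Chunk(chunk.low, at, chunk.shard, (major, minor + 1)))
    chunks.append(Chunk(at, chunk.high, chunk.shard, (major, minor + 2)))


def migrate(chunks, chunk, to_shard):
    """Move a chunk; bump the major version on both destination and source."""
    major, _ = collection_version(chunks)
    source = chunk.shard
    chunk.shard = to_shard
    chunk.version = (major + 1, 0)
    remaining = [c for c in chunks if c.shard == source]
    if remaining:
        # One chunk left on the source is bumped too, so the source shard
        # also reports a newer version to routers with a stale table.
        remaining[0].version = (major + 1, 1)


def demo():
    chunks = [
        Chunk(0, 100, "shard0", (1, 0)),
        Chunk(100, 200, "shard0", (1, 1)),
        Chunk(200, 300, "shard1", (1, 2)),
    ]
    cached = collection_version(chunks)  # what a router saw before the move
    migrate(chunks, chunks[0], "shard1")
    return cached, shard_version(chunks, "shard0"), shard_version(chunks, "shard1")
```

Tuples compare element by element in Python, so any version with a higher major value is newer regardless of the minor value. In `demo()`, both shard versions end up greater than the cached one, which is why a router with a stale table is detected no matter which of the two shards it contacts.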

Replica Set Challenges on the Config Server

In MongoDB 3.2, the mirrored nodes of the config server are replaced by a replica set. This introduces some implementation challenges for the sharded cluster because of how replication behaves. Here are the two main issues:

Issue 1: the data on the original primary node of a replica set may be rolled back. For Mongos, this means that the obtained route table is rolled back.

Issue 2: the data on a secondary node of a replica set may be older than that on the primary node. If data is read only from the primary node, read capacity cannot be scaled out. If data is read from a secondary node, the data obtained may not be the latest. For Mongos, this means it may obtain an outdated route table. In the scenario described above, Mongos finds that its route table is outdated and therefore requests the latest route table from the config server. If that request reaches a secondary node that has not yet caught up, the route table may not be refreshed successfully.

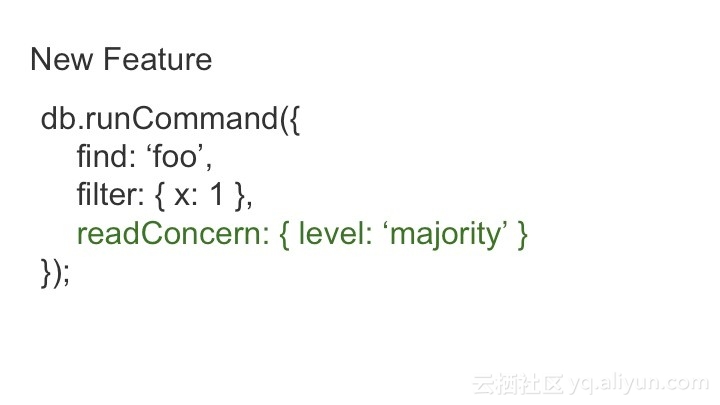

To address the first challenge, MongoDB 3.2 adds the readConcern feature, which supports the local and majority levels. The local level behaves like an ordinary read, while the majority level ensures that the data returned to an application has been successfully written to a majority of the replica set members.

If data has been successfully written to a majority of the replica set members, it will not be rolled back. Therefore, when Mongos reads from the config server, it sets readConcern to the majority level so that the data it obtains cannot be rolled back.
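The difference between the two levels can be illustrated with a toy model of a three-member replica set. This is a sketch of the idea only. The log is a plain list, `applied_positions` holds how many entries each member has applied, and the function names are assumptions, not MongoDB APIs.

```python
def majority_commit_point(applied_positions):
    """Return how many log entries a majority of members have applied."""
    ordered = sorted(applied_positions, reverse=True)
    majority = len(ordered) // 2 + 1
    # The majority-th highest position is reached by at least `majority` members.
    return ordered[majority - 1]


def read(primary_log, applied_positions, read_concern):
    """Return the entries visible on the primary at the given level."""
    if read_concern == "local":
        return list(primary_log)
    if read_concern == "majority":
        return primary_log[:majority_commit_point(applied_positions)]
    raise ValueError(f"unsupported read concern: {read_concern}")


# The primary has applied both route table updates; the secondaries only the first.
log = ["route table v6", "route table v7"]
positions = [2, 1, 1]

print(read(log, positions, "local"))
print(read(log, positions, "majority"))
```

With positions `[2, 1, 1]`, a local read sees the v7 entry even though it exists on the primary alone and could still be rolled back, while a majority read stops at v6. Once a second member applies the v7 entry, the majority read returns it as well.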

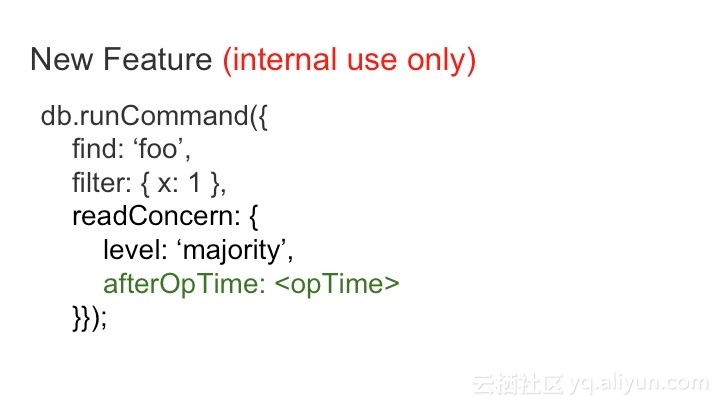

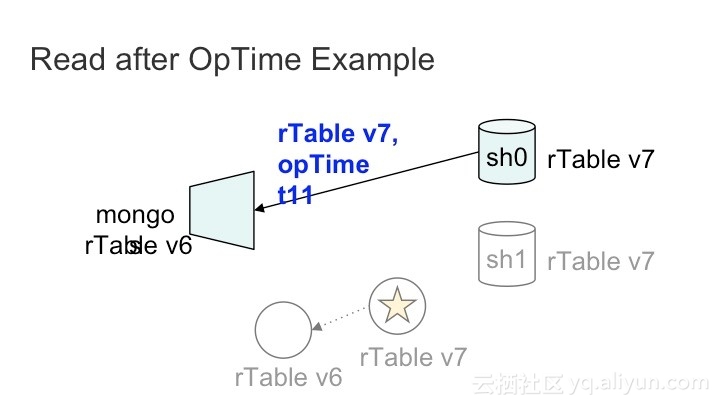

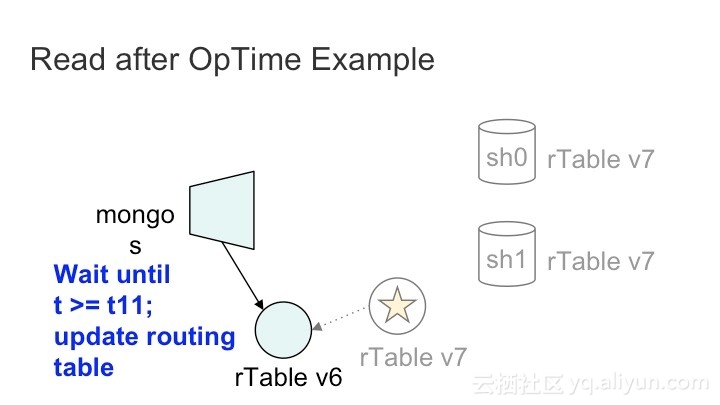

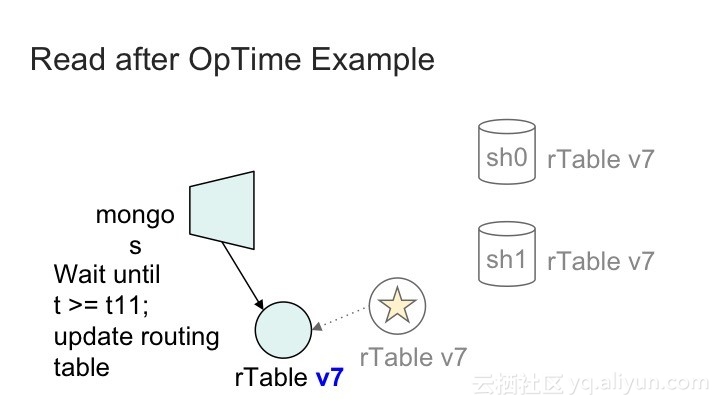

To address the second challenge, MongoDB adds the afterOpTime parameter on top of the majority level. Currently, this parameter applies within a sharded cluster. It specifies that the timestamp of the latest oplog entry on the queried node must be no earlier than the timestamp given by afterOpTime.

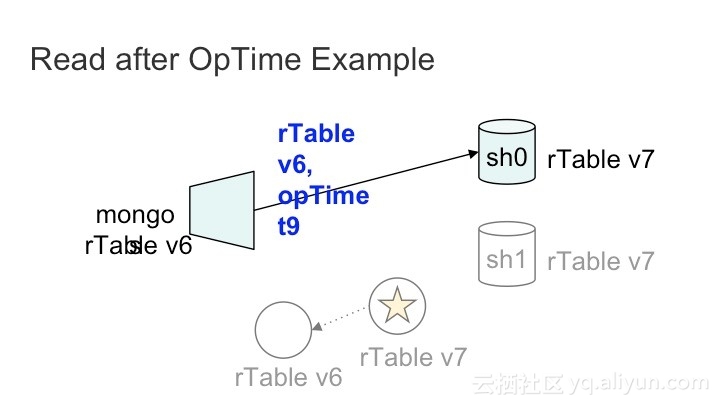

When Mongos sends a request that carries its route table version to a shard and the shard finds that its own route table version is later (a chunk migration has occurred), the shard instructs Mongos to obtain the latest route table. It also tells Mongos the optime at which the config server was updated after the chunk migration. When Mongos then sends its request to the config server, it sets readConcern to majority and passes that optime as afterOpTime, which prevents it from obtaining an outdated route table from a secondary node.

Here’s what that roughly looks like:
