By Lv Renqi.

If you haven't already, check out the first part of this two-part series before you continue. You can find this article here.

In this part, we continue straight into the technical details.

Consider the following factors when building a data channel: timeliness, reliability, throughput, security (such as channel security and auditing), compatibility of data formats as they evolve online, the choice between extract, transform, and load (ETL) and extract, load, and transform (ELT), and the choice between a unified and an exclusive mode (for example, GoldenGate is proprietary to Oracle and strongly coupled to it). Consider Kafka Connect first.

The Connector API consists of two parts, connectors and tasks, and follows the "divide and conquer" principle. A connector acts as a splitter, and tasks act as the executors of the split work.

1. A connector provides the following functions:

2. A task is used to move data into or out of Kafka.
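The split between connectors and tasks can be illustrated with a minimal sketch in plain Python. It does not use the Kafka Connect API; the names `split_into_task_configs` and `run_task`, the `tables` configuration key, and the round-robin assignment are assumptions made for illustration.

```python
from typing import Dict, List


def split_into_task_configs(tables: List[str], max_tasks: int) -> List[Dict[str, str]]:
    """Connector role: divide the work (here, table names) among at most max_tasks tasks."""
    if max_tasks < 1:
        raise ValueError("max_tasks must be at least 1")
    num_tasks = min(max_tasks, len(tables))
    groups: List[List[str]] = [[] for _ in range(num_tasks)]
    for i, table in enumerate(tables):
        groups[i % num_tasks].append(table)  # round-robin assignment (an assumption)
    return [{"tables": ",".join(group)} for group in groups]


def run_task(config: Dict[str, str]) -> List[str]:
    """Task role: do the actual copying for its share (simulated as strings)."""
    return [f"copied {table}" for table in config["tables"].split(",")]


configs = split_into_task_configs(
    ["orders", "users", "payments", "items", "logs"], max_tasks=2
)
results = [run_task(config) for config in configs]
print(configs)
```

The connector only decides who does what; each task then works on its own share independently, which is what lets the worker processes spread tasks across machines.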

Compared with using the Producer API and Consumer API of Kafka directly to implement the incoming and outgoing data logic, Kafka Connect has already done much of the fundamental work: the abstract definition of the Connector API, a rich set of connector implementations in the ecosystem, and the functions of the worker process, including RESTful APIs, configuration management, reliability, high availability, scalability, and load balancing. Experienced developers know that it takes one to two days to write code that reads data from Kafka and inserts it into a database, but several months to handle issues such as configuration, exceptions, RESTful APIs, monitoring, deployment, scaling, and failure. A connector handles many of these complex issues when it is used to replicate data. Connector development is also simplified by the offset tracking mechanism of Kafka. An offset indicates not only the message consumption progress in Kafka but also the data sourcing progress. This keeps behavior consistent when multiple connectors are used.

The source connector returns a record that contains the partitions and offsets of the source system. The worker process sends the record to Kafka. The worker process saves the offsets if Kafka returns an acknowledgement message to indicate that the record is saved successfully. The offset storage mechanism is pluggable, and offsets are stored in a topic, which, in my opinion, is a waste of resources. If a connector crashes and restarts, it can continue to process data from the most recent offset.
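The "save the offset only after the acknowledgement" rule can be sketched as a small simulation. This is plain Python, not the Kafka Connect API: `FakeKafka`, `run_worker`, and the in-memory `committed` dictionary are hypothetical stand-ins for the Kafka topic, the worker process, and the offset store.

```python
from typing import Dict, List, Optional, Tuple

Record = Tuple[str, int, str]  # (source partition, source offset, payload)


class FakeKafka:
    """Stand-in for a Kafka topic; fail_on simulates a send that is never acknowledged."""

    def __init__(self, fail_on: Optional[int] = None) -> None:
        self.log: List[str] = []
        self.fail_on = fail_on

    def send(self, record: Record) -> bool:
        if record[1] == self.fail_on:
            return False  # no acknowledgement
        self.log.append(record[2])
        return True


def run_worker(source: List[Record], kafka: FakeKafka, committed: Dict[str, int]) -> None:
    """Send records past the committed offset; save an offset only after the ack."""
    for partition, offset, payload in source:
        if offset <= committed.get(partition, -1):
            continue  # already delivered before the restart
        if not kafka.send((partition, offset, payload)):
            return  # simulate a crash before the offset is saved
        committed[partition] = offset


source = [("db.orders", i, f"row-{i}") for i in range(5)]
committed: Dict[str, int] = {}
kafka = FakeKafka(fail_on=3)
run_worker(source, kafka, committed)  # stops at offset 3
kafka.fail_on = None
run_worker(source, kafka, committed)  # restart: resumes from offset 3
print(kafka.log, committed)
```

Because the offset is saved only after a successful send, the restarted worker neither skips the failed record nor resends the ones already acknowledged.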

The distributed cluster that Alibaba Cloud built on the High-Speed Service Framework (HSF) is a large and unified cluster, with data centers distributed in Shenzhen, Zhangjiakou, and other regions, but with intra-data center clusters that do not converge with each other. Millions of clusters are supported by ConfigServer and other fundamental services. Preferring the local data center is not the default behavior. From the perspective of service governance, I think it is more reasonable to build a cluster that spans the entire city. Service integration and calling across data centers require special control and management.

The following architectural principles must be followed given practical limits such as high latency (the biggest technical issue), limited bandwidth, and the high cost of public network bandwidth.

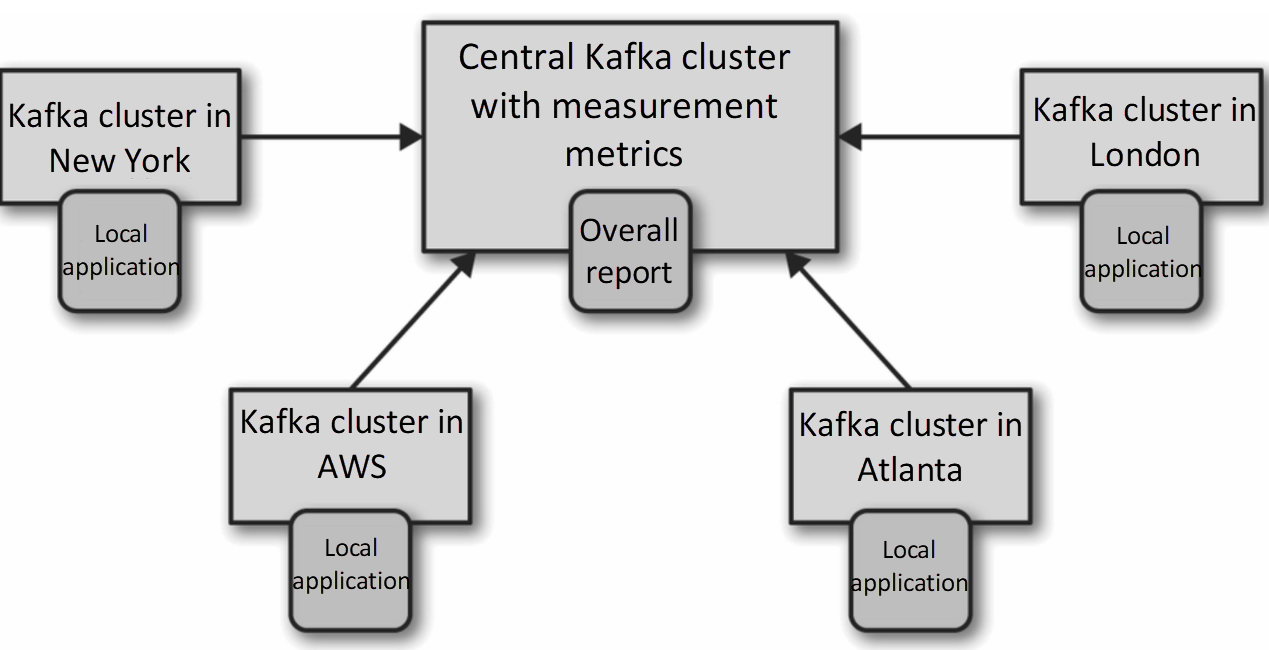

The hub and spoke architecture is applicable to the scenario where a central Kafka cluster corresponds to multiple local Kafka clusters, as shown in the following figure. Data is generated only in local data centers, and the data of each data center is mirrored to the central data center only once. An application that processes the data of only one data center can be deployed in the local data center, whereas an application that processes the data of multiple data centers must be deployed in the central data center. The hub and spoke architecture is easy to deploy, configure, and monitor because data replication is unidirectional, and consumers always read data from the same cluster. However, applications in one data center cannot access data in another data center.

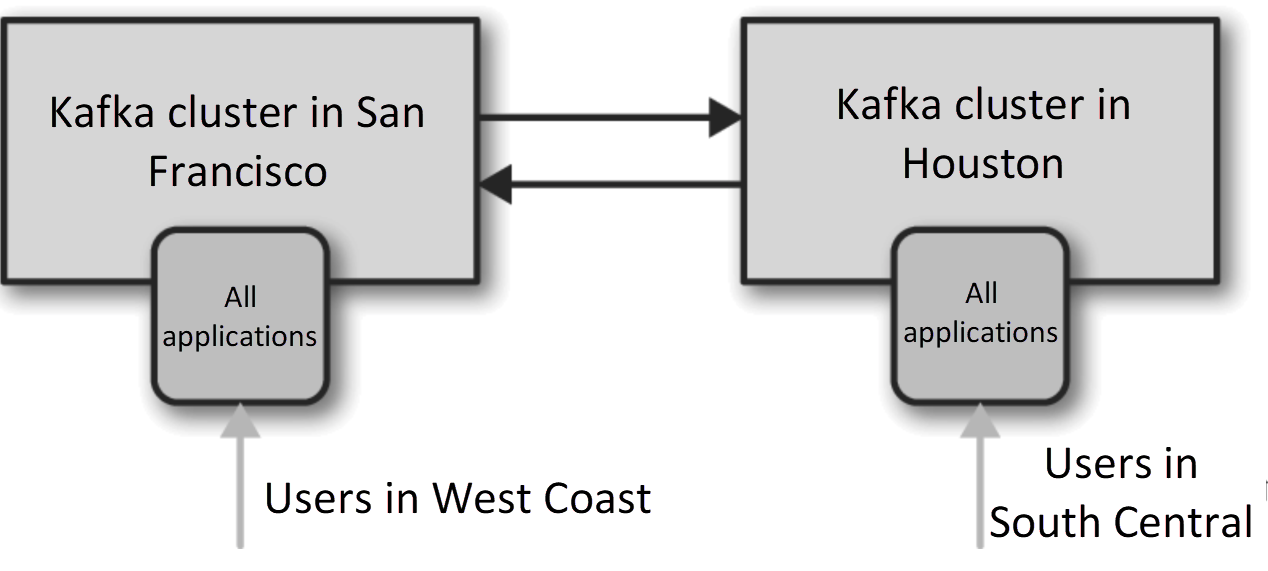

The active-active architecture serves users from a nearby data center, which brings performance benefits. It does not compromise functionality for data availability (such a compromise exists in the hub and spoke architecture). The active-active architecture supports redundancy and elasticity. Each data center has complete functions, so users can be redirected to another data center when one fails. Such redirection is network-based and provides the simplest and most transparent failover method. The major issue this architecture needs to solve is how to avoid conflicts when data is asynchronously read and updated in multiple locations. It must also stop the same data record from being mirrored back and forth endlessly. Data consistency is even more critical.
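One possible way to stop endless mirroring is to tag every record with the data center where it was first produced and to forward only locally produced records. The sketch below simulates this with in-memory lists. The `origin` tag, the cluster names, and `mirror_once` are assumptions for illustration, not a description of how MirrorMaker works.

```python
from typing import Dict, List, NamedTuple, Tuple


class Record(NamedTuple):
    origin: str  # data center where the record was first produced (assumed tag)
    value: str


clusters: Dict[str, List[Record]] = {
    "east": [Record("east", "e1")],
    "west": [Record("west", "w1")],
}
# Next position to read for each mirroring direction (source, target).
positions: Dict[Tuple[str, str], int] = {("east", "west"): 0, ("west", "east"): 0}


def mirror_once(src: str, dst: str) -> int:
    """Mirror new records from src to dst, skipping records that were mirrored into src."""
    log = clusters[src]
    start = positions[(src, dst)]
    copied = 0
    for record in log[start:]:
        if record.origin == src:  # only locally produced records are forwarded
            clusters[dst].append(record)
            copied += 1
    positions[(src, dst)] = len(log)
    return copied


first_round = mirror_once("east", "west") + mirror_once("west", "east")
second_round = mirror_once("east", "west") + mirror_once("west", "east")
print(first_round, second_round)
```

After the first round both clusters hold both records, and the second round copies nothing, because a record that arrived through mirroring is never sent back.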

We recommend that you use the active-active architecture if you can properly solve the conflicts that occur when data is asynchronously read and updated in multiple locations. This architecture is the most scalable, elastic, flexible, and cost-effective solution we know of. It is worth the effort to find ways to avoid circular replication, to route requests from the same user to the same data center, and to resolve conflicts. Active-active mirroring is bidirectional mirroring between every pair of data centers, which becomes costly when more than two data centers exist. With 5 data centers, at least 20 and sometimes up to 40 mirroring processes need to be maintained, and each process must be configured with redundancy for high availability.
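The figures of 20 and 40 follow from simple counting: each of the n data centers mirrors to the other n - 1, and redundancy multiplies the total. A minimal sketch, where the function name and the `redundancy` parameter are illustrative assumptions:

```python
def mirroring_processes(data_centers: int, redundancy: int = 1) -> int:
    """Count one-way mirroring processes for a full active-active mesh."""
    if data_centers < 0 or redundancy < 1:
        raise ValueError("data_centers must be >= 0 and redundancy >= 1")
    # Every ordered pair (source, target) with source != target needs a process.
    return data_centers * (data_centers - 1) * redundancy


print(mirroring_processes(5))                 # 20
print(mirroring_processes(5, redundancy=2))   # 40
```

The count grows quadratically with the number of data centers, which is why the operational cost of a full mesh rises quickly.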

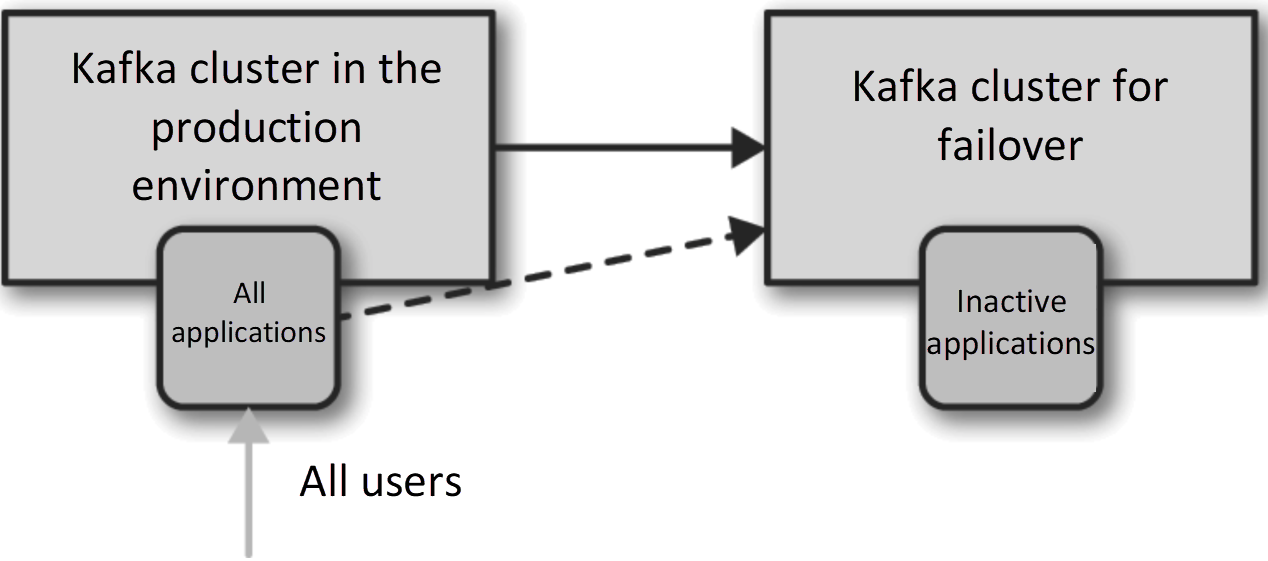

The active-standby architecture is easy to implement, but a cluster is wasted.

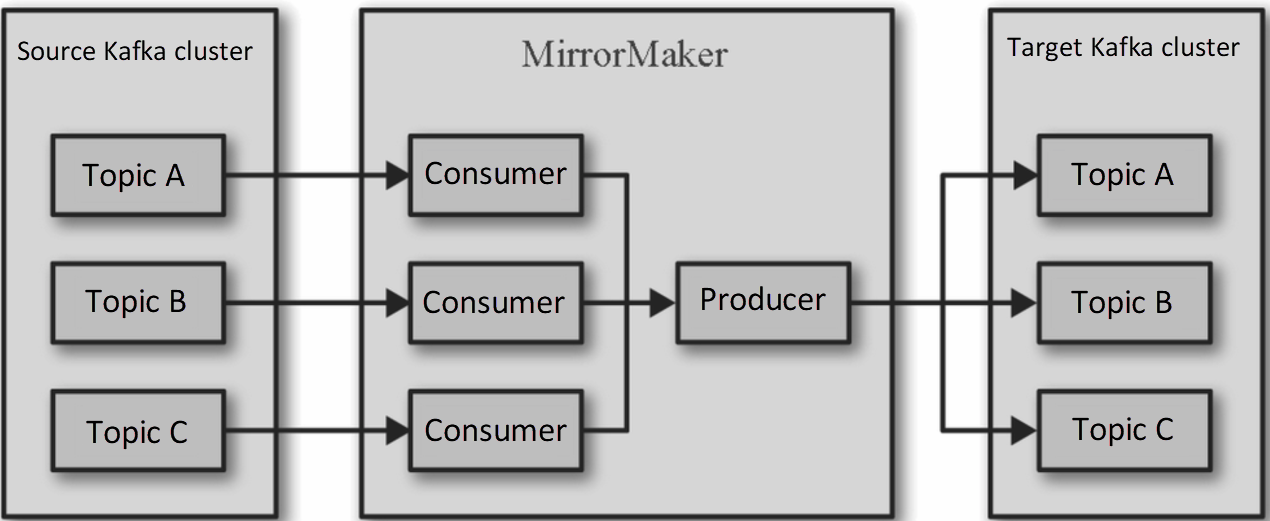

MirrorMaker is completely stateless, and its receive-and-forward mode is similar to the proxy mode, with performance determined by the threading model design.

Try to run MirrorMaker in the target data center. That is to say, if you want to send data from New York City to San Francisco, deploy MirrorMaker in the data center located in San Francisco. Long-distance external networks are less reliable than the internal networks of data centers. If a network partition occurs and the connection between data centers is interrupted, a consumer that cannot connect to a cluster is much safer than a producer that cannot connect to a cluster. A consumer that fails to connect cannot read data from the cluster, but the data is still retained in the Kafka cluster for a long time, without the risk of being lost. In contrast, data may be lost if MirrorMaker has read data before the network partition occurs but fails to produce the data to the target cluster. Therefore, remote reading is safer than remote writing.

Note: If traffic across data centers needs to be encrypted, we recommend that you deploy MirrorMaker in the source data center so that it reads local non-encrypted data and produces the data to the remote data center over SSL. This mitigates the performance problem because SSL is then used only by the producer, which is affected less by encryption than the consumer. Ensure that MirrorMaker does not commit any offset until it receives a valid replica acknowledgement message from the target broker, and that it immediately stops mirroring when the maximum number of retries is exceeded or the producer buffer overruns.
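The rule of committing only after an acknowledgement and stopping once retries run out can be sketched as follows. This is a plain-Python simulation, not MirrorMaker code: `Mirror`, `MirrorStopped`, and `make_sender` are hypothetical names, and a send that returns `False` stands in for a missing acknowledgement.

```python
from typing import Callable, List


class MirrorStopped(Exception):
    """Raised when mirroring must stop to avoid losing data."""


class Mirror:
    def __init__(self, source: List[str], send: Callable[[str], bool], max_retries: int) -> None:
        self.source = source
        self.send = send
        self.max_retries = max_retries
        self.committed = 0  # offset of the next record to mirror

    def run(self) -> None:
        while self.committed < len(self.source):
            record = self.source[self.committed]
            # One initial attempt plus max_retries retries; stops at the first ack.
            acked = any(self.send(record) for _ in range(self.max_retries + 1))
            if not acked:
                raise MirrorStopped(f"no ack for offset {self.committed}; stopping")
            self.committed += 1  # commit only after the acknowledgement


def make_sender(target: List[str], fail_times: int) -> Callable[[str], bool]:
    """Build a send function that fails fail_times times before succeeding."""
    state = {"remaining": fail_times}

    def send(record: str) -> bool:
        if state["remaining"] > 0:
            state["remaining"] -= 1
            return False
        target.append(record)
        return True

    return send


source = ["a", "b", "c"]
target: List[str] = []
mirror = Mirror(source, make_sender(target, fail_times=2), max_retries=3)
mirror.run()
print(target, mirror.committed)
```

If the target never acknowledges within the retry budget, `run` raises `MirrorStopped` and the committed offset stays where it was, so a later restart re-reads the same record from the source cluster instead of skipping it.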

Let us look at the concept of "prefer event over command." Here, "event" is a definite fact that has occurred.

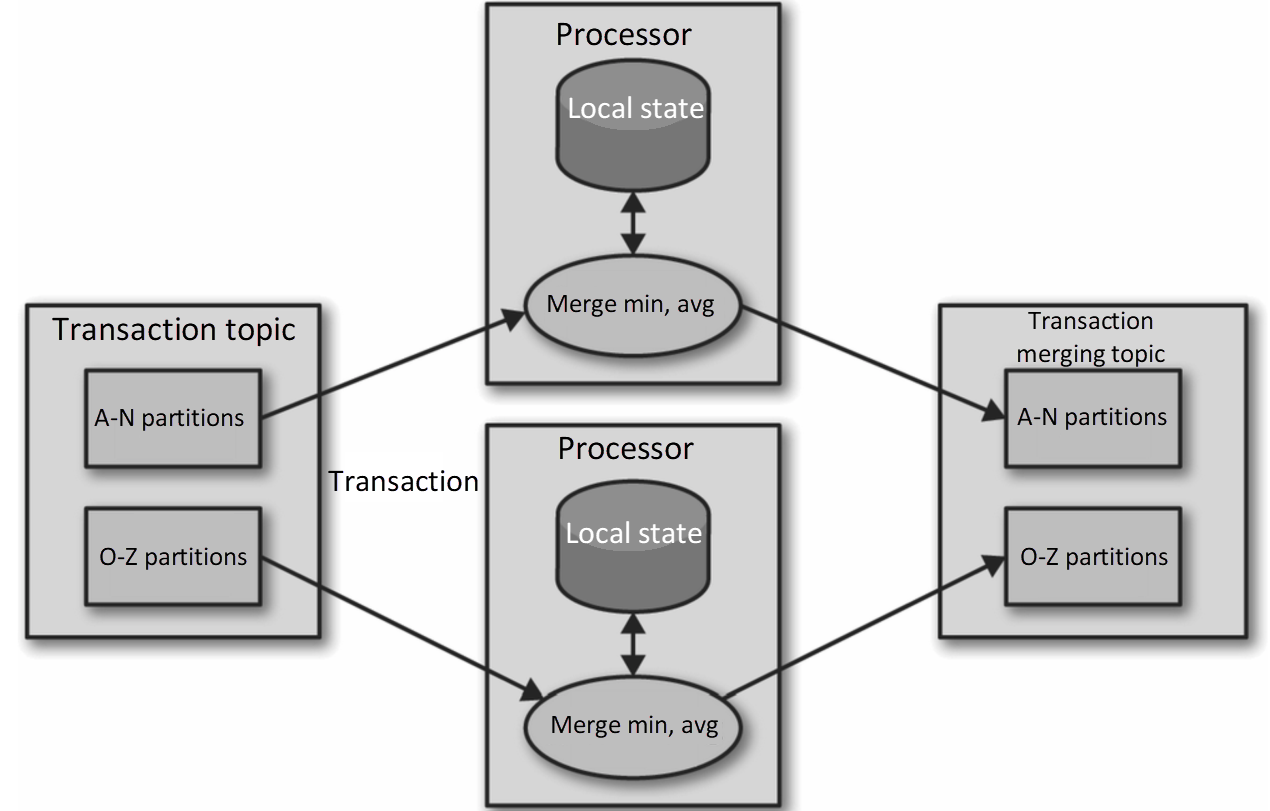

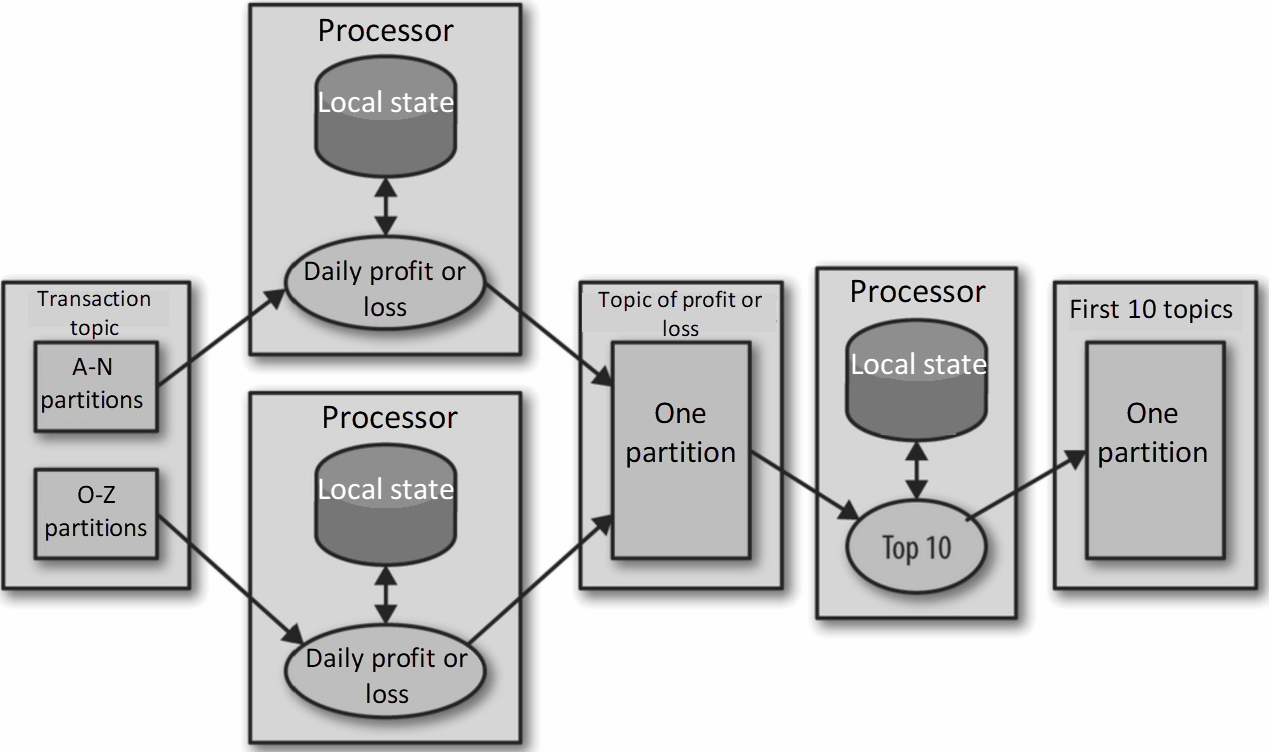

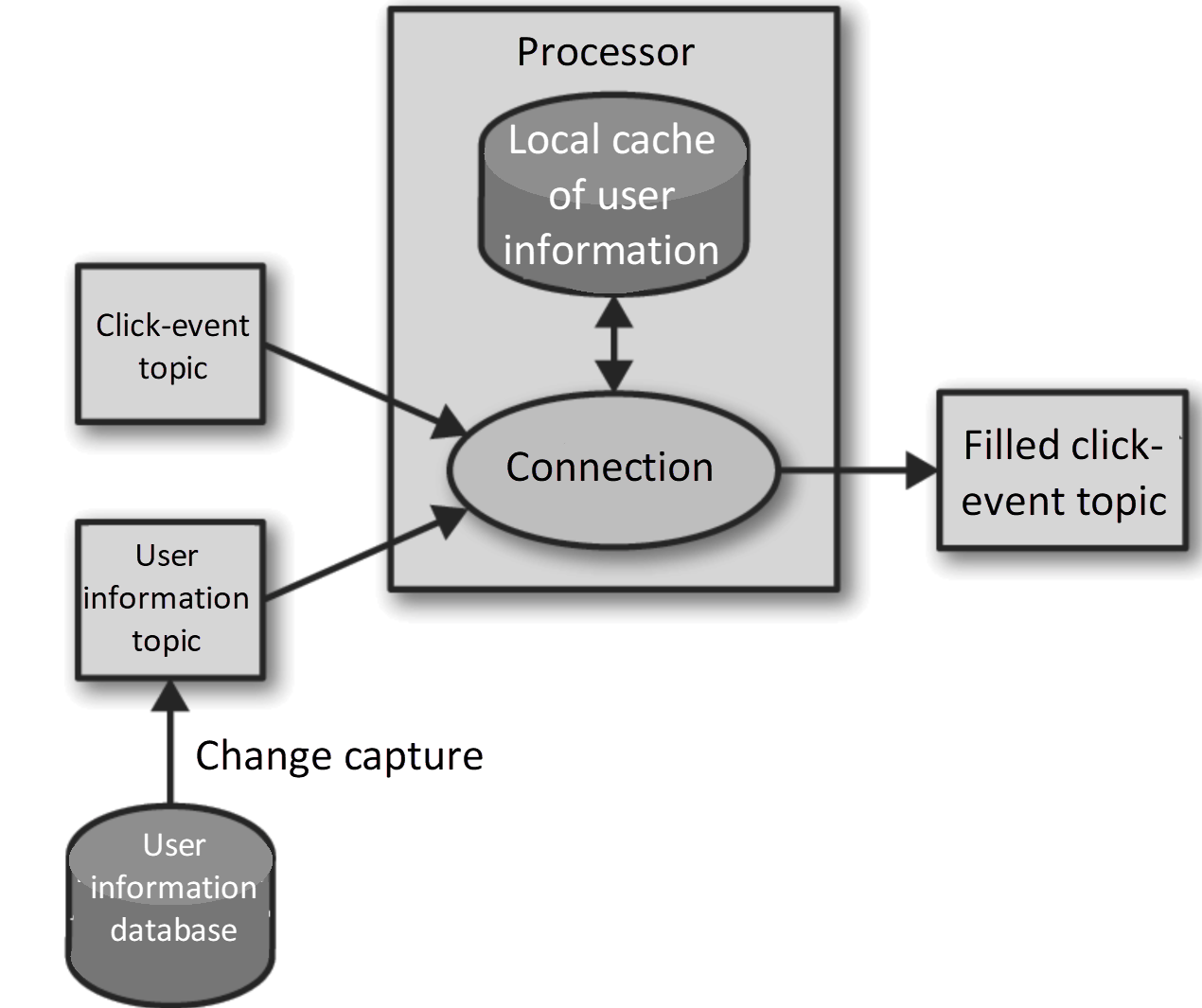

Connect+Kafka transactional semantics.

JeffLv - December 2, 2019
