Dify is an open-source platform for LLM application development, integrating Backend as a Service with LLMOps to enable rapid development of production-ready Generative AI applications. This topic describes the steps to create an Intelligent Q&A application using ApsaraDB RDS for PostgreSQL and the Dify platform.

Step 1: Create an ApsaraDB RDS for PostgreSQL instance

- Create an account and a database for the RDS instance. For more information, see Create an account and a database. Note the following settings:

  - When you create the account, set Account Type to Privileged Account.

  - When you create the database, set Authorized Account to the privileged account that you created.

- Apply for a public endpoint for the RDS instance. For more information, see Enable or disable a public endpoint.

- Add the public IP address of the ECS instance (created in Step 2) to the whitelist of the RDS instance. For more information, see Set a whitelist.

- Enable the vector plug-in for the target database of the RDS instance. For more information, see Manage plug-ins.

Step 2: Deploy Dify

- Create an ECS instance. For more information, see Custom Purchase of ECS Instances.

  - A CPU-based ECS instance can use online AI large models.

  - A GPU-based ECS instance can use both online AI large models and AI large models deployed locally on the ECS instance.

  Important:

  - This topic uses Alibaba Cloud Linux 3 as an example.

  - If you purchase a GPU-based ECS instance, install the corresponding GPU driver when you configure the image. A GPU-based ECS instance lets you deploy large models on the instance by using Ollama.

- Install Docker on the ECS instance. For more information, see Install Docker.

- (Optional) If you purchase a GPU-based ECS instance, run the following command to install the container-toolkit component.

- Run the following command to obtain the Dify source code.

git clone https://github.com/langgenius/dify.git

  To install a specific version, use the --branch option. For example, run the following command to install version 1.0.0. For the available Dify versions, see dify.

git clone https://github.com/langgenius/dify.git --branch 1.0.0

  Note: If Git is not installed, run sudo yum install git -y to install it.

- Configure environment variables so that Dify uses the RDS instance as its default database and vector store.

  - Set the RDS instance as the default database.

export DB_USERNAME=testdbuser
export DB_PASSWORD=dbPassword
export DB_HOST=pgm-****.pg.rds.aliyuncs.com
export DB_PORT=5432
export DB_DATABASE=testdb01

  Replace the parameter values in the commands with your actual values.

| Parameter | Description |
| --- | --- |
| DB_USERNAME | The privileged account of the RDS instance. |
| DB_PASSWORD | The password of the privileged account. |
| DB_HOST | The public endpoint of the RDS instance. |
| DB_PORT | The public port of the RDS instance. Default value: 5432. |
| DB_DATABASE | The name of the database in the RDS instance. |

  - Set the RDS instance as the default vector store.

export VECTOR_STORE=pgvector
export PGVECTOR_HOST=pgm-****.pg.rds.aliyuncs.com
export PGVECTOR_PORT=5432
export PGVECTOR_USER=testdbuser
export PGVECTOR_PASSWORD=dbPassword
export PGVECTOR_DATABASE=testdb01

  Replace the parameter values in the commands with your actual values.

| Parameter | Description |
| --- | --- |
| VECTOR_STORE | The vector store type. Set it to pgvector to use the vector plug-in. |
| PGVECTOR_USER | The privileged account of the RDS instance. |
| PGVECTOR_PASSWORD | The password of the privileged account. |
| PGVECTOR_HOST | The public endpoint of the RDS instance. |
| PGVECTOR_PORT | The public port of the RDS instance. Default value: 5432. |
| PGVECTOR_DATABASE | The name of the database in the RDS instance. |

  You can also configure the RDS instance as the default database and vector store in the .env file.
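Exported variables last only for the current shell session, so you may prefer to persist them in the .env file. The following POSIX shell sketch defines a hypothetical helper, set_env_var (not part of Dify), that replaces an existing KEY=VALUE line or appends a new one. It writes values literally, so passwords containing characters such as & or / are not mangled.

```shell
# set_env_var FILE KEY VALUE
# Hypothetical helper: replaces any existing "KEY=..." line in FILE with
# "KEY=VALUE", or appends it if KEY is absent. The updated key moves to the
# end of the file; all other lines keep their order.
set_env_var() {
  local file="$1" key="$2" value="$3" tmp
  tmp=$(mktemp)
  # Copy every line except an existing KEY= entry (missing file -> empty copy).
  grep -v "^${key}=" "$file" > "$tmp" 2>/dev/null || true
  # Append the new value literally; no sed escaping is involved.
  printf '%s=%s\n' "$key" "$value" >> "$tmp"
  # Write back through the original file so its permissions are kept.
  cat "$tmp" > "$file"
  rm -f "$tmp"
}
```

A possible usage, run from the dify/docker directory (Dify ships a .env.example template; copy it to .env first if .env does not exist yet):

[ -f .env ] || cp .env.example .env
set_env_var .env VECTOR_STORE pgvector
set_env_var .env PGVECTOR_HOST pgm-****.pg.rds.aliyuncs.com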

- (Optional) To save traffic and storage space, edit the .env and docker-compose.yaml files to disable the default database and Weaviate containers so that they do not run on the ECS instance.

- Run the following commands to start the Dify containers. The path assumes that the Dify source code was cloned to /root/dify.

cd /root/dify/docker
docker compose -f docker-compose.yaml up -d
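If the containers start but Dify cannot reach the RDS instance, an unset or misspelled variable is a likely cause. The sketch below defines a hypothetical require_env helper that reports every listed variable that is unset or empty in the current shell; run it in the same session before the docker compose command above. It checks only exported shell variables, not values stored in the .env file.

```shell
# require_env NAME...
# Hypothetical pre-flight check: prints each listed variable that is unset
# or empty to stderr, and returns 1 if at least one is missing.
require_env() {
  local name value missing=0
  for name in "$@"; do
    # Portable indirect lookup: read the variable whose name is stored in $name.
    eval "value=\${$name:-}"
    if [ -z "$value" ]; then
      echo "missing: $name" >&2
      missing=1
    fi
  done
  return "$missing"
}
```

For example:

require_env DB_USERNAME DB_PASSWORD DB_HOST DB_PORT DB_DATABASE VECTOR_STORE PGVECTOR_HOST PGVECTOR_PORT PGVECTOR_USER PGVECTOR_PASSWORD PGVECTOR_DATABASE && docker compose -f docker-compose.yaml up -d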

Step 3: Access the Dify service

- In your browser, go to http://<ECS public IP address>/install to access the Dify service.

  Note: If access fails, refresh the page several times. Dify may still be initializing its table structures and related data.
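Because the first start can take a while, you may prefer to poll the page from the ECS instance instead of refreshing the browser. The following sketch defines a hypothetical wait_for_http helper. It assumes curl is installed and treats any 2xx or 3xx status as ready; connection errors and 5xx responses from a still-starting service count as not ready.

```shell
# wait_for_http URL TRIES DELAY_SECONDS
# Hypothetical helper: requests URL up to TRIES times, DELAY_SECONDS apart,
# and returns 0 as soon as the response status is 2xx or 3xx.
wait_for_http() {
  local url="$1" tries="$2" delay="$3" i=1 code=""
  while [ "$i" -le "$tries" ]; do
    # -s hides progress, -o discards the body, -w prints only the status code;
    # curl prints 000 when it cannot connect at all.
    code=$(curl -s -o /dev/null -w '%{http_code}' --max-time 5 "$url" || true)
    case "$code" in
      2??|3??)
        echo "ready after $i attempt(s): HTTP $code"
        return 0
        ;;
    esac
    # Wait before the next attempt, but not after the last one.
    if [ "$i" -lt "$tries" ]; then
      sleep "$delay"
    fi
    i=$((i + 1))
  done
  echo "not ready after $tries attempt(s), last status: ${code:-none}" >&2
  return 1
}
```

For example, on the ECS instance itself, assuming Dify listens on its default port 80: wait_for_http http://localhost/install 30 10 waits up to about five minutes.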

- Follow the on-page prompts to set the administrator account (email address, username, and password), register on the Dify platform, and start using the service.

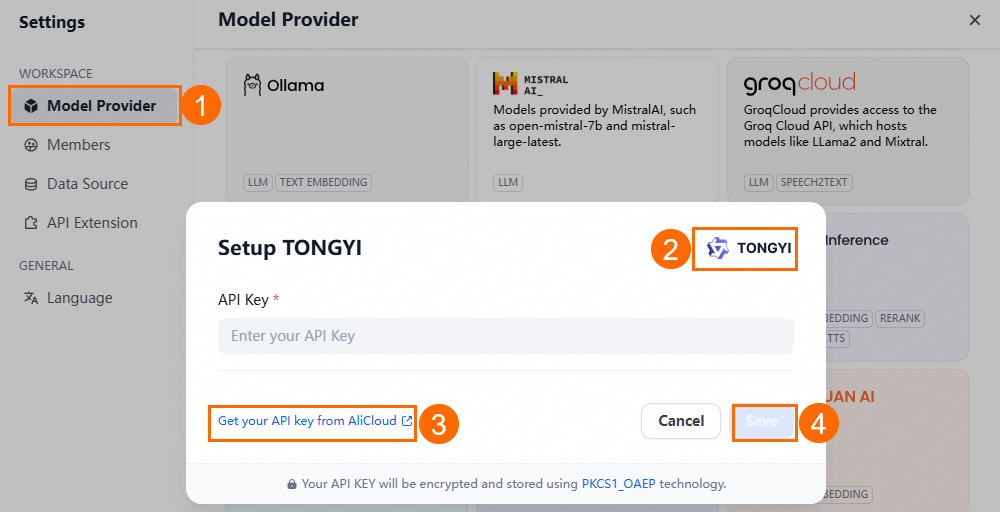

Step 4: Add and configure AI models

This topic uses Tongyi Qianwen as an example.

- Log on to the Dify platform.

- In the upper-right corner, click User Name > Settings.

- On the Settings page, choose Model Provider > Tongyi Qianwen > (settings).

- On the Tongyi Qianwen settings page, click the link to obtain an API key for Alibaba Cloud Bailian.

- Enter the API key and click Save.

If you use a GPU-based ECS instance, you can also deploy a Tongyi Qianwen model locally on the ECS instance.

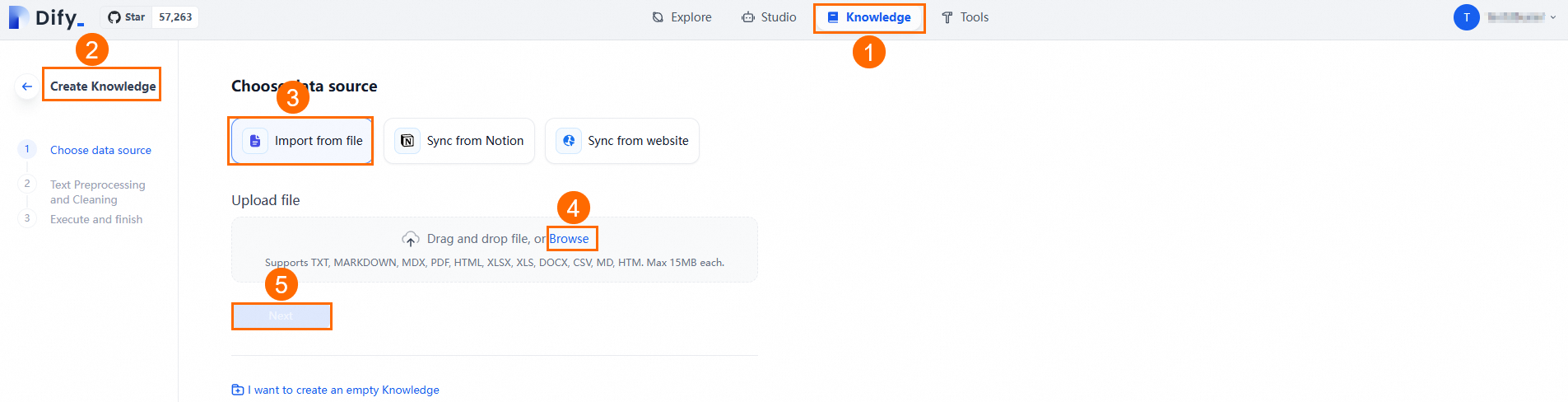

Step 5: Create a knowledge base

A dedicated knowledge base enables the Intelligent Q&A application to answer questions with greater accuracy and professionalism.

Prerequisites

Prepare the corpus files for the knowledge base. Supported formats are TXT, MARKDOWN, MDX, PDF, HTML, XLSX, XLS, DOCX, CSV, MD, and HTM. Each file can be at most 15 MB.
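Before uploading, you can screen a batch of files locally. The sketch below defines a hypothetical check_corpus_file helper that applies the two rules above: the extension must be one of the listed formats (compared case-insensitively), and the size must not exceed 15 MB, which the sketch assumes means 15 * 1024 * 1024 bytes.

```shell
# check_corpus_file PATH
# Hypothetical pre-upload check: returns 0 if PATH is a regular file with a
# supported extension and a size of at most 15 MB (assumed 15 * 1024 * 1024 bytes).
check_corpus_file() {
  local path="$1" ext size max
  max=$((15 * 1024 * 1024))
  if [ ! -f "$path" ]; then
    echo "not found: $path" >&2
    return 1
  fi
  # Take the text after the last dot and lower-case it (report.PDF -> pdf).
  ext=$(printf '%s' "${path##*.}" | tr '[:upper:]' '[:lower:]')
  case "$ext" in
    txt|markdown|mdx|pdf|html|xlsx|xls|docx|csv|md|htm) ;;
    *)
      echo "unsupported format: $path" >&2
      return 1
      ;;
  esac
  # wc -c counts bytes portably; tr strips the padding some wc versions add.
  size=$(wc -c < "$path" | tr -d ' ')
  if [ "$size" -gt "$max" ]; then
    echo "too large ($size bytes): $path" >&2
    return 1
  fi
  return 0
}
```

For example, with a hypothetical local ./corpus directory: for f in ./corpus/*; do check_corpus_file "$f" || echo "skip $f"; done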

Procedure

- Choose Knowledge Base > Create Knowledge Base > Import Existing Text > Select File > Next to upload the prepared files to the knowledge base.

- After you click Next, follow the on-page guide to complete text segmentation and cleaning.

  You can keep the default settings. Dify then automatically cleans, segments, and indexes the uploaded documents, which helps the Intelligent Q&A application retrieve and cite the information they contain.

Verify the knowledge base and confirm the index through ApsaraDB RDS for PostgreSQL

After setting up the knowledge base, verify its content in the ApsaraDB RDS for PostgreSQL database and confirm the index for each knowledge base table.

- Connect to the RDS database that Dify uses. For more information about how to connect to an ApsaraDB RDS for PostgreSQL instance, see the related documentation.

- Run the following statement to view the ID of each knowledge base.

SELECT * FROM datasets;

- In the target ID, replace each hyphen (-) with an underscore (_), and then add the prefix embedding_vector_index_ and the suffix _nod. The result is the name of the table that stores the knowledge base. For example, run the following statement to view the data of the target knowledge base in the RDS instance.

SELECT * FROM embedding_vector_index_6b169753_****_****_be66_9bddc44a4848_nod;
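The naming rule above is mechanical, so it can be scripted. The following sketch defines a hypothetical dataset_table_name helper that implements exactly that rule. It is not a Dify API, so compare its output with the tables that actually exist in your database.

```shell
# dataset_table_name DATASET_ID
# Hypothetical helper implementing the rule above: replace every "-" with "_",
# then add the prefix "embedding_vector_index_" and the suffix "_nod".
dataset_table_name() {
  local id
  id=$(printf '%s' "$1" | sed 's/-/_/g')
  printf 'embedding_vector_index_%s_nod\n' "$id"
}
```

For example, with psql installed and the DB_* variables from Step 2 exported:

PGPASSWORD="$DB_PASSWORD" psql -h "$DB_HOST" -p "$DB_PORT" -U "$DB_USERNAME" -d "$DB_DATABASE" -c "SELECT count(*) FROM $(dataset_table_name '<knowledge base ID>');"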

- Confirm the knowledge base index.

  By default, Dify creates an HNSW index on each knowledge base table to accelerate vector similarity queries with the pgvector plug-in, and uses the following SQL statement for these queries. In this statement, <=> is the pgvector cosine distance operator, $1 is the query vector, and $2 is the number of results to return.

SELECT meta, text, embedding <=> $1 AS distance FROM embedding_vector_index_6b169753_****_****_be66_9bddc44a4848_nod ORDER BY distance LIMIT $2;

  To check whether the knowledge base table has an index and whether its default parameters meet your recall requirements, run the following statement. For the relationship between the HNSW index parameters m and ef_construction and the recall rate, see pgvector performance testing (based on HNSW index).

SELECT * FROM pg_indexes WHERE tablename = 'embedding_vector_index_6b169753_****_****_be66_9bddc44a4848_nod';

  If the index was not created automatically, or its default parameters do not meet your recall requirements, run the following statements to create the index manually.

  - (Optional) Delete the existing index. DROP INDEX takes an index name, not a table name, so replace <index_name> with the indexname value returned by the preceding pg_indexes query.

DROP INDEX IF EXISTS <index_name>;

  - Create an index.

CREATE INDEX ON embedding_vector_index_6b169753_****_****_be66_9bddc44a4848_nod USING hnsw (embedding vector_cosine_ops) WITH (m = 16, ef_construction = 100);

  Note: embedding_vector_index_6b169753_****_****_be66_9bddc44a4848_nod is the name of the knowledge base table. Replace it with your actual table name.
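If you tune m and ef_construction repeatedly, generating the statement avoids hand-editing mistakes. The sketch below defines a hypothetical hnsw_index_sql helper that prints a cosine-distance HNSW index statement matching the <=> query above. It also rejects ef_construction values smaller than 2 * m, which pgvector does not accept.

```shell
# hnsw_index_sql TABLE M EF_CONSTRUCTION
# Hypothetical helper: prints a CREATE INDEX statement for an HNSW index that
# uses vector_cosine_ops, the operator class for pgvector's <=> cosine distance.
hnsw_index_sql() {
  local table="$1" m="$2" efc="$3"
  # pgvector requires ef_construction >= 2 * m; fail early instead of in SQL.
  if [ "$efc" -lt $((2 * m)) ]; then
    echo "ef_construction ($efc) must be at least 2 * m ($((2 * m)))" >&2
    return 1
  fi
  printf 'CREATE INDEX ON %s USING hnsw (embedding vector_cosine_ops) WITH (m = %s, ef_construction = %s);\n' \
    "$table" "$m" "$efc"
}
```

For example, with the DB_* variables from Step 2 exported:

PGPASSWORD="$DB_PASSWORD" psql -h "$DB_HOST" -p "$DB_PORT" -U "$DB_USERNAME" -d "$DB_DATABASE" -c "$(hnsw_index_sql '<table_name>' 16 100)"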

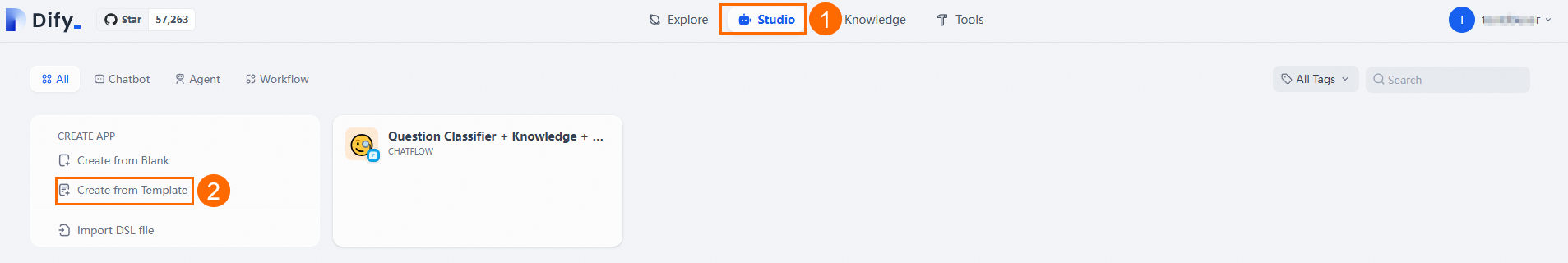

Step 6: Create an Intelligent Q&A application

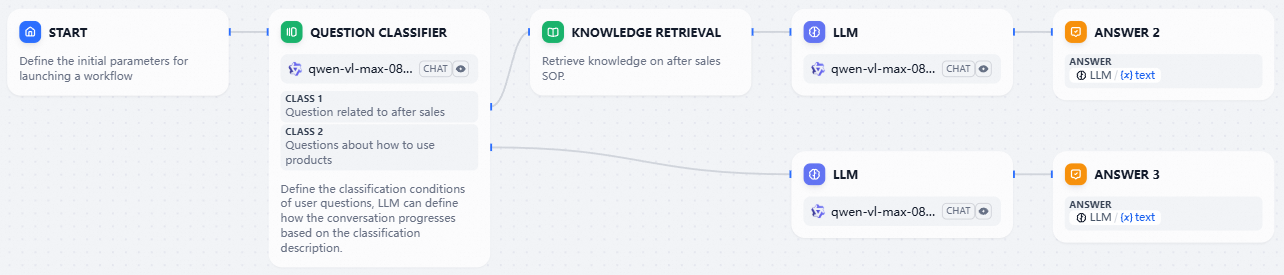

This topic uses the Question Classifier + Knowledge + Chatbot application template as an example.

- Click Studio > Create From Application Template.

- Find the Question Classifier + Knowledge + Chatbot template and click Use This Template.

- Set the application name and icon, and then click Create.

-

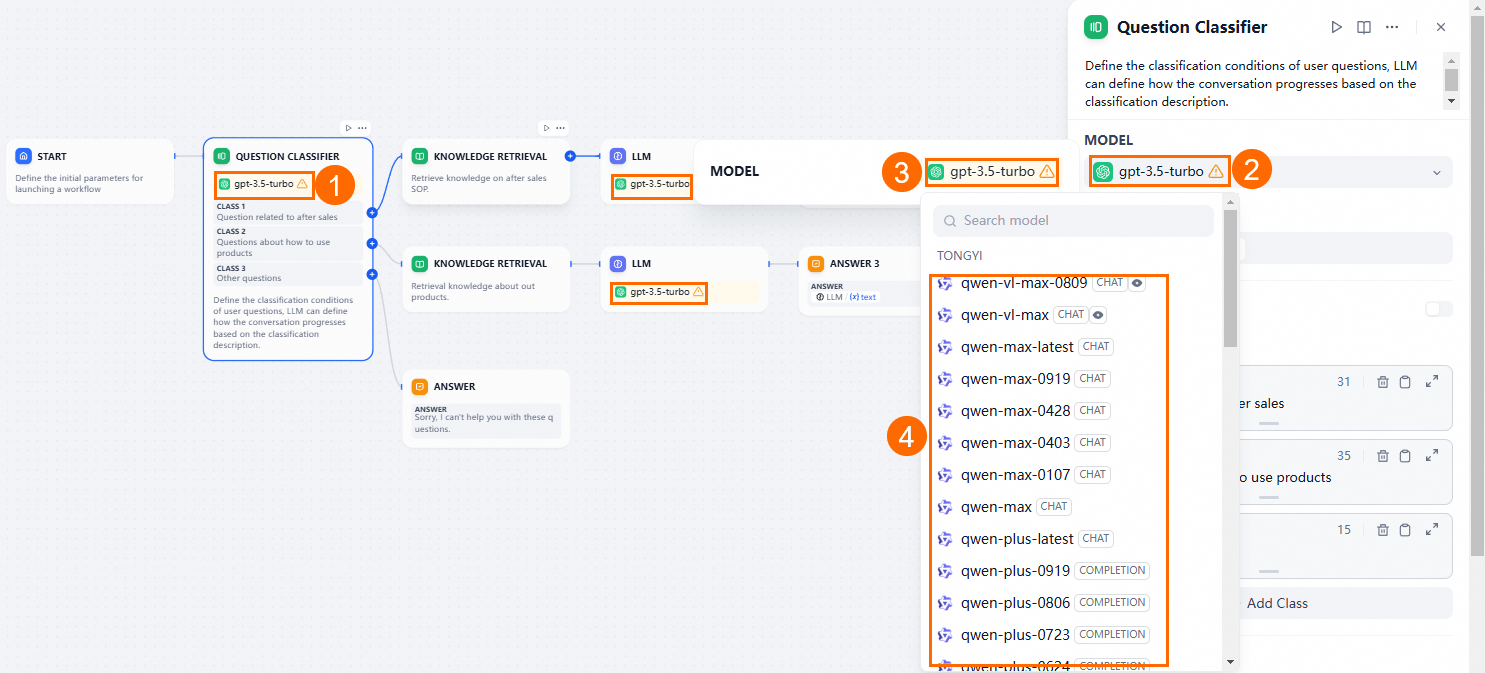

On the Studio page, select the newly created application card to access the application orchestration page.

-

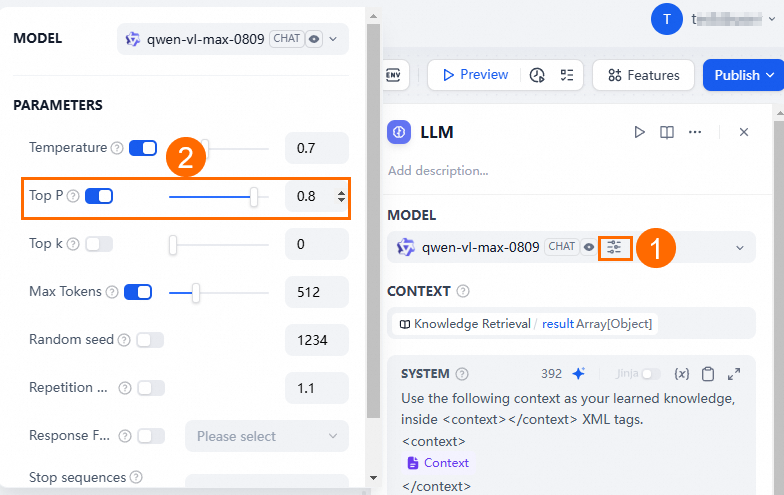

Configure the application's workflow by removing the Answer module, keeping a Knowledge Retrieval module, and updating the AI models of the Question Classifier and LLM modules to Tongyi Qianwen.

When setting up the Tongyi Qianwen large model, ensure the Top P value is set to less than 1.

- Customize the Question Classifier module as needed. For example, if your knowledge base contains PostgreSQL information, you can configure it as follows:

  - For questions about PostgreSQL, combine the knowledge base with the Tongyi Qianwen model to analyze and summarize the answer.

  - For questions unrelated to PostgreSQL, use the Tongyi Qianwen model alone to answer.

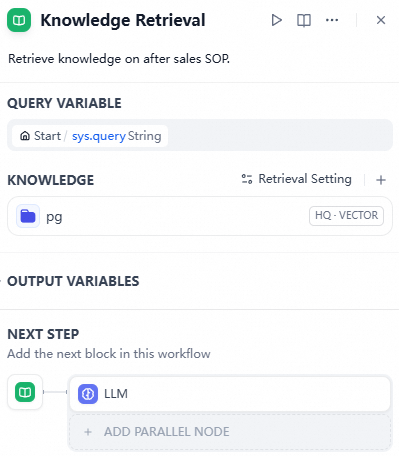

- Update the Knowledge Retrieval module by adding the PostgreSQL knowledge base that you created earlier.

- Click Preview in the upper-right corner to test the Q&A demo. After the demo works as expected, click Publish to release the application.