This is the second article in a two-part series about QA systems and deep learning. You can read part 1 here. Deep learning is a subfield of machine learning that uses multiple processing layers and complex algorithms to learn abstract representations of data. Like other branches of artificial intelligence (AI) and machine learning, it learns from data, but it does so at a more granular level, building up representations layer by layer. One of the best-known applications of deep learning is digital assistants such as Siri and Google Now, which retrieve information in response to natural, human-style requests. For instance, they can answer the question, "What movie is playing at my local cinema?" However, a machine does not inherently know how to parse such a sentence; it has to be taught to understand the context. This is where deep learning is vital, as it enables machines to decipher speech and text.

In recent years, researchers have further explored deep neural networks (DNNs) for image classification and speech recognition, and language learning and representation with DNNs has gradually become a new research trend. However, because human language is so flexible and abstracting its semantic information is so complex, DNN models face challenges in language representation and learning.

First, unlike speech and images, language is not a natural signal: it is a symbol system produced and processed entirely by the brain, and its variability and flexibility far exceed those of image and speech signals. Second, images and speech have precise mathematical representations. For example, a grayscale image is a numeric matrix in which even the most granular element has a definite physical meaning: the value at each pixel is its grayscale intensity. In contrast, the traditional bag-of-words representation of language suffers from very high dimensionality (which makes it hard to derive meaningful insights), high sparsity, and loss of semantic information.
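The bag-of-words problems named above are easy to reproduce. The following minimal Python sketch builds count vectors over a hypothetical three-sentence corpus (the corpus and the helper names are illustrative only). Each vector is as long as the vocabulary and mostly zeros, and two sentences with opposite meanings receive identical vectors because word order is discarded.

```python
from collections import Counter

def build_vocab(corpus):
    """Assign each distinct (lower-cased) word in the corpus a column index."""
    vocab = {}
    for sentence in corpus:
        for word in sentence.lower().split():
            vocab.setdefault(word, len(vocab))
    return vocab

def bag_of_words(sentence, vocab):
    """Return a |V|-dimensional count vector; word order is discarded."""
    vector = [0] * len(vocab)
    for word, count in Counter(sentence.lower().split()).items():
        if word in vocab:
            vector[vocab[word]] = count
    return vector

# Hypothetical toy corpus.
corpus = [
    "the dog bit the man",
    "the man bit the dog",
    "where are we going to eat today",
]
vocab = build_vocab(corpus)
v1 = bag_of_words(corpus[0], vocab)
v2 = bag_of_words(corpus[1], vocab)

print(len(vocab))   # vector dimension = vocabulary size (11 here)
print(v1 == v2)     # True: opposite meanings, identical vectors
print(v1.count(0))  # 7 of the 11 entries are zero
```

With a realistic vocabulary the dimension grows to tens of thousands, and nearly every entry of each sentence vector is zero.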

Researchers are increasingly interested in applying deep learning models to natural language processing (NLP), focusing on the representation and learning of words, sentences, and articles, and on related applications. For example, neural network language models, introduced by Bengio et al. and made efficient at scale by Mikolov et al. [27], learn a new vector representation called a word embedding or word vector. This is a low-dimensional, dense, continuous vector that encodes the semantic and grammatical information of a word. Today, word vector representations underpin most neural network based NLP methods.
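Because word vectors are dense and continuous, semantic relatedness can be measured geometrically, most commonly with cosine similarity. The sketch below assumes hand-picked, hypothetical 3-dimensional vectors purely for illustration; real embeddings are learned from a corpus and typically have a few hundred dimensions.

```python
import math

# Hypothetical hand-picked embeddings (not trained vectors).
embeddings = {
    "eat":    [0.9, 0.1, 0.0],
    "dine":   [0.8, 0.2, 0.1],
    "cinema": [0.0, 0.9, 0.4],
}

def cosine(u, v):
    """Cosine similarity: dot product divided by the product of the norms."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)

print(cosine(embeddings["eat"], embeddings["dine"]))    # high: related words
print(cosine(embeddings["eat"], embeddings["cinema"]))  # low: unrelated words
```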

Researchers have also designed DNN models that learn vector representations of sentences, including sentence models based on recursive neural networks, recurrent neural networks (RNNs), and convolutional neural networks (CNNs) [28-30]. Sentence representations have been applied to a large number of NLP tasks with prominent results, for example in machine translation [31, 32] and sentiment analysis [33, 34]. Representing and learning whole articles, however, is still relatively difficult and has received little research. One example is the work of Li et al., who represented articles by encoding and decoding them with a hierarchical RNN [35].

Two fundamental issues exist in the QA field. The first is how to represent the semantics of a question and an answer. Both interpreting the user's question and extracting and validating the response require an abstract representation of the essential information in the question and the answer. This involves representing not only the syntactic and grammatical information of the QA statements but also the user's intention and matching information at the semantic level.

The second is how to perform semantic matching between the question and the answer. To ensure that the reply semantically satisfies the user's question, the system must make reasonable use of high-level, abstract semantic representations of the two statements to model how well the two texts match.

Given the language representation capability that CNNs and RNNs have shown in NLP in recent years, more researchers are applying deep learning to key tasks in the QA field, such as question classification, answer selection, and automatic response generation. In addition, the naturally annotated data [50] that internet users generate while exchanging information, such as microblog replies and community QA pairs, provides reliable resources for training DNN models, largely solving the data shortage problem in QA research.

DNNs are gaining popularity across NLP, including machine translation. Researchers have designed various kinds of DNNs, such as deep stacking networks (DSNs), deep belief networks (DBNs), recurrent neural networks (RNNs), and convolutional neural networks (CNNs). In NLP, the primary aim of all these DNNs is to learn syntactic and semantic representations of words, phrases, structures, and sentences, so that similar words (phrases or structures) receive similar representations.

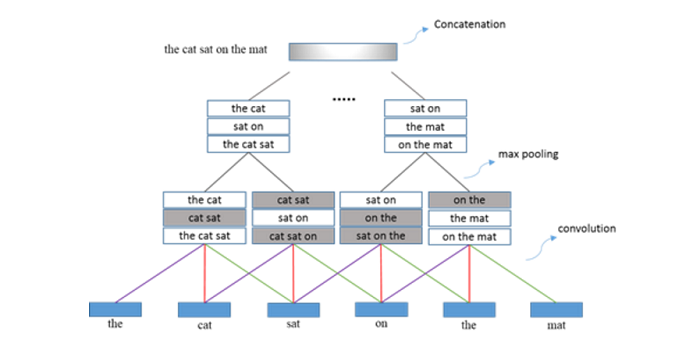

CNN-based sentence modeling aims to learn a representation vector for a sentence. It does so by scanning the sentence and extracting and selecting features. First, a sliding window scans the sentence from left to right. Each window contains several words, each represented by a vector. Within each window, convolution extracts features, and then max pooling (a sample-based discretization process) selects the strongest ones. Repeating this operation several times produces multiple representation vectors, and combining them yields a semantic representation of the whole sentence. As shown in Figure 1, the input to CNN-based sentence modeling is a word-vector matrix.

Each row of this matrix, read as a whole, is the vector of the corresponding word in the sentence. The matrix is obtained by transforming each word of the sentence into its word vector and arranging the vectors in the same order as the words. Through stacked layers of convolution and max pooling, the model expresses the sentence as a fixed-length vector. By adding a classifier on the top layer, such architectures can handle various supervised NLP tasks.

Figure 1: CNN-based Sentence Modeling
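The CNN sentence model described above can be sketched in plain Python. This is a minimal illustration under stated assumptions, not the architecture of any cited paper: the ReLU activation, a window of 2 words, non-overlapping max pooling of size 2, random (untrained) filters, and a final global max pooling that makes the output length independent of sentence length are all choices made for the example.

```python
import random

def conv1d(seq, filters, biases, window):
    """Slide a window of `window` consecutive vectors over `seq`. Each filter
    is applied to the concatenated window; a ReLU keeps positive responses."""
    out = []
    for start in range(len(seq) - window + 1):
        patch = [x for vec in seq[start:start + window] for x in vec]
        out.append([max(0.0, sum(w * x for w, x in zip(f, patch)) + b)
                    for f, b in zip(filters, biases)])
    return out

def max_pool(seq, size):
    """Non-overlapping local max pooling over positions, one feature at a time."""
    return [[max(column) for column in zip(*seq[i:i + size])]
            for i in range(0, len(seq), size)]

def random_layer(n_filters, in_dim, window, rng):
    """Hypothetical randomly initialised filters; a real model learns them."""
    filters = [[rng.uniform(-0.5, 0.5) for _ in range(in_dim * window)]
               for _ in range(n_filters)]
    return filters, [0.0] * n_filters

def encode_sentence(word_matrix, layers, window=2):
    """Repeat convolution + max pooling, then take a global max so the output
    length equals the number of filters, whatever the sentence length.
    Assumes the sentence is long enough for every layer (>= 4 words here)."""
    seq = word_matrix
    for filters, biases in layers:
        seq = max_pool(conv1d(seq, filters, biases, window), size=2)
    return [max(column) for column in zip(*seq)]

rng = random.Random(0)
emb_dim, n_filters = 4, 3
layers = [random_layer(n_filters, emb_dim, 2, rng),
          random_layer(n_filters, n_filters, 2, rng)]

# Word-vector matrices: one row per word, in sentence order (random stand-ins).
short_sentence = [[rng.uniform(-1, 1) for _ in range(emb_dim)] for _ in range(5)]
long_sentence = [[rng.uniform(-1, 1) for _ in range(emb_dim)] for _ in range(9)]
print(len(encode_sentence(short_sentence, layers)),
      len(encode_sentence(long_sentence, layers)))  # 3 3
```

Sentences of 5 and 9 words both map to a 3-dimensional vector, one value per filter in the last layer, which is what lets a classifier sit on top.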

CNN-based sentence modeling can be viewed as a "composition operator" with local selection. As the model gets deeper, each output unit covers a wider range of words in the sentence, and the multi-layer operation finally yields a sentence representation vector of fixed dimension. Functionally, this is similar to the recursive composition performed by recursive autoencoders [33].
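How quickly coverage widens with depth can be computed with the standard receptive-field recurrence for stacked convolution and pooling layers: each layer adds (kernel - 1) × jump words, and its stride multiplies the jump. The layer sizes below (convolution window 3 with stride 1, max pooling of size 2 with stride 2) are hypothetical.

```python
def receptive_field(layers):
    """layers: (kernel_size, stride) pairs applied bottom-up.
    Returns how many consecutive words one top-level unit can see."""
    field, jump = 1, 1  # start from a single word; neighbours are 1 word apart
    for kernel, stride in layers:
        field += (kernel - 1) * jump
        jump *= stride
    return field

block = [(3, 1), (2, 2)]  # one convolution (window 3) + max pooling (size 2)
for depth in range(1, 4):
    print(depth, receptive_field(block * depth))  # 1 4, then 2 10, then 3 22
```

Each extra convolution/pooling block roughly doubles the span of words a unit can summarize, which is why deeper models capture wider context.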

A sentence model with one convolution layer followed by global max pooling is called a shallow convolutional neural network. It is widely used for sentence-level classification in NLP, for example sentence classification [36] and relation classification [37]. However, a shallow CNN can neither capture complex local semantic relations within a sentence nor represent semantic composition at deeper levels of the sentence. Moreover, global max pooling discards word order information. As a result, the shallow model is suitable only for local attribute matching between statements. To handle the complex and diverse natural language found in questions and answers, QA matching models [38-40] usually use a deep convolutional neural network (DCNN) to model the question and the answer, and then perform QA matching by feeding the top-level semantic representations into a multilayer perceptron (MLP).
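The word-order loss caused by global max pooling can be shown directly. This sketch assumes, for clarity, a convolution window of one word and hypothetical 2-dimensional embeddings and filters; with wider windows the order inside each window survives, but where each window occurred in the sentence is still discarded.

```python
def shallow_cnn(word_vectors, filters):
    """One convolution layer with a one-word window (a per-word linear
    feature), followed by global max pooling over all positions."""
    features = [[sum(w * x for w, x in zip(f, vec)) for f in filters]
                for vec in word_vectors]
    return [max(column) for column in zip(*features)]

# Hypothetical embeddings and filters.
emb = {"where": [1.0, 0.0], "to": [0.2, 0.3], "eat": [0.0, 1.0]}
filters = [[0.5, -0.5], [1.0, 1.0]]

a = shallow_cnn([emb[w] for w in ["where", "to", "eat"]], filters)
b = shallow_cnn([emb[w] for w in ["eat", "to", "where"]], filters)
print(a, b, a == b)  # identical vectors for reordered sentences
```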

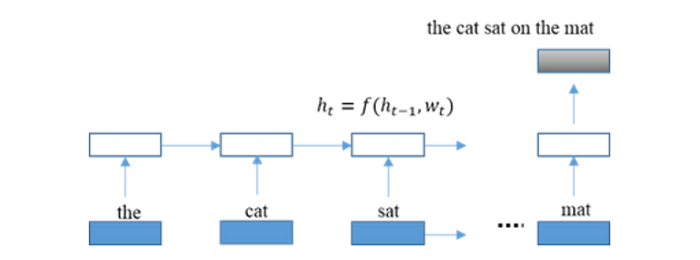

In RNN-based sentence modeling, a sentence is treated as a sequence of words, each represented by a vector. The model keeps an intermediate representation at each position, which accounts for the semantics of the sentence from its beginning up to that position. The RNN computes the intermediate representation at each position from the word vector at the current position and the intermediate representation at the previous position. The intermediate representation at the end of the sentence is taken as the semantic representation of the whole sentence, as shown in Figure 2.

Figure 2: RNN-based Sentence Modeling
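The recurrence described above can be written as h_t = tanh(W x_t + U h_{t-1} + b). The sketch below is a minimal simple (Elman) RNN in plain Python; the dimensions and the random, untrained weights are hypothetical stand-ins.

```python
import math
import random

def rnn_encode(word_vectors, W, U, b):
    """Simple RNN: h_t = tanh(W x_t + U h_{t-1} + b).
    The state after the last word is returned as the sentence vector."""
    h = [0.0] * len(b)
    for x in word_vectors:
        h = [math.tanh(sum(w * xj for w, xj in zip(W[i], x))
                       + sum(u * hj for u, hj in zip(U[i], h))
                       + b[i])
             for i in range(len(b))]
    return h

def rand_matrix(rows, cols, rng):
    """Hypothetical random weights; a trained model would learn these."""
    return [[rng.uniform(-0.5, 0.5) for _ in range(cols)] for _ in range(rows)]

rng = random.Random(1)
emb_dim, hidden = 4, 3
W, U, b = rand_matrix(hidden, emb_dim, rng), rand_matrix(hidden, hidden, rng), [0.0] * hidden
sentence = [[rng.uniform(-1, 1) for _ in range(emb_dim)] for _ in range(6)]
print(rnn_encode(sentence, W, U, b))  # one 3-dimensional vector for the sentence
```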

The RNN has a structure similar to that of a hidden Markov model, but with greater representational power: its intermediate representation makes no Markov assumption, and the model is non-linear. However, as the sequence length increases, the vanishing gradient problem arises during RNN training [43]. To solve this issue, researchers redesigned the recurrent computing unit of the RNN and proposed variants such as Long Short-Term Memory (LSTM) [44, 45] and the Gated Recurrent Unit (GRU) [46].
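The vanishing gradient can be seen in a one-dimensional RNN h_t = tanh(w · h_{t-1} + x). By the chain rule, dh_T/dh_0 is the product of the per-step factors w · (1 - h_t²), so when every factor is below 1 the gradient shrinks geometrically with sequence length. The weight w = 0.5 and constant input x = 0.5 are hypothetical values chosen for the demonstration.

```python
import math

def gradient_through_time(w, steps, x=0.5):
    """Scalar RNN h_t = tanh(w * h_{t-1} + x). Returns dh_T / dh_0, the
    product of the per-step derivatives w * (1 - h_t**2)."""
    h, grad = 0.0, 1.0
    for _ in range(steps):
        h = math.tanh(w * h + x)
        grad *= w * (1.0 - h * h)
    return grad

for steps in (5, 20, 50):
    print(steps, gradient_through_time(0.5, steps))
```

After 50 steps the gradient is below 0.5^50, far too small to teach the early part of the sequence anything; LSTM and GRU gates are designed to keep this signal alive.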

These two variants can handle long-range dependencies and provide a better semantic representation of the whole sentence. Using a bidirectional LSTM, Wang and Nyberg [47] learned semantic representations of question-answer pairs and fed them into a classifier to compute a classification confidence.
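The bidirectional idea can be sketched as follows: read the sequence left-to-right and right-to-left, concatenate the two final states, and pass the result to a logistic classifier that outputs a confidence. This is a simplified sketch, not the model of [47]: a plain tanh RNN stands in for the LSTM cell, the question and answer are assumed to be concatenated into one sequence, and all weights are hypothetical random values.

```python
import math
import random

def rnn_final_state(seq, W, U):
    """Simple tanh RNN (a stand-in for an LSTM cell); returns the last state."""
    h = [0.0] * len(W)
    for x in seq:
        h = [math.tanh(sum(a * b for a, b in zip(W[i], x))
                       + sum(a * b for a, b in zip(U[i], h)))
             for i in range(len(W))]
    return h

def bidirectional_encode(seq, forward_params, backward_params):
    """Read the sequence in both directions and concatenate the two states."""
    return rnn_final_state(seq, *forward_params) + rnn_final_state(seq[::-1], *backward_params)

def confidence(features, weights, bias=0.0):
    """Logistic classifier: estimated probability that the pair matches."""
    z = sum(w * f for w, f in zip(weights, features)) + bias
    return 1.0 / (1.0 + math.exp(-z))

def rand_matrix(rows, cols, rng):
    return [[rng.uniform(-0.5, 0.5) for _ in range(cols)] for _ in range(rows)]

rng = random.Random(2)
emb_dim, hidden = 4, 3
fwd = (rand_matrix(hidden, emb_dim, rng), rand_matrix(hidden, hidden, rng))
bwd = (rand_matrix(hidden, emb_dim, rng), rand_matrix(hidden, hidden, rng))
question = [[rng.uniform(-1, 1) for _ in range(emb_dim)] for _ in range(5)]
answer = [[rng.uniform(-1, 1) for _ in range(emb_dim)] for _ in range(7)]

pair_vector = bidirectional_encode(question + answer, fwd, bwd)  # 2 * hidden values
clf_weights = [rng.uniform(-1, 1) for _ in range(2 * hidden)]
print(len(pair_vector), confidence(pair_vector, clf_weights))
```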

Recently, researchers have learned semantic representations of questions about images by combining CNNs and RNNs. While the RNN scans the word sequence of a question, the model jointly learns from text and image, modeling the question in the context of the image for the final QA matching.

For instance, in the model proposed by Malinowski et al. [48], as the RNN traverses the words of the question, it takes both the image representation produced by a CNN and the word vector at the current position as input, and updates its intermediate representation from them, thus learning images and questions jointly.
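The joint input described above amounts to concatenating the image vector with the current word vector at every RNN step. This is a simplified sketch of that idea only: a plain tanh RNN replaces the recurrent unit used in [48], and the image vector, word vectors, and weights are hypothetical random stand-ins (in practice the image vector would come from a CNN).

```python
import math
import random

def joint_rnn(word_vectors, image_vector, W, U):
    """At every step the RNN input is [image vector ; current word vector],
    so each intermediate state mixes the picture with the words read so far."""
    h = [0.0] * len(W)
    for word in word_vectors:
        x = image_vector + word  # list concatenation
        h = [math.tanh(sum(a * b for a, b in zip(W[i], x))
                       + sum(a * b for a, b in zip(U[i], h)))
             for i in range(len(W))]
    return h

rng = random.Random(3)
img_dim, emb_dim, hidden = 5, 4, 3
image_vector = [rng.uniform(0, 1) for _ in range(img_dim)]  # stand-in for CNN output
question = [[rng.uniform(-1, 1) for _ in range(emb_dim)] for _ in range(4)]
W = [[rng.uniform(-0.5, 0.5) for _ in range(img_dim + emb_dim)] for _ in range(hidden)]
U = [[rng.uniform(-0.5, 0.5) for _ in range(hidden)] for _ in range(hidden)]
print(joint_rnn(question, image_vector, W, U))
```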

By contrast, Gao et al. [49] first used an RNN to model the question and then, when generating the answer, used both the question's semantic representation vector and the CNN image representation vector as context.

The primary functional modules involved in semantic matching in a QA system are question retrieval (i.e., question paraphrase detection), answer extraction (i.e., computing the match between questions and candidate text statements), and answer confidence ranking (i.e., scoring the semantic match between questions and candidate answers).

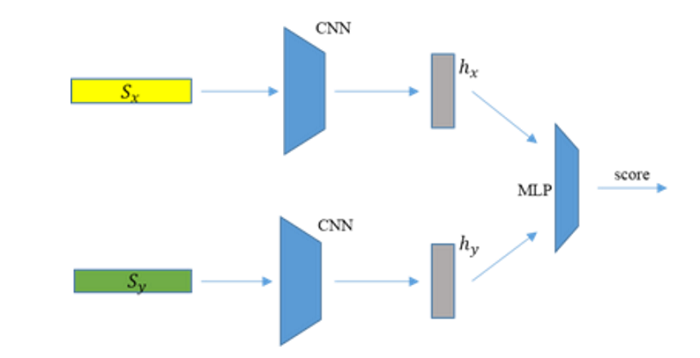

The first kind of DCNN-based semantic matching architecture is parallel matching [38-40]. The two sentences are fed into two CNN-based sentence models to obtain their semantic representations (real-valued vectors). These two representations are then fed into a multilayer neural network, which judges how well the two sentences match semantically and determines whether they form a matching sentence pair (QA pair). This is the basic idea of the DCNN-based parallel semantic matching model.

Figure 3: DCNN-based Parallel Matching Architecture
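The parallel matching architecture described above can be sketched in a few lines. Assumptions: a single convolution layer with global max pooling stands in for each deep CNN, the question and answer use separate filter sets (sharing weights between the two CNNs is also a common choice), the MLP has one hidden layer with a sigmoid output, and all weights are hypothetical random values.

```python
import math
import random

def cnn_encode(seq, filters, window=2):
    """Convolution over sliding windows, then global max pooling."""
    patches = [[x for vec in seq[i:i + window] for x in vec]
               for i in range(len(seq) - window + 1)]
    features = [[math.tanh(sum(w * x for w, x in zip(f, p))) for f in filters]
                for p in patches]
    return [max(column) for column in zip(*features)]

def mlp_match(q_vec, a_vec, W1, w2):
    """Concatenate the two sentence vectors; one hidden layer; sigmoid score."""
    joint = q_vec + a_vec
    hidden = [math.tanh(sum(w * x for w, x in zip(row, joint))) for row in W1]
    z = sum(w * h for w, h in zip(w2, hidden))
    return 1.0 / (1.0 + math.exp(-z))

rng = random.Random(4)
emb_dim, n_filters, n_hidden = 4, 3, 5

def rand_matrix(rows, cols):
    return [[rng.uniform(-0.5, 0.5) for _ in range(cols)] for _ in range(rows)]

q_filters = rand_matrix(n_filters, 2 * emb_dim)  # question CNN
a_filters = rand_matrix(n_filters, 2 * emb_dim)  # separate answer CNN
W1, w2 = rand_matrix(n_hidden, 2 * n_filters), rand_matrix(1, n_hidden)[0]

question = [[rng.uniform(-1, 1) for _ in range(emb_dim)] for _ in range(6)]
answer = [[rng.uniform(-1, 1) for _ in range(emb_dim)] for _ in range(8)]
# The two sentences never interact until their vectors meet in the MLP.
score = mlp_match(cnn_encode(question, q_filters), cnn_encode(answer, a_filters), W1, w2)
print(score)
```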

As Figure 3 shows, in the parallel matching architecture two independent CNNs produce representations of the two sentences, and the sentences do not influence each other until each has its own representation. The model therefore matches the two sentences on their global semantics but ignores finer local matching features. Yet in statement-matching problems, two sentences often match locally. Consider, for example, the following question-answer pair:

Sx: I'm starving, where are we going to eat today?

Sy: I've heard that KFC recently launched new products, shall we try some?

In this QA pair, there is a strong matching relation between "to eat" and "KFC," but parallel matching can reflect it only in the global representations of the two sentences: before each sentence's representation is complete, "to eat" and "KFC" cannot influence each other.

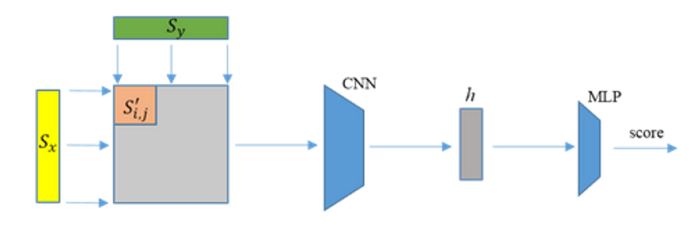

The second kind of DCNN-based semantic matching architecture is interactive matching [39]. Unlike parallel matching, interactive matching learns the matching pattern of the two sentences directly, letting them interact locally at different granularities and at different depths of the model.

The matching representations obtained at each level are then recorded, and finally a fixed-dimension representation of the sentence pair is obtained that encodes how the two sentences match.

Figure 4: DCNN-based Interactive Matching Architecture

As shown in Figure 4, the first layer of the interactive matching architecture obtains low-level local matching representations directly, by convolving over pairs of sliding windows taken from the two sentences. In the higher layers, it applies two-dimensional convolution and two-dimensional local max pooling, similar to those used in image processing, to learn high-level matching representations between the question and the answer.
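The first layer and the pooling step of interactive matching can be sketched as a window-by-window score matrix followed by 2D local max pooling. Assumptions: the matching function here is a plain dot product of the concatenated windows (Hu et al. [39] instead apply a learned convolution to each window pair), the 2D convolution between layers is omitted, and the 2-dimensional word vectors are hypothetical.

```python
def windows(sentence, size):
    """Concatenate each run of `size` consecutive word vectors."""
    return [[x for vec in sentence[i:i + size] for x in vec]
            for i in range(len(sentence) - size + 1)]

def match_matrix(sx, sy, size=2):
    """Layer 1: score every window of Sx against every window of Sy.
    Row i and column j keep the window positions, so word order survives."""
    return [[sum(a * b for a, b in zip(p, q)) for q in windows(sy, size)]
            for p in windows(sx, size)]

def max_pool_2d(m, size=2):
    """Two-dimensional local max pooling over non-overlapping size x size blocks."""
    rows, cols = len(m), len(m[0])
    return [[max(m[r][c]
                 for r in range(i, min(i + size, rows))
                 for c in range(j, min(j + size, cols)))
             for j in range(0, cols, size)]
            for i in range(0, rows, size)]

# Hypothetical 2-dimensional word vectors for a 5-word Sx and a 6-word Sy.
sx = [[1, 0], [0, 1], [1, 1], [0, 0], [1, 0]]
sy = [[0, 1], [1, 0], [1, 1], [0, 1], [1, 0], [0, 0]]
m = match_matrix(sx, sy)  # 4 x 5 matrix of window-pair scores
pooled = max_pool_2d(m)   # 2 x 3 after 2 x 2 pooling
print(len(m), len(m[0]), len(pooled), len(pooled[0]))  # 4 5 2 3
```

Because pooling only merges neighbouring cells, a high score in the top-left of `pooled` still means "early in Sx matched early in Sy", which is how the word order of both sentences is kept.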

In this way, the model can richly model not only the local matching relations between the two sentences but also the information within each sentence. The resulting vector contains both the positions of the sliding windows in the two sentences and their matching representation.

For semantic matching between questions and answers, interactive matching fully accounts for the internal matching relations between them and obtains matching representation vectors through two-dimensional convolution and two-dimensional local max pooling. In this process, interactive matching focuses on the matching relations between the sentences and matches them in fine detail.

Compared with parallel matching, interactive matching considers not only how well the words within each sentence's sliding window combine but also how well combinations from the two sentences match. The advantage of parallel matching is that each sentence's word order information is preserved during matching, because it models sliding windows over the sequence of each sentence. Interactive matching, by contrast, learns local information between the two statements interactively.

Since neither local convolution nor local max pooling changes the overall order of the local matching representations of the two sentences, the interactive matching model also preserves the word order of questions and answers. In short, by modeling the matching between questions and answers directly, interactive matching captures local matching patterns between the two sentences.

Compared with the retrieval-based reply mechanism, the generation-based answer mechanism automatically generates an answer from the information the current user has entered. The answer is composed word by word, rather than being a statement written by other users and retrieved from a knowledge base. This mechanism builds a natural language generation model from a large number of interactive data pairs, which allows the system to automatically generate a reply in natural language.

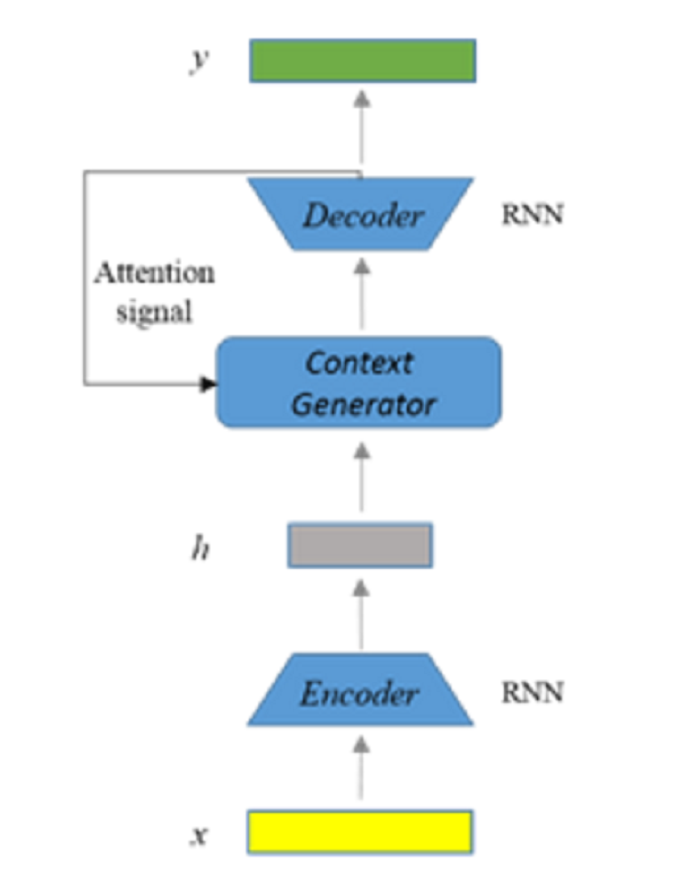

Automatic answer generation must solve two major problems: sentence representation and language generation. In recent years, recurrent neural networks have performed well at both, in particular the RNN-based encoder-decoder architecture, which has made breakthroughs in machine translation [31, 32] and automatic summarization [51].

Building on the encoder-decoder framework with GRU (Gated Recurrent Unit) [46] recurrent neural networks, Shang et al. [52] proposed the "Neural Responding Machine" (NRM), a neural dialog model for single-turn human-machine dialog. NRM learns how people reply from a large number of message pairs (question-answer pairs, microblog-reply pairs) and stores what it learns in nearly four million model parameters, yielding a natural language generation model.

As shown in Figure 5, NRM treats the input sentence as a sequence of word representations. An encoder (an RNN) transforms this sequence into a sequence of intermediate representations, and a decoder (another RNN) finally converts them into a sequence of words forming the output sentence. Because NRM uses a hybrid mechanism during encoding, the intermediate representations can not only capture the overall information of the user's statement but also retain finer details of the sentence. It also employs the attention mechanism [31] during decoding, which makes it easier for the generation model to capture the complex interaction patterns of the QA process.
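The attention step used during decoding can be sketched as: score each encoder state against the current decoder state, normalize the scores with a softmax, and take the weighted sum of encoder states as the context vector. This sketch assumes simple dot-product scoring for brevity; Bahdanau et al. [31] score with a small feed-forward network instead. The state vectors are hypothetical.

```python
import math

def softmax(scores):
    """Numerically stable softmax: subtract the max before exponentiating."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attend(decoder_state, encoder_states):
    """Return attention weights over the input words and the context vector."""
    scores = [sum(d * e for d, e in zip(decoder_state, h)) for h in encoder_states]
    weights = softmax(scores)
    context = [sum(w * h[k] for w, h in zip(weights, encoder_states))
               for k in range(len(decoder_state))]
    return weights, context

encoder_states = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]  # one per input word
decoder_state = [2.0, 0.0]                              # current decoder state
weights, context = attend(decoder_state, encoder_states)
print(weights, context)
```

The first and third input words align with the decoder state and receive equal, larger weights, so the context vector is dominated by them at this decoding step.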

The generation-based answering mechanism and the retrieval-based feedback mechanism each have their own strengths. On microblog data with personalized forms of expression, the former achieves higher accuracy than the latter: 76 percent versus 70 percent. However, answers from the former may be ungrammatical or incoherent, while answers from the latter are well-formed and reliable because microblog users wrote them.

Figure 5: Answer Generation Model Based on Encoding-decoding Frame

At present, NRM and Google's Neural Conversational Model (NCM) [53] still generate language by memorizing and combining patterns within a complex language model, and they cannot use external knowledge during an interaction. For instance, given the question, "How does West Lake in Hangzhou compare with last year's May Day?", they are unable to give a reply grounded in the real situation (the actual comparison).

Nevertheless, NRM and NCM are significant because they achieve a preliminary form of human-like automatic language response. Over the past few decades, most QA and dialog models produced by researchers' sustained efforts have been based on rules and templates or on retrieval from an extensive database. These two approaches cannot generate new responses and lack adequate language comprehension and representation, largely because they are limited by the coverage of their data and the expressiveness of their templates and examples. As a result, they fall short in both accuracy and flexibility and struggle to achieve natural, fluent language and semantically matching content at the same time.

This paper briefly introduces the development history and basic architecture of QA systems. It also presents DNN-based semantic representations, semantic matching models with different matching architectures, and answer generation models, all made possible by deep learning, which address some of the fundamental problems of QA systems. However, technical problems remain. One is how to understand users' questions in a continuous, interactive QA scenario, such as language understanding in an interaction with Siri. Another is how to learn external semantic knowledge so that a QA system can perform simple knowledge reasoning to answer relational inference questions, such as "What hospital department should I visit if I feel chest distress and cough all the time?" Moreover, recent research on, and popularization of, attention mechanisms and memory networks [54, 55] for natural language understanding and knowledge reasoning will provide new opportunities for research on automatic question answering.

[1] Terry Winograd. Five Lectures on Artificial Intelligence [J]. Linguistic Structures Processing, volume 5 of Fundamental Studies in Computer Science, pages 399–520, North Holland, 1977.

[2] Woods W A. Lunar rocks in natural English: explorations in natural language question answering [J]. Linguistic Structures Processing, 1977, 5: 521−569.

[3] Dell Zhang and Wee Sun Lee. Question classification using support vector machines. In SIGIR, pages 26–32. ACM, 2003.

[4] Xin Li and Dan Roth. Learning question classifiers. In COLING, 2002.

[5] Hang Cui, Min-Yen Kan, and Tat-Seng Chua. Unsupervised learning of soft patterns for generating definitions from online news. In Stuart I. Feldman, Mike Uretsky, Marc Najork, and Craig E. Wills, editors, Proceedings of the 13th International conference on World Wide Web, WWW 2004, New York, NY, USA, May 17-20, 2004, pages 90–99. ACM, 2004.

[6] Clarke C, Cormack G, Kisman D, et al. Question answering by passage selection (multitext experiments for TREC-9) [C]//Proceedings of the 9th Text Retrieval Conference(TREC-9), 2000.

[7] Ittycheriah A, Franz M, Zhu W-J, et al. IBM's statistical question answering system[C]//Proceedings of the 9th Text Retrieval Conference (TREC-9), 2000.

[8] Ittycheriah A, Franz M, Roukos S. IBM's statistical question answering system—TREC-10[C]//Proceedings of the 10th Text Retrieval Conference (TREC 2001), 2001.

[9] Lee G, Seo J, Lee S, et al. SiteQ: engineering high performance QA system using lexico-semantic pattern.

[10] Tellex S, Katz B, Lin J, et al. Quantitative evaluation of passage retrieval algorithms for question answering[C]// Proceedings of the 26th Annual International ACM SIGIR Conference on Research and Development in Information Retrieval (SIGIR '03). New York, NY, USA: ACM, 2003:41–47.

[11] Jiwoon Jeon, W. Bruce Croft, and Joon Ho Lee. Finding similar questions in large question and answer archives. In Proceedings of the 2005 ACM CIKM International Conference on Information and Knowledge Management, Bremen, Germany, October 31 – November 5, 2005, pages 84–90. ACM, 2005.

[12] S. Riezler, A. Vasserman, I. Tsochantaridis, V. Mittal, Y. Liu, Statistical machine translation for query expansion in answer retrieval, in: Proceedings of the 45th Annual Meeting of the Association of Computational Linguistics, Association for Computational Linguistics, Prague, Czech Republic, 2007, pp. 464–471.

[13] M. Surdeanu, M. Ciaramita, H. Zaragoza, Learning to rank answers on large online qa collections, in: ACL, The Association for Computer Linguistics, 2008, pp. 719–727.

[14] A. Berger, R. Caruana, D. Cohn, D. Freitag, V. Mittal, Bridging the lexical chasm: statistical approaches to answer-finding, in: SIGIR '00: Proceedings of the 23rd annual international ACM SIGIR conference on Research and development in information retrieval, ACM, New York, NY, USA, 2000, pp. 192–199.

[15] Gondek, D. C., et al. "A framework for merging and ranking of answers in DeepQA." IBM Journal of Research and Development 56.3.4 (2012): 14-1.

[16] Wang, Chang, et al. "Relation extraction and scoring in DeepQA." IBM Journal of Research and Development 56.3.4 (2012): 9-1.

[17] Kenneth C. Litkowski. Question-Answering Using Semantic Triples[C]. Eighth Text REtrieval Conference (TREC-8). Gaithersburg, MD. November 17-19, 1999.

[18] H. Cui, R. Sun, K. Li, M.-Y. Kan, T.-S. Chua, Question answering passage retrieval using dependency relations., in: R. A. Baeza-Yates, N. Ziviani, G. Marchionini, A. Moffat, J. Tait (Eds.), SIGIR, ACM, 2005, pp. 400–407.

[19] M. Wang, N. A. Smith, T. Mitamura, What is the jeopardy model? a quasi-synchronous grammar for qa., in: J. Eisner (Ed.), EMNLP-CoNLL, The Association for Computer Linguistics, 2007, pp. 22–32.

[20] K. Wang, Z. Ming, T.-S. Chua, A syntactic tree matching approach to finding similar questions in community-based qa services, in: Proceedings of the 32nd International ACM SIGIR Conference on Research and Development in Information Retrieval, SIGIR '09, 2009, pp. 187–194.

[21] Hovy, E.H., U. Hermjakob, and Chin-Yew Lin. 2001. The Use of External Knowledge of Factoid QA. In Proceedings of the 10th Text Retrieval Conference (TREC 2001) [C], Gaithersburg, MD, U.S.A., November 13-16, 2001.

[22] Jongwoo Ko, Laurie Hiyakumoto, Eric Nyberg. Exploiting Multiple Semantic Resources for Answer Selection. In Proceedings of LREC(Vol. 2006).

[23] Kasneci G, Suchanek F M, Ifrim G, et al. Naga: Searching and ranking knowledge. IEEE, 2008:953-962.

[24] Zhang D, Lee W S. Question Classification Using Support Vector Machines[C]. Proceedings of the 26th Annual International ACM SIGIR Conference on Research and Development in Information Retrieval. 2003. New York, NY, USA: ACM, SIGIR'03.

[25] X. Yao, B. V. Durme, C. Callison-Burch, P. Clark, Answer extraction as sequence tagging with tree edit distance., in: HLT-NAACL, The Association for Computer Linguistics, 2013, pp. 858–867.

[26] C. Shah, J. Pomerantz, Evaluating and predicting answer quality in community qa, in: Proceedings of the 33rd International ACM SIGIR Conference on Research and Development in Information Retrieval, SIGIR '10, 2010, pp. 411–418.

[27] T. Mikolov, K. Chen, G. Corrado, J. Dean, Efficient estimation of word representations in vector space, CoRR abs/1301.3781.

[28] Socher R, Lin C, Manning C, et al. Parsing natural scenes and natural language with recursive neural networks[C]. Proceedings of International Conference on Machine Learning. Haifa, Israel: Omnipress, 2011: 129-136.

[29] A. Graves, Generating sequences with recurrent neural networks, CoRR abs/1308.0850.

[30] Kalchbrenner N, Grefenstette E, Blunsom P. A Convolutional Neural Network for Modelling Sentences[C]. Proceedings of ACL. Baltimore and USA: Association for Computational Linguistics, 2014: 655-665.

[31] Bahdanau D, Cho K, Bengio Y. Neural machine translation by jointly learning to align and translate [J]. arXiv, 2014.

[32] Sutskever I, Vinyals O, Le Q V. Sequence to Sequence Learning with Neural Networks[M]. Advances in Neural Information Processing Systems 27. 2014: 3104-3112.

[33] Socher R, Pennington J, Huang E H, et al. Semi-supervised recursive auto encoders for predicting sentiment distributions[C]. EMNLP 2011

[34] Tang D, Wei F, Yang N, et al. Learning Sentiment-Specific Word Embedding for Twitter Sentiment Classification[C]. Proceedings of the 52nd Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers). Baltimore, Maryland: Association for Computational Linguistics, 2014: 1555-1565.

[35] Li J, Luong M T, Jurafsky D. A Hierarchical Neural Autoencoder for Paragraphs and Documents[C]. Proceedings of ACL. 2015.

[36] Kim Y. Convolutional Neural Networks for Sentence Classification[C]. Proceedings of the 2014 Conference on Empirical Methods in Natural Language Processing (EMNLP). Doha, Qatar: Association for Computational Linguistics, 2014: 1746–1751.

[37] Zeng D, Liu K, Lai S, et al. Relation Classification via Convolutional Deep Neural Network[C]. Proceedings of COLING 2014, the 25th International Conference on Computational Linguistics: Technical Papers. Dublin, Ireland: Association for Computational Linguistics, 2014: 2335–2344.

[38] L. Yu, K. M. Hermann, P. Blunsom, and S. Pulman. Deep learning for answer sentence selection. CoRR, 2014.

[39] B. Hu, Z. Lu, H. Li, Q. Chen, Convolutional neural network architectures for matching natural language sentences., in: Z. Ghahramani, M. Welling, C. Cortes, N. D. Lawrence, K. Q. Weinberger (Eds.), NIPS, 2014, pp. 2042–2050.

[40] A. Severyn, A. Moschitti, Learning to rank short text pairs with convolutional deep neural networks., in: R. A. Baeza-Yates, M. Lalmas, A. Moffat, B. A. Ribeiro-Neto (Eds.), SIGIR, ACM, 2015, pp. 373-382.

[41] Wen-tau Yih, Xiaodong He, and Christopher Meek. 2014. Semantic parsing for single-relation question answering. In Proceedings of the 52nd Annual Meeting of the Association for Computational Linguistics, pages 643–648. Association for Computational Linguistics.

[42] Li Dong, Furu Wei, Ming Zhou, and Ke Xu. 2015. Question Answering over Freebase with Multi-Column Convolutional Neural Networks. In Proceedings of the 53rd Annual Meeting of the Association for Computational Linguistics (ACL) and the 7th International Joint Conference on Natural Language Processing.

[43] Hochreiter S, Bengio Y, Frasconi P, et al. Gradient flow in recurrent nets: the difficulty of learning long-term dependencies [M]. A Field Guide to Dynamical Recurrent Neural Networks. New York, NY, USA: IEEE Press, 2001.

[44] Hochreiter S, Schmidhuber J. Long Short-Term Memory[J]. Neural Comput., 1997, 9(8): 1735-1780.

[45] Graves A. Generating Sequences With Recurrent Neural Networks[J]. CoRR, 2013, abs/1308.0850.

[46] Chung J, Gülçehre Ç, Cho K, et al. Gated Feedback Recurrent Neural Networks[C]. Proceedings of the 32nd International Conference on Machine Learning (ICML-15). Lille, France: JMLR Workshop and Conference Proceedings, 2015: 2067-2075.

[47] D. Wang, E. Nyberg, A long short-term memory model for answer sentence selection in question answering., in: ACL, The Association for Computer Linguistics, 2015, pp. 707–712.

[48] Malinowski M, Rohrbach M, Fritz M. Ask your neurons: A neural-based approach to answering questions about images[C]//Proceedings of the IEEE International Conference on Computer Vision. 2015: 1-9.

[49] Gao H, Mao J, Zhou J, et al. Are You Talking to a Machine? Dataset and Methods for Multilingual Image Question Answering[C]//Advances in Neural Information Processing Systems. 2015: 2287-2295.

[50] Sun M S. Natural Language Processing Based on Naturally Annotated Web Resources [J]. Journal of Chinese Information Processing, 2011, 25(6): 26-32.

[51] Hu B, Chen Q, Zhu F. LCSTS: a large scale Chinese short text summarization dataset[J]. arXiv preprint arXiv:1506.05865, 2015.

[52] Shang L, Lu Z, Li H. Neural Responding Machine for Short-Text Conversation[C]. Proceedings of the 53rd Annual Meeting of the Association for Computational Linguistics and the 7th International Joint Conference on Natural Language Processing. Beijing, China: Association for Computational Linguistics, 2015: 1577-1586.

[53] O. Vinyals and Q. V. Le. A Neural Conversational Model. arXiv:1506.05869, 2015.

[54] Kumar A, Irsoy O, Su J, et al. Ask me anything: Dynamic memory networks for natural language processing[J]. arXiv preprint arXiv:1506.07285, 2015.

[55] Sukhbaatar S, Weston J, Fergus R. End-to-end memory networks[C]//Advances in Neural Information Processing Systems. 2015: 2431-2439.

Original link: https://yq.aliyun.com/articles/58745#
