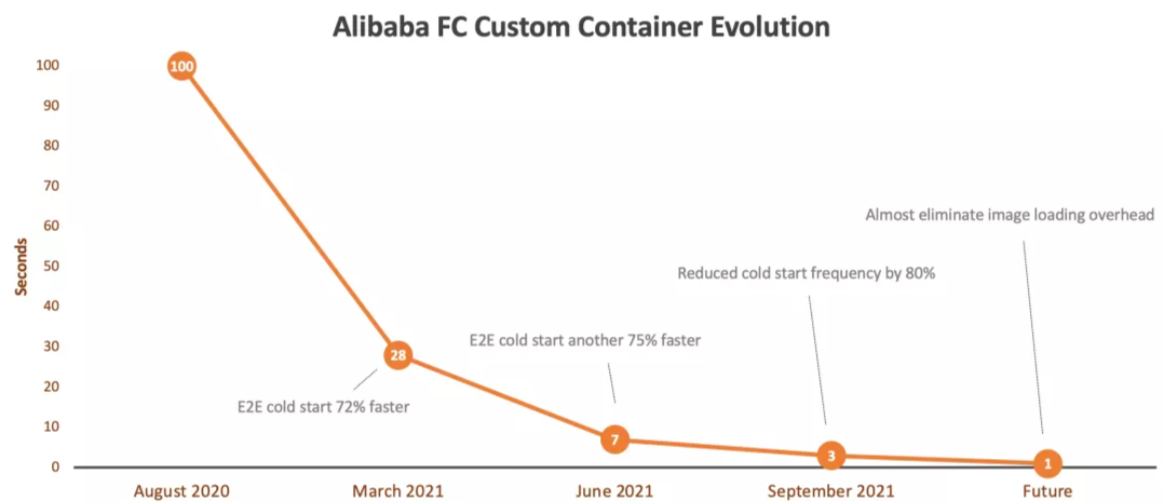

In August 2020, Function Compute (FC) introduced an innovative way to deploy functions as container images. In December 2020, AWS announced container image support for Lambda at re:Invent. In June 2021, other FaaS providers in China also announced the blockbuster feature of container support. Cold start has always been a pain point for FaaS, and because container images are often dozens of times larger than compressed code packages, the resulting deterioration in cold start latency has become developers' biggest concern.

When designing container image support, Function Compute (FC) decided that developers should experience images the same way they experience code packages (second-level elasticity), preserving both the ease of use and the extreme elasticity of FaaS so that users no longer have to choose between them. Ideally, function invocations should barely notice any additional latency caused by transferring image data remotely.

Optimizing the cold start of container images follows two paths: reducing the absolute latency of each cold start and reducing the probability that a cold start occurs. Since container image support was launched, we have reduced the absolute latency in stages using image acceleration technology. This article describes how the next-generation IaaS base of Function Compute helps reduce both the absolute latency and the frequency of cold starts.

Take a container image as an example.

Previously, all of the data inside an image was pulled before the container started. As a result, image data that was never used was downloaded in full and took up too much preparation time. Our first optimization therefore skipped unused image data as much as possible, loading data on demand. By using image acceleration technology to avoid the time spent pulling unused data, we reduced the cold start of Function Compute custom images from minutes to seconds.
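On-demand loading can be illustrated with a minimal Python sketch, assuming the image is exposed as a flat byte range split into fixed-size chunks. `LazyImageReader`, `fetch_chunk`, and `CHUNK_SIZE` are hypothetical names, not part of Function Compute. A chunk is fetched from the remote source only the first time a read touches it, so data that is never read is never downloaded.

```python
from typing import Callable, Dict

CHUNK_SIZE = 4  # hypothetical, tiny chunk size so the behavior is easy to follow


class LazyImageReader:
    """Serves reads from local chunks, fetching a chunk remotely on first access."""

    def __init__(self, fetch_chunk: Callable[[int], bytes], size: int) -> None:
        self._fetch_chunk = fetch_chunk  # hypothetical remote fetch: chunk index -> bytes
        self._size = size
        self._local: Dict[int, bytes] = {}  # chunks already pulled to local storage

    def read(self, offset: int, length: int) -> bytes:
        end = min(offset + length, self._size)
        out = bytearray()
        pos = offset
        while pos < end:
            idx = pos // CHUNK_SIZE
            if idx not in self._local:
                # Only now is this chunk transferred over the network
                self._local[idx] = self._fetch_chunk(idx)
            chunk = self._local[idx]
            start = pos - idx * CHUNK_SIZE
            take = min(CHUNK_SIZE - start, end - pos)
            out += chunk[start:start + take]
            pos += take
        return bytes(out)

    @property
    def fetched_chunks(self) -> int:
        """Number of chunks pulled so far."""
        return len(self._local)
```

Reading two bytes at offset 5 of a 16-byte image pulls only one of its four chunks; the rest stay remote until something reads them.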

We found that the I/O access patterns of function instances are highly consistent during container startup and initialization. Because the FaaS platform schedules resources according to how applications run, we recorded a desensitized I/O trace when a function instance started for the first time. On subsequent instance startups, we used the trace as a hint to prefetch image data to local storage in advance, further reducing cold start latency.
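The record-then-prefetch idea can be sketched as follows, again assuming chunk-indexed image data. `TraceRecorder` and `prefetch` are hypothetical helpers. The trace keeps only chunk indices in first-access order (no file contents), and the prefetch runs sequentially for clarity, whereas a real system would likely fetch in the background while the container starts.

```python
from typing import Callable, Dict, Iterable, List, Set


class TraceRecorder:
    """Records the order in which image chunks are first read during startup."""

    def __init__(self) -> None:
        self.trace: List[int] = []
        self._seen: Set[int] = set()

    def on_read(self, chunk_index: int) -> None:
        # Store only the chunk index, never the data itself
        if chunk_index not in self._seen:
            self._seen.add(chunk_index)
            self.trace.append(chunk_index)


def prefetch(trace: Iterable[int], fetch_chunk: Callable[[int], bytes],
             cache: Dict[int, bytes]) -> int:
    """Pull the chunks listed in a recorded trace into a local cache.

    Returns how many chunks were actually fetched (already cached ones are skipped).
    """
    fetched = 0
    for idx in trace:
        if idx not in cache:
            cache[idx] = fetch_chunk(idx)
            fetched += 1
    return fetched
```

The first startup feeds `on_read` from the I/O path; later startups call `prefetch` with the saved trace so that the chunks are already local when the application asks for them.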

These two optimizations reduce the absolute latency of a cold start, but a traditional ECS VM is recycled after it has been idle for some time, and a cold start is triggered again whenever a new machine is started. Reducing the frequency of cold starts therefore became one of the key problems for the next stage.

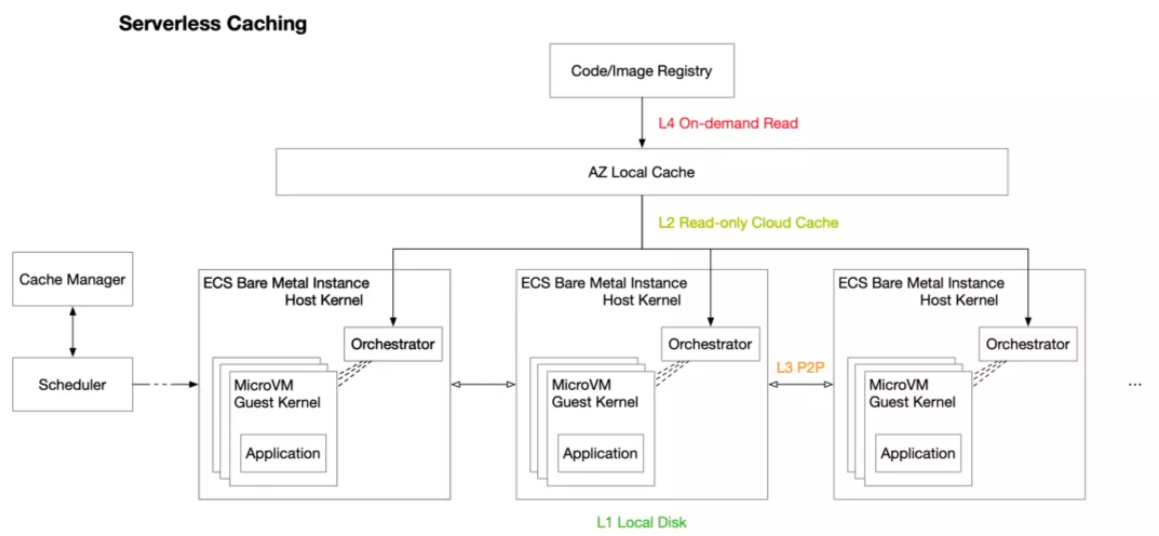

When designing the next-generation architecture, we considered not only the frequency of cold starts but also the impact of caching on startup latency. We therefore invented Serverless Caching, which co-optimizes software and hardware and builds a data-driven, intelligent, and efficient cache system based on the characteristics of different storage services, improving the experience of Custom Container. The X-Dragon machines behind Function Compute are replaced far less often than an idle ECS VM is recycled. For users, this means hot starts become significantly more frequent: after a cold start, the cache stays on the X-Dragon machine, and the cache hit rate can exceed 90%.
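Why a long-lived host raises the hit rate can be shown with a toy model. `HostImageCache` and `start_instance` are hypothetical: the cache counts hits and misses for (image digest, chunk) keys, and `recycle()` stands in for an idle VM being reclaimed. The model ignores capacity and eviction and says nothing about the real hit rate of Serverless Caching.

```python
from typing import Iterable, Set, Tuple


class HostImageCache:
    """Toy host-level cache of image chunks that outlives individual instances."""

    def __init__(self) -> None:
        self._chunks: Set[Tuple[str, int]] = set()
        self.hits = 0
        self.misses = 0

    def access(self, image_digest: str, chunk: int) -> bool:
        """Record one chunk read; return True on a cache hit."""
        key = (image_digest, chunk)
        if key in self._chunks:
            self.hits += 1
            return True
        self.misses += 1
        self._chunks.add(key)  # the chunk now stays cached on this host
        return False

    def recycle(self) -> None:
        """Model the machine being reclaimed: all cached data is lost."""
        self._chunks.clear()

    def hit_rate(self) -> float:
        total = self.hits + self.misses
        return self.hits / total if total else 0.0


def start_instance(cache: HostImageCache, digest: str, chunks: Iterable[int]) -> None:
    """Simulate one instance startup that reads the given chunks."""
    for c in chunks:
        cache.access(digest, c)
```

If a second startup of the same image lands on the same host, every chunk is a hit; if the host was recycled in between, the second startup misses on everything again.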

Compared with ECS virtual machines, the architecture of X-Dragon bare metal servers plus micro virtual machines leaves more room for optimizing image acceleration.

Running Function Compute Custom Container on X-Dragon also improves resource utilization and reduces costs, a win-win for both users and service operations.

The architecture of Serverless Caching can provide more optimization potential without increasing resource usage costs.

L1 to L4 are different cache levels, ordered from the shortest distance and lowest latency to the longest distance and highest latency.
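A tiered lookup across L1 to L4 might look like the following sketch. The article does not say what medium backs each level, so the tiers here are plain dictionaries labeled only by name. The class promotes a hit into every faster tier; that policy is a common caching choice assumed for illustration, not documented Serverless Caching behavior. Capacity limits and eviction are omitted.

```python
from typing import Dict, List, Optional, Tuple


class TieredCache:
    """Looks a key up from the nearest tier outward (first tier = fastest)."""

    def __init__(self, tier_names: List[str]) -> None:
        self.tier_names = tier_names
        self.tiers: List[Dict[str, bytes]] = [{} for _ in tier_names]

    def put(self, level: int, key: str, value: bytes) -> None:
        """Place a value directly into one tier."""
        self.tiers[level][key] = value

    def get(self, key: str) -> Tuple[Optional[bytes], Optional[str]]:
        """Return (value, name of the tier that served it), or (None, None) on a full miss."""
        for level, tier in enumerate(self.tiers):
            if key in tier:
                value = tier[key]
                # Assumed policy: copy the hit into every faster tier
                for faster in self.tiers[:level]:
                    faster[key] = value
                return value, self.tier_names[level]
        return None, None
```

The first read of a block found only in L4 is served from L4; after promotion, the next read of the same block is served from L1.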

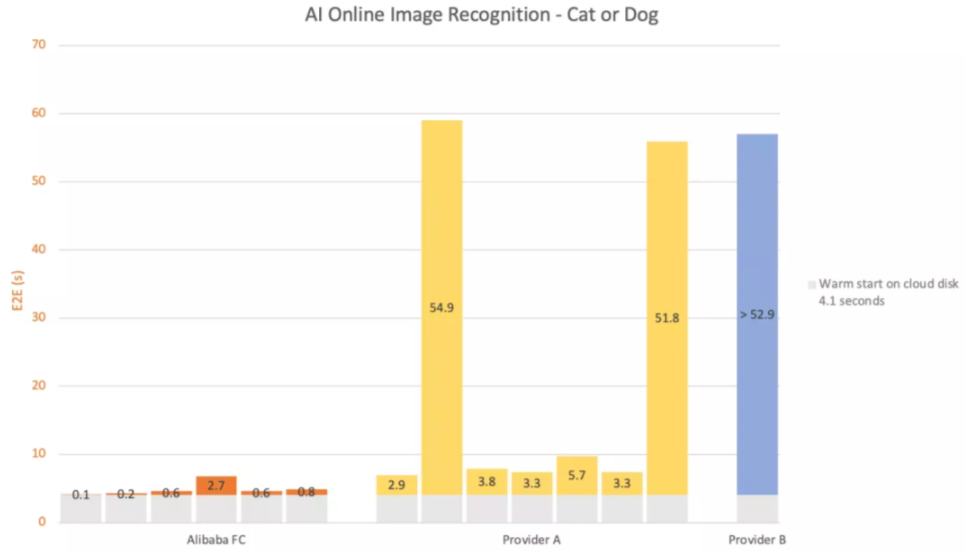

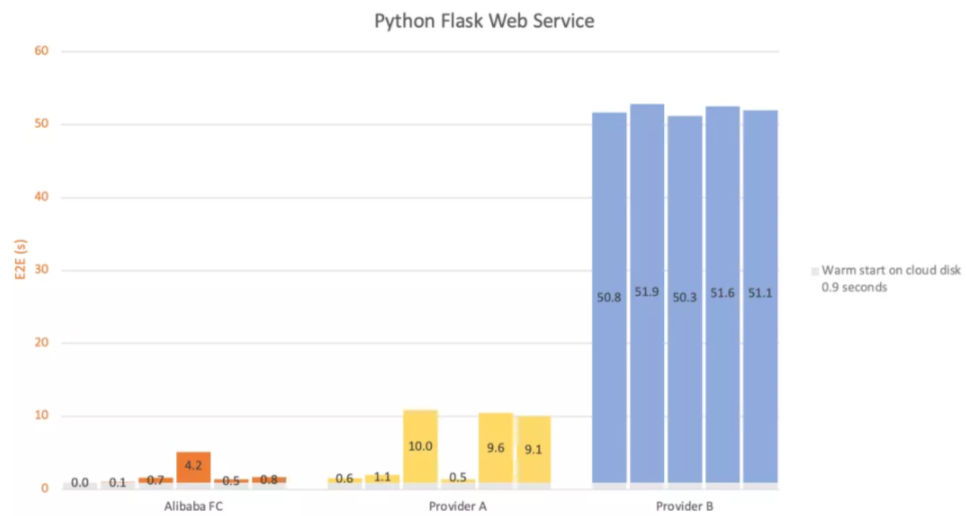

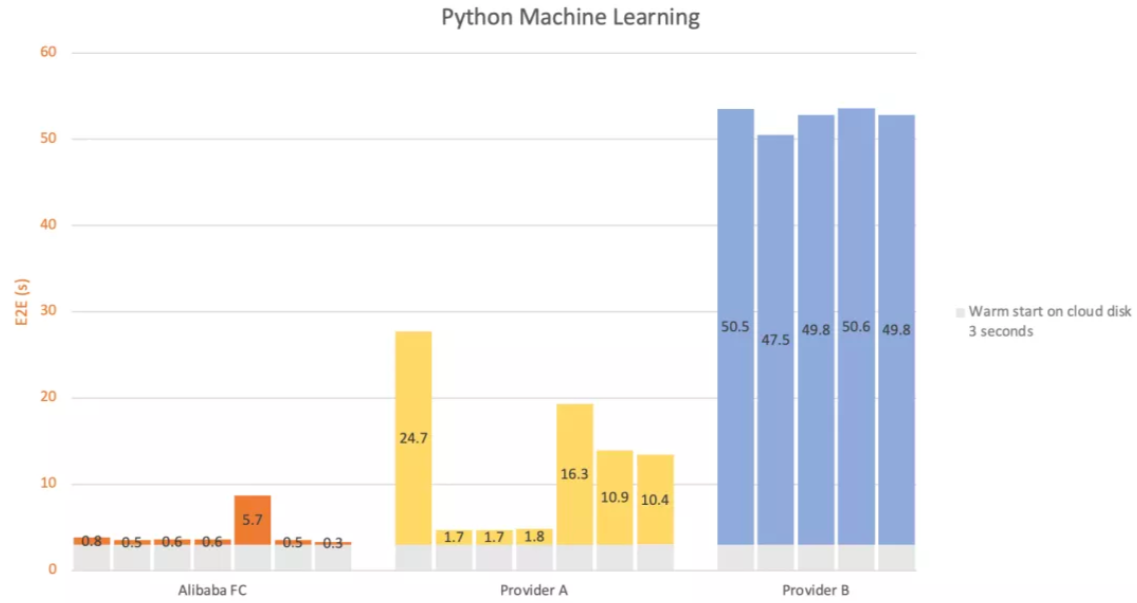

So far, we have brought image acceleration to a high level. We selected four typical images from the Function Compute public use cases and adapted them to several large cloud vendors in China and abroad for a horizontal comparison (vendor names are replaced by Vendor A and Vendor B). We invoked each image every three hours and repeated the test several times, obtaining the following results:

This image contains an image recognition application based on the TensorFlow deep learning framework. Alibaba Cloud Function Compute and Vendor A run it normally, while Vendor B cannot. In the following figure, the latency for Alibaba Cloud Function Compute and Vendor A is the end-to-end latency of image pulling, container startup, and inference, whereas the data for Vendor B covers only image pulling, and even that is the slowest. FC is relatively stable. These results suggest that Function Compute has a greater advantage in CPU-intensive scenarios such as AI inference.

Use the hot start of the cloud disk as a base (in gray) to compare the extra overhead of each vendor (in color).

This image is a common web service written in Python with the Flask framework. It tests whether each cloud product can load data efficiently on demand. Both FC and Vendor A fluctuate, but Vendor A is the more volatile.

Use the hot start of the cloud disk as a base (in gray) to compare the extra overhead of each vendor (in color).

This image is also a Python runtime environment, and each vendor keeps its characteristic behavior: Vendor B downloads the full image, while Vendor A's requests are optimized but unstable.

Use the hot start of the cloud disk as a base (in gray) to compare the extra overhead of each vendor (in color).

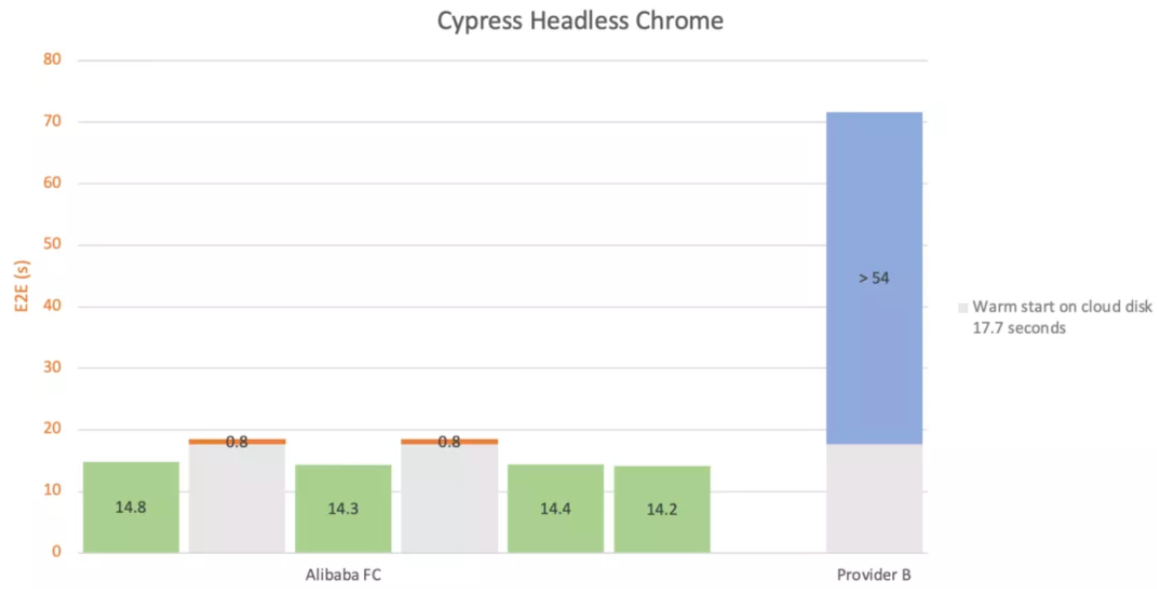

This image contains a headless browser test process. Vendor A cannot run it because of programming model limitations and an incompatible runtime environment. Vendor B can run it but is very slow, needing 71.1 seconds just to complete application initialization within the specified time. Function Compute again performs well on image I/O.

Use the hot start of the cloud disk as a base (in gray) to compare the extra overhead of each vendor (in color). The green part shows where the end-to-end duration is shorter than the baseline.
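The charts plot each vendor's extra overhead relative to the cloud disk hot start baseline. A minimal sketch of that arithmetic follows; `compare_to_baseline` is a hypothetical helper, and any numbers you feed it are your own measurements. Overhead is end-to-end duration minus the baseline, and a negative value corresponds to the green (faster than baseline) part.

```python
from typing import Dict, Tuple


def compare_to_baseline(e2e_ms: Dict[str, float],
                        baseline_ms: float) -> Dict[str, Tuple[float, bool]]:
    """Return (extra overhead in ms, faster-than-baseline flag) for each vendor.

    Extra overhead = end-to-end duration - hot-start baseline.
    A negative overhead means the run beat the baseline.
    """
    result: Dict[str, Tuple[float, bool]] = {}
    for vendor, duration in e2e_ms.items():
        overhead = duration - baseline_ms
        result[vendor] = (overhead, overhead < 0)
    return result
```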

Container support is an essential feature of FaaS. Containers improve portability and delivery agility, while cloud services reduce O&M and idle costs and provide elastic scaling. Combining custom container images with Function Compute directly solves the problems users face when adapting large, customized business logic to a cloud vendor's platform.

FaaS needs to eliminate the extra overhead of running containers as much as possible so that the user experience is close to running locally. Stable, fast operation is also a hallmark of excellent FaaS. FC accelerates image loading while reducing cold start frequency to ensure stable and fast operation. It is also necessary to make application migration smooth, without restricting the development model, and to lower the barrier to use as much as possible. Function Compute custom images support standard HTTP services, let you freely configure the listening port, and are both readable and writable. FC provides a variety of toolchains and deployment options, imposes no mandatory wait for image preparation to complete, includes a built-in HTTP trigger that does not rely on other cloud services, and supports a range of high-quality features such as custom domain names.
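Because custom images run a standard HTTP service on a configurable port, the container's entrypoint can be an ordinary web server. The following minimal sketch uses only Python's standard library. The `PORT` environment variable and the 9000 default are assumptions for illustration, not documented Function Compute settings; use whatever port you configure for the function.

```python
import os
from http.server import BaseHTTPRequestHandler, HTTPServer
from typing import Optional


class HelloHandler(BaseHTTPRequestHandler):
    """Answers every GET request with a fixed plain-text body."""

    def do_GET(self) -> None:
        body = b"hello from a custom container\n"
        self.send_response(200)
        self.send_header("Content-Type", "text/plain")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, format: str, *args) -> None:
        pass  # keep the demo quiet; remove to log each request to stderr


def make_server(port: Optional[int] = None) -> HTTPServer:
    # Assumption: the port comes from a PORT environment variable, defaulting to 9000
    if port is None:
        port = int(os.environ.get("PORT", "9000"))
    # Listen on all interfaces so traffic from outside the container can reach it
    return HTTPServer(("0.0.0.0", port), HelloHandler)


if __name__ == "__main__":
    make_server().serve_forever()
```

Nothing in the server is tied to a particular platform, which is what makes the same image portable between a local Docker run and the function.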

Function Compute custom images are suitable for, but not limited to, artificial intelligence inference, big data analysis, game settlement, online education, and audio and video processing. We recommend using an Alibaba Cloud Container Registry Enterprise Edition (ACR EE) instance, which provides the image acceleration feature: you do not need to manually enable accelerated pulling, and image preparation is faster when you use ACR images.

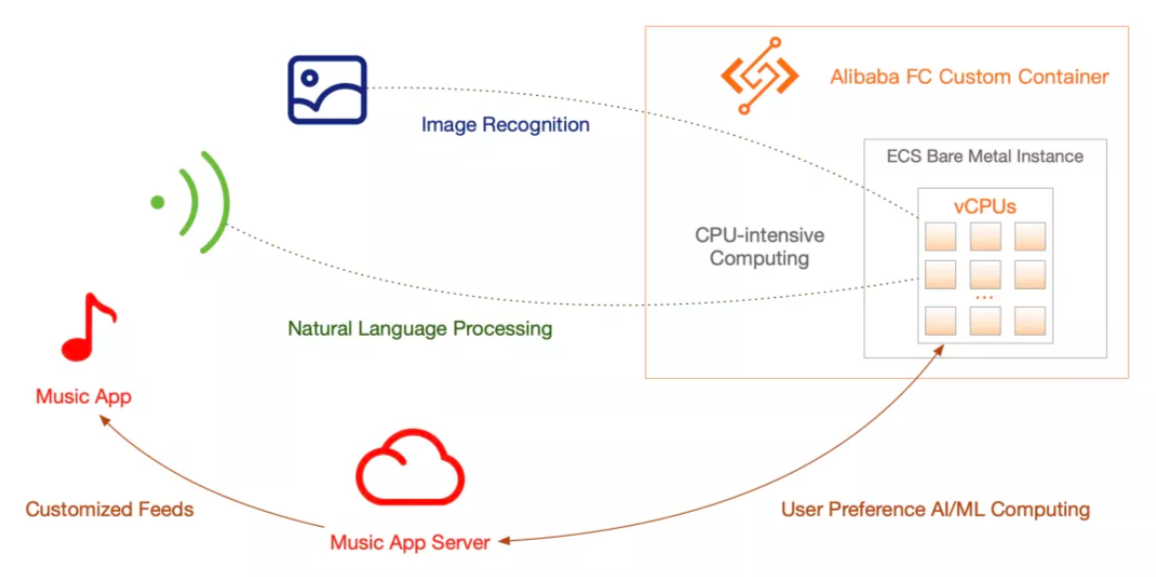

Inference relies on large underlying training frameworks and heavy data processing. Images of common AI frameworks (such as TensorFlow) easily reach gigabytes, and the workloads already demand substantial CPU, so scaling out quickly is even more challenging. Function Compute custom images address these requirements: users can take the underlying framework image directly and package it with their data processing logic into a new image, avoiding the migration overhead of replacing the runtime environment and getting fast results from elastic scaling. Scenarios such as song preference inference and AI image recognition and analysis can connect seamlessly with Function Compute to elastically serve large numbers of dynamic online inference requests.

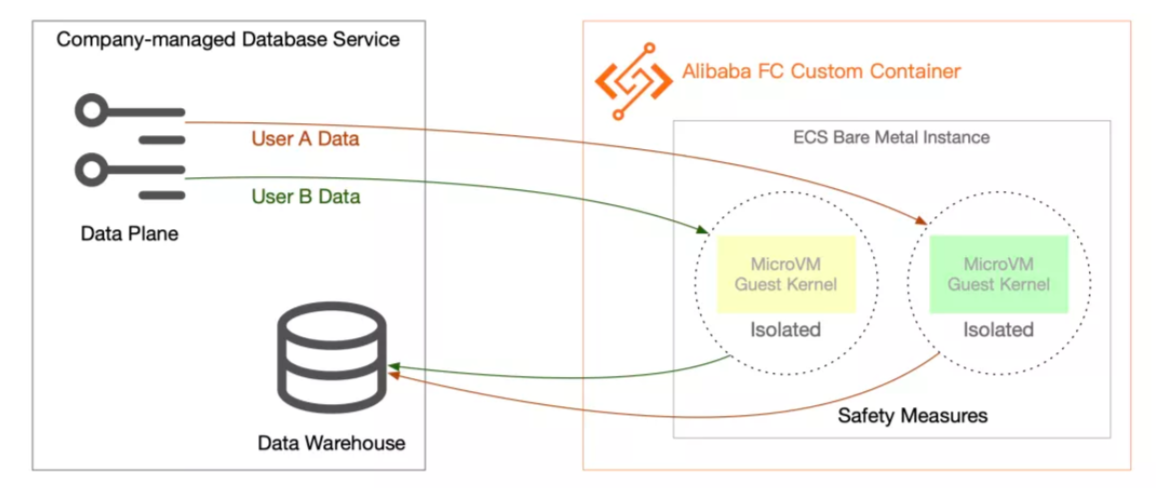

Services rely on data, and data processing often consumes large amounts of resources to serve frequent, fast-changing data requests. Like other Function Compute runtimes, custom images provide security isolation during data processing while keeping the convenience of freely packaging the data processing logic into an image. They allow smooth migration with low extra startup latency, meeting users' needs for secure, efficient, and elastic data processing in scenarios such as database governance and IoT.

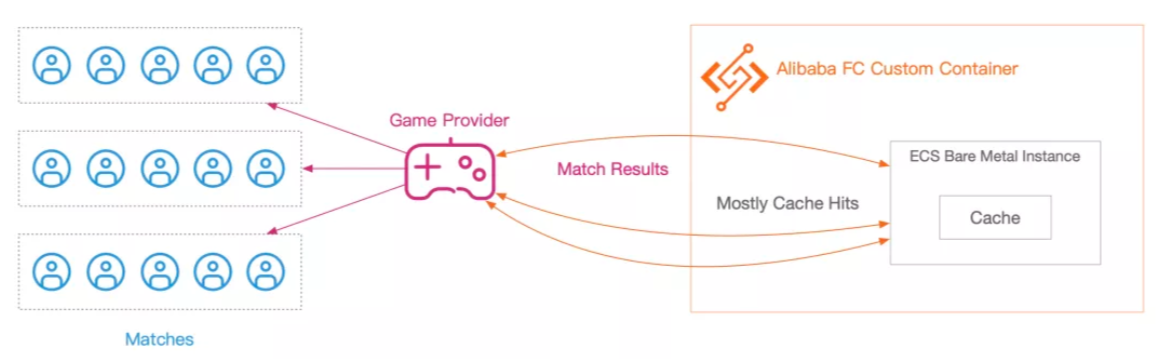

In many games, scenarios such as daily tasks gather a large number of players in a short period and require data processing such as battle settlement. Battle data verification usually needs to complete within a few seconds so that players do not lose patience, and the settlement time per player must not degrade as the number of players grows. The business logic for this kind of processing is usually complex and highly repetitive. Packaging the player data processing logic into a Function Compute custom image lets you elastically handle a large number of similar settlement requests in a short time.

Our original goal in optimizing Function Compute (FC) custom images was to keep users from noticing the extra latency of container image transfer, giving cloud-native developers the best experience. The optimization will not stop there. Our ultimate goals are to eliminate the extra overhead of pulling container images and to keep the image registry from becoming a bottleneck during large-scale scale-out, so that scaling stays fast. As Serverless Caching improves, the Custom Container feature will help web applications and job-style workloads on Kubernetes run seamlessly on Function Compute. Kubernetes handles resident workloads with stable traffic, while serverless services absorb compute that fluctuates significantly; this combination will increasingly become a cloud-native best practice.


By Xiuzong

Review & Proofreading by Chang Shuai, Wangchen
