By Yaolu and Junbao

Recently, the Cloud Native Computing Foundation (CNCF) Technical Oversight Committee (TOC) voted to accept Argo as an incubation-level hosted project. As a new project, Argo focuses on Kubernetes-native workflows and continuous deployment.

The Argo project is a collection of Kubernetes-native tools that run and manage jobs and applications on Kubernetes. It provides a simple combination of three computing modes for creating jobs and applications on Kubernetes: the service mode, workflow mode, and event-based mode. All Argo tools are implemented as controllers and custom resources.

The Alibaba Cloud Container Service for Kubernetes (ACK) team was one of the first teams in China to use Argo Workflow. While running it in production, the ACK team resolved many performance bottlenecks and developed multiple features, which they contributed to the community. One of the ACK team members is a maintainer of the Argo project.

Directed acyclic graphs (DAGs) are a common structure in graph theory. DAGs can be used to model interdependent data processing tasks, such as audio and video transcoding, machine learning data streams, and big data analysis.

Argo first became famous in the community through Argo Workflow, which was the first project of the Argo organization. Argo Workflow focuses on Kubernetes-native workflow design and has a declarative workflow mechanism. It is fully compatible with Kubernetes clusters through custom resource definitions (CRDs). Each task runs in a pod, and the workflow supports DAGs and other dependency topologies. Multiple workflows can be combined or spliced through the Workflow Template CRD.

The following figure shows a typical DAG structure. Argo Workflow can easily build an interdependent workflow based on the orchestration templates submitted by users. It resolves the dependencies between tasks and runs the tasks in the order specified by users.

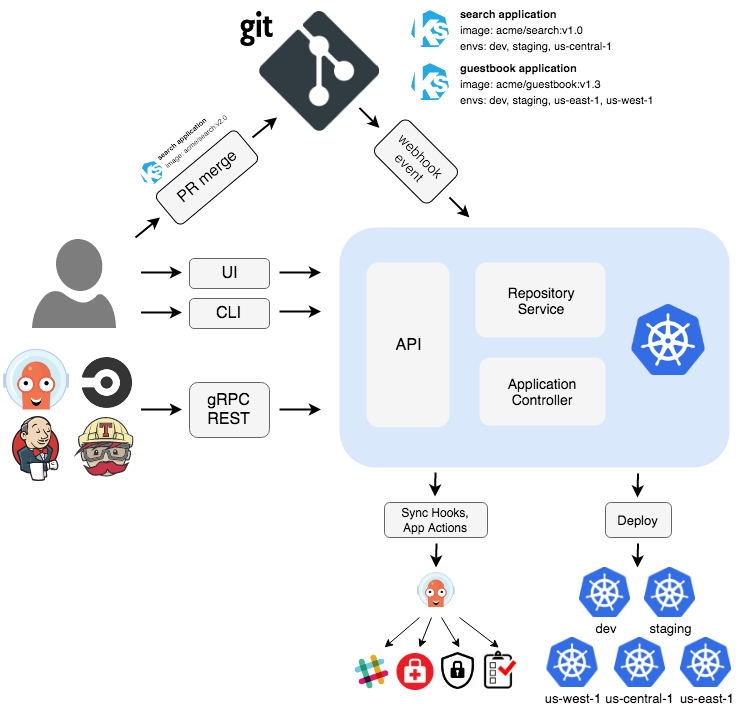
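
As a rough illustration of how a DAG engine can decide which tasks are ready to run, the following sketch groups tasks into waves by using Kahn's algorithm. The task names and the `execution_waves` helper are hypothetical, and this is not Argo Workflow's actual scheduler.

```python
from collections import defaultdict


def execution_waves(dependencies):
    """Group tasks into waves; each task depends only on tasks in earlier waves.

    `dependencies` maps a task name to the list of tasks it depends on.
    """
    # Count unmet dependencies and record which tasks each task unblocks.
    remaining = {task: len(deps) for task, deps in dependencies.items()}
    dependents = defaultdict(list)
    for task, deps in dependencies.items():
        for dep in deps:
            if dep not in dependencies:
                raise ValueError(f"unknown dependency: {dep}")
            dependents[dep].append(task)

    waves = []
    ready = sorted(task for task, count in remaining.items() if count == 0)
    while ready:
        waves.append(ready)
        next_ready = []
        for task in ready:
            for child in dependents[task]:
                remaining[child] -= 1
                if remaining[child] == 0:
                    next_ready.append(child)
        ready = sorted(next_ready)

    # Any task that never became ready is part of a cycle.
    if sum(len(wave) for wave in waves) != len(dependencies):
        raise ValueError("dependency cycle detected")
    return waves


# Hypothetical diamond-shaped workflow: A fans out to B and C, which join at D.
diamond = {"A": [], "B": ["A"], "C": ["A"], "D": ["B", "C"]}
print(execution_waves(diamond))  # [['A'], ['B', 'C'], ['D']]
```

Tasks in the same wave have no dependencies on each other, so an engine is free to run them at the same time.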

Argo CD is another well-known project. Argo CD is mainly used for the GitOps process. It meets the requirements for one-click deployment to Kubernetes through Git and can quickly track and roll back deployments according to version tags. Argo CD also provides a multi-cluster deployment feature to deploy the same application to multiple clusters.

Argo Event provides declarative management of event dependencies and triggers Kubernetes resources based on various event sources. Argo Event is commonly used to trigger Argo Workflow and to generate events for long-running services deployed by using Argo CD.

Argo Rollout was designed as a solution for various deployment modes. Argo Rollout implements multiple phased release methods and integrates Ingress, Service Mesh, and other methods for traffic management and phased testing.

Argo subprojects can be used separately or together. Generally, multiple subprojects used together can make better use of Argo's capabilities and provide more features.

Alibaba Cloud adopted the Argo Workflow project very early on. The first problem we found when using Argo Workflow was permission management. Each Argo Workflow task is executed through a pod, and a sidecar container monitors the primary task. The sidecar listened by mounting docker.sock, which bypasses the Kubernetes API server RBAC mechanism and cannot precisely control user permissions. We worked with the community to develop the Argo Kubernetes API server Native Executor feature. With this feature, the sidecar listens to the API server through the service account to obtain the status and information of the primary container. This allows it to enforce Kubernetes RBAC and permission control.

During DAG parsing, Argo Workflow scans the status of all pods in each step according to the workflow label to determine whether to proceed with the next step. However, each scan was performed in serial mode. When many workflows exist in a cluster, the scan is slow, and workflow tasks must wait a long time for execution. To resolve this issue, we developed the parallel scan feature, which parallelizes all scan actions by using goroutines and greatly improves workflow execution efficiency. Tasks that previously required 20 hours to run can now be completed in 4 hours. This feature has been contributed to the community and released in Argo Workflow v2.4.
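
The difference between serial and parallel scanning can be sketched as follows. The actual feature uses goroutines in Go; this Python sketch swaps in a thread pool to show the same idea. The `fetch_step_status` function is a hypothetical stand-in that simulates a slow status lookup with a short sleep, so the timings are illustrative only.

```python
import time
from concurrent.futures import ThreadPoolExecutor


def fetch_step_status(step):
    """Hypothetical stand-in for a slow per-step pod status lookup."""
    time.sleep(0.05)  # simulated latency, not a real API call
    return step, "Succeeded"


def scan_serial(steps):
    # One lookup at a time: total time grows linearly with the step count.
    return dict(fetch_step_status(step) for step in steps)


def scan_parallel(steps, workers=8):
    # Lookups overlap, so time spent waiting is shared across worker threads.
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return dict(pool.map(fetch_step_status, steps))


steps = [f"step-{i}" for i in range(16)]

start = time.perf_counter()
serial_result = scan_serial(steps)
serial_seconds = time.perf_counter() - start

start = time.perf_counter()
parallel_result = scan_parallel(steps)
parallel_seconds = time.perf_counter() - start

print(f"serial: {serial_seconds:.2f}s, parallel: {parallel_seconds:.2f}s")
```

Both functions return the same statuses. Only the waiting time changes, which is why parallelizing helps most when each lookup is dominated by I/O.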

In production, the more steps Argo Workflow executes, the more space it occupies. All steps are recorded in the CRD Status field. When the number of tasks exceeds 1,000, a single object is too large to be stored in etcd and the API server may crash due to excessive traffic. We worked with the community to develop a status compression technology that can compress the Status string. The size of the compressed Status field is 1/20 of the original size. This ensures that large workflows with more than 5,000 steps can be run.
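
A minimal sketch of the idea, assuming gzip compression followed by base64 encoding, which may differ from the scheme that Argo Workflow actually uses. The node fields and the `compress_status` and `decompress_status` helpers are hypothetical, and the compression ratio depends on how repetitive the status data is.

```python
import base64
import gzip
import json


def compress_status(nodes):
    """Serialize node statuses to JSON, gzip them, and base64-encode the result."""
    raw = json.dumps(nodes, separators=(",", ":")).encode("utf-8")
    return base64.b64encode(gzip.compress(raw)).decode("ascii")


def decompress_status(blob):
    """Invert compress_status and return the original node statuses."""
    raw = gzip.decompress(base64.b64decode(blob))
    return json.loads(raw.decode("utf-8"))


# Hypothetical status entries for a 5,000-step workflow.
nodes = {
    f"step-{i}": {"phase": "Succeeded", "type": "Pod", "templateName": "align"}
    for i in range(5000)
}

original_size = len(json.dumps(nodes, separators=(",", ":")))
blob = compress_status(nodes)
print(f"original: {original_size} bytes, compressed: {len(blob)} bytes")
```

Status entries for similar steps repeat the same keys and values, which is the kind of redundancy that general-purpose compression removes well.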

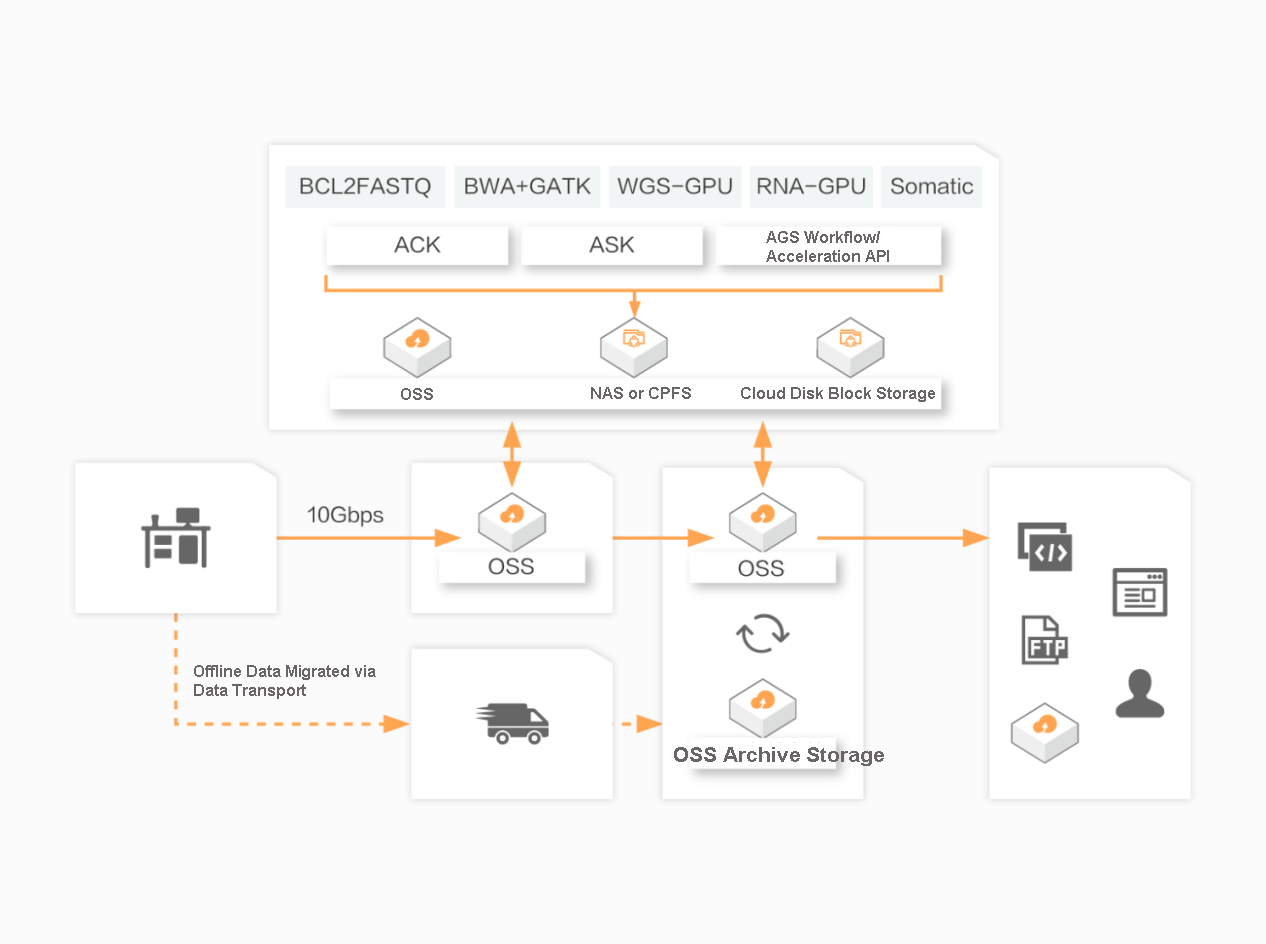

Alibaba Genomics Service (AGS) is mainly used for the secondary analysis of genome sequencing. Gene comparison, sorting, deduplication, and variation detection for 30x whole genome sequencing (WGS) can be completed in 15 minutes with the AGS acceleration API, which is 120 times faster than the typical process and 2 to 4 times faster than the fastest FPGA or GPU solution in the world.

By analyzing the mutation mechanism of individual genome sequences, AGS provides strong support for genetic disease detection and cancer screening. In the future, it will play a bigger role in clinical medicine and genetic diagnosis. The human genome is made up of over 3 billion base pairs. The sequencing data for one 30x WGS is about 100 GB. AGS offers great advantages in terms of computing speed, precision, cost, usability, and compatibility with upstream sequencers. It is suitable for scenarios such as DNA SNP/INDEL detection, CNV structure mutation detection, and DNA or RNA virus detection.

The AGS workflow is implemented based on Argo and provides a local containerized workflow for Kubernetes. Each step in the workflow is defined as a container.

The workflow engine is implemented as a Kubernetes CRD. Therefore, you can use kubectl to manage workflows and natively integrate other Kubernetes services, such as Volumes, Secrets, and RBAC. The workflow controller provides complete workflow features, including parameter replacement, storage, workflow loops, and recursion.
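
Parameter replacement can be pictured with the following sketch, which fills double-brace placeholders in a step command. The `render` helper, the command, and the parameter names are hypothetical, and the sketch is not the workflow controller's actual implementation.

```python
import re

# Matches {{ name }}, where name may contain letters, digits, dots,
# underscores, and hyphens.
PLACEHOLDER = re.compile(r"\{\{\s*([\w.-]+)\s*\}\}")


def render(template, parameters):
    """Replace each {{name}} placeholder with its value from `parameters`."""

    def lookup(match):
        name = match.group(1)
        if name not in parameters:
            raise KeyError(f"no value supplied for parameter {name!r}")
        return str(parameters[name])

    return PLACEHOLDER.sub(lookup, template)


# Hypothetical step command and parameter names.
command = "align --sample {{inputs.parameters.sample}} --threads {{inputs.parameters.threads}}"
print(render(command, {
    "inputs.parameters.sample": "NA12878",
    "inputs.parameters.threads": 16,
}))
# align --sample NA12878 --threads 16
```

Failing on an unknown placeholder, instead of leaving it in place, surfaces a misspelled parameter before the step runs.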

In genetic computing scenarios, Alibaba Cloud uses Argo Workflow to run data processing and analysis services on Kubernetes clusters. Argo Workflow supports large workflows with more than 5,000 steps and is 100 times faster than traditional data processing methods. The custom workflow engine greatly improves the efficiency of gene data processing.

Chen Xianlu, an Alibaba Cloud Technical Expert, has been deeply engaged in Docker and Kubernetes for many years. He is a contributor to multiple Docker projects, a Kubernetes group member, and the author of "Write Your Own Docker". He focuses on container technology orchestration and basic environment research. He likes to study source code, loves open-source culture, and actively participates in the R&D for community open-source projects.

Junbao is a Kubernetes project contributor and a member of the Kubernetes and Kubernetes-SIGs communities. He has many years of practical experience in the container and Kubernetes fields. Currently, he works on the Alibaba Cloud Computing Container Service team. His main research interests include container storage, container orchestration, and AGS products.

Learn more about AGS at: https://www.alibabacloud.com/help/doc-detail/156348.htm
