By Alibaba Cloud EMR Open-Source Big Data OLAP Team and Data Lake Analytics Team of StarRocks Community

As digital industrialization and industrial digitization become important driving forces for the economy, data analysis scenarios of enterprises are becoming more abundant, and the requirements for data analysis architecture are also increasingly demanding. In addition, new data analysis scenarios have brought new requirements mainly from the following users:

The advent of a data lake meets the first two requirements of users. The data lake allows users to import any amount of real-time data. Users can collect data from multiple sources and store data in its original form in the data lake. The data lake has an extremely high level of scalability, enabling users to store data on any scale. At the same time, the bottom layer of the data lake usually uses cheap storage schemes, which reduces the cost of storing data for users. The data lake uses sensitive data identification, hierarchical classification, privacy protection, resource access control, encrypted data transmission, encrypted storage, data risk identification, and compliance audits to help users establish security alerting mechanisms. Therefore, it enhances the overall capability for security protection, makes data secure and available only for use, and ensures compliance.

We need a set of analytics engines suitable for data lakes to further meet the requirements of users for data lake analysis, which can utilize more data from more sources in a shorter time and enable users to coordinate data processing and analysis in different ways to make better and faster decisions. This article reveals the key technologies of such a data lake analytics engine in detail and uses StarRocks to help users further understand the architecture of the system.

In addition, we will publish two more articles to introduce the core and cases of the blazing-fast data lake analytics engine in more detail:

What is a data lake? According to Wikipedia, “A data lake is a system or repository of data stored in its natural/raw format, usually object blobs or files.” Generally speaking, a data lake can be understood as a layer added to cheap Object Storage Service (OSS) or distributed file systems, so discrete objects or files in these storage systems can be combined to present unified semantics, such as the definition of table, common in a relational database.

After understanding the definition of a data lake, we also want to know which unique capabilities a data lake can provide for us and why we should use a data lake.

Before the concept of data lake came out, many enterprises or organizations had already used HDFS or AWS S3 (Amazon Simple Storage Service) to store all kinds of data generated in the daily operations of their business. (For example, an enterprise developing apps may want to record the click events generated by users in detail.) Since the value of those data may not be discovered in a short period of time, enterprises or organizations are willing to find a cheap storage system to temporarily store the data and expect to extract valuable information from the data that can come in handy someday. However, the semantics provided by HDFS and S3 is relatively limited (the semantics of files provided by HDFS and the semantics of objects provided by S3). Over time, engineers may not know which data they have stored. Engineers have come up with the idea of organizing data with consistent definitions and then describing those data with additional data to avoid parsing data one by one to understand its meaning for using that data. The additional data is called metadata because it is used to describe those data. Therefore, the specific meaning of those data can be explained later by parsing the metadata. This is the primitive role of a data lake.

With stricter requirements of users for data quality, data lakes begin to deliver other capabilities. For example, a data lake provides database-like ACID semantics (atomicity, consistency, isolation, durability semantics) to help users obtain point-in-time views during continuous data writing and avoid errors during data reading. For another example, a data lake provides users with higher-performance data import capabilities. Up to now, data lakes have evolved from a system with simple metadata management into one with richer and more database-like semantics.

In less precise terms, a data lake is an AP database with cheaper storage costs. However, data lakes only provide data storage and organization capabilities. A complete database has capabilities for data storage and data analysis. Therefore, this article focuses on how to build an efficient analytics engine for a data lake and provide users with capabilities for insight into the data. The following sections are used to gradually illustrate the internal structure and implementation of a modern OLAP analytics engine:

Starting from this section, let's come back to the database course. An analytics engine for a data lake and one for databases are similar in architecture. In general, the analytics engine will be divided into the following parts:

The Optimizer and Execution Engine are core modules that affect the data lake analytics engine performance. Next, we will illustrate the core technical principles of these two modules one by one from three dimensions and compare different technical solutions to help you understand the whole process of a modern data lake analytics engine.

The Optimizer is responsible for generating a query execution plan with the least (or relatively low) cost for a given query. The performance of different execution plans will vary thousands of times. The more complex the query and the larger the data volume, the more important the query optimization is.

Rule-Based Optimization (RBO) is a common optimization strategy for a traditional analytics engine. The essence of RBO is that its core is an equivalent transformation based on relational algebra, which transforms queries through a set of pre-established rules to obtain a lower-cost execution plan. Common RBO rules include predicate pushdown, limit pushdown, and constant folding. RBO has a strict set of usage rules. As long as users write query statements according to the rules, the generated execution plan is fixed regardless of the contents of the data table. However, the magnitude of the data will seriously affect the performance of the query, and RBO cannot use that information to generate a better execution plan in the actual business environment.

The optimization strategy of Cost-Based Optimization (CBO) was created to remove the limits of RBO. CBO estimates the cost of executing a plan by collecting statistics on the data. These statistics include information, such as the scale of the data set, the number of columns, and the cardinality of columns. For example, we have three tables named A, B, and C. We cannot tell the difference in the execution order cost between joins without corresponding statistics when performing a query of A join B join C. According to statistics we collected on these three tables, if the volume of data of both table A and table B is one million rows, but table C is only ten rows, the volume of data of intermediate results will be significantly reduced by executing B join C first, which is generally impossible to judge without statistics.

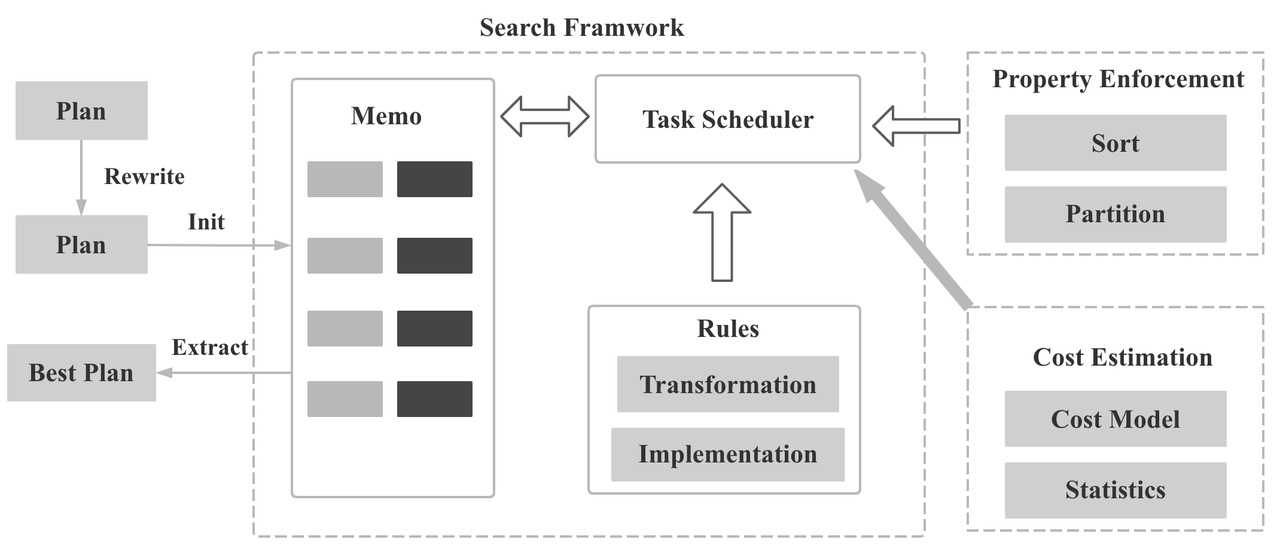

With the increasing complexity of queries, the state space of an execution plan will become very large. Those who have done massive algorithm exercises all know that once the state space is very large, it is impossible to AC through violent searches. At this time, a good search algorithm is especially important. Usually, CBO uses a dynamic planning algorithm to obtain the optimal solution and reduce the cost of repeatedly calculating subspaces. When the state space reaches a certain level, we can only choose the greedy algorithm or some other heuristic algorithm to obtain the local optima. Essentially, a search algorithm is a method of doing a trade-off between search time and result quality.

Common Implementation Architectures of CBO

An execution plan can be considered as an execution flow formed by a string of operators (operators of the relational algebra) connected end to end. The output of the previous operator is the input of the next one. The traditional analytics engine is row-oriented. In other words, the output and input of an operator are data in rows.

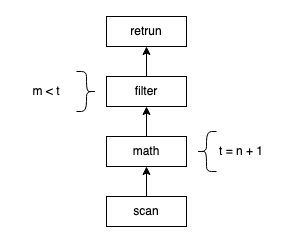

For example, we have the following table and query statement:

CREATE TABLE t (n int, m int, o int, p int);

SELECT o FROM t WHERE m < n + 1; Example source: GitHub - jordanlewis/exectoy

The following figure shows the preceding query statement is expanded into an execution plan.

Typically, the execution process of an execution plan can be represented by the following pseudo codes in the row-oriented model:

next:

for:

row = source.next()

if filterExpr.Eval(row):

// return a new row containing just column o

returnedRow row

for col in selectedCols:

returnedRow.append(row[col])

return returnedRow According to the evaluation of DBMSs On A Modern Processor: Where Does Time Go?, this execution method experiences a large number of L2 data stalls, L1 I-cache stalls, and low efficiency of branch prediction.

With the rapid development of hardware technologies, such as disks, and the widespread use of compression algorithms that replace CPU with I/O, encoding algorithms, and storage technologies, CPU performance has gradually become a bottleneck for analytics engines. Academia began to think about solutions to solve the problems existing in the row-oriented approach. The paper, Block oriented processing of Relational Database operations in modern Computer Architectures, points out using a block-based method to transmit data between operators. This method can balance the time-consuming work of condition inspection and branch prediction. Based on that, the paper, MonetDB/X100: Hyper-Pipelining Query Execution, puts forward a method that improves the efficiency of CPU Cache by changing the original row-oriented data to column-oriented data. This method is also more conducive to optimizing a compiler. In the column-oriented model, the execution process of an execution plan can be represented by the following pseudo codes:

// first create an n + 1 result, for all values in the n column

projPlusIntIntConst.Next():

batch = source.Next()

for i < batch.n:

outCol[i] = intCol[i] + constArg

return batch

// then, compare the new column to the m column, putting the result into

// a selection vector: a list of the selected indexes in the column batch

selectLTIntInt.Next():

batch = source.Next()

for i < batch.n:

if int1Col < int2Col:

selectionVector.append(i)

return batch with selectionVector

// finally, we materialize the batch, returning actual rows to the user,

// containing just the columns requested:

materialize.Next():

batch = source.Next()

for s < batch.n:

i = selectionVector[i]

returnedRow row

for col in selectedCols:

returnedRow.append(cols[col][i])

yield returnedRow A column-oriented model has better data locality and instruction locality, which helps improve the hit rate of CPU Cache, and the compiler can perform SIMD optimization easier, etc.

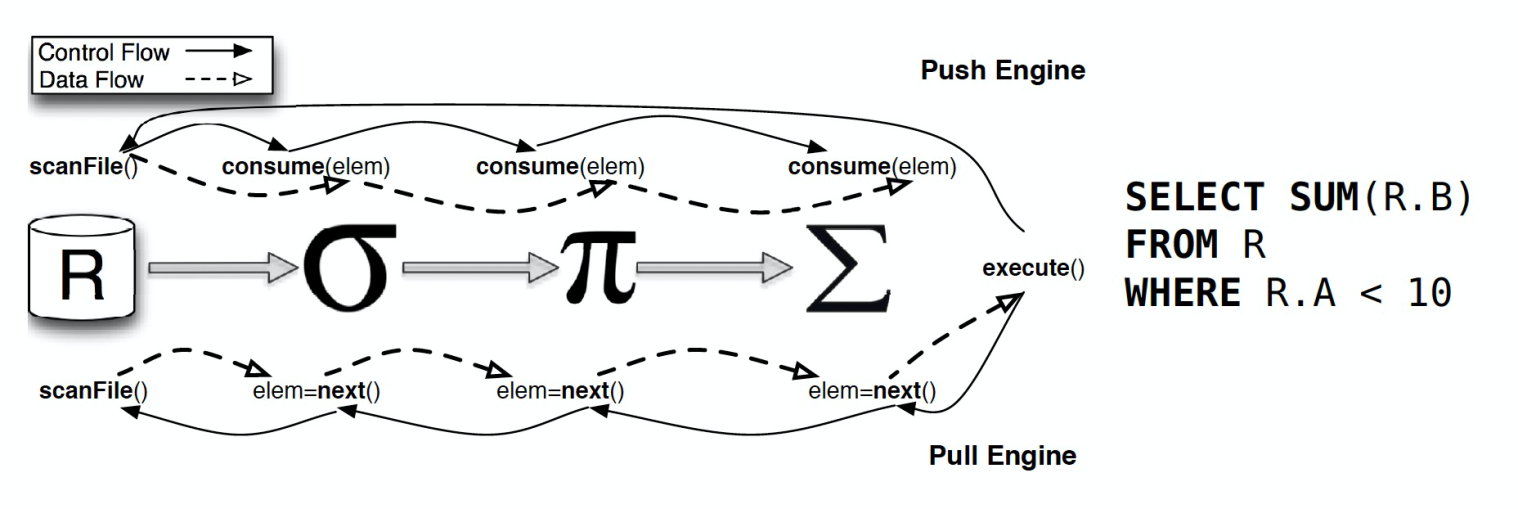

In a database system, an input SQL statement is usually converted into a series of operators. Then, a physical execution plan is generated for the actual calculation, and the result is returned. In the generated physical execution plan, a pipeline is usually used for the operators. There are two common pipeline methods:

The push-based execution model improves cache efficiency and improves query performance.

Reference: Push- vs. Pull-Based Loop Fusion in Query Engines

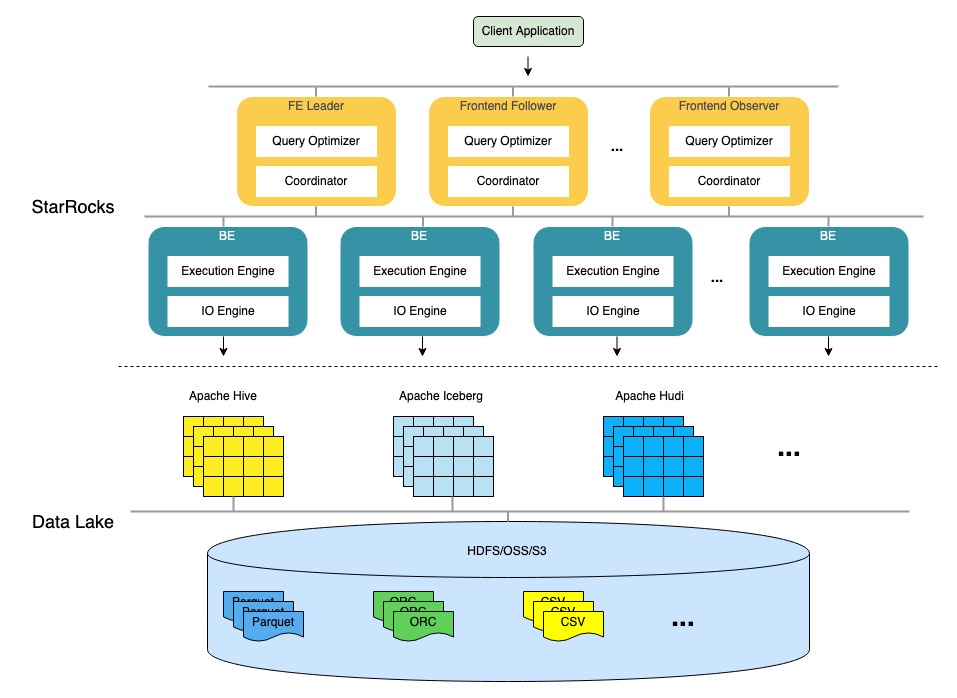

After the introduction in the previous section, I believe you have an understanding of cutting-edge theories of a data lake analytics engine. In this section, let's take StarRocks as an example to further introduce how a data lake analytics engine is combined with the advanced theories above and presents itself to users through an elegant system architecture.

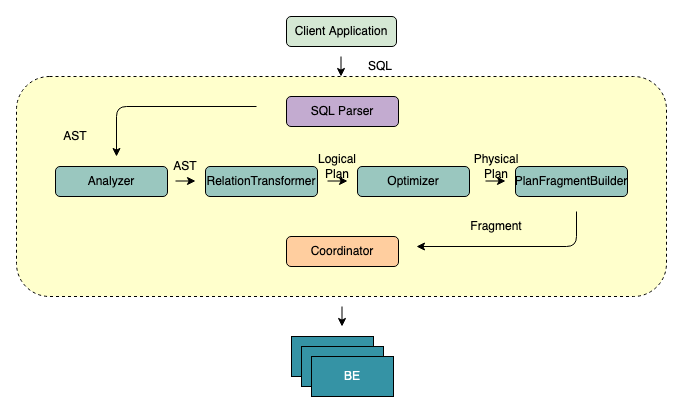

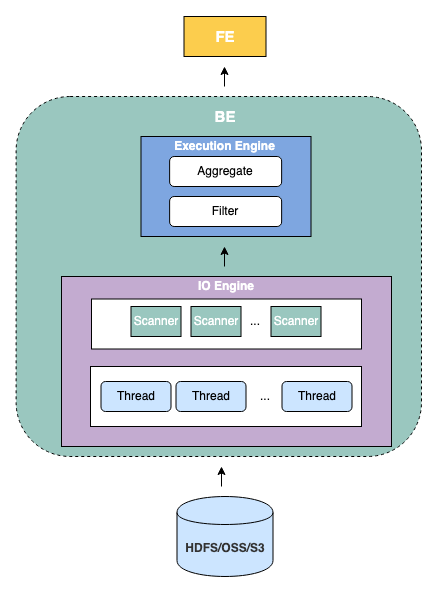

As shown in the preceding figure, the architecture of StarRocks is very simple. The core of the entire system only is frontend (FE) and backend (BE) processes. The system does not rely on any external components, which facilitates deployment and maintenance. FE process is mainly responsible for parsing query statements (SQL), optimizing queries, and query scheduling, while BE process is mainly responsible for reading data from the data lake and completing operations, such as to filter and aggregate data.

The main feature of FE is to convert SQL statements into fragments that BE can recognize through transformation and optimization. Let's take a less accurate but easy-to-understand metaphor. If BE cluster is regarded as a distributed thread pool, fragments are tasks in the thread pool. From SQL texts to fragments, FE is mainly responsible for the following steps:

BE is the backend node of StarRocks. It receives fragments from FE and returns the result to FE. The BE nodes of StarRocks are corresponding to each other, and FE distributes data to the corresponding BE nodes according to certain policies. The common fragment workflow is to read some files in the data lake, call corresponding Readers (such as Parquet Reader adapted to Parquet files and ORC Reader adapted to ORC files, etc.) to parse the data in those files, use a vectorized execution engine to filter and aggregate the parsed data, and return the data to other BE or FE.

This article mainly introduces the core technical principles of the blazing-fast data lake analytics engine and compares different technical implementation solutions from multiple dimensions. It introduces the system architecture design of the open-source data lake analytics engine, StarRocks, to facilitate the next in-depth discussion. I hope to discuss and communicate with you soon.

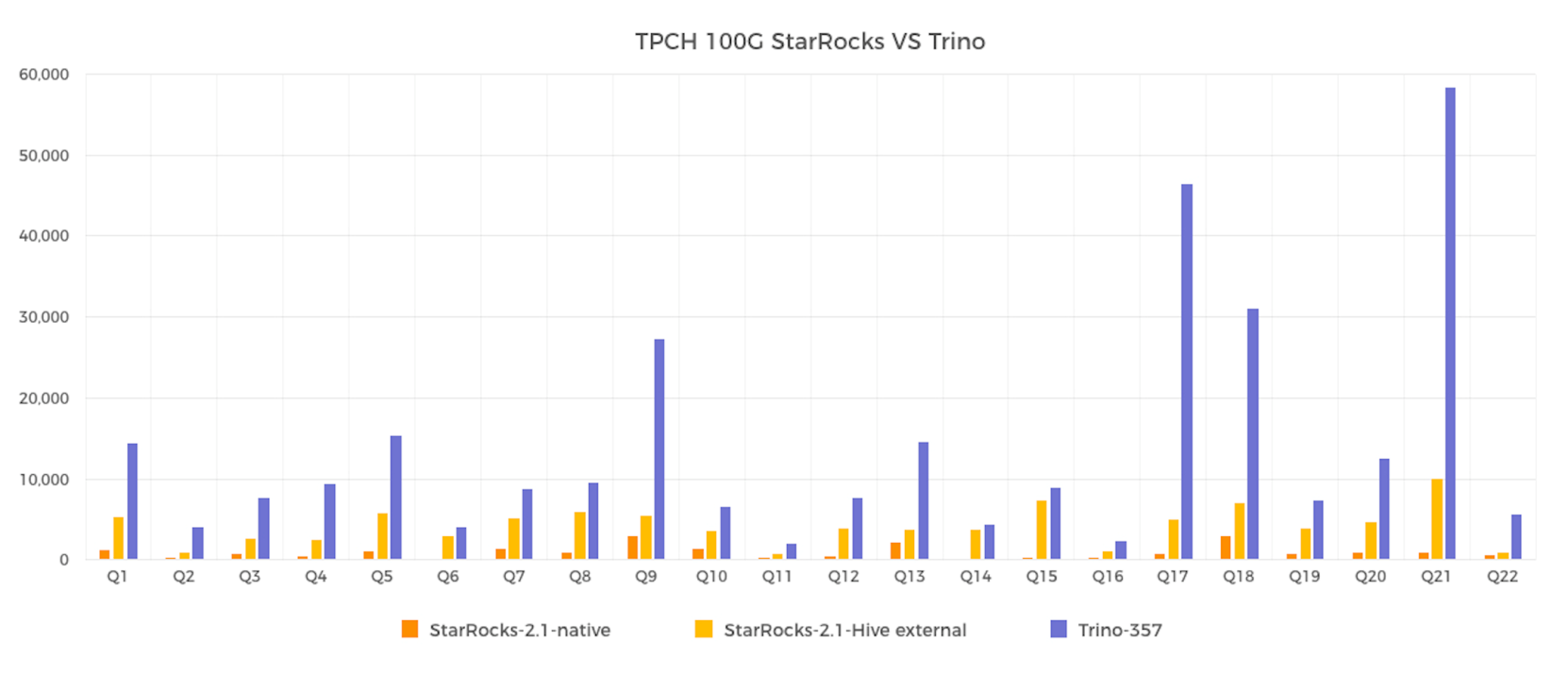

This test uses the standard test set of TPCH 100G to compare and test the performance gap between StarRocks local tables, StarRocks on Hive, and Trino (PrestoSQL) on Hive.

A comparative test was performed on a dataset on the scale of TPCH 100G and then generated 22 queries. The results are listed below:

StarRocks uses the local storage query and the Hive external table query for testing. The StarRocks on Hive and Trino on Hive query the same data. The data is stored in ORC format and compressed in zlib format. The test environment is built using Alibaba Cloud Elastic MapReduce (EMR).

The StarRocks local storage query takes 21 seconds, and the StarRocks Hive external table query takes 92 seconds. The total time of the Trino query is 307 seconds. StarRocks on Hive far exceeds Trino in query performance but is still a long way from Trino in terms of the local storage query. The main reason is that accessing remote storage increases network overhead, and the latency and IOPS of remote storage are usually not as good as those of local storage. The following plan is to make up for the problem through Cache and other mechanisms to bridge the gap between StarRocks local tables and StarRocks on Hive.

[1] GitHub - jordanlewis/exectoy

[2] DBMSs On A Modern Processor: Where Does Time Go?

[3] Block oriented processing of Relational Database operations in modern Computer Architectures

[4] MonetDB/X100: Hyper-Pipelining Query Execution

[5] https://help.aliyun.com/document_detail/404790.html (Document in Chinese)

How to Build a Cloud-Native Open-Source Big Data Platform | The Application Practice of Weimiao

Zuoyebang's Best Practices for Building Data Lakes Based on Delta Lake

58 posts | 5 followers

FollowAlibaba EMR - May 20, 2022

ApsaraDB - November 17, 2020

Alibaba EMR - October 12, 2021

ApsaraDB - February 25, 2021

Alibaba Clouder - November 12, 2018

Alibaba EMR - July 9, 2021

58 posts | 5 followers

Follow Data Lake Formation

Data Lake Formation

An end-to-end solution to efficiently build a secure data lake

Learn More Data Lake Storage Solution

Data Lake Storage Solution

Build a Data Lake with Alibaba Cloud Object Storage Service (OSS) with 99.9999999999% (12 9s) availability, 99.995% SLA, and high scalability

Learn More Big Data Consulting for Data Technology Solution

Big Data Consulting for Data Technology Solution

Alibaba Cloud provides big data consulting services to help enterprises leverage advanced data technology.

Learn More Data Lake Analytics

Data Lake Analytics

A premium, serverless, and interactive analytics service

Learn MoreMore Posts by Alibaba EMR