By Zhao Haikai (Xiaokai)

Note: The following analysis is based on the open-source ClickHouse v19.15.2.2 Stable. The code of the latest edition for the community has been modified significantly, but the general idea remains unchanged.

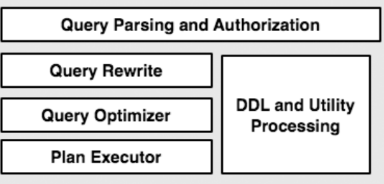

In traditional relational databases, the SQL processor has the following components:

It performs lexical and syntactic analysis to convert the format of a program from SQL for human read to abstract syntax tree (AST) for machine read.

Lexical analysis decomposes SQL characters into independent lexical units, such as Token(<type, value>). Syntactic analysis identifies all kinds of phrases in the tokens output by the lexical parser and constructs an AST. Specifically, based on the direction of AST construction, syntactic analysis includes top-down and bottom-up analyses. ClickHouse uses a syntax analyzer featuring recursive descent analysis.

This is usually referred to as the Logical Optimizer or the Rule-Based Optimizer (RBO).

It is responsible for applying some heuristic rules and simplifying and standardizing queries without changing the query semantics.

Common operations include predicate and operator pushdown, view expansion, simplification of constant operation expressions, predicate logic rewriting, and semantic optimization.

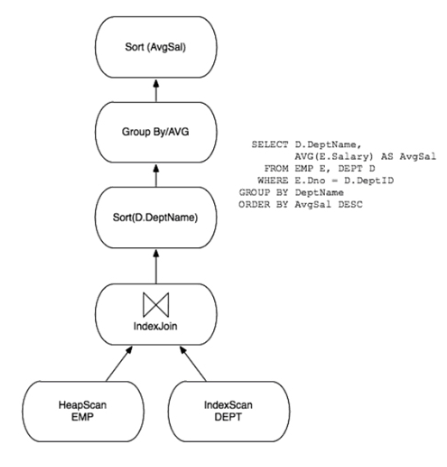

It refers to the Physical Optimizer, which converts internal queries into efficient query plans and guides DBMS on how to retrieve data from tables, sort data, and perform Join operation. As shown in the following figure, a query plan is similar to a data stream graph. Table data is transferred from one operator to another like water that flows in a pipe.

A Query Plan

It executes specific query plans, obtains data from storage engines, and obtains results by executing query plans for the data.

Execution engines are divided into many types, such as the classic Volcano Model and the Vectorization Model used by ClickHouse.

Figure from the paper entitled *Architecture of a Database System

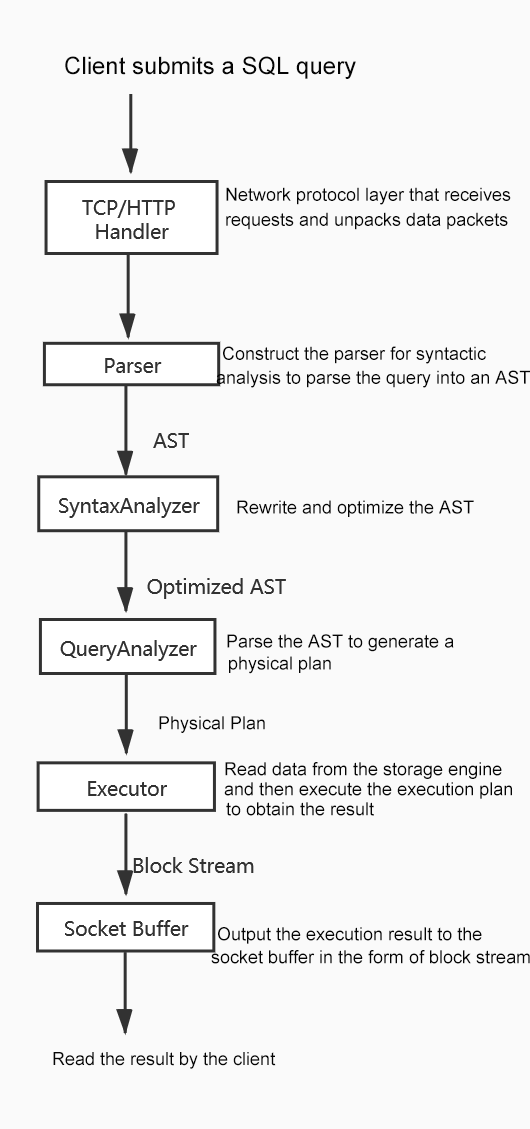

However, the processes of SQL parsing and execution plan generation are similar for conventional relational databases and non-relational databases. Based on the ClickHouse source code, the process of submitting a SQL query statement can be summarized like this:

From the server side, let's first see what server.cpp probably does:

Only important logics are displayed:

int Server::main()

{

// Initialize the context

global_context = std::make_unique<Context>(Context::createGlobal());

global_context->setApplicationType(Context::ApplicationType::SERVER);

// Initialize ZooKeeper

zkutil::ZooKeeperNodeCache main_config_zk_node_cache([&] { return global_context->getZooKeeper(); });

// Initialize other configurations

//...

// Bind the port to provide external services

auto address = make_socket_address(host, port);

socket.bind(address, /* reuseAddress = */ true);

// Establish different types of servers according to the network protocol

//Supported server types include HTTP, HTTPS, TCP, InterServer, and MySQL

// Take TCP server as an example:

create_server("tcp_port", [&](UInt16 port)

{

Poco::Net::ServerSocket socket;

auto address = socket_bind_listen(socket, listen_host, port);

servers.emplace_back(std::make_unique<Poco::Net::TCPServer>(

new TCPHandlerFactory(*this),

server_pool,

socket,

new Poco::Net::TCPServerParams));

});

//Start the server

for (auto & server : servers)

server->start();

}The queries sent from clients are processed using handlers according to the network protocol. When the server is started, handler will be initialized and bound to the specified port. Take TCPHandler as an example to see how the server processes the queries sent from the client. The TCPHandler::runImpl function implementation is listed below:

void TCPHandler::runImpl()

{

// Instantiate input and output stream buffers for the socket

in = std::make_shared<ReadBufferFromPocoSocket>(socket());

out = std::make_shared<WriteBufferFromPocoSocket>(socket());

while (1){

// Receive request message

receivePacket();

// Execute the query

state.io = executeQuery(state.query, *query_context, false, state.stage, may_have_embedded_data);

// Process the query by its type

// Process INSERT query

processInsertQuery();

// Process ordinary queries in parallel

processOrdinaryQueryWithProcessors();

// Process ordinary queries in a single thread

processOrdinaryQuery();

}

}What is the specific logic of ClickHouse in processing queries sent from the client?

You can refer to dbms/src/Interpreters/executeQuery.cpp.

The specific logics are contained in the executeQueryImpl function. The following part displays some core logics:

static std::tuple<ASTPtr, BlockIO> executeQueryImpl()

{

// Construct the parser

ParserQuery parser(end, settings.enable_debug_queries);

ASTPtr ast;

// Convert the query to an AST

ast = parseQuery(parser, begin, end, "", max_query_size);

// Generate an interpreter instance

auto interpreter = InterpreterFactory::get(ast, context, stage);

// Interpreter parses the AST and the result is Block I/O

res = interpreter->execute();

// The return result is a binary group of AST and result after parsing AST.

return std::make_tuple(ast, res);

}What the function does:

InterpreterFactory

Summary:

executeQuery to process the query sent by the client. The executed result is saved in the I/O member of the state struct.Each query corresponds to a state struct, which records the metadata of the query, such as the query ID, processing status, compression algorithm, query text, and I/O stream corresponding to the data.

processOrdinaryQuery method to encapsulate the output stream results into an asynchronous I/O stream and send it back to the client.

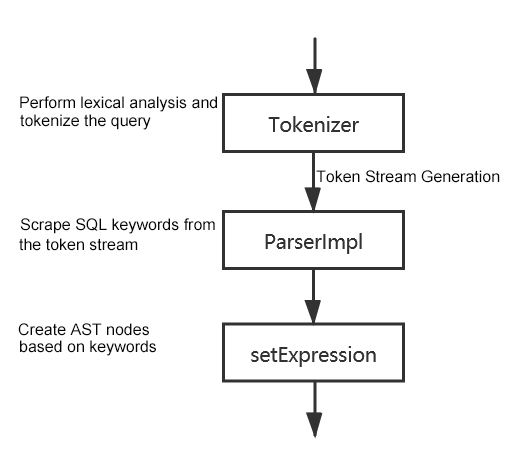

ClickHouse uses a recursive-descent parser to parse the SQL query. The result generated is an AST that corresponds to the SQL query. The AST is represented by nodes (IAST) that represent each operation. This section describes the core logic of the parser.

The core logic of lexical analysis and syntactic analysis is contained in the tryParseQuery function of parsecequery.cpp.

This function uses the lexer to identify query characters and divide them into tokens. The token_iterator is a token stream iterator. Then, the parser parses the token stream to generate an AST.

ASTPtr tryParseQuery()

{

//A token is the basic unit after lexical analysis by the lexer. The result is a token stream.

Tokens tokens(pos, end, max_query_size);

IParser::Pos token_iterator(tokens);

ASTPtr res;

//Token stream generates AST after syntactic analysis.

bool parse_res = parser.parse(token_iterator, res, expected);

return res;

}The core of syntactic analysis is the parse method executed by the parser. For the implementation of the parse method, see parseImpl in ParserQuery.cpp.

bool ParserQuery::parseImpl(Pos & pos, ASTPtr & node, Expected & expected)

{

ParserQueryWithOutput query_with_output_p(enable_explain);

ParserInsertQuery insert_p(end);

ParserUseQuery use_p;

ParserSetQuery set_p;

ParserSystemQuery system_p;

bool res = query_with_output_p.parse(pos, node, expected)

|| insert_p.parse(pos, node, expected)

|| use_p.parse(pos, node, expected)

|| set_p.parse(pos, node, expected)

|| system_p.parse(pos, node, expected);

return res;

}This method roughly divides queries into five types. However, queries can be roughly divided into two types. The first type has result output and corresponds to statements, such as SHOW, SELECT, and CREATE. The second type has no result output, which corresponds to INSERT, USE, SET, and system-related statements, such as EXIT.

QueryWithOutputInsertQueryUseQuerySetQuerySystemQueryEach type of query customizes its own parser. Therefore, the code logic is that parsers of all types of queries are applied after receiving a query until one parser is applied successfully.

Now, let's understand the parser that corresponds to the SELECT statement.

The core logic can be summarized like this:

setExpression function assembles the AST node corresponding to the keyword.Each SQL statement, including SELECT, DROP, INSERT, and CREATE, has an AST class that contains keywords specific to these statements.

bool ParserSelectQuery::parseImpl(Pos & pos, ASTPtr & node, Expected & expected)

{

// Create AST node

auto select_query = std::make_shared<ASTSelectQuery>();

node = select_query;

// Specify the keywords that will appear in the SELECT statement

ParserKeyword s_select("SELECT");

ParserKeyword s_distinct("DISTINCT");

ParserKeyword s_from("FROM");

ParserKeyword s_prewhere("PREWHERE");

ParserKeyword s_where("WHERE");

ParserKeyword s_group_by("GROUP BY");

ParserKeyword s_with("WITH");

ParserKeyword s_totals("TOTALS");

ParserKeyword s_having("HAVING");

ParserKeyword s_order_by("ORDER BY");

ParserKeyword s_limit("LIMIT");

ParserKeyword s_settings("SETTINGS");

ParserKeyword s_by("BY");

ParserKeyword s_rollup("ROLLUP");

ParserKeyword s_cube("CUBE");

ParserKeyword s_top("TOP");

ParserKeyword s_with_ties("WITH TIES");

ParserKeyword s_offset("OFFSET");

//...

// Scrape the keywords from the token stream

ParserTablesInSelectQuery().parse(pos, tables, expected)

// Set the Expression attribute of AST based on the syntactic analysis result. If an SQL query contains the keyword, the keyword is converted into a node on AST.

select_query->setExpression(ASTSelectQuery::Expression::WITH, std::move(with_expression_list));

select_query->setExpression(ASTSelectQuery::Expression::SELECT, std::move(select_expression_list));

select_query->setExpression(ASTSelectQuery::Expression::TABLES, std::move(tables));

select_query->setExpression(ASTSelectQuery::Expression::PREWHERE, std::move(prewhere_expression));

select_query->setExpression(ASTSelectQuery::Expression::WHERE, std::move(where_expression));

select_query->setExpression(ASTSelectQuery::Expression::GROUP_BY, std::move(group_expression_list));

select_query->setExpression(ASTSelectQuery::Expression::HAVING, std::move(having_expression));

select_query->setExpression(ASTSelectQuery::Expression::ORDER_BY, std::move(order_expression_list));

select_query->setExpression(ASTSelectQuery::Expression::LIMIT_BY_OFFSET, std::move(limit_by_offset));

select_query->setExpression(ASTSelectQuery::Expression::LIMIT_BY_LENGTH, std::move(limit_by_length));

select_query->setExpression(ASTSelectQuery::Expression::LIMIT_BY, std::move(limit_by_expression_list));

select_query->setExpression(ASTSelectQuery::Expression::LIMIT_OFFSET, std::move(limit_offset));

select_query->setExpression(ASTSelectQuery::Expression::LIMIT_LENGTH, std::move(limit_length));

select_query->setExpression(ASTSelectQuery::Expression::SETTINGS, std::move(settings));

}The following figure shows the processing procedure in the parser:

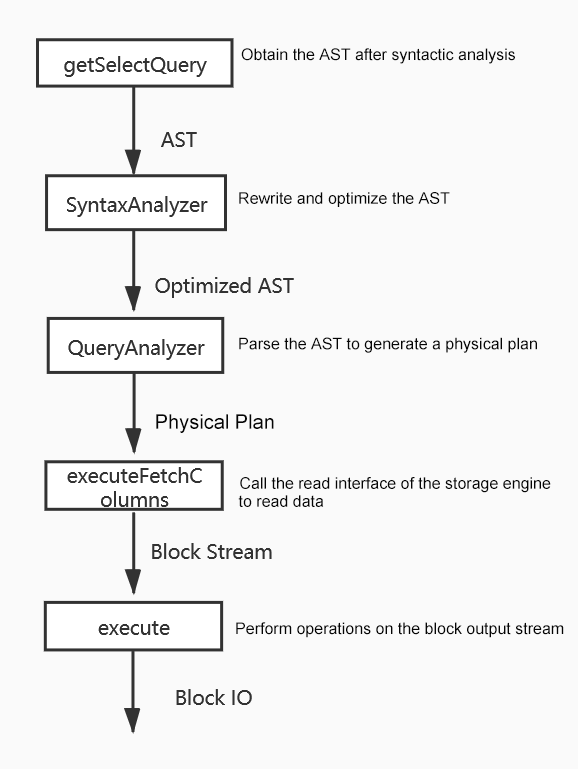

The interpreter creates a pipeline for query execution from the AST. The entire pipeline is based on BlockInputStream and BlockOutputStream. For example, if the "SELECT" statement is used together with the "FROM" clause that involves the block output stream, the selection result is also output in the form of block output stream. First, let's move to dbms/src/Interpreters/InterpreterFactory.cpp.

Each query type has a corresponding interpreter. This Factory method instantiates the corresponding interpreter based on the AST type and applies the interpreter to execute the execution plan of the corresponding AST.

std::unique_ptr<IInterpreter> InterpreterFactory::get(ASTPtr & query, Context & context, QueryProcessingStage::Enum stage)

{

// For example, if the AST is converted from SELECT statement,

if (query->as<ASTSelectQuery>())

{

/// This is internal part of ASTSelectWithUnionQuery.

/// Even if there is SELECT without union, it is represented by ASTSelectWithUnionQuery with single ASTSelectQuery as a child.

return std::make_unique<InterpreterSelectQuery>(query, context, SelectQueryOptions(stage));

}

}Let's use InterpreterSelectQuery as an example to learn the core logic of interpreter instantiation:

InterpreterSelectQuery::InterpreterSelectQuery()

{

// Obtain the AST

auto & query = getSelectQuery();

// Perform syntactic analysis on AST to rewrite and optimize the AST

syntax_analyzer_result = SyntaxAnalyzer(context, options).analyze(

query_ptr, source_header.getNamesAndTypesList(), required_result_column_names, storage, NamesAndTypesList());

// Each query corresponds to a unique expression analyzer for scraping AST to generate an execution plan (operation chain)

query_analyzer = std::make_unique<SelectQueryExpressionAnalyzer>(

query_ptr, syntax_analyzer_result, context,

NameSet(required_result_column_names.begin(), required_result_column_names.end()),

options.subquery_depth, !options.only_analyze);

}It is not optimal to convert the AST generated after syntactic analysis into an execution plan. Therefore, SyntaxAnalyzer rewrites and optimizes the AST. In the source code, SyntaxAnalyzer involves a lot of rule-based optimization tricks.

SyntaxAnalyzer checks these rules one by one to determine whether they meet the conversion rules. If yes, SyntaxAnalyzer converts them.

SyntaxAnalyzerResultPtr SyntaxAnalyzer::analyze()

{

// Eliminate duplicate columns

removeDuplicateColumns(result.source_columns);

// Determine whether to push the predicate down based on the enable_optimize_predicate_expression configuration in the settings.

replaceJoinedTable(node);

// Rewrite the IN and JOIN expressions based on the distributed_product_mode configuration in the settings.

InJoinSubqueriesPreprocessor(context).visit(query);

// Optimize the Boolean expression in the query

LogicalExpressionsOptimizer().perform();

// Create a mapping dictionary from the alias to the AST node

QueryAliasesVisitor(query_aliases_data, log.stream()).visit(query);

// Eliminate common subexpressions

QueryNormalizer(normalizer_data).visit(query);

// Eliminate unneeded columns from the SELECT clause

removeUnneededColumnsFromSelectClause(select_query, required_result_columns, remove_duplicates);

// Execute the scalar subquery and replace the results with constants

executeScalarSubqueries(query, context, subquery_depth);

// For SELECT statement, the following optimizations will be made:

// Predicate pushdown optimization

PredicateExpressionsOptimizer(select_query, settings, context).optimize();

/// GROUP BY clause optimization

optimizeGroupBy(select_query, source_columns_set, context);

/// Unneeded item elimination from ORDER BY clause

optimizeOrderBy(select_query);

/// Unneeded column elimination from LIMIT BY clause

optimizeLimitBy(select_query);

/// Unneeded column elimination from USING statement

optimizeUsing(select_query);

}Here are brief introductions to some of these rules:

If the expression x op y has been calculated and the value of the expression has not changed, this expression is called a common subexpression. Common subexpression elimination searches for all instances of the same computed expression and analyzes whether it is worth replacing them with a single variable that holds the computed value. If it is worth replacing, the replacement can reduce the computing overhead.

A scalar subquery returns a single value. Similar to the common subexpression elimination, constants can replace all scalar subquery results in SQL queries to reduce computing overhead.

The predicates of the WHERE clause in the outer query block are pushed down to lower-level query blocks, such as views. By doing so, the data filtering operation is performed as close to the data source as possible. Data filtering in advance can reduce the amount of data transmitted over the network or read from the memory significantly, thus, improving the query efficiency.

query_analyzer parses the rewritten and optimized AST and generates an operation chain involving the required operations. The chain is called the physical execution plan. For example:

ExpressionActionsChain chain;

analyzer.appendWhere(chain);

chain.addStep();

analyzer.appendSelect(chain);

analyzer.appendOrderBy(chain);

chain.finalize();The code above adds WHERE, SELECT, and ORDER BY operations to the operation chain. Then, blocks can be read from the storage layer, and the operations in the operation chain above can be performed on the block data. The core execution logic is contained in the implementation of the executeImpl method of the corresponding Interpreter. Here, let's use the interpreter of the SELECT statement as an example to understand the process of reading block data and performing corresponding operations.

void InterpreterSelectQuery::executeImpl(TPipeline & pipeline, const BlockInputStreamPtr & prepared_input)

{

// AST of the corresponding query

auto & query = getSelectQuery();

AnalysisResult expressions;

// Physical plan that determines whether the expression contains scripts such as where, aggregate, having, order_by, and litmit_by

expressions = analyzeExpressions(

getSelectQuery(),

*query_analyzer,

QueryProcessingStage::FetchColumns,

options.to_stage,

context,

storage,

true,

filter_info);

// Read data from Storage

executeFetchColumns(from_stage, pipeline, sorting_info, expressions.prewhere_info, expressions.columns_to_remove_after_prewhere);

// eg: Based on keywords in the SQL query, perform corresponding operations in the block stream. Operations like where, aggregate, and distinct are all executed by one function.

executeWhere(pipeline, expressions.before_where, expressions.remove_where_filter);

executeAggregation(pipeline, expressions.before_aggregation, aggregate_overflow_row, aggregate_final);

executeDistinct(pipeline, true, expressions.selected_columns);

}Now, since the execution plan AnalysisResult (physical execution plan) is known, the next step is to read data from the storage layer and perform the corresponding operations. The core logic is in executeFetchColumns. The core operation is to read the blocks of the columns to be processed from the storage layer and organize them into a block stream.

void InterpreterSelectQuery::executeFetchColumns(

QueryProcessingStage::Enum processing_stage, TPipeline & pipeline,

const SortingInfoPtr & sorting_info, const PrewhereInfoPtr & prewhere_info, const Names & columns_to_remove_after_prewhere)

{

// Instantiate the block stream

auto streams = storage->read(required_columns, query_info, context, processing_stage, max_block_size, max_streams)

// Read blocks of corresponding columns and organize them into a block stream

streams = {std::make_shared<NullBlockInputStream>(storage->getSampleBlockForColumns(required_columns))};

streams.back() = std::make_shared<ExpressionBlockInputStream>(streams.back(), query_info.prewhere_info->remove_columns_actions);

}After organizing the block stream, perform various execution operations, such as executeAggregation and executeWhere. For more information about these operations, please see the code in InterpreterSelectQuery::executeImpl.

The processing procedure of the Interpreter is summarized below:

After the read and processing of the block stream, how will the generated results be written back to the storage layer? The following section uses the interpreter of the INSERT statement as an example for introduction:

BlockIO InterpreterInsertQuery::execute()

{

// Table is the storage engine interface.

StoragePtr table = getTable(query);

BlockOutputStreamPtr out;

// Read the block stream from the storage engine

auto query_sample_block = getSampleBlock(query, table);

out = std::make_shared<AddingDefaultBlockOutputStream>(

out, query_sample_block, out->getHeader(), table->getColumns().getDefaults(), context);

// Encapsulate the execution results as block I/O

BlockIO res;

res.out = std::move(out);

}In the preceding code, StoragePtr is the interface of the storage engine IStorage.

using StoragePtr = std::shared_ptr<IStorage>;Write and read operations rely on the write and read interfaces of the underlying storage engine, such as MergeTree. Block data is read in stream mode through the storage engine interfaces, and the result is organized as block I/O stream output. The Interpreter process is summarized below:

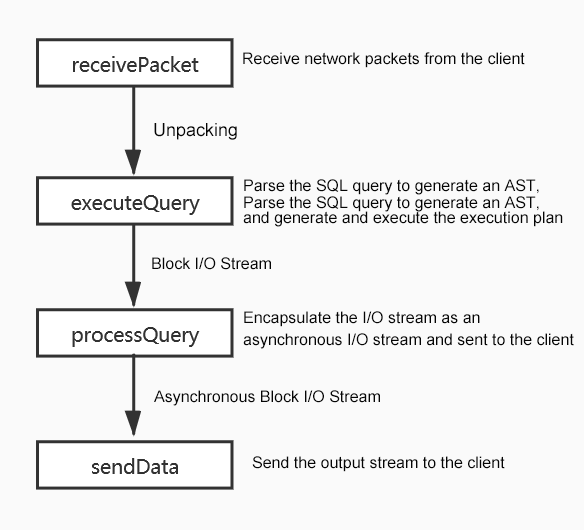

In TCPHandler::runImpl, after performing the executeQuery operation, various processQuery methods are called to return the execution result of the SQL query to the client. Here, let's use TCPHandler::processOrdinaryQuery as an example.

void TCPHandler::processOrdinaryQuery()

{

// Encapsulate the block stream as an asynchronous stream, then reading data from the stream is an asynchronous operation.

AsynchronousBlockInputStream async_in(state.io.in);

while(true){

Block block;

// Read block data from I/O stream

block = async_in.read();

// Send block data

sendData(block);

}

}The server is responsible for writing the output result to the socket output buffer by using the sendData function. The client can obtain the result by reading from this output buffer.

void TCPHandler::sendData(const Block & block)

{

//Initialize parameters of OutputStream

initBlockOutput(block);

// Call the write function of BlockOutputStream and write blocks to the output stream

state.block_out->write(block);

state.maybe_compressed_out->next();

out->next();

}Database users can clearly understand how to write optimized SQL queries by understanding the SQL query process of ClickHouse. At the same time, database kernel developers can deepen their understanding of the database architecture to improve development efficiency. This article does not cover in-depth technical details of ClickHouse, such as the vectorized execution engine, single instruction multiple data (SIMD), LLVM-based dynamic code generation, and the MergeTree-like storage engine. From the macro perspective, this article describes what happens to the kernel when executing SQL queries. More articles will be available to interpret the kernel source code in the future. Please stay tuned for more!

Alibaba Cloud has launched the ClickHouse cloud hosting product. You can visit the product homepage for more information.

Secondary Index in Alibaba Cloud ClickHouse – Best Practices

Clouders - January 13, 2022

ApsaraDB - July 8, 2021

ApsaraDB - May 7, 2021

ApsaraDB - May 7, 2021

Alibaba Cloud Native - December 28, 2023

ApsaraDB - May 7, 2021

Database Overview

Database Overview

ApsaraDB: Faster, Stronger, More Secure

Learn More DBStack

DBStack

DBStack is an all-in-one database management platform provided by Alibaba Cloud.

Learn More Database for FinTech Solution

Database for FinTech Solution

Leverage cloud-native database solutions dedicated for FinTech.

Learn More ApsaraDB for OceanBase

ApsaraDB for OceanBase

A financial-grade distributed relational database that features high stability, high scalability, and high performance.

Learn MoreMore Posts by ApsaraDB